Adaptive Selective Disturbance Elimination-Based Fixed-Time Consensus Tracking for a Class of Nonlinear Multiagent Systems

Abstract

1. Introduction

- Unlike [43], this study introduces adaptive selective disturbance elimination into each agent’s tracking control design by incorporating a disturbance indicator and disturbance observation attenuation terms into the control law. This allows the controller to distinguish beneficial from detrimental disturbances, exploiting beneficial disturbances to accelerate tracking and suppressing detrimental ones to improve tracking accuracy.

- In this study, a fixed-time disturbance observer is introduced to rapidly and accurately estimate the lumped disturbances, and their derivatives, arising from unmodeled dynamics, parameter perturbations, external disturbances, and nonlinear couplings. The fixed-time stability of this observer is proven using Lyapunov theory, which represents a novel contribution to multiagent consensus tracking.

- Additionally, a fixed-time tracking protocol based on adaptive selective disturbance elimination is proposed as an application framework. The protocol comprises a distributed fixed-time observer that estimates the leader’s output under specific network topologies, a fixed-time disturbance observer that rapidly estimates the lumped disturbances, and a fixed-time active anti-disturbance controller based on adaptive selective disturbance elimination, which combines the strengths of backstepping, nonlinear dynamic inversion, and conditional disturbance negation. The fixed-time stability of the overall protocol is analyzed with Lyapunov theory by invoking the separation principle.
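The indicator-based idea in the first contribution can be illustrated on a scalar error model. The following Python sketch is a minimal illustration under stated assumptions, not the authors' control law: it assumes first-order tracking-error dynamics de/dt = u + d with a constant disturbance d, uses the exact value d_hat = d in place of a fixed-time observer estimate, and adopts a hypothetical indicator lam = 1 when e·d_hat > 0 (the disturbance pushes the error away from zero) and lam = 0 otherwise, in the spirit of conditional disturbance negation. The names `simulate`, `lam`, and `d_hat` are illustrative only.

```python
"""Selective vs. full disturbance compensation on a scalar error model (illustrative sketch)."""


def simulate(e0, d, mode, k=1.0, dt=1e-3, t_end=2.0):
    """Integrate de/dt = u + d with forward Euler and return |e(t_end)|.

    Hypothetical assumptions, for illustration only:
      * the disturbance d is constant and known exactly (d_hat = d),
        standing in for a disturbance-observer estimate;
      * the nominal feedback is u0 = -k * e.
    mode: "none"      -> u = u0                (disturbance ignored)
          "full"      -> u = u0 - d_hat        (always cancel)
          "selective" -> u = u0 - lam * d_hat  (cancel only if detrimental)
    """
    e = e0
    d_hat = d
    for _ in range(int(round(t_end / dt))):
        if mode == "none":
            lam = 0.0
        elif mode == "full":
            lam = 1.0
        elif mode == "selective":
            # Hypothetical disturbance indicator: the disturbance is treated as
            # detrimental when it pushes the error away from zero (e * d_hat > 0).
            lam = 1.0 if e * d_hat > 0 else 0.0
        else:
            raise ValueError(f"unknown mode: {mode}")
        u = -k * e - lam * d_hat
        e += dt * (u + d)
    return abs(e)


if __name__ == "__main__":
    # With e(0) = 1, d < 0 is beneficial and d > 0 is detrimental.
    for d in (-0.5, 0.5):
        row = {m: simulate(1.0, d, m) for m in ("none", "full", "selective")}
        print(d, {m: f"{v:.4f}" for m, v in row.items()})
```

Running the script prints the final error at t = 2 for each mode. For the beneficial disturbance (d = -0.5 with e(0) = 1), the selective law lets the disturbance help drive the error to zero at about t = ln 3 ≈ 1.1 and only then cancels it, ending far below the e^(-2) ≈ 0.135 left by full cancellation. For the detrimental disturbance (d = +0.5), the indicator stays at 1 and the selective law coincides with full cancellation. The sketch omits the disturbance observation attenuation terms and any estimation error in d_hat.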

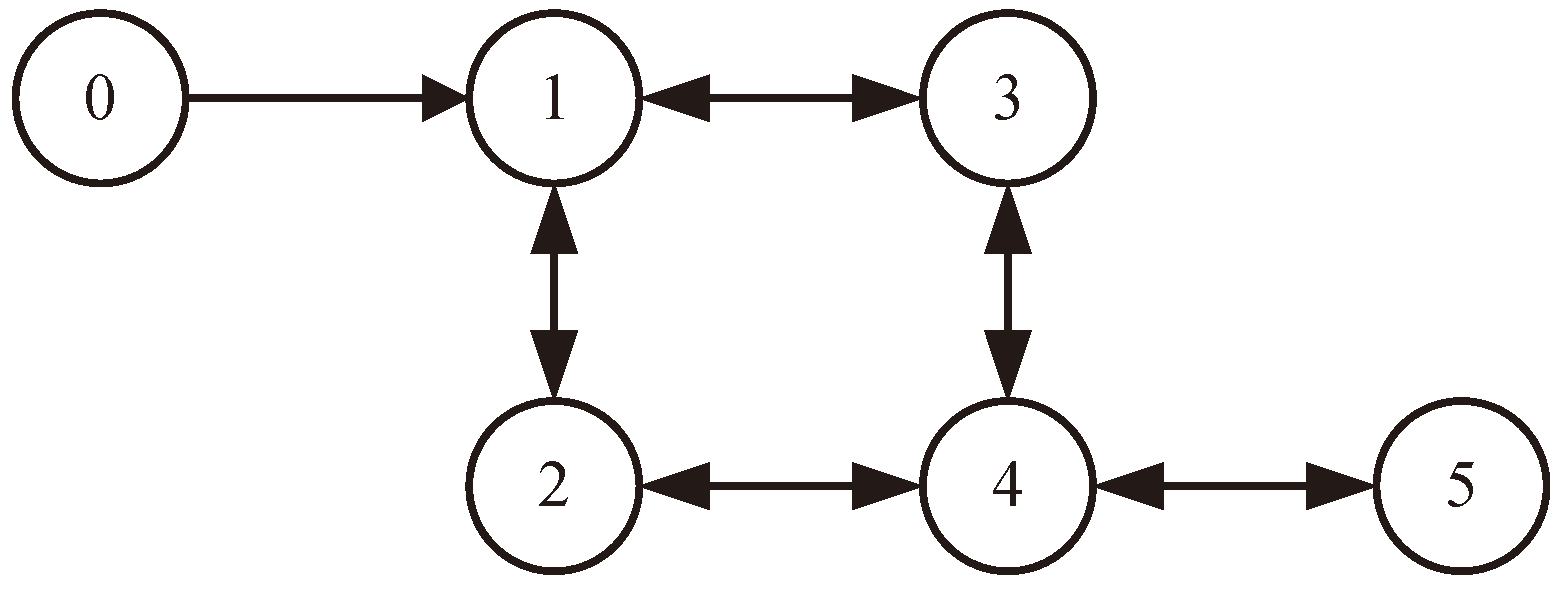
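Each of the three components above relies on fixed-time stability: convergence within a time bounded by a constant that does not depend on the initial condition. The following Python sketch illustrates that property on the standard scalar example dx/dt = -α sig(x)^p - β sig(x)^q, where sig(x)^r = |x|^r sign(x) and 0 < p < 1 < q, whose settling time is bounded by 1/(α(1-p)) + 1/(β(q-1)) according to a standard fixed-time stability lemma. The system, the gains α = β = 1, p = 1/2, q = 3/2, the Euler step, and the settling tolerance are illustrative assumptions; the paper's observers and controller are not reproduced here.

```python
import math


def sig(x, r):
    """sig(x)^r = |x|^r * sign(x), the usual notation in fixed-time control."""
    return math.copysign(abs(x) ** r, x)


def settling_time(x0, alpha=1.0, beta=1.0, p=0.5, q=1.5, dt=1e-4, tol=1e-6, t_max=10.0):
    """Forward-Euler integration of dx/dt = -alpha*sig(x)^p - beta*sig(x)^q.

    Returns the first time at which |x| < tol (an illustrative settling
    criterion), or raises if t_max is exceeded.
    """
    x, t = x0, 0.0
    while abs(x) >= tol:
        if t > t_max:
            raise RuntimeError("did not settle before t_max")
        x -= dt * (alpha * sig(x, p) + beta * sig(x, q))
        t += dt
    return t


def fixed_time_bound(alpha=1.0, beta=1.0, p=0.5, q=1.5):
    """Upper bound 1/(alpha*(1-p)) + 1/(beta*(q-1)) on the settling time."""
    return 1.0 / (alpha * (1.0 - p)) + 1.0 / (beta * (q - 1.0))


if __name__ == "__main__":
    bound = fixed_time_bound()
    for x0 in (1e-2, 1.0, 1e2, 1e4, -1e4):
        t = settling_time(x0)
        # For alpha = beta = 1, p = 1/2, q = 3/2 the exact settling time is
        # 2*atan(sqrt(|x0|)), which stays below pi for every x0.
        exact = 2 * math.atan(math.sqrt(abs(x0)))
        print(f"x0={x0:>9g}  T={t:.3f}  exact={exact:.3f}  bound={bound}")
```

The bound, equal to 4 for these gains, holds for x0 = 10^4 just as for x0 = 10^-2. For this particular choice the settling time also has the closed form 2·atan(sqrt(|x0|)), which never exceeds π, so the lemma's bound is conservative here.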

2. Problem Formulation

3. Adaptive Selective Disturbance Elimination Consensus Tracking

3.1. Distributed Fixed-Time Observer

3.2. Fixed-Time Disturbance Observer

3.3. Adaptive Selective Disturbance Elimination Backstepping Controller

3.3.1. Adaptive Selective Disturbance Elimination Backstepping Controller Design

3.3.2. Analysis of System Stability

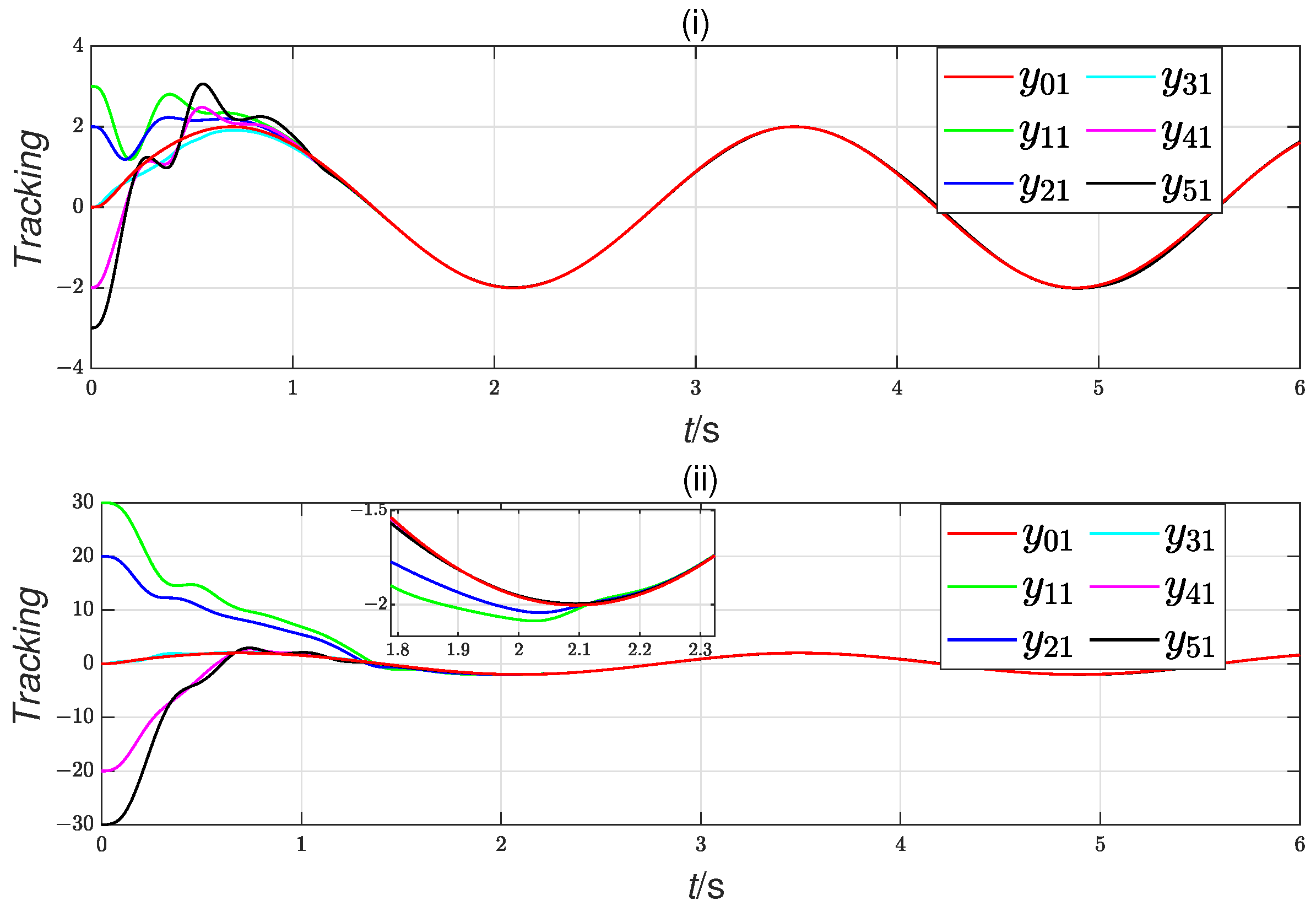

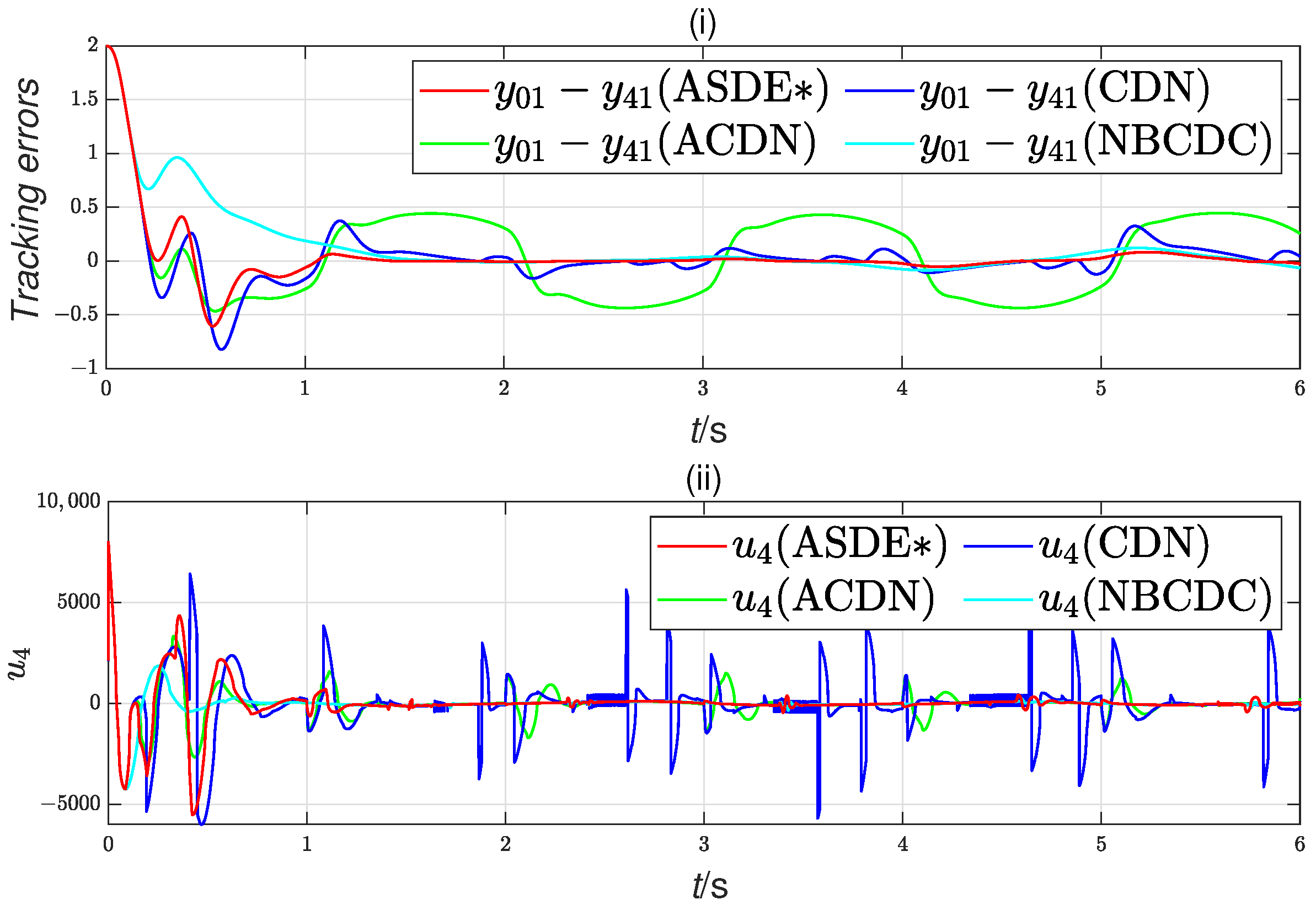

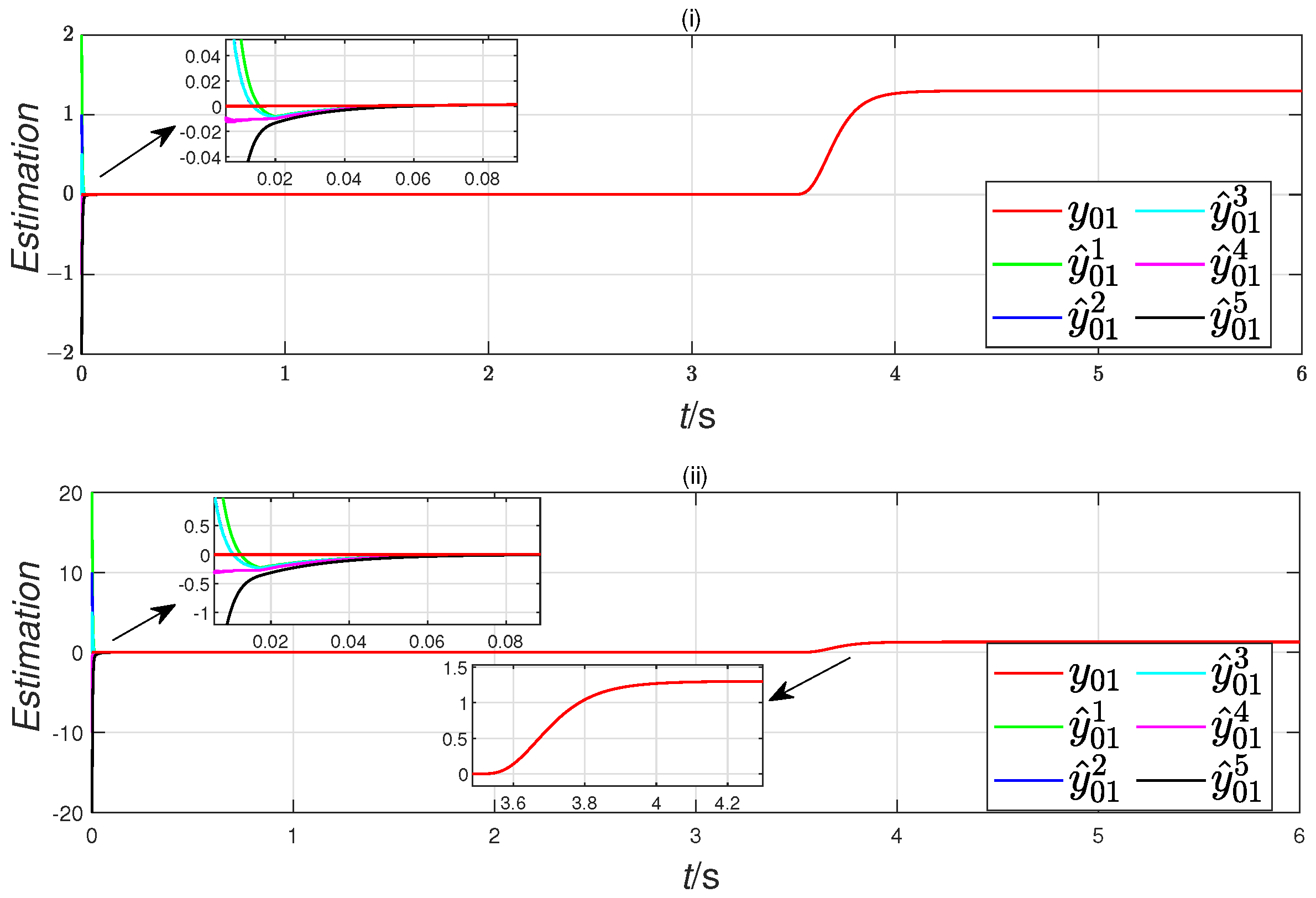

4. Simulation Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Olfati-Saber, R.; Murray, R. Consensus problems in networks of agents with switching topology and time-delays. IEEE Trans. Autom. Control 2004, 49, 1520–1533.

- Kim, Y.; Mesbahi, M. On maximizing the second smallest eigenvalue of a state-dependent graph Laplacian. IEEE Trans. Autom. Control 2006, 51, 116–120.

- Zeng, S.; Doan, T.T.; Romberg, J. Finite-time convergence rates of decentralized stochastic approximation with applications in multi-agent and multi-task learning. IEEE Trans. Autom. Control 2023, 68, 2758–2773.

- Mi, W.; Luo, L.; Zhong, S. Fixed-time consensus tracking for multi-agent systems with a nonholomonic dynamics. IEEE Trans. Autom. Control 2023, 68, 1161–1168.

- Wu, W.; Tong, S. Observer-based fixed-time adaptive fuzzy consensus DSC for nonlinear multiagent systems. IEEE Trans. Cybern. 2023, 53, 5881–5891.

- Hou, H.-Q.; Liu, Y.-J.; Lan, J.; Liu, L. Adaptive fuzzy fixed time time-varying formation control for heterogeneous multiagent systems with full state constraints. IEEE Trans. Fuzzy Syst. 2023, 31, 1152–1162.

- Guo, W.; Shi, L.; Sun, W.; Jahanshahi, H. Predefined-time average consensus control for heterogeneous nonlinear multi-agent systems. IEEE Trans. Circuits Syst. II Express Briefs 2023, 70, 2989–2993.

- Song, Y.; Ye, H.; Lewis, F.L. Prescribed-time control and its latest developments. IEEE Trans. Syst. Man, Cybern. Syst. 2023, 53, 4102–4116.

- Ren, W.; Beard, R. Consensus seeking in multiagent systems under dynamically changing interaction topologies. IEEE Trans. Autom. Control 2005, 50, 655–661.

- Li, Z.; Duan, Z.; Chen, G.; Huang, L. Consensus of multiagent systems and synchronization of complex networks: A unified viewpoint. IEEE Trans. Circuits Syst. I Regul. Pap. 2010, 57, 213–224.

- Yu, W.; Chen, G.; Cao, M. Some necessary and sufficient conditions for second-order consensus in multi-agent dynamical systems. Automatica 2010, 46, 1089–1095.

- Ding, Z. Consensus disturbance rejection with disturbance observers. IEEE Trans. Ind. Electron. 2015, 62, 5829–5837.

- Polyakov, A. Generalized Homogeneity in Systems and Control; Springer: Berlin/Heidelberg, Germany, 2020.

- Polyakov, A. Nonlinear feedback design for fixed-time stabilization of linear control systems. IEEE Trans. Autom. Control 2012, 57, 2106–2110.

- Polyakov, A.; Efimov, D.; Perruquetti, W. Finite-time and fixed-time stabilization: Implicit Lyapunov function approach. Automatica 2015, 51, 332–340.

- Zuo, Z.; Tie, L. A new class of finite-time nonlinear consensus protocols for multi-agent systems. Int. J. Control 2014, 87, 363–370.

- Zuo, Z.; Tie, L. Distributed robust finite-time nonlinear consensus protocols for multi-agent systems. Int. J. Syst. Sci. 2016, 47, 1366–1375.

- Zuo, Z.; Han, Q.-L.; Ning, B.; Ge, X.; Zhang, X.-M. An overview of recent advances in fixed-time cooperative control of multiagent systems. IEEE Trans. Ind. Inform. 2018, 14, 2322–2334.

- Chen, C.-C.; Sun, Z.-Y. Fixed-time stabilisation for a class of high-order non-linear systems. IET Control Theory Appl. 2018, 12, 2578–2587.

- Ning, B.; Han, Q.-L.; Zuo, Z.; Ding, L.; Lu, Q.; Ge, X. Fixed-time and prescribed-time consensus control of multiagent systems and its applications: A survey of recent trends and methodologies. IEEE Trans. Ind. Inform. 2023, 19, 1121–1135.

- Li, X.; Wen, C.; Wang, J. Lyapunov-based fixed-time stabilization control of quantum systems. J. Autom. Intell. 2022, 1, 100005.

- Wu, Z.; Ma, M.; Xu, X.; Liu, B.; Yu, Z. Predefined-time parameter estimation via modified dynamic regressor extension and mixing. J. Frankl. Inst. 2021, 358, 6897–6921.

- Andrieu, V.; Praly, L.; Astolfi, A. Homogeneous approximation, recursive observer design, and output feedback. SIAM J. Control Optim. 2008, 47, 1814–1850.

- Parsegov, S.; Polyakov, A.; Shcherbakov, P. Fixed-time consensus algorithm for multi-agent systems with integrator dynamics. IFAC Proc. Vol. 2013, 46, 110–115.

- Zuo, Z. Nonsingular fixed-time consensus tracking for second-order multi-agent networks. Automatica 2015, 54, 305–309.

- Fu, J.; Wang, J. Fixed-time coordinated tracking for second-order multi-agent systems with bounded input uncertainties. Syst. Control Lett. 2016, 93, 1–12.

- Afifa, R.; Ali, S.; Pervaiz, M.; Iqbal, J. Adaptive backstepping integral sliding mode control of a MIMO separately excited DC motor. Robotics 2023, 12, 105.

- Liu, Y.; Zhang, F.; Huang, P.; Lu, Y. Fixed-time consensus tracking for second-order multiagent systems under disturbance. IEEE Trans. Syst. Man, Cybern. Syst. 2021, 51, 4883–4894.

- Wang, J.; Chen, L.; Xu, Q. Disturbance estimation-based robust model predictive position tracking control for magnetic levitation system. IEEE/ASME Trans. Mechatronics 2022, 27, 81–92.

- Wang, F.; He, L. FPGA-based predictive speed control for PMSM system using integral sliding-mode disturbance observer. IEEE Trans. Ind. Electron. 2021, 68, 972–981.

- Xu, W.; Junejo, A.K.; Liu, Y.; Islam, M.R. Improved continuous fast terminal sliding mode control with extended state observer for speed regulation of PMSM drive system. IEEE Trans. Veh. Technol. 2019, 68, 10465–10476.

- Li, J.; Zhang, L.; Luo, L.; Li, S. Extended state observer based current-constrained controller for a PMSM system in presence of disturbances: Design, analysis and experiments. Control Eng. Pract. 2023, 132, 105412.

- Yuan, X.; Zuo, Y.; Fan, Y.; Lee, C.H.T. Model-free predictive current control of SPMSM drives using extended state observer. IEEE Trans. Ind. Electron. 2022, 69, 6540–6550.

- Yang, J.; Liu, X.; Sun, J.; Li, S. Sampled-data robust visual servoing control for moving target tracking of an inertially stabilized platform with a measurement delay. Automatica 2022, 137, 110105.

- Xu, B.; Zhang, L.; Ji, W. Improved non-singular fast terminal sliding mode control with disturbance observer for PMSM drives. IEEE Trans. Transp. Electrif. 2021, 7, 2753–2762.

- Wang, F.; He, L.; Rodríguez, J. A robust predictive speed control for SPMSM systems using a sliding mode gradient descent disturbance observer. IEEE Trans. Energy Convers. 2023, 38, 540–549.

- Hou, Q.; Ding, S. GPIO based super-twisting sliding mode control for PMSM. IEEE Trans. Circuits Syst. II Express Briefs 2021, 68, 747–751.

- Tan, X.; Hu, C.; Cao, G.; Wei, Q.; Li, W.; Han, B. Fixed-time antidisturbance consensus tracking for nonlinear multiagent systems with matching and mismatching disturbances. IEEE/CAA J. Autom. Sin. 2024, 11, 1410–1423.

- Pu, Z.; Sun, J.; Yi, J.; Gao, Z. On the principle and applications of conditional disturbance negation. IEEE Trans. Syst. Man, Cybern. Syst. 2021, 51, 6757–6767.

- Sun, J.; Pu, Z.; Yi, J. Conditional disturbance negation based active disturbance rejection control for hypersonic vehicles. Control Eng. Pract. 2019, 84, 159–171.

- Ren, C.; Jiang, H.; Mu, C.; Ma, S. Conditional disturbance negation based control for an omnidirectional mobile robot: An energy perspective. IEEE Robot. Autom. Lett. 2022, 7, 11641–11648.

- Kong, S.; Sun, J.; Wang, J.; Zhou, Z.; Shao, J.; Yu, J. Piecewise compensation model predictive governor combined with conditional disturbance negation for underactuated AUV tracking control. IEEE Trans. Ind. Electron. 2023, 70, 6191–6200.

- Sun, J.; Xu, S.; Ding, S.; Pu, Z.; Yi, J. Adaptive conditional disturbance negation-based nonsmooth-integral control for PMSM drive system. IEEE/ASME Trans. Mechatronics 2024, 29, 3602–3613.

- Sun, J.; Pu, Z.; Yi, J.; Liu, Z. Fixed-time control with uncertainty and measurement noise suppression for hypersonic vehicles via augmented sliding mode observers. IEEE Trans. Ind. Inform. 2020, 16, 1192–1203.

- Qian, C.; Lin, W. A continuous feedback approach to global strong stabilization of nonlinear systems. IEEE Trans. Autom. Control 2001, 46, 1061–1079.

- Li, Y.; Tong, S.; Liu, Y.; Li, T. Adaptive fuzzy robust output feedback control of nonlinear systems with unknown dead zones based on a small-gain approach. IEEE Trans. Fuzzy Syst. 2014, 22, 164–176.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Xiong, G.; Tan, X.; Cao, G.; Hong, X. Adaptive Selective Disturbance Elimination-Based Fixed-Time Consensus Tracking for a Class of Nonlinear Multiagent Systems. Electronics 2025, 14, 1503. https://doi.org/10.3390/electronics14081503
