1. Introduction

Dynamic range in photography refers to the spectrum of luminance variations from the darkest to the brightest parts of an image. Throughout human evolution, our visual system has developed a high dynamic range to adapt to varying environmental conditions, whether exposed to bright sunlight during the day or navigating in low-light environments at night. Burns and Baylor found that the instantaneous dynamic range of the human visual system spans five orders of magnitude, with overall luminance detection extending up to fourteen orders of magnitude following adaptive adjustments [1]. Traditional Standard Dynamic Range (SDR) displays exhibit limited detail and are unable to fully capture the visual fidelity sought by human perception. As display technology progresses, High Dynamic Range (HDR) technology is rapidly emerging as a preferred solution for achieving more realistic visual displays. The significant enhancement in peak brightness of HDR displays facilitates a more accurate replication of real-life scenes, closely mirroring human visual perception [2,3]. In addition to hardware factors such as peak brightness, control accuracy, and device characteristics, the transfer function also plays a crucial role in determining the final display effect.

The transfer function is extensively employed in image acquisition and display, illustrating the relationship between actual brightness and digital signals. Acquisition equipment employs the Opto-Electronic Transfer Function (OETF) to transform actual brightness into digital signals, whereas display terminals utilize the Electro-Optical Transfer Function (EOTF) to convert digital signals into optical signals for screen presentation [4]. The design of the EOTF directly affects the level of quantization noise, potentially leading to color banding and artifacts that resemble contour lines. These imperfections introduce noticeable discontinuities in brightness or color within the otherwise smooth transitions in the image [5].

Figure 1 demonstrates an extreme case of quantization noise, where discrepancies between the luminance mapping of the EOTF and human visual perception lead to pseudo-contours in otherwise smooth regions. To mitigate these artifacts, the nonlinear characteristics of the EOTF must align with human visual perception.

The SDR system employs a transfer function known as ‘gamma’, with the nonlinear mapping it achieves referred to as ‘gamma correction’. It is widely accepted that ‘gamma’ originates from the Stevens power law [6]. The ‘gamma’ value typically ranges from 2.2 to 3.0 depending on the display material. In standards such as ITU-R BT.709 [7] and ITU-R BT.1886 [8], the OETF is defined with a gamma value of 0.45, while the EOTF is defined with a gamma value of 2.4. However, for HDR displays, ‘gamma’ alone is insufficient. With an increased dynamic range, a higher bit depth is necessary to effectively expand the luminance range; otherwise, visible artifacts may emerge [9].
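As a minimal illustration of the gamma pair described above, the sketch below uses a pure power law with the exponents 0.45 (encoding) and 2.4 (decoding) on signals normalized to [0, 1]. It is a simplification: details that the standards add on top of the exponents (such as special handling near black) are omitted, and the function names are illustrative rather than taken from any standard.

```python
def gamma_oetf(scene_light: float, gamma: float = 0.45) -> float:
    """Encode normalized scene light (0..1) into a normalized signal."""
    return max(scene_light, 0.0) ** gamma


def gamma_eotf(signal: float, gamma: float = 2.4) -> float:
    """Decode a normalized signal (0..1) into normalized display light."""
    return max(signal, 0.0) ** gamma


def quantize(signal: float, bits: int = 8) -> int:
    """Map a normalized signal to an integer code (full range assumed)."""
    levels = (1 << bits) - 1
    return round(min(max(signal, 0.0), 1.0) * levels)


if __name__ == "__main__":
    for light in (0.01, 0.18, 0.5, 1.0):
        signal = gamma_oetf(light)
        print(light, quantize(signal), gamma_eotf(signal))
```

Chaining the two functions gives an end-to-end exponent of 0.45 × 2.4 = 1.08, so the displayed light is not an exact copy of the scene light even before quantization.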

Numerous studies have explored the optimal alignment between display terminal characteristics and input digital signals to support HDR systems. The UK’s BBC and Japan’s NHK have jointly introduced Hybrid Log-Gamma (HLG) to meet the requirements of HDR technology in digital TV broadcasting. HLG is notable for its backward compatibility with SDR content, enabling HLG content to deliver a more vibrant and dynamic visual experience on existing SDR display devices [10,11].

Miller et al. introduced an EOTF known as Perceptual Quantizer (PQ), which is derived from Barten’s Contrast Sensitivity Function (CSF). This EOTF effectively quantizes luminance levels up to 10,000 cd/m² using a 12-bit integer depth [12]. Due to compatibility issues with current consumer electronics, the 10-bit PQ is more commonly used as the EOTF for HDR displays to conserve resources [13]. PQ was standardized by the Society of Motion Picture and Television Engineers (SMPTE) in 2014 and is detailed in SMPTE ST-2084 [14]. Currently, both PQ and HLG are standardized by the International Telecommunication Union (ITU) and described in ITU-R BT.2100 [15]. Although 10-bit PQ has proven effective in many scenarios, distortion may still occur, particularly in high dynamic range conditions and low-brightness scenes where contrast information may not be fully preserved [12].

Yi Liu proposed a multi-modeling Electro-Optical Transfer Function [4] to enhance the 10-bit PQ. However, that method determines its parameter from the average brightness of the content, which still carries the risk of visible artifacts for scenes with a considerable luminance span. Subsequently, Azimi et al. proposed the PU21 encoding function [16] based on perceptual optimization. It is important to note that the PU21 framework was originally designed for quality assessment of HDR video content rather than for the brightness mapping requirements of display terminal devices. The method employs a floating-point numerical encoding scheme, which poses significant constraints at the engineering implementation level. Because current display terminal devices typically use a brightness mapping method based on a lookup table (LUT), converting the floating-point encoding of PU21 to an integer format will inevitably introduce quantization errors, resulting in the loss of image details and breaks in color gradation. From the perspective of display system engineering, the PU21 framework therefore has considerable limitations in terms of adaptability to terminal devices. Thus, developing a new luminance mapping approach based on the CSF is crucial to enhance contrast retention and optimize the display quality of HDR content.
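For reference, the PQ curve discussed above can be sketched in a few lines. The constants below are the ones published in SMPTE ST 2084; the full-range 10-bit quantization (codes 0 to 1023) and the function names are assumptions made for illustration, and real video pipelines often use a narrower code range.

```python
# PQ constants as published in SMPTE ST 2084.
M1 = 2610 / 16384        # 0.1593017578125
M2 = 2523 / 4096 * 128   # 78.84375
C1 = 3424 / 4096         # 0.8359375
C2 = 2413 / 4096 * 32    # 18.8515625
C3 = 2392 / 4096 * 32    # 18.6875
PEAK = 10000.0           # cd/m^2


def pq_eotf(signal: float) -> float:
    """Normalized PQ signal (0..1) -> absolute luminance in cd/m^2."""
    e = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    return PEAK * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def pq_inverse_eotf(luminance: float) -> float:
    """Absolute luminance in cd/m^2 -> normalized PQ signal (0..1)."""
    y = (min(max(luminance, 0.0), PEAK) / PEAK) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def to_code(luminance: float, bits: int = 10) -> int:
    """Quantize luminance to an integer PQ code (full range assumed)."""
    return round(pq_inverse_eotf(luminance) * ((1 << bits) - 1))


if __name__ == "__main__":
    for nits in (0.01, 1.0, 100.0, 1000.0, 10000.0):
        print(nits, to_code(nits, 10), to_code(nits, 12))
```

Printing the 10-bit and 12-bit codes side by side shows how many fewer codewords the 10-bit variant has available for the same luminance span.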

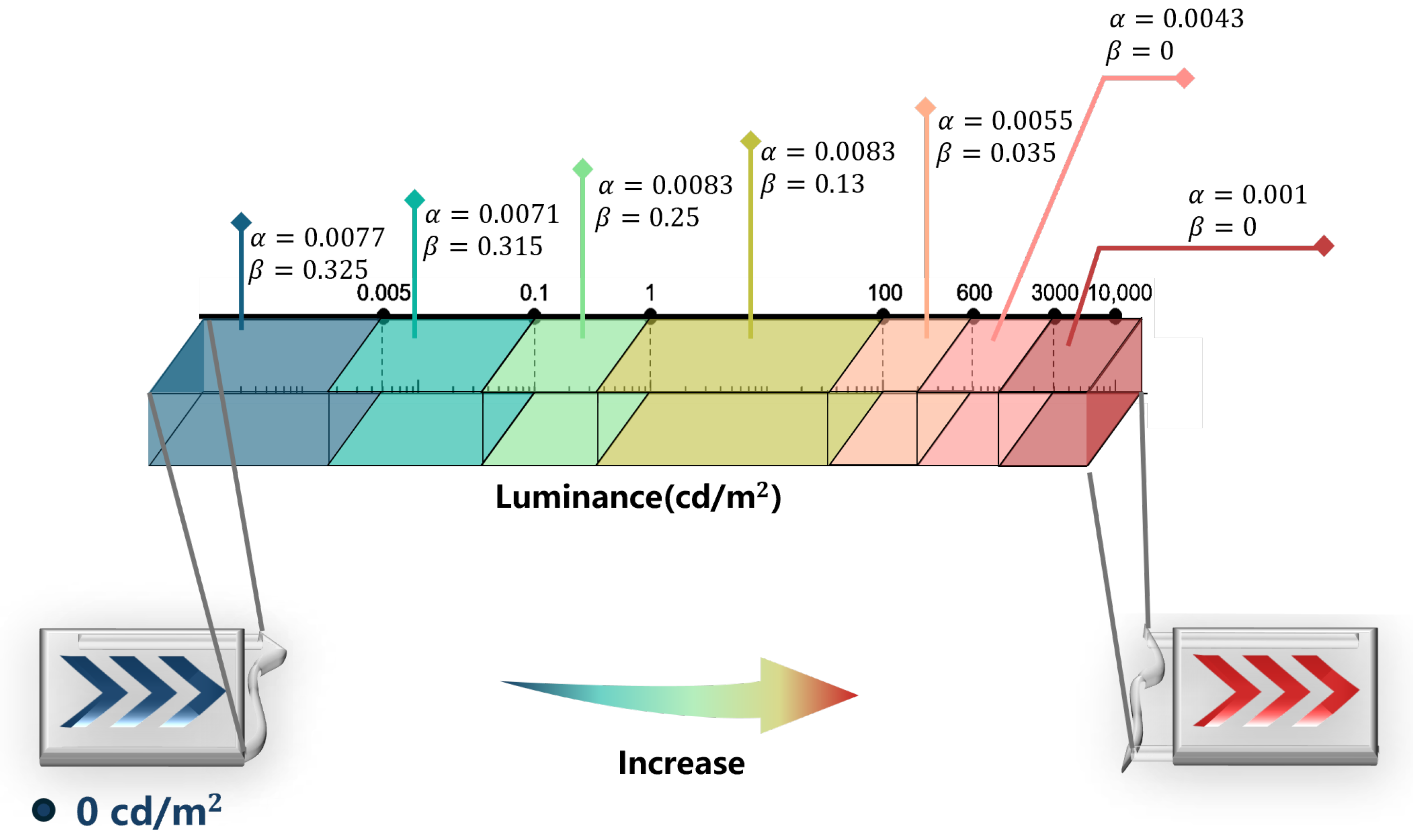

This paper examines the discrepancy in accuracy between the discrete relationship directly derived by Miller et al. [12] using Barten’s Contrast Sensitivity Function and the digital signal sampled after fitting to the PQ EOTF. To address the limitations of 10-bit PQ, we propose a solution that segments the entire brightness range into multiple intervals, incorporates adaptive adjustment factors specific to each interval, and modifies the JND fraction with different parameters to establish a revised mapping relationship. Notably, this new mapping relationship requires a bit depth of only 10 bits. To assess its effectiveness in the HDR display process, the evaluation method outlined in ITU BT.2246 [17] was employed to predict and assess display results by comparing reproducible contrast. Additionally, the display effect was objectively evaluated through tPSNR and HDR-VDP-3 [18,19]. The results indicate that the mapping relation suggested in this study preserves contrast information more effectively than the standard 10-bit PQ. This helps address issues such as color banding and artifacts that can arise when displaying dark scenes, ultimately enhancing the visual quality of HDR content.

The remainder of this paper is structured as follows: Section 2 discusses the application of human visual perception and contrast in the classical EOTF while outlining the proposed mapping relationship and the corresponding computational procedure. Section 3 presents the experimental results and compares them with those of the classical EOTF. Finally, Section 4 concludes the paper.

3. Results and Discussions

This section verifies and discusses the performance of the proposed mapping relationship. To assess the effectiveness of the proposed mapping on HDR display, we implement it on HDR video sequences for objective evaluation. The comparison is made between 10-bit PQ, multi-PQ as proposed in Ref. [

4], and the mapping suggested in this paper. Given the article’s focus on addressing potential artifacts of 10-bit PQ in display terminals, the minimum detectable contrast outlined in the ITU BT.2246 report serves as the primary evaluation criterion, as illustrated in

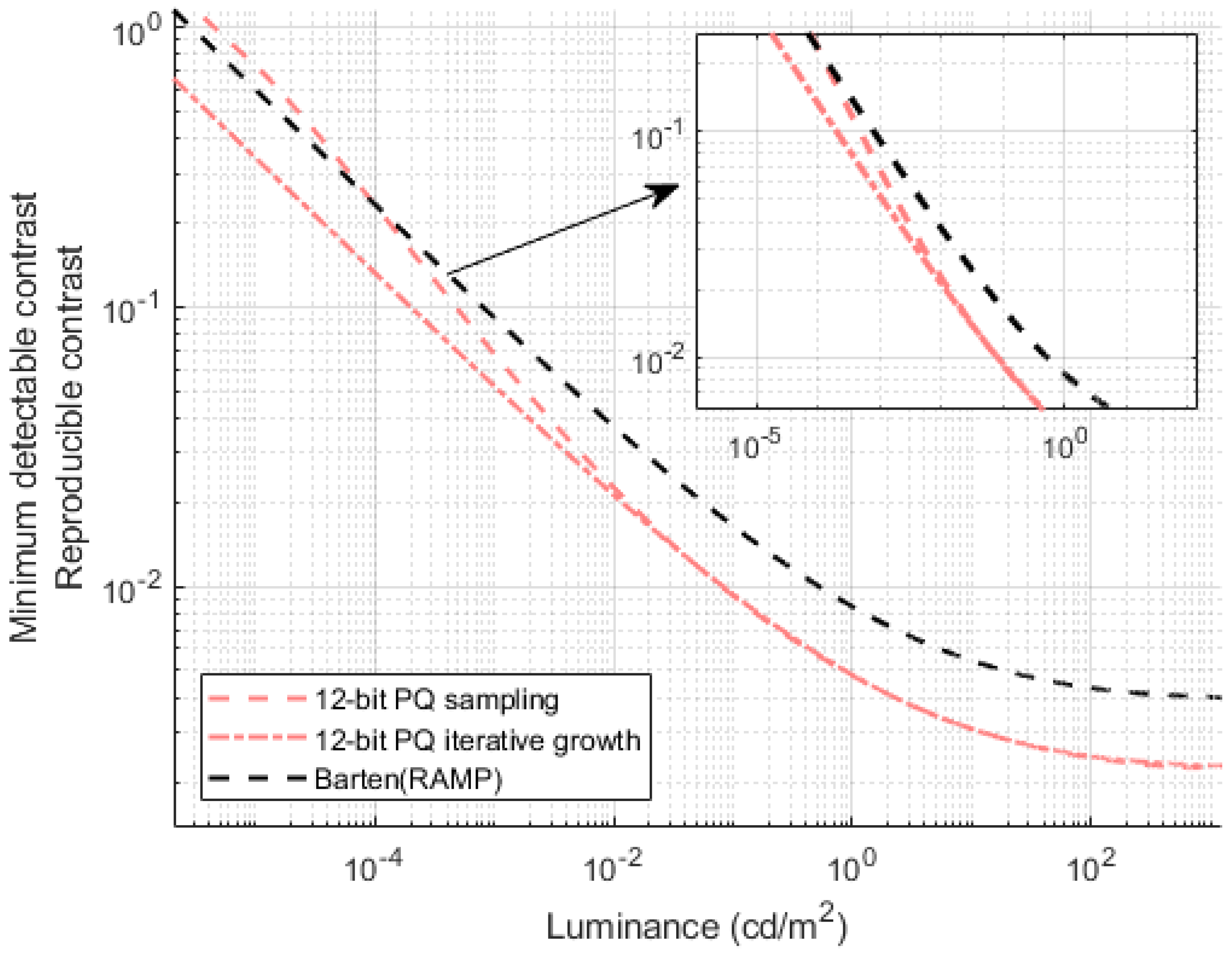

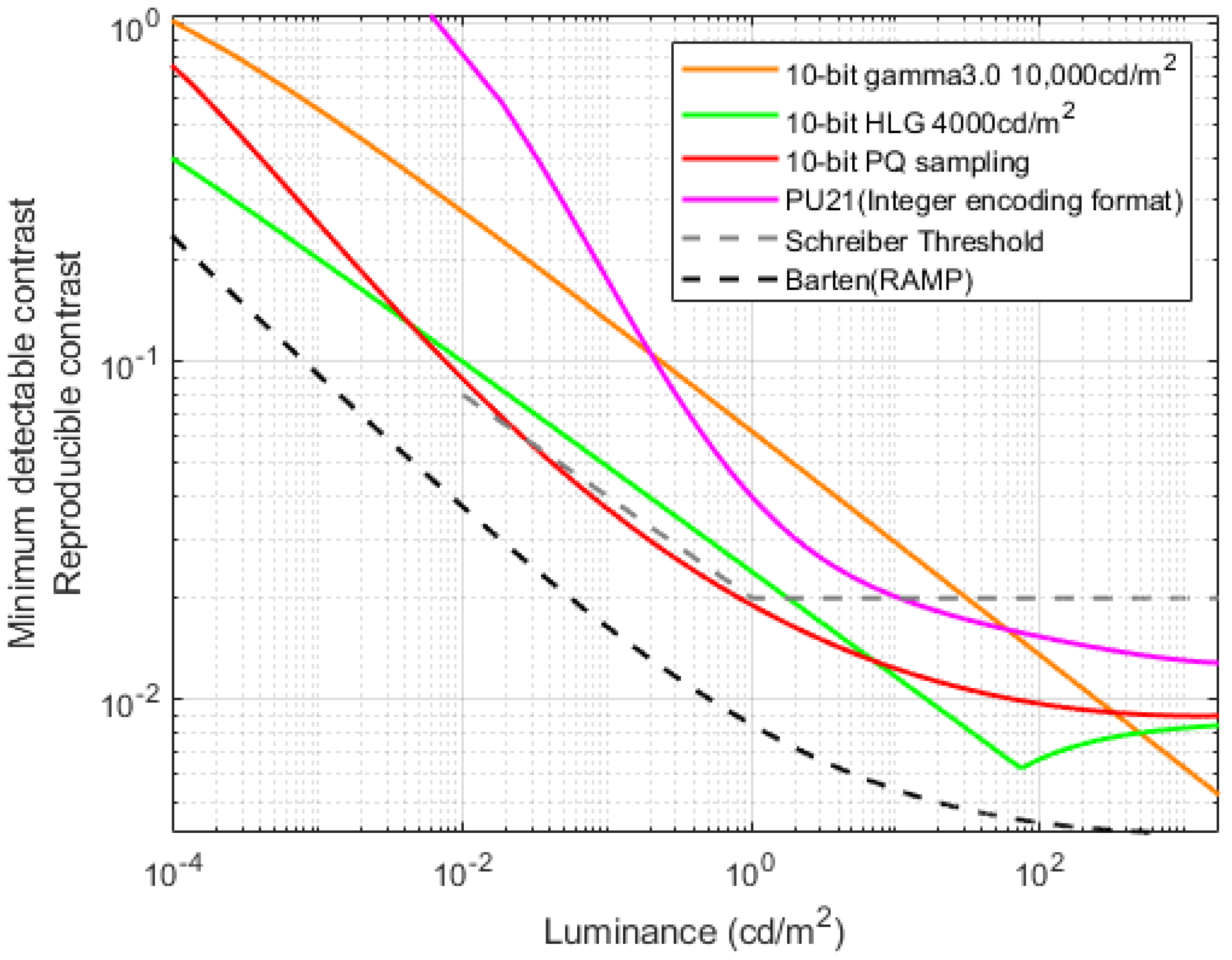

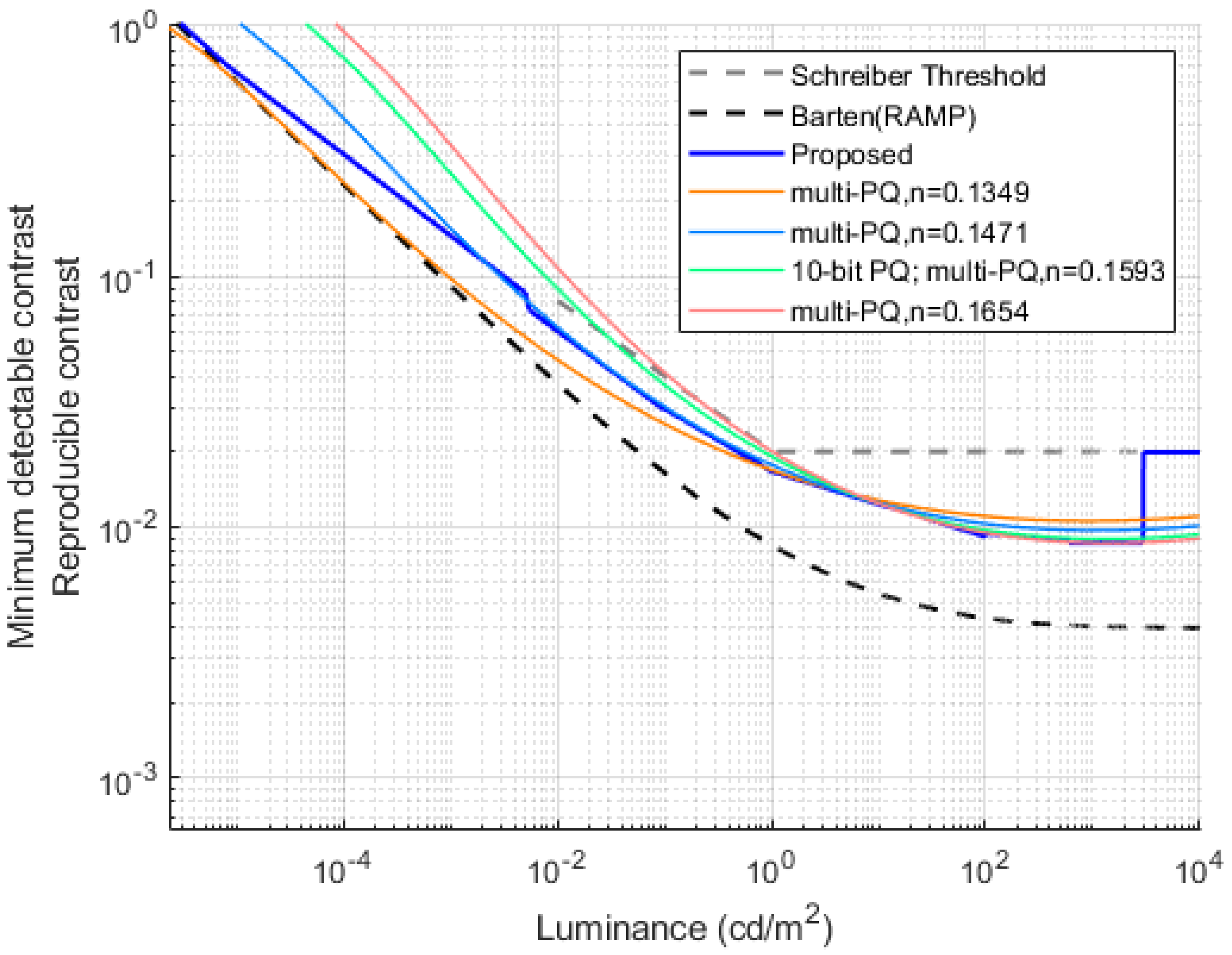

Figure 7.

The horizontal axis in Figure 7 represents absolute luminance (cd/m²), while the vertical axis denotes Minimum Detectable Contrast and Reproducible Contrast. Minimum Detectable Contrast refers to the smallest luminance difference perceivable by the human eye under specific conditions, namely the Schreiber threshold and Barten (RAMP) threshold, both derived from distinct human vision models. Its value is determined by the Contrast Sensitivity Function, which depends on spatial frequency, luminance, viewing angle, and other factors. A lower value implies greater sensitivity of the human eye to subtle differences, necessitating more stringent system requirements. The Barten (RAMP) threshold is highly stringent, as it considers spatial frequency in extreme conditions, rendering luminance differences below this threshold imperceptible to the human eye. The Schreiber threshold, on the other hand, is less stringent and represents the upper limit at which the human eye perceives a comfortable luminance difference. Luminance differences exceeding the Schreiber threshold are perceived as visual artifacts, causing discomfort to the human eye. Due to multiple influencing factors, the Schreiber threshold has been adopted as the standard for HDR displays to optimize resource utilization and is widely applied in visual systems, including broadcasting and television.

Reproducible Contrast refers to the contrast that a display device or system can effectively reproduce. To meet industry standards and ensure high-quality visual performance, the Reproducible Contrast of a system must always remain below the Schreiber threshold. Additionally, the closer the Reproducible Contrast is to the Barten (RAMP) threshold, the more refined and accurate the display quality becomes. However, due to limitations in quantization precision, achieving the Barten (RAMP) threshold requires at least 11-bit precision, which exceeds the capability of current mainstream display systems. Therefore, to mitigate the risk of artifacts under the constraint of 10-bit precision, it is essential to optimize for human visual perception and reallocate precision across different luminance levels.
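To make the notion of reproducible contrast concrete, the following sketch estimates it for PQ at several luminance levels and bit depths. It assumes that reproducible contrast can be approximated by the relative luminance step between two adjacent code values, (L[k+1] − L[k]) / L[k], with full-range quantization; the exact procedure in ITU BT.2246 may differ in detail. The PQ constants are those of SMPTE ST 2084, and the helper names are illustrative.

```python
# PQ constants as published in SMPTE ST 2084.
M1, M2 = 2610 / 16384, 2523 / 4096 * 128
C1, C2, C3 = 3424 / 4096, 2413 / 4096 * 32, 2392 / 4096 * 32
PEAK = 10000.0  # cd/m^2


def pq_eotf(signal: float) -> float:
    """Normalized PQ signal (0..1) -> luminance in cd/m^2."""
    e = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    return PEAK * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def pq_inverse_eotf(luminance: float) -> float:
    """Luminance in cd/m^2 -> normalized PQ signal (0..1)."""
    y = (min(max(luminance, 0.0), PEAK) / PEAK) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def contrast_step(luminance: float, bits: int = 10) -> float:
    """Relative luminance step between the two codes nearest `luminance`."""
    levels = (1 << bits) - 1
    code = round(pq_inverse_eotf(luminance) * levels)
    code = min(max(code, 1), levels - 1)  # keep both neighbours in range
    low = pq_eotf(code / levels)
    high = pq_eotf((code + 1) / levels)
    return (high - low) / low


if __name__ == "__main__":
    for nits in (0.01, 0.1, 1.0, 100.0, 1000.0):
        steps = [contrast_step(nits, b) for b in (10, 11, 12)]
        print(nits, ["%.3f%%" % (100 * s) for s in steps])
```

Comparing the printed steps against a threshold curve would reproduce the kind of check shown in Figure 7; the threshold values themselves are not included here because they are not given in this section.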

The multi-PQ method selects parameters to mitigate artifacts based on the average luminance of each image frame. However, as shown in Figure 7, the reproducible contrast at low luminance levels still exceeds the Schreiber threshold at n = 0.1593 and n = 0.1654. This limitation arises because multi-PQ relies solely on average luminance as a selection criterion, overlooking individual pixel variations that can lead to suboptimal parameter choices. Additionally, the mean luminance value may be skewed by extreme luminance levels, failing to accurately represent the overall distribution. This issue becomes particularly pronounced in HDR images, potentially leading to display artifacts when using multi-PQ.

Figure 7 further demonstrates that both PQ and multi-PQ exhibit high accuracy with some redundancy at luminance levels above 1 cd/m². Since 3000 cd/m² satisfies 90% of viewers’ diffuse white light requirements [24] and the dazzling regions of an image typically occupy a small proportion of pixels, the proposed solution involves increasing the Just Noticeable Difference (JND) fraction through parameter tuning. This adjustment reduces quantization precision in dazzling intervals, enabling more efficient codeword redistribution and optimizing the overall performance of the mapping function. By maintaining the JND fraction within acceptable boundaries, the luminance mapping strategy proposed in this study ensures that the reproducible contrast remains below the Schreiber threshold, effectively minimizing the artifacts that can appear in 10-bit PQ display results. Furthermore, the method leverages coding redundancy in high-luminance scenes while preserving a 10-bit depth in the final mapping function.

In this study, HDR content is processed according to the method illustrated in

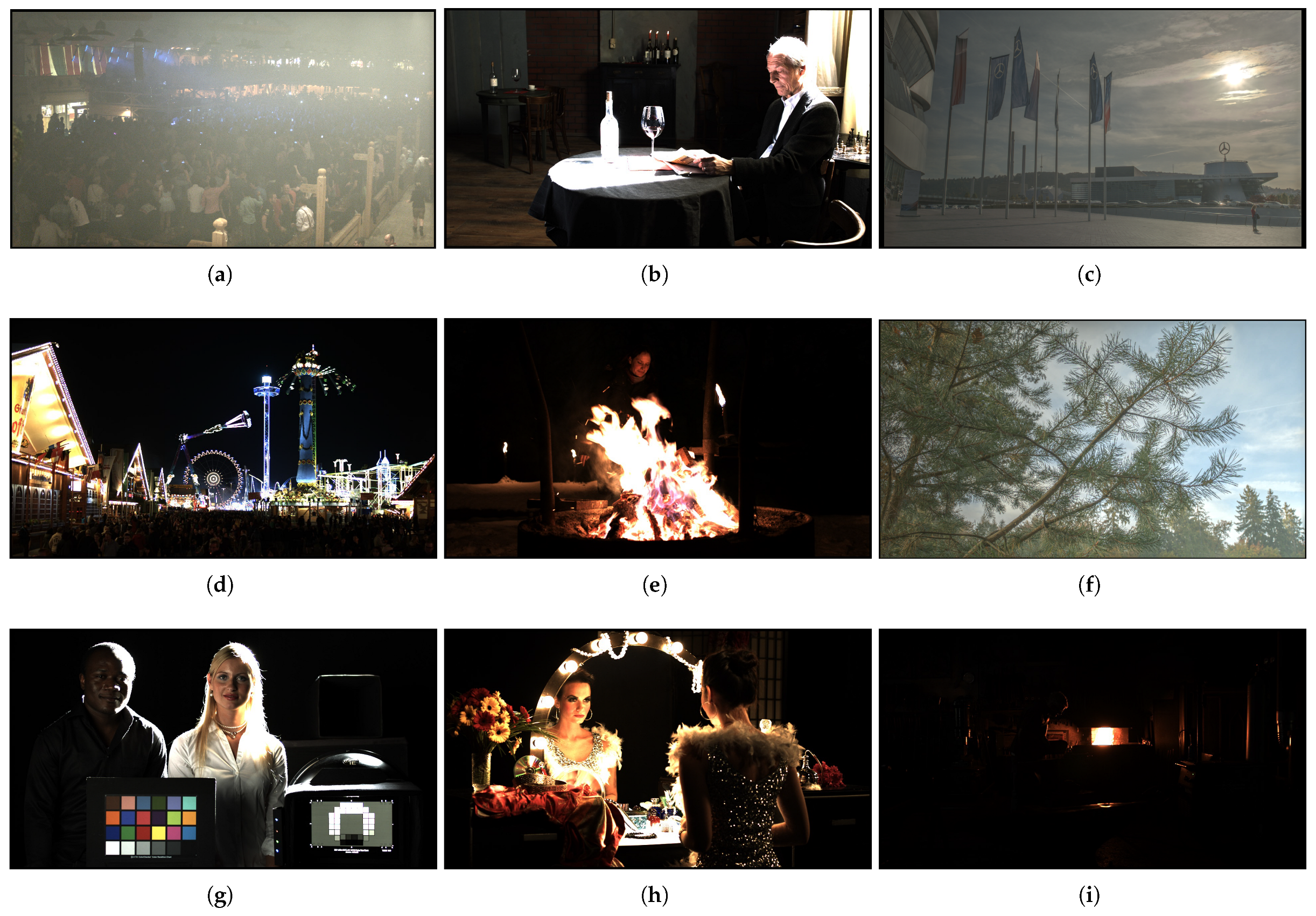

Figure 8. The test sequences employed in this study are stored as individual-frame images in OpenEXR format, which supports 32-bit floating-point pixel color values and facilitates scene-referred linear data recording. Each pixel’s color value is treated as an accurate representation of the scene. Specifically, we incorporate the 10-bit PQ, multi-PQ, and the mapping relationship introduced in this study into the OETF and EOTF, respectively. To objectively assess the output results, we use HDR-VDP-3.07 as a reference, offering empirical validation for the effectiveness of the mappings proposed in this study. Signal transmission and video compression effects on HDR content are not considered, as the focus is solely on display purposes. The HDR video sequence used for verification is detailed in

Table 1, and the tone mapping of the first frame is illustrated in

Figure 9.

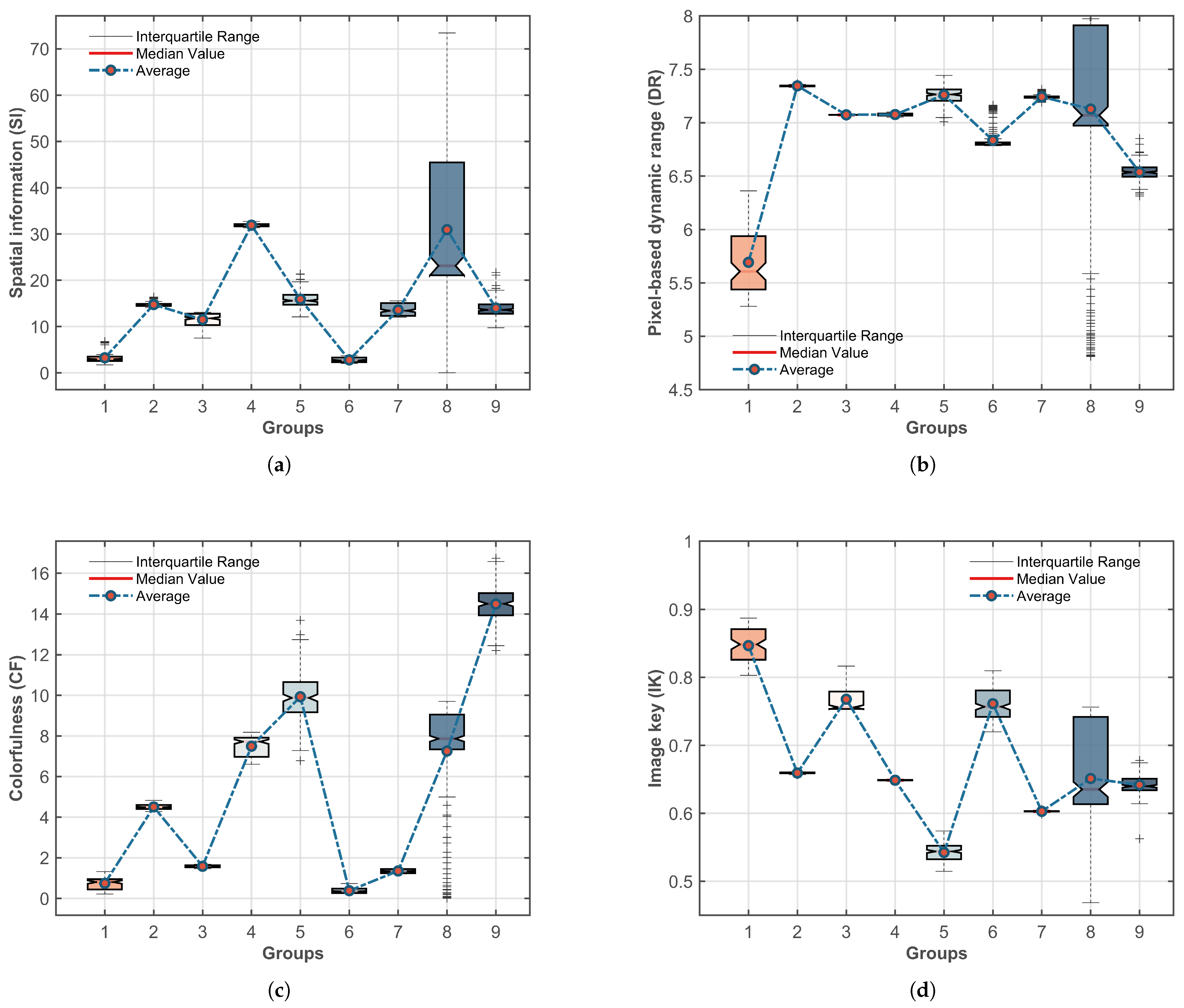

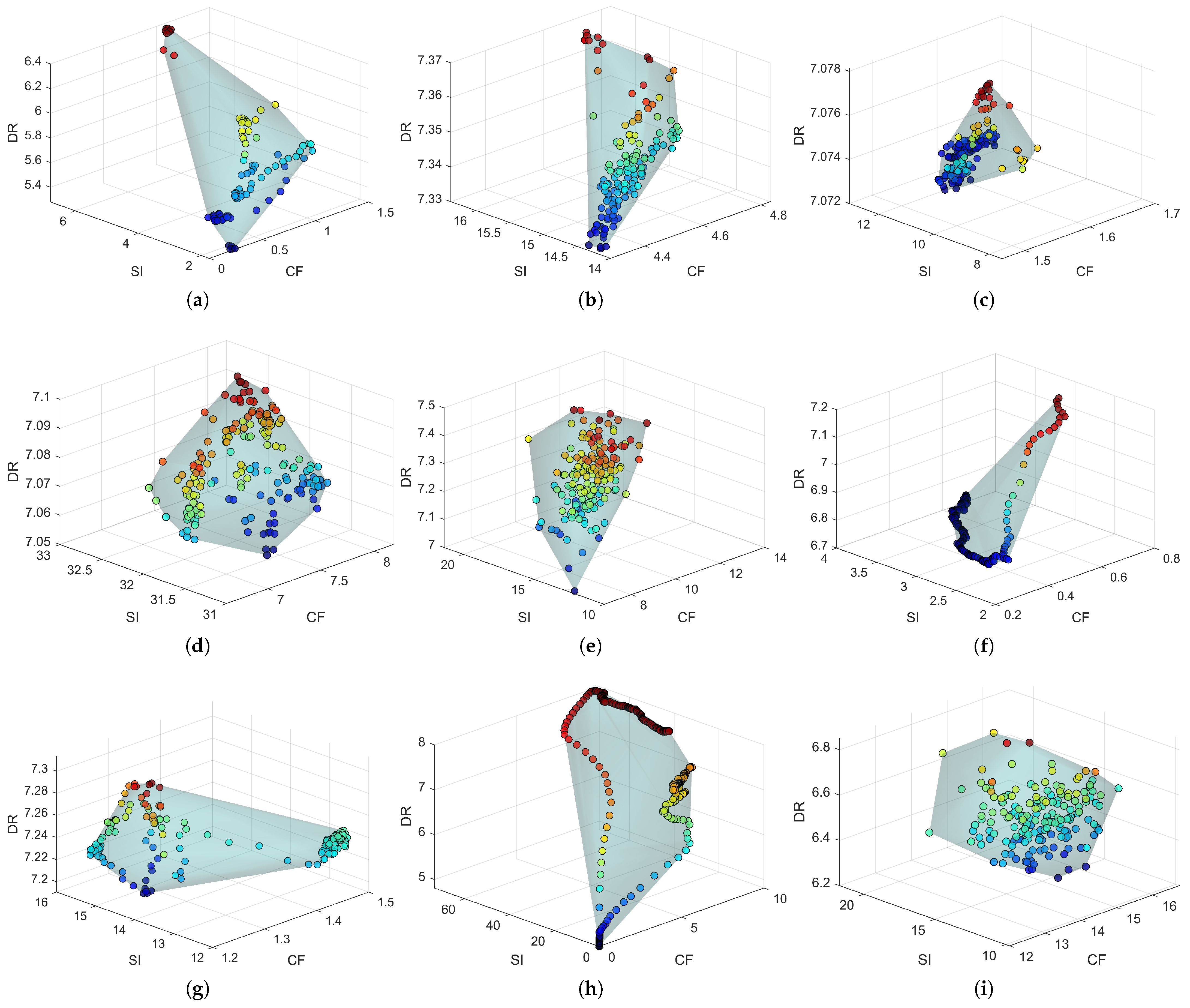

To investigate the performance of the proposed mapping relation under the influence of different scene types, illumination conditions, edge complexity, and color variations, this paper analyzes the video sequences using parameters such as Spatial Information (SI), Pixel-based Dynamic Range (DR), Colorfulness (CF), and Image Key (IK) [25,26]. The statistical information of the sequence parameters is presented in the box plot in Figure 10, while the 3D joint distribution of SI, CF, and DR is illustrated in Figure 11. Additionally, this study employs tPSNR to quantify temporal differences between frames, assessing temporal consistency during the display process, with results presented in Table 2. To prevent tPSNR from becoming infinite when tMSE is 0, the maximum tPSNR value is capped at 100.
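The capping rule can be sketched as follows. This is only the final PSNR step with the cap applied; the complete tPSNR pipeline (including any conversion of the frames before the error is computed) is not reproduced, and the normalized peak value of 1.0 and the function names are assumptions for illustration.

```python
import math


def mean_squared_error(frame_a, frame_b):
    """Mean squared error between two equally sized flat pixel sequences."""
    if len(frame_a) != len(frame_b) or not frame_a:
        raise ValueError("frames must be non-empty and of equal size")
    return sum((a - b) ** 2 for a, b in zip(frame_a, frame_b)) / len(frame_a)


def capped_psnr(mse: float, peak: float = 1.0, cap: float = 100.0) -> float:
    """PSNR in dB, limited to `cap` so that a zero error stays finite."""
    if mse <= 0.0:
        return cap
    return min(cap, 10.0 * math.log10(peak ** 2 / mse))


if __name__ == "__main__":
    identical = mean_squared_error([0.2, 0.4], [0.2, 0.4])
    different = mean_squared_error([0.2, 0.4], [0.3, 0.3])
    print(capped_psnr(identical), capped_psnr(different))
```

Without the cap, identical frames would yield an infinite value and distort any average taken over a sequence.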

Table 1. Introduction to HDR test sequence.


| HDR Test Sequences | Resolution (Width × Height) | Frames Encoded | Average DR (f-Stops) | Source/Copyrights |
|---|---|---|---|---|
| Beerfest lightshow 01 | 1920 × 1080 | 1591–1684 | 28.34 | HDM [27] |
| Bistro 01 | 1920 × 1080 | 296–445 | 29.31 | HDM [27] |
| Cars longshot | 1920 × 1080 | 658–837 | 29.15 | HDM [27] |
| Carousel fireworks 01 | 1920 × 1080 | 1187–1366 | 29.31 | HDM [27] |
| Fireplace 02 | 1920 × 1080 | 319–498 | 29.42 | HDM [27] |
| Fishing longshot | 1920 × 1080 | 445–624 | 23.72 | HDM [27] |
| Hdr test | 1920 × 1080 | 1191–1370 | 28.84 | HDM [27] |
| Show girl 02 | 1920 × 1080 | 348–688 | 28.50 | HDM [27] |
| Smith welding | 1920 × 1080 | 1163–1342 | 29.14 | HDM [27] |

The box plots in Figure 10 illustrate the distribution patterns of the HDR video sequence characteristics across four dimensions, revealing significant differences among the data. In the SI dimension, Beerfest lightshow 01, Bistro 01, Carousel fireworks 01, and Fishing longshot exhibit narrower interquartile spacing, with the median closely aligning with the mean, indicating that these sequences are spatially stable, corresponding to static scenes or content with minimal texture variation. In contrast, the SI distribution of Show girl 02 exhibits a significant positive skew and a broader interquartile range, reflecting large variations in intra-frame complexity and a more dispersed distribution of SI values. This suggests that the sequence includes both high-complexity frames (e.g., dense edges) and low-complexity frames (e.g., extensive smoothing), with certain frames exhibiting extreme complexity, leading to an SI mean greater than the median. Additionally, Beerfest lightshow 01, Bistro 01, Fireplace 02, and Smith welding contain significant outliers, indicating that certain frames exhibit substantially higher complexity than the rest of the sequence.

Pixel-based Dynamic Range refers to the dynamic range measured after excluding the top 1% of extreme pixel values. Its distribution characteristics indicate that most sequences exhibit low dispersion and high dynamic range stability. With the exception of Beerfest lightshow 01 and Show girl 02, the remaining sequences exhibit minimal intra-sequence dynamic range variation. The brightness coverage is both strong and stable, typical of high-contrast scenes (e.g., backlit or heavily shaded environments). However, significant outliers are observed in the DR distributions of Show girl 02, Fishing longshot, and Smith welding, indicating that certain frames may experience dynamic range compression due to exposure imbalance. Beerfest lightshow 01 exhibits a significantly larger interquartile range in the DR dimension compared to other sequences, except for Show girl 02. Its distribution demonstrates positive skewness, indicating substantial dynamic range variation, with certain frames containing extreme luminance values (e.g., brief exposure to laser lights at night).
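One possible reading of this definition is sketched below: the brightest 1% of pixels is discarded and the dynamic range is reported in f-stops as the base-2 logarithm of the ratio between the remaining maximum and the smallest positive luminance. The treatment of the dark end, the exclusion of non-positive values, and the function name are assumptions; the cited definition may handle these details differently.

```python
import math


def pixel_dynamic_range(luminances, exclude_top: float = 0.01) -> float:
    """Dynamic range in f-stops after dropping the brightest pixels."""
    values = sorted(v for v in luminances if v > 0.0)
    if not values:
        raise ValueError("need at least one positive luminance value")
    drop = round(len(values) * exclude_top)   # number of brightest pixels removed
    kept = values[: max(1, len(values) - drop)]
    return math.log2(kept[-1] / kept[0])


if __name__ == "__main__":
    # 100 pixels: one very dark, one extreme highlight, the rest mid-level.
    frame = [0.01] + [1.0] * 98 + [10000.0]
    print(pixel_dynamic_range(frame))        # highlight excluded
    print(pixel_dynamic_range(frame, 0.0))   # highlight included
```

The example frame shows why the exclusion matters: a single extreme highlight would otherwise dominate the measured range.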

In the CF dimension, sequences Fireplace 02, Show girl 02, and Smith welding exhibit significant outliers, indicating substantial frame-to-frame variations in color richness. Notably, the CF distribution of Show girl 02 reveals a lower anomaly, suggesting the presence of extremely low-saturation content within certain frames. When combined with the findings from the SI and DR analyses, these anomalies can be attributed to exposure-related issues. Furthermore, the pronounced distribution differences across sequences highlight the strong color variability of the source content.

IK is used to quantify the overall luminance distribution of a video. Higher IK values indicate that the video is predominantly composed of highlights, whereas lower values suggest dominance by low-light environments. In both Show girl 02 and Cars longshot, the IK distribution exhibits bias, typically caused by the coexistence of localized bright and dark regions, which is characteristic of scenes with alternating light and shadow. Additionally, Show girl 02 presents a significant lower anomaly, indicating the presence of underexposed frames. Meanwhile, Beerfest lightshow 01 has a high median IK value of 0.85, which, when analyzed alongside the results from other dimensions, suggests that this sequence corresponds to a high-exposure scene with relatively low contrast and reduced image detail.
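The source does not state the IK formula. A commonly used definition of image key, assumed here, places the mean log-luminance between the minimum and maximum log-luminance, which yields a value between 0 (dark-dominated) and 1 (bright-dominated). The small floor `eps` and the value returned for a perfectly uniform frame are illustrative choices.

```python
import math


def image_key(luminances, eps: float = 1e-6) -> float:
    """Position of the mean log-luminance between the log extremes (0..1)."""
    logs = [math.log(max(v, eps)) for v in luminances]
    if not logs:
        raise ValueError("need at least one luminance value")
    low, high = min(logs), max(logs)
    if high == low:
        return 0.5  # uniform frame: key is undefined, return the midpoint
    return (sum(logs) / len(logs) - low) / (high - low)


if __name__ == "__main__":
    mostly_dark = [1.0, 1.0, 1.0, 100.0]
    mostly_bright = [1.0, 100.0, 100.0, 100.0]
    print(image_key(mostly_dark), image_key(mostly_bright))
```

Under this definition, a frame in which most pixels sit near the maximum luminance produces a key close to 1, consistent with the interpretation of high IK values given above.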

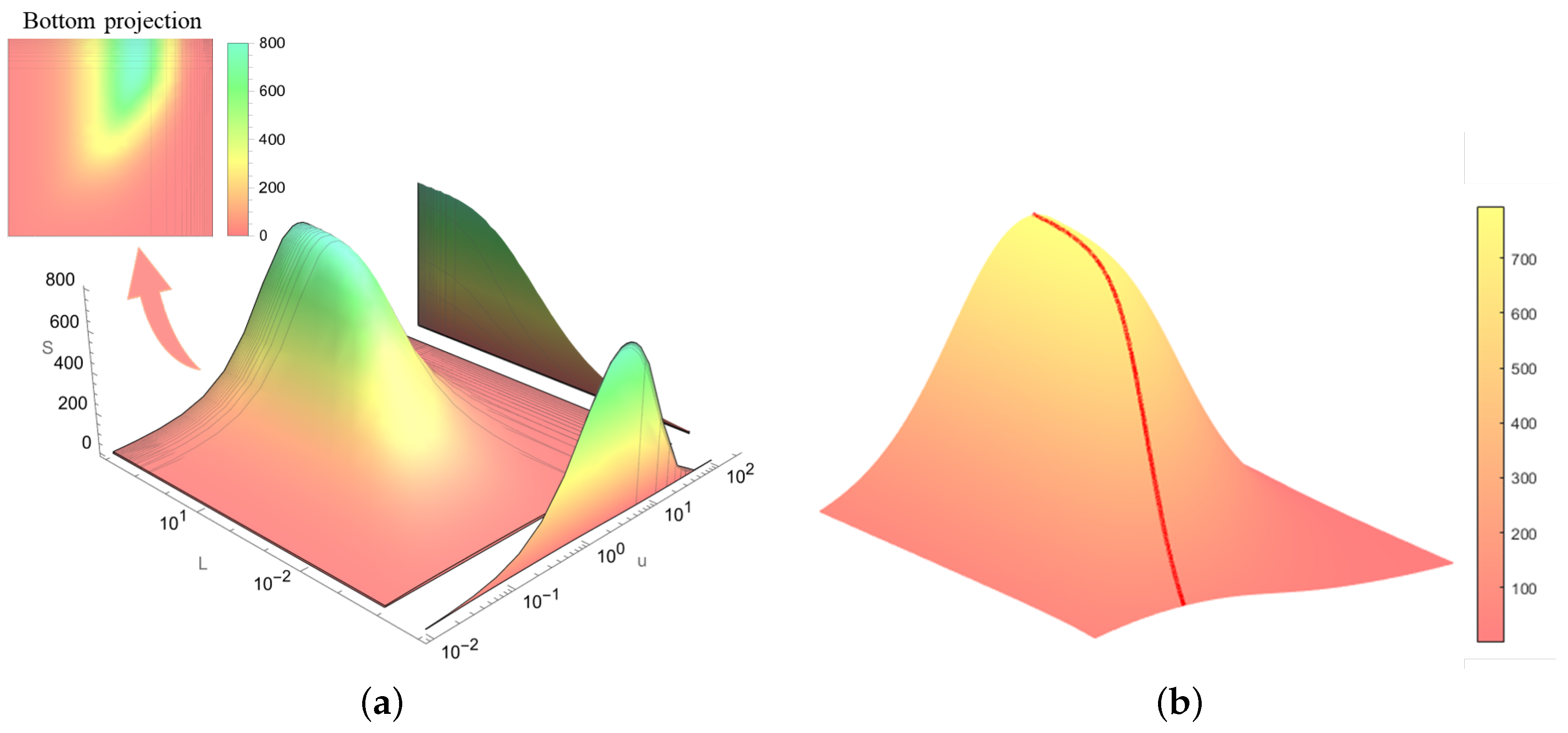

Figure 11 presents the 3D joint distribution of SI, DR, and CF. The scatter distributions and convex hull volumes of different video sequences highlight the intricate interactions between these multidimensional features. Notably, the convex hull volume serves as an indicator of the dispersion of the distribution, while the scatter position reveals the correlation trends among the three dimensions within each sequence.

For video sequences exhibiting a broad distribution without a distinct pattern of variation (e.g., Beerfest lightshow 01, Fireplace 02, Smith welding), the values of SI, DR, and CF display a high degree of randomness. This suggests a lack of intrinsic correlation between spatial complexity, dynamic range, and color richness in the video content. Such distributions typically arise from frequent scene transitions or the presence of multiple independently varying elements (e.g., rapidly edited mixed content or randomly moving objects), making it challenging to establish a synergistic trend across these metrics. The convex hull volume of these videos is generally large yet exhibits low eccentricity, with a relatively uniform distribution in all directions. This indicates that the content spans a broad coverage within the 3D feature space, encompassing diverse visual characteristics.

In contrast, video sequences exhibiting a broad scatter distribution yet following clear patterns of variation (e.g., Fishing longshot, Show girl 02) demonstrate significant positive or negative correlations. For instance, the scatter distribution of Fishing longshot is narrowly concentrated along the DR axis, while SI and CF exhibit a strong positive correlation. This indicates that the dynamic range of the content gradually increases over time, while spatial detail and color saturation experience limited synergistic enhancement under fixed luminance conditions. Furthermore, such videos exhibit high convex hull eccentricity, characterized by pronounced elongation or stretching. This suggests that the content maintains structural consistency while still offering feature diversity.

Additionally, the scatter plots of certain videos (e.g., Bistro 01, Carousel fireworks 01, Hdr test) exhibit highly concentrated distributions without significant correlations. Such distribution patterns typically arise from static scenes with minimal variation, such as close-up shots of architectural details or character features. These videos are characterized by smaller convex hull sizes, lower eccentricity, and highly stable, localized features, making them ideal for evaluating the algorithm’s performance in static scenes with varying texture complexity and dynamic range.

Both tPSNR and HDR-VDP-3.07 use the absolute luminance of the content (cd/m²) as an input parameter for predicting human visual perception across multiple dimensions and evaluating the test images. Figure 12 presents the HDR-VDP-3.07 evaluation results, where the horizontal axis represents the frame sequence and the vertical axis represents the quality score. As an advanced high dynamic range visual disparity predictor, HDR-VDP-3.07 scores perceptual differences by simulating the human visual system, with scores expressed in Just Objectionable Differences (JODs). JODs differ from the JNDs described in the previous section; the specific differences are detailed in [28]. According to the scoring criteria, a difference of 1 JOD indicates that 75% of the population is able to perceive a display difference, and higher scores signify better visual quality. When the difference in scoring results is within 1 JOD, each increment of 0.01 JOD corresponds to 0.27% of the population perceiving a difference in display quality [18,29].

By analyzing Figure 10, Figure 11, and Figure 12 comprehensively, we observe significant differences in the scores across different sequences, with the overall score trends closely related to the distributions of SI and IK. Specifically, a larger number of outliers and greater absolute deviations in SI lead to greater fluctuations in the score curves (e.g., Fireplace 02 and Smith welding). This is attributed to highly variable spatial information in certain frames, resulting in distributions with high variability. Such cases correspond directly to the randomness in the 3D scatter distribution, indicating a weak correlation between the metrics and the dispersion of extreme values among frames, which causes substantial score variations between consecutive frames. On the other hand, the range of the IK distribution determines the overall variations in the score curve, with wider interquartile spacing leading to more pronounced fluctuations. For sequences with a broad IK distribution but no outliers in the SI distribution (e.g., Cars longshot, Fishing longshot, and Show girl 02), score fluctuations between adjacent frames are smaller, resulting in a smoother overall trend with gradual variations influenced by IK distribution characteristics. This scenario is typically characterized by a moderate correlation between indicators and temporal continuity across frames.

As observed in Figure 7 and Figure 10, the multi-PQ method proposed in Ref. [4] exhibits excellent display quality when processing content with luminance levels below 0.1 cd/m². This improvement is particularly evident when the scene exhibits a generally low DR distribution. For instance, in Beerfest lightshow 01 and Smith welding, multi-PQ achieved improvements of 0.086 and 0.025 JOD over 10-bit PQ, respectively, indicating that 2.322% and 0.675% of observers could perceive the difference and preferred multi-PQ. However, when the dynamic range is significantly extended and the DR distribution shifts upward overall (potentially accompanied by distribution skewness or outliers that introduce extreme brightness or special frames), the enhancement effect of multi-PQ diminishes significantly. In some cases, the display quality may fail to surpass that of 10-bit PQ, leading to noticeable differences (e.g., Carousel fireworks 01, Fireplace 02, and Hdr test). Compared to multi-PQ, the proposed mapping function effectively handles a large dynamic range, demonstrates strong robustness, and adapts well to feature-dispersed scenarios, showing significant improvements when applied to these sequences. Particularly for the Fireplace 02 sequence, which exhibits high parameter variability, an extensive dynamic range, and substantial inter-frame fluctuations, the proposed method demonstrates significant advantages in enhancing display quality compared to multi-PQ and 10-bit PQ. For sequences characterized by extensive parameter variations and strong internal correlation, such as Fishing longshot and Show girl 02, the proposed method exhibits performance comparable to multi-PQ without demonstrating a substantial improvement. Therefore, the primary advantage of the proposed method over multi-PQ lies in its superior performance in handling scenes with higher dynamic range and broader luminance span, whereas multi-PQ is specifically optimized for scenarios with a constrained dynamic range. Ultimately, for the video sequences analyzed in this study, the average HDR-VDP-3.07 score increases by 0.0743 and 0.0822 JOD compared to 10-bit PQ and multi-PQ, respectively, indicating that 2.0061% and 2.2194% of the population can perceive the enhancement in visual quality.
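The percentages quoted above follow from the linear rule stated earlier (0.27% of the population per 0.01 JOD, for differences within 1 JOD). The short sketch below reproduces that arithmetic; the function name is illustrative.

```python
PERCENT_PER_JOD = 27.0  # 0.27% per 0.01 JOD, valid for differences within 1 JOD


def jod_gain_to_percent(delta_jod: float) -> float:
    """Share of the population (in %) expected to perceive a JOD difference."""
    if not 0.0 <= delta_jod <= 1.0:
        raise ValueError("the linear rule is only stated for differences within 1 JOD")
    return delta_jod * PERCENT_PER_JOD


if __name__ == "__main__":
    for gain in (0.086, 0.025, 0.0743, 0.0822):
        print(gain, round(jod_gain_to_percent(gain), 4))
```

For example, 0.086 JOD × 27 = 2.322%, and 0.0743 JOD × 27 = 2.0061%, matching the figures reported in the text.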

During video playback, different EOTFs directly influence the luminance distribution and dynamic range of video frames by modifying the nonlinear mapping of luminance signals, thereby affecting temporal coherence. When the absolute luminance of pixels within a frame undergoes nonlinear shifts, it results in greater-than-expected differences between consecutive frames, thereby increasing temporal variation and reducing the tPSNR. As shown in Table 2, the average tPSNR of the proposed mapping approach across nine video sequences is 33.622 dB, exceeding the 33.14 dB of multi-PQ and marginally surpassing the 33.557 dB of 10-bit PQ. This suggests that the proposed method more effectively preserves image details, minimizes inter-frame luminance fluctuations, and mitigates nonlinear distortions in luminance trajectories of moving objects by dynamically adjusting the quantization step size, thereby reducing the mean-square error in the temporal domain. Moreover, the proposed method achieves the most significant improvements on the Fireplace 02 and Smith welding sequences, which exhibit the highest inter-frame fluctuations, with increases of 0.68 dB and 0.66 dB over multi-PQ, respectively. This suggests that the proposed method enhances temporal consistency more effectively in highly complex scenarios involving intricate motion patterns or frequent scene transitions.

In terms of computational complexity, the mapping function proposed in this paper is independent of the image content and requires only the generation of a LUT based on the conversion relationship among the quantized value, the display system drive signal, and the absolute luminance. Once the LUT is generated, each pixel of a frame is mapped with a single lookup operation, and no content-dependent analysis of the frame is required, regardless of its resolution. In addition, the size of the LUT is fixed and remains constant regardless of the input scale. Therefore, the time complexity of determining the mapping and the space complexity of the proposed method are both O(1), the same as for the PQ algorithm. In contrast, multi-PQ requires real-time computation of the image’s average brightness during implementation and selects the corresponding LUT based on the computed result. Assuming that the number of pixels in the image is N, multi-PQ performs N−1 additions and one division, resulting in a time complexity of O(N), while the selection of the corresponding LUT based on the average brightness has a time complexity of O(1). Consequently, the final time complexity of multi-PQ is O(N), whereas its LUT remains independent of the input data size, maintaining a space complexity of O(1). In summary, compared with multi-PQ, the proposed method has a lower time complexity and better meets real-time processing requirements. In addition, regarding hardware cost, multi-PQ requires four LUTs, whereas the proposed method requires only one LUT, thereby reducing the storage requirement by 75% and offering significant practical advantages.
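The difference between the two strategies can be illustrated with a small sketch. Because the proposed mapping itself is defined elsewhere in the paper, the standard PQ EOTF (constants from SMPTE ST 2084) is used here as a stand-in for the LUT contents. The average-based selector only mimics the structure attributed to multi-PQ: using the exponent n as the varied parameter, the threshold value, and averaging over code values are all hypothetical placeholders.

```python
import bisect

M1, M2 = 2610 / 16384, 2523 / 4096 * 128
C1, C2, C3 = 3424 / 4096, 2413 / 4096 * 32, 2392 / 4096 * 32


def pq_eotf(signal: float, n: float = M1) -> float:
    """PQ-style EOTF with exponent n; stand-in for the actual mapping."""
    e = signal ** (1.0 / M2)
    return 10000.0 * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / n)


def build_lut(bits: int = 10, n: float = M1):
    """Precompute luminance for every code value; done once, size fixed."""
    levels = (1 << bits) - 1
    return [pq_eotf(code / levels, n) for code in range(levels + 1)]


def apply_lut(codes, lut):
    """Content-independent mapping: one table lookup per pixel."""
    return [lut[code] for code in codes]


def select_lut_by_mean(codes, luts, thresholds):
    """Average-based selection: needs a full pass over the frame first."""
    mean_code = sum(codes) / len(codes)  # N-1 additions and one division
    return luts[bisect.bisect_right(thresholds, mean_code)]


if __name__ == "__main__":
    frame = [0, 64, 512, 1023]
    single = build_lut()
    print(apply_lut(frame, single))
    candidates = [build_lut(n=0.1593), build_lut(n=0.1654)]  # placeholder values
    chosen = select_lut_by_mean(frame, candidates, thresholds=[512])
    print(apply_lut(frame, chosen))
```

The single-LUT path never inspects the frame before mapping it, whereas the selector has to traverse all pixels to obtain the average and must keep every candidate table in memory.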