Abstract

Object-level SLAM is an emerging direction in SLAM research. To achieve a deeper understanding of the scene, it goes beyond building an environmental map and localizing the robot: it also identifies, tracks, and reconstructs specific objects in the environment. To address the localization and pose estimation errors caused by the distortion of objects' spatial geometric features in complex application scenarios, we propose a distributed joint pose estimation optimization method. Built on globally dense fused features, the method provides an accurate global feature representation and refines poses with an iterative optimization algorithm. It then performs visual localization and object state optimization jointly through a factor graph. Finally, by running these optimizations in parallel, it achieves precise optimization of both localization and object poses, reducing optimization error drift and yielding accurate visual localization and object pose estimation.

1. Introduction

Traditional SLAM (Simultaneous Localization and Mapping) algorithms rely primarily on low-level geometric features, such as points, lines, and planes, for localization and mapping [1,2]. These methods model only the geometric structure of the environment and cannot identify or track specific objects within the scene [3], which limits their capability for scene description and understanding. Dense SLAM [4] has progressed rapidly in recent years, but its non-discriminative, globally dense maps are difficult to process in real time.

Object-level SLAM is an emerging direction in SLAM research [5]. Beyond constructing environmental maps and localizing the robot, it identifies and tracks specific objects in the environment, which makes it an effective means of perceiving objects in three-dimensional space. Unlike traditional SLAM methods, which typically model only the geometric structure of the environment, object-level SLAM also represents specific objects, providing a richer understanding of the scene [6,7]. Its ability to recognize and track objects [8] gives intelligent systems such as robots more information, enabling them to make better decisions based on object recognition and understanding, for example in navigation, obstacle avoidance, and user interaction [9].

Object-level SLAM therefore offers stronger scene understanding, object perception and interaction, and decision-making capabilities than traditional SLAM. These advantages make it applicable in civilian, military, and other fields [10]. In the civilian sector, it can be used for robotic grasping, localization, and augmented reality [11]. In the military field, unmanned reconnaissance systems can use it to reconstruct and localize objects and determine their movement trajectories even under occlusion, camouflage, and insufficient data, conditions that traditional methods cannot handle, thereby meeting the needs of reconnaissance, detection, and situational awareness of military objects.

Despite the progress made by existing object-level SLAM methods, several challenges remain [12,13,14]: these methods either require dense mapping, cannot jointly optimize sensor poses, or cannot robustly edit object textures. To address the lack of object color and texture information, the pose optimization errors caused by reusing map points on objects, and localization drift during optimization, we propose an object-level SLAM algorithm based on distributed joint pose estimation and texture editing (DJPETE), referred to as DJPETE-SLAM.

We consider the object construction subsystem critical to overall SLAM performance, and therefore introduce texture editing as a core strategy to address the insufficient perception of object texture during SLAM. Missing texture weakens the appearance and information content of reconstructed objects and limits the detail and readability of the map. Although texture does not directly improve localization accuracy, it enriches map content, makes the map more detailed and readable, and improves the user experience. Integrating texture editing into the SLAM process lets a single system perform both localization and detailed object mapping, a combination that has rarely been reported in previous work.

To fill this gap, this study explores texture editing within SLAM, aiming to determine object positions accurately and to construct detailed, information-rich map objects, thereby improving the practicality and functionality of the SLAM system and opening new avenues for research in related fields.

Our main contributions are as follows:

- (1) We propose a new object-level SLAM method that reconstructs rich texture information for objects while performing simultaneous localization and mapping. By using a sparse representation for the environment map and a detailed representation for objects, the method achieves map building and object perception in complex scenes.

- (2) To address the lack of color and texture information in object-level SLAM, we propose an object texture editing and reconstruction algorithm based on an iterative strategy. Using the localization trajectory, it iteratively merges texture information from different viewpoints, editing and reconstructing object textures during object construction.

- (3) To address the optimization error drift caused by pose optimization and by reusing map points on objects in object-level SLAM, we propose a distributed parallel BA algorithm over object and map point clouds. By jointly optimizing map points and objects in a distributed manner, this method achieves precise visual localization and object pose estimation.

2. Related Works

With the rapid development and widespread application of robotic technology, object perception technology, as a crucial component for robots to achieve autonomous navigation, environmental understanding, and human–machine interaction, has received extensive attention and research. Especially in complex real-world environments, accurate and efficient object perception and recognition are crucial for robots to make autonomous decisions and take actions. Visual SLAM technology, as an advanced technology that combines computer vision and robotic navigation, provides new solutions and methods for object perception.

SLAM++ [15] proposed a groundbreaking “object-oriented” 3D SLAM paradigm that exploits the prior knowledge that many scenes consist of repeated, domain-specific objects and structures. It performs real-time 3D object recognition and tracking and generates 6DoF camera–object constraints that feed into an explicit graph of objects, which is continually refined by efficient pose graph optimization. However, SLAM++ is instance-based: it requires prior models of the objects and cannot scale to a large number of objects. QuadricSLAM [16] uses dual quadrics as 3D landmark representations, exploiting their compact representation of object size, position, and orientation, and shows how to constrain the quadric parameters directly through a novel geometric error formulation. For more comprehensive object data association, Tian et al. [17] proposed a quadric initialization method based on separating the quadric parameters, which improves robustness to observation noise.

However, ellipsoid-based object-level SLAM often loses details and structural information of the object. Comprehensive object data association combined with object-oriented optimization based on multiple cues can make object pose estimation highly accurate and robust to local observations [18]. Sharma et al. [19] introduced a fast, scalable, and accurate SLAM algorithm that represents indoor scenes as graphs of objects. Exploiting the fact that man-made environments are structured and occupied by recognizable objects, it generates explicit and persistent object landmarks through a novel semantic-assisted data association strategy. However, the constructed objects are often incomplete or noisy. To address missing and incomplete objects in reconstruction, Dong et al. [20] described a method for detecting objects in 3D space using video and inertial sensors. It decomposes the optimal representation of objects in the scene into two parts: a geometric localization component that can be obtained through SLAM, and a component obtained from a discriminative convolutional neural network.

In addition, to adapt object-level SLAM to dynamic scenes [21], MID-Fusion [22] proposed a multi-instance dynamic RGB-D SLAM method that uses an object-level octree-based volumetric representation. It provides robust camera tracking in dynamic environments while continuously estimating the geometry, semantics, and motion properties of arbitrary objects in the scene. Based on the estimated camera and object poses, it associates segmentation masks with existing models and incrementally fuses the corresponding color, depth, semantics, and foreground object probabilities into each object model. Although this method integrates more dimensions of information, such as color, depth, and semantics, it does not jointly optimize object poses to improve their accuracy and reliability. DynaSLAM [23] combines Mask R-CNN [24] with depth-inconsistency checks to segment moving objects and inpaints the background regions they occlude using static information from other views. It is more robust in dynamic scenes but may lose information about the moving objects themselves. CubeSLAM [13] combines single-image 3D cuboid object detection with multi-view object SLAM, applicable to both static and dynamic environments, and shows that the two components improve each other. It achieves state-of-the-art monocular camera pose estimation while also improving 3D object detection accuracy.

Fusion++ [25] builds persistent and accurate object-level 3D graph maps of arbitrary reconstructed objects. Objects are progressively refined through depth fusion and used for tracking, relocalization, and loop closure detection. Loop closures adjust the relative pose estimates of object instances without deforming the objects themselves, but the method does not jointly optimize object poses. Bowman et al. [26] formulated an optimization problem over sensor states and semantic landmark positions that integrates metric information, semantic information, and data association. The problem is decomposed into two interrelated subproblems: estimating discrete data associations and landmark class probabilities, and continuously optimizing the metric states. The estimated landmarks and robot poses in turn affect the associations and class distributions, which then influence robot–landmark pose optimization. This method estimates sensor poses, landmark positions, and landmark classes, but does not address full landmark pose estimation.

To obtain accurate object poses, NodeSLAM [27] proposes an efficient, optimizable multi-class learned object descriptor together with a novel probabilistic and differentiable rendering engine for principled inference of complete object shapes from one or more RGB-D images. The framework enables accurate and robust 3D object reconstruction for applications such as robotic grasping and placement and augmented reality, and it yields the first object-level SLAM system capable of jointly optimizing object poses, shapes, and camera trajectories. However, it requires dense depth maps for shape optimization. DSP-SLAM [12] addresses this issue by constructing rich and accurate joint maps containing dense 3D models of foreground objects and sparse landmark points representing the background. It also introduces object-aware BA pose graph optimization to jointly optimize camera poses, object poses, and feature points. However, it does not edit the texture of perceived objects, and its 3D input points overlap with the objects, so the same points are reused in both object and map point constraints.

3. Methods

3.1. Overall Framework

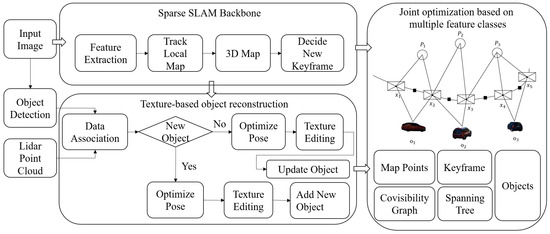

In this section, we present an object-level SLAM algorithm based on distributed pose optimization and texture editing. The algorithm represents the environment with sparse map points while modeling the texture information and pose state of objects in detail. It consists of four core modules: the sparse SLAM framework, image-based object detection and segmentation, 3D point cloud object detection and reconstruction, and joint map optimization. The overall framework is illustrated in Figure 1.

Figure 1.

Diagram of object-level SLAM algorithm based on distributed BA and texture editing.

We build the sparse SLAM framework on ORB-SLAM2 for efficiency and stability. We integrate Mask R-CNN for 2D image detection and segmentation and PointPillars for 3D point cloud object detection, achieving accurate multidimensional perception of objects. We also develop a texture-editing-based object reconstruction algorithm to enrich object texture information. Finally, our approach incorporates joint map optimization based on distributed BA to keep the map accurate and consistent while processing large amounts of data.

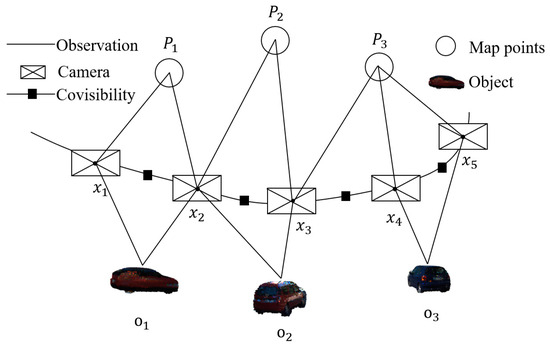

3.2. Distributed Joint Optimization Based on Multiple Feature Classes

In this paper, we estimate the camera state with nonlinear least squares optimization, which continuously uses observed data to update and correct the camera state in real time, yielding precise tracking of the camera's motion trajectory. The observation relationships between the camera pose, map points, and objects are shown in Figure 2, which illustrates two kinds of constraints: the spatial constraints between the camera and map points (camera–map point constraints) and the observation constraints between the camera and objects (camera–object pose constraints). Together, these form the core of the joint optimization problem.

Figure 2.

Diagram of the observation relationships between the camera and map landmarks.

3.2.1. Camera–Object Pose Constraints

In object-level SLAM, the pose constraints between the camera and the object, as well as those between the camera and map points, are key elements in constructing an accurate environmental model. The pose constraints between the camera and the object are established by observing the relative pose relationship between the object and the camera. These constraints help estimate the camera’s position and orientation in the environment and are used to optimize the position and pose of the object.

Suppose the relative rotation between the camera and an object is represented by a unit quaternion $q = [q_w, \mathbf{q}_v]$, consisting of a real part $q_w$ and an imaginary part $\mathbf{q}_v = [q_x, q_y, q_z]^\top$ whose direction gives the axis of rotation. Let $\mathbf{p}_c$ be a point in the camera coordinate system and $\mathbf{p}_o$ the same point in the object's coordinate system. Using these points and the quaternion, we define a camera–object pose constraint that describes how points are transformed between the two coordinate systems given their relative rotation, which allows the algorithm to model the relative pose between the camera and the object and to refine the measurement model. Mathematically, the camera–object pose constraint can be written as

$$\mathbf{p}_o = q \otimes \mathbf{p}_c \otimes q^{*} + \mathbf{t},$$

where $\otimes$ denotes quaternion multiplication, $q^{*}$ is the conjugate of $q$, $\mathbf{p}_c$ is treated as the pure quaternion $[0, \mathbf{p}_c]$, and $\mathbf{t}$ is the translation vector of the camera in the object's coordinate system. This expression gives the transformation between the point in the camera coordinate system and the corresponding point in the object's coordinate system, and it can be used to construct pose constraints in the SLAM algorithm. Specifically, each new pose observation can be viewed as an edge between the camera pose $T_{wc}$ and the object pose $T_{wo}$.
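A minimal Python/NumPy sketch of the camera-to-object point transform $\mathbf{p}_o = q \otimes \mathbf{p}_c \otimes q^{*} + \mathbf{t}$ follows. It assumes unit quaternions stored in $(w, x, y, z)$ order, the Hamilton product convention, and that `t` is the camera position expressed in the object frame; the function names are illustrative and are not taken from the authors' implementation.

```python
import numpy as np

def quat_mul(a, b):
    """Hamilton product of two quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return np.array([
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    ])

def quat_conj(q):
    """Conjugate q* of a quaternion (equals the inverse for unit quaternions)."""
    return np.array([q[0], -q[1], -q[2], -q[3]])

def camera_to_object(q, t, p_c):
    """p_o = q (x) p_c (x) q* + t, with p_c embedded as the pure quaternion [0, p_c]."""
    p_quat = np.concatenate(([0.0], p_c))
    rotated = quat_mul(quat_mul(q, p_quat), quat_conj(q))
    return rotated[1:] + t   # drop the (zero) real part, then translate

# Example: 90 degree rotation about z, camera offset 1 m along x in the object frame.
theta = np.pi / 2
q = np.array([np.cos(theta / 2), 0.0, 0.0, np.sin(theta / 2)])
p_o = camera_to_object(q, np.array([1.0, 0.0, 0.0]), np.array([1.0, 0.0, 0.0]))
# p_o is approximately [1.0, 1.0, 0.0]
```

Using the sandwich product keeps the rotation exact for unit quaternions; in practice one would usually convert `q` to a rotation matrix once and reuse it for many points.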

To quantify the discrepancy between an observation and the current estimates, we define a residual that measures the deviation between the observed relative pose and the pose predicted from the estimated camera and object poses. Minimizing this residual during optimization continually corrects the pose estimates of the camera and the object, improving the accuracy of the overall observation model. Denoting the observed camera–object relative pose by $T_{co}$, the residual of the camera–object pose constraint is

$$e_{co} = \log\left(T_{co}^{-1}\, T_{wc}^{-1}\, T_{wo}\right)^{\vee},$$

where $\log$ denotes the logarithmic map from $SE(3)$ to $\mathfrak{se}(3)$ and $(\cdot)^{\vee}$ converts the result into a 6-vector.
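The residual can be evaluated numerically as sketched below, assuming the form $e_{co} = \log(T_{co}^{-1} T_{wc}^{-1} T_{wo})^{\vee}$ with $4\times4$ homogeneous matrices and a translation-first $(\boldsymbol\rho, \boldsymbol\phi)$ ordering of the 6-vector. The closed-form $SO(3)$ logarithm used here is our own choice; it becomes ill-conditioned near a rotation angle of $\pi$, which is acceptable for residuals that stay close to the identity.

```python
import numpy as np

def hat(v):
    """3-vector -> skew-symmetric matrix, so that hat(v) @ w == cross(v, w)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])

def so3_log(R):
    """Rotation matrix -> rotation vector phi (ill-conditioned as the angle approaches pi)."""
    cos_theta = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    w = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    if theta < 1e-8:
        return 0.5 * w                          # first-order approximation near the identity
    return theta / (2.0 * np.sin(theta)) * w

def se3_log(T):
    """4x4 rigid transform -> 6-vector (rho, phi), translation part first."""
    R, t = T[:3, :3], T[:3, 3]
    phi = so3_log(R)
    theta = np.linalg.norm(phi)
    K = hat(phi)
    if theta < 1e-8:
        V = np.eye(3) + 0.5 * K
    else:
        V = (np.eye(3) + (1.0 - np.cos(theta)) / theta**2 * K
             + (theta - np.sin(theta)) / theta**3 * K @ K)
    rho = np.linalg.solve(V, t)                 # t = V rho  =>  rho = V^-1 t
    return np.concatenate([rho, phi])

def camera_object_residual(T_co_obs, T_wc, T_wo):
    """e_co = log(T_co^-1 T_wc^-1 T_wo)^vee; zero when the observation agrees with the estimates."""
    return se3_log(np.linalg.inv(T_co_obs) @ np.linalg.inv(T_wc) @ T_wo)

# Example: the estimates put the object 1.0 m along x, but the observation says 0.9 m.
T_wc = np.eye(4)
T_wo = np.eye(4); T_wo[:3, 3] = [1.0, 0.0, 0.0]
T_co_obs = np.eye(4); T_co_obs[:3, 3] = [0.9, 0.0, 0.0]
e_co = camera_object_residual(T_co_obs, T_wc, T_wo)   # approximately [0.1, 0, 0, 0, 0, 0]
```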

3.2.2. Camera–Map Point Constraints

The camera–map point constraint establishes the correspondence between feature point coordinates observed by the camera and the coordinates of the corresponding map points, obtained through feature matching and geometric transformation. Concretely, the constraint is formed by observing the projection of each map point in the image. Once the correspondences are established, they determine the 3D positions of the map points and yield camera–map point constraint equations that are used to optimize both the camera pose and the map point positions.

For the constraints between the camera and the map points, the proposed method uses the reprojection error, which measures the difference between the observed and predicted image positions of the map points:

$$E = \sum_i \left\| \mathbf{u}_i - \pi\left(T_{wc}^{-1}\,\mathbf{P}_i\right) \right\|^2,$$

where $E$ is the reprojection error and $i$ indexes the map points. $\pi$ denotes the projection function, which maps points in 3D space onto the 2D image plane, and $T_{wc}$ is the transformation from the camera coordinate system to the world coordinate system, encoding the camera's rotation and translation, so that $T_{wc}^{-1}$ brings world points into the camera frame. $\mathbf{P}_i$ denotes the coordinates of the $i$-th map point in 3D space, and $\mathbf{u}_i$ denotes its pixel coordinates, extracted from the image by feature point detection. Each summand $e_{cp,i} = \mathbf{u}_i - \pi(T_{wc}^{-1}\mathbf{P}_i)$ is the residual of one camera–map point observation.

The projection function $\pi$ follows the perspective (pinhole) camera model, which converts 3D points in the camera coordinate system into 2D pixel coordinates using the camera's intrinsic parameters (focal lengths and principal point). A precise definition of $\pi$ lets the algorithm match camera observations to map points, providing the observation constraints needed for localization and mapping. For the perspective camera model, the projection function is

$$\pi(\mathbf{P}) = \begin{bmatrix} f_x \dfrac{X}{Z} + c_x \\[4pt] f_y \dfrac{Y}{Z} + c_y \end{bmatrix},$$

where $\mathbf{P} = [X, Y, Z, 1]^\top$ represents the homogeneous coordinates of the map point in the camera frame, $f_x$ and $f_y$ are the camera's focal lengths, and $c_x$ and $c_y$ are the coordinates of its principal point. Finally, substituting the projection function into the reprojection error and minimizing the result with an iterative optimization algorithm adjusts the camera pose and map point positions to reduce the reprojection error, which completes the optimization between the camera and map points.
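A small sketch of the pinhole projection and the summed reprojection error $E = \sum_i \|\mathbf{u}_i - \pi(T_{wc}^{-1}\mathbf{P}_i)\|^2$ follows. It assumes world points are given as an $(N, 3)$ array and $T_{wc}$ as a $4\times4$ camera-to-world matrix; the intrinsics in the example are illustrative values, not KITTI calibration.

```python
import numpy as np

def project(P_c, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (homogeneous or not) to pixel coordinates."""
    X, Y, Z = P_c[:3]
    return np.array([fx * X / Z + cx, fy * Y / Z + cy])

def reprojection_error(T_wc, P_w, uv_obs, fx, fy, cx, cy):
    """Return (E, residuals) with E = sum_i ||u_i - pi(T_wc^-1 P_i)||^2."""
    T_cw = np.linalg.inv(T_wc)                           # world -> camera
    P_h = np.hstack([P_w, np.ones((len(P_w), 1))])       # homogeneous coordinates
    P_c = P_h @ T_cw.T
    residuals = np.array([uv - project(p, fx, fy, cx, cy) for p, uv in zip(P_c, uv_obs)])
    return float(np.sum(residuals ** 2)), residuals

# Example: a point 2 m in front of the camera, observed 2 px to the right of its prediction.
E, res = reprojection_error(np.eye(4), np.array([[0.0, 0.0, 2.0]]),
                            np.array([[322.0, 240.0]]), 500.0, 500.0, 320.0, 240.0)
# E == 4.0
```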

3.2.3. Joint Graph Optimization Based on Multiple Feature Classes

To address the issue of optimization error drift caused by the repeated use of map points in object pose optimization within object-level SLAM, we propose a new solution: a distributed parallel BA algorithm based on object and map point clouds. This algorithm achieves fast and accurate optimization of both object poses and map points through parallel processing. Additionally, by incorporating the Levenberg–Marquardt algorithm, the proposed method further enhances the stability and convergence speed of the optimization process, effectively resolving the issue of optimization error drift.
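To illustrate the damped update that Levenberg–Marquardt adds to Gauss–Newton, the sketch below solves a toy curve-fitting problem rather than a full BA. The damping term $\lambda I$ and the schedule (halve $\lambda$ after an accepted step, multiply it by ten after a rejected one) are common choices that we assume here; they are not details taken from the proposed method.

```python
import numpy as np

def levenberg_marquardt(residual_fn, jacobian_fn, x0, lam=1e-3, iters=100, tol=1e-12):
    """Minimize 0.5 * ||r(x)||^2 with a basic Levenberg-Marquardt loop."""
    x = np.asarray(x0, dtype=float)
    cost = 0.5 * np.sum(residual_fn(x) ** 2)
    for _ in range(iters):
        r, J = residual_fn(x), jacobian_fn(x)
        # Damped normal equations: (J^T J + lam * I) dx = -J^T r
        dx = np.linalg.solve(J.T @ J + lam * np.eye(x.size), -J.T @ r)
        new_cost = 0.5 * np.sum(residual_fn(x + dx) ** 2)
        if new_cost < cost:
            x, cost = x + dx, new_cost
            lam *= 0.5          # accepted: behave more like Gauss-Newton
            if np.linalg.norm(dx) < tol:
                break
        else:
            lam *= 10.0         # rejected: behave more like (scaled) gradient descent
    return x, cost

# Toy problem: recover a, b in y = a * exp(b * t) from noise-free samples.
t = np.linspace(0.0, 4.0, 20)
y = 2.0 * np.exp(-0.5 * t)

def residual(x):
    return x[0] * np.exp(x[1] * t) - y

def jacobian(x):
    return np.stack([np.exp(x[1] * t), x[0] * t * np.exp(x[1] * t)], axis=1)

x_hat, final_cost = levenberg_marquardt(residual, jacobian, [1.0, 0.0], iters=200)
```

In a BA setting the same loop applies, with `x` stacking pose increments and point coordinates and `J` assembled from the per-edge Jacobians.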

With this optimization method, the algorithm can more accurately perform localization and estimate object poses while efficiently utilizing the point cloud data on the map. Consequently, it enables precise perception and modeling of the environment and objects.

In this paper, we construct a least squares problem for joint pose optimization that combines map features and objects. The joint map contains a set of camera poses $\{T_{wc_m}\}$, object poses $\{T_{wo_j}\}$, and map points $\{\mathbf{P}_i\}$. The joint BA problem is a nonlinear least squares problem whose goal is to optimize the camera and object poses, together with the map point positions, so as to minimize the weighted sum of the camera–object and reprojection residuals, improving the accuracy and robustness of the 3D reconstruction:

$$\{T_{wc_m}\}^{*}, \{T_{wo_j}\}^{*}, \{\mathbf{P}_i\}^{*} = \arg\min \sum_{m,j} \left\| e_{co}\left(T_{wc_m}, T_{wo_j}\right) \right\|^2_{\Sigma_{m,j}} + \sum_{m,i} \left\| e_{cp}\left(T_{wc_m}, \mathbf{P}_i\right) \right\|^2_{\Sigma_{m,i}},$$

where $e_{co}$ and $e_{cp}$ are the measurement residuals of the camera–object and camera–point constraints, respectively, $\Sigma$ denotes the covariance matrix of the corresponding residual, and $\|e\|^2_{\Sigma} = e^\top \Sigma^{-1} e$. Objects thus serve as additional landmarks, which improves the perceptual performance of the algorithm, as demonstrated in our evaluation on the KITTI dataset.
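Evaluating the joint objective amounts to summing Mahalanobis-weighted squared residuals from both constraint types. The following minimal sketch assumes each term is supplied as a (residual, covariance) pair; it only evaluates the cost and does not perform the optimization.

```python
import numpy as np

def mahalanobis_sq(e, Sigma):
    """||e||^2_Sigma = e^T Sigma^-1 e, using a linear solve instead of an explicit inverse."""
    return float(e @ np.linalg.solve(Sigma, e))

def joint_cost(object_terms, point_terms):
    """Sum of weighted camera-object and camera-point terms; each term is (residual, covariance)."""
    cost_co = sum(mahalanobis_sq(e, S) for e, S in object_terms)
    cost_cp = sum(mahalanobis_sq(e, S) for e, S in point_terms)
    return cost_co + cost_cp

object_terms = [(np.array([1.0, 0.0, 0.0, 0.0, 0.0, 0.0]), np.eye(6))]
point_terms = [(np.array([2.0, 0.0]), np.diag([4.0, 1.0]))]   # 2 px error with 2 px std. dev. in u
total = joint_cost(object_terms, point_terms)                 # 1.0 + 1.0 = 2.0
```

The covariance weighting is what lets residuals with different units (metres and radians for $e_{co}$, pixels for $e_{cp}$) be combined in a single objective.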

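Let $\mathbf{P}'_i = [X', Y', Z']^\top = R_{cw}\mathbf{P}_i + \mathbf{t}_{cw}$ denote the map point expressed in the camera frame, where $T_{cw} = T_{wc}^{-1}$ has rotation $R_{cw}$ and translation $\mathbf{t}_{cw}$, so that the reprojection residual is $e_{cp} = \mathbf{u}_i - \pi(\mathbf{P}'_i)$. The derivative of this error function with respect to the map points is obtained by the chain rule:

$$\frac{\partial e_{cp}}{\partial \mathbf{P}_i} = \frac{\partial e_{cp}}{\partial \mathbf{P}'_i}\,\frac{\partial \mathbf{P}'_i}{\partial \mathbf{P}_i} = -\begin{bmatrix} \dfrac{f_x}{Z'} & 0 & -\dfrac{f_x X'}{Z'^2} \\[6pt] 0 & \dfrac{f_y}{Z'} & -\dfrac{f_y Y'}{Z'^2} \end{bmatrix} R_{cw}.$$

Similarly, for a left perturbation $\delta\boldsymbol{\xi}_c$ of the camera pose $T_{wc}$ (translation components first), the derivative of the error function with respect to the camera pose can be expressed as

$$\frac{\partial e_{cp}}{\partial \delta\boldsymbol{\xi}_c} = \frac{\partial e_{cp}}{\partial \mathbf{P}'_i}\,\frac{\partial \mathbf{P}'_i}{\partial \delta\boldsymbol{\xi}_c}.$$

Additionally,

$$\frac{\partial \mathbf{P}'_i}{\partial \delta\boldsymbol{\xi}_c} = -R_{cw}\begin{bmatrix} I_3 & -\mathbf{P}_i^{\wedge} \end{bmatrix},$$

where $(\cdot)^{\wedge}$ maps a 3-vector to its skew-symmetric matrix.

For the camera–object residual $e_{co} = \log(T_{co}^{-1} T_{wc}^{-1} T_{wo})^{\vee}$, following the derivative rules of Lie algebra, we apply left perturbations to $T_{wc}$ and $T_{wo}$: $T_{wc} \leftarrow \exp(\delta\boldsymbol{\xi}_c^{\wedge})\,T_{wc}$ and $T_{wo} \leftarrow \exp(\delta\boldsymbol{\xi}_o^{\wedge})\,T_{wo}$. Consequently, the error term becomes

$$\hat{e}_{co} = \log\left(T_{co}^{-1}\, T_{wc}^{-1} \exp\left((-\delta\boldsymbol{\xi}_c)^{\wedge}\right) \exp\left(\delta\boldsymbol{\xi}_o^{\wedge}\right) T_{wo}\right)^{\vee}.$$

Moving the perturbations to the right with the adjoint and applying the BCH approximation, the Jacobian matrices of the error function with respect to the camera pose and object pose are, respectively,

$$\frac{\partial e_{co}}{\partial \delta\boldsymbol{\xi}_c} = -\mathcal{J}_r^{-1}(e_{co})\,\mathrm{Ad}\left(T_{wo}^{-1}\right), \qquad \frac{\partial e_{co}}{\partial \delta\boldsymbol{\xi}_o} = \mathcal{J}_r^{-1}(e_{co})\,\mathrm{Ad}\left(T_{wo}^{-1}\right),$$

where

$$\mathcal{J}_r^{-1}(e_{co}) \approx I_6 + \frac{1}{2}\begin{bmatrix} \boldsymbol{\phi}_e^{\wedge} & \boldsymbol{\rho}_e^{\wedge} \\ \mathbf{0} & \boldsymbol{\phi}_e^{\wedge} \end{bmatrix},$$

with $\boldsymbol{\rho}_e$ and $\boldsymbol{\phi}_e$ the translational and rotational parts of $e_{co}$.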
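A finite-difference check is a common way to validate reprojection Jacobians such as the point and pose derivatives in this subsection. The sketch below assumes the residual $e = \mathbf{u} - \pi(T_{wc}^{-1}\mathbf{P})$, a left perturbation $\exp(\delta\boldsymbol\xi^{\wedge})T_{wc}$ with translation-first ordering, and illustrative intrinsics `FX`, `FY`, `CX`, `CY`; these conventions are our assumptions, and the helper names are hypothetical.

```python
import numpy as np

FX, FY, CX, CY = 500.0, 500.0, 320.0, 240.0   # illustrative intrinsics

def hat(v):
    return np.array([[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]])

def se3_exp(xi):
    """Exponential map for xi = (rho, phi), translation first."""
    rho, phi = xi[:3], xi[3:]
    theta = np.linalg.norm(phi)
    K = hat(phi)
    if theta < 1e-12:
        R, V = np.eye(3) + K, np.eye(3) + 0.5 * K
    else:
        R = np.eye(3) + np.sin(theta) / theta * K + (1 - np.cos(theta)) / theta**2 * K @ K
        V = (np.eye(3) + (1 - np.cos(theta)) / theta**2 * K
             + (theta - np.sin(theta)) / theta**3 * K @ K)
    T = np.eye(4)
    T[:3, :3], T[:3, 3] = R, V @ rho
    return T

def residual(T_wc, P_w, u_obs):
    T_cw = np.linalg.inv(T_wc)
    X, Y, Z = T_cw[:3, :3] @ P_w + T_cw[:3, 3]
    return u_obs - np.array([FX * X / Z + CX, FY * Y / Z + CY])

def analytic_jacobians(T_wc, P_w):
    T_cw = np.linalg.inv(T_wc)
    R_cw = T_cw[:3, :3]
    X, Y, Z = R_cw @ P_w + T_cw[:3, 3]
    d_pi = np.array([[FX / Z, 0.0, -FX * X / Z**2],
                     [0.0, FY / Z, -FY * Y / Z**2]])
    J_point = -d_pi @ R_cw                                   # de/dP_i
    dPc_dxi = -R_cw @ np.hstack([np.eye(3), -hat(P_w)])      # dP'_i / d(delta xi_c)
    J_pose = -d_pi @ dPc_dxi                                 # de / d(delta xi_c)
    return J_point, J_pose

def numeric_jacobians(T_wc, P_w, u_obs, h=1e-6):
    """Central differences: perturb the point additively and the pose on the left."""
    J_point, J_pose = np.zeros((2, 3)), np.zeros((2, 6))
    for k in range(3):
        d = np.zeros(3); d[k] = h
        J_point[:, k] = (residual(T_wc, P_w + d, u_obs) - residual(T_wc, P_w - d, u_obs)) / (2 * h)
    for k in range(6):
        d = np.zeros(6); d[k] = h
        J_pose[:, k] = (residual(se3_exp(d) @ T_wc, P_w, u_obs)
                        - residual(se3_exp(-d) @ T_wc, P_w, u_obs)) / (2 * h)
    return J_point, J_pose

T_wc = se3_exp(np.array([0.1, -0.2, 0.3, 0.05, -0.1, 0.2]))   # arbitrary camera pose
P_w = np.array([0.5, -0.3, 4.0])
u_obs = np.array([300.0, 250.0])
J_point_a, J_pose_a = analytic_jacobians(T_wc, P_w)
J_point_n, J_pose_n = numeric_jacobians(T_wc, P_w, u_obs)
```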

For distributed joint pose optimization, we introduce a parallel thread that synchronizes object-based BA and feature-based BA. Exploiting distributed computation, our method splits the BA task into one optimization over feature points and camera poses only and another over objects only, which significantly improves computational efficiency. For the former, we use the BA of the sparse backbone SLAM system. After this initial optimization, we fix the feature points and camera poses and optimize only the objects. This strategy reduces the influence of object pose errors on odometry accuracy and significantly improves real-time performance in terms of frame rate, so the algorithm runs more smoothly without sacrificing accuracy.
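The second stage of this scheme, object-only refinement with feature points and camera poses held fixed, decouples across objects and can therefore run concurrently. The sketch below is a deliberately simplified, hypothetical illustration: it refines only object translations (not full poses), assumes isotropic noise so that the least-squares estimate is the mean of the per-view predictions $R_{wc}\mathbf{t}_{co} + \mathbf{t}_{wc}$, and uses Python threads only to show the scheduling.

```python
import numpy as np
from concurrent.futures import ThreadPoolExecutor

def refine_object_translation(T_wc_list, t_co_list):
    """Least-squares object position with camera poses held fixed (translation only,
    isotropic noise): the mean of the per-view predictions R_wc @ t_co + t_wc."""
    preds = [T[:3, :3] @ t + T[:3, 3] for T, t in zip(T_wc_list, t_co_list)]
    return np.mean(preds, axis=0)

def refine_objects_in_parallel(fixed_cameras, observations, workers=4):
    """observations: {object_id: [(camera_index, t_co), ...]}.
    Cameras come from the feature-based BA and are only read here, so objects are independent."""
    def task(item):
        obj_id, obs = item
        Ts = [fixed_cameras[i] for i, _ in obs]
        ts = [t_co for _, t_co in obs]
        return obj_id, refine_object_translation(Ts, ts)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(task, observations.items()))

# Two fixed cameras 1 m apart along x observe the same object.
cams = [np.eye(4), np.eye(4)]
cams[1][:3, 3] = [1.0, 0.0, 0.0]
obs = {"car_0": [(0, np.array([5.0, 0.0, 0.0])), (1, np.array([4.2, 0.0, 0.0]))]}
refined = refine_objects_in_parallel(cams, obs)   # car_0 -> [5.1, 0.0, 0.0]
```

Because camera poses are read-only in this stage, no locking is needed between object tasks; in CPython, native threads or processes would be needed for real CPU parallelism.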

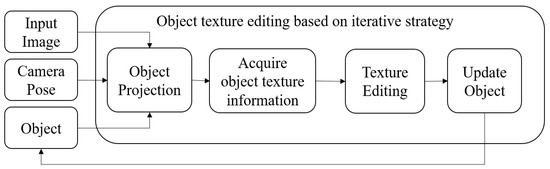

3.3. Iterative Strategy-Based Texture Feature Editing in Object-Level SLAM

In this section, we integrate an iterative strategy into object-level SLAM so that the system performs simultaneous localization and mapping while addressing the incomplete perception of object texture. Specifically, we embed an iterative, strategy-based object texture construction algorithm into the object-level SLAM localization pipeline to improve the completeness and richness of perceived object information, enabling the system to construct rich object models stably and reliably in complex, changing environments. The workflow, illustrated in Figure 3, consists of the following steps. First, images, camera poses, and object point clouds are taken as input. Next, the object point cloud is projected onto the image. Based on the projection results, the algorithm obtains the object's texture information and edits the object's texture accordingly. Finally, the object model is updated. The process repeats as new viewpoints become available, progressively refining the object's texture to obtain more complete texture information.
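The projection-and-update loop can be sketched as follows, under assumptions of our own: nearest-pixel color sampling, a running average as the rule for merging colors from successive viewpoints, and no occlusion handling. The function `fuse_view_colors` and its arguments are hypothetical and do not describe the authors' implementation.

```python
import numpy as np

def fuse_view_colors(points_w, colors, counts, image, T_wc, fx, fy, cx, cy):
    """Fold one new view into per-point running-average colors (updates arrays in place).

    points_w: (N, 3) object points in the world frame
    colors:   (N, 3) float running-average colors
    counts:   (N,) int number of views that have colored each point
    Occlusion is ignored here; a real system would test visibility first.
    """
    T_cw = np.linalg.inv(T_wc)
    P_c = points_w @ T_cw[:3, :3].T + T_cw[:3, 3]            # points in the camera frame
    idx = np.flatnonzero(P_c[:, 2] > 1e-6)                    # keep points in front of the camera
    u = np.round(fx * P_c[idx, 0] / P_c[idx, 2] + cx).astype(int)
    v = np.round(fy * P_c[idx, 1] / P_c[idx, 2] + cy).astype(int)
    h, w = image.shape[:2]
    inside = (u >= 0) & (u < w) & (v >= 0) & (v < h)          # projections that land in the image
    idx, u, v = idx[inside], u[inside], v[inside]
    sampled = image[v, u].astype(float)                       # nearest-pixel color lookup
    counts[idx] += 1
    colors[idx] += (sampled - colors[idx]) / counts[idx][:, None]   # incremental mean

# Example: two views of a uniformly colored scene; the second point is behind the camera.
points = np.array([[0.0, 0.0, 2.0], [0.0, 0.0, -1.0]])
colors = np.zeros((2, 3))
counts = np.zeros(2, dtype=int)
for red in (100, 200):
    img = np.zeros((4, 4, 3), dtype=np.uint8)
    img[..., 0] = red
    fuse_view_colors(points, colors, counts, img, np.eye(4), 2.0, 2.0, 1.5, 1.5)
# colors[0] is [150, 0, 0]; point 1 was never colored
```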

Figure 3.

Diagram of object-level SLAM texture feature editing based on iterative strategy.

4. Experimental Results

4.1. Experimental Validation of Object Texture Editing Based on Object-Level SLAM

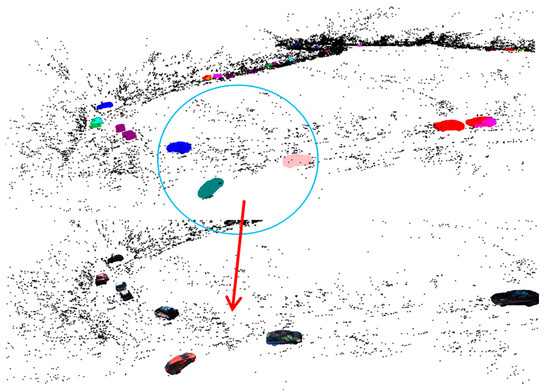

In this section, we experimentally validate the object texture editing capability of the proposed object-level SLAM algorithm, using the widely used KITTI dataset as the benchmark. We selected representative scenes from KITTI, shown in Figure 4, and ran the object-level SLAM texture editing algorithm on them. The algorithm uses an object detection module to identify objects, then tracks them and edits their textures with the assistance of SLAM localization.

Figure 4.

Schematic representation of scenes from the KITTI dataset.

The texture editing results are shown in Figure 5. The proposed method captures and edits object texture within the object-level SLAM framework, producing point clouds that appear more realistic after editing. Comparing the objects before and after texture editing shows that the edited object point clouds are more complete and carry richer surface texture details, with a clear improvement in overall visual quality. These results support the effectiveness of the proposed object-level SLAM method for texture editing.

Figure 5.

Experimental results of texture editing. The position indicated by the objects in the circle corresponds to the objects after texture editing.

4.2. Validation of Object-Level SLAM Trajectory Accuracy

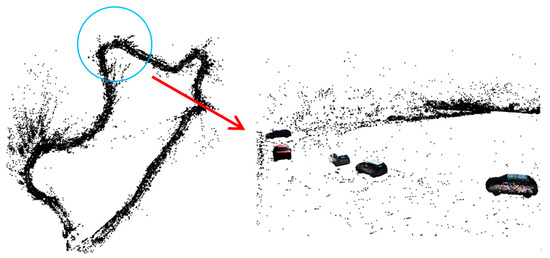

To validate the localization accuracy and object perception of the proposed method, we again used the widely recognized KITTI dataset as the experimental platform. The algorithm runs object-level SLAM trajectory tracking, which incorporates object detection into SLAM for object construction and pose estimation. We ran a complete instance on KITTI using LiDAR point clouds and the corresponding images. The algorithm first extracts the LiDAR points that fall within the outline of the target vehicle and constructs the vehicle's point cloud shape. It then projects the constructed vehicle point cloud onto the image and edits the point cloud's color texture. Finally, the reconstructed vehicle is inserted along the camera tracking trajectory according to its estimated position and pose, yielding a precise sparse map together with accurate object perception. The experimental results are shown in Figures 6, 7, and 8. Figure 6 shows the sparse environmental map from a global perspective. Figure 7 shows the object perception result, highlighting the identification and localization of the target vehicle. Figure 8 combines the sparse environmental map with the object perception result to present the complete scene. These results show that the proposed algorithm achieves the goals of visual localization and object perception and delivers competitive results in object perception and environment construction.
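The first step, selecting the LiDAR points that fall inside the vehicle's outline, can be sketched by projecting each point into the image and testing it against a 2D instance mask. This assumes a known LiDAR-to-camera extrinsic matrix `T_cam_lidar`, a boolean mask such as one produced by an instance segmentation network, and pinhole intrinsics; the function name `points_in_mask` is hypothetical.

```python
import numpy as np

def points_in_mask(P_lidar, T_cam_lidar, mask, fx, fy, cx, cy):
    """Return the LiDAR points whose image projection lands on a True pixel of `mask`."""
    P_c = P_lidar @ T_cam_lidar[:3, :3].T + T_cam_lidar[:3, 3]   # LiDAR frame -> camera frame
    front = np.flatnonzero(P_c[:, 2] > 1e-6)                     # ignore points behind the camera
    u = np.round(fx * P_c[front, 0] / P_c[front, 2] + cx).astype(int)
    v = np.round(fy * P_c[front, 1] / P_c[front, 2] + cy).astype(int)
    h, w = mask.shape
    in_image = (u >= 0) & (u < w) & (v >= 0) & (v < h)
    hits = front[in_image][mask[v[in_image], u[in_image]]]       # indices of points on the object
    return P_lidar[hits]

# Example: a 10x10 mask whose object region covers pixels 3..6 in both directions.
mask = np.zeros((10, 10), dtype=bool)
mask[3:7, 3:7] = True
pts = np.array([[0.0, 0.0, 10.0],    # projects to (5, 5): on the object
                [4.0, 0.0, 10.0],    # projects to (9, 5): background
                [0.0, 0.0, -5.0]])   # behind the camera
obj_pts = points_in_mask(pts, np.eye(4), mask, 10.0, 10.0, 5.0, 5.0)
```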

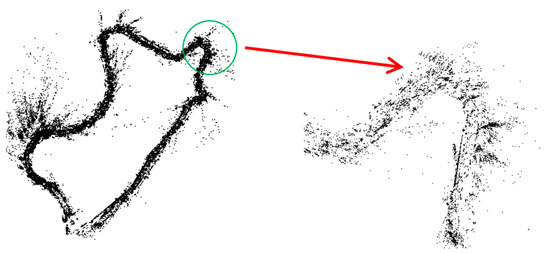

Figure 6.

Sparse environmental map experimental result. In the figure, the position indicated by the content in the circle refers to the close-up detail.

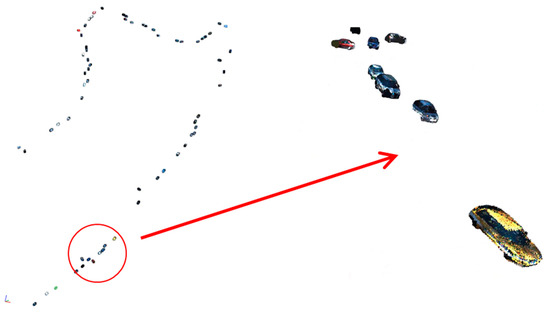

Figure 7.

Experimental result of object perception. In the figure, the position indicated by the content in the circle refers to the zoomed-in detail.

Figure 8.

Experimental result of SLAM motion tracking with object and map point mapping. In the figure, the arrow points to the zoomed-in detail corresponding to the content in the circle.

To evaluate the trajectory accuracy of the proposed method comprehensively, we compared its estimated trajectories with the ground-truth trajectories provided by KITTI. To make the evaluation reliable, we tested a diverse set of sequences covering a wide range of scenarios and viewpoints, including outdoor, suburban, and urban environments, as well as different lighting conditions at different times of day, as shown in Figure 9.

Figure 9.

KITTI experimental dataset.

We also compared the localization accuracy of our method with state-of-the-art SLAM methods, namely ORB-SLAM2, ORB-SLAM3, DynaSLAM, and DSP-SLAM. Table 1 lists the absolute trajectory errors of each algorithm on each sequence. The comparison shows that our method not only achieves object perception and pose estimation but also has clear advantages in trajectory accuracy over established methods such as ORB-SLAM2 and ORB-SLAM3. DSP-SLAM is competitive on some sequences but less stable on others. DynaSLAM has advantages on sequences with dynamic scenes, but its overall performance is less satisfactory.
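For reference, absolute trajectory error is commonly computed as the RMSE of position differences after aligning the estimated trajectory to the ground truth. The sketch below assumes corresponding positions are already associated and uses a rigid (rotation plus translation) Kabsch alignment; monocular evaluations usually add a scale factor (Umeyama alignment) instead, and the text does not state which variant produced Table 1.

```python
import numpy as np

def align_rigid(est, gt):
    """Rotation R and translation t minimizing sum ||R @ est_i + t - gt_i||^2 (Kabsch)."""
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    H = (est - mu_e).T @ (gt - mu_g)                 # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))           # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_g - R @ mu_e
    return R, t

def ate_rmse(est, gt):
    """RMSE of positional error after rigid alignment; est and gt are (N, 3) arrays."""
    R, t = align_rigid(est, gt)
    aligned = est @ R.T + t
    return float(np.sqrt(np.mean(np.sum((aligned - gt) ** 2, axis=1))))

# Example: the same trajectory expressed in a rotated and shifted frame has zero ATE.
gt = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 2.0, 0.0],
               [0.0, 0.0, 3.0], [1.0, 1.0, 1.0]])
c, s = np.cos(0.3), np.sin(0.3)
Rz = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
est = gt @ Rz.T + np.array([1.0, 2.0, 3.0])
err = ate_rmse(est, gt)   # close to zero
```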

Table 1.

Comparison of absolute trajectory errors on KITTI sequences 00–07.

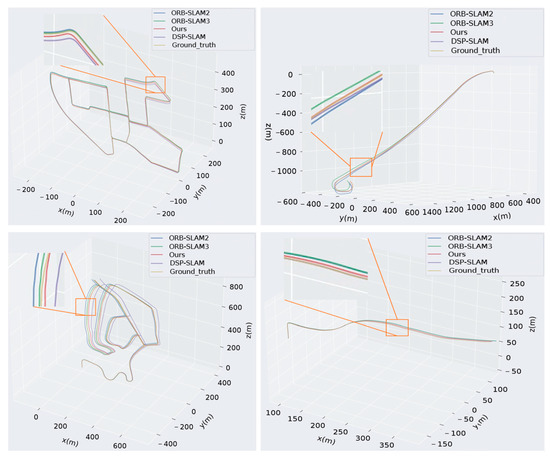
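For reference, an absolute trajectory error such as the one reported in Table 1 is typically the root-mean-square of per-frame position differences between the estimated and ground-truth trajectories. The Python sketch below is illustrative only and is not the authors' evaluation code; the function names `parse_kitti_pose_line` and `ate_rmse` are hypothetical. It assumes that frames are already time-associated (index i refers to the same frame in both trajectories) and that both trajectories share one world frame. Common evaluation tools usually first align the estimate to the ground truth with a rigid or similarity transform (for example, Umeyama's method); that step is deliberately omitted here.

```python
import math
from typing import Sequence, Tuple

# A camera position (x, y, z), assumed to be expressed in a shared world frame.
Point3 = Tuple[float, float, float]


def parse_kitti_pose_line(line: str) -> Point3:
    """Extract the camera position from one line of a KITTI-style pose file.

    Each line holds 12 numbers: the row-major 3x4 matrix [R | t].
    """
    values = [float(v) for v in line.split()]
    if len(values) != 12:
        raise ValueError("expected 12 values (row-major 3x4 [R|t])")
    # The translation is the last column of the 3x4 matrix: indices 3, 7, 11.
    return (values[3], values[7], values[11])


def ate_rmse(estimated: Sequence[Point3], ground_truth: Sequence[Point3]) -> float:
    """Root-mean-square absolute trajectory error over time-associated frames.

    Assumption: both trajectories are already in the same world frame;
    no SE(3) or Sim(3) alignment is applied here.
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_sum = 0.0
    for p_est, p_gt in zip(estimated, ground_truth):
        # Squared Euclidean distance between estimated and true positions.
        squared_sum += sum((a - b) ** 2 for a, b in zip(p_est, p_gt))
    return math.sqrt(squared_sum / len(estimated))
```

For example, `ate_rmse([(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)])` returns `1.0`, since each frame is off by exactly one unit.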

To further validate the feasibility and effectiveness of the proposed method for visual localization, we compared the estimated localization trajectories spatially. The results are shown in Figure 10, where the red trajectory is produced by our method, the yellow trajectory is the ground truth, the blue trajectory is ORB-SLAM2, the green trajectory is ORB-SLAM3, and the purple trajectory is DSP-SLAM. Our method achieves localization accuracy comparable to that of ORB-SLAM2 and ORB-SLAM3. Moreover, while also performing object perception, its trajectory stays closer to the ground truth than that of DSP-SLAM, which indicates higher localization accuracy in scenarios that combine visual localization with object perception.

Figure 10.

Localization trajectory comparison results.

Because no direct ground truth is available for the objects, we evaluated object construction by projecting the constructed objects onto the images and assessing how well the projections align with the objects in the images. The results are shown in Figure 11, which presents the point cloud of each constructed object, the original image, the projection result, and the final constructed object. The projected objects almost perfectly overlap the corresponding objects in the images, which validates the effectiveness of the proposed method for object construction.

Figure 11.

Accuracy validation of the constructed objects projected onto the images. From left to right, the columns show the point cloud of the constructed object, the original image, the projection of the point cloud onto the image, and the constructed object and its position in the LiDAR data.
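The projection check described above can be illustrated with a minimal pinhole-camera sketch. It assumes an object point cloud expressed in the LiDAR (or world) frame, a known rigid transform (R, t) into the camera frame, and intrinsics fx, fy, cx, cy. In practice these would come from the dataset calibration and the estimated camera pose. Lens distortion and rectification are ignored. The `box_inlier_ratio` score, which is the fraction of projected points that fall inside a 2D detection box, is a hypothetical numeric proxy for the visual overlap shown in Figure 11. It is not the authors' evaluation metric, and all function names here are illustrative.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]
Pixel = Tuple[float, float]
# Hypothetical 2D detection box: (u_min, v_min, u_max, v_max) in pixels.
Box = Tuple[float, float, float, float]


def to_camera(R: Mat3, t: Vec3, p: Vec3) -> Vec3:
    """Rigid transform p_c = R p + t from the object/LiDAR frame to the camera frame."""
    x, y, z = (sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
    return (x, y, z)


def project_points(points: Sequence[Vec3], R: Mat3, t: Vec3,
                   fx: float, fy: float, cx: float, cy: float) -> List[Pixel]:
    """Pinhole projection u = fx*x/z + cx, v = fy*y/z + cy (no lens distortion)."""
    pixels: List[Pixel] = []
    for p in points:
        x, y, z = to_camera(R, t, p)
        if z <= 0.0:
            continue  # the point lies behind the camera and cannot appear in the image
        pixels.append((fx * x / z + cx, fy * y / z + cy))
    return pixels


def box_inlier_ratio(pixels: Sequence[Pixel], box: Box) -> float:
    """Fraction of projected points inside the box (hypothetical alignment score)."""
    if not pixels:
        return 0.0
    u_min, v_min, u_max, v_max = box
    inside = sum(1 for u, v in pixels if u_min <= u <= u_max and v_min <= v <= v_max)
    return inside / len(pixels)
```

With an identity rotation, zero translation, fx = fy = 100, cx = 50, and cy = 40, the point (0, 0, 1) projects to pixel (50, 40) and the point (1, 0, 2) projects to (100, 40). Against the box (40, 30, 60, 50), the ratio is therefore 0.5. A pixel-level mask instead of a box would give a tighter score, at the cost of requiring instance segmentation.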

5. Conclusions

Object-level SLAM represents a new direction in SLAM technology. It focuses not only on building environment maps and localizing the robot but also on identifying, tracking, and constructing specific objects in the environment. We address two challenges: the lack of color and texture information during object reconstruction, and the optimization errors caused by object pose optimization and the repeated use of map points on objects. To this end, we propose an object-level SLAM algorithm based on distributed bundle adjustment (BA) and texture editing. To recover the missing texture information of reconstructed objects, we perform object texture editing simultaneously with object reconstruction and continuously optimize and refine it. To mitigate the optimization errors, we use a distributed BA approach to isolate and eliminate the impact of moving objects on the accuracy of the localization trajectory. Extensive experiments demonstrate that the proposed method achieves superior performance in visual localization and in recovering object texture information.

Author Contributions

Conceptualization, Z.L.; methodology, C.Y.; formal analysis, Z.Z.; investigation, Z.L.; resources, Z.Z.; writing—original draft preparation, C.Y.; visualization, D.W.; supervision, Y.X.; project administration, D.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Engineering Laboratory for Integrated Aero-Space-Ground-Ocean Big Data Application Technology Funded Projects.

Data Availability Statement

The data are contained within the article.

Conflicts of Interest

The author Dan Wang was employed by the company Xi’an ASN Technology Group Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Dong, Y.; Wang, S.; Yue, J.; Chen, C.; He, S.; Wang, H.; He, B. A novel texture-less object oriented visual SLAM system. IEEE Trans. Intell. Transp. Syst. 2019, 22, 36–49.

- Iqbal, A.; Gans, N.R. Localization of classified objects in SLAM using nonparametric statistics and clustering. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Piscataway, NJ, USA, 2018.

- Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM3: An accurate open-source library for visual, visual–inertial, and multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

- Wimbauer, F.; Yang, N.; von Stumberg, L.; Zeller, N.; Cremers, D. MonoRec: Semi-supervised dense reconstruction in dynamic environments from a single moving camera. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021.

- Wu, Y.; Zhang, Y.; Zhu, D.; Feng, Y.; Coleman, S.; Kerr, D. EAO-SLAM: Monocular semi-dense object SLAM based on ensemble data association. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; IEEE: Piscataway, NJ, USA, 2020.

- Zhong, F.; Wang, S.; Zhang, Z.; Wang, Y. Detect-SLAM: Making object detection and SLAM mutually beneficial. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; IEEE: Piscataway, NJ, USA, 2018.

- Choudhary, S.; Carlone, L.; Nieto, C.; Rogers, J.; Liu, Z.; Christensen, H.I.; Dellaert, F. Multi robot object-based SLAM. In Proceedings of the 2016 International Symposium on Experimental Robotics, Nagasaki, Japan, 3–8 October 2016; Springer International Publishing: Berlin/Heidelberg, Germany, 2017.

- Jablonsky, N.; Milford, M.; Sünderhauf, N. An orientation factor for object-oriented SLAM. arXiv 2018, arXiv:1809.06977.

- Liu, Y.; Petillot, Y.; Lane, D.; Wang, S. Global localization with object-level semantics and topology. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019.

- Lin, S.; Wang, J.; Xu, M.; Zhao, H.; Chen, Z. Contour-SLAM: A Robust Object-Level SLAM Based on Contour Alignment. IEEE Trans. Instrum. Meas. 2023, 72, 5006812.

- Choudhary, S.; Trevor, A.J.; Christensen, H.I.; Dellaert, F. SLAM with object discovery, modeling and mapping. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; IEEE: Piscataway, NJ, USA, 2014.

- Wang, J.; Rünz, M.; Agapito, L. DSP-SLAM: Object oriented SLAM with deep shape priors. In Proceedings of the 2021 International Conference on 3D Vision (3DV), London, UK, 1–3 December 2021; IEEE: Piscataway, NJ, USA, 2021.

- Yang, S.; Scherer, S. CubeSLAM: Monocular 3-D object SLAM. IEEE Trans. Robot. 2019, 35, 925–938.

- Liao, Z.; Hu, Y.; Zhang, J.; Qi, X.; Zhang, X.; Wang, W. SO-SLAM: Semantic object SLAM with scale proportional and symmetrical texture constraints. IEEE Robot. Autom. Lett. 2022, 7, 4008–4015.

- Salas-Moreno, R.F.; Newcombe, R.A.; Strasdat, H.; Kelly, P.H.J.; Davison, A.J. SLAM++: Simultaneous localisation and mapping at the level of objects. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013.

- Nicholson, L.; Milford, M.; Sünderhauf, N. QuadricSLAM: Dual quadrics from object detections as landmarks in object-oriented SLAM. IEEE Robot. Autom. Lett. 2018, 4, 1–8.

- Tian, R.; Zhang, Y.; Feng, Y.; Yang, L.; Cao, Z.; Coleman, S.; Kerr, D. Accurate and robust object SLAM with 3D quadric landmark reconstruction in outdoors. IEEE Robot. Autom. Lett. 2021, 7, 1534–1541.

- Zhang, J.; Gui, M.; Wang, Q.; Liu, R.; Xu, J.; Chen, S. Hierarchical topic model based object association for semantic SLAM. IEEE Trans. Vis. Comput. Graph. 2019, 25, 3052–3062.

- Sharma, A.; Dong, W.; Kaess, M. Compositional and scalable object SLAM. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: Piscataway, NJ, USA, 2021.

- Dong, J.; Fei, X.; Soatto, S. Visual-inertial-semantic scene representation for 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Yang, B.; Ran, W.; Wang, L.; Lu, H.; Chen, Y.P. Multi-classes and motion properties for concurrent visual SLAM in dynamic environments. IEEE Trans. Multimed. 2021, 24, 3947–3960.

- Xu, B.; Li, W.; Tzoumanikas, D.; Bloesch, M.; Davison, A.; Leutenegger, S. Mid-Fusion: Octree-based object-level multi-instance dynamic SLAM. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019.

- Bescos, B.; Fácil, J.M.; Civera, J.; Neira, J. DynaSLAM: Tracking, mapping, and inpainting in dynamic scenes. IEEE Robot. Autom. Lett. 2018, 3, 4076–4083.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- McCormac, J.; Clark, R.; Bloesch, M.; Davison, A.; Leutenegger, S. Fusion++: Volumetric object-level SLAM. In Proceedings of the 2018 International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; IEEE: Piscataway, NJ, USA, 2018.

- Bowman, S.L.; Atanasov, N.; Daniilidis, K.; Pappas, G.J. Probabilistic data association for semantic SLAM. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; IEEE: Piscataway, NJ, USA, 2017.

- Sucar, E.; Wada, K.; Davison, A. NodeSLAM: Neural object descriptors for multi-view shape reconstruction. In Proceedings of the 2020 International Conference on 3D Vision (3DV), Fukuoka, Japan, 25–28 November 2020; IEEE: Piscataway, NJ, USA, 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).