Abstract

In textile quality control systems, defect detection plays a central role. To address the large number of model parameters, the time-consuming computation, and the limited precision on tiny defect features that arise in textile defect detection, this paper proposes a textile defect detection method based on the YOLO-GCW network model. First, to improve the detection accuracy of tiny defect targets, the CBAM (Convolutional Block Attention Module) attention mechanism was incorporated to guide the model to focus on the spatial localization information of defects. Second, the WIoU (Wise Intersection over Union) loss function was adopted to improve training and detection accuracy; it measures the match between the predicted bounding box and the ground-truth target more accurately, which strengthens the detection of tiny defect targets. Third, to meet the needs of performance optimization and lightweight deployment, Ghost convolution replaced traditional convolution, compressing the model's parameter count and accelerating detection on complex textile textures. Finally, extensive experiments demonstrated the efficiency and effectiveness of the proposed model in various scenarios.

1. Introduction

As a traditional labor-intensive industry, the textile industry is characterized by sensitivity to labor and raw material costs, a high degree of external dependence, and low added value [1]. During production, problems with equipment, operations, storage, or transport may cause textile defects such as rubbed holes, missing warp or weft, skipping, and stains. Textile defects affect not only the appearance and performance of a product but also its price. To ensure product quality, manufacturers must detect textile defects, and defect detection has become a core step in the manufacturing and quality management of textile companies. However, textile defect detection is particularly challenging because of the large number of defect categories, the inefficiency of manual inspection [2], and variations in image quality under different lighting conditions [3].

Advances in deep learning have led to significant progress in object detection. Deep learning methods allow researchers to achieve fine-grained image classification and accurate segmentation, which substantially improves image recognition and the overall accuracy of object detection. In recent years, many researchers have applied deep learning to textile defect detection and proposed a range of methods. For example, Wang et al. [4] proposed a vision-based hardware system with a grouped sparse dictionary method, which optimized the sparse dictionary and accelerated sparse coding in the detection stage while maintaining detection accuracy. Xiang et al. [5] proposed HookNet, a deep-learning fabric defect detector that uses a Parallel Dilated Attention Module (PDAM) to establish global channel dependencies and capture contextual information, together with HookFPN to perform multiscale context aggregation, which improved the model's detection efficiency. However, these works focused mainly on real-time detection on edge devices and did not account for the tiny size of many defect targets, which limited their detection accuracy to some extent. Jeyaraj et al. [6] acquired images with NI Vision, preprocessed them, and computed the Kullback-Leibler divergence; a convolutional neural network was then trained on standard textiles to classify defective and non-defective regions, and the trained network was used to inspect test textiles and accurately localize minor defects. Wei et al. [7] constructed a multilevel, multi-attention deep learning network (MLMA-Net) that improves the detection of tiny defects by strengthening attention to defect regions and using higher-resolution feature maps for multiple defects. Although it improves the detection accuracy of tiny textile defects, it also increases the number of model parameters and layers, which reduces detection speed.

In summary, existing defect detection algorithms have made significant progress and achieved certain results in practical applications, but much remains to be done in terms of detection performance and model size. On the one hand, textile defects are usually tiny targets that are prone to missed and false detections, which reduces detection accuracy; existing algorithms show obvious limitations when defects are tiny, vary in shape, and blend closely into the background texture. On the other hand, model size is also a concern in practical applications. Although deep learning models have strong representational ability and learning potential in theory, their large number of parameters and high computational complexity often lead to heavy hardware consumption and slow inference, which conflicts sharply with the demand for efficient real-time response and low latency in industrial production environments, especially when models are deployed on embedded or edge computing devices.

Building on existing detection algorithms, this paper improves the YOLO algorithm and proposes the YOLO-GCW textile defect detection method to achieve better results in textile defect detection scenarios. The key contributions of this paper are as follows:

- (1) This paper incorporates the CBAM attention mechanism to guide the model to focus on the spatial localization characteristics of textile defects, which effectively addresses the problem of tiny defect detection.

- (2) The WIoU loss function is adopted to quantify the match between the model's predicted bounding box and the ground-truth bounding box more accurately, enhancing the model's accuracy in detecting tiny textile defects.

- (3) Ghost convolution replaces the traditional convolution operation to compress the model's parameter scale and increase its real-time detection speed, enabling convenient, lightweight deployment on embedded and edge devices.

2. Related Work

Conventional target detection methods rely on manually designed features and mainly involve three stages: candidate region generation, feature extraction, and classification. First, sliding windows of different scales are applied to the image to generate a series of candidate regions that may contain targets. Next, feature vectors describing the visual characteristics of each candidate region are extracted, such as Haar features [8], HOG (Histogram of Oriented Gradients) features [9], and SIFT (Scale Invariant Feature Transform) features [10]. Finally, a classifier such as an SVM (Support Vector Machine) determines whether each candidate region contains a predefined target category. However, these handcrafted features generalize poorly in practical applications and perform weakly in complex scenarios.

Recently, with its rapid advancement, deep learning has been applied to speech recognition, image processing, target detection, and other fields. Deep learning-based target detection algorithms [11] use convolutional neural networks to learn features, automatically identifying the features required for detection and classification. A convolutional neural network transforms the raw input into more abstract, higher-level features with strong representational and generalization capabilities, which improves performance in complex scenarios and makes these methods suitable for a wide range of industrial applications [12,13,14,15]. Deep learning detection methods are divided into one-stage and two-stage methods according to whether region proposals are required. Compared with two-stage models such as Faster R-CNN (Region-based Convolutional Neural Network), one-stage methods such as the YOLO series [16] have been widely adopted because their end-to-end design offers advantages in real-time performance, accuracy, and ease of deployment [17,18,19,20].

With the push toward intelligent manufacturing in the textile industry, intelligent inspection technologies are being applied throughout textile production, especially in the crucial task of defect detection. For instance, Li et al. [21] proposed a defect detection system based on an improved YOLOv4, which uses DBSCAN to determine the number of anchor boxes and an ECA-DenseNet-BC-121 feature extraction network to address the low detection rate of tiny targets. Li et al. [22] proposed PEI-YOLOv5, which introduces PDConv, Enhance-BiFPN, and IN loss into the conventional framework; it improved both detection speed and accuracy and raised the mAP by 3.61% to 87.89% on the Tianchi fabric defect dataset. Yang et al. [23] proposed a nondestructive defect detection network, NDD-Net, which improves the speed and accuracy of fabric defect detection with an encoder-decoder structure and an attention fusion block; its residual densely connected convolutional blocks extract richer local features and help it focus on defective regions. Luo et al. [24] proposed a lightweight fabric defect detector based on the attention mechanism, which uses depthwise separable convolution and attention to strengthen defect feature extraction and increase overall detection speed. Zhao et al. [25] proposed the RDD-YOLO model for surface defect detection, which uses Res2Net blocks in the backbone to enlarge the receptive field and extract multiscale features, a DFPN (Dual Feature Pyramid Network) in the neck to generate richer representations, and a decoupled head to separate the regression and classification tasks. Luo et al. [26] proposed an algorithm based on YOLOv3 and a deformable convolutional network to address the low accuracy, high miss and false detection rates, and high labor costs of traditional manual inspection. Di et al. [27] proposed a textile defect detection method that integrates a context-sensitive field and adaptive feature fusion, optimizing bounding boxes through improved context-sensitive field blocks and an adaptive feature fusion network with transposed convolution; they also presented an exponential distance IoU (Intersection over Union) to optimize the bounding box loss, which improved detection accuracy. Kumar et al. [28] proposed supervised and unsupervised textile defect detection methods based on Gabor wavelet features, together with a novel data fusion scheme; by capturing texture information effectively and combining multiple data sources, these methods improve accuracy and robustness. Jing et al. [29] proposed an automatic fabric defect detection method based on a deep convolutional neural network: fabric images were decomposed into labeled local patches, a pre-trained deep convolutional neural network was adapted to these patches through transfer learning, and the class and location of defects were identified in a sliding-window detection stage. Their experiments showed that the method outperformed existing methods in terms of quality and robustness.

3. Proposed Method

This section describes the proposed textile defect detection method in detail, including the YOLO-GCW network structure, the attention mechanism, the loss function, and the lightweighting of the model.

3.1. Textile Defect Detection Framework Based on YOLO-GCW

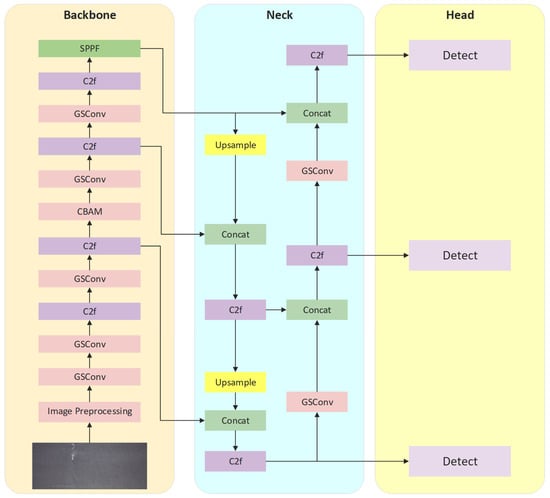

To address tiny defect targets and slow detection in textile production, this study proposes a lightweight model named YOLO-GCW, based on YOLOv8. Figure 1 illustrates the model's structure.

Figure 1.

Structure of the YOLO-GCW Model.

The YOLO-GCW model consists mainly of image preprocessing, a backbone network, a neck network, and a head. First, to strengthen defect information and improve feature extraction efficiency, the input images are preprocessed with Gaussian filtering, binarization, and grayscale conversion.
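The three preprocessing steps can be sketched as follows. The paper names the steps but not their order, kernel size, or threshold, so this minimal pure-Python version assumes grayscale conversion first (ITU-R BT.601 weights), then a 3 × 3 Gaussian kernel, then a fixed global threshold of 128; all of these values, and the function names, are hypothetical. In practice an image library such as OpenCV would do the same work far faster.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
Gray = List[List[float]]

# 3x3 binomial approximation of a Gaussian kernel (weights sum to 16).
KERNEL = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]


def to_gray(img: List[List[RGB]]) -> Gray:
    """Grayscale conversion with ITU-R BT.601 luma weights (assumed)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in img]


def gaussian_blur(gray: Gray) -> Gray:
    """3x3 Gaussian smoothing; border pixels are replicated (clamped indices)."""
    h, w = len(gray), len(gray[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += KERNEL[dy + 1][dx + 1] * gray[yy][xx]
            out[y][x] = acc / 16.0
    return out


def binarize(gray: Gray, threshold: float = 128.0) -> List[List[int]]:
    """Global fixed threshold (hypothetical value): 255 at or above it, else 0."""
    return [[255 if v >= threshold else 0 for v in row] for row in gray]


def preprocess(img: List[List[RGB]], threshold: float = 128.0) -> List[List[int]]:
    """Assumed order: grayscale -> Gaussian blur -> binarization."""
    return binarize(gaussian_blur(to_gray(img)), threshold)
```

Blurring before thresholding suppresses isolated noisy pixels, so the binary map is less sensitive to yarn-level texture noise; the trade-off is that a defect only one pixel wide can also be smoothed away, which is one reason the kernel size matters for tiny defects.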

Next, GSConv modules replace the original convolution modules in both the backbone and neck networks to keep the model lightweight.

Then, the CBAM attention mechanism is introduced into the backbone network. It dynamically adjusts the weights of the feature maps so that the network attends more to defect-related regions and to the spatial localization features of textile defects.

In addition, the WIoU loss is used in the head to evaluate the match between the model's predicted bounding boxes and the actual targets more accurately.

3.2. Convolutional Block Attention Module

Tiny target detection is the most challenging task in textile defect detection because many textile defects are tiny and occupy few pixels, which makes them difficult to locate and to extract features from. Therefore, we add CBAM after the C2f module of the backbone network to guide the model to focus on the spatial localization information of textile defects, so that it can identify and locate tiny defects more accurately.

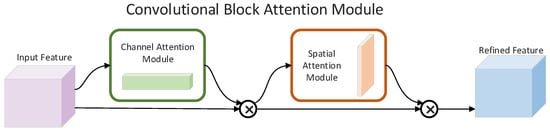

CBAM [30] consists of two distinct sub-modules: the Channel Attention Module (CAM) and the Spatial Attention Module (SAM), which perform channel and spatial attention, respectively. The structure of CBAM is shown in Figure 2.

Figure 2.

Convolutional Block Attention Module.

Figure 3a shows the structure of CAM. It applies both max pooling and average pooling to the input feature map of size H × W × C, producing two 1 × 1 × C descriptors that capture global context. Both descriptors are passed through a shared multilayer perceptron (MLP) with two fully connected layers and a ReLU activation after the first layer. The two outputs are summed element-wise and passed through a sigmoid function, and the resulting channel weights are multiplied by the original feature map to produce the weighted feature map.

Figure 3.

(a) CAM Module Structure; (b) SAM Module Structure.

Figure 3b illustrates the structure of SAM. It takes the feature map produced by the channel attention module and applies max pooling and average pooling along the channel axis to create two H × W × 1 feature maps, fusing information across channels. These maps are concatenated and processed by a 7 × 7 convolution, and the output is passed through a sigmoid function. Finally, the resulting spatial attention map is multiplied by the input feature map of SAM to produce CBAM's final output.
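To make the CAM and SAM data flow concrete, here is a minimal pure-Python sketch of one CBAM block on a single feature map stored as nested lists (C channels of H × W values). It is not the authors' implementation: the MLP weights and the two 7 × 7 kernels are hypothetical (random in the demo), biases and the batch dimension are omitted, and a practical version would use PyTorch layers such as `nn.Conv2d` rather than Python loops.

```python
import math
import random
from typing import List

Matrix = List[List[float]]
FeatureMap = List[Matrix]  # C channels, each an H x W matrix


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def shared_mlp(v: List[float], w1: Matrix, w2: Matrix) -> List[float]:
    """W2 . ReLU(W1 . v); biases omitted. w1 is (C/r x C), w2 is (C x C/r)."""
    hidden = [max(0.0, sum(a * b for a, b in zip(row, v))) for row in w1]
    return [sum(a * b for a, b in zip(row, hidden)) for row in w2]


def channel_attention(f: FeatureMap, w1: Matrix, w2: Matrix) -> FeatureMap:
    """CAM: global avg/max pooling -> shared MLP -> sum -> sigmoid -> reweight channels."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in f]
    mx = [max(map(max, ch)) for ch in f]
    weights = [sigmoid(a + m) for a, m in zip(shared_mlp(avg, w1, w2), shared_mlp(mx, w1, w2))]
    return [[[wc * v for v in row] for row in ch] for wc, ch in zip(weights, f)]


def spatial_attention(f: FeatureMap, kernels: List[Matrix]) -> FeatureMap:
    """SAM: channel-wise avg/max maps -> 7x7 conv (zero padding) -> sigmoid -> reweight pixels."""
    c, h, w = len(f), len(f[0]), len(f[0][0])
    avg = [[sum(f[i][y][x] for i in range(c)) / c for x in range(w)] for y in range(h)]
    mx = [[max(f[i][y][x] for i in range(c)) for x in range(w)] for y in range(h)]
    pooled = (avg, mx)  # the two concatenated input planes of the convolution
    p = len(kernels[0]) // 2  # padding 3 keeps H x W for a 7x7 kernel
    att = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            s = 0.0
            for plane, k in zip(pooled, kernels):
                for dy in range(-p, p + 1):
                    for dx in range(-p, p + 1):
                        yy, xx = y + dy, x + dx
                        if 0 <= yy < h and 0 <= xx < w:
                            s += k[dy + p][dx + p] * plane[yy][xx]
            att[y][x] = sigmoid(s)
    return [[[att[y][x] * ch[y][x] for x in range(w)] for y in range(h)] for ch in f]


def cbam(f: FeatureMap, w1: Matrix, w2: Matrix, kernels: List[Matrix]) -> FeatureMap:
    """Sequential CBAM: channel attention first, then spatial attention."""
    return spatial_attention(channel_attention(f, w1, w2), kernels)


if __name__ == "__main__":
    random.seed(0)
    C, H, W, r = 4, 6, 6, 2  # toy sizes; r is the MLP reduction ratio
    feat = [[[random.random() for _ in range(W)] for _ in range(H)] for _ in range(C)]
    w1 = [[random.uniform(-1, 1) for _ in range(C)] for _ in range(C // r)]
    w2 = [[random.uniform(-1, 1) for _ in range(C // r)] for _ in range(C)]
    ks = [[[random.uniform(-0.1, 0.1) for _ in range(7)] for _ in range(7)] for _ in range(2)]
    out = cbam(feat, w1, w2, ks)
    print(len(out), len(out[0]), len(out[0][0]))  # shape is preserved: 4 6 6
```

Because both attention maps come from a sigmoid, every output value is the input scaled by a factor in (0, 1): CBAM only re-weights features, which is why it can be inserted after a C2f module without changing tensor shapes.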

3.3. Loss Function

To cope with tiny textile defects, the model's loss function must measure the match between the predicted bounding boxes and the actual targets more accurately, so that the model's predictions become more precise. In object detection, IoU is the ratio of the intersection to the union of the predicted box and the ground-truth box, and it reflects the localization accuracy of the model. It is calculated as follows:

$$
\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|}
$$

Here, $A$ denotes the region of the predicted bounding box, $B$ denotes the region of the ground-truth bounding box, and $|\cdot|$ denotes area.
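A minimal sketch of the IoU formula for axis-aligned boxes, assuming corner format (x1, y1, x2, y2); the function names are illustrative only.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corner format


def box_area(b: Box) -> float:
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def iou(a: Box, b: Box) -> float:
    """IoU = |A ∩ B| / |A ∪ B| for two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = iw * ih
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1 / 7, about 0.1429
print(iou((0, 0, 1, 1), (5, 5, 6, 6)), iou((0, 0, 1, 1), (2, 2, 3, 3)))  # 0.0 0.0
```

The last line shows the weakness that motivates GIoU: two non-overlapping predictions score 0 regardless of how far they are from the target, so an IoU-only loss gives no signal about which one is closer.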

In 2019, GIoU [31] was introduced. It uses the smallest rectangle enclosing both the predicted and ground-truth boxes and penalizes the proportion of that rectangle not covered by the two boxes, which resolves the zero-gradient problem when the boxes do not intersect. However, when one box contains the other, GIoU degenerates to IoU. Therefore, Zheng et al. [32] proposed DIoU, which considers both the distance between the box centers and their overlap area, addressing the slow convergence and low precision of GIoU. They further added an aspect-ratio term to DIoU to obtain CIoU, which is calculated as follows:

$$
\mathrm{CIoU} = \mathrm{IoU} - \frac{\rho^{2}(b, b^{gt})}{c^{2}} - \alpha v, \qquad
v = \frac{4}{\pi^{2}}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^{2}, \qquad
\alpha = \frac{v}{(1 - \mathrm{IoU}) + v}
$$

Here, $b$ and $b^{gt}$ denote the center points of the predicted and ground-truth bounding boxes, $\rho(\cdot)$ is the Euclidean distance between them, $c$ is the diagonal length of the smallest rectangle enclosing both boxes, $w^{gt}$ and $h^{gt}$ are the width and height of the ground-truth bounding box, and $w$ and $h$ are the width and height of the predicted bounding box.

The loss function of the YOLOv8 model consists of object loss, classification loss, and regression loss. The regression loss is the CIoU loss, calculated as follows:

$$
L_{CIoU} = 1 - \mathrm{CIoU} = 1 - \mathrm{IoU} + \frac{\rho^{2}(b, b^{gt})}{c^{2}} + \alpha v
$$
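The progression from GIoU to DIoU to CIoU can be checked numerically with the short sketch below, which follows the formulas above for boxes in corner format (x1, y1, x2, y2) with positive width and height. Function names are illustrative, not taken from YOLOv8's code.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), positive width and height


def _terms(p: Box, g: Box):
    """Return IoU, union area, enclosing-box area, squared diagonal c^2, squared centre distance rho^2."""
    iw = max(0.0, min(p[2], g[2]) - max(p[0], g[0]))
    ih = max(0.0, min(p[3], g[3]) - max(p[1], g[1]))
    inter = iw * ih
    union = (p[2] - p[0]) * (p[3] - p[1]) + (g[2] - g[0]) * (g[3] - g[1]) - inter
    cw = max(p[2], g[2]) - min(p[0], g[0])  # smallest enclosing box width
    ch = max(p[3], g[3]) - min(p[1], g[1])  # smallest enclosing box height
    rho2 = ((p[0] + p[2] - g[0] - g[2]) / 2) ** 2 + ((p[1] + p[3] - g[1] - g[3]) / 2) ** 2
    return inter / union, union, cw * ch, cw ** 2 + ch ** 2, rho2


def giou(p: Box, g: Box) -> float:
    iou, union, c_area, _, _ = _terms(p, g)
    return iou - (c_area - union) / c_area


def diou(p: Box, g: Box) -> float:
    iou, _, _, c2, rho2 = _terms(p, g)
    return iou - rho2 / c2


def ciou(p: Box, g: Box) -> float:
    iou, _, _, c2, rho2 = _terms(p, g)
    w, h = p[2] - p[0], p[3] - p[1]
    wg, hg = g[2] - g[0], g[3] - g[1]
    v = (4 / math.pi ** 2) * (math.atan(wg / hg) - math.atan(w / h)) ** 2
    alpha = v / ((1 - iou) + v) if v > 0 else 0.0  # trade-off weight; 0 when aspect ratios match
    return iou - rho2 / c2 - alpha * v


def ciou_loss(p: Box, g: Box) -> float:
    return 1.0 - ciou(p, g)


outer, inner = (0, 0, 4, 4), (0, 0, 2, 2)
print(giou(outer, inner), diou(outer, inner))  # 0.25 0.1875 (GIoU equals IoU here)
```

In the example one box contains the other, so the GIoU penalty term vanishes and GIoU equals the plain IoU of 0.25, while the center-distance term of DIoU still lowers the score to 0.1875. CIoU additionally penalizes mismatched aspect ratios through $\alpha v$.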

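However, because the weighting of the aspect-ratio penalty in the CIoU loss is not clearly justified, the WIoU v3 [33] loss is used instead of the original loss function. When dealing with objects of different shapes and sizes, this loss better balances the influence of large and small targets, which helps the model detect small targets and improves the overall performance of the network. WIoU v1 has a two-layer attention mechanism, calculated as follows:

$$
L_{WIoUv1} = R_{WIoU} \, L_{IoU}, \qquad
R_{WIoU} = \exp\left(\frac{(x - x^{gt})^{2} + (y - y^{gt})^{2}}{\left(W_g^{2} + H_g^{2}\right)^{*}}\right), \qquad
L_{IoU} = 1 - \mathrm{IoU}
$$

Here, $(x, y)$ and $(x^{gt}, y^{gt})$ are the centers of the predicted and ground-truth boxes, $W_g$ and $H_g$ are the width and height of the smallest enclosing box, and the superscript $*$ indicates that the term is detached from the gradient computation.

The outlier degree $\beta$ is defined to describe the quality of an anchor box; a lower outlier degree indicates a higher-quality anchor box. Building on WIoU v1, a nonmonotonic focusing coefficient constructed from $\beta$ yields WIoU v3:

$$
\beta = \frac{L_{IoU}^{*}}{\overline{L_{IoU}}} \in [0, +\infty), \qquad
L_{WIoUv3} = r \, L_{WIoUv1}, \qquad
r = \frac{\beta}{\delta \alpha^{\beta - \delta}}
$$

Here, $r$ is the nonmonotonic focusing coefficient, with $r = 1$ when $\beta = \delta$; $L_{IoU}^{*}$ is the detached IoU loss, which serves as the monotonic focusing factor; $\overline{L_{IoU}}$ is the moving average of $L_{IoU}$ with momentum $m$; and $\alpha$ and $\delta$ are hyperparameters.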
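The following scalar sketch computes WIoU v3 for one predicted box and one ground-truth box, following the formulas above. The class and function names are hypothetical; the defaults α = 1.9 and δ = 3 and the momentum 0.01 are assumed values (the text does not state the ones used); and updating the running mean before computing β is one possible ordering, chosen here for simplicity. A training implementation would operate on batched tensors and detach the starred terms from autograd.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(p: Box, g: Box) -> float:
    iw = max(0.0, min(p[2], g[2]) - max(p[0], g[0]))
    ih = max(0.0, min(p[3], g[3]) - max(p[1], g[1]))
    inter = iw * ih
    union = (p[2] - p[0]) * (p[3] - p[1]) + (g[2] - g[0]) * (g[3] - g[1]) - inter
    return inter / union


def focusing_coefficient(beta: float, alpha: float = 1.9, delta: float = 3.0) -> float:
    """Nonmonotonic r = beta / (delta * alpha ** (beta - delta)); equals 1 when beta == delta."""
    return beta / (delta * alpha ** (beta - delta))


class WIoUv3:
    """Hypothetical scalar helper: WIoU v3 loss for one predicted/ground-truth box pair."""

    def __init__(self, alpha: float = 1.9, delta: float = 3.0, momentum: float = 0.01):
        self.alpha, self.delta, self.momentum = alpha, delta, momentum
        self.mean_l_iou = 1.0  # running mean of L_IoU (assumed initial value)

    def __call__(self, p: Box, g: Box) -> float:
        l_iou = 1.0 - iou(p, g)
        # R_WIoU: centre-distance penalty; the enclosing-box term is a plain constant here,
        # which mirrors the detach (*) in the formula.
        dx = (p[0] + p[2] - g[0] - g[2]) / 2
        dy = (p[1] + p[3] - g[1] - g[3]) / 2
        wg = max(p[2], g[2]) - min(p[0], g[0])
        hg = max(p[3], g[3]) - min(p[1], g[1])
        l_v1 = math.exp((dx ** 2 + dy ** 2) / (wg ** 2 + hg ** 2)) * l_iou
        # Update the running mean with momentum m, then measure the outlier degree beta.
        self.mean_l_iou = (1 - self.momentum) * self.mean_l_iou + self.momentum * l_iou
        beta = l_iou / self.mean_l_iou
        return focusing_coefficient(beta, self.alpha, self.delta) * l_v1
```

With these assumed hyperparameters, $r$ is 0 at $\beta = 0$, peaks above 1 around $\beta = 1/\ln\alpha \approx 1.56$, returns to exactly 1 at $\beta = \delta = 3$, and decays for larger $\beta$. Anchors of ordinary quality therefore receive the largest gradient gain, while outliers (often low-quality or ambiguous labels) are down-weighted.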

3.4. Ghost Convolution

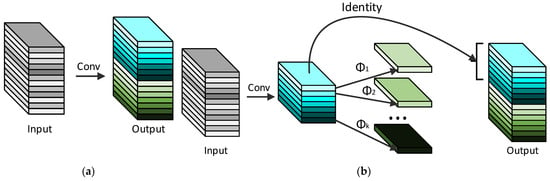

Because of hardware limitations, deploying intelligent textile defect detection models on embedded devices is challenging, so lightweight models are in high demand. The Ghost module [34] can replace each convolutional layer in an existing convolutional network, compressing the model and reducing parameters and computation while maintaining accuracy, which improves computation speed. Figure 4 shows the structures of standard and Ghost convolution. To counter the extra network layers and parameters introduced by the CBAM module, Ghost convolution replaces the standard convolutions in the backbone and neck networks of YOLOv8. These changes increase the model's detection speed and allow convenient, lightweight deployment on edge devices.

Figure 4.

(a) Standard Convolution; (b) Ghost Convolution.

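The Ghost module first applies an ordinary convolution to the input feature map, producing half as many output channels as a standard convolution would. Cheap linear transformations are then applied to each of these intrinsic feature maps separately, and the results are concatenated with the intrinsic maps to form the output. The calculation process is as follows:

$$
Y' = X * f', \qquad
y_{ij} = \Phi_{i,j}(y'_i), \quad i = 1, \dots, m, \; j = 1, \dots, s - 1
$$

Here, $X$ is the input feature map; $f'$ is the convolution kernel; $Y' = [y'_1, \dots, y'_m]$ contains the $m$ intrinsic feature maps produced by the convolution, with $m \le n$, where $n$ is the number of output feature maps; $y'_i$ is the $i$th intrinsic feature map; $\Phi_{i,j}$ is the $j$th linear transformation applied to it; and $y_{ij}$ is the resulting ghost feature map. $Y'$ and all $y_{ij}$ are concatenated to obtain the final feature map $Y$ with $n = m \cdot s$ channels ($s = 2$ when the convolution produces half the channels).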
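The parameter saving can be worked out directly. The sketch below counts convolution weights for a standard layer and for a Ghost module, assuming $s = 2$ (half the channels from the primary convolution, as described above) and a 3 × 3 depthwise kernel for the cheap operations; the depthwise kernel size is an assumption, and biases and BatchNorm are ignored.

```python
import math


def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k convolution (bias and BatchNorm omitted)."""
    return c_in * c_out * k * k


def ghost_conv_params(c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """Ghost module: a primary k x k conv yields m = ceil(c_out / s) intrinsic maps, then
    (s - 1) cheap d x d depthwise operations per intrinsic map yield the ghost maps."""
    m = math.ceil(c_out / s)
    primary = c_in * m * k * k
    cheap = m * (s - 1) * d * d
    return primary + cheap


for c in (64, 128, 256):
    std, gh = standard_conv_params(c, c, 3), ghost_conv_params(c, c, 3)
    print(c, std, gh, round(std / gh, 3))
```

For a 256-to-256-channel 3 × 3 layer this gives 589,824 weights for the standard convolution versus 296,064 for the Ghost module. The ratio $2ck^2 / (ck^2 + d^2)$ approaches $s = 2$ as the channel count grows, because the depthwise term becomes negligible; FLOPs scale the same way, multiplied by the output spatial size.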

4. Experiment Results and Analysis

4.1. Dataset and Experimental Environment

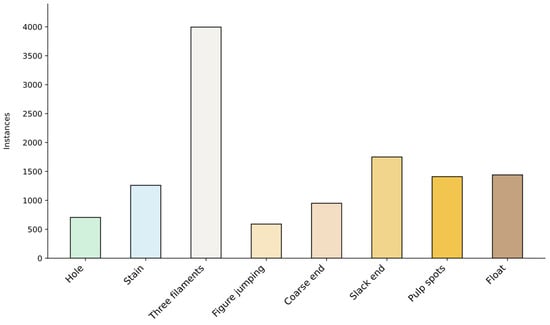

The dataset analyzed in this study is available in the Ali Tianchi cloth defect detection public dataset repository: https://tianchi.aliyun.com/dataset/79336 (accessed on 22 November 2024). To verify the performance of the improved algorithm in textile defect detection, we selected images covering eight defect types from the dataset: Hole, Stain, Three Filaments, Figure jumping, Coarse end, Slack end, Pulp spots, and Float, for a total of 2420 images.

Data augmentation, including flipping, brightness adjustment, and noise addition, was applied to the dataset, expanding it to 12,100 images. The number of images in the dataset is shown in Figure 5. The dataset was divided into a training set and a validation set in a ratio of 8:2. The label counts are shown in Table 1 (a label file may contain multiple labels).
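The augmentations and the 8:2 split can be sketched as below. The text gives no augmentation parameters, so the brightness factor, noise level, and seed are hypothetical, and images are represented as nested lists of grayscale values for a dependency-free example. For detection data, geometric augmentations must transform the labels too, which is why a matching box flip is included.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")
Image = List[List[float]]  # grayscale, values in [0, 255]
Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def hflip(img: Image) -> Image:
    return [row[::-1] for row in img]


def hflip_box(box: Box, width: float) -> Box:
    """Mirror a box to match hflip: x -> width - x, so x1 and x2 swap roles."""
    x1, y1, x2, y2 = box
    return (width - x2, y1, width - x1, y2)


def adjust_brightness(img: Image, factor: float) -> Image:
    return [[min(255.0, max(0.0, v * factor)) for v in row] for row in img]


def add_gaussian_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    return [[min(255.0, max(0.0, v + rng.gauss(0.0, sigma))) for v in row] for row in img]


def split_train_val(items: Sequence[T], train_ratio: float = 0.8,
                    seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle with a fixed seed, then cut at train_ratio (8:2 by default)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(train_ratio * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]


train, val = split_train_val(range(12100))
print(len(train), len(val))  # 9680 2420
```

One design choice worth noting: if the 12,100 images are split after augmentation, flipped or brightened copies of the same photograph can land in both sets and inflate validation scores. Splitting the 2,420 source images first and augmenting only within each set avoids this. The text does not state which order was used.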

Figure 5.

Number of images in the textile defects dataset.

Table 1.

Number of labels in the textile defects dataset.

The experiments in this paper were conducted on Windows 10 with an i5-11400 CPU, NVIDIA GeForce RTX 3060 GPU, CUDA 11.6, Python 3.8.0, and PyTorch 1.10.2.

4.2. Evaluation Metrics

Several evaluation metrics were adopted to measure the performance of the proposed model comprehensively: Precision (P), Recall (R), F1-Score, Mean Average Precision (mAP@0.5, mAP@0.5:0.95), Number of Parameters (Params), and Frames Per Second (FPS).

P measures the proportion of correctly predicted positive samples among all predicted positives. R reflects the model's coverage, i.e., the proportion of true positive samples that the model successfully identifies. mAP evaluates overall detection performance; mAP@0.5 uses an IoU threshold of 0.5, meaning that a detection counts as correct only if the IoU between the predicted and ground-truth boxes is greater than 0.5. The number of parameters indicates model size, and FPS measures the number of frames processed per second during inference, reflecting the model's speed and real-time performance.
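FPS depends heavily on how it is measured, so a sketch helps pin down the idea: time a fixed number of inference calls after a few untimed warm-up calls and divide frames by elapsed seconds. The `infer` callable below is a hypothetical stand-in for the detector. For GPU inference, as on the RTX 3060 used here, CUDA kernels run asynchronously, so a real measurement would call `torch.cuda.synchronize()` before reading the clock. Whether preprocessing and NMS are included, and the batch size, also change the number; the text does not specify these.

```python
import time
from typing import Callable, Sequence


def measure_fps(infer: Callable[[object], object], frames: Sequence[object],
                warmup: int = 5) -> float:
    """Frames per second over `frames`, excluding a few warm-up calls from the timing."""
    for frame in frames[:warmup]:
        infer(frame)  # warm-up: caches, lazy initialisation, etc.
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed


# Hypothetical stand-in for a detector that needs about 10 ms per frame.
fps = measure_fps(lambda _: time.sleep(0.01), list(range(20)))
print(f"{fps:.1f} FPS")  # at most 100, since each call sleeps at least 10 ms
```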

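The following are the specific formulas for each evaluation index:

$$
P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \cdot P \cdot R}{P + R}
$$

$$
AP = \int_{0}^{1} P(R)\, dR, \qquad mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i
$$

where TP is the number of defects correctly identified by the model, FP is the number of non-defective samples misclassified as defects, FN is the number of defects that the model failed to identify, and N is the number of defect classes.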
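The metrics above can be computed end to end with the sketch below: detections are matched to ground truth at an IoU threshold, the matches yield a precision-recall curve, and AP is the area under its precision envelope. It is a single-class, single-image simplification with hypothetical function names; mAP is the mean of the per-class AP values. Evaluation toolkits such as pycocotools use 101-point interpolation and slightly different matching rules, so their numbers can differ a little from this all-point version.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def iou(a: Box, b: Box) -> float:
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(dets: Sequence[Tuple[float, Box]], gts: Sequence[Box],
          thr: float) -> List[Tuple[float, bool]]:
    """Simplified greedy matching: highest score first, each ground truth claimed at most once."""
    used, out = set(), []
    for score, box in sorted(dets, key=lambda d: d[0], reverse=True):
        best, best_j = 0.0, -1
        for j, g in enumerate(gts):
            v = iou(box, g)
            if j not in used and v > best:
                best, best_j = v, j
        hit = best_j >= 0 and best > thr  # IoU greater than the threshold
        if hit:
            used.add(best_j)
        out.append((score, hit))
    return out


def average_precision(scored: Sequence[Tuple[float, bool]], n_gt: int) -> float:
    """All-point interpolated AP: area under the precision envelope of the P-R curve."""
    if n_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for _, hit in sorted(scored, key=lambda s: s[0], reverse=True):
        tp, fp = tp + hit, fp + (not hit)
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])  # make precision non-increasing in recall
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def map_50_95(dets: Sequence[Tuple[float, Box]], gts: Sequence[Box]) -> float:
    """Single-class AP averaged over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(average_precision(match(dets, gts, t), len(gts)) for t in thresholds) / len(thresholds)
```

For example, three detections ranked true, false, true against two ground-truth defects give precision values of 1, 1/2, and 2/3 at recall 0.5, 0.5, and 1; the envelope lifts the middle point to 2/3, so AP = 0.5 × 1 + 0.5 × 2/3 = 5/6.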

4.3. Comparative Experiments

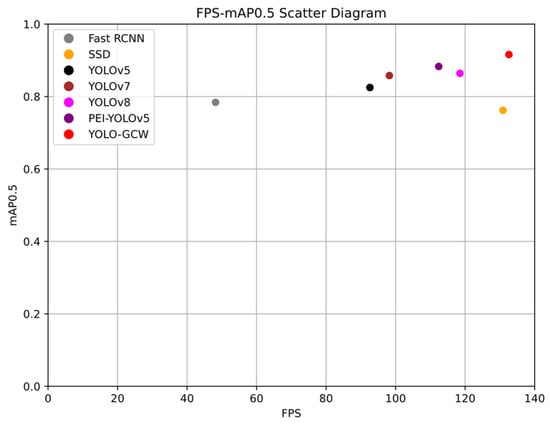

To evaluate the YOLO-GCW model more comprehensively, we conducted comparison experiments on the Tianchi public dataset against five existing mainstream models, PEI-YOLOv5 [22], YOLOv5 [35], YOLOv7 [36], Fast RCNN [37], and SSD [38], as well as the YOLOv8 baseline model. The results are shown in Table 2.

Table 2.

Comparative experimental results.

Table 2 shows that SSD and Fast RCNN differ only slightly in detection accuracy on the textile defect detection task. Although Fast RCNN achieves better detection performance than SSD, as a typical two-stage detector it has more parameters and a relatively slow detection speed, which limits its application in real factories.

Meanwhile, YOLOv5 and YOLOv7 perform better than these two algorithms on tiny defect targets, but their results are still unsatisfactory. Although PEI-YOLOv5 greatly improves detection accuracy compared with the other models, its speed remains unsatisfactory. In contrast, the improved YOLO-GCW model achieves an mAP@0.5 of 0.916, and its detection accuracy and speed are clearly better than those of the above models, improving overall detection performance.

Figure 6 shows an FPS-mAP@0.5 scatter plot for each model, which illustrates the differences between the models more intuitively.

Figure 6.

FPS-mAP0.5 Scatter Diagram.

Based on these findings, the proposed algorithm performs better in textile defect detection than the other evaluated algorithms.

Table 3 shows the detection effectiveness of the different models for each defect in the Tianchi defect dataset.

Table 3.

Effectiveness of different models in detecting defects.

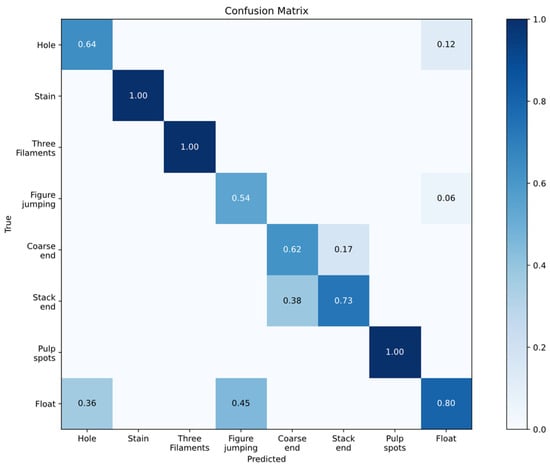

Table 3 shows that SSD and Fast RCNN miss detections to varying degrees in defect categories such as Stain, Three filaments, Coarse end, Slack end, and Pulp spots, whereas the YOLO series recognizes most defects, and YOLO-GCW in particular recognizes every defect type with high accuracy. We also present the confusion matrix for the dataset in Figure 7, where the x-axis represents the predicted class and the y-axis represents the true class, which further verifies the accuracy of the proposed method.

Figure 7.

Confusion matrix.

Further analysis shows that by combining CBAM with the improved loss function, YOLO-GCW focuses better on defect localization information and localizes tiny defect targets accurately, while GhostConv makes the model lightweight. These comparison experiments further verify the effectiveness and feasibility of the proposed algorithm.

4.4. Ablation Experiments

To evaluate the contribution of each component of the proposed model, five ablation experiments were conducted in the same environment; the results are presented in Table 4.

Table 4.

Results of ablation experiments.

These five experiments show the effect of each improvement, including its strengths and weaknesses, so that the effectiveness of each method can be analyzed clearly. As shown in Table 4, the first experiment, the YOLOv8 baseline model, achieved an mAP@0.5 of 0.864 on the textile defect detection task.

In the second experiment, integrating the CBAM attention mechanism alone provided a small increase in accuracy but reduced detection speed, mainly because the added network depth increased the number of model parameters.

In the third experiment, replacing the standard convolution modules in the baseline model with GSConv significantly reduced the number of parameters, indicating that GSConv improves model efficiency.

The fourth experiment therefore combined CBAM and GSConv on the baseline model, which reduced the number of model layers while retaining the focus on defect localization information; accuracy increased significantly and the number of parameters decreased to some extent.

In the fifth experiment, the complete YOLO-GCW model further increased accuracy by optimizing the loss function, while also offering higher detection speed and fewer parameters, showing that the loss-function change adds to the gains of the previous improvements.

In summary, YOLO-GCW improves the detection accuracy of tiny defect targets through CBAM and WIoU, while GhostConv effectively compresses the model parameters to make the model lightweight.

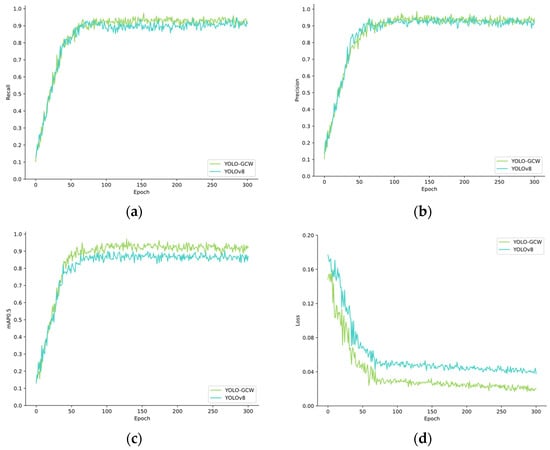

Figure 8 also compares the training curves of YOLO-GCW and the YOLOv8 baseline. YOLO-GCW and YOLOv8 are essentially the same in precision, but YOLO-GCW is clearly better in recall, mAP@0.5, and loss, which verifies the validity of the proposed model.

Figure 8.

(a) Recall comparison curves; (b) Precision comparison curve; (c) mAP0.5 comparison curve; (d) Loss comparison curve.

These results show that the proposed model improves the localization and recognition of tiny textile defects by incorporating the attention mechanism, improving the loss function, and lightweighting the model. The proposed model improves both detection accuracy and speed and is suited to deployment on factory edge equipment, which strengthens its practicality and ease of use in textile defect detection.

5. Discussion

Defect detection is an important step in textile quality control. However, it is challenging because it requires detecting tiny targets in complex environments and deploying a lightweight model.

To solve these problems, a textile defect detection method based on YOLO-GCW was proposed: (1) The YOLOv8 model was used as the basic framework, and the CBAM attention mechanism was integrated to improve the identification of small textile defect targets. (2) The traditional loss function was replaced with the WIoU loss function to evaluate the match between the bounding box predicted by the model and the actual defect boundary more accurately. (3) Considering practical application scenarios on embedded and edge devices, this study adopts the Ghost convolution module instead of the conventional convolution operation to reduce computational complexity and model parameter size, further promoting real-time response and convenient, lightweight deployment of the model.

In order to verify the superiority and practical value of the proposed method, we applied it to the publicly available textile defect dataset of Tianchi and conducted comparison experiments with some existing detection methods. The experiments proved that the defect detection method based on YOLO-GCW not only presents advantages in the accuracy index but also effectively reduces the model parameters to facilitate the lightweight deployment and application for embedded and edge devices while guaranteeing the detection quality.

However, the Tianchi public dataset used in this paper lacks detailed information on influencing factors in different environments. In future work, we will supplement and expand the dataset to investigate the effects of various environmental factors and thereby enhance the method's applicability.

Author Contributions

J.C. and Y.X. conducted the project planning; B.W. was in charge of the investigation; Y.X. and J.C. performed the experimental work and data analysis; Y.X. wrote the original draft; J.C. and W.L. reviewed and edited the manuscript; G.W. provided supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported in part by the Education Department of the Shaanxi Provincial Government (Program No. 23JS027); in part by the Key Laboratory of Expressway Construction Machinery of Shaanxi Province and the Fundamental Research Funds for the Central Universities, CHD (Chang’an University, No. 300102250510); and in part by the Research Foundation of Xi’an Polytechnic University (No. BS201847).

Data Availability Statement

The dataset analyzed in this study is available in the Ali Tianchi cloth defect detection public dataset repository, https://tianchi.aliyun.com/dataset/79336 (accessed on 22 November 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Wu, W.; He, C. China’s textile export trade network expansion. World Reg. Stud. 2022, 31, 12–28.

2. Kumar, A. Computer-Vision-Based Fabric Defect Detection: A Survey. IEEE Trans. Ind. Electron. 2008, 55, 348–363.

3. Ha, Y.S.; Oh, M.; Pham, M.V.; Lee, J.S.; Kim, Y.T. Enhancements in Image Quality and Block Detection Performance for Reinforced Soil-Retaining Walls under Various Illuminance Conditions. Adv. Eng. Softw. 2024, 195, 103713.

4. Wang, X.; Yan, B.; Pan, R.; Zhou, J. Real-time textile fabric flaw inspection system using grouped sparse dictionary. J. Real-Time Image Process. 2023, 20, 67.

5. Xiang, Z.; Shen, Y.; Ma, M.; Qian, M. HookNet: Efficient Multiscale Context Aggregation for High-Accuracy Detection of Fabric Defects. IEEE Trans. Instrum. Meas. 2023, 72, 5016311.

6. Jeyaraj, P.R.; Nadar, E.R.S. Effective textile quality processing and an accurate inspection system using the advanced deep learning technique. Text. Res. J. 2020, 90, 971–980.

7. Wei, B.; Xu, B.; Hao, K.; Gao, L. Textile defect detection using multilevel and attentional deep learning network (MLMA-Net). Text. Res. J. 2022, 92, 3462–3477.

8. Tao, C.; Duan, Y.; Hong, X. Discrimination of fabric frictional sounds based on Haar features. Text. Res. J. 2019, 89, 2067–2074.

9. Wei, Y.; Tian, Q.; Guo, J.; Huang, W.; Cao, J. Multi-vehicle detection algorithm through combining Harr and HOG features. Math. Comput. Simul. 2019, 155, 130–145.

10. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

11. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

12. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

13. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

14. Sermanet, P. OverFeat: Integrated Recognition, Localization and Detection using Convolutional Networks. arXiv 2013, arXiv:1312.6229.

15. Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; et al. Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs. JAMA 2016, 316, 2402–2410.

16. Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A Comprehensive Review of YOLO: From YOLOv1 to YOLOv8 and Beyond. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

17. Wagner, T.; Merino, F.; Stabrin, M.; Moriya, T.; Antoni, C.; Apelbaum, A.; Hagel, P.; Sitsel, O.; Raisch, T.; Prumbaum, D.; et al. SPHIRE-crYOLO is a fast and accurate fully automated particle picker for cryo-EM. Commun. Biol. 2019, 2, 218.

18. Nogueira, R.G.; Jadhav, A.P.; Haussen, D.C.; Bonafe, A.; Budzik, R.F.; Bhuva, P.; Yavagal, D.R.; Ribo, M.; Cognard, C.; Hanel, R.A.; et al. Thrombectomy 6 to 24 hours after stroke with a mismatch between deficit and infarct. N. Engl. J. Med. 2018, 378, 11–21.

19. Ozturk, T.; Talo, M.; Yildirim, E.A.; Baloglu, U.B.; Yildirim, O.; Acharya, U.R. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput. Biol. Med. 2020, 121, 103792.

20. Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

21. Li, Y.; Song, L.; Cai, Y.; Fang, Z.; Tang, M. Research on fabric surface defect detection algorithm based on improved Yolo_v4. Sci. Rep. 2024, 14, 5537.

22. Li, X.; Zhu, Y. A real-time and accurate convolutional neural network for fabric defect detection. Complex Intell. Syst. 2024, 10, 3371–3387.

23. Yang, L.; Fan, J.; Huo, B.; Li, E.; Liu, Y. A nondestructive automatic defect detection method with pixelwise segmentation. Knowl.-Based Syst. 2022, 242, 108338.

24. Luo, X.; Ni, Q.; Tao, R.; Shi, Y. A Lightweight Detector Based on Attention Mechanism for Fabric Defect Detection. IEEE Access 2023, 11, 33554–33569.

25. Zhao, C.; Shu, X.; Yan, X.; Zuo, X.; Zhu, F. RDD-YOLO: A modified YOLO for detection of steel surface defects. Meas. J. Int. Meas. Confed. 2023, 214, 112776.

26. Luo, X.; Cheng, Z.; Ni, Q.; Tao, R.; Shi, Y. Defect detection algorithm for fabric based on deformable convolutional network. Text. Res. J. 2022, 93, 2342–2345.

27. Di, L.; Deng, S.; Liang, J.; Liu, H. Context receptive field and adaptive feature fusion for fabric defect detection. Soft Comput. 2022, 27, 13421–13434.

28. Kumar, A.; Pang, G.K. Defect detection in textured materials using Gabor filters. IEEE Trans. Ind. Appl. 2002, 38, 425–440.

29. Jing, J.F.; Ma, H.; Zhang, H.H. Automatic fabric defect detection using a deep convolutional neural network. Color. Technol. 2019, 135, 213–223.

30. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Computer Vision—ECCV 2018, Proceedings of the 15th European Conference, Munich, Germany, 8–14 September 2018; Proceedings, Part VII; Lecture Notes in Computer Science, Vol. 11211; pp. 3–19.

31. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection over Union: A Metric and a Loss for Bounding Box Regression. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

32. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, the Thirty-Second Innovative Applications of Artificial Intelligence Conference and the Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12993–13000.

33. Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding Box Regression Loss with Dynamic Focusing Mechanism. arXiv 2023, arXiv:2301.10051.

34. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features from Cheap Operations. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1577–1586.

35. Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW); pp. 2778–2788.

36. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 7464–7475.

37. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

38. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision—ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).