PLD-DETR: A Method for Defect Inspection of Power Transmission Lines

Abstract

1. Introduction

- (1)

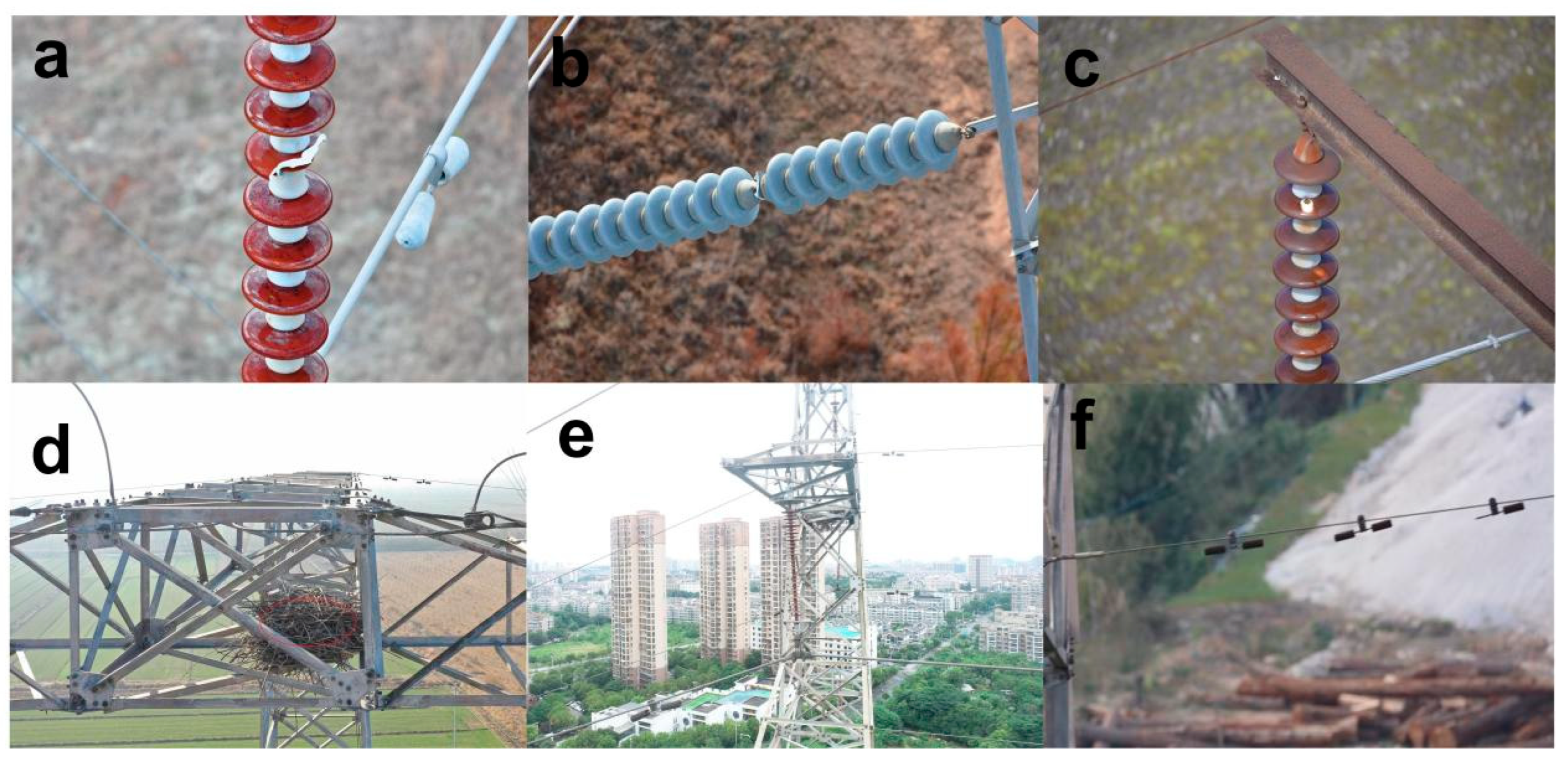

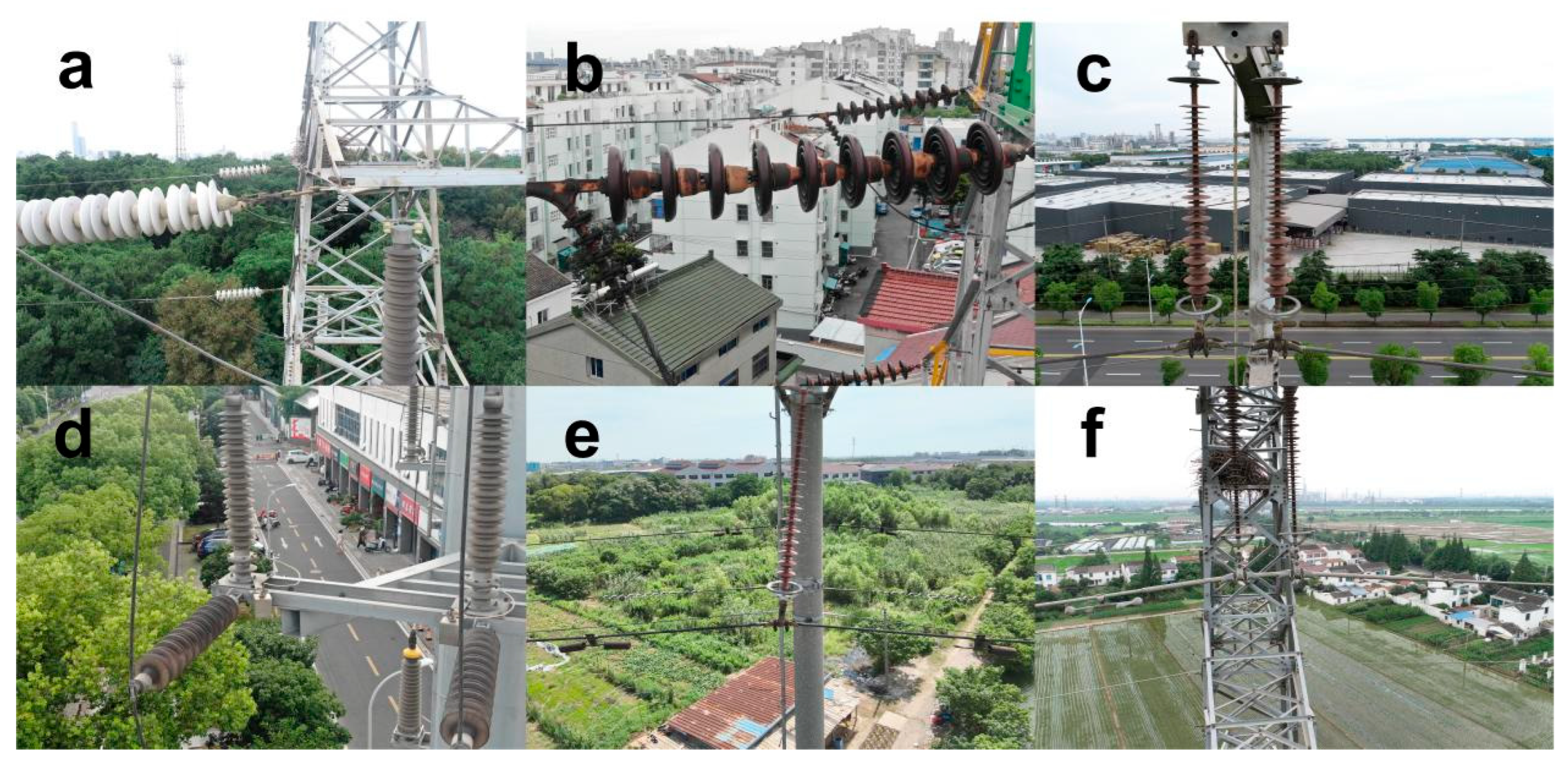

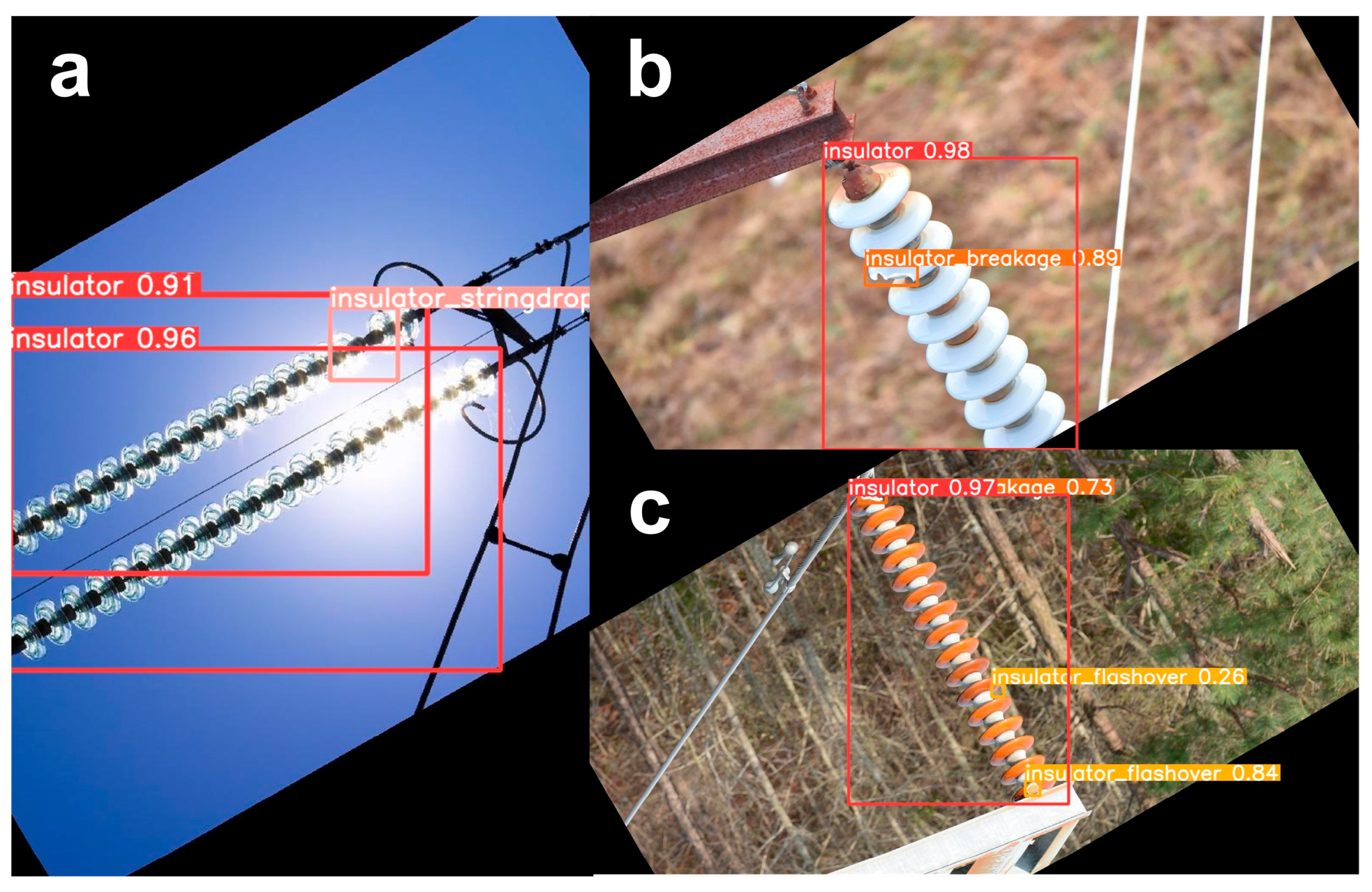

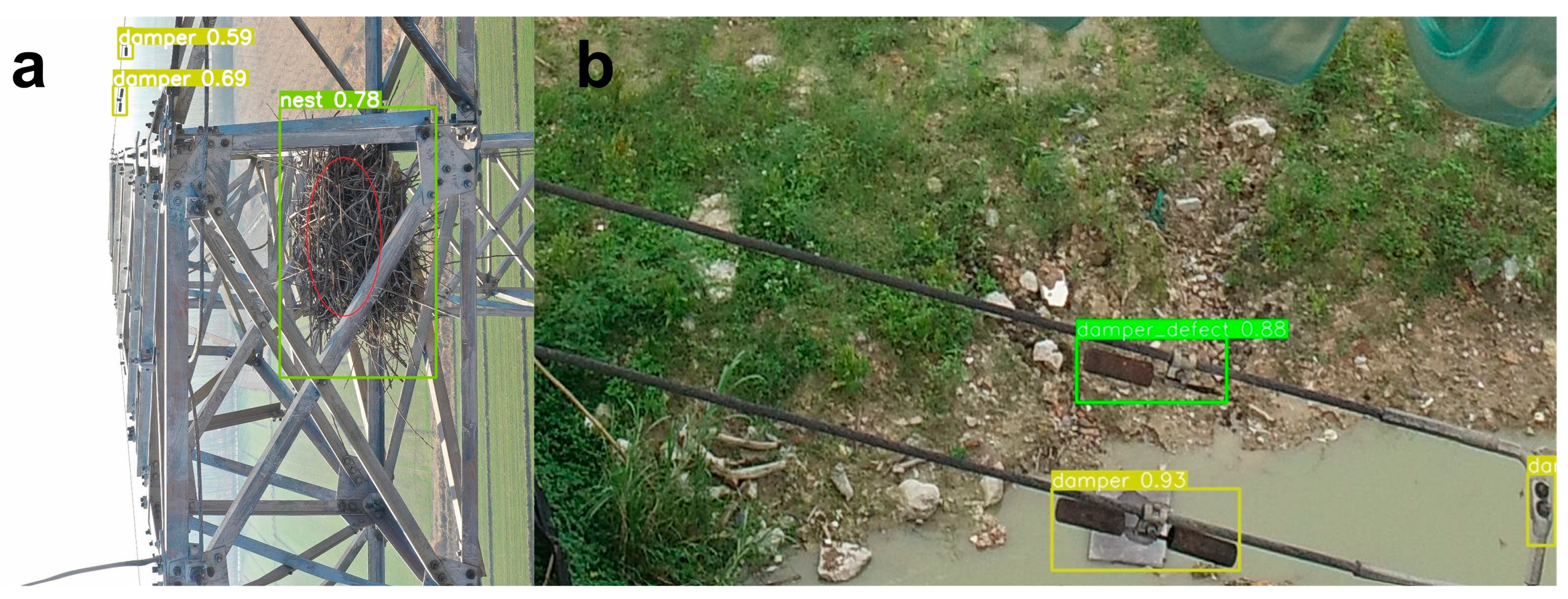

- To enhance datasets for transmission line defect detection, this study developed two comprehensive datasets: Common Defects of Transmission Lines Datasets (CDTLDs) and Real Scene Transmission Lines Datasets (RSTLDs). CDTLD extends the existing Chinese Power Line Insulator Datasets (CPLIDs) by incorporating five additional defect categories—dropped insulator strings, damaged insulators, flashover insulators, bird nests, and damaged vibration dampers—comprising seven categories with 18,072 annotated instances while addressing class imbalance issues; RSTLD contains 2180 high-resolution UAV images with 8930 annotations that capture complex background interference and multi-scale target characteristics in 110 kV transmission line environments, providing a reliable benchmark for practical algorithm evaluation.

- (2)

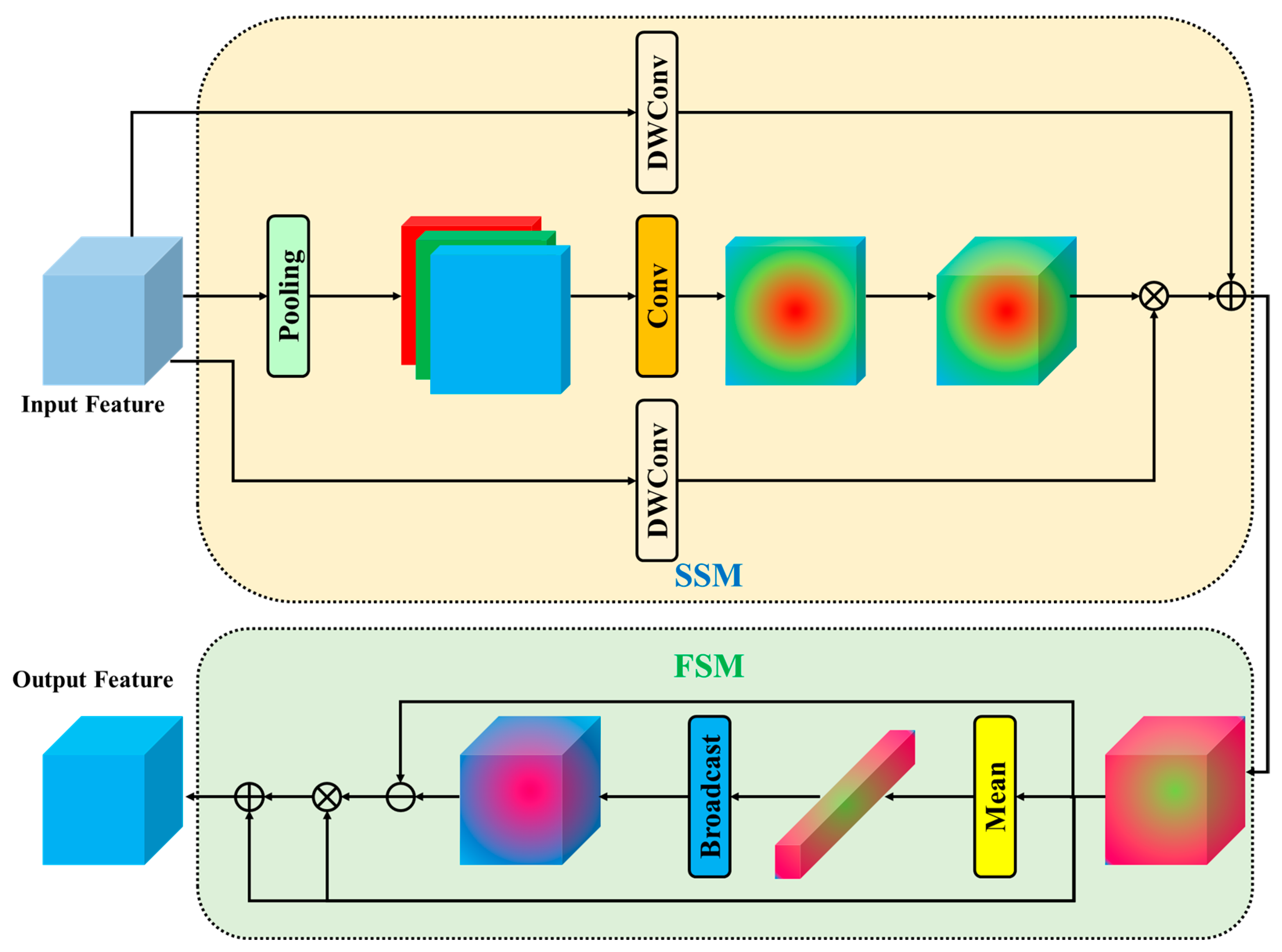

- To ensure the full extraction of image features, in the backbone stage of PLD-DETR, a dual-domain selection mechanism (DSM) block module is designed to identify degraded regions by the spatial selection module (SSM), and strengthen important high-frequency features by the frequency selection module (FSM). This dual-domain approach enables efficient extraction of multi-scale image features.

- (3)

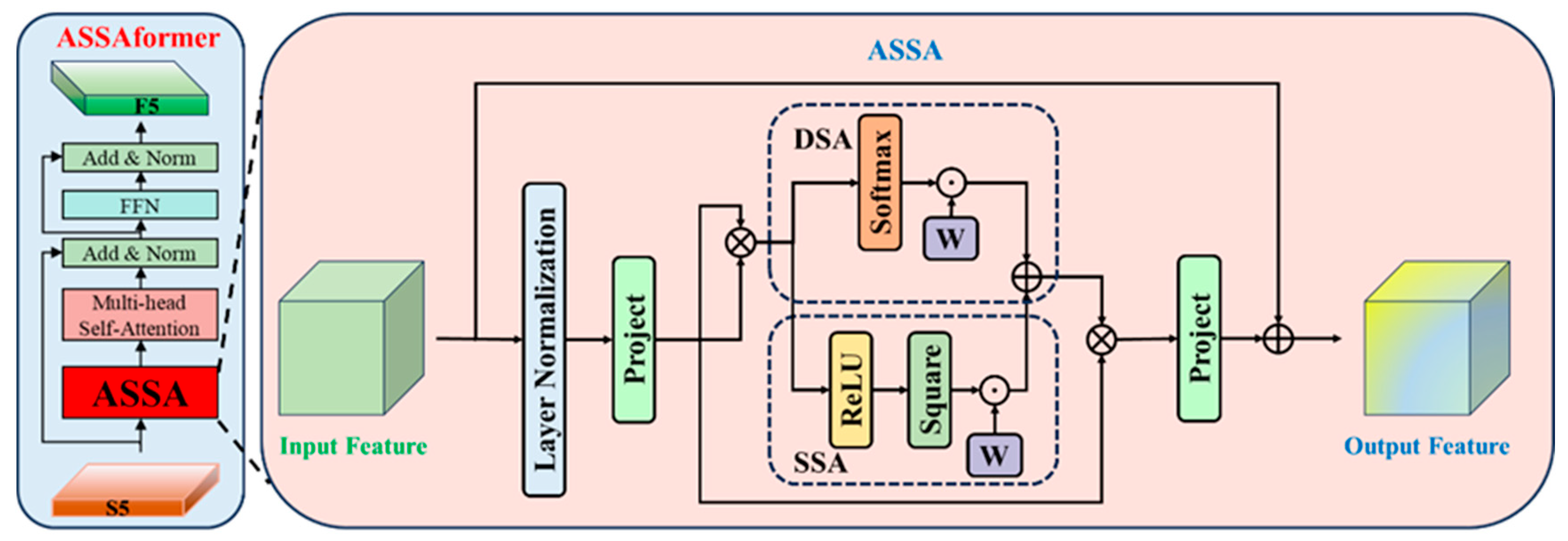

- To address challenges in deep feature extraction, the adaptive sparse self-attention former (ASSAformer) module is designed to adaptively fuse sparse and dense attention using a two-branch architecture, thereby promoting effective deep feature analysis through the adaptive weight fusion mechanism.

- (4)

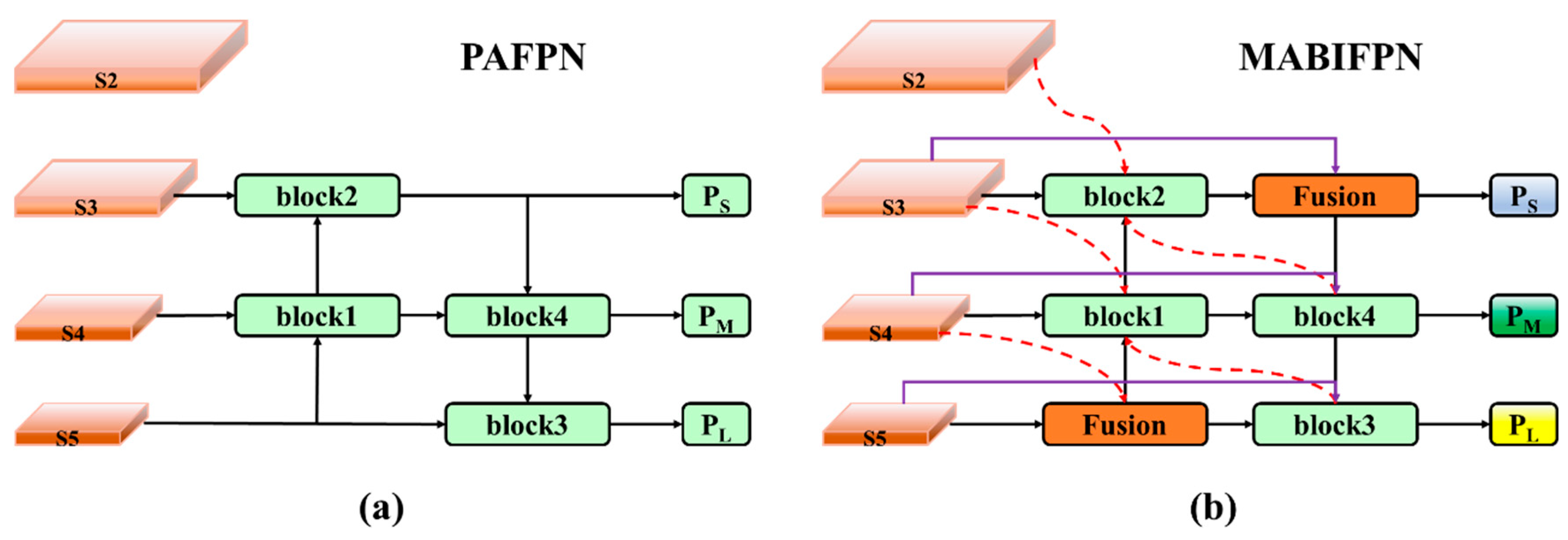

- A multi-branch auxiliary bidirectional feature pyramid network (MABIFPN) is designed to establish cross-scale information pathways. This architecture enables adaptive fusion of adjacent-scale features through learnable combination weights. The collaborative integration of multi-scale representations enhances feature complementarity and mitigates information loss during fusion processes.

2. Related Works

2.1. CNN-Based Object Detection Method

2.2. Transformer-Based Object Detection Methods

3. Methods

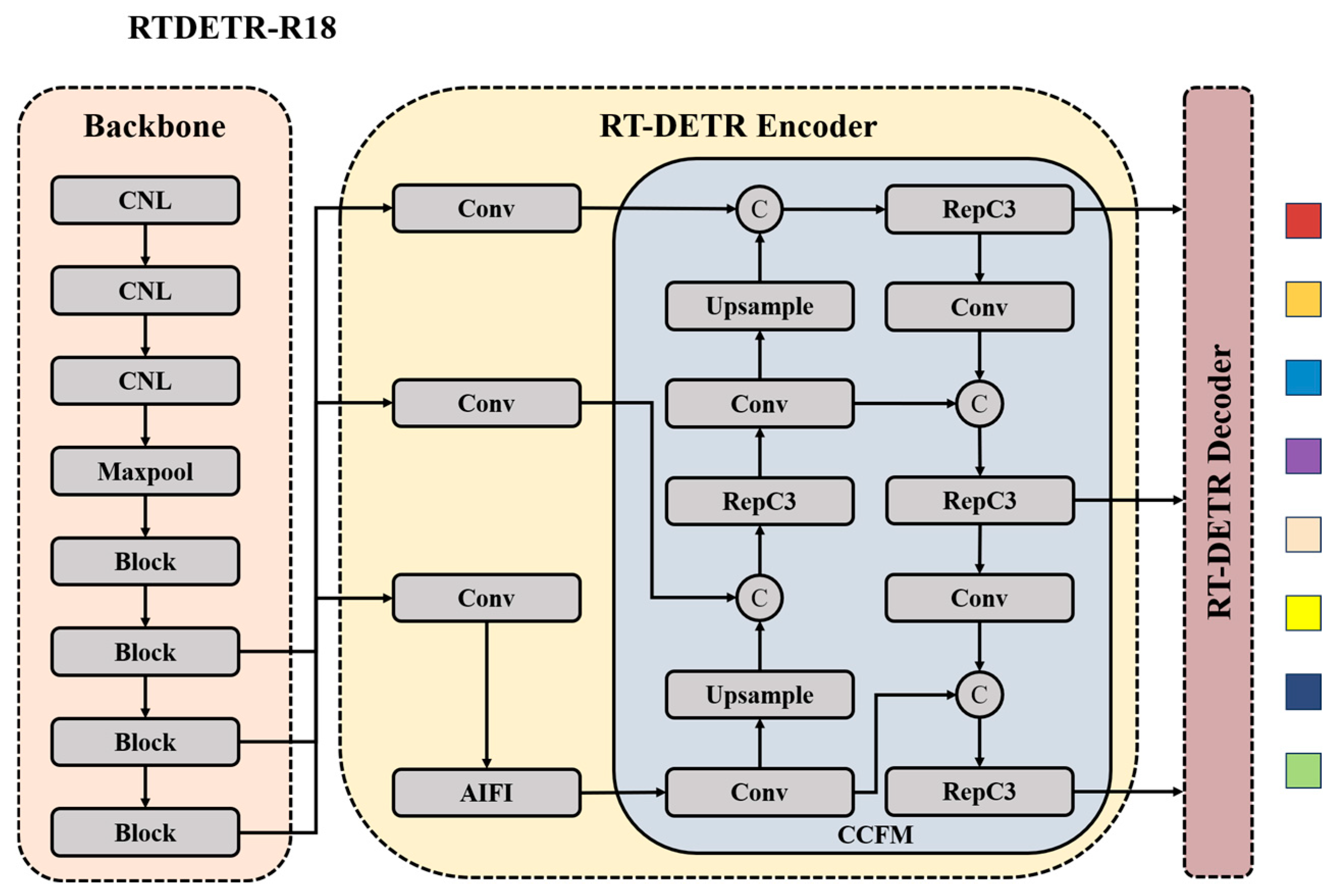

3.1. Overview of RTDETR-R18 Model

3.2. The Overall Architecture of PLD-DETR

3.2.1. DSM Block

| Algorithm 1. Pseudocode flow of the DSM |
|---|
| Input: x ∈ ℝ^(B,C,H,W) # Input tensor with batch size B, channels C, height H, width W |
| Output: out ∈ ℝ^(B,C,H,W) # Output tensor after processing |
| 1: Initialize: (a) Spatial Gate: low-frequency extraction; (b) Local Attention: high-frequency refinement; (c) Parameters a, b for output fusion |
| 2: Low-frequency extraction using Equation (2): (a) pooling to reduce resolution (low-pass filter); (b) convolution to capture low-frequency features |
| 3: High-frequency extraction using Equation (3): (a) depthwise convolution for high-frequency features; (b) additional depthwise convolution for refinement |
| 4: Apply local attention to enhance high-frequency details |
| 5: Fuse attention-enhanced features with original input |
| 6: Return the fused output |
| 7: end |
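Two ideas in Algorithm 1 can be illustrated with a toy example: pooling acting as a low-pass filter (step 2a) and a two-parameter fusion with the original input (steps 1c and 5). The sketch below is not the DSM block itself. It is a minimal illustration under several assumptions: a 1D signal stands in for a feature map, fixed mean pooling replaces the learned convolutions, the high-frequency part is taken as the residual `x - low` instead of a depthwise convolution, the local attention step is omitted, and the fusion is read as `a * enhanced + b * x`. The function names are hypothetical.

```python
def low_pass(signal, window=2):
    """Mean-pool over non-overlapping windows, then repeat each mean
    so the result has the same length as the input (assumed stand-in
    for 'pooling to reduce resolution')."""
    if len(signal) % window != 0:
        raise ValueError("signal length must be a multiple of window")
    low = []
    for start in range(0, len(signal), window):
        block = signal[start:start + window]
        mean = sum(block) / window
        low.extend([mean] * window)
    return low


def dsm_sketch(signal, a=1.0, b=1.0, window=2):
    """Toy dual-frequency split followed by a two-parameter fusion."""
    low = low_pass(signal, window)
    # Assumption: high-frequency content is whatever the low-pass removed.
    high = [x - l for x, l in zip(signal, low)]
    # Assumed reading of step 5: out = a * enhanced + b * original input.
    out = [a * h + b * x for h, x in zip(high, signal)]
    return low, high, out


signal = [1.0, 3.0, 2.0, 6.0]
low, high, out = dsm_sketch(signal, a=1.0, b=1.0)
```

With `a = 0` and `b = 1` the sketch returns the input unchanged, and increasing `a` amplifies the detail that the pooling step removed.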

3.2.2. ASSAformer

| Algorithm 2. Pseudocode flow of the ASSA |
|---|
| Input: x ∈ ℝ^(B, H, W, C) # Input tensor with batch size B, height H, width W, channels C; mask (optional): mask tensor |
| Output: out ∈ ℝ^(B, H×W, C) # Output tensor after adaptive sparse attention |
| 1: Initialize: (a) Normalize: Layer Normalize; (b) Attention layers: WindowAttention_sparse (for sparse) and Window Attention (for dense) |
| 2: Flatten the input and apply normalization |
| 3: Attention masks (optional): |
| 4: if mask is provided: |
| 5: Generate attention masks using the input mask |
| 6: else: |
| 7: attn_mask = None |
| 8: Compute attention using sparse and dense branches |
| 9: if sparseAtt == True: |
| 10: Compute sparse attention using Equation (8) |
| 11: else: |
| 12: sparse = None |
| 13: Compute dense attention using Equation (9) |
| 14: Compute attention weights using softmax to ensure α1 + α2 = 1 |
| 15: Combine sparse and dense attention matrices using Equation (10) |
| 16: Apply the final layer of normalization and return output using Equation (12) |
| 17: end |
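Steps 8 to 15 of Algorithm 2 can be sketched on a tiny score matrix. The following is a minimal illustration, not the ASSAformer implementation: it works on plain Python lists, it assumes both branches are enabled, and it assumes the sparse branch is a squared ReLU of the attention scores, which this section does not state. The names `adaptive_attention`, `a1`, and `a2` are hypothetical; `a1` and `a2` stand for the learnable scalars whose softmax gives α1 and α2.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def adaptive_attention(scores, a1, a2):
    """Blend a sparse and a dense attention map with weights alpha1, alpha2.

    scores: list of rows of scaled query-key similarities.
    """
    # Sparse branch (assumed squared ReLU): negative scores become exactly 0.
    sparse = [[max(0.0, s) ** 2 for s in row] for row in scores]
    # Dense branch: row-wise softmax, so every entry stays positive.
    dense = [softmax(row) for row in scores]
    # Step 14: softmax over the two scalars guarantees alpha1 + alpha2 = 1.
    alpha1, alpha2 = softmax([a1, a2])
    # Step 15: weighted combination of the two attention maps.
    fused = [
        [alpha1 * sp + alpha2 * de for sp, de in zip(s_row, d_row)]
        for s_row, d_row in zip(sparse, dense)
    ]
    return fused, (alpha1, alpha2)


scores = [[0.5, -1.0], [-0.2, 1.5]]
attn, (alpha1, alpha2) = adaptive_attention(scores, a1=0.0, a2=0.0)
```

Because the sparse branch zeroes out negative scores while the dense branch never does, shifting weight toward `alpha1` suppresses weakly related positions, and shifting it toward `alpha2` keeps contributions from all positions.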

3.2.3. MABIFPN

| Algorithm 3. Pseudocode flow of the fusion |
|---|
| Input: x = [x1, x2, …, xk] # List of input feature maps, where k is the number of input feature maps |
| Output: out # The fused feature map |
| 1: Initialize: fusion_weight: learnable weights for each input feature map, initialized to ones |
| 2: Apply ReLU activation to fusion weights: |
| 3: Normalize fusion weights: |
| 4: Normalize weights so that their sum equals 1 |
| 5: for i in range(len(x)): |
| 6: Multiply each input feature map by its corresponding weight |
| 7: Sum the weighted feature maps to obtain the fused output using Equation (16) |
| 8: end |
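The fusion in Algorithm 3 can be sketched in a few lines of plain Python. This is a minimal illustration rather than the MABIFPN code: each feature map is represented as a flat list of floats of equal length, and the small `eps` term in the denominator is an assumption added to avoid division by zero when every weight is clipped to zero, so the normalized weights sum to slightly less than 1. The function name `fuse` is hypothetical.

```python
def fuse(feature_maps, fusion_weight, eps=1e-4):
    """Weighted sum of equally sized feature maps (flat lists of floats)."""
    if len(feature_maps) != len(fusion_weight):
        raise ValueError("need exactly one weight per feature map")
    # Step 2: ReLU keeps every fusion weight non-negative.
    weights = [max(0.0, w) for w in fusion_weight]
    # Steps 3-4: normalize so the weights sum to (almost exactly) 1;
    # eps is an assumed guard against division by zero.
    total = sum(weights) + eps
    weights = [w / total for w in weights]
    # Steps 5-7: scale each map by its weight and accumulate the result.
    fused = [0.0] * len(feature_maps[0])
    for weight, fmap in zip(weights, feature_maps):
        for i, value in enumerate(fmap):
            fused[i] += weight * value
    return fused, weights


x1 = [1.0, 2.0, 3.0]
x2 = [3.0, 2.0, 1.0]
# Weights initialized to ones, as in step 1: the result is close to the mean.
fused, weights = fuse([x1, x2], fusion_weight=[1.0, 1.0])
```

A weight that training drives negative is clipped to zero by the ReLU, so the corresponding feature map drops out of the fusion entirely instead of being subtracted.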

1. Weight Learning

2. Weight Fusion

3.3. Decoder

4. Experiment

4.1. Datasets

4.1.1. CDTLD

4.1.2. RSTLD

4.2. Experimental Setup

4.3. Evaluation Indicators

4.4. Result

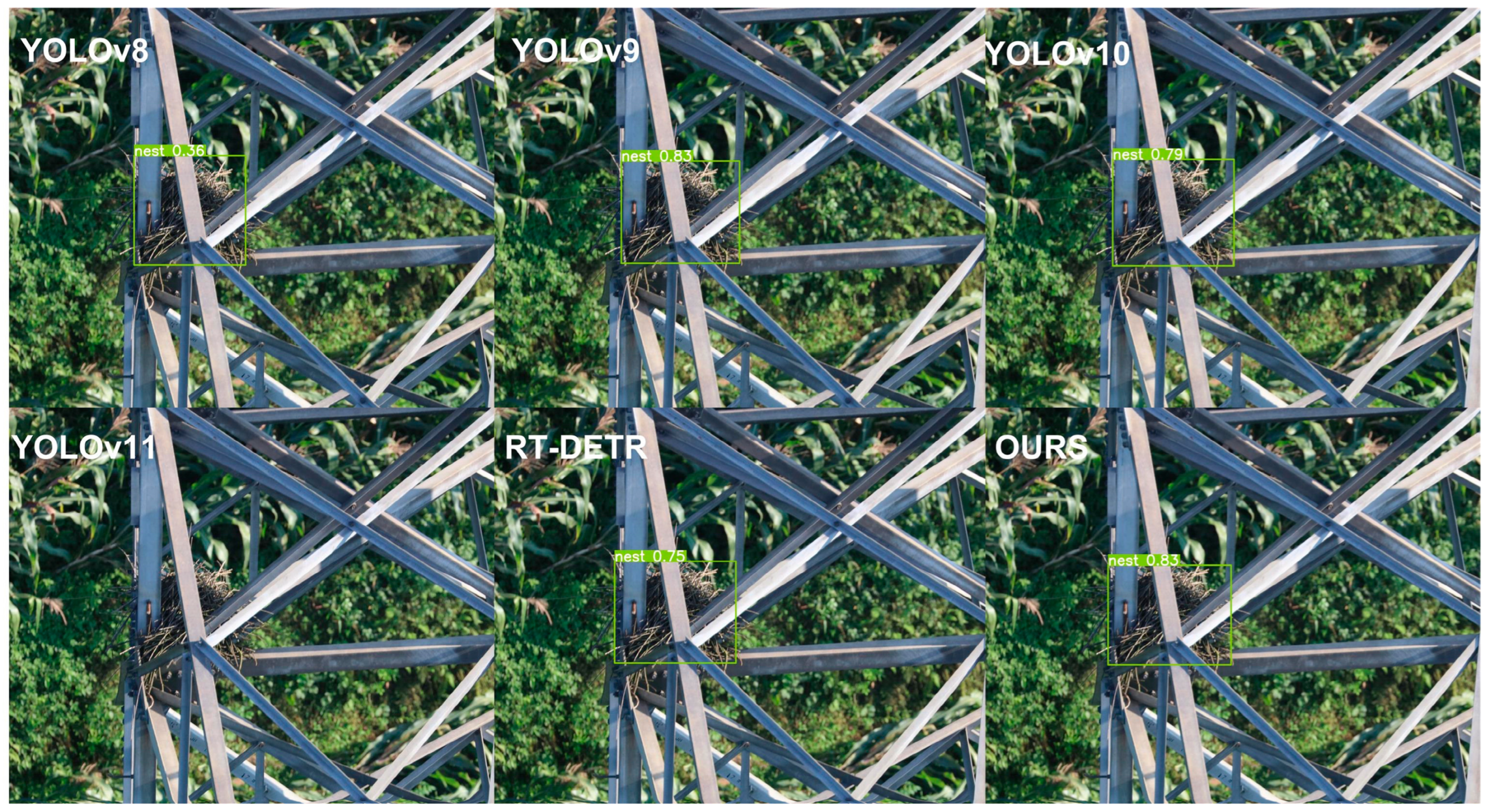

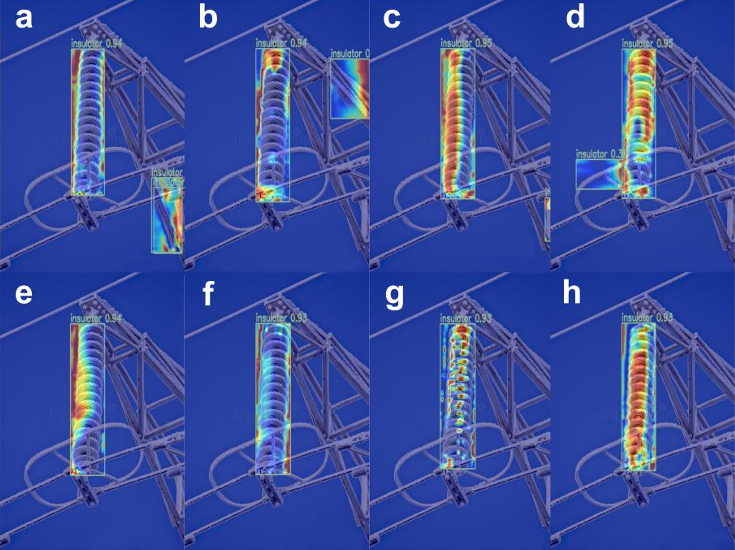

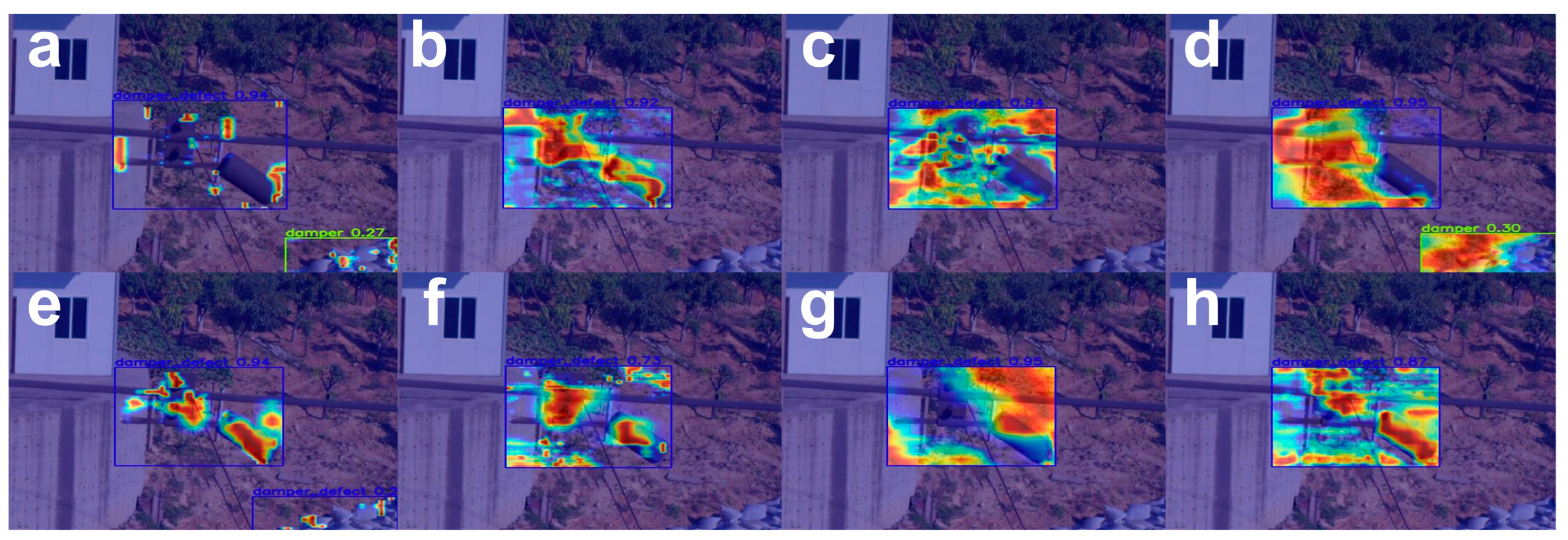

4.5. Visualization Result

4.6. Ablation Experiments

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AIFI | Attention-Based Intra-scale Feature Interaction |
| AP | Average Precision |
| ASSAformer | Adaptive Sparse Self-Attention Former |
| CCFM | Cross-Scale Fusion Module |
| CDTLDs | Common Defects of Transmission Lines Datasets |
| CNNs | Convolutional Neural Networks |
| CPLIDs | Chinese Power Line Insulator Datasets |
| DETR | Detection Transformer |
| DSA | Dense Self-Attention |
| DSM | Dual-Domain Selection Mechanism |
| FCOS | Fully Convolutional One-Stage |
| FLOPs | Floating Point Operations |
| FPN | Feature Pyramid Network |
| FPSs | Frames Per Second |
| FSM | Frequency Selection Module |
| MABIFPN | Multi-branch Auxiliary Bidirectional Feature Pyramid Network |
| NMS | Non-Maximum Suppression |
| P | Precision |
| PAFPN | Path Aggregation FPN |
| PLD-DETR | Power Line Defect Detection Transformer |
| R | Recall |
| R-CNN | Region-CNN |
| RSTLDs | Real Scene Transmission Lines Datasets |
| RT-DETR | Real Time Detection Transformer |
| SSA | Sparse Self-Attention |
| SSD | Single Shot MultiBox Detector |
| SSM | Spatial Selection Module |
| UAV | Unmanned Aerial Vehicle |


| Experimental Environment | Configuration Information |
|---|---|
| GPU | NVIDIA RTX 4090 |
| Running System | Ubuntu 22.04.3 |
| Experiment language | Python 3.9 |
| CUDA | 11.8 |
| PyTorch | 1.12.0 |

| Detector Type | Method | Optimizer | Learning Rate | Momentum | Weight Decay |
|---|---|---|---|---|---|
| Two-Stage Detector | Faster RCNN | SGD | 0.02 | 0.9 | 0.0001 |
| | Cascade RCNN | SGD | 0.02 | 0.9 | 0.0001 |
| | Libra RCNN | SGD | 0.02 | 0.9 | 0.0001 |
| Single-Stage Detector | FCOS | SGD | 0.02 | 0.9 | 0.0001 |
| | YOLOv8m | SGD | 0.01 | 0.9 | 0.0005 |
| | YOLOv9m | SGD | 0.01 | 0.9 | 0.0005 |
| | YOLOv10b | SGD | 0.01 | 0.9 | 0.0005 |
| | YOLOv11l | SGD | 0.01 | 0.9 | 0.0005 |
| Transformer Detector | RT-DETR | AdamW | 0.0001 | 0.9 | 0.0001 |
| | Conditional-DETR | AdamW | 0.0001 | 0.9 | 0.0001 |
| | Dab-DETR | AdamW | 0.0001 | 0.9 | 0.0001 |
| Detector Proposed in This Paper | PLD-DETR | SGD | 0.01 | 0.9 | 0.0005 |

| Detection Algorithms | AP50 (↑) | AP75 (↑) | AP50–95 (↑) | Parameters (↓) | FLOPs (↓) |
|---|---|---|---|---|---|
| Faster RCNN | 0.860 | 0.691 | 0.610 | 41.745 M | 90.9 G |
| Cascade RCNN | 0.853 | 0.710 | 0.615 | 69.395 M | 119.0 G |
| Libra RCNN | 0.861 | 0.711 | 0.612 | 41.637 M | 92.7 G |
| FCOS | 0.861 | 0.679 | 0.591 | 32.127 M | 78.6 G |
| YOLOv8m | 0.833 | 0.655 | 0.586 | 25.844 M | 78.7 G |
| YOLOv9m | 0.843 | 0.683 | 0.596 | 20.018 M | 76.5 G |
| YOLOv10b | 0.819 | 0.628 | 0.577 | 19.010 M | 91.6 G |
| YOLOv11l | 0.850 | 0.663 | 0.593 | 25.285 M | 86.6 G |
| Conditional-DETR | 0.751 | 0.426 | 0.423 | 43.450 M | 95.9 G |
| Dab-DETR | 0.910 | 0.727 | 0.635 | 43.703 M | 86.9 G |
| RT-DETR | 0.886 | 0.713 | 0.627 | 19.881 M | 57.0 G |
| PLD-DETR | 0.910 | 0.763 | 0.662 | 21.123 M | 59.4 G |

| Detection Algorithms | AP50 (↑) | AP75 (↑) | AP50–95 (↑) | Parameters (↓) | FLOPs (↓) |
|---|---|---|---|---|---|
| Faster RCNN | 0.500 | 0.286 | 0.282 | 41.745 M | 90.9 G |
| Cascade RCNN | 0.507 | 0.311 | 0.293 | 69.395 M | 119.0 G |
| Libra RCNN | 0.518 | 0.313 | 0.294 | 41.637 M | 92.7 G |
| FCOS | 0.535 | 0.284 | 0.255 | 32.127 M | 78.6 G |
| YOLOv8m | 0.556 | 0.341 | 0.332 | 25.844 M | 78.7 G |
| YOLOv9m | 0.586 | 0.380 | 0.354 | 20.018 M | 76.5 G |
| YOLOv10b | 0.513 | 0.319 | 0.310 | 19.010 M | 91.6 G |
| YOLOv11l | 0.591 | 0.360 | 0.344 | 25.285 M | 86.6 G |
| Conditional-DETR | 0.549 | 0.304 | 0.285 | 43.450 M | 95.9 G |
| Dab-DETR | 0.569 | 0.317 | 0.314 | 43.703 M | 86.9 G |
| RT-DETR | 0.623 | 0.406 | 0.387 | 19.881 M | 57.0 G |
| PLD-DETR | 0.651 | 0.422 | 0.401 | 21.123 M | 59.4 G |

| ASSA | DSM Block | MABIFPN | AP50 | AP75 | AP50–95 | Parameters | GFLOPs |
|---|---|---|---|---|---|---|---|
| | | | 0.886 | 0.713 | 0.627 | 19.881 M | 57.0 |
| √ | | | 0.889 | 0.717 | 0.633 | 20.718 M | 57.8 |
| | √ | | 0.888 | 0.742 | 0.649 | 20.054 M | 58.0 |
| | | √ | 0.905 | 0.754 | 0.653 | 20.113 M | 57.5 |
| √ | √ | | 0.888 | 0.729 | 0.633 | 20.891 M | 58.9 |
| √ | | √ | 0.901 | 0.767 | 0.658 | 20.951 M | 58.4 |
| | √ | √ | 0.903 | 0.764 | 0.658 | 20.286 M | 58.5 |
| √ | √ | √ | 0.910 | 0.763 | 0.662 | 21.123 M | 59.4 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, J.; Zhang, X.; Feng, D.; Li, J.; Zhu, L. PLD-DETR: A Method for Defect Inspection of Power Transmission Lines. Electronics 2025, 14, 4107. https://doi.org/10.3390/electronics14204107