Generalized Non-Convex Non-Smooth Group-Sparse Residual Prior for Image Denoising

Abstract

1. Introduction

2. Related Work

2.1. Group Sparse Representation

2.2. Low-Rank Minimization

2.3. Adaptive Non-Local Dictionary Learning

3. Proposed Method

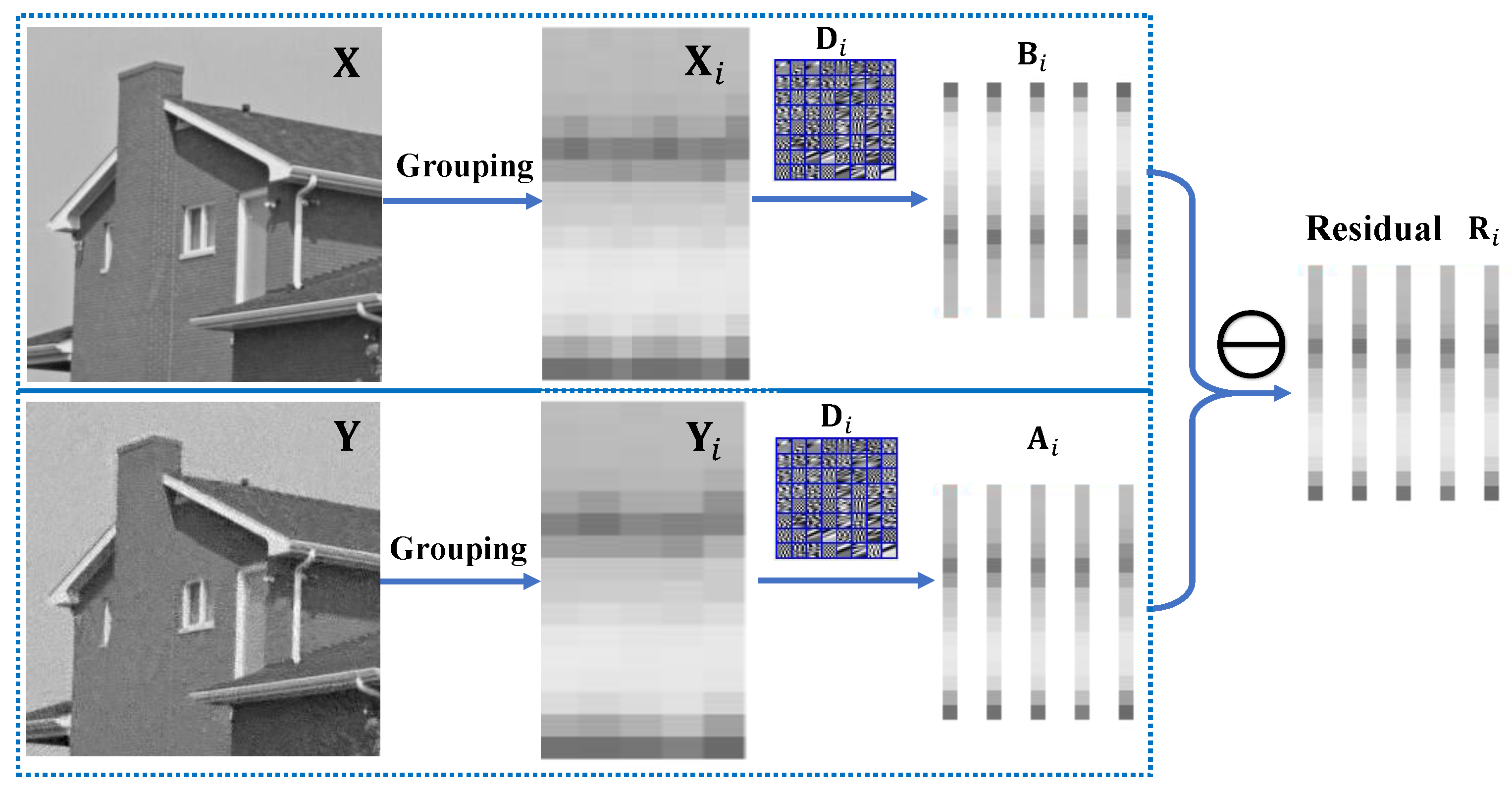

3.1. Group Sparse Coefficient Residual

3.2. Residual Prior-Based Generalized Non-Convex Non-Smooth Low-Rank Minimization

3.3. Image Denoising Application

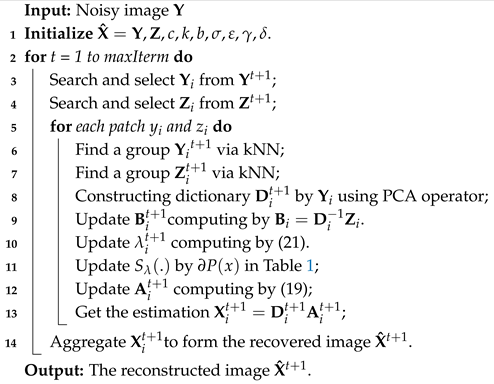

Algorithm 1: Non-convex Optimization Denoising Model Based on Group Sparse Residual Constraint

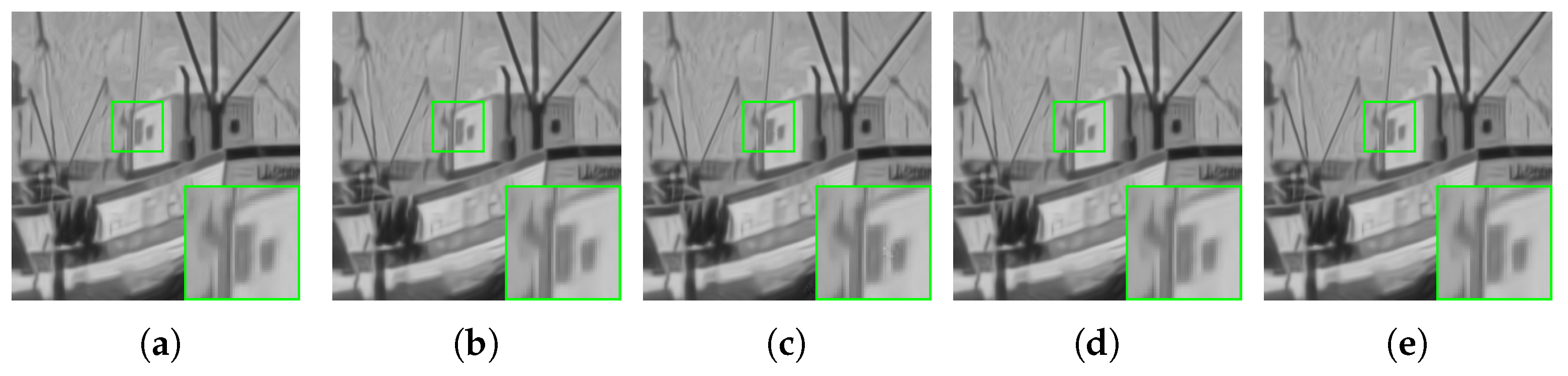

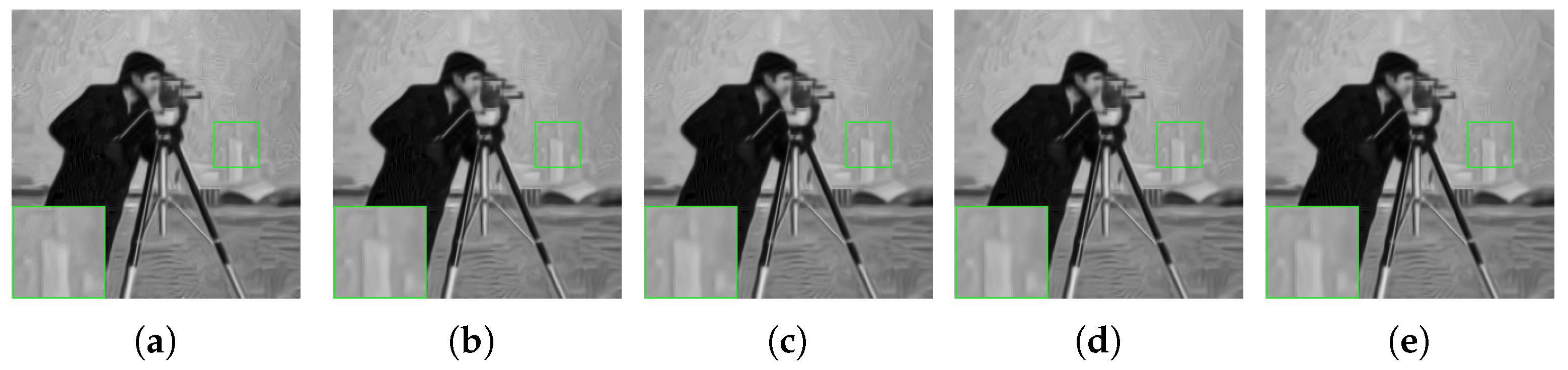

4. Experimental Results

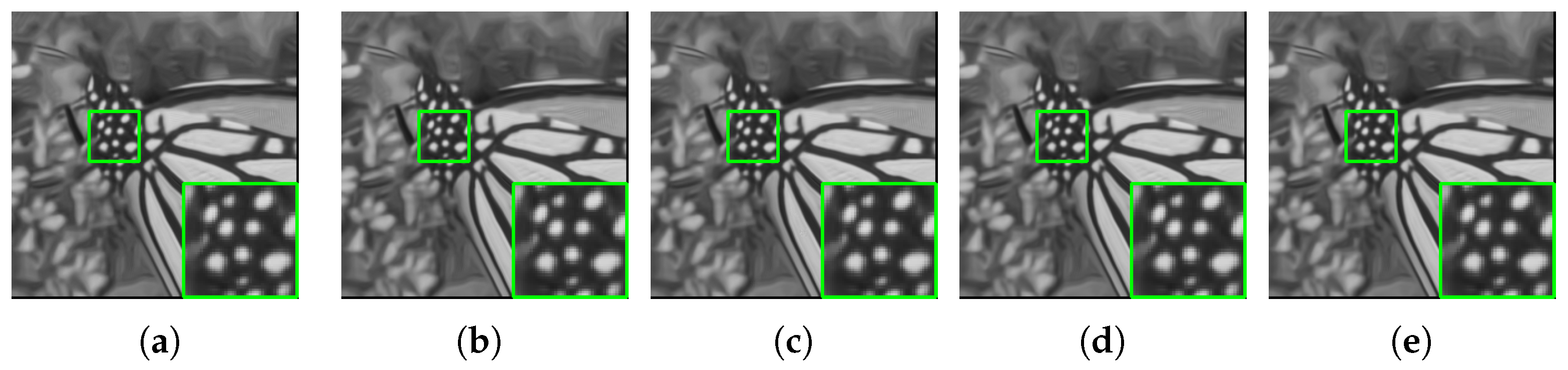

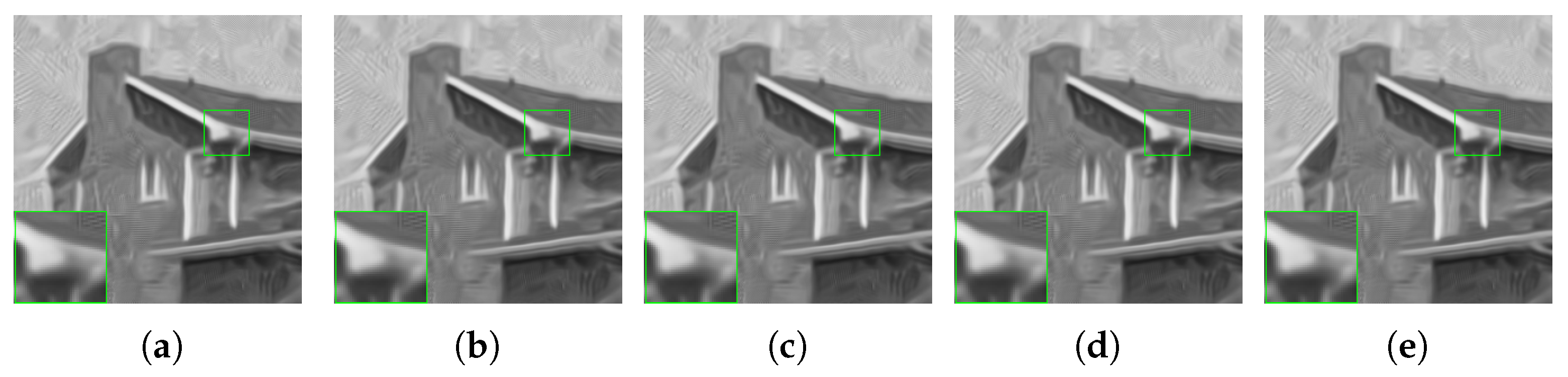

4.1. Effectiveness of Our Denoising Model

4.2. Generalization Validation of Different Penalties

4.3. Robustness Study Under Gaussian Noise with Different Standard Deviations

4.4. Computational Time Analysis

5. Conclusions and Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Penalty | Formula | Super-Gradient |
|---|---|---|
| SCAD | | |
| Logarithm | | |
| MCP | | |
| ETP | | |
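The formula and super-gradient cells of the penalty table did not survive extraction. As a rough sketch only, the four named penalties are commonly written in the nonconvex low-rank literature in the forms coded below, for a non-negative argument `theta` (for example a singular value). The parameter names `lam` and `gamma`, the function names, and the exact parameterization are assumptions made for illustration and may differ from the paper's own definitions.

```python
import math

# Each function returns (penalty value, one supergradient) at theta >= 0.
# lam > 0 and gamma > 0 are illustrative parameter names (assumed, not from
# the source table); SCAD additionally assumes gamma > 1.

def scad(theta, lam, gamma):
    """Smoothly clipped absolute deviation penalty."""
    if theta <= lam:
        return lam * theta, lam
    if theta <= gamma * lam:
        value = (-theta**2 + 2 * gamma * lam * theta - lam**2) / (2 * (gamma - 1))
        return value, (gamma * lam - theta) / (gamma - 1)
    return lam**2 * (gamma + 1) / 2, 0.0

def logarithm(theta, lam, gamma):
    """Logarithm penalty, normalised so that the value at theta = 1 is lam."""
    scale = lam / math.log(gamma + 1)
    return scale * math.log(gamma * theta + 1), scale * gamma / (gamma * theta + 1)

def mcp(theta, lam, gamma):
    """Minimax concave penalty."""
    if theta < gamma * lam:
        return lam * theta - theta**2 / (2 * gamma), lam - theta / gamma
    return gamma * lam**2 / 2, 0.0

def etp(theta, lam, gamma):
    """Exponential-type penalty, normalised so that the value at theta = 1 is lam."""
    scale = lam / (1 - math.exp(-gamma))
    decay = math.exp(-gamma * theta)
    return scale * (1 - decay), scale * gamma * decay

PENALTIES = {"SCAD": scad, "Logarithm": logarithm, "MCP": mcp, "ETP": etp}

if __name__ == "__main__":
    # Print value and supergradient at a few sample points.
    for name, fn in PENALTIES.items():
        for theta in (0.5, 2.0, 4.0):
            value, grad = fn(theta, 1.0, 3.0)
            print(f"{name:9s} theta={theta:.1f} value={value:.4f} supergradient={grad:.4f}")
```

All four penalties are concave and non-decreasing on the non-negative axis, so the supergradient shrinks as `theta` grows. In iteratively reweighted schemes, that supergradient evaluated at the current singular values typically serves as the weight for the next thresholding step, so large singular values are penalised less. Whether the paper uses exactly this rule cannot be recovered from the text here.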

| Images | BM3D | NCSR | PGPD | A-NLS | GSRC | Ours | BM3D | NCSR | PGPD | A-NLS | GSRC | Ours |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Airplane | 28.49 | 28.34 | 28.63 | 28.59 | 28.68 | 28.97 | 26.88 | 26.78 | 27.12 | 27.10 | 27.21 | 27.51 |
| Boats | 29.33 | 29.05 | 29.32 | 29.34 | 29.42 | 29.65 | 27.76 | 27.52 | 27.90 | 27.80 | 27.97 | 28.17 |
| Fence | 28.19 | 28.14 | 28.13 | 28.43 | 28.39 | 28.65 | 26.84 | 26.76 | 26.91 | 27.11 | 27.16 | 27.41 |
| Flower | 27.97 | 27.88 | 28.11 | 28.20 | 28.21 | 28.45 | 26.48 | 26.35 | 26.68 | 26.75 | 26.84 | 27.11 |
| Foreman | 32.75 | 32.61 | 32.83 | 32.79 | 33.15 | 33.45 | 31.29 | 31.52 | 31.55 | 31.29 | 31.81 | 31.99 |
| House | 32.09 | 32.01 | 32.24 | 32.26 | 32.44 | 32.78 | 30.65 | 30.79 | 31.02 | 30.91 | 31.16 | 31.39 |
| J. Bean | 31.97 | 31.90 | 31.99 | 32.07 | 32.28 | 32.43 | 30.21 | 30.49 | 30.39 | 30.38 | 30.51 | 30.79 |
| Lake | 26.74 | 26.69 | 26.90 | 26.92 | 26.89 | 27.05 | 25.21 | 25.21 | 25.51 | 25.46 | 25.52 | 25.77 |
| Leaves | 27.81 | 28.04 | 27.99 | 28.37 | 28.56 | 28.78 | 25.69 | 26.20 | 26.29 | 26.69 | 26.82 | 27.02 |
| Lena | 29.46 | 29.32 | 29.60 | 29.50 | 29.66 | 29.89 | 27.82 | 28.00 | 28.22 | 28.00 | 28.16 | 28.43 |
| Lin | 30.95 | 30.65 | 30.96 | 30.83 | 30.92 | 31.21 | 29.52 | 29.27 | 29.73 | 29.39 | 29.47 | 29.61 |
| Monarch | 28.35 | 28.38 | 28.49 | 28.70 | 28.80 | 28.97 | 26.72 | 26.81 | 27.02 | 27.20 | 27.33 | 27.58 |
| Starfish | 27.66 | 27.69 | 27.67 | 27.89 | 28.02 | 28.29 | 26.06 | 26.17 | 26.21 | 26.36 | 26.53 | 26.72 |
| Airplane | 25.76 | 25.63 | 25.98 | 26.02 | 26.17 | 26.51 | 23.99 | 23.76 | 24.15 | 24.06 | 24.12 | 24.29 |
| Boats | 26.74 | 26.37 | 26.82 | 26.78 | 26.95 | 27.43 | 24.82 | 24.44 | 24.83 | 24.76 | 24.94 | 25.12 |
| Fence | 25.92 | 25.77 | 25.94 | 26.22 | 26.26 | 26.47 | 24.22 | 23.75 | 24.18 | 24.40 | 24.53 | 24.79 |
| Flower | 25.49 | 25.31 | 25.63 | 25.77 | 25.76 | 25.99 | 23.82 | 23.50 | 23.82 | 23.87 | 23.87 | 23.99 |
| Foreman | 30.36 | 30.41 | 30.45 | 30.46 | 30.77 | 30.70 | 28.07 | 28.18 | 28.39 | 28.54 | 28.75 | 28.94 |
| House | 29.69 | 29.61 | 29.93 | 30.13 | 30.45 | 30.74 | 27.51 | 27.16 | 27.81 | 28.06 | 28.59 | 28.83 |
| J. Bean | 29.26 | 29.24 | 29.20 | 29.26 | 29.58 | 29.87 | 27.22 | 27.15 | 27.07 | 27.12 | 27.29 | 27.42 |
| Lake | 24.29 | 24.15 | 24.49 | 24.44 | 24.44 | 24.65 | 22.63 | 22.48 | 22.76 | 22.61 | 22.61 | 22.82 |
| Leaves | 24.68 | 24.94 | 25.03 | 25.32 | 25.66 | 25.97 | 22.49 | 22.60 | 22.61 | 22.95 | 23.34 | 23.51 |
| Lena | 26.90 | 26.94 | 27.15 | 27.08 | 27.06 | 27.49 | 25.17 | 25.02 | 25.30 | 25.32 | 25.32 | 25.53 |
| Lin | 28.71 | 28.23 | 28.79 | 28.50 | 28.60 | 28.98 | 26.96 | 26.22 | 27.05 | 26.72 | 26.84 | 26.97 |
| Monarch | 25.82 | 25.73 | 26.00 | 26.12 | 26.25 | 26.54 | 23.91 | 23.67 | 24.00 | 24.11 | 24.35 | 24.65 |
| Starfish | 25.04 | 25.06 | 25.11 | 25.26 | 25.36 | 25.64 | 23.27 | 23.18 | 23.23 | 23.24 | 23.32 | 23.51 |

| Methods | ETP | Logarithm | Lp | MCP | SCAD |
|---|---|---|---|---|---|
| Airplane | 25.35/0.7688 | 25.59/0.8047 | 25.50/0.7876 | 25.69/0.8202 | 25.67/0.8178 |
| Baboon | 23.41/0.5318 | 23.59/0.5428 | 23.60/0.5393 | 23.69/0.5500 | 23.68/0.5499 |
| Barbara | 26.86/0.8044 | 27.28/0.8277 | 27.28/0.8197 | 27.49/0.8372 | 27.46/0.8360 |
| Boats | 26.79/0.7770 | 27.18/0.8016 | 27.11/0.7913 | 27.34/0.8124 | 27.32/0.8113 |
| Cameraman | 24.25/0.7191 | 24.47/0.7624 | 24.44/0.7452 | 24.57/0.7836 | 24.55/0.7803 |
| Elaine | 28.24/0.8157 | 28.71/0.8412 | 28.72/0.8313 | 28.99/0.8536 | 28.95/0.8518 |
| Foreman | 30.80/0.8208 | 31.95/0.8691 | 31.87/0.8536 | 32.54/0.8907 | 32.45/0.8875 |
| House | 28.87/0.7637 | 29.64/0.8147 | 29.48/0.7956 | 29.95/0.8383 | 29.91/0.8346 |
| Leaves | 23.69/0.8565 | 23.84/0.8711 | 23.83/0.8662 | 23.91/0.8770 | 23.90/0.8762 |
| Monarch | 25.06/0.8217 | 25.30/0.8445 | 25.29/0.8360 | 25.42/0.8552 | 25.39/0.8532 |
| Parrots | 26.57/0.7890 | 27.00/0.8307 | 26.95/0.8136 | 27.21/0.8507 | 27.18/0.8478 |
| Peppers | 25.44/0.7499 | 25.68/0.7727 | 16.23/0.7601 | 25.78/0.7831 | 25.78/0.7822 |
| Starfish | 24.84/0.7454 | 25.08/0.7657 | 22.98/0.7544 | 25.16/0.7739 | 25.15/0.7725 |
| Average | 26.17/0.7664 | 26.56/0.7961 | 25.64/0.7841 | 26.75/0.8097 | 26.72/0.8078 |
| Airplane | 21.92/0.6578 | 21.94/0.6598 | 21.93/0.6585 | 21.94/0.6602 | 21.94/0.6603 |
| Baboon | 21.42/0.3613 | 21.43/0.3626 | 21.43/0.3625 | 21.43/0.3632 | 21.43/0.3631 |
| Barbara | 23.04/0.6108 | 23.04/0.6112 | 23.04/0.6098 | 23.04/0.6110 | 23.04/0.6111 |
| Boats | 23.06/0.6172 | 23.07/0.6182 | 23.06/0.6174 | 23.07/0.6187 | 23.07/0.6186 |
| Cameraman | 21.60/0.6424 | 21.61/0.6446 | 21.60/0.6420 | 21.61/0.6457 | 21.61/0.6456 |
| Elaine | 24.07/0.6883 | 24.08/0.6897 | 24.07/0.6889 | 24.08/0.6907 | 24.08/0.6906 |
| Foreman | 26.81/0.7785 | 26.86/0.7815 | 26.86/0.7796 | 26.88/0.7830 | 26.88/0.7828 |
| House | 25.13/0.7153 | 25.15/0.7181 | 25.13/0.7164 | 25.14/0.7185 | 25.14/0.7185 |
| Leaves | 19.40/0.6913 | 19.40/0.6925 | 19.40/0.6926 | 19.40/0.6932 | 19.40/0.6930 |
| Monarch | 21.34/0.6769 | 21.36/0.6785 | 21.36/0.6783 | 21.37/0.6793 | 21.36/0.6791 |
| Parrots | 23.58/0.7153 | 23.60/0.7180 | 23.60/0.7175 | 23.60/0.7191 | 23.60/0.7188 |
| Peppers | 22.25/0.6432 | 22.26/0.6445 | 22.26/0.6443 | 22.26/0.6453 | 22.26/0.6451 |
| Starfish | 21.32/0.5643 | 21.32/0.5654 | 21.32/0.5655 | 21.32/0.5661 | 21.32/0.5659 |
| Average | 22.69/0.6433 | 22.70/0.6450 | 22.70/0.6441 | 22.70/0.6457 | 22.70/0.6456 |

| Methods | ETP | Logarithm | Lp | MCP | SCAD |
|---|---|---|---|---|---|
| Results (1) | 25.67/0.7645 | 25.74/0.7703 | 25.69/0.7637 | 25.77/0.7730 | 25.76/0.7727 |
| Results (2) | 25.20/0.7265 | 25.40/0.7468 | 25.37/0.7422 | 25.49/0.7561 | 25.48/0.7547 |
| Results (3) | 24.92/0.7276 | 24.97/0.7331 | 24.96/0.7305 | 25.00/0.7357 | 24.99/0.7353 |
| Results (4) | 23.62/0.6807 | 23.64/0.6833 | 23.64/0.6822 | 23.65/0.6845 | 23.65/0.6843 |

| | ETP | Logarithm | Lp | MCP | SCAD |
|---|---|---|---|---|---|
| 20 | 6.49 | 5.74 | 8.01 | 6.99 | 6.48 |
| 30 | 9.08 | 8.34 | 10.91 | 8.83 | 8.82 |
| 40 | 9.28 | 8.79 | 10.95 | 9.13 | 8.91 |
| 50 | 10.55 | 8.94 | 11.69 | 9.03 | 9.37 |
| 75 | 13.15 | 13.07 | 16.48 | 14.01 | 14.36 |
| 100 | 21.14 | 21.09 | 26.39 | 22.31 | 23.01 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, S.; Han, R.; Qian, P.; Li, C. Generalized Non-Convex Non-Smooth Group-Sparse Residual Prior for Image Denoising. Electronics 2025, 14, 353. https://doi.org/10.3390/electronics14020353
