Characterization of Students’ Thinking States Active Based on Improved Bloom Classification Algorithm and Cognitive Diagnostic Model

Abstract

1. Introduction

- The first objective is to improve the robustness of cognitive level classification for exercises, enabling it to cope with noise and semantic distortions present in complex Chinese text.

- The second objective is to solve the cognitive classification challenge for hybrid code-and-text exercises, thereby achieving a unified semantic understanding of both programming code and Chinese language.

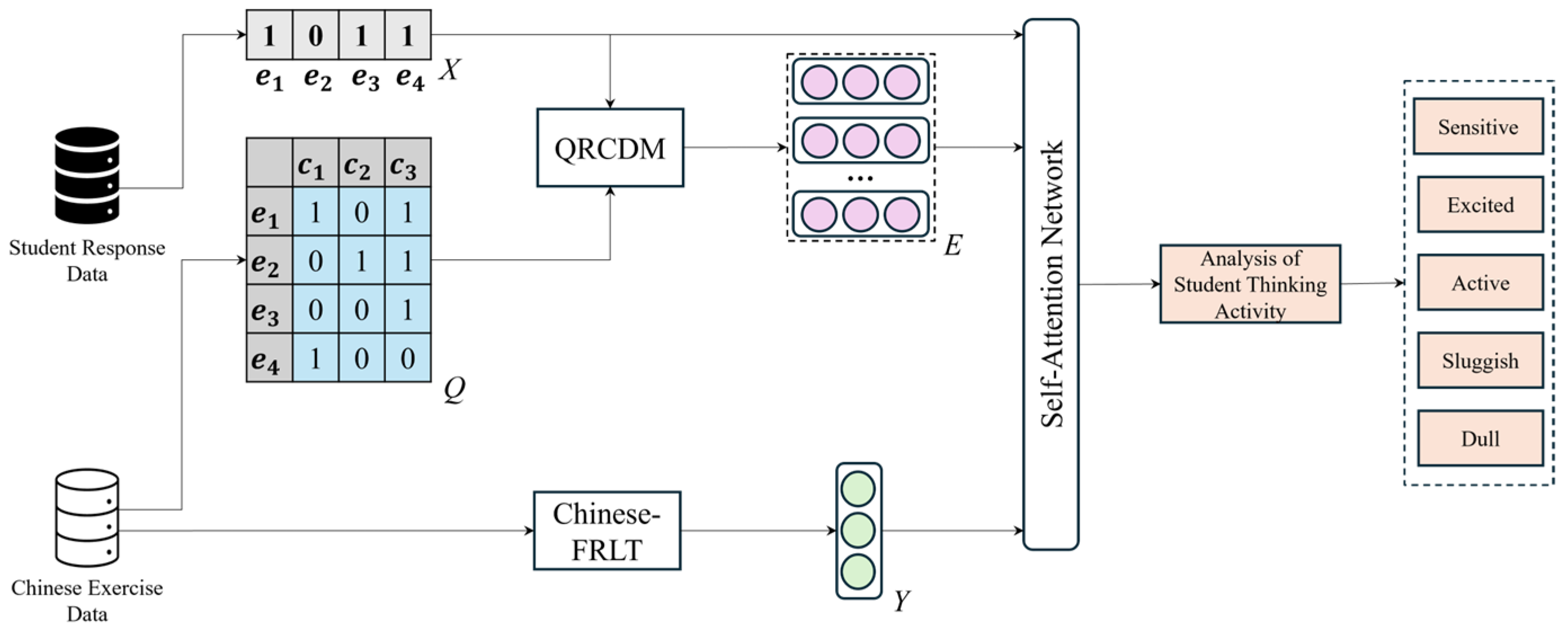

- The third objective is to create a multi-fused representation model of active thinking states by correlating exercise cognitive hierarchy with student cognitive states, resulting in a concrete state representation.

- A new text classification framework that is both robust to noise and context-aware is proposed. It integrates FreeLB, Chinese-RoBERTa-wwm, and an LSTM to address the global semantic distortion and local structural sparsity that text classification models face in long-text contexts, improving robustness to complex linguistic phenomena and the capacity for deep semantic understanding.

- A text classification model based on an improved Chinese-RoBERTa-wwm and TBCC is proposed. The model addresses the semantic sparsity and complex structure of the code text in code-filling exercises, improving classification accuracy on exercise datasets that contain mixed code and text.

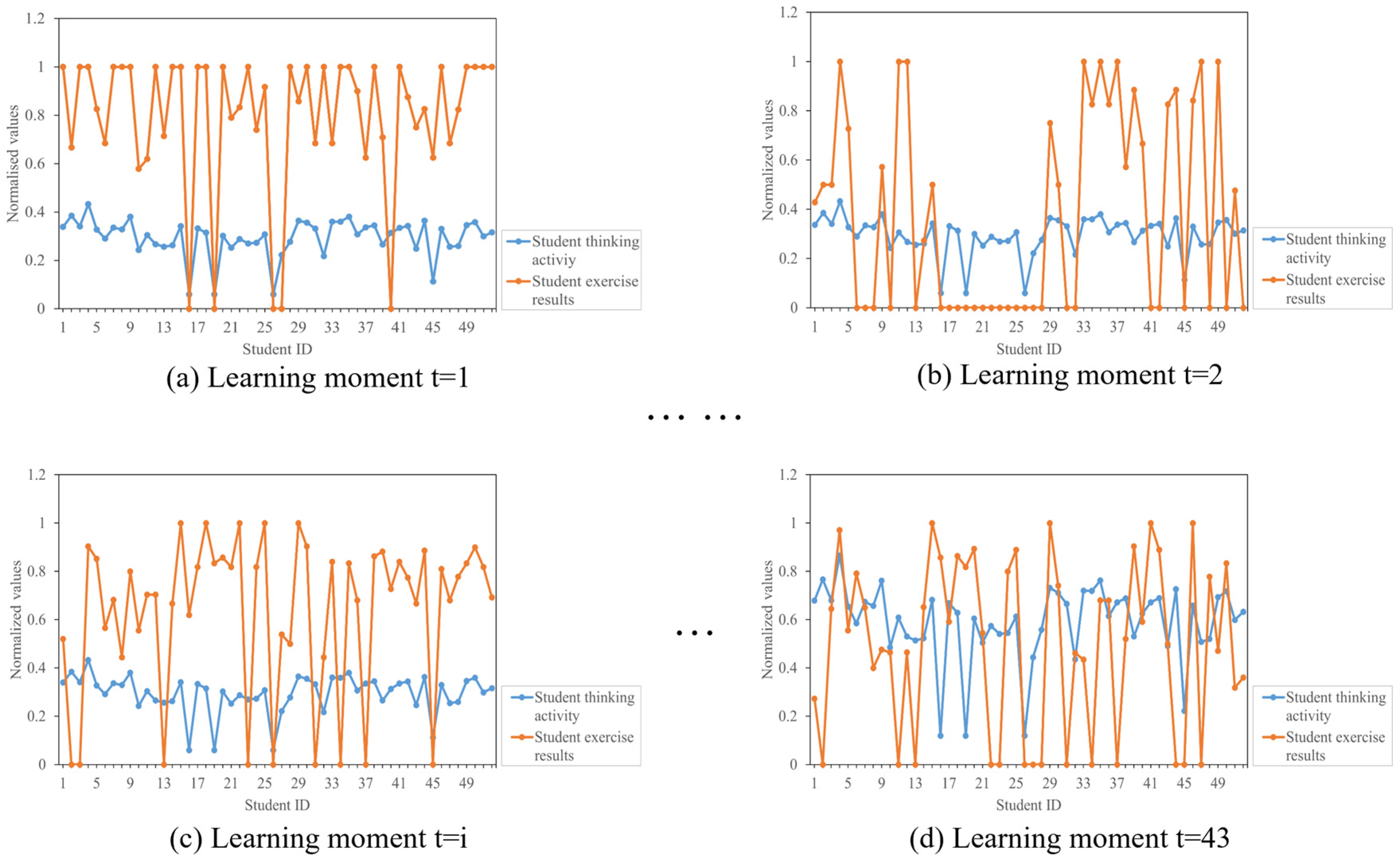

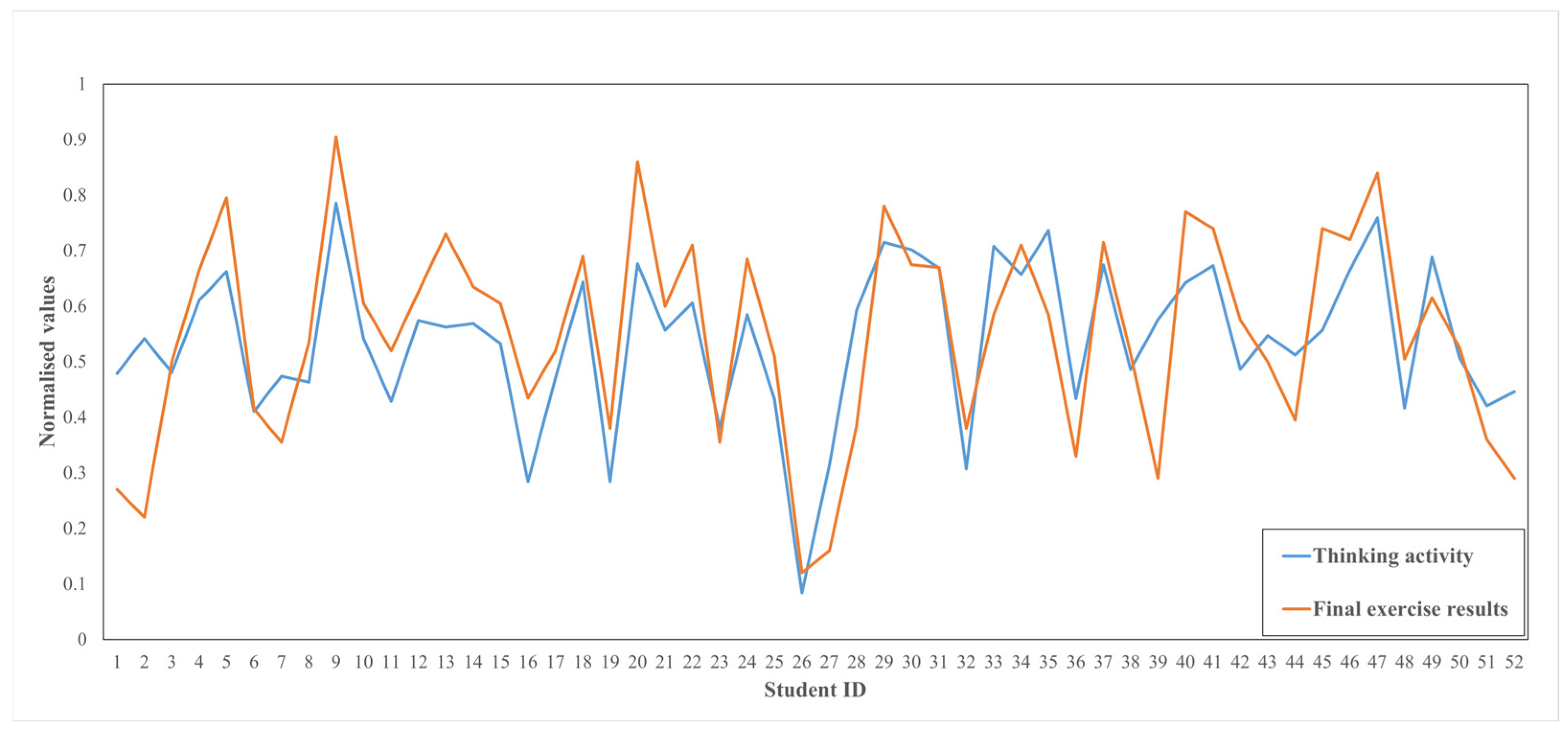

- A characterization method for the active thinking state that integrates students’ cognitive states with the cognitive level of exercises is proposed. The method analyzes the correlation between these two dimensions and yields a concrete numerical evaluation of students’ active thinking levels during classroom learning.

2. Related Work

3. Method

3.1. Chinese-FRLT

3.1.1. Text Recognition Module

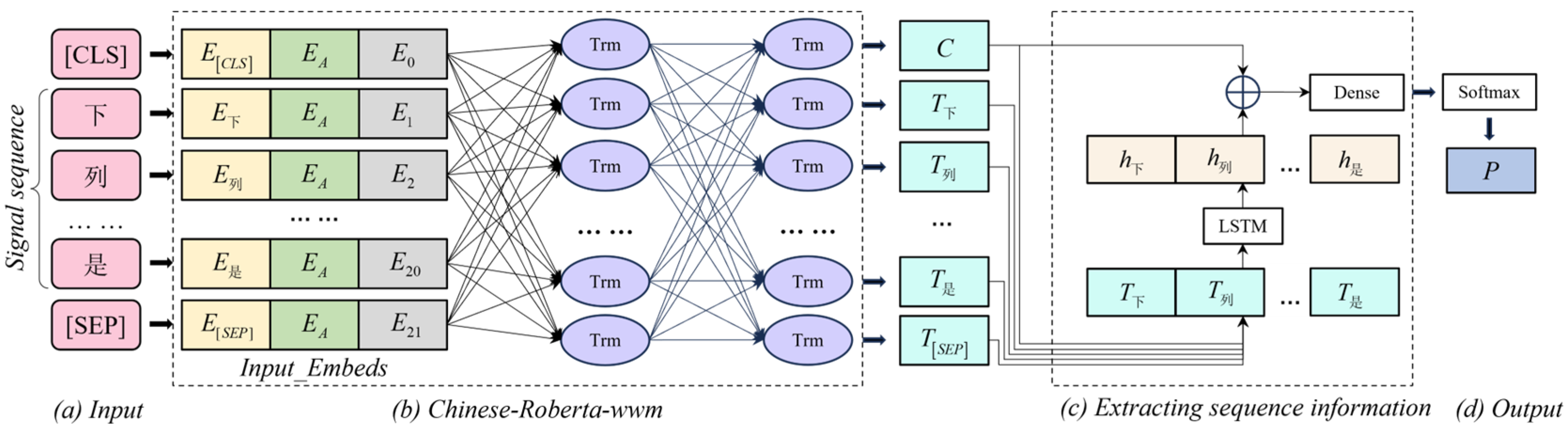

3.1.2. Text Classification Framework Based on Chinese-RoBERTa-wwm and LSTM

3.1.3. TBCC Model
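
As a rough illustration of what "parsing the structure of code text" can mean for a tree-based code classifier such as TBCC, the sketch below turns a code snippet into a pre-order sequence of syntax-tree node types. It uses Python's standard `ast` module purely as a stand-in parser; the programming language of the exercises, the parser, and the function name `ast_node_sequence` are assumptions made for this example and are not taken from the paper.

```python
import ast
from typing import List


def ast_node_sequence(source: str) -> List[str]:
    """Return the pre-order sequence of AST node type names for `source`."""
    tree = ast.parse(source)
    sequence: List[str] = []

    def visit(node: ast.AST) -> None:
        # Record the node type, then descend into its children in field order.
        sequence.append(type(node).__name__)
        for child in ast.iter_child_nodes(node):
            visit(child)

    visit(tree)
    return sequence


# Hypothetical code fragment from a code-filling exercise.
snippet = "def add(a, b):\n    return a + b\n"
tokens = ast_node_sequence(snippet)
print(tokens)
```

A sequence like this exposes structural tokens (function definitions, returns, binary operations) even when the surface text of the code carries little natural-language meaning, which is the kind of signal a tree-based encoder can consume.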

3.1.4. FreeLB Adversarial Training
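
The core loop of FreeLB (Zhu et al., listed in the references) can be sketched on a toy model: for each training example, a perturbation of the input embedding is refined over K gradient-ascent steps inside a norm ball, while the parameter gradients from every step are averaged and applied once. The example below is a minimal sketch under strong assumptions: it replaces the Chinese-RoBERTa-wwm encoder with a two-dimensional logistic regression, and all names and hyperparameter values (`freelb_step`, `k`, `adv_lr`, `radius`, `lr`) are hypothetical choices for illustration.

```python
import math
import random
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_grads(w: List[float], b: float, x: List[float], y: int):
    """Binary cross-entropy of a logistic model plus its gradients."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    tiny = 1e-12
    loss = -(y * math.log(p + tiny) + (1 - y) * math.log(1 - p + tiny))
    err = p - y                       # dL/dz
    grad_w = [err * xi for xi in x]   # dL/dw
    grad_x = [err * wi for wi in w]   # dL/dx, which equals dL/d(delta)
    return loss, grad_w, err, grad_x


def project(delta: List[float], radius: float) -> List[float]:
    """Project the perturbation back into the L2 ball of the given radius."""
    norm = math.sqrt(sum(d * d for d in delta))
    return [d * radius / norm for d in delta] if norm > radius else delta


def freelb_step(w: List[float], b: float, x: List[float], y: int,
                k: int = 3, adv_lr: float = 0.1, radius: float = 0.3,
                lr: float = 0.5, rng: random.Random = None) -> Tuple[List[float], float]:
    rng = rng or random.Random(0)
    # Random initial perturbation inside the ball.
    delta = project([rng.uniform(-radius, radius) for _ in x], radius)
    acc_w, acc_b = [0.0] * len(w), 0.0
    for _ in range(k):
        x_adv = [xi + di for xi, di in zip(x, delta)]
        _, grad_w, grad_b, grad_x = loss_and_grads(w, b, x_adv, y)
        # Accumulate parameter gradients, averaged over the K ascent steps.
        acc_w = [a + g / k for a, g in zip(acc_w, grad_w)]
        acc_b += grad_b / k
        # Normalized gradient ascent on the perturbation, then projection.
        norm = math.sqrt(sum(g * g for g in grad_x)) or 1.0
        delta = project([d + adv_lr * g / norm for d, g in zip(delta, grad_x)], radius)
    # Single descent step on the parameters with the accumulated gradient.
    return [wi - lr * g for wi, g in zip(w, acc_w)], b - lr * acc_b


# Toy, linearly separable data standing in for embedded exercises.
data = [([1.0, 0.5], 1), ([-1.0, -0.3], 0), ([0.8, 1.2], 1), ([-0.7, -1.1], 0)]
w, b = [0.0, 0.0], 0.0
rng = random.Random(0)
for _ in range(50):
    for x, y in data:
        w, b = freelb_step(w, b, x, y, rng=rng)
clean_loss = sum(loss_and_grads(w, b, x, y)[0] for x, y in data) / len(data)
print(round(clean_loss, 4))
```

In a real setting the perturbation is applied to the token embeddings of the pre-trained encoder and the gradients come from backpropagation; the accumulate-then-update structure is the part this sketch is meant to convey.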

3.2. Cognitive Level Diagnosis Model (QRCDM)

3.3. Self-Attention-Based Prediction of Student Interest Features
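
For readers unfamiliar with the mechanism named in this heading, the following is a minimal sketch of scaled dot-product self-attention as defined by Vaswani et al. (listed in the references). It is the generic operation only, not the authors' interest-prediction network: the input rows are hypothetical per-moment feature vectors, and the identity projection matrices are an assumption chosen to keep the example readable.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Return (output, attention weights) for softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Three hypothetical learning moments, each described by two features.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(v, 3) for v in row])
```

Each row of `weights` shows how strongly one moment attends to every other moment, and each row sums to 1.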

3.4. Experimental Setup

3.4.1. Datasets

- Introduction to the dataset used to validate the Chinese-FRLT model.

- Introduction to the experimental dataset for characterization of the active state.

3.4.2. Evaluation Indicators and Baseline Model

4. Experimental Results and Performance Analysis

4.1. Experimental Environment and Hyperparameter Settings

4.2. Analysis of Experimental Results of Chinese-FRLT Model in Four Chinese Datasets

4.2.1. Introduction to Dataset for Validating the Models Characterizing the Active Thinking State

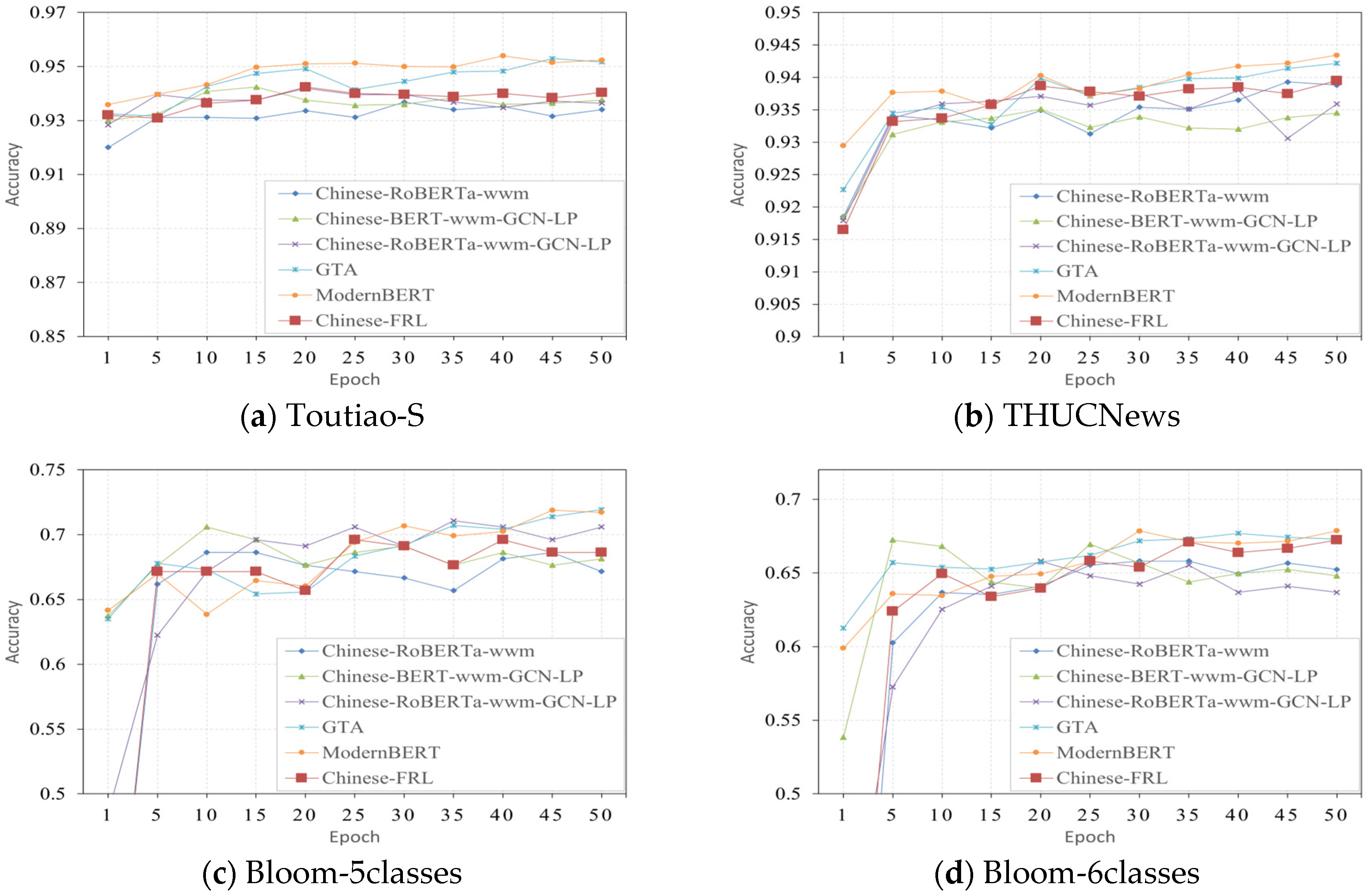

- The Chinese-BERT-wwm-GCN-LP and Chinese-RoBERTa-wwm-GCN-LP models classify texts by integrating semantic and structural information. The proposed Chinese-FRL framework, which combines sentence-level semantic information with sequence information, outperformed the three baseline models in classification performance. Although it trailed GTA and ModernBERT, the accuracy difference remained within 1.5%. This suggests that the proposed framework can also effectively extract the implicit shallow and deep semantic features of Chinese texts.

- The proposed model did not perform as well as GTA and ModernBERT on the Bloom-5classes exercise dataset, which has sparse data and imbalanced categories. This is because GTA and ModernBERT use grouped-head attention and Flash Attention, respectively, which can extract more local feature information than an LSTM. However, on the Bloom-6classes exercise dataset, which has balanced categories, the performance gap between the Chinese-FRL framework and GTA or ModernBERT was small, with an accuracy difference within 1%. This indicates that, when categories are balanced, combining FreeLB with Chinese-RL-wwm enables effective classification of sparse Chinese exercise data.
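
Because the discussion above turns on class imbalance, it may help to see how the three reported metrics react to it. The sketch below computes ACC, micro-F1, and macro-F1 from scratch for a single-label multi-class task. The label names come from the revised Bloom taxonomy, but the toy predictions are invented for illustration and are not data from the paper.

```python
from collections import Counter
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """Compute accuracy, micro-F1 and macro-F1 for single-label classification."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[t] += 1  # true class gains a false negative

    def f1(tp_: int, fp_: int, fn_: int) -> float:
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    return {
        "acc": sum(tp.values()) / len(y_true),
        # Micro-F1 pools the counts of all classes before computing F1.
        "micro_f1": f1(sum(tp.values()), sum(fp.values()), sum(fn.values())),
        # Macro-F1 averages per-class F1, so rare classes weigh as much as common ones.
        "macro_f1": sum(f1(tp[c], fp[c], fn[c]) for c in labels) / len(labels),
    }


# Imbalanced toy example: the classifier always predicts the majority class.
y_true = ["remember"] * 8 + ["analyze"] * 2
y_pred = ["remember"] * 10
metrics = classification_metrics(y_true, y_pred)
print({name: round(value, 4) for name, value in metrics.items()})
```

In this toy case accuracy and micro-F1 stay at 0.8 while macro-F1 drops to about 0.44, which is why macro-F1 is the more informative column when categories are imbalanced.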

4.2.2. Analysis of Experimental Results for Chinese-FRLT Model with Four Chinese Datasets

- The proposed Chinese-FRLT model outperformed the baseline models in ACC and both F1 metrics on the two exercise datasets with added code text. This demonstrates the strength of the proposed model in Chinese text classification, as well as its effectiveness in classifying the Bloom cognitive levels of Chinese exercises that contain mixed code.

- The proposed Chinese-FRLT model classified significantly better than the Chinese-RoBERTa-wwm model. This indicates that using a text recognition module for the initial classification of mixed texts is effective, because it lets the Chinese-FRL and TBCC modules each handle the corresponding text type. The Chinese-BERT-wwm-GCN-LP and Chinese-RoBERTa-wwm-GCN-LP models must generate adjacency information between texts through a GCN before processing text, whereas code text consists of symbols and logical structures and therefore carries sparse semantic feature information. The proposed Chinese-FRLT model outperformed both of these models, indicating that combining it with TBCC allows the structure of code text to be parsed into code snippets effectively, thereby mining the semantic feature information in code text.

- When classifying datasets with a high proportion of pure text, the proposed Chinese-FRLT model performed slightly worse than GTA and ModernBERT, with an accuracy difference of less than 0.8%. This is because both GTA and ModernBERT use longer token inputs and more advanced attention mechanisms. However, the classification results on the exercise datasets show that these advantages do not carry over to exercise data that are sparse and imbalanced. This also suggests that integrating a dedicated code parsing and classification model allows text data mixed with code to be classified more effectively.
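
The routing idea discussed above, where a recognition step decides whether a segment goes to the text branch or the code branch, can be sketched as follows. This is a minimal heuristic under stated assumptions: the regular expression, the 0.5 threshold, and the names `detect_text_type` and `route` are all hypothetical, and the paper's actual text recognition module may work quite differently.

```python
import re
from typing import Callable, Dict

# Hypothetical signals of code: common punctuation, a few keywords, call syntax.
CODE_PATTERN = re.compile(
    r"[{};=<>\[\]]"
    r"|\b(?:def|return|for|while|int|void|printf|print)\b"
    r"|\w+\(.*\)"
)


def detect_text_type(segment: str) -> str:
    """Label a segment 'code' if at least half of its non-empty lines look like code."""
    lines = [line for line in segment.splitlines() if line.strip()]
    if not lines:
        return "text"
    code_lines = sum(1 for line in lines if CODE_PATTERN.search(line))
    return "code" if code_lines / len(lines) >= 0.5 else "text"


def route(segment: str, classifiers: Dict[str, Callable[[str], str]]) -> str:
    """Dispatch the segment to the classifier registered for its detected type."""
    return classifiers[detect_text_type(segment)](segment)


# Stub classifiers standing in for the text branch and the code branch.
classifiers = {
    "text": lambda s: "handled by text branch",
    "code": lambda s: "handled by code branch",
}
print(route("int x = a + b;", classifiers))
print(route("简述冒泡排序的基本思想。", classifiers))
```

Keeping detection separate from dispatch means either branch can be swapped out without touching the other, which mirrors the modular structure described in the analysis.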

4.2.3. Ablation Experiment

- Influence of individual modules in the Chinese-FRLT model on its performance.

- The effect of the number of hidden layers in Chinese-RoBERTa-wwm.

4.3. Performance Analysis of Models Characterizing the Active State of Thinking

4.3.1. Comparison of Single- and Multi-Tasking

4.3.2. Analysis and Evaluation of a Model for Characterizing the Active State of Thinking Based on the Classroomdata Dataset

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Yeru, T.; Zuozhang, D. Genetic principles and their implications for the study of learning interest. Educ. Explor. 2012, 9, 5–7.

- Al-Mudimigh, A.S.; Ullah, Z.; Shahzad, B. Critical success factors in implementing portal: A comparative study. Glob. J. Manag. Bus. Res. 2010, 10, 129–133.

- Al-Sudairi, M.; Al-Mudimigh, A.S.; Ullah, Z. A Project management approach to service delivery model in portal implementation. In Proceedings of the 2011 Second International Conference on Intelligent Systems, Modelling and Simulation, Phnom Penh, Cambodia, 25–27 January 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 329–331.

- Krathwohl, D.R. A revision of Bloom’s taxonomy: An overview. Theory Into Pract. 2002, 41, 212–218.

- Kusuma, S.F.; Siahaan, D.; Yuhana, U.L. Automatic Indonesia’s questions classification based on bloom’s taxonomy using Natural Language Processing a preliminary study. In Proceedings of the 2015 International Conference on Information Technology Systems and Innovation (ICITSI), Bali, Indonesia, 16–19 November 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–6.

- Das, S.; Mandal, S.K.D.; Basu, A. Identification of cognitive learning complexity of assessment questions using multi-class text classification. Contemp. Educ. Technol. 2020, 12, ep275.

- Waheed, A.; Goyal, M.; Mittal, N.; Gupta, D.; Khanna, A.; Sharma, M. BloomNet: A Robust Transformer based model for Bloom’s Learning Outcome Classification. arXiv 2021, arXiv:2108.07249.

- Gan, W.; Sun, Y.; Peng, X.; Sun, Y. Modeling learner’s dynamic knowledge construction procedure and cognitive item difficulty for knowledge tracing. Appl. Intell. 2020, 50, 3894–3912.

- Piech, C.; Bassen, J.; Huang, J.; Ganguli, S.; Sahami, M.; Guibas, L.J.; Sohl-Dickstein, J. Deep knowledge tracing. Adv. Neural Inf. Process. Syst. 2015, 28, 201–204.

- Wang, F.; Liu, Q.; Chen, E.; Huang, Z.; Chen, Y.; Yin, Y.; Wang, S. Neural cognitive diagnosis for intelligent education systems. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 6153–6161.

- Gao, W.; Liu, Q.; Huang, Z.; Yin, Y.; Bi, H.; Wang, M.C.; Su, Y. RCD: Relation map driven cognitive diagnosis for intelligent education systems. In Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Online, 11–15 July 2021; pp. 501–510.

- Pandey, S.; Srivastava, J. RKT: Relation-aware self-attention for knowledge tracing. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Online, 19–23 October 2020; pp. 1205–1214.

- Yang, H.; Qi, T.; Li, J.; Ren, M.; Zhang, L.; Wang, X. A novel quantitative relationship neural network for explainable cognitive diagnosis model. Knowl. Based Syst. 2022, 250, 109156.

- Cui, Y.; Che, W.; Liu, T.; Qin, B.; Yang, Z. Pre-training with whole word masking for Chinese BERT. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 3504–3514.

- Xu, X.; Chang, Y.; An, J.; Du, Y. Chinese text classification by combining Chinese-BERTology-wwm and GCN. PeerJ Comput. Sci. 2023, 9, e1544.

- Warner, B.; Chaffin, A.; Clavié, B.; Weller, O.; Hallström, O.; Taghadouini, S.; Poli, I. Smarter, Better, Faster, Longer: A Modern Bidirectional Encoder for Fast, Memory Efficient, and Long Context Finetuning and Inference. arXiv 2024, arXiv:2412.13663.

- Sun, L.; Deng, C.; Jiang, J.; Zhang, H.; Chen, L.; Wang, J. GTA: Grouped-head latenT Attention. arXiv 2025, arXiv:2506.17286.

- Gani, M.O.; Ayyasamy, R.K.; Sangodiah, A.; Fui, Y.T. Bloom’s Taxonomy-based exam question classification: The outcome of CNN and optimal pre-trained word embedding technique. Educ. Inf. Technol. 2023, 28, 15893–15914.

- Baharuddin, F.; Naufal, M.F. Fine-Tuning IndoBERT for Indonesian Exam Question Classification Based on Bloom’s Taxonomy. J. Inf. Syst. Eng. Bus. Intell. 2023, 9, 253–263.

- Mukanova, A.; Barlybayev, A.; Nazyrova, A.; Kussepova, L.; Matkarimov, B.; Abdikalyk, G. Development of a Geographical Question-Answering System in the Kazakh Language. IEEE Access 2024, 12, 105460–105469.

- Bi, H.; Chen, E.; He, W.; Wu, H.; Zhao, W.; Wang, S.; Wu, J. BETA-CD: A Bayesian meta-learned cognitive diagnosis framework for personalized learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Montréal, QC, Canada, 8–10 August 2023; Volume 37, pp. 5018–5026.

- Vucetich, J.A.; Bruskotter, J.T.; Ghasemi, B.; Nelson, M.P.; Slagle, K.M. A Flexible Inventory of Survey Items for Environmental Concepts Generated via Special Attention to Content Validity and Item Response Theory. Sustainability 2024, 16, 1916.

- Darman, D.R.; Suhandi, A.; Kaniawati, I.; Wibowo, F.C. Development and Validation of Scientific Inquiry Literacy Instrument (SILI) Using Rasch Measurement Model. Educ. Sci. 2024, 14, 322.

- Gao, L.; Zhao, Z.; Li, C.; Zeng, Q. Deep cognitive diagnosis model for predicting students’ performance. Future Gener. Comput. Syst. 2022, 126, 252–262.

- Lynn, H.M.; Pan, S.B.; Kim, P. A Deep Bidirectional GRU Network Model for Biometric Electrocardiogram Classification based on Recurrent Neural Networks. IEEE Access 2019, 7, 145395–145405.

- Zhang, W.; Gong, Z.; Luo, P.; Li, Z. DKVMN-KAPS: Dynamic Key-Value Memory Networks Knowledge Tracing with Students’ Knowledge-Absorption Ability and Problem-Solving Ability. IEEE Access 2024, 12, 55146–55156.

- Hua, W.; Liu, G. Transformer-based networks over tree structures for code classification. Appl. Intell. 2022, 52, 8895–8909.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

- Zhu, C.; Cheng, Y.; Gan, Z.; Goldstein, T.; Liu, J. FreeLB: Enhanced Adversarial Training for Language Understanding. arXiv 2019, arXiv:1909.11764.

- Dong, R.S. Introduction to Computer Science: Thinking and Methods, 3rd ed.; Higher Education Press: Beijing, China, 2015; pp. 1–335.

- Sedgewick, R. Computer Science: An Interdisciplinary Approach; Gong, X.L., Translator; Machine Press: Beijing, China, 2020; pp. 1–636.

| Baseline Model | Model Introduction |
|---|---|
| Chinese-RoBERTa-wwm [14] | Large-scale pre-trained model that utilizes bidirectional Transformers and dynamic wwm to learn contextual semantic information from Chinese texts such as in-class exercises in Chinese courses. |
| Chinese-BERT-wwm-GCN-LP [15] | A Chinese text classification model that uses Chinese-BERT-wwm to obtain contextual semantic features of Chinese texts such as in-class exercises in Chinese courses, employs GCN to learn the structural correlations between words in the text, and then fuses the two sets of features using cross-entropy and hinge loss. |
| Chinese-RoBERTa-wwm-GCN-LP [15] | A Chinese text classification model that uses Chinese-RoBERTa-wwm to obtain contextual semantic features of Chinese texts such as in-class exercises in Chinese courses, employs GCN to learn the structural correlations between words in the text, and then fuses the two sets of features using cross-entropy and hinge loss. |
| ModernBERT [16] | The dimensions of the model’s feedforward network are first expanded in stages and a soft activation mechanism is introduced. Subsequently, a dynamic frequency coordinator is used to adaptively adjust the training frequency of different expansion modules, thereby constructing an efficient and high-performance large-scale pre-trained model. |
| Grouped-head Latent Attention (GTA) [17] | By grouping multi-head attention and enabling each attention group to learn an independent, trainable shared attention basis function in the latent space, long-sequence dependencies are modeled efficiently while reducing the computational complexity. |

| Experimental Environment | Environment Configuration |
|---|---|
| Operating system | Linux, Ubuntu 20.04.2 LTS |
| CPU | Intel(R) Xeon(R) Gold 6330H, Intel Corporation, Santa Clara, CA, USA |
| GPU | GeForce RTX 3090, NVIDIA, Santa Clara, CA, USA |
| RAM | 32 GB |
| ROM | 1 TB SSD |
| Programming Language | Python 3.8 |
| Framework | PyTorch 2.0.0-gpu, CUDA 11.8 |

| Model | Toutiao-S ACC | Toutiao-S micro-F1 | Toutiao-S macro-F1 | THUCNews ACC | THUCNews micro-F1 | THUCNews macro-F1 | Bloom-5classes ACC | Bloom-5classes micro-F1 | Bloom-5classes macro-F1 | Bloom-6classes ACC | Bloom-6classes micro-F1 | Bloom-6classes macro-F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A | 0.9376 | 0.9377 | 0.9375 | 0.9396 | 0.9397 | 0.9396 | 0.7157 | 0.7019 | 0.6979 | 0.6752 | 0.6761 | 0.6739 |
| B | 0.9424 | 0.9424 | 0.9423 | 0.9356 | 0.9357 | 0.9357 | 0.7108 | 0.7092 | 0.7015 | 0.6738 | 0.6754 | 0.6725 |
| C | 0.9432 | 0.9432 | 0.9432 | 0.9385 | 0.9385 | 0.9384 | 0.7206 | 0.7189 | 0.7112 | 0.6610 | 0.6637 | 0.6584 |
| D | 0.9557 | 0.9557 | 0.9555 | 0.9517 | 0.9516 | 0.9515 | 0.7234 | 0.7203 | 0.7187 | 0.6831 | 0.6842 | 0.6819 |
| E | 0.9611 | 0.9611 | 0.9609 | 0.9563 | 0.9562 | 0.9561 | 0.7284 | 0.7263 | 0.7237 | 0.6864 | 0.6879 | 0.6850 |
| F | 0.9460 | 0.9460 | 0.9458 | 0.9409 | 0.9409 | 0.9408 | 0.7108 | 0.6964 | 0.6927 | 0.6752 | 0.6743 | 0.6737 |
| G | - | - | - | - | - | - | 0.7157 | 0.7013 | 0.6991 | 0.6795 | 0.6782 | 0.6771 |

| Model | Toutiao-S(2) ACC | Toutiao-S(2) micro-F1 | Toutiao-S(2) macro-F1 | THUCNews(2) ACC | THUCNews(2) micro-F1 | THUCNews(2) macro-F1 | Bloom-5classes(2) ACC | Bloom-5classes(2) micro-F1 | Bloom-5classes(2) macro-F1 | Bloom-6classes(2) ACC | Bloom-6classes(2) micro-F1 | Bloom-6classes(2) macro-F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A | 0.8532 | 0.8533 | 0.8531 | 0.9132 | 0.9135 | 0.9128 | 0.6592 | 0.6537 | 0.6514 | 0.6522 | 0.6539 | 0.6513 |
| B | 0.8637 | 0.8637 | 0.8634 | 0.9116 | 0.9117 | 0.9114 | 0.6678 | 0.6623 | 0.6607 | 0.6559 | 0.6572 | 0.6541 |
| C | 0.8604 | 0.8603 | 0.8602 | 0.9139 | 0.9138 | 0.9136 | 0.6612 | 0.6574 | 0.6559 | 0.6447 | 0.6454 | 0.6432 |
| D | 0.9157 | 0.9157 | 0.9155 | 0.9334 | 0.9334 | 0.9331 | 0.6939 | 0.6882 | 0.6873 | 0.6701 | 0.6727 | 0.6984 |
| E | 0.9182 | 0.9182 | 0.9181 | 0.9368 | 0.9367 | 0.9365 | 0.6989 | 0.6937 | 0.6926 | 0.6723 | 0.6743 | 0.6708 |
| F | 0.8643 | 0.8644 | 0.8641 | 0.9151 | 0.9151 | 0.9150 | 0.6382 | 0.6338 | 0.6315 | 0.6573 | 0.6589 | 0.6559 |
| G | - | - | - | - | - | - | 0.6494 | 0.6443 | 0.6419 | 0.6634 | 0.6653 | 0.6618 |
| H | 0.9125 | 0.9124 | 0.9123 | 0.9309 | 0.9310 | 0.9307 | 0.6889 | 0.6828 | 0.6801 | 0.6770 | 0.6785 | 0.6753 |
| I | - | - | - | - | - | - | 0.7004 | 0.6941 | 0.6912 | 0.6795 | 0.6811 | 0.6775 |

| Model | Toutiao-S(2) ACC | Toutiao-S(2) micro-F1 | Toutiao-S(2) macro-F1 | THUCNews(2) ACC | THUCNews(2) micro-F1 | THUCNews(2) macro-F1 | Bloom-5classes(2) ACC | Bloom-5classes(2) micro-F1 | Bloom-5classes(2) macro-F1 | Bloom-6classes(2) ACC | Bloom-6classes(2) micro-F1 | Bloom-6classes(2) macro-F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Chinese-FRL | 0.8643 | 0.8644 | 0.8641 | 0.9151 | 0.9151 | 0.9150 | 0.6494 | 0.6443 | 0.6419 | 0.6634 | 0.6653 | 0.6618 |
| Chinese-RLT | 0.8911 | 0.8911 | 0.8908 | 0.9279 | 0.9280 | 0.9274 | 0.6957 | 0.6931 | 0.6917 | 0.6695 | 0.6643 | 0.6682 |
| Chinese-FRT | 0.8816 | 0.8817 | 0.8813 | 0.9287 | 0.9287 | 0.9286 | 0.6964 | 0.6929 | 0.6908 | 0.6724 | 0.6678 | 0.6703 |
| Chinese-FRLT | 0.9125 | 0.9124 | 0.9123 | 0.9309 | 0.9310 | 0.9307 | 0.7004 | 0.6941 | 0.6912 | 0.6795 | 0.6811 | 0.6775 |

| Num of Hidden Layers | Bloom-5classes(2) ACC | Bloom-5classes(2) micro-F1 | Bloom-5classes(2) macro-F1 | Bloom-6classes(2) ACC | Bloom-6classes(2) micro-F1 | Bloom-6classes(2) macro-F1 |
|---|---|---|---|---|---|---|
| Four | 0.6784 | 0.6765 | 0.6754 | 0.6337 | 0.6319 | 0.6312 |
| Six | 0.7004 | 0.6941 | 0.6912 | 0.6795 | 0.6811 | 0.6775 |
| Eight | 0.7004 | 0.6987 | 0.6958 | 0.6484 | 0.6473 | 0.6459 |
| Ten | 0.7004 | 0.6983 | 0.6953 | 0.6640 | 0.6625 | 0.6617 |
| Twelve | 0.6889 | 0.6828 | 0.6801 | 0.6770 | 0.6785 | 0.6753 |

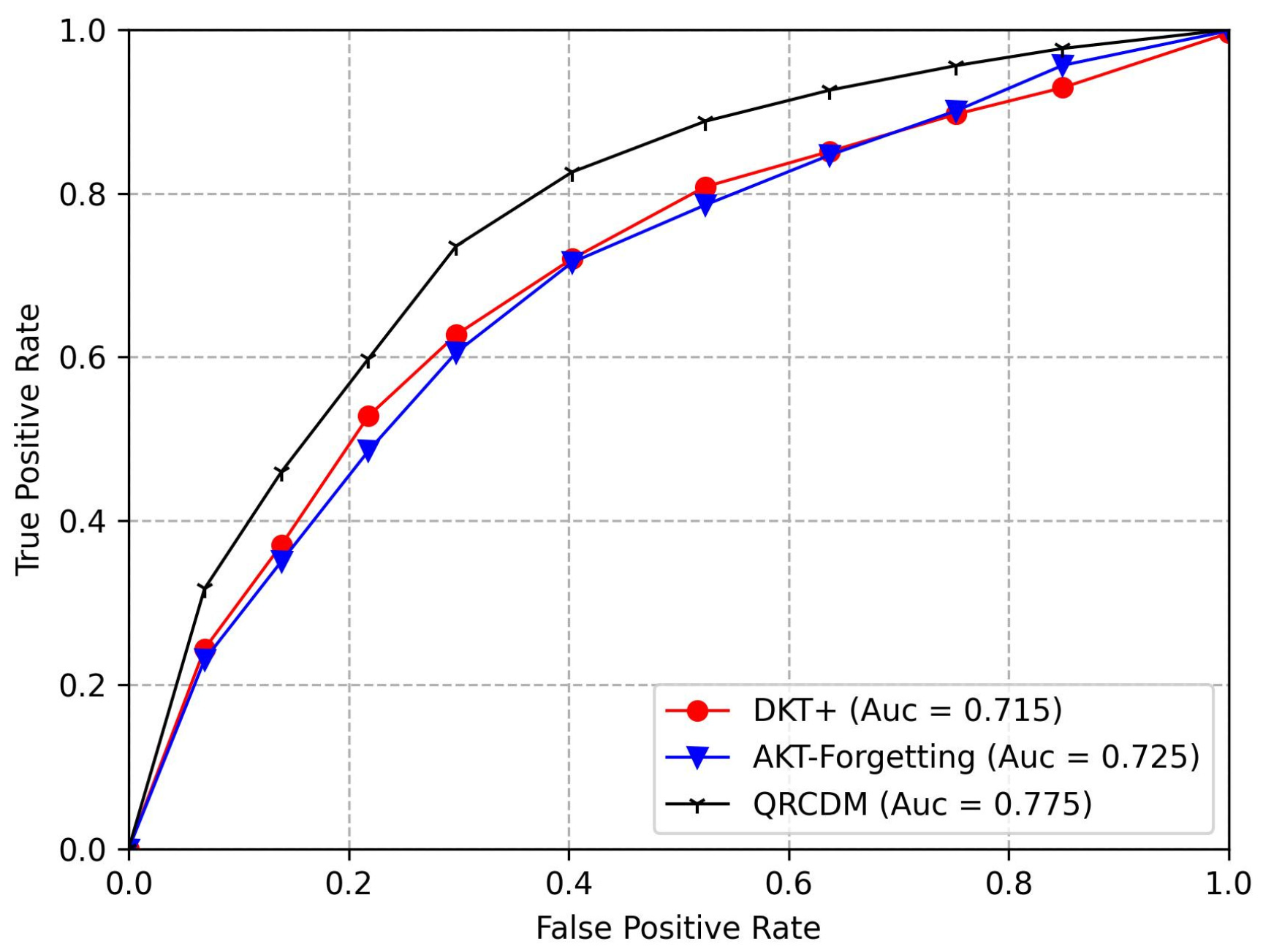

| Dataset | Model | AUC | ACC | MAE | RMSE |
|---|---|---|---|---|---|
| Classroomdata | DKT | 0.715 | 0.736 | 0.264 | 0.514 |
| Classroomdata | DKVMN | 0.725 | 0.789 | 0.264 | 0.466 |
| Classroomdata | QRCDM | 0.775 | 0.780 | 0.220 | 0.469 |
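
For reference, the four metrics in the table above can be computed from predicted response probabilities as sketched below. This is a minimal sketch with invented toy data: the function name `diagnosis_metrics` and the 0.5 decision threshold are assumptions, and the paper does not state whether its MAE and RMSE were computed on probabilities or on binarized predictions.

```python
import math
from typing import Dict, Sequence


def diagnosis_metrics(y_true: Sequence[int], y_prob: Sequence[float],
                      threshold: float = 0.5) -> Dict[str, float]:
    """Compute AUC, ACC, MAE and RMSE for binary response prediction."""
    n = len(y_true)
    acc = sum(int(p >= threshold) == t for t, p in zip(y_true, y_prob)) / n
    mae = sum(abs(p - t) for t, p in zip(y_true, y_prob)) / n
    rmse = math.sqrt(sum((p - t) ** 2 for t, p in zip(y_true, y_prob)) / n)
    pos = [p for t, p in zip(y_true, y_prob) if t == 1]
    neg = [p for t, p in zip(y_true, y_prob) if t == 0]
    # AUC as the chance that a random correct response is scored above a
    # random incorrect one; ties count as half a win.
    wins = sum(1.0 if pp > pn else 0.5 if pp == pn else 0.0 for pp in pos for pn in neg)
    auc = wins / (len(pos) * len(neg))
    return {"auc": auc, "acc": acc, "mae": mae, "rmse": rmse}


# Toy responses (1 = answered correctly) and predicted probabilities.
soft = diagnosis_metrics([1, 0, 1, 1, 0], [0.9, 0.2, 0.6, 0.4, 0.5])
# Same function applied to hard 0/1 predictions.
hard = diagnosis_metrics([1, 0, 1, 1], [1.0, 0.0, 0.0, 1.0])
print({k: round(v, 4) for k, v in soft.items()})
print({k: round(v, 4) for k, v in hard.items()})
```

With hard 0/1 predictions every error has magnitude 1, so MAE equals 1 − ACC and RMSE equals the square root of MAE. The DKT and QRCDM rows of the table satisfy these identities to three decimals, although whether the authors binarized their predictions is not stated.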

| Student ID | Learning Moment 1 | Learning Moment 2 | Learning Moment 3 | Learning Moment 4 | Learning Moment 5 | Learning Moment 6 | Learning Moment 7 | … | Learning Moment 43 |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Torpid | Torpid | Torpid | Torpid | Active | Active | Torpid | … | Excited |
| 2 | Torpid | Torpid | Torpid | Torpid | Active | Excited | Torpid | … | Excited |
| 3 | Torpid | Torpid | Torpid | Torpid | Active | Active | Torpid | … | Excited |
| 4 | Active | Active | Active | Active | Active | Excited | Active | … | Sensitive |
| … | … | … | … | … | … | … | … | … | … |
| 52 | Torpid | Torpid | Torpid | Torpid | Active | Active | Torpid | … | Excited |

| Student ID | Active Thinking State | Normalized Final Grade Value | Performance Interval | Student ID | Active Thinking State | Normalized Final Grade Value | Performance Interval |
|---|---|---|---|---|---|---|---|
| 1 | Active | 0.27 | Bad | 27 | Torpid | 0.16 | Terrible |
| 2 | Active | 0.415 | Medium | 28 | Active | 0.525 | Medium |
| 3 | Active | 0.50 | Medium | 29 | Excited | 0.78 | Well |
| … | … | … | … | … | … | … | … |
| 26 | Torpid | 0.12 | Terrible | 52 | Active | 0.29 | Bad |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, Y.; Yuan, H.; Shou, Z.; Lu, C.; Mo, J. Characterization of Students’ Thinking States Active Based on Improved Bloom Classification Algorithm and Cognitive Diagnostic Model. Electronics 2025, 14, 3957. https://doi.org/10.3390/electronics14193957
