VimGeo: An Efficient Visual Model for Cross-View Geo-Localization

Abstract

1. Introduction

- A Vision Mamba framework based on state-space models (SSMs) is proposed. It offers advantages in both efficiency and representational capability, and shows strong potential to replace Transformer and ConvNeXt backbones as the foundational model for cross-view geo-localization.

- Dice Loss is introduced to address the imbalance in regional scene size between different viewpoints in cross-view tasks, with the aim of improving the model’s performance and robustness.

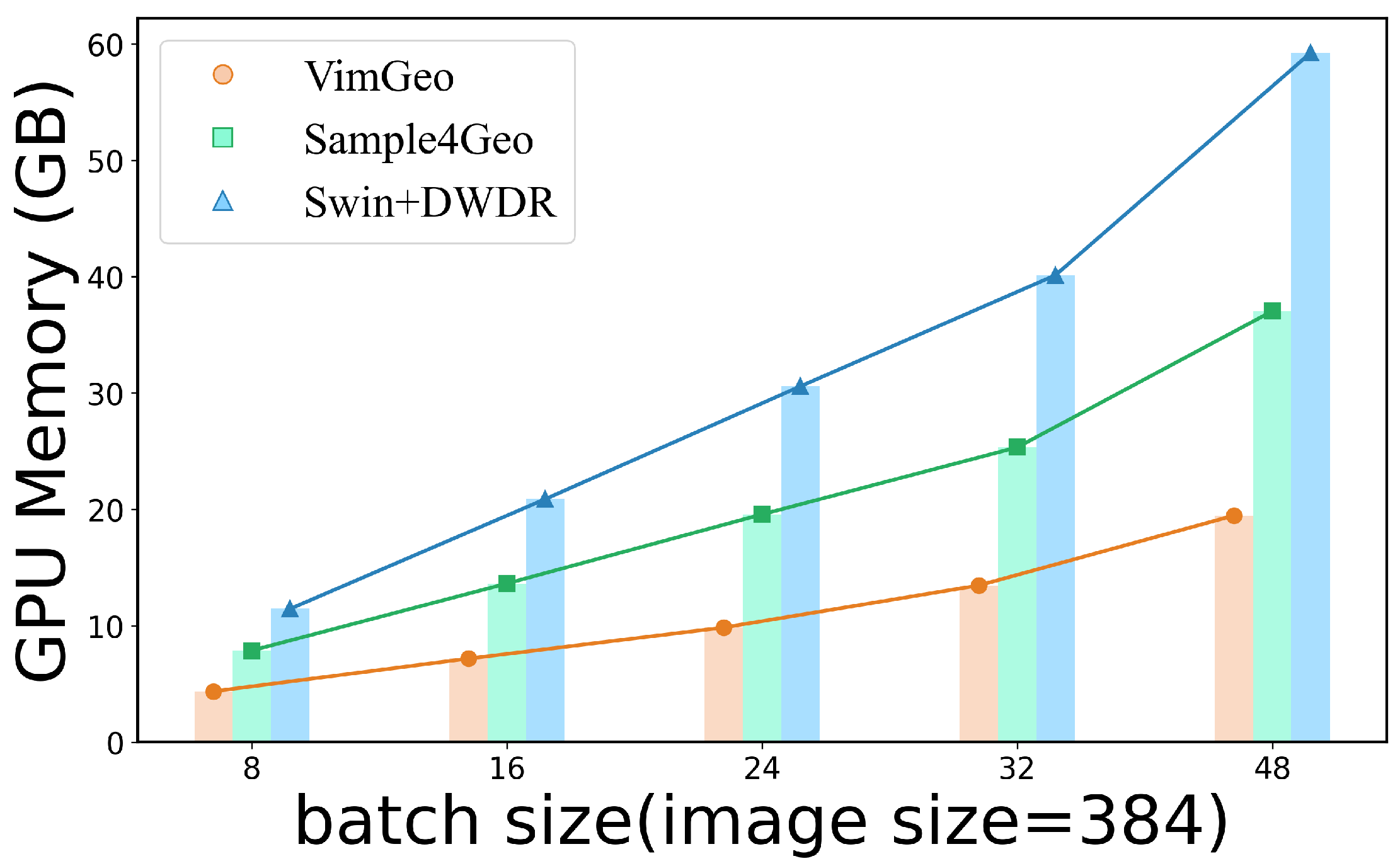

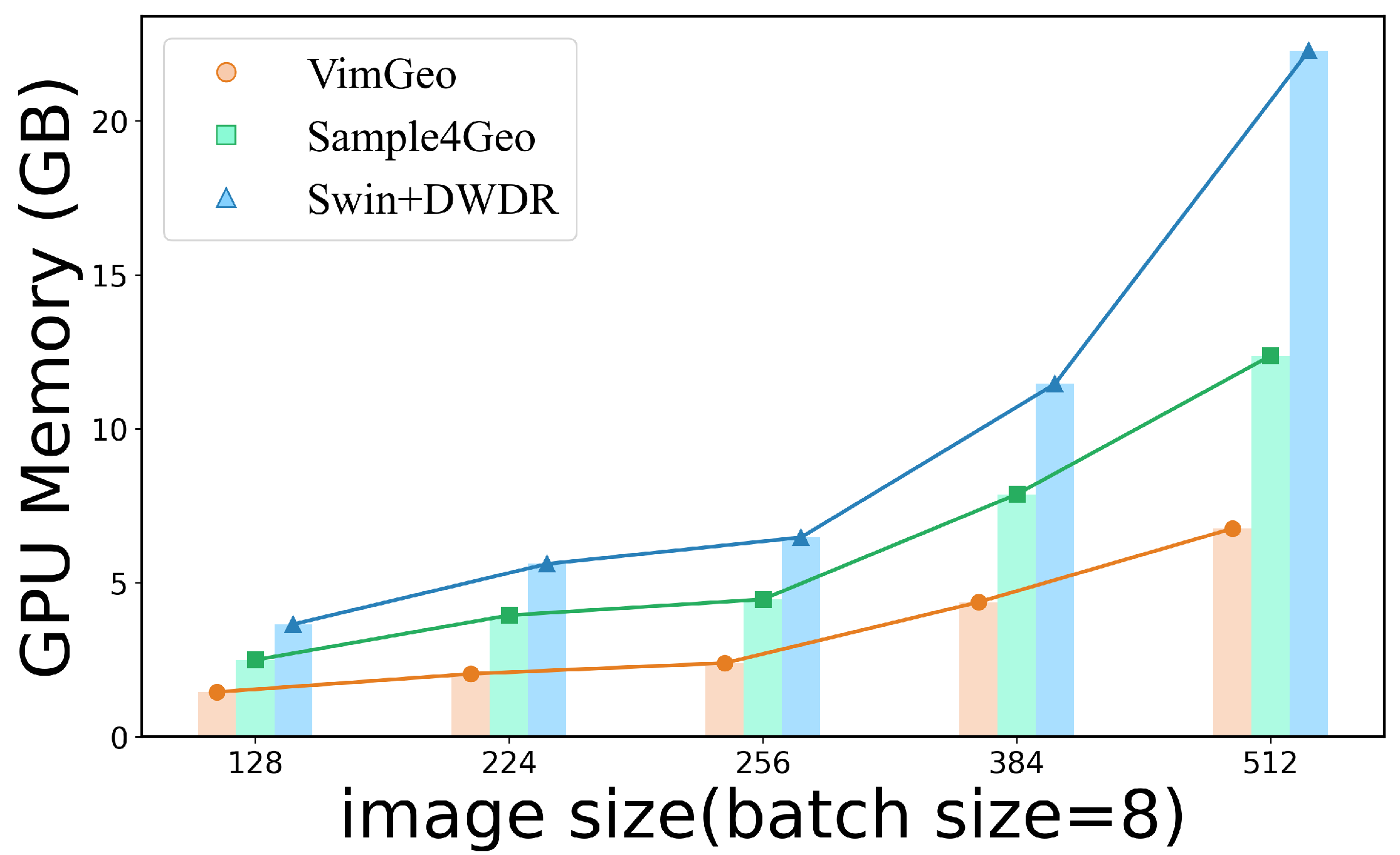

- The framework achieves the best results among the compared methods for drone-view target localization on the public University-1652 dataset (91.27% R@1 and 92.61% AP), while maintaining the highest efficiency and the lowest GPU memory usage.
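
To make the first contribution more concrete, the following is a minimal sketch of the linear state-space recurrence that SSM-based models build on, together with a forward-plus-backward scan in the spirit of the bidirectional design named in the Vision Mamba reference title [17]. It is an illustration under simplifying assumptions, not the VimGeo implementation: the function names `ssm_scan` and `bidirectional_ssm` are hypothetical, the matrices `A`, `B`, `C` are fixed rather than input-dependent (selective), and the sequence is a 1-D signal rather than a sequence of image patches.

```python
import numpy as np

def ssm_scan(x, A, B, C):
    """Discrete linear state-space recurrence over a 1-D sequence.

    h_t = A @ h_{t-1} + B * x_t,    y_t = C @ h_t
    The loop visits each step once, so cost grows linearly with len(x).
    """
    x = np.asarray(x, dtype=float)
    h = np.zeros(A.shape[0])          # hidden state, starts at zero
    y = np.empty(len(x))
    for t, x_t in enumerate(x):
        h = A @ h + B * x_t           # state update
        y[t] = C @ h                  # readout
    return y

def bidirectional_ssm(x, A, B, C):
    """Sum of a forward scan and a backward scan (illustrative assumption)."""
    x = np.asarray(x, dtype=float)
    forward = ssm_scan(x, A, B, C)
    backward = ssm_scan(x[::-1], A, B, C)[::-1]
    return forward + backward

# Usage: a one-dimensional state that decays by half at every step.
A = 0.5 * np.eye(1)
B = np.array([1.0])
C = np.array([1.0])
print(ssm_scan([1.0, 0.0, 0.0], A, B, C))
```

The single pass over the sequence is the source of the linear-time behaviour that motivates replacing quadratic-cost self-attention.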

2. Related Works

2.1. Cross-View Geo-Localization

2.2. Mamba

2.3. Loss Function

3. Proposed Method

3.1. Vision Mamba

3.2. Dice Loss

- P represents the model’s predicted results.

- G represents the ground truth labels.
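
With P and G as defined above, a Dice loss can be sketched as follows. This is a minimal sketch that assumes the squared-denominator soft Dice form used in V-Net [23], i.e. Dice = 2 Σ P·G / (Σ P² + Σ G²) and loss = 1 − Dice. The function name `dice_loss` and the smoothing constant `eps` are assumptions made for illustration; how VimGeo applies the loss to cross-view features is not specified here.

```python
import numpy as np

def dice_loss(p, g, eps=1e-6):
    """Soft Dice loss between predictions p and ground truth g.

    Assumed form (V-Net style): 1 - 2*sum(p*g) / (sum(p^2) + sum(g^2)).
    eps is a hypothetical smoothing term that avoids division by zero.
    """
    p = np.asarray(p, dtype=float).ravel()
    g = np.asarray(g, dtype=float).ravel()
    intersection = np.sum(p * g)
    denominator = np.sum(p * p) + np.sum(g * g)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)

# Usage: identical inputs give a loss near 0, disjoint inputs a loss near 1.
print(dice_loss([1, 0, 1], [1, 0, 1]))
print(dice_loss([1, 0], [0, 1]))
```

Because the score is normalised by the total mass of P and G, a small region contributes on the same scale as a large one, which is the property that makes Dice-style losses attractive when region sizes are imbalanced.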

4. Experiments

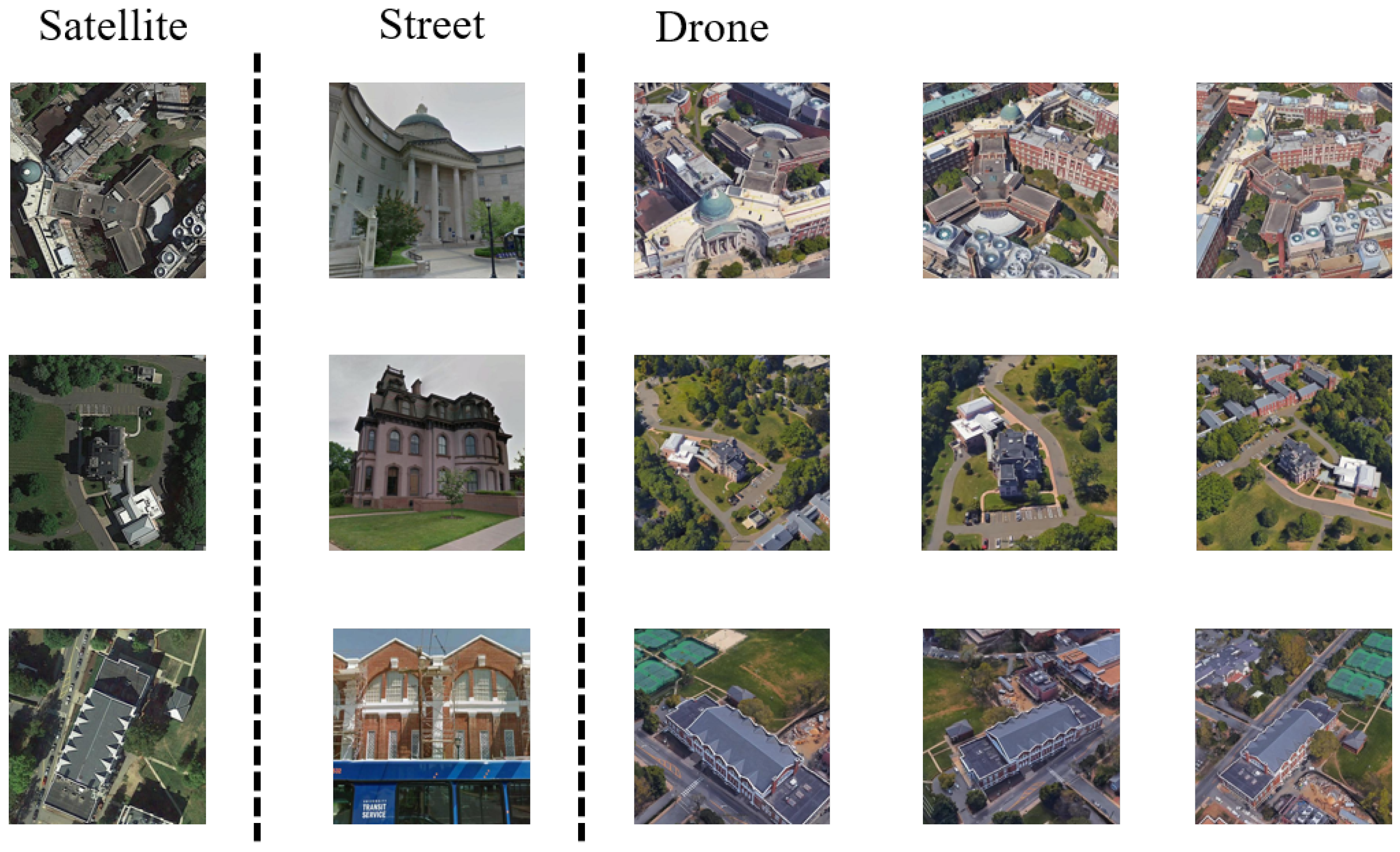

4.1. Datasets and Evaluation Protocols

4.2. Implementation Details

4.3. Comparison with the State-of-the-Art Methods

4.4. Ablation Study

4.4.1. Impact of Feature Dimension

4.4.2. Impact of Weight Sharing

4.4.3. Impact of Different Loss Functions

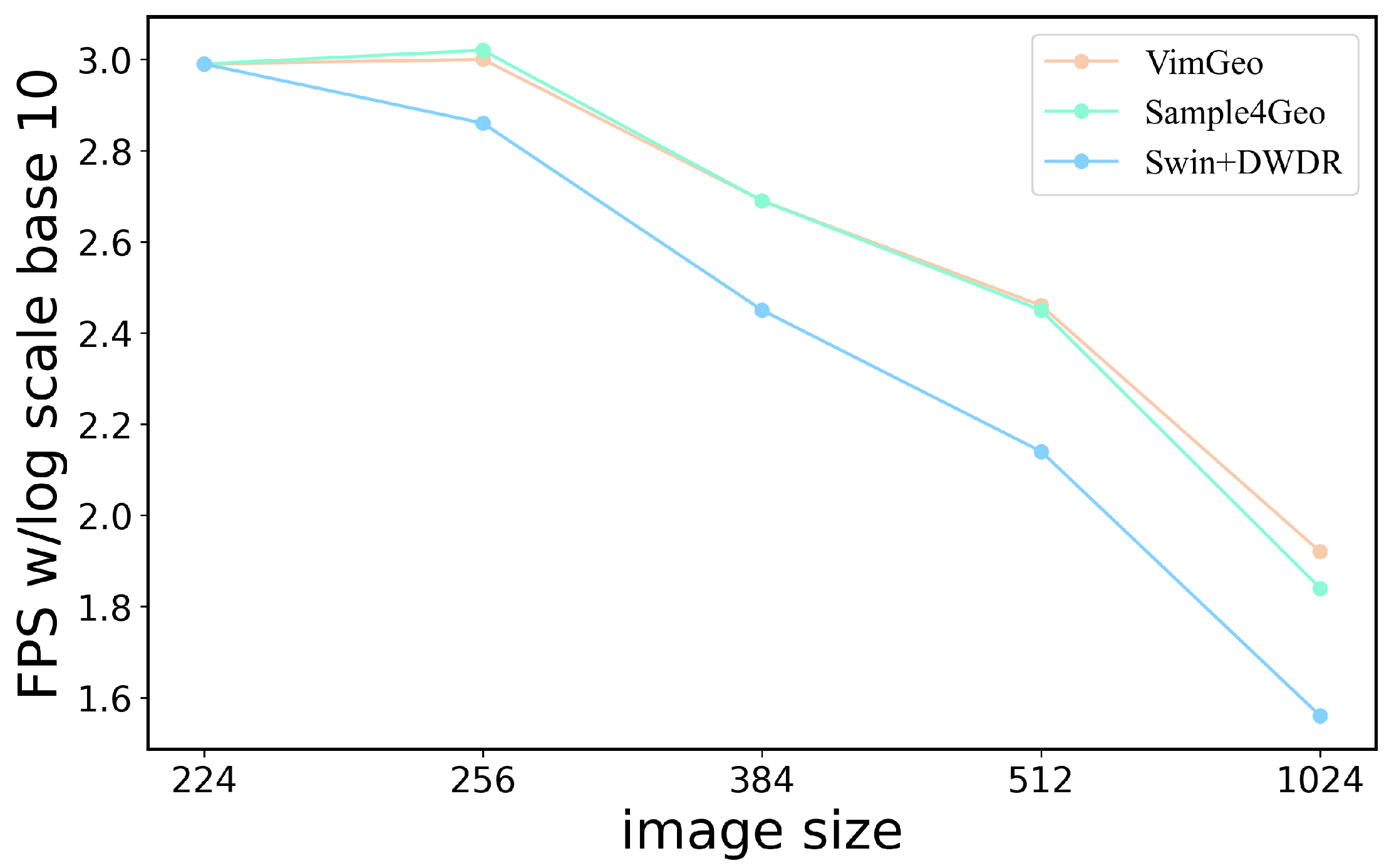

4.5. Impact of Input Size on Matching Performance

4.6. Impact of Transfer Learning on Matching Efficiency

4.7. Impact of Mixup and Augmix Data Augmentation on Matching Efficiency

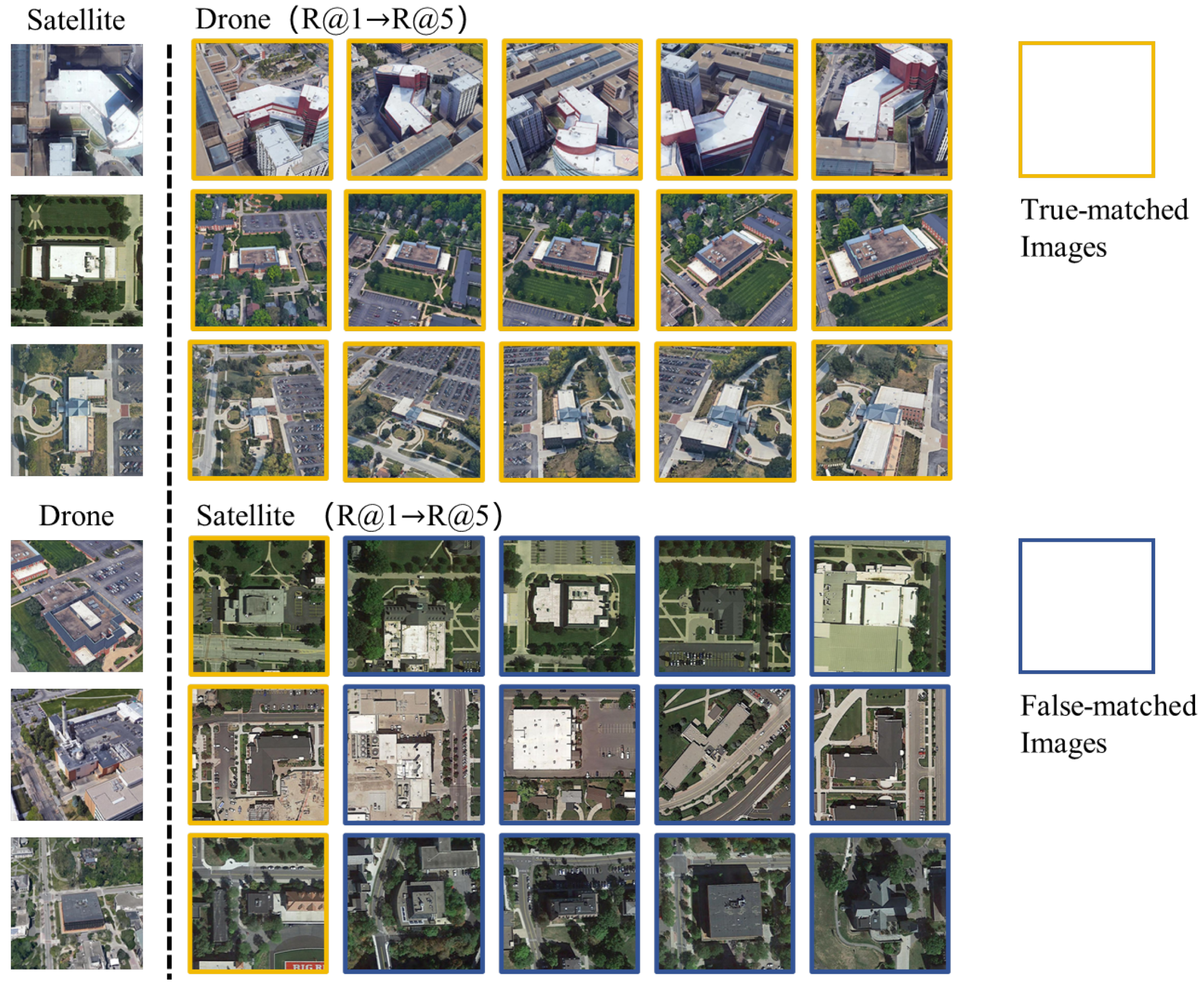

4.8. Visualization of VimGeo Network Matching Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Arafat, M.Y.; Alam, M.M.; Moh, S. Vision-based navigation techniques for unmanned aerial vehicles: Review and challenges. Drones 2023, 7, 89.

2. Tian, Y.; Chen, C.; Shah, M. Cross-view image matching for geo-localization in urban environments. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3608–3616.

3. Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

4. Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

5. Lin, T.Y.; Cui, Y.; Belongie, S.; Hays, J. Learning deep representations for ground-to-aerial geolocalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5007–5015.

6. Shen, T.; Wei, Y.; Kang, L.; Wan, S.; Yang, Y.H. MCCG: A ConvNeXt-based multiple-classifier method for cross-view geo-localization. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 1456–1468.

7. Wang, T.; Zheng, Z.; Zhu, Z.; Sun, Y.; Yan, C.; Yang, Y. Learning cross-view geo-localization embeddings via dynamic weighted decorrelation regularization. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–12.

8. Zhu, R.; Yang, M.; Yin, L.; Wu, F.; Yang, Y. UAV’s status is worth considering: A fusion representations matching method for geo-localization. Sensors 2023, 23, 720.

9. Zhu, S.; Yang, T.; Chen, C. VIGOR: Cross-view image geo-localization beyond one-to-one retrieval. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3640–3649.

10. Zhu, S.; Shah, M.; Chen, C. TransGeo: Transformer is all you need for cross-view image geo-localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1162–1171.

11. Shi, Y.; Liu, L.; Yu, X.; Li, H. Spatial-aware feature aggregation for image based cross-view geo-localization. Adv. Neural Inf. Process. Syst. 2019, 32, 1–11.

12. Hu, Z.; Fu, Z.; Yin, Y.; De Melo, G. Context-aware interaction network for question matching. arXiv 2021, arXiv:2104.08451.

13. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

14. Zhou, Q.; Sheng, K.; Zheng, X.; Li, K.; Sun, X.; Tian, Y.; Chen, J.; Ji, R. Training-free transformer architecture search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10894–10903.

15. Gu, A.; Goel, K.; Ré, C. Efficiently modeling long sequences with structured state spaces. arXiv 2021, arXiv:2111.00396.

16. Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

17. Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient visual representation learning with bidirectional state space model. arXiv 2024, arXiv:2401.09417.

18. Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Liu, Y. VMamba: Visual State Space Model. arXiv 2024, arXiv:2401.10166.

19. Chen, K.; Chen, B.; Liu, C.; Li, W.; Zou, Z.; Shi, Z. RSMamba: Remote sensing image classification with state space model. IEEE Geosci. Remote Sens. Lett. 2024, 21, 8002605.

20. Ma, X.; Zhang, X.; Pun, M.O. RS3Mamba: Visual state space model for remote sensing image semantic segmentation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6011405.

21. Liu, H.; Feng, J.; Qi, M.; Jiang, J.; Yan, S. End-to-end comparative attention networks for person re-identification. IEEE Trans. Image Process. 2017, 26, 3492–3506.

22. Hu, S.; Feng, M.; Nguyen, R.M.; Lee, G.H. CVM-Net: Cross-view matching network for image-based ground-to-aerial geo-localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7258–7267.

23. Milletari, F.; Navab, N.; Ahmadi, S.A. V-Net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

24. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

25. Leonardis, A.; Pinz, A.; Bischof, H. Computer Vision-ECCV 2006: 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006: Proceedings; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2006; Volume 1.

26. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

27. Yang, C.; Zhang, X.; Li, J.; Ma, J.; Xu, L.; Yang, J.; Liu, S.; Fang, S.; Li, Y.; Sun, X.; et al. Holey graphite: A promising anode material with ultrahigh storage for lithium-ion battery. Electrochim. Acta 2020, 346, 136244.

28. Workman, S.; Souvenir, R.; Jacobs, N. Wide-area image geolocalization with aerial reference imagery. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3961–3969.

29. Liu, L.; Li, H. Lending orientation to neural networks for cross-view geo-localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5624–5633.

30. Zheng, Z.; Wei, Y.; Yang, Y. University-1652: A multi-view multi-source benchmark for drone-based geo-localization. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 1395–1403.

31. Zhu, R.; Yin, L.; Yang, M.; Wu, F.; Yang, Y.; Hu, W. SUES-200: A multi-height multi-scene cross-view image benchmark across drone and satellite. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 4825–4839.

32. Arandjelovic, R.; Gronat, P.; Torii, A.; Pajdla, T.; Sivic, J. NetVLAD: CNN architecture for weakly supervised place recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5297–5307.

33. Shi, Y.; Yu, X.; Wang, S.; Li, H. CVLNet: Cross-view semantic correspondence learning for video-based camera localization. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 123–141.

34. Deuser, F.; Habel, K.; Oswald, N. Sample4Geo: Hard negative sampling for cross-view geo-localisation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 16847–16856.

35. Cao, Y.; Liu, C.; Wu, Z.; Zhang, L.; Yang, L. Remote sensing image segmentation using vision mamba and multi-scale multi-frequency feature fusion. Remote Sens. 2025, 17, 1390.

36. Ma, C.; Wang, Z. Semi-Mamba-UNet: Pixel-level contrastive and pixel-level cross-supervised visual mamba-based UNet for semi-supervised medical image segmentation. arXiv 2024, arXiv:2402.07245.

37. Liao, W.; Zhu, Y.; Wang, X.; Pan, C.; Wang, Y.; Ma, L. LightM-UNet: Mamba assists in lightweight UNet for medical image segmentation. arXiv 2024, arXiv:2403.05246.

38. Tian, L.; Shen, Q.; Gao, Y.; Wang, S.; Liu, Y.; Deng, Z. A Cross-Mamba Interaction Network for UAV-to-Satallite Geolocalization. Drones 2025, 9, 427.

39. Vo, N.N.; Hays, J. Localizing and orienting street views using overhead imagery. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Cham, Switzerland, 2016; pp. 494–509.

40. Weyand, T.; Kostrikov, I.; Philbin, J. Planet-photo geolocation with convolutional neural networks. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VIII 14. Springer: Cham, Switzerland, 2016; pp. 37–55.

41. Wang, T.; Zheng, Z.; Yan, C.; Zhang, J.; Sun, Y.; Zheng, B.; Yang, Y. Each part matters: Local patterns facilitate cross-view geo-localization. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 867–879.

42. Zhu, Y.; Yang, H.; Lu, Y.; Huang, Q. Simple, effective and general: A new backbone for cross-view image geo-localization. arXiv 2023, arXiv:2302.01572.

43. Lin, T.Y.; Belongie, S.; Hays, J. Cross-view image geolocalization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 891–898.

44. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

45. Hendrycks, D.; Mu, N.; Cubuk, E.D.; Zoph, B.; Gilmer, J.; Lakshminarayanan, B. AugMix: A simple data processing method to improve robustness and uncertainty. arXiv 2019, arXiv:1912.02781.

46. Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412.

| Method | # Params (M) | Drone→Sat R@1 (%) | Drone→Sat AP (%) | Sat→Drone R@1 (%) | Sat→Drone AP (%) |
|---|---|---|---|---|---|
| LPN [41] | 138.4 | 75.93 | 79.14 | 86.45 | 78.48 |
| SAIG-D [42] | 15.6 × 2 | 78.85 | 81.62 | 86.45 | 78.48 |
| DWDR [7] | 109.1 | 86.41 | 88.41 | 91.30 | 86.02 |
| MBF [8] | 25.5 + 99 | 89.05 | 90.61 | 92.15 | 84.45 |
| MCCG [6] | 88.6 | 89.64 | 91.32 | 94.30 | 89.39 |
| Sample4Geo (nano) [34] | 15.5 | 88.13 | 89.96 | 92.29 | 87.62 |
| Sample4Geo (tiny) [34] | 28.6 | 90.45 | 91.92 | 93.72 | 89.55 |
| Ours | 26.0 | 91.27 | 92.61 | 95.01 | 90.77 |
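
The R@1 and AP figures reported in these comparisons are retrieval metrics computed per query and then averaged. The sketch below shows one common way to obtain them from a query-to-gallery similarity matrix. It is illustrative only: the function name `recall_at_1_and_ap` and its arguments are hypothetical, and AP is computed with the plain "mean precision at each true match" definition, which may differ slightly from the interpolated variant used by a given benchmark's official evaluation script.

```python
import numpy as np

def recall_at_1_and_ap(sim, query_labels, gallery_labels):
    """Return (R@1, mean AP) in percent.

    sim[i, j] is the similarity between query i and gallery item j;
    a gallery item is a true match when its label equals the query label.
    """
    sim = np.asarray(sim, dtype=float)
    query_labels = np.asarray(query_labels)
    gallery_labels = np.asarray(gallery_labels)
    hits_at_1, average_precisions = [], []
    for q in range(sim.shape[0]):
        order = np.argsort(-sim[q])                    # most similar first
        matches = gallery_labels[order] == query_labels[q]
        hits_at_1.append(bool(matches[0]))             # top-1 result correct?
        ranks = np.flatnonzero(matches) + 1            # 1-based ranks of true matches
        if ranks.size == 0:
            average_precisions.append(0.0)
            continue
        precision_at_hits = np.arange(1, ranks.size + 1) / ranks
        average_precisions.append(precision_at_hits.mean())
    return 100.0 * np.mean(hits_at_1), 100.0 * np.mean(average_precisions)

# Usage: one query whose two true matches are ranked 2nd and 3rd.
r1, ap = recall_at_1_and_ap([[0.1, 0.9, 0.5]], [0], [0, 1, 0])
print(r1, ap)
```

In the Drone→Sat direction the queries are drone-view images and the gallery holds satellite images; Sat→Drone swaps the two roles.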

| Dimension | Drone→Sat R@1 (%) | Drone→Sat AP (%) | Sat→Drone R@1 (%) | Sat→Drone AP (%) |
|---|---|---|---|---|
| 192 | 71.20 | 75.00 | 79.03 | 71.53 |
| 384 | 91.27 | 92.61 | 95.01 | 90.77 |
| 768 | 82.79 | 85.35 | 91.01 | 82.75 |

| Share Weights | Drone→Sat R@1 (%) | Drone→Sat AP (%) | Sat→Drone R@1 (%) | Sat→Drone AP (%) |
|---|---|---|---|---|
| ✓ | 91.27 | 92.61 | 95.01 | 90.77 |
| ✕ | 89.98 | 91.62 | 94.57 | 89.60 |

| Loss components enabled (among InfoNCE, Dice, KL, Triplet) | Drone→Sat R@1 (%) | Drone→Sat AP (%) |
|---|---|---|
| ✓ | 87.22 | 89.18 |
| ✓ ✓ | 88.91 | 90.58 |
| ✓ ✓ | 89.54 | 91.17 |
| ✓ ✓ ✓ | 91.27 | 92.61 |
| ✓ ✓ | 87.94 | 89.85 |
| ✓ | 3.46 | 5.32 |
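
Of the loss components compared above, InfoNCE is the contrastive term that pulls matching drone and satellite embeddings together and pushes non-matching pairs apart. The following is a minimal NumPy sketch of a symmetric InfoNCE loss over a batch in which row i of each array is assumed to be a matching pair. The function name `info_nce`, the symmetric formulation, and the temperature value 0.07 are assumptions for illustration and are not taken from the VimGeo configuration.

```python
import numpy as np

def info_nce(drone_emb, sat_emb, temperature=0.07):
    """Symmetric InfoNCE over a batch of paired embeddings (illustrative).

    drone_emb, sat_emb: arrays of shape (batch, dim); row i of each is a
    positive pair, every other row in the batch acts as a negative.
    """
    d = np.asarray(drone_emb, dtype=float)
    s = np.asarray(sat_emb, dtype=float)
    d = d / np.linalg.norm(d, axis=1, keepdims=True)   # L2-normalise
    s = s / np.linalg.norm(s, axis=1, keepdims=True)
    logits = d @ s.T / temperature                     # cosine similarity / T

    def cross_entropy_on_diagonal(z):
        z = z - z.max(axis=1, keepdims=True)           # numerical stability
        log_prob = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
        return -np.mean(np.diag(log_prob))             # positives lie on the diagonal

    # Average the drone-to-satellite and satellite-to-drone directions.
    return 0.5 * (cross_entropy_on_diagonal(logits)
                  + cross_entropy_on_diagonal(logits.T))

# Usage: correctly paired embeddings score far lower than shuffled ones.
emb = np.eye(3)
print(info_nce(emb, emb), info_nce(emb, np.roll(emb, 1, axis=0)))
```

Additional terms such as Dice or KL would typically be added to this value as a weighted sum; the weights are not given here, so none are assumed.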

| Image Size | Drone→Sat R@1 (%) | Drone→Sat AP (%) | Sat→Drone R@1 (%) | Sat→Drone AP (%) |
|---|---|---|---|---|
| 224 | 87.01 | 89.05 | 92.29 | 86.12 |
| 256 | 89.80 | 91.47 | 94.57 | 89.46 |
| 384 | 91.27 | 92.61 | 95.01 | 90.77 |
| 512 | 91.19 | 92.58 | 94.15 | 90.72 |

| Train Set | Drone→Sat R@1 (%) | Drone→Sat AP (%) | Sat→Drone R@1 (%) | Sat→Drone AP (%) |
|---|---|---|---|---|
| ImageNet | 3.52 | 5.04 | 14.98 | 4.21 |
| University-1652 | 12.51 | 16.76 | 17.26 | 11.69 |
| University-1652 (ImageNet pre-trained) | 91.27 | 92.61 | 95.01 | 90.77 |

| Augmentation Methods | Drone→Sat R@1 (%) | Drone→Sat AP (%) | Sat→Drone R@1 (%) | Sat→Drone AP (%) |
|---|---|---|---|---|
| AugMix + MixUp | 87.99 | 89.92 | 95.58 | 87.04 |
| Traditional | 91.27 | 92.61 | 95.01 | 90.77 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, K.; Zhang, Y.; Wang, L.; Muzahid, A.A.M.; Sohel, F.; Wu, F.; Wu, Q. VimGeo: An Efficient Visual Model for Cross-View Geo-Localization. Electronics 2025, 14, 3906. https://doi.org/10.3390/electronics14193906
