Multimodal-Based Selective De-Identification Framework

Abstract

1. Introduction

- Proposal of a novel architecture that combines image and text encoders with a cross-modality decoder to perform fine-grained object grounding from natural language prompts in real-world settings.

- Demonstration of the effectiveness of zeroshot and referring-based object detection for selective de-identification, enabling precise identification of user-specified targets.

- Comparative analysis between zeroshot and referring-based approaches across multiple benchmark datasets, highlighting the advantages of prompt-driven methods.

2. Related Works

2.1. Selective De-Identification

2.1.1. Selective De-Identification Based on Object Detection

2.1.2. Selective De-Identification Based on Object Identification

- Feature similarity-based de-identification

- Fine-grained classification-based de-identification

2.1.3. Comparison of Feature Similarity-Based and Fine-Grained Classification-Based De-Identification

2.2. Multimodal-Based Open-Set Object Detection

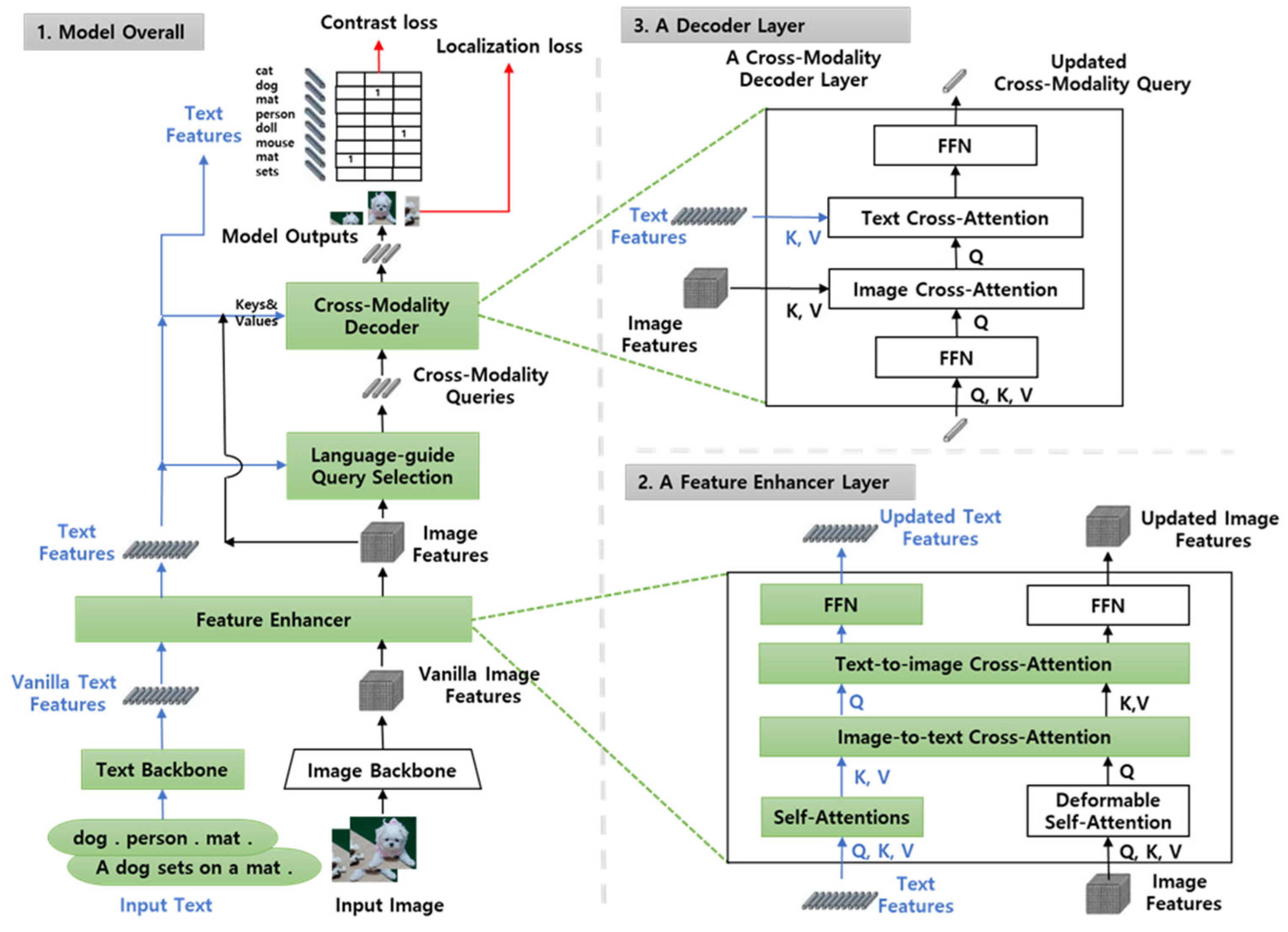

- Image Backbone: This component processes the input image using a vision backbone (e.g., a Swin Transformer or a ResNet) to extract high-dimensional visual features. These features encode spatial and semantic information about objects and regions within the image.

- Text Backbone: Simultaneously, the input text (e.g., a prompt like “person wearing red shorts”) is processed using a language model (typically BERT or similar transformer-based encoder) to generate contextualized textual embeddings. These embeddings capture the semantic meaning and relationships within the phrase.

- Feature Enhancer: The extracted visual and textual features are then fused through a cross-modal feature enhancer. This module aligns the two modalities in a shared semantic space, allowing the model to reason about the relationship between image regions and textual descriptions.

- Language-Based Query Selection Module: From the fused features, this module selects a set of queries that are most relevant to the textual input. These queries act as anchors for object localization and are derived from the image features, guided by the semantics of the text.

- Cross-Modality Decoder: The selected queries are passed into a transformer-based decoder that performs iterative refinement. At each decoding layer, the model attends to both visual and textual features, updating the queries to better match the target objects described in the prompt.

- Final Output Layer: After several decoding iterations, the final layer produces refined queries that are used to predict bounding boxes around the detected objects. Additionally, the model outputs grounded textual phrases that correspond to each box, effectively linking visual regions to language.
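The language-based query selection step described above can be illustrated with a small sketch. It assumes that each image token is scored by its highest similarity to any text token and that the top-scoring tokens become the decoder queries. The function name `select_queries`, the plain dot-product score, and the toy feature values are illustrative assumptions, not the implementation used in this work.

```python
from typing import List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product of two equal-length feature vectors."""
    return sum(x * y for x, y in zip(a, b))


def select_queries(
    image_tokens: List[Sequence[float]],
    text_tokens: List[Sequence[float]],
    num_queries: int,
) -> List[int]:
    """Return indices of the image tokens most relevant to the text.

    Each image token is scored by its maximum similarity to any text
    token. The `num_queries` highest-scoring indices are returned in
    descending score order (ties keep the lower index first).
    """
    scores = [max(dot(img, txt) for txt in text_tokens) for img in image_tokens]
    ranked = sorted(range(len(scores)), key=lambda i: -scores[i])
    return ranked[:num_queries]


# Toy example (hypothetical values): 4 image tokens and 2 text tokens
# embedded in a shared 3-dimensional space.
image_tokens = [
    [0.9, 0.1, 0.0],
    [0.0, 0.2, 0.1],
    [0.1, 0.8, 0.3],
    [0.2, 0.1, 0.1],
]
text_tokens = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
]
selected = select_queries(image_tokens, text_tokens, num_queries=2)
print(selected)
```

In a real detector the selected indices would be used to initialize the decoder queries; here they only show how text semantics can steer which image regions are considered.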

3. Methodology

3.1. Multimodal Approaches for Selective De-Identification

3.1.1. Zeroshot-Based Selective De-Identification

3.1.2. Referring-Based Selective De-Identification

3.1.3. Comparison of Zeroshot and Referring-Based Selective De-Identification

3.2. Multimodal-Based Selective De-Identification (MSD) Framework

3.2.1. Framework Role and Objectives

3.2.2. Architectural Components

- Frame Layer: The system captures a sequence of video frames from a device or server, then decodes and analyzes them to identify frames that contain potentially sensitive objects matching the user-defined prompts. Only the relevant frames are forwarded for further processing, which improves resource efficiency and privacy protection.

- Prompt-Guided Targeting: A natural language prompt (e.g., “person wearing a red shirt”) provided by the user or system acts as a semantic filter that guides the framework to identify and focus on specific objects or individuals within the selected video frames.

- De-identification Model Layer: This module performs object grounding by integrating visual and textual features through a backbone for base-level extraction, a feature enhancer for refined discrimination, and a decoder that aligns prompt semantics with visual regions, operating in either zeroshot mode for general detection or referring mode for prompt-guided localization.

- Masking Layer: After the selective de-identification process, the system performs a final object check to verify accuracy and identify any additional targets, then applies an appropriate masking technique such as blurring, resampling, or inpainting. It also evaluates the severity level of the visual content (e.g., accidents or crime scenes) to determine the filtering intensity, and finally encodes the anonymized images or videos for display and distribution.
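As a rough illustration of the masking step, the sketch below pixelates the region inside one bounding box. Block averaging is used here as a simple stand-in for the blurring or resampling mentioned above. The grayscale nested-list image, the `(x0, y0, x1, y1)` box convention, and the `pixelate_box` name are assumptions made for this example only.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), end-exclusive (assumed)


def pixelate_box(image: Image, box: Box, block: int = 2) -> Image:
    """Return a copy of `image` with the region inside `box` pixelated.

    Every `block` x `block` tile inside the box is replaced by the
    integer mean of its pixels. Pixels outside the box are untouched.
    """
    x0, y0, x1, y1 = box
    out = [row[:] for row in image]
    for ty in range(y0, y1, block):
        for tx in range(x0, x1, block):
            ys = range(ty, min(ty + block, y1))
            xs = range(tx, min(tx + block, x1))
            tile = [image[y][x] for y in ys for x in xs]
            mean = sum(tile) // len(tile)
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out


# Hypothetical 4 x 4 frame with one detected target in the top-left corner.
frame = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
    [130, 140, 150, 160],
]
masked = pixelate_box(frame, box=(0, 0, 2, 2), block=2)
for row in masked:
    print(row)
```

A larger `block` value removes more detail, which is one simple way the filtering intensity could be tied to the severity level of the content.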

3.2.3. Strengths and Advantages

- Prompt-Guided Precision: Enables fine-grained control over anonymization targets through natural language input.

- Multimodal Reasoning: Combines visual and textual cues to enhance semantic understanding and reduce false positives.

- Scalability and Flexibility: Supports diverse object types and prompt expressions without retraining for each new category.

- Robustness to Ambiguity: Demonstrates resilience in handling complex, ambiguous, or multi-meaning prompts through referring-based detection.

- Modular Design: Allows integration of various backbone models and anonymization strategies depending on application needs.

4. Experiments

4.1. Results and Discussion

- Object and face detection followed by de-identification of all detected entities (OD, OD+FD).

- Feature-based identification, where object and face detectors are used to extract feature vectors of specified targets, which are then compared against stored reference vectors to de-identify matched individuals (OD+FD+FID).

- Fine-grained object classification, leveraging pretrained models to selectively de-identify predefined targets based on detailed attribute recognition (OD+OC).
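The feature-based identification baseline (OD+FD+FID) compares an extracted feature vector against stored reference vectors. A minimal sketch of that comparison is given below, assuming cosine similarity as the metric and a fixed acceptance threshold. The metric, the threshold value of 0.8, and the names `match_target`, `target_a`, and `target_b` are assumptions for illustration and are not taken from the experiments.

```python
import math
from typing import Dict, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def match_target(
    feature: Sequence[float],
    references: Dict[str, Sequence[float]],
    threshold: float = 0.8,  # assumed value, would need tuning in practice
) -> Optional[str]:
    """Return the best-matching reference name, or None if none reaches
    the similarity threshold."""
    best_name = None
    best_score = threshold
    for name, ref in references.items():
        score = cosine_similarity(feature, ref)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical stored reference vectors for two registered targets.
references = {
    "target_a": [1.0, 0.0, 0.0],
    "target_b": [0.0, 1.0, 0.0],
}
matched = match_target([0.9, 0.1, 0.0], references)
unmatched = match_target([0.6, 0.6, 0.5], references)
print(matched, unmatched)
```

Only detections that return a name would be de-identified (or exempted, depending on the policy), which is what makes this baseline instance-specific rather than class-wide.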

4.2. Prompt Sensitivity and Robustness to Complex Language for De-Identification

- Ambiguous prompts (e.g., “person near the car”)

- Multi-attribute prompts (e.g., “woman with a black bag and sunglasses”)

- Context-dependent prompts (e.g., “child standing behind the bench”)

- Prompt rephrasing using language models to improve clarity

- Confidence scoring for bounding box predictions

- Visual feedback mechanisms for user validation
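Confidence scoring and user validation can be combined in a simple triage step, sketched below. The sketch assumes each predicted box carries a confidence score in [0, 1] and uses two hypothetical thresholds: boxes above `accept` are masked automatically, boxes between `review` and `accept` are shown to the user for confirmation, and the rest are discarded. The `Detection` type and both threshold values are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    box: Tuple[int, int, int, int]  # (x0, y0, x1, y1)
    phrase: str                     # grounded phrase from the prompt
    score: float                    # confidence in [0, 1]


def triage(
    detections: List[Detection],
    accept: float = 0.5,  # assumed threshold for automatic masking
    review: float = 0.3,  # assumed threshold for user validation
) -> Tuple[List[Detection], List[Detection]]:
    """Split detections into (mask automatically, ask the user)."""
    to_mask: List[Detection] = []
    to_review: List[Detection] = []
    for det in detections:
        if det.score >= accept:
            to_mask.append(det)
        elif det.score >= review:
            to_review.append(det)
    return to_mask, to_review


# Hypothetical detections for the prompt "person near the car".
detections = [
    Detection((10, 10, 50, 120), "person", 0.82),
    Detection((200, 30, 240, 140), "person", 0.41),
    Detection((300, 60, 320, 100), "person", 0.12),
]
to_mask, to_review = triage(detections)
print(len(to_mask), len(to_review))
```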

4.3. Framework and Implementation

- Processing time: Frame-by-frame algorithm processing performance.

- Security: Accessibility of frames to external entities.

- Applicability: Ease of implementing and updating new algorithms.

- Resource distribution: Separation of rendering and algorithm computations.

- Multi-scaling: Scalability for service deployment.

| Mode | App. OS | Algorithm OS | Interface: File Share | Interface: IPC | Interface: Socket | Characteristics |
|---|---|---|---|---|---|---|
| Stand-alone | Windows | Windows | Processing time (middle); security (low); applicability (middle) | Processing time (high); security (high); applicability (middle) | Processing time (low); security (high); applicability (middle) | Resource distribution (low); multi-scaling (X) |
| Stand-alone | Windows | Linux (WSL) | Shared file updates not reflected in WSL | Shared memory updates not reflected in WSL | Processing time (low); security (high); applicability (middle) | Resource distribution (low); multi-scaling (X) |
| Network | Windows | Windows | Not possible | Not possible | Processing time (low); security (high); applicability (high) | Resource distribution (high); multi-scaling (O) |
| Network | Windows | Linux | Not possible | Not possible | Processing time (low); security (high); applicability (high) | Resource distribution (high); multi-scaling (O) |
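To make the socket interface concrete, the sketch below exchanges one frame between an application side and an algorithm side using length-prefixed messages. The wire format (a 4-byte big-endian length followed by the payload) and the function names are assumptions chosen for illustration, since the framework's actual protocol is not specified here. `socket.socketpair()` stands in for a real network connection so the example runs in a single process.

```python
import socket
import struct

HEADER = struct.Struct("!I")  # 4-byte big-endian payload length (assumed format)


def send_frame(sock: socket.socket, payload: bytes) -> None:
    """Send one length-prefixed message."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, looping because recv may return fewer."""
    chunks = []
    remaining = n
    while remaining > 0:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("socket closed before full message arrived")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def recv_frame(sock: socket.socket) -> bytes:
    """Receive one length-prefixed message."""
    (length,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    return recv_exact(sock, length)


# The application sends an encoded frame; the algorithm side replies
# with a placeholder "masked" result.
app_side, algo_side = socket.socketpair()
try:
    send_frame(app_side, b"encoded-frame-bytes")
    received = recv_frame(algo_side)
    send_frame(algo_side, b"masked:" + received)
    reply = recv_frame(app_side)
finally:
    app_side.close()
    algo_side.close()

print(reply)
```

Because both sides only share a byte stream, the application and the algorithm can run on different operating systems or machines, which is consistent with the socket column being the only interface available in network mode.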

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

| Model | Key Feature | Output Feature Dim. (224 × 224 input) | Strengths | Limitations | Application Domain |
|---|---|---|---|---|---|
| MobileNetV2 | Inverted residuals, depth-wise convolution | 7 × 7 × 1280 | Lightweight, fast, mobile-friendly | Lower accuracy than larger models | Mobile apps, edge devices, IoT |
| ResNet-50/101 | Residual learning, deep architecture | 7 × 7 × 2048 | Stable training, high expressiveness | High computational cost | Object/person detection, feature matching |
| DenseNet121 | Dense layer connectivity, feature reuse | 7 × 7 × 1024 | Combines features, mitigates vanishing gradients | High memory usage | Medical imaging, fine-grained recognition |
| EfficientNet-B0~B7 | Compound scaling, efficient representation | B0: 7 × 7 × 1280 | High accuracy, low parameter count | Model size increases with scale | Autonomous driving, high-resolution classification |
| VGG16/19 | Sequential layers, simple structure | 7 × 7 × 512 | Easy to use, good for transfer learning | Many parameters, slower inference | Feature extraction, transfer learning |

| Model | Backbone (BB) | Pre-Training Data (PTD) | COCO Zeroshot (2017val) | COCO Fine-Tuning (2017val) |
|---|---|---|---|---|
| GroundingDINO T | Swin-T | O365 | 46.7 | 56.9 |
| GroundingDINO T | Swin-T | O365, GoldG | 48.1 | 57.1 |
| GroundingDINO T | Swin-T | O365, GoldG, Cap4M | 48.4 | 57.2 |
| GroundingDINO L | Swin-L | O365, OI, GoldG | 52.5 | 62.6 |

| Model | Backbone (BB) | Pre-Training Data (PTD) | Fine-Tuning (FT) | RefCOCO val | RefCOCO testA | RefCOCO testB | RefCOCO+ val | RefCOCO+ testA | RefCOCO+ testB | RefCOCOg val | RefCOCOg test |
|---|---|---|---|---|---|---|---|---|---|---|---|
| GroundingDINO T | Swin-T | O365, GoldG | No | 50.41 | 57.74 | 43.21 | 51.40 | 57.59 | 45.81 | 67.46 | 67.13 |
| GroundingDINO T | Swin-T | O365, GoldG, RefC | No | 79.98 | 74.88 | 59.29 | 66.81 | 69.91 | 56.09 | 71.06 | 72.07 |
| GroundingDINO T | Swin-T | O365, GoldG, RefC | Yes | 89.19 | 91.86 | 85.99 | 81.09 | 87.40 | 74.71 | 84.15 | 84.94 |
| GroundingDINO L | Swin-L | O365, OI, GoldG, Cap4M, COCO, RefC | Yes | 90.56 | 93.19 | 88.24 | 82.75 | 88.95 | 75.92 | 86.13 | 87.02 |

| Aspect | Zeroshot | Referring |
|---|---|---|
| Purpose | De-identify objects of classes not defined during training | De-identify specific objects referred to by sentences or phrases |
| Input | Image + class name (text) | Image + natural language sentence |
| Output | Masking of the location (bounding box) of objects of the corresponding class | Masking of the location (bounding box) of the object referred to in the sentence |
| Example | De-identify cats in images even though there was no class called “cat” in the training data. | Given the sentence “brown dog sitting on a chair”, de-identify the dog it describes. |

| Algorithm | Model | Target | Type | Pre-Training Data (PTD) | Test: COCO2017 | Test: AI-HUB | Test: RefCOCO |
|---|---|---|---|---|---|---|---|
| OD | YOLOv11x | Person | GC | COCO | 66.2 | - | 68.4 |
| OD | YOLOv11x | Emblem | GC | AI-HUB | - | 48.7 | - |
| OD+FD | YOLOv11x, CenterFace | Face | GC | COCO, WIDERFACE | 64.8 | - | 66.6 |
| OD+FD+FID | YOLOv11x, CenterFace, MobileNetV2 | Face | ISID | COCO, WIDERFACE | - | - | - |
| OD+OC | YOLOv11x, EfficientNet (B0) | Emblem (subclass) | ISID | AI-HUB | - | 42.1 | - |
| ZD | GroundingDINO B | Person | PGC | O365, OI, GoldG, Cap4M, COCO, RefC | 70.4 | - | 71.7 |
| ZD | GroundingDINO B | Face | PGC | O365, OI, GoldG, Cap4M, COCO, RefC | 62.8 | - | 62.1 |
| ZD | GroundingDINO B | Emblem | PGC | O365, OI, GoldG, Cap4M, COCO, RefC | - | 39.4 | - |
| RD | GroundingDINO B | Person | PGID | O365, OI, GoldG, Cap4M, COCO, RefC | - | - | 64.3 |
| RD | GroundingDINO B | Face | PGID | O365, OI, GoldG, Cap4M, COCO, RefC | - | - | 62.3 |

| Model | Role | Resolution | Average Inference Speed (ms) |
|---|---|---|---|
| YOLOv11 | Object detection (Person, Emblem) | 1280 × 720 | 15.14 |
| CenterFace | Face detection | 1280 × 720 | 12.02 |
| MobileNetV2 | Feature extraction | 128 × 128 | 3.32 |
| EfficientNet | Object classification (Emblem) | 128 × 128 | 7.04 |
| GroundingDINO | Zeroshot, referring object detection | 1280 × 720 | 40.18 |
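The per-frame latencies above can be converted into approximate throughput with FPS = 1000 / latency (ms). The short sketch below does this conversion and also sums the stages of a detector cascade. It assumes one frame is processed at a time with no batching, and that cascade stages run sequentially once per frame; both are simplifying assumptions, not measurements from this work.

```python
# Average inference latencies in milliseconds, copied from the table above.
latency_ms = {
    "YOLOv11": 15.14,
    "CenterFace": 12.02,
    "MobileNetV2": 3.32,
    "EfficientNet": 7.04,
    "GroundingDINO": 40.18,
}


def frames_per_second(ms_per_frame: float) -> float:
    """Convert a per-frame latency in milliseconds to frames per second."""
    return 1000.0 / ms_per_frame


throughput = {name: frames_per_second(ms) for name, ms in latency_ms.items()}

# Assumed sequential cascade: object detection followed by face detection,
# each run once per frame.
od_fd_ms = latency_ms["YOLOv11"] + latency_ms["CenterFace"]

for name, fps in throughput.items():
    print(f"{name}: {fps:.1f} FPS")
print(f"OD+FD cascade: {od_fd_ms:.2f} ms per frame")
```

Under these assumptions a single multimodal model is slower per frame than the OD+FD cascade, but it replaces several task-specific stages with one prompt-driven detector.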

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, D.-J. Multimodal-Based Selective De-Identification Framework. Electronics 2025, 14, 3896. https://doi.org/10.3390/electronics14193896