1. Introduction

As a foundational component of natural language processing (NLP), event extraction identifies events and their key participants in unstructured text, supporting higher-level tasks such as semantic retrieval, knowledge graph construction, and automated reasoning [1,2]. Driven by advances in neural architectures and the accumulation of multilingual annotated corpora, event extraction has made significant progress in well-resourced languages, particularly English and Chinese [3,4].

Event extraction usually comprises two core subtasks: event trigger detection (ETD) and event argument extraction (EAE) [5,6]. Event argument extraction identifies the entities participating in an event and their semantic roles, and is therefore central to modeling complete event structures [7]. Compared with trigger detection, argument extraction demands deeper syntactic analysis and semantic comprehension, which makes it especially challenging for languages with scarce resources and for texts with complex structures [8]. This paper therefore focuses on event argument extraction in the Tibetan judicial domain.

The core difficulties of Tibetan judicial event extraction stem from three sources: the limitations of a low-resource language, the particularities of judicial texts, and the shortcomings of existing methods [9,10,11]. Tibetan is a typical low-resource language that lacks large-scale, high-quality annotated corpora, which severely restricts the training and generalization of deep learning models [9,10]. Tibetan judicial texts typically have complex structures, dense legal terminology, and long sentences, all of which make event arguments harder to identify and model. Judicial texts also often interweave multiple roles and events, further complicating analysis. Although sequence labeling methods such as BiLSTM-CRF have achieved promising results in high-resource languages, they perform poorly in the Tibetan judicial domain, mainly because they fail to capture long-distance semantic dependencies and handle sparse arguments and cross-domain transfer poorly [11,12].

Researchers have proposed a variety of solutions to these challenges. Traditional event argument extraction methods mainly adopt classification or sequence labeling paradigms, casting argument extraction as role classification or label assignment [13]. Although such methods perform well when structures are simple and data are plentiful, their performance often drops sharply in low-resource languages because training data are insufficient. More recently, researchers have reformulated event extraction under new paradigms such as generative extraction and question-answering extraction, mitigating data scarcity through a change of task formulation [14,15,16,17]. Among these, methods based on machine reading comprehension (MRC) reduce task complexity and show good transfer and knowledge fusion capabilities, owing to their question-guided mechanism and strengths in semantic modeling [15].

Machine reading comprehension recasts event argument extraction as a series of question–answer pairs [18,19,20]. Carefully designed question templates guide the model toward specific events and their arguments, decomposing the originally complex extraction task [18]. The templates encode prior knowledge of event types and argument roles, giving the model additional semantic information and helping it understand the relationships between events and arguments [19]. The MRC paradigm also transfers well across domains, so a model can first learn from general-domain data and then be applied effectively in low-resource scenarios [20]. These characteristics make MRC-based approaches particularly suitable for event argument extraction in resource-constrained languages such as Tibetan.

Building on this analysis, this paper introduces an MRC-driven approach for extracting event arguments from Tibetan judicial texts and builds a complete technical pipeline covering question template design, extraction model construction, and training strategy optimization. By reformulating the task and fusing prior knowledge into the questions, it offers a more efficient and transferable strategy for event argument extraction in low-resource languages and a reference framework for other low-resource or domain-specific applications.

The main contributions of this study are as follows:

- (1) Designing high-quality question templates for the Tibetan judicial domain that integrate the semantics of event types and their argument roles, combining Tibetan interrogative words with event context to alleviate semantic ambiguity;
- (2) Proposing MRC_TibAE, a framework based on CINO (Chinese Minority Pretrained Language Model) that introduces multi-head self-attention to strengthen semantic interaction and modeling between event sentences and questions;
- (3) Developing a two-stage training strategy for low-resource languages, in which the model is first trained on a general Tibetan MRC dataset to acquire general language understanding and then fine-tuned on domain-specific judicial corpora to improve transferability and extraction performance.

3. Methodology

This paper proposes a framework comprising three interrelated components, building a comprehensive event argument extraction process for Tibetan judicial texts.

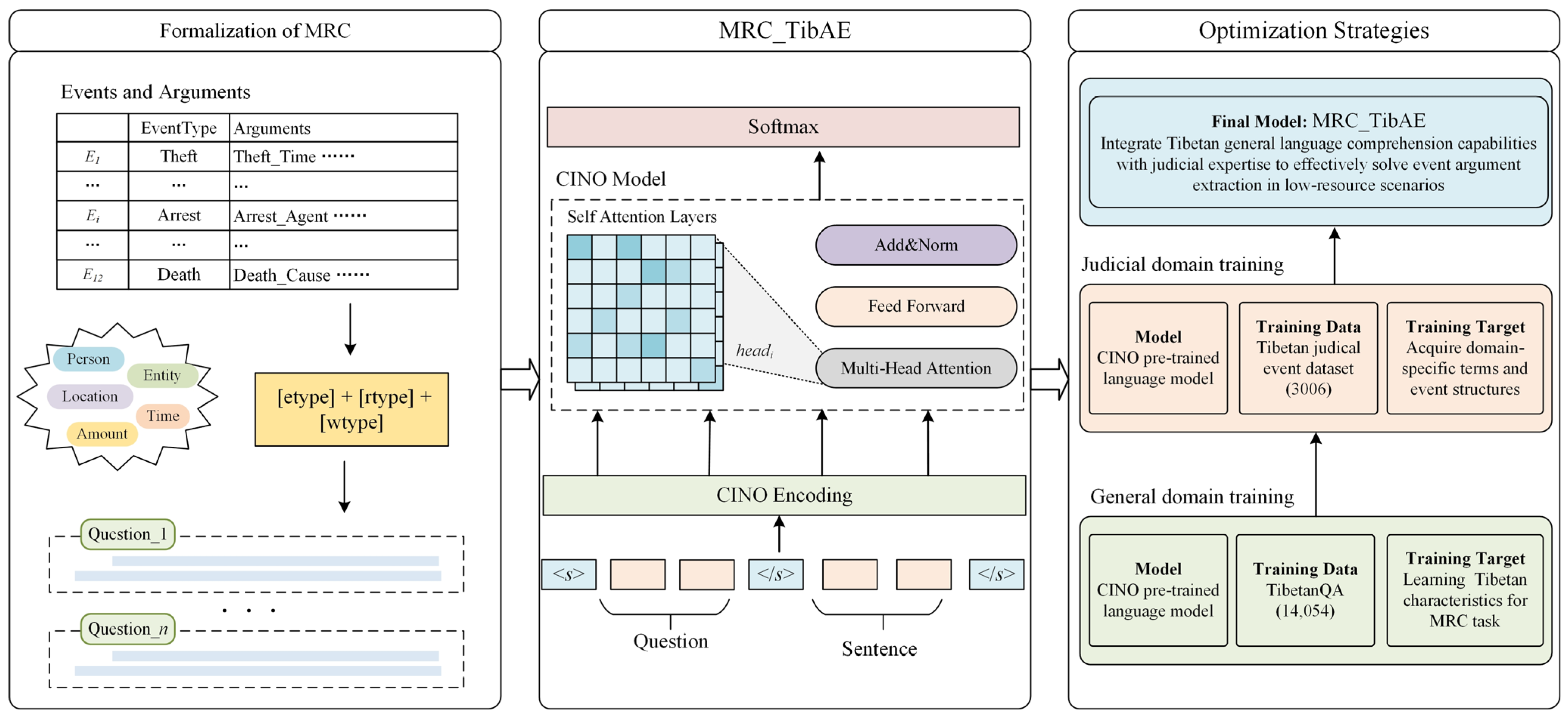

Figure 1 shows that the framework has three main parts: task formalization, the MRC_TibAE architecture, and an optimization strategy for low-resource languages.

The task formalization module transforms the traditional event argument extraction task into structured question-answering problems. Carefully designed question templates explicitly embed event type and argument information, guiding the model toward key event elements. The MRC_TibAE architecture concatenates the question with the event sentence and employs multi-head self-attention to model complex dependencies between them; the resulting features then pass through a feedforward network with residual connections and layer normalization; finally, a Softmax classification layer predicts the start and end positions of the target span in the text. The optimization strategy for low-resource languages addresses data scarcity. The model is first trained on TibetanQA, a general-purpose Tibetan machine reading comprehension dataset, to acquire basic Tibetan comprehension capabilities, and is then fine-tuned on a judicial dataset. This two-stage approach exploits larger general-purpose data to build foundational Tibetan comprehension and then adapts it to the specialized judicial domain.

3.1. Task Formalization

3.1.1. Question Template Design

This study designs a question representation method that integrates event semantic knowledge. By explicitly embedding event type and argument information into query templates, it constructs a set of questions with clear semantic orientation, reducing ambiguity and helping the model locate answers.

Table 1 systematically presents the event argument classification system constructed in this study. This system covers 51 event elements across five categories—person, entity, amount, location, and time—providing structured extraction targets for the machine reading comprehension framework.

To guide the model precisely to event arguments, the structured question templates explicitly instruct it to search for a specific argument role under a given event type. Because each question contains both the event type and the argument role, semantic ambiguity is reduced and the model can locate specific event arguments more reliably. The templates are formalized as shown in Equation (1):

$$Q = \mathrm{Template}(E_{\text{type}}, R_{\text{arg}}, W_{\text{int}}) \tag{1}$$

Here, $Q$ denotes the question template, $E_{\text{type}}$ represents the event type, $R_{\text{arg}}$ represents the argument role, $W_{\text{int}}$ refers to the type of interrogative word, and $\mathrm{Template}(\cdot)$ represents the question template generation function.

The question templates shown in Figure 2 correspond one-to-one with the 51 event arguments listed in Table 1, forming a complete question set for argument extraction that gives the model clear extraction targets and search directions. This structured template system lets the model query event elements in a standardized way, keeping the extraction process consistent across arguments and event types.
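To make Equation (1) concrete, the following Python sketch assembles a question from an event type, an argument role, and an interrogative word. It is an illustration under stated assumptions, not the paper's implementation: the real templates are written in Tibetan, and the English wording and the mapping from the five argument categories of Table 1 to interrogative-word types are hypothetical.

```python
# Hypothetical sketch of Q = Template(E_type, R_arg, W_int) from Equation (1).
# The real templates are in Tibetan; English placeholders are used here.

# Assumed mapping from the five argument categories of Table 1 to an
# interrogative-word type (hypothetical, not taken from the paper).
INTERROGATIVE_BY_CATEGORY = {
    "person": "who",
    "entity": "what",
    "amount": "how much",
    "location": "where",
    "time": "when",
}


def template(event_type: str, role: str, category: str) -> str:
    """Return a question that embeds both the event type and the argument role."""
    w_int = INTERROGATIVE_BY_CATEGORY[category]
    # Naming the event type keeps identically named roles in different
    # event types from producing the same question.
    return f"In the {event_type} event, {w_int} is the {role}?"


question = template("Theft", "stolen item", "entity")
print(question)  # In the Theft event, what is the stolen item?
```

Generating questions from a function rather than writing 51 strings by hand keeps the event type and role slots uniform across all templates.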

3.1.2. Task Reformulation

Traditional event argument extraction methods mainly adopt classification or sequence labeling paradigms. However, such methods are highly data-dependent and generalize poorly in low-resource settings. To address this limitation, this study reformulates event argument extraction as a question-answering task in which each event argument is represented by a corresponding question. Given the question $Q$ and the event sentence $S$, the model predicts the boundaries of the target argument span by learning a mapping function $f$. Formally, the model seeks to capture the following mapping:

$$f(Q, S) \rightarrow (y_s, y_e) \tag{2}$$

Here, $f(\cdot)$ denotes the joint modeling function of the question $Q$ and the event sentence $S$, and $(y_s, y_e)$ are the start and end indices of the target argument span in the original text.

This modeling approach transforms the structured event argument extraction task into an interpretable and easily transferable question-answering matching problem, providing a strong basis for subsequent model architecture design and training optimization.
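The reformulation can be illustrated by expanding one annotated event into one MRC example per argument role. The sketch below rests on assumptions: the record layout (each role mapped to character offsets), the field names, and the `make_question` callback are hypothetical, and a role with no annotated span becomes an unanswerable example whose expected output is an empty result.

```python
from typing import Callable, Dict, List, Optional, Tuple

Span = Tuple[int, int]  # (start, end) character offsets, end exclusive


def to_mrc_examples(sentence: str, event_type: str, roles: List[str],
                    spans: Dict[str, Span],
                    make_question: Callable[[str, str], str]) -> List[dict]:
    """Expand one annotated event into (question, context, answer) triples."""
    examples = []
    for role in roles:
        span: Optional[Span] = spans.get(role)
        answer = None  # unanswerable: the role is absent from this sentence
        if span is not None:
            start, end = span
            answer = {"text": sentence[start:end], "start": start, "end": end}
        examples.append({
            "question": make_question(event_type, role),
            "context": sentence,
            "answer": answer,
        })
    return examples


# Toy English example with hypothetical roles and offsets.
sentence = "Person A stole a phone in Lhasa."
examples = to_mrc_examples(
    sentence, "Theft",
    roles=["perpetrator", "stolen item", "location", "time"],
    spans={"perpetrator": (0, 8), "stolen item": (15, 22), "location": (26, 31)},
    make_question=lambda e, r: f"In the {e} event, what is the {r}?",
)
```

Keeping the gold offsets rather than re-searching for the answer text avoids ambiguity when the same string occurs more than once in a sentence.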

3.2. MRC_TibAE

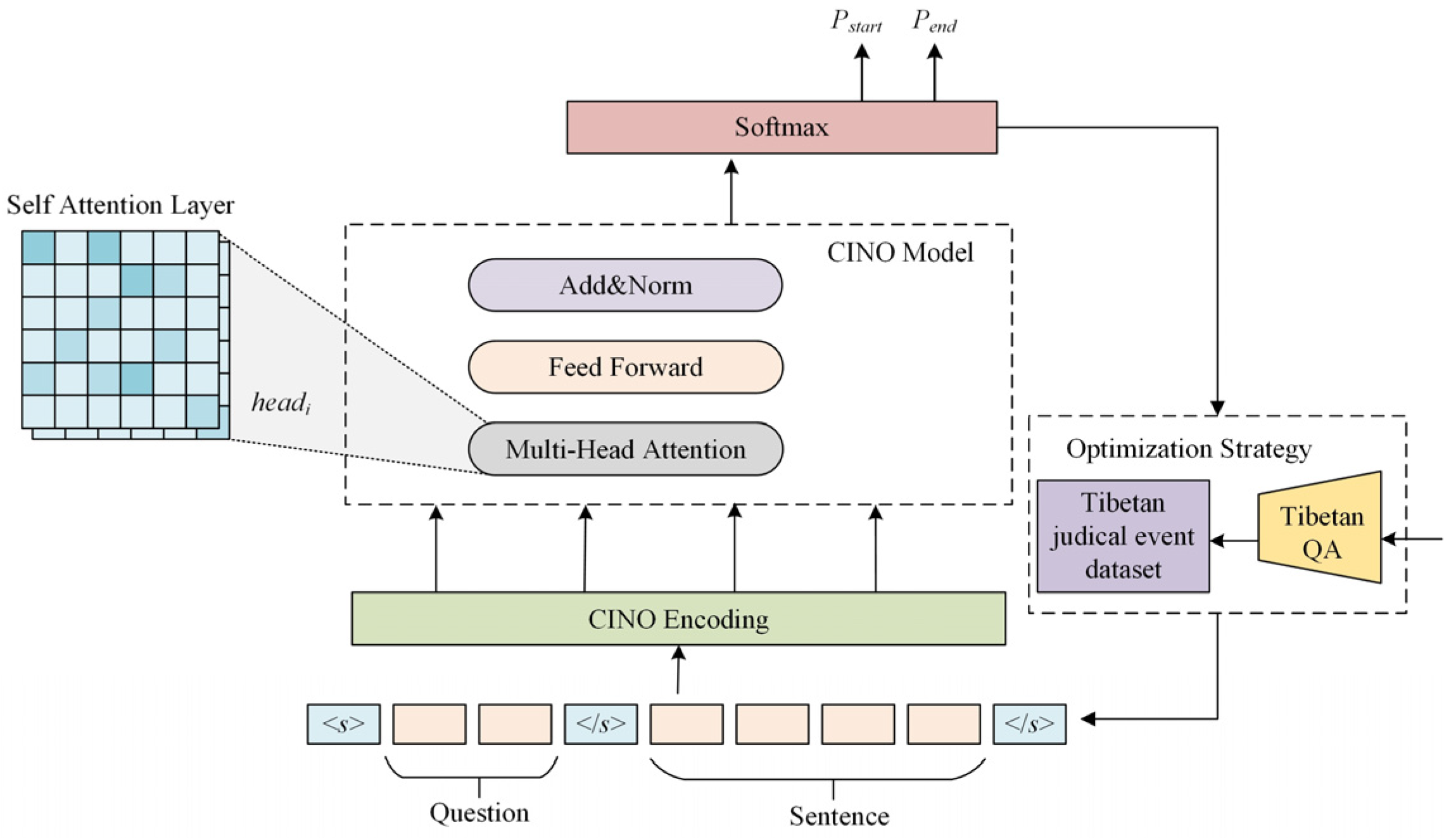

Building on the MRC reformulation, this paper proposes the MRC_TibAE model (Machine Reading Comprehension Model for Tibetan Judicial Event Argument Extraction). Its overall architecture is depicted in Figure 3 and comprises three key components: the encoding layer, the interaction layer, and the prediction layer. The model uses the pretrained minority-language model CINO as its base encoder, applies self-attention to encode the semantic interplay between the question and the event sentence, and finally uses a position prediction module to locate and extract event arguments.

3.2.1. Encoding Layer

The encoding layer converts the Tibetan input sequence into dense vector representations. The input consists of two parts: the event sentence $S = \{s_1, s_2, \ldots, s_n\}$ ($n$ is the length of $S$) and the question $Q = \{q_1, q_2, \ldots, q_m\}$ ($m$ is the length of $Q$). For joint modeling, the two parts are concatenated into a unified input sequence $X$ of the form:

$$X = [\mathrm{CLS}] \oplus Q \oplus [\mathrm{SEP}] \oplus S \oplus [\mathrm{SEP}] \tag{3}$$

where $[\mathrm{CLS}]$ and $[\mathrm{SEP}]$ are the sequence start token and separator token, respectively, and $\oplus$ denotes sequence concatenation. The length of the concatenated input is $n + m + 3$.
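The concatenation and its length can be checked with a small sketch. It places the question before the sentence, the usual order for extractive QA inputs; word-level tokens stand in for CINO's subword tokens, and the special-token strings are placeholders, since CINO's tokenizer defines its own.

```python
from typing import List, Tuple

CLS, SEP = "[CLS]", "[SEP]"  # placeholder strings; CINO's tokenizer defines its own


def build_input(question: List[str], sentence: List[str]) -> Tuple[List[str], List[int]]:
    """Concatenate [CLS] + Q + [SEP] + S + [SEP] and return segment ids.

    Segment id 0 marks [CLS], the question, and the first [SEP];
    segment id 1 marks the event sentence and the final [SEP].
    """
    tokens = [CLS] + question + [SEP] + sentence + [SEP]
    segments = [0] * (len(question) + 2) + [1] * (len(sentence) + 1)
    return tokens, segments


q = ["who", "is", "the", "perpetrator", "?"]   # m = 5
s = ["Person", "A", "stole", "a", "phone"]     # n = 5
tokens, segments = build_input(q, s)
assert len(tokens) == len(q) + len(s) + 3      # n + m + 3 = 13
```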

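The CINO encoder maps the input to three types of embedding, namely token embedding, positional embedding, and segment embedding, which are summed to form the input representation:

$$E = E_{\text{tok}} + E_{\text{pos}} + E_{\text{seg}}, \quad E \in \mathbb{R}^{L \times d} \tag{4}$$

Here, $E_{\text{tok}}$, $E_{\text{pos}}$, and $E_{\text{seg}}$ represent the token, positional, and segment embeddings, respectively; $L$ is the input sequence length, and $d$ is the embedding dimension.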

The CINO model applies multi-layer stacked encoding to the input and produces high-dimensional contextual representations for subsequent processing.
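As a rough illustration of how the three embeddings combine, the NumPy sketch below sums token, positional, and segment lookups element-wise, assuming BERT-style summation. Table sizes are toy values rather than CINO's, the token IDs are arbitrary, and the randomly initialized tables stand in for learned weights.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, max_len, n_segments, d = 100, 16, 2, 8   # toy sizes, not CINO's

# Toy lookup tables standing in for learned embedding matrices.
tok_table = rng.normal(size=(vocab_size, d))
pos_table = rng.normal(size=(max_len, d))
seg_table = rng.normal(size=(n_segments, d))


def embed(token_ids, segment_ids):
    """Sum of token, positional, and segment embeddings; returns shape (L, d)."""
    token_ids = np.asarray(token_ids)
    segment_ids = np.asarray(segment_ids)
    positions = np.arange(len(token_ids))
    # BERT-style encoders apply LayerNorm and dropout after this sum; omitted here.
    return tok_table[token_ids] + pos_table[positions] + seg_table[segment_ids]


E = embed([1, 5, 7, 2, 9, 3, 2], [0, 0, 0, 0, 1, 1, 1])
```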

3.2.2. Interaction Layer

Tibetan judicial texts are structurally complex and information-dense, so the model needs strong context modeling capabilities. To capture the semantic association between questions and event sentences, this study adopts multi-head self-attention to encode interactions between tokens, directing attention toward question-relevant content. Specifically, given the input representation $E$, the model uses $h$ parallel attention heads to capture semantic relationships from different perspectives. The $i$-th self-attention head, $\mathrm{head}_i$, is computed as shown in Equation (5):

$$\mathrm{head}_i = \mathrm{softmax}\left(\frac{(E W_i^{Q})(E W_i^{K})^{\top}}{\sqrt{d_k}}\right) E W_i^{V} \tag{5}$$

Here, $W_i^{Q}, W_i^{K}, W_i^{V} \in \mathbb{R}^{d \times d_k}$ are learnable parameter matrices, and $\sqrt{d_k}$ is the scaling factor of the attention mechanism.

The outputs of all attention heads, $\mathrm{head}_1, \ldots, \mathrm{head}_h$, are concatenated and passed through a linear layer to obtain the fused multi-head representation, as shown in Equation (6):

$$\mathrm{MultiHead}(E) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^{O} \tag{6}$$

Here, $W^{O} \in \mathbb{R}^{h d_k \times d}$ is the output projection matrix, $\mathrm{Concat}(\cdot)$ is the concatenation function, $\mathrm{head}_i$ denotes the output of the $i$-th attention head, and $h$ is the number of attention heads.
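Equations (5) and (6) can be sketched in NumPy as follows. This is a minimal single-sequence illustration with random toy weights, not CINO's internal implementation, which also uses biases, dropout, residual connections, and layer normalization. Because the question and the sentence share one concatenated sequence, every row of each attention matrix mixes question and sentence tokens, which is what makes the sentence encoding question-aware.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(E, W_q, W_k, W_v, W_o):
    """E: (L, d); W_q, W_k, W_v: (h, d, d_k); W_o: (h * d_k, d)."""
    h, _, d_k = W_q.shape
    heads = []
    for i in range(h):
        Q, K, V = E @ W_q[i], E @ W_k[i], E @ W_v[i]       # each (L, d_k)
        A = softmax(Q @ K.T / np.sqrt(d_k), axis=-1)      # (L, L) weights, Eq. (5)
        heads.append(A @ V)                               # head_i: (L, d_k)
    return np.concatenate(heads, axis=-1) @ W_o           # Eq. (6): (L, d)


rng = np.random.default_rng(0)
L, d, h = 6, 8, 2
d_k = d // h
E = rng.normal(size=(L, d))
out = multi_head_self_attention(
    E,
    rng.normal(size=(h, d, d_k)),
    rng.normal(size=(h, d, d_k)),
    rng.normal(size=(h, d, d_k)),
    rng.normal(size=(h * d_k, d)),
)
```

Setting `d_k = d // h` keeps the concatenated output at the model width, so the result can be added back to the input through a residual connection.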

Through multi-head self-attention over the concatenated sequence, the model captures deep semantic associations between the question and the event sentence: each token $s_i$ in the event sentence attends to each token $q_j$ in the question, so the event sentence is encoded in a question-aware manner. After multiple layers of self-attention and feedforward networks, the final hidden representation of the CINO model is taken as $H$:

$$H = \mathrm{CINO}(X) \in \mathbb{R}^{L \times d_h} \tag{7}$$

Here, $H$ is the final semantic interaction representation, $L$ is the input length, and $d_h$ is the hidden size.

3.2.3. Prediction Layer

After obtaining the deep semantic representation $H$, two independent linear classifiers estimate, for each token, the probability that it begins or ends the target argument span:

$$P_{\text{start}} = \mathrm{softmax}(H W_{\text{start}}) \tag{8}$$

where $P_{\text{start}} \in \mathbb{R}^{L}$ gives the probability of each token being the start boundary of the target argument span and $W_{\text{start}} \in \mathbb{R}^{d_h \times 1}$ is a learnable parameter matrix.

$$P_{\text{end}} = \mathrm{softmax}(H W_{\text{end}}) \tag{9}$$

where $P_{\text{end}} \in \mathbb{R}^{L}$ gives the probability of each token being the end boundary of the target argument span and $W_{\text{end}} \in \mathbb{R}^{d_h \times 1}$ is a learnable parameter matrix.
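A minimal NumPy sketch of the two boundary classifiers follows, assuming each is a bias-free linear projection to one logit per token followed by a softmax over sequence positions. The hidden states and weights are random stand-ins, not trained values.

```python
import numpy as np


def softmax(x):
    x = x - x.max()              # numerical stability
    e = np.exp(x)
    return e / e.sum()


rng = np.random.default_rng(1)
L, d_h = 10, 8
H = rng.normal(size=(L, d_h))        # stand-in for the final hidden states H
W_start = rng.normal(size=(d_h,))    # projects each token to one start logit
W_end = rng.normal(size=(d_h,))      # independent projection for end logits

P_start = softmax(H @ W_start)       # distribution over the L positions
P_end = softmax(H @ W_end)
```

Normalizing over positions (rather than applying a per-token sigmoid) makes each boundary a single choice among the L tokens.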

Given these position distributions, all possible start and end position pairs $(i, j)$ are enumerated to construct the candidate span set $C$:

$$C = \{(i, j) \mid 1 \le i \le L,\ 1 \le j \le L\} \tag{10}$$

To keep the extraction results well-formed, candidate spans are screened with three constraints. First, the span length must not exceed a preset maximum. Second, the start position cannot be later than the end position (i.e., $i \le j$). Third, the span must lie entirely within the valid range of the original text. For every candidate span that satisfies these constraints, the joint score of its start and end positions is calculated as shown in Equation (11):

$$\mathrm{score}(i, j) = P_{\text{start}}(i) \cdot P_{\text{end}}(j) \tag{11}$$

where $P_{\text{start}}(i)$ denotes the probability of the $i$-th token being the start of the target span, $P_{\text{end}}(j)$ denotes the probability of the $j$-th token being the end of the target span, and $\mathrm{score}(i, j)$ denotes the joint probability score.

All joint scores are ranked from highest to lowest, the top $N$ candidate spans are kept, and the span with the maximum score is retained, as shown in Equation (12). If every candidate scores below a preset threshold, or no valid candidate survives screening, the model returns an empty result, indicating that no argument is detected for the queried role.

$$(\hat{i}, \hat{j}) = \arg\max_{(i, j) \in C_N} \mathrm{score}(i, j) \tag{12}$$

Here, $C_N$ is the set of the top $N$ valid candidates, and $(\hat{i}, \hat{j})$ is the span predicted by the model, which is mapped back to the original text.
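The screening and selection steps can be sketched in plain Python. The default maximum span length of 64 mirrors the maximum answer length reported in Section 4.4; `top_n`, `threshold`, and the token-index bounds of the event sentence are hypothetical parameters, and mapping token indices back to character offsets (which a real tokenizer's offset mapping would supply) is omitted. The top-N list matters only if candidates are re-ranked later; for a single answer its first element is already the argmax.

```python
from typing import List, Optional, Tuple


def decode_span(p_start: List[float], p_end: List[float],
                context_start: int, context_end: int,
                max_span_len: int = 64, top_n: int = 20,
                threshold: float = 0.0) -> Optional[Tuple[int, int]]:
    """Pick the best (i, j) token span following Eqs. (11)-(12), or None.

    context_start/context_end (inclusive) bound the event-sentence tokens,
    so spans touching the question or special tokens are rejected.
    """
    candidates = []
    for i in range(len(p_start)):
        for j in range(len(p_end)):
            if i > j:                                    # start after end
                continue
            if j - i + 1 > max_span_len:                 # span too long
                continue
            if i < context_start or j > context_end:     # outside the sentence
                continue
            candidates.append((p_start[i] * p_end[j], i, j))  # Eq. (11)
    candidates.sort(reverse=True)
    top = candidates[:top_n]                             # keep the N best
    if not top or top[0][0] < threshold:
        return None                                      # empty result
    _, i, j = top[0]                                     # Eq. (12)
    return i, j


p_start = [0.05, 0.05, 0.70, 0.10, 0.10]
p_end = [0.05, 0.05, 0.10, 0.70, 0.10]
best = decode_span(p_start, p_end, context_start=2, context_end=4)
print(best)  # (2, 3)
```

The exhaustive double loop is quadratic in sequence length; production decoders usually restrict it to the top-k start and end indices first.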

3.3. Optimization Strategies for Low-Resource Languages

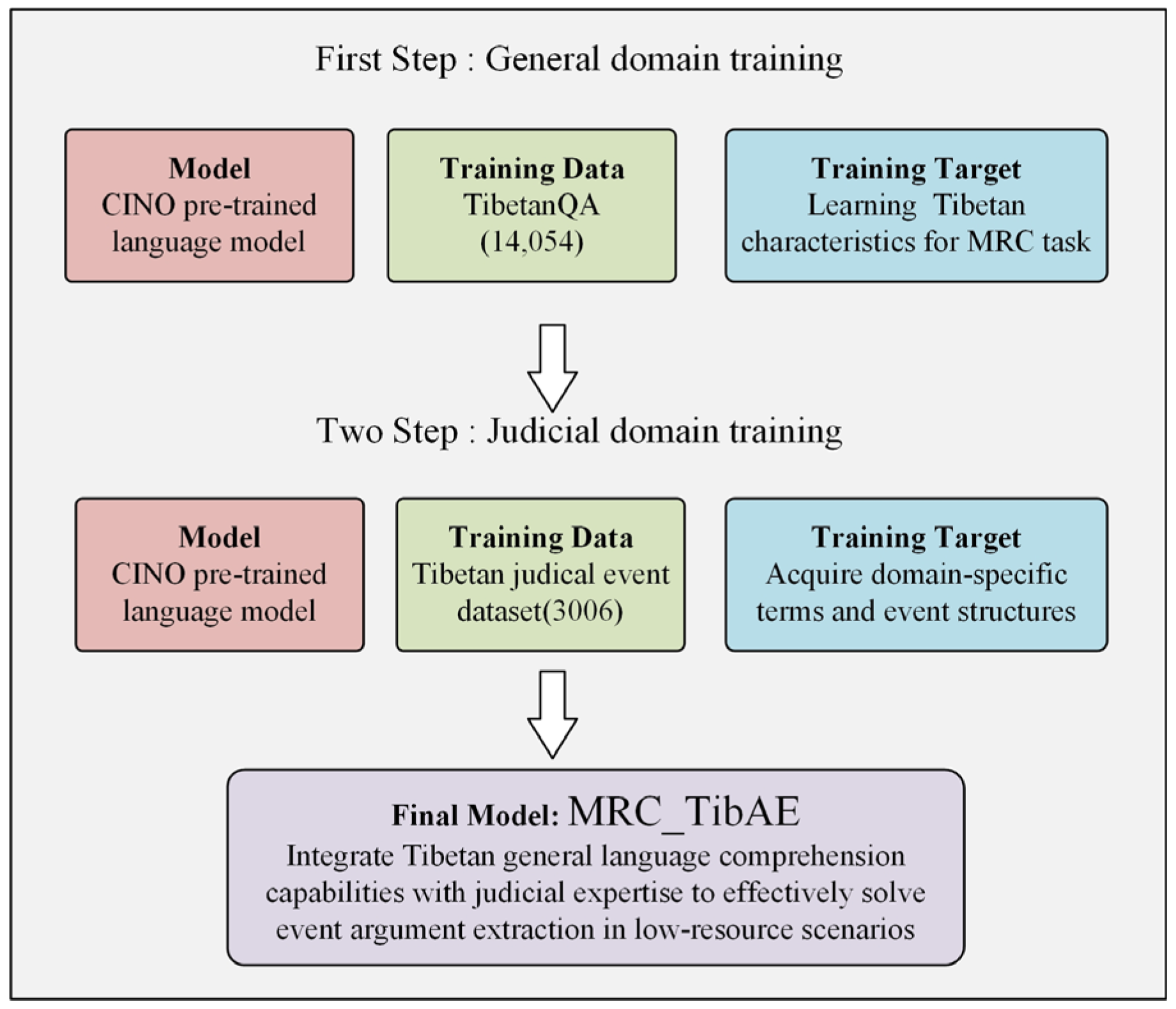

3.3.1. Two-Stage Training Strategy

The complex linguistic structure of Tibetan judicial texts and the severe lack of annotated data make it difficult for models to directly learn effective representations from limited data. To improve the model’s adaptability in this domain, this paper proposes a two-stage strategy. This strategy leverages general domain knowledge to strengthen the model’s language understanding capabilities, then transfers this knowledge to the judicial domain to enhance the model’s ability to learn specialized terminology and event structure.

As shown in Figure 4, the first stage trains the model on the general Tibetan machine reading comprehension dataset TibetanQA, so that it learns general Tibetan language comprehension and question-answering skills and becomes familiar with basic Tibetan grammar, common vocabulary, and syntactic structures. Formally:

$$\theta_1 = \arg\min_{\theta} \mathcal{L}(\theta; D_{\text{QA}}), \quad \theta \text{ initialized from } \theta_0 \tag{13}$$

Here, $\theta_0$ denotes the initial model parameters, $D_{\text{QA}}$ is the Tibetan machine reading comprehension dataset, $\mathcal{L}$ is the loss function, and $\theta_1$ denotes the parameters after general-domain training.

The second stage continues fine-tuning on the Tibetan judicial event dataset, adapting the model to the specific features of Tibetan judicial texts, such as professional terminology, event structures, and syntactic expressions:

$$\theta_2 = \arg\min_{\theta} \mathcal{L}(\theta; D_{\text{jud}}), \quad \theta \text{ initialized from } \theta_1 \tag{14}$$

Here, $\theta_1$ denotes the parameters saved after the first stage, $\theta_2$ the parameters after judicial-domain fine-tuning, and $D_{\text{jud}}$ the Tibetan judicial event dataset. Through fine-tuning on judicial data, the model acquires legal-domain linguistic features, which in turn improves its judicial event extraction performance.

With this two-stage strategy, the model adapts at both the language level and the task level, mitigating the low-resource problem and improving final extraction performance.
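The parameter hand-off between the two stages can be illustrated with a deliberately tiny stand-in model. This sketch is schematic: a NumPy logistic-regression classifier replaces MRC_TibAE, and synthetic "general" and "judicial" datasets replace TibetanQA and the judicial corpus. What it does show is the essential point of the strategy: the second stage starts from the first stage's parameters rather than from the initial ones.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def log_loss(theta, X, y):
    """Mean logistic loss of parameters theta on (X, y)."""
    p = np.clip(sigmoid(X @ theta), 1e-12, 1 - 1e-12)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


def train(theta, X, y, lr=0.1, epochs=200):
    """Full-batch gradient descent on the logistic loss, starting from theta."""
    theta = theta.copy()
    for _ in range(epochs):
        theta -= lr * X.T @ (sigmoid(X @ theta) - y) / len(y)
    return theta


rng = np.random.default_rng(0)
w_general = rng.normal(size=5)
w_judicial = w_general + 0.3 * rng.normal(size=5)   # related but shifted task

# Large synthetic "general" set and small synthetic "judicial" set.
X_gen = rng.normal(size=(2000, 5))
y_gen = (X_gen @ w_general > 0).astype(float)
X_jud = rng.normal(size=(60, 5))
y_jud = (X_jud @ w_judicial > 0).astype(float)

theta_0 = np.zeros(5)                                # initial parameters
theta_1 = train(theta_0, X_gen, y_gen)               # stage 1: general data
theta_2 = train(theta_1, X_jud, y_jud, epochs=50)    # stage 2: starts from theta_1
```

In the real setting, the equivalent step is loading the checkpoint saved after TibetanQA training before fine-tuning on judicial data.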

3.3.2. Loss Function Design

The model uses cross-entropy to quantify the discrepancy between the predicted and true start and end positions of the argument span. In each training iteration, parameters are updated to minimize this loss, bringing the predicted start and end positions closer to the annotated ground truth. Specifically, the objective comprises two parts, $\mathcal{L}_{\text{start}}$ and $\mathcal{L}_{\text{end}}$, the start and end prediction losses, respectively, calculated as follows:

$$\mathcal{L}_{\text{start}} = -\log P_{\text{start}}(y_s) \tag{15}$$

$$\mathcal{L}_{\text{end}} = -\log P_{\text{end}}(y_e) \tag{16}$$

Here, $y_s$ and $y_e$ denote the true start and end positions of the target argument span, and $P_{\text{start}}$ and $P_{\text{end}}$ denote the predicted probability distributions over start and end positions, respectively.

During training, the AdamW optimizer updates the parameters by minimizing the total loss, which combines the losses for the start and end positions of the target argument span:

$$\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{start}} + \mathcal{L}_{\text{end}} \tag{17}$$

Here, $\mathcal{L}_{\text{total}}$ denotes the total loss of the model, and $\mathcal{L}_{\text{start}}$ and $\mathcal{L}_{\text{end}}$ are the cross-entropy losses for the start and end positions of the target argument span.
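A NumPy sketch of the span objective follows. It treats each boundary as a classification over sequence positions (softmax cross-entropy), consistent with the Softmax classification layer described in Section 3, and averages each term over the batch. Summing the start and end terms is an assumption; some implementations, such as the Hugging Face question-answering heads, average them instead, which only rescales the gradient.

```python
import numpy as np


def log_softmax(z):
    z = z - z.max(axis=-1, keepdims=True)      # numerical stability
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def span_loss(start_logits, end_logits, y_start, y_end):
    """Start and end cross-entropy over positions, summed.

    start_logits, end_logits: (batch, L); y_start, y_end: (batch,) gold indices.
    Each term is averaged over the batch.
    """
    rows = np.arange(len(y_start))
    loss_start = -log_softmax(start_logits)[rows, y_start].mean()
    loss_end = -log_softmax(end_logits)[rows, y_end].mean()
    return loss_start + loss_end


# With uniform logits over L = 4 positions, each term equals log(4).
loss = span_loss(np.zeros((2, 4)), np.zeros((2, 4)),
                 np.array([0, 1]), np.array([2, 3]))
```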

4. Experiments

4.1. Experimental Environment Configuration

The experiments use the CINO minority-language pretrained model [39], whose hidden size is 768. All experiments run on a workstation with two Tesla P40 GPUs, each with 24 GB of memory. The software environment comprises Python 3.8, PyTorch 2.4.1 (CUDA 11.8), and Transformers 4.46.0. This configuration provides sufficient memory and compute for fine-tuning and inference with pretrained language models on long-sequence inputs, meeting the computational requirements of the Tibetan judicial event extraction task.

4.2. Datasets

The experiments use two Tibetan datasets: TibetanQA, a general-domain MRC dataset [27,28], and a judicial-domain event extraction dataset [12].

- (1) TibetanQA: Released by Sun Yuan et al., this dataset contains 14,054 Tibetan samples covering 12 topics, such as nature, culture, and education, and supports question types including word matching, synonym substitution, and multi-sentence reasoning. The raw data are (article, question, answer) triples. During preprocessing, regular expressions are used to locate each answer in its article and label its position. Ninety percent of the data is used for training and the remaining 10% for validation.
- (2) Judicial event dataset: Constructed from publicly available Tibetan judicial documents on China Judgments Online, this dataset contains 3006 Tibetan judicial event records covering 12 event types and 51 associated event arguments, with all personal names anonymized to protect privacy. To better suit the requirements of this study, the original event and argument labels were revised.

Table 2 summarizes the revised event types and their associated argument roles. The dataset is divided into training, validation, and test sets at an 8:1:1 ratio using stratified sampling. To adapt the data to the MRC task format, the original annotations are converted into standardized (question, context, answer) triples while preserving the original positions of the event argument spans, ensuring compatibility with model training and inference.
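A stratified 8:1:1 split can be sketched with the standard library alone. This is an illustration under assumptions: each record is taken to carry a single event type used as the stratum (records mixing several event types would need a different key), and the record layout and function names are hypothetical. Within each event type, the records are shuffled with a fixed seed and cut at 80% and 90%.

```python
import random
from collections import defaultdict
from typing import Callable, List, Sequence, Tuple


def stratified_split(records: Sequence[dict], key: Callable[[dict], str],
                     ratios=(0.8, 0.1, 0.1), seed: int = 42
                     ) -> Tuple[List[dict], List[dict], List[dict]]:
    """Split records 8:1:1 within each stratum (here, the event type)."""
    by_class = defaultdict(list)
    for r in records:
        by_class[key(r)].append(r)
    rng = random.Random(seed)
    train, val, test = [], [], []
    for cls in sorted(by_class):                 # fixed order for reproducibility
        items = list(by_class[cls])
        rng.shuffle(items)
        n_train = int(len(items) * ratios[0])
        n_val = int(len(items) * ratios[1])
        train += items[:n_train]
        val += items[n_train:n_train + n_val]
        test += items[n_train + n_val:]          # remainder goes to test
    return train, val, test


# Toy records: 50 per event type.
records = [{"id": i, "event_type": "Theft" if i % 2 else "Drunk Driving"} for i in range(100)]
train, val, test = stratified_split(records, key=lambda r: r["event_type"])
```

Changing `seed` yields a different but equally stratified partition, which is one way to produce several independent splits.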

4.3. Evaluation Metrics

This paper uses precision, recall, and F1-score as evaluation metrics, computed with micro-averaging, as given in Equations (18)–(20):

$$P = \frac{TP}{TP + FP} \tag{18}$$

$$R = \frac{TP}{TP + FN} \tag{19}$$

$$F_1 = \frac{2 \times P \times R}{P + R} \tag{20}$$

Here, TP (true positives) is the number of correctly predicted arguments, FP (false positives) the number of incorrect predictions, and FN (false negatives) the number of gold arguments the model failed to identify. For argument boundary determination, this paper adopts a relaxed matching strategy [40,41]: an extracted argument span is considered correct when it substantially overlaps the gold-standard span.
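Micro-averaged scoring with relaxed matching can be sketched as follows. The overlap criterion here, intersection-over-union of at least 0.5, is a hypothetical choice, since the exact threshold is not specified; each list position represents one (sentence, question) pair, so a match also implies the same event type and argument role, and each gold span can be matched at most once.

```python
from typing import List, Tuple

Span = Tuple[int, int]  # (start, end) character offsets, end exclusive


def overlap_iou(pred: Span, gold: Span) -> float:
    """Intersection-over-union of two character spans."""
    inter = max(0, min(pred[1], gold[1]) - max(pred[0], gold[0]))
    union = (pred[1] - pred[0]) + (gold[1] - gold[0]) - inter
    return inter / union if union > 0 else 0.0


def micro_prf(pred_sets: List[List[Span]], gold_sets: List[List[Span]],
              min_iou: float = 0.5) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall, and F1 with relaxed span matching."""
    tp = fp = fn = 0
    for preds, golds in zip(pred_sets, gold_sets):
        unmatched = list(golds)
        for p in preds:
            match = next((g for g in unmatched if overlap_iou(p, g) >= min_iou), None)
            if match is None:
                fp += 1
            else:
                tp += 1
                unmatched.remove(match)   # each gold span can be matched once
        fn += len(unmatched)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Exact match, partial overlap (IoU 5/7), a missed argument, a spurious one.
prf = micro_prf([[(0, 8)], [(15, 22)], [], [(10, 12)]],
                [[(0, 8)], [(17, 22)], [(26, 31)], []])
```

Micro-averaging pools TP, FP, and FN across all questions before dividing, so frequent argument roles weigh more than rare ones.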

4.4. Experiment and Results

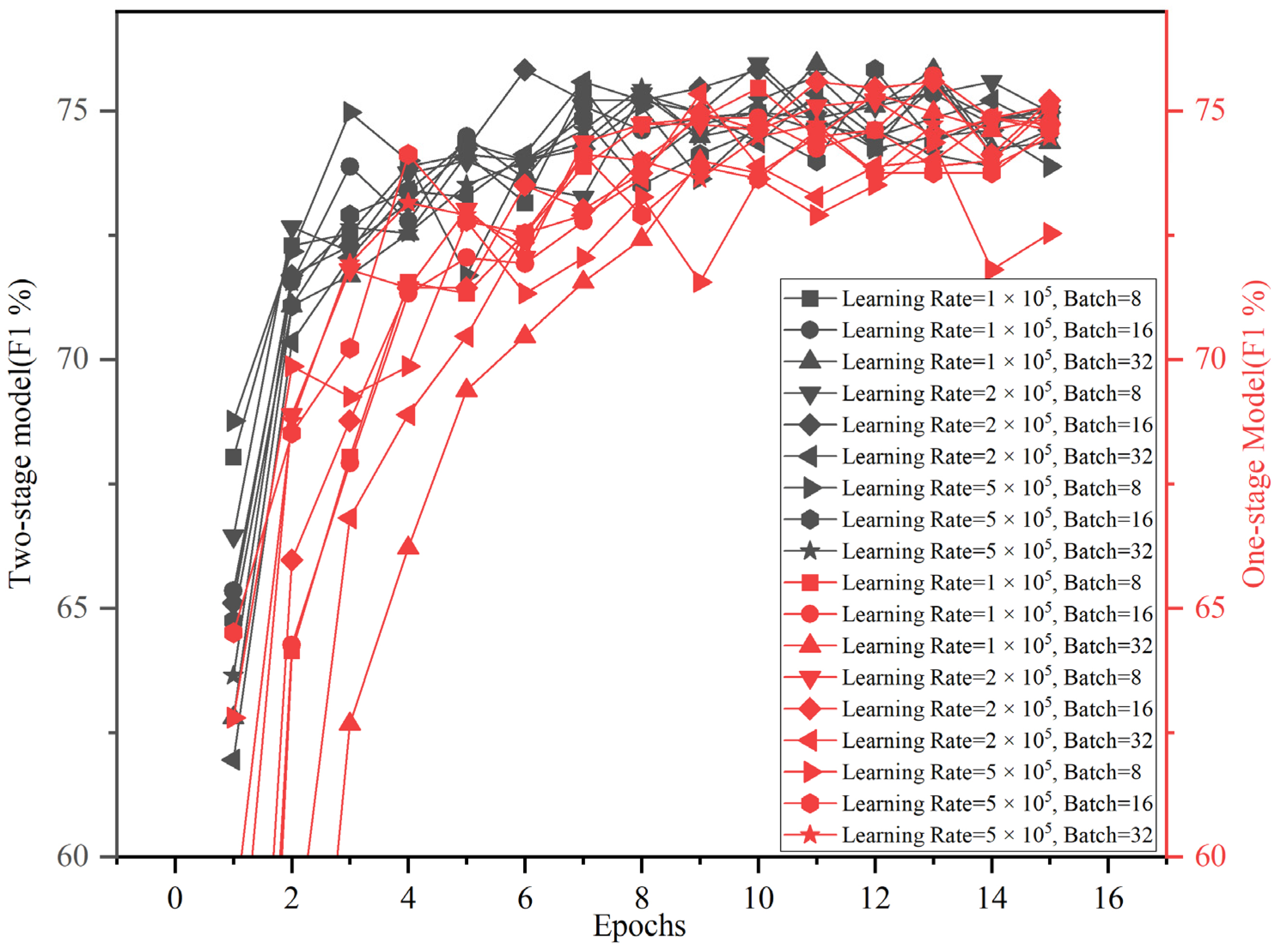

This paper uses grid search to optimize key hyperparameters. The learning rate search space is [1 × 10⁻⁵, 2 × 10⁻⁵, 5 × 10⁻⁵] and the batch size search space is [8, 16, 32]; all combinations are evaluated exhaustively. Training is limited to 15 epochs, with early stopping to prevent overfitting: the patience is 4 epochs, so training stops after four consecutive epochs without improvement on the validation set.
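The patience rule can be stated precisely in a few lines. In this sketch, `run_epoch` is a hypothetical callback that trains one epoch and returns validation F1, and treating "improvement" as a strict increase is an assumption. The defaults match the 15-epoch limit and patience of 4 described above.

```python
from typing import Callable, Tuple


def train_with_early_stopping(run_epoch: Callable[[int], float],
                              max_epochs: int = 15,
                              patience: int = 4) -> Tuple[float, int, int]:
    """Run up to max_epochs; stop after `patience` epochs without improvement.

    Returns (best_f1, best_epoch, epochs_run).
    """
    best_f1, best_epoch, bad_epochs, epochs_run = float("-inf"), -1, 0, 0
    for epoch in range(max_epochs):
        f1 = run_epoch(epoch)
        epochs_run += 1
        if f1 > best_f1:
            best_f1, best_epoch, bad_epochs = f1, epoch, 0  # checkpoint would be saved here
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                break
    return best_f1, best_epoch, epochs_run


# Scripted validation scores: the best (0.74) comes at epoch 2, followed by
# four epochs without improvement, so training stops after 7 epochs.
scores = [0.60, 0.70, 0.74, 0.73, 0.74, 0.72, 0.71, 0.75]
result = train_with_early_stopping(lambda e: scores[e])
```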

The final hyperparameter configuration is summarized in Table 3: a batch size of 8, a learning rate of 2 × 10⁻⁵, a maximum sequence length of 400, and a document stride of 128. The maximum question length and maximum answer length are both limited to 64, and the optimizer is AdamW.

This study ran experiments on five random partitions of the dataset with five random seeds per partition, for a total of 25 runs. The proposed model attains an average F1-score of 0.7659, with a standard deviation of 0.0101 and a 95% confidence interval of [0.7617, 0.7701]; the results are tightly concentrated, indicating good stability and reliability. Across partitions, the best result was obtained on Dataset 2, with an average F1-score of 0.7816, while Datasets 1 and 4 scored lower but remained within a reasonable range. Although results differ somewhat between groups, they remain consistently high, supporting the robustness of the proposed method under different data conditions.
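The reported interval can be reproduced if it is a Student-t interval over the 25 runs, an assumption since the construction is not stated: the half-width is the critical value times the standard deviation divided by the square root of n. The critical value 2.0639 for 24 degrees of freedom is hard-coded here; `scipy.stats.t.ppf(0.975, 24)` returns the same value. The same construction is also consistent with the per-event intervals in Section 5.3.

```python
import math

# Two-sided 95% Student-t critical value for n - 1 = 24 degrees of freedom.
T_CRIT_DF24 = 2.0639


def t_confidence_interval(mean: float, sd: float, n: int, t_crit: float = T_CRIT_DF24):
    """Return (low, high) = mean -/+ t_crit * sd / sqrt(n)."""
    half_width = t_crit * sd / math.sqrt(n)
    return mean - half_width, mean + half_width


low, high = t_confidence_interval(0.7659, 0.0101, 25)
print(f"[{low:.4f}, {high:.4f}]")  # [0.7617, 0.7701]

# Same check for "Drunk Driving" (variance 0.0009, so sd = 0.03).
dd_low, dd_high = t_confidence_interval(0.9050, math.sqrt(0.0009), 25)
```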

5. Discussion

5.1. Model Effectiveness Analysis

5.1.1. Impact of Hyperparameter Settings

To determine the optimal hyperparameter configuration, we systematically tested combinations of learning rates [1 × 10⁻⁵, 2 × 10⁻⁵, 5 × 10⁻⁵] and batch sizes [8, 16, 32], training for 15 epochs. Figure 5 shows the model's performance under each learning rate and batch size combination.

The results show that hyperparameter choices strongly affect convergence speed and final performance. Too low a learning rate leads to slow convergence, making it difficult to reach the optimum within the epoch budget. Too high a learning rate yields rapid initial improvement but is accompanied by large fluctuations and instability. Overall, a learning rate of 2 × 10⁻⁵ combined with a batch size of 8 achieves the best balance among convergence speed, stability, and final performance, so this configuration was adopted for all subsequent experiments.

5.1.2. The Performance of the Two-Stage Training Strategy

To validate the effectiveness of the two-stage training strategy, this paper compared a single-stage approach (training directly on judicial domain data) with a two-stage approach (first training on the general Tibetan MRC dataset and then fine-tuning on judicial domain data).

The experimental results, presented in Figure 5, show that the two-stage approach has clear advantages in this low-resource scenario. Compared with the single-stage approach, it improves faster in the early stages of training and remains more stable in the later stages. This suggests that prior training on the general Tibetan MRC dataset lets the model acquire transferable language understanding and question-answering patterns, laying a solid foundation for subsequent fine-tuning in the judicial domain. The single-stage approach also converges gradually, but it lags markedly in the early stages and reaches a lower ceiling later. This indicates that, under data-scarce conditions, the single-stage approach struggles to capture semantic regularities fully, which limits generalization. By transferring existing linguistic and semantic knowledge, the two-stage approach alleviates the performance bottleneck caused by limited data.

5.1.3. Impact Analysis of Question Template Design

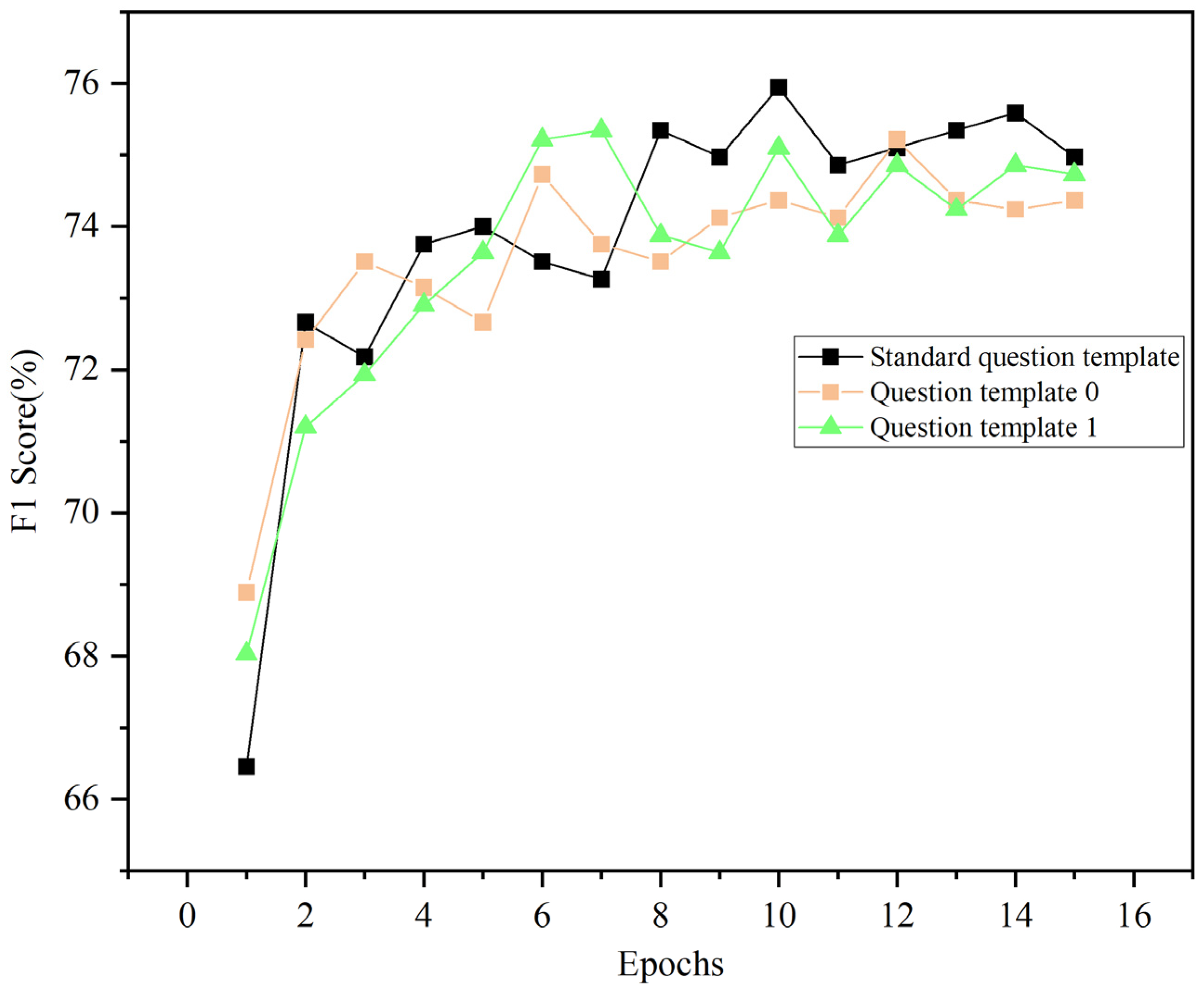

This paper designed three different question templates for comparative experiments.

Figure 6 shows the performance of the three question templates. Question Template 0 only contains event argument role information, Question Template 1 adds interrogative words and uses natural language questioning, and the standard question template is the template proposed in this paper.

Template 0 provides basic extraction guidance, but its expression is overly brief and lacks semantic context, which easily confuses the model when roles are similar. Template 1 makes questions more readable and natural, helping the model align questions with sentences, but its semantic guidance is still insufficient. In contrast, the proposed template also incorporates event semantic information, making questions more targeted and discriminative. The experimental curves show that the proposed template achieves the best final results and the most stable training.

5.2. Model Stability and Generalization Ability Evaluation

Using the optimal hyperparameter configuration, we evaluated the model with repeated random splits across multiple data partitions and random seeds. Specifically, the dataset was randomly partitioned five times at an 8:1:1 ratio, yielding five independent splits, and five random seeds (42, 123, 456, 789, and 1024) were used for each, giving 25 independent runs to verify the consistency and robustness of the results.

Figure 7 shows the performance distribution across data partitions. Overall, the model is stable across all partitions, with mean F1-scores ranging from 75.85% to 78.16%. Medians vary little between partitions, and most results are tightly concentrated. Runs under different random seeds show no extreme outliers. Although subtle differences persist between some partitions, the overall variation remains small, with no significant deviations or abnormal fluctuations. This indicates that the method's performance is robust to the choice of data partition and random seed in this low-resource setting.

5.3. Performance Analysis at Event Type Granularity

To analyze different event types more closely, we conducted a fine-grained evaluation on the 12 event categories in the dataset, using the same setup as in Section 5.2 (25 independent runs over five data partitions and five random seeds) to ensure statistical reliability. The results for each event type are shown in Table 4 and Figure 8.

Performance varies considerably across event types. “Drunk Driving” achieved the highest performance, with an average F1-score of 0.9050 (95% CI: [0.893, 0.917]) and a variance of only 0.0009, demonstrating excellent accuracy and stability. This is likely because these events have relatively simple argument structures and highly formulaic descriptions in judicial texts. “Appraisal” was also stable (F1 = 0.8770, 95% CI: [0.866, 0.888]), with a standard deviation of only 0.0269, which may reflect the procedural and standardized nature of such texts. In contrast, “Purchase” (F1 = 0.6479, 95% CI: [0.610, 0.686]) and “Traffic Accident” (F1 = 0.6800, 95% CI: [0.649, 0.711]) had both lower means and wider confidence intervals, indicating substantial uncertainty. This suggests that the argument relationships of such events are complex and their contexts diverse, posing greater challenges for the model.

In terms of stability, events such as “Drunk Driving” and “Theft” show very low variance (≤ 0.001), indicating consistent and robust outcomes. In contrast, performance on events such as “Intentional Injury” and “Purchase” is more dispersed, indicating that the model is more sensitive to data partitioning and random factors for these events.

5.4. Data Scale Sensitivity Analysis in Low-Resource Scenarios

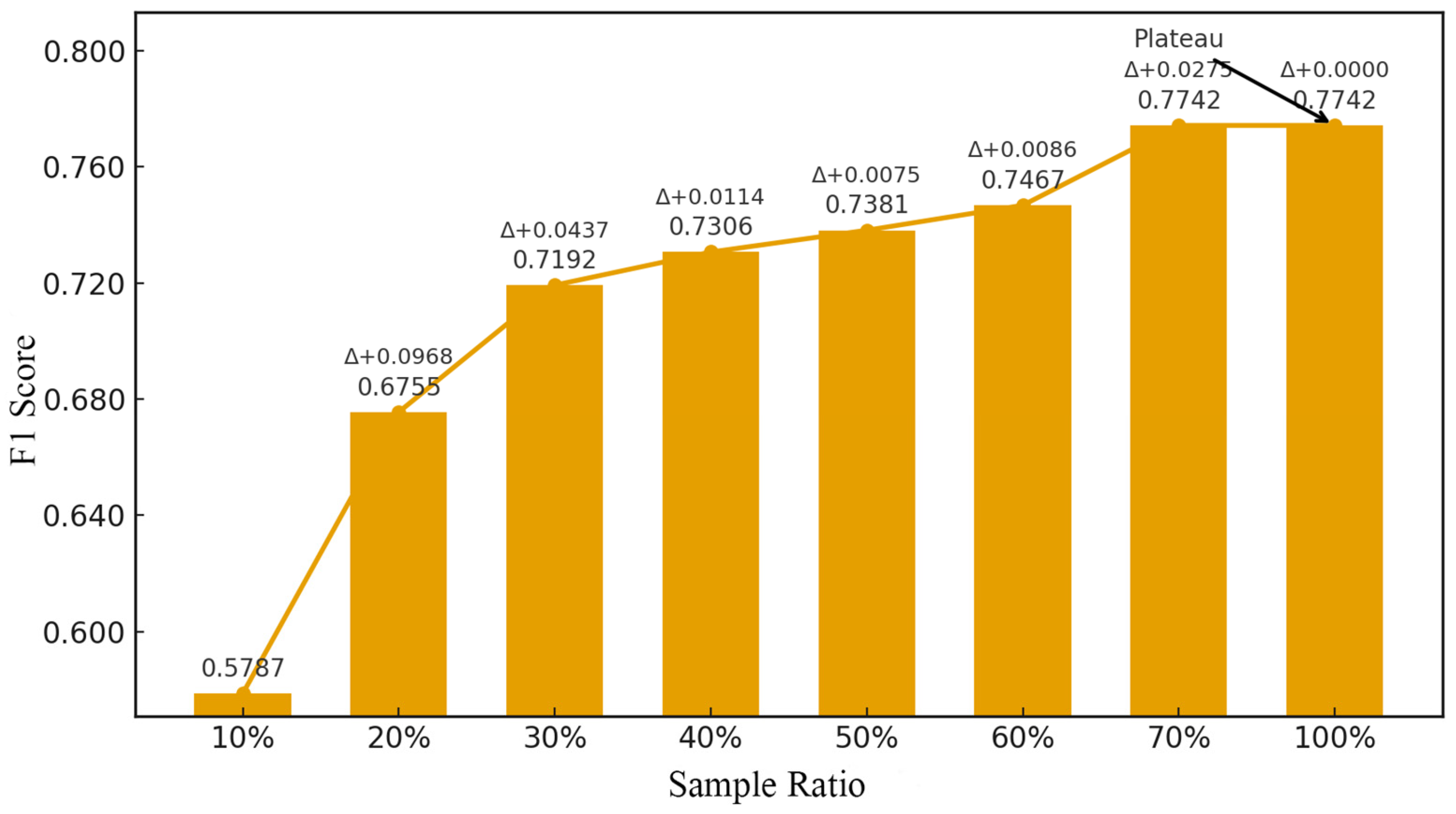

To examine the adaptability of the proposed method in low-resource settings, experiments were performed on training sets randomly sampled at different ratios: 10%, 20%, 30%, 40%, 50%, 60%, 70%, and 100%.

Figure 9 presents the experimental results.

The results show that the model improves steadily as the data size increases, but the rate of improvement follows a distinct phased pattern. At very low data ratios (e.g., 10%), the F1-score is only 0.5787, indicating that the model cannot fully learn event semantic patterns when data are insufficient. When the ratio increases to 20%, performance improves rapidly, with the F1-score rising to approximately 0.6755, a gain of nearly 10 percentage points. Performance continues to rise quickly up to about 40% of the data and then stabilizes: at medium and high ratios the marginal gains shrink, and performance plateaus as the training set approaches its full size. This trend indicates that the proposed method remains effective with limited data, but that further improvement will require additional knowledge sources.

We conducted a qualitative analysis of typical error cases and found that errors occurred primarily in scenarios with ambiguous semantics and unclear argument boundaries. In some judicial texts, different argument roles were expressed in highly similar ways, which led the model to confuse them. The model also struggled to determine the appropriate extraction granularity for complex location and time expressions. In addition, low-frequency or non-standard expressions weakened the model’s generalization ability, resulting in poor recognition of rare arguments.
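The error types described above (role confusion, boundary and granularity errors, missed rare arguments) can be counted automatically once predictions and gold annotations are available as labeled spans. The sketch below is a hypothetical taxonomy, not the authors’ procedure: it assumes arguments are (role, start, end) spans with exclusive end offsets, and the role names in the example are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Arg:
    role: str
    start: int  # inclusive offset
    end: int    # exclusive offset


def overlaps(a, b):
    """True if two half-open spans share at least one position."""
    return a.start < b.end and b.start < a.end


def classify(pred, gold_args):
    """Assign one predicted argument to a coarse (hypothetical) error category."""
    for g in gold_args:
        if (g.start, g.end) == (pred.start, pred.end):
            return "correct" if g.role == pred.role else "role_confusion"
    for g in gold_args:
        if overlaps(pred, g):
            return "boundary_error" if g.role == pred.role else "role_and_boundary"
    return "spurious"


def missed(preds, gold_args):
    """Gold arguments that no prediction overlaps at all."""
    return [g for g in gold_args if not any(overlaps(p, g) for p in preds)]


# Toy example with hypothetical role names and offsets.
gold = [Arg("Time", 0, 10), Arg("Victim", 20, 25)]
preds = [Arg("Time", 0, 6), Arg("Defendant", 20, 25)]
print([classify(p, gold) for p in preds])  # ['boundary_error', 'role_confusion']
print(missed(preds, gold))                 # []
```

Checking exact span matches before overlaps keeps a correctly bounded span with the wrong role separate from a granularity error on the right role, which mirrors the two failure modes discussed in the text.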

We also compare our model with existing models. As presented in Table 5, MRC_TibAE achieves an F1-score of 0.7659, outperforming both sequence tagging methods such as TJEE and prompt-based methods built on large language models such as GPT-4o and DeepSeek-V3. This demonstrates the effectiveness of formulating argument extraction as a question-answering task. However, compared with the BERT_AC model, which also employs a question-answering framework and achieves 0.8840, the proposed method still shows a performance gap. We attribute this gap primarily to differences in linguistic characteristics: although Tibetan, like Chinese, belongs to the Sino-Tibetan language family, it is more complex in terms of lexical agglutination, word-order flexibility, and morphological variation. These properties make semantic representation and argument boundary identification more difficult for models. Furthermore, because Tibetan is a low-resource language, the limited availability of Tibetan pre-training corpora prevents models such as CINO from fully capturing its deep semantic characteristics, which in turn reduces the accuracy of question–answer matching. Nevertheless, MRC_TibAE achieves competitive performance in the Tibetan judicial domain and offers a feasible technical path for event extraction in low-resource languages.
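To illustrate what formulating argument extraction as question answering involves, the following sketch asks one question per argument role and decodes the highest-scoring answer span from per-token start and end scores. Everything here is an assumption for illustration: the English template stands in for the paper’s question templates, `score_fn` is a hypothetical stand-in for the CINO-based reader, and the maximum span length and the score threshold used as a simple no-answer rule are not taken from the paper.

```python
def build_question(event_type, role, trigger):
    """Hypothetical English template; the paper's actual templates are not reproduced."""
    return f"What is the {role} of the {event_type} event triggered by '{trigger}'?"


def best_span(start_scores, end_scores, max_len=30):
    """Return (s, e) maximizing start_scores[s] + end_scores[e] with s <= e < s + max_len,
    or None when there are no tokens."""
    best, best_score = None, float("-inf")
    n = len(start_scores)
    for s in range(n):
        for e in range(s, min(n, s + max_len)):
            score = start_scores[s] + end_scores[e]
            if score > best_score:
                best, best_score = (s, e), score
    return best


def extract_arguments(event_type, trigger, roles, tokens, score_fn, threshold=0.0):
    """Ask one question per role; keep a span only if its score exceeds the threshold."""
    results = {}
    for role in roles:
        question = build_question(event_type, role, trigger)
        start, end = score_fn(question, tokens)  # per-token scores from the reader
        span = best_span(start, end)
        if span is not None and start[span[0]] + end[span[1]] > threshold:
            results[role] = " ".join(tokens[span[0]: span[1] + 1])
    return results


# Toy reader (hypothetical): favors "Monday" for time questions, "Tashi" otherwise.
def toy_scorer(question, tokens):
    target = "Monday" if "Time" in question else "Tashi"
    scores = [1.0 if t == target else -1.0 for t in tokens]
    return scores, list(scores)


tokens = ["the", "defendant", "Tashi", "drove", "on", "Monday"]
print(extract_arguments("Drunk Driving", "drove", ["Time", "Defendant"], tokens, toy_scorer))
# {'Time': 'Monday', 'Defendant': 'Tashi'}
```

Because each role gets its own question, the reader can condition on the event type and role name, which is the intuition behind embedding event semantic information in the templates; the cost is one forward pass per role.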

6. Conclusions

This paper proposes an MRC-based method for Tibetan judicial event argument extraction that addresses data scarcity and limited model generalization in low-resource language scenarios. By designing question templates that incorporate event semantic information, building a deep semantic understanding architecture on CINO, and adopting a two-stage training strategy, we convert event argument extraction into a question-answering task. MRC_TibAE achieves an F1-score of 76.59% for extracting arguments from Tibetan judicial events and shows adequate stability and robustness across multiple data partitions and random seeds. Analysis across event types shows that the model performs well on most of them, such as drunk driving, although complex events remain challenging. Data sensitivity analysis confirms that the approach remains effective with small-scale data, maintaining stable performance with as little as 40% of the training data.

Future research will extend this work to more low-resource languages and domain scenarios and introduce multimodal data such as images and speech to enhance the model’s event understanding capabilities in multi-source data environments. Additionally, we will actively promote collaboration with judicial departments to conduct application validation of the proposed model. Against the backdrop of national efforts to promote information technology development in ethnic-minority regions, this research not only provides technical support for the preservation and development of languages such as Tibetan but also has practical significance for improving the efficiency and intelligence of judicial processing in ethnic-minority areas.