Surrogate-Assisted Evolutionary Multi-Objective Antenna Design

Abstract

1. Introduction

2. Background and Motivation

2.1. Yagi Antenna Design

2.2. Multi-Problem Surrogates

3. Methodology

3.1. Multiobjective Antenna Design

3.2. General Framework of MPS

Algorithm 1 MPS model construction

Require: Source model set, training set, training size

Ensure: MPS model
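The inputs and output listed for Algorithm 1 can be illustrated with a deliberately simplified sketch. It is not the construction used in the paper, whose steps are not given here: it assumes each source model is a plain callable and weights the models by the inverse of their mean squared error on the target training set. The function name `build_weighted_ensemble`, the toy source models, and the training points are all hypothetical, and the training size is simply the length of the training lists.

```python
from typing import Callable, List, Sequence, Tuple

Model = Callable[[Sequence[float]], float]

def build_weighted_ensemble(
    source_models: List[Model],
    train_x: List[Sequence[float]],
    train_y: List[float],
) -> Tuple[Model, List[float]]:
    """Combine source models with weights proportional to 1/MSE on the training set.

    Assumption: inverse-error weighting stands in for whatever combination
    rule the paper's MPS construction actually uses.
    """
    eps = 1e-12  # avoids division by zero if a source model fits the data exactly
    inv_mse = []
    for model in source_models:
        mse = sum((model(x) - y) ** 2 for x, y in zip(train_x, train_y)) / len(train_y)
        inv_mse.append(1.0 / (mse + eps))
    total = sum(inv_mse)
    weights = [w / total for w in inv_mse]

    def ensemble(x: Sequence[float]) -> float:
        # Weighted sum of the source model predictions at x.
        return sum(w * m(x) for w, m in zip(weights, source_models))

    return ensemble, weights

# Toy target f(x) = x^2 with one similar and one dissimilar source model (hypothetical).
sources = [lambda x: x[0] ** 2 + 0.1, lambda x: -x[0]]
train_x = [[0.0], [0.5], [1.0]]
train_y = [x[0] ** 2 for x in train_x]

ensemble, weights = build_weighted_ensemble(sources, train_x, train_y)
print(weights)          # the similar source model receives almost all of the weight
print(ensemble([0.5]))  # prediction of the combined model at x = 0.5
```

In this toy setup the source model that resembles the target dominates the combination, which is the intuition behind reusing related source models when target evaluations are scarce.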

3.3. Multi-Problem Surrogate Algorithm for Multi-Objective Antenna Design

Algorithm 2 Surrogate-assisted

Require: Source models

Ensure: Nondominated solutions
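Algorithm 2 returns a set of nondominated solutions. As a reminder of what that set means, the following is a minimal sketch of a Pareto filter. It assumes every objective is minimized, which the text here does not state, and the function names and sample points are hypothetical rather than taken from the paper.

```python
from typing import List, Sequence

def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b in every objective and strictly better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def nondominated(points: List[Sequence[float]]) -> List[Sequence[float]]:
    """Keep the points that no other point dominates (simple O(n^2) pairwise check)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]

# Hypothetical two-objective values, not data from the paper.
candidates = [(1.0, 4.0), (2.0, 2.0), (3.0, 3.0), (4.0, 1.0)]
front = nondominated(candidates)
print(front)  # [(1.0, 4.0), (2.0, 2.0), (4.0, 1.0)]
```

The point (3.0, 3.0) is dropped because (2.0, 2.0) is better in both objectives; the remaining three points trade one objective against the other.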

4. Experimental Study

4.1. Experimental Settings

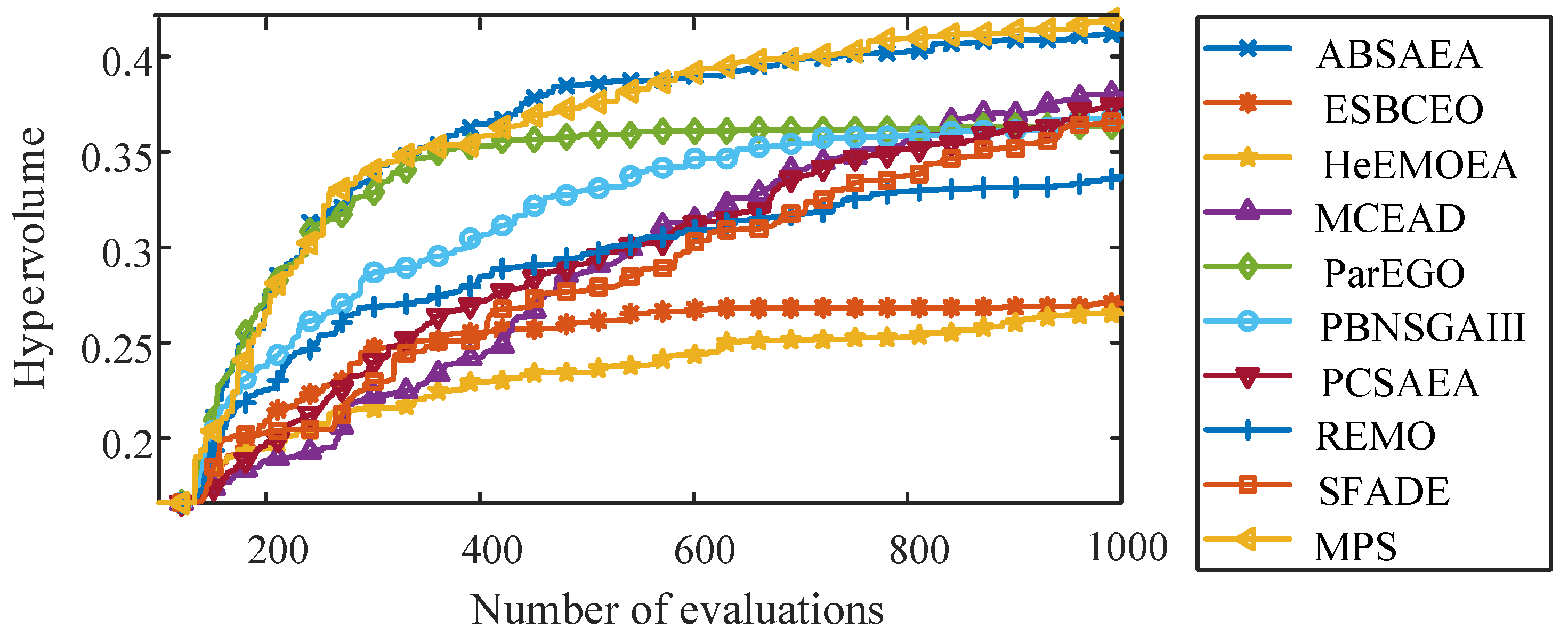

4.2. Experimental Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Xu, Q.; Zeng, S.; Zhao, F.; Jiao, R.; Li, C. On Formulating and Designing Antenna Arrays by Evolutionary Algorithms. IEEE Trans. Antennas Propag. 2021, 69, 1118–1129.

- Ji, C.; Ning, X.; Dai, W. Design of Shared-Aperture Base Station Antenna with a Conformal Radiation Pattern. Electronics 2025, 14, 225.

- Wu, Q.; Li, H.; Wong, S.W.; Zhang, Z.; He, Y. A Simple Cylindrical Dielectric Resonator Antenna Based on High-Order Mode with Stable High Gain. IEEE Antennas Wirel. Propag. Lett. 2024, 23, 3476–3480.

- Raghuvanshi, A.; Sharma, A.; Awasthi, A.K.; Singhal, R.; Sharma, A.; Tiang, S.S.; Wong, C.H.; Lim, W.H. Linear antenna array pattern synthesis using Multi-Verse optimization algorithm. Electronics 2024, 13, 3356.

- Ta, L.P.; Nakayama, D.; Hirose, M. Design of a High-Gain X-Band Electromagnetic Band Gap Microstrip Patch Antenna for CubeSat Applications. Electronics 2025, 14, 2216.

- Guo, H.; Zhao, Y.; Li, J.; Gao, R.; He, Z.; Yang, Z. Design of Ultra-Wideband Low RCS Antenna Based on Polarization Conversion Metasurface. Electronics 2025, 14, 2204.

- Fujimoto, T.; Guan, C.E. A Printed Hybrid-Mode Antenna for Dual-Band Circular Polarization with Flexible Frequency Ratio. Electronics 2025, 14, 2504.

- Wu, Q.Y.; Wu, L.H.; Ben, C.Q.; Lian, J.W. Millimeter-Wave Miniaturized Substrate-Integrated Waveguide Multibeam Antenna Based on Multi-Layer E-Plane Butler Matrix. Electronics 2025, 14, 2553.

- Yu, Y.; Jolani, F.; Chen, Z. A wideband omnidirectional horizontally polarized antenna for 4G LTE applications. IEEE Antennas Wirel. Propag. Lett. 2013, 12, 686–689.

- Zhu, Q.; Yang, S.; Chen, Z. A wideband horizontally polarized omnidirectional antenna for LTE indoor base stations. Microw. Opt. Technol. Lett. 2015, 57, 2112–2116.

- Woldesenbet, Y.G.; Yen, G.G.; Tessema, B.G. Constraint Handling in Multiobjective Evolutionary Optimization. IEEE Trans. Evol. Comput. 2009, 13, 514–525.

- Altshuler, E.E.; Linden, D.S. Wire-antenna designs using genetic algorithms. IEEE Antennas Propag. Mag. 1997, 39, 33–43.

- Jin, N.; Rahmat-Samii, Y. Particle swarm optimization for antenna designs in engineering electromagnetics. J. Artif. Evol. Appl. 2008, 2008, 728929.

- Goudos, S.K.; Siakavara, K.; Samaras, T.; Vafiadis, E.E.; Sahalos, J.N. Self-adaptive differential evolution applied to real-valued antenna and microwave design problems. IEEE Trans. Antennas Propag. 2011, 59, 1286–1298.

- Tan, Z.; Wang, H.; Liu, S. Multi-stage dimension reduction for expensive sparse multi-objective optimization problems. Neurocomputing 2021, 440, 159–174.

- Ma, L.; Jin, J.; Li, X.; Liu, W.; Ma, K.; Zhang, Q.J. Advanced Surrogate-Based EM Optimization Using Complex Frequency Domain EM Simulation-Based Neuro-TF Model for Microwave Components. IEEE Trans. Microw. Theory Tech. 2025, 73, 2309–2319.

- Na, W.; Liu, K.; Cai, H.; Zhang, W.; Xie, H.; Jin, D. Efficient EM Optimization Exploiting Parallel Local Sampling Strategy and Bayesian Optimization for Microwave Applications. IEEE Microw. Wirel. Compon. Lett. 2021, 31, 1103–1106.

- Wei, F.F.; Chen, W.N.; Zhang, J. A hybrid regressor and classifier-assisted evolutionary algorithm for expensive optimization with incomplete constraint information. IEEE Trans. Syst. Man Cybern. Syst. 2023, 53, 5071–5083.

- Shi, M.; Lv, L.; Sun, W.; Song, X. A multi-fidelity surrogate model based on support vector regression. Struct. Multidiscip. Optim. 2020, 61, 2363–2375.

- Liu, Q.; Cheng, R.; Jin, Y.; Heiderich, M.; Rodemann, T. Reference vector-assisted adaptive model management for surrogate-assisted many-objective optimization. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 7760–7773.

- Knowles, J. ParEGO: A hybrid algorithm with on-line landscape approximation for expensive multiobjective optimization problems. IEEE Trans. Evol. Comput. 2006, 10, 50–66.

- Wang, X.; Jin, Y.; Schmitt, S.; Olhofer, M. An adaptive Bayesian approach to surrogate-assisted evolutionary multi-objective optimization. Inf. Sci. 2020, 519, 317–331.

- Zhang, Q.; Liu, W.; Tsang, E.; Virginas, B. Expensive multiobjective optimization by MOEA/D with Gaussian process model. IEEE Trans. Evol. Comput. 2009, 14, 456–474.

- Tan, K.C.; Feng, L.; Jiang, M. Evolutionary transfer optimization-a new frontier in evolutionary computation research. IEEE Comput. Intell. Mag. 2021, 16, 22–33.

- Gupta, A.; Ong, Y.S.; Feng, L. Insights on transfer optimization: Because experience is the best teacher. IEEE Trans. Emerg. Top. Comput. Intell. 2017, 2, 51–64.

- Da, B.; Gupta, A.; Ong, Y.S. Curbing negative influences online for seamless transfer evolutionary optimization. IEEE Trans. Cybern. 2018, 49, 4365–4378.

- Jiang, M.; Huang, Z.; Qiu, L.; Huang, W.; Yen, G.G. Transfer learning-based dynamic multiobjective optimization algorithms. IEEE Trans. Evol. Comput. 2017, 22, 501–514.

- Li, H.; Wan, F.; Gong, M.; Qin, A.K.; Wu, Y.; Xing, L. Fast heterogeneous multi-problem surrogates for transfer evolutionary multiobjective optimization. IEEE Trans. Evol. Comput. 2024.

- Li, H.; Wan, F.; Gong, M.; Qin, A.K.; Wu, Y.; Xing, L. Many-Problem Surrogates for Transfer Evolutionary Multiobjective Optimization With Sparse Transfer Stacking. IEEE Trans. Evol. Comput. 2025.

- Gupta, A.; Ong, Y.S.; Feng, L.; Tan, K.C. Multiobjective multifactorial optimization in evolutionary multitasking. IEEE Trans. Cybern. 2016, 47, 1652–1665.

- Briqech, Z.; Sebak, A.R.; Denidni, T.A. High-efficiency 60-GHz printed Yagi antenna array. IEEE Antennas Wirel. Propag. Lett. 2013, 12, 1224–1227.

- Balanis, C.A. Antenna Theory: Analysis and Design; John Wiley & Sons: Hoboken, NJ, USA, 2016.

- Tan, Z.; Luo, L.; Zhong, J. Knowledge transfer in evolutionary multi-task optimization: A survey. Appl. Soft Comput. 2023, 138, 110182.

- Huang, L.; Feng, L.; Wang, H.; Hou, Y.; Liu, K.; Chen, C. A preliminary study of improving evolutionary multi-objective optimization via knowledge transfer from single-objective problems. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; IEEE: New York, NY, USA, 2020; pp. 1552–1559.

- Lim, R.; Zhou, L.; Gupta, A.; Ong, Y.S.; Zhang, A.N. Solution representation learning in multi-objective transfer evolutionary optimization. IEEE Access 2021, 9, 41844–41860.

- Zou, J.; Lin, F.; Gao, S.; Deng, G.; Zeng, W.; Alterovitz, G. Transfer learning based multi-objective genetic algorithm for dynamic community detection. arXiv 2021, arXiv:2109.15136.

- Jiang, M.; Wang, Z.; Guo, S.; Gao, X.; Tan, K.C. Individual-based transfer learning for dynamic multiobjective optimization. IEEE Trans. Cybern. 2020, 51, 4968–4981.

- Min, A.T.W.; Ong, Y.S.; Gupta, A.; Goh, C.K. Multiproblem surrogates: Transfer evolutionary multiobjective optimization of computationally expensive problems. IEEE Trans. Evol. Comput. 2017, 23, 15–28.

- Liu, B.; Liu, H.; Aliakbarian, H.; Ma, Z.; Vandenbosch, G.; Gielen, G.; Excell, P. An efficient method for antenna design optimization based on evolutionary computation and machine learning techniques. IEEE Trans. Antennas Propag. 2013, 62, 7–18.

- Kuwahara, Y. Multiobjective optimization design of Yagi-Uda antenna. IEEE Trans. Antennas Propag. 2005, 53, 1984–1992.

- Bian, H.; Tian, J.; Yu, J.; Yu, H. Bayesian co-evolutionary optimization based entropy search for high-dimensional many-objective optimization. Knowl. Based Syst. 2023, 274, 110630.

- Guo, D.; Jin, Y.; Ding, J.; Chai, T. Heterogeneous ensemble-based infill criterion for evolutionary multiobjective optimization of expensive problems. IEEE Trans. Cybern. 2019, 49, 1012–1025.

- Sonoda, T.; Nakata, M. Multiple classifiers-assisted evolutionary algorithm based on decomposition for high-dimensional multi-objective problems. IEEE Trans. Evol. Comput. 2022, 26, 1581–1595.

- Song, Z.; Wang, H.; Xu, H. A framework for expensive many-objective optimization with Pareto-based bi-indicator infill sampling criterion. Memetic Comput. 2022, 14, 179–191.

- Tian, Y.; Hu, J.; He, C.; Ma, H.; Zhang, L.; Zhang, X. A pairwise comparison based surrogate-assisted evolutionary algorithm for expensive multi-objective optimization. Swarm Evol. Comput. 2023, 80, 101323.

- Hao, H.; Zhou, A.; Qian, H.; Zhang, H. Expensive multiobjective optimization by relation learning and prediction. IEEE Trans. Evol. Comput. 2022, 26, 1157–1170.

- Horaguchi, Y.; Nishihara, K.; Nakata, M. Evolutionary multiobjective optimization assisted by scalarization function approximation for high-dimensional expensive problems. Swarm Evol. Comput. 2024, 86, 101516.

| Parameter | Value | Parameter | Range |
|---|---|---|---|
| Frequency | 165 MHz | RefLengthBounds | |
| | 300 | DirLengthBounds | |
| BandWidth | 8.25 MHz | RefSpacingBounds | |
| | 1.82 m | DirSpacingBounds | |
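As a quick consistency check on the parameter table, the sketch below recomputes two of its values from the 165 MHz frequency. Reading the unlabeled 1.82 m entry as the free-space wavelength, and the 8.25 MHz bandwidth as a fraction of the center frequency, is an assumption; the table itself states neither. The variable names are illustrative.

```python
# Values copied from the parameter table; the interpretation is an assumption.
SPEED_OF_LIGHT = 299_792_458.0  # m/s, exact SI value

freq_hz = 165e6        # "Frequency" row: 165 MHz
bandwidth_hz = 8.25e6  # "BandWidth" row: 8.25 MHz

wavelength_m = SPEED_OF_LIGHT / freq_hz  # free-space wavelength at the design frequency
fractional_bw = bandwidth_hz / freq_hz   # bandwidth relative to the design frequency

print(f"wavelength = {wavelength_m:.2f} m")           # wavelength = 1.82 m
print(f"fractional bandwidth = {fractional_bw:.1%}")  # fractional bandwidth = 5.0%
```

Both results match the table: 1.82 m agrees with the wavelength at 165 MHz, and 8.25 MHz is 5% of the design frequency.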

| Number of Evaluations Reference | Metric [22] | A1 | A2 | A3 | A4 | A5 | A6 | A7 | A8 | A9 | MPS (Ours) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 150 | Avg. | 0.2103 | 0.1931 | 0.2126 | 0.1976 | 0.2204 | 0.2131 | 0.1716 | 0.1912 | 0.1917 | 0.2268 |
| | Var. | 0.0214 | 0.0110 | 0.0150 | 0.0191 | 0.0269 | 0.0326 | 0.0071 | 0.0120 | 0.0235 | 0.2114 |
| 300 | Avg. | 0.3041 | 0.2210 | 0.2262 | 0.2351 | 0.3078 | 0.3003 | 0.2191 | 0.2547 | 0.2282 | 0.2997 |
| | Var. | 0.0449 | 0.0224 | 0.0153 | 0.0267 | 0.0194 | 0.0204 | 0.0249 | 0.0389 | 0.0365 | 0.0585 |
| 500 | Avg. | 0.3407 | 0.2281 | 0.2416 | 0.2930 | 0.3116 | 0.3292 | 0.2600 | 0.3063 | 0.2810 | 0.3626 |
| | Var. | 0.0421 | 0.0213 | 0.0175 | 0.0288 | 0.0193 | 0.0207 | 0.0266 | 0.0377 | 0.0350 | 0.0387 |
| 1000 | Avg. | 0.3699 | 0.2393 | 0.2699 | 0.3872 | 0.3176 | 0.3637 | 0.3394 | 0.3533 | 0.3709 | 0.4125 |
| | Var. | 0.0377 | 0.0214 | 0.0237 | 0.0187 | 0.0192 | 0.0129 | 0.0382 | 0.0344 | 0.0461 | 0.0226 |

| Number of Evaluations Reference | Metric [22] | A1 | A2 | A3 | A4 | A5 | A6 | A7 | A8 | A9 | MPS (Ours) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 150 | Avg. | 0.2108 | 0.1795 | 0.1838 | 0.1730 | 0.2091 | 0.2037 | 0.1739 | 0.1931 | 0.1831 | 0.2038 |
| | Var. | 0.0291 | 0.0110 | 0.0131 | 0.0070 | 0.0261 | 0.0345 | 0.0110 | 0.0159 | 0.0177 | 0.0135 |
| 300 | Avg. | 0.3356 | 0.2471 | 0.2153 | 0.2224 | 0.3288 | 0.2863 | 0.2396 | 0.2685 | 0.2296 | 0.3402 |
| | Var. | 0.0409 | 0.0265 | 0.0257 | 0.0329 | 0.0376 | 0.0316 | 0.0248 | 0.0270 | 0.0429 | 0.0605 |
| 500 | Avg. | 0.3853 | 0.2606 | 0.2342 | 0.2869 | 0.3589 | 0.3297 | 0.2913 | 0.2966 | 0.2771 | 0.3755 |
| | Var. | 0.0451 | 0.0271 | 0.0307 | 0.0517 | 0.0360 | 0.0203 | 0.0241 | 0.0162 | 0.0423 | 0.0552 |
| 1000 | Avg. | 0.4115 | 0.2705 | 0.2652 | 0.3804 | 0.3634 | 0.3676 | 0.3746 | 0.3370 | 0.3656 | 0.4197 |
| | Var. | 0.0518 | 0.0228 | 0.0312 | 0.0398 | 0.0321 | 0.0273 | 0.0374 | 0.0336 | 0.0418 | 0.0426 |

| Number of Evaluations Reference | Metric [22] | A1 | A2 | A3 | A4 | A5 | A6 | A7 | A8 | A9 | MPS (Ours) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 150 | Avg. | 0.2336 | 0.2028 | 0.2134 | 0.1895 | 0.2264 | 0.2022 | 0.1943 | 0.2107 | 0.2013 | 0.2538 |
| | Var. | 0.0189 | 0.0184 | 0.0115 | 0.0137 | 0.0157 | 0.0133 | 0.0097 | 0.0099 | 0.0147 | 0.0282 |
| 300 | Avg. | 0.3138 | 0.2523 | 0.2227 | 0.2254 | 0.3057 | 0.2491 | 0.2255 | 0.2655 | 0.2107 | 0.3300 |
| | Var. | 0.0242 | 0.0584 | 0.0132 | 0.0222 | 0.0311 | 0.0260 | 0.0155 | 0.0196 | 0.0142 | 0.0188 |
| 500 | Avg. | 0.3552 | 0.2807 | 0.2382 | 0.2763 | 0.3221 | 0.3213 | 0.2855 | 0.2959 | 0.2520 | 0.3801 |
| | Var. | 0.0146 | 0.0480 | 0.0165 | 0.0259 | 0.0347 | 0.0312 | 0.0171 | 0.0465 | 0.0346 | 0.0250 |
| 1000 | Avg. | 0.3923 | 0.2962 | 0.2592 | 0.3695 | 0.3309 | 0.3650 | 0.3508 | 0.3388 | 0.3355 | 0.4151 |
| | Var. | 0.0210 | 0.0447 | 0.0224 | 0.0387 | 0.0369 | 0.0257 | 0.0199 | 0.0413 | 0.0528 | 0.0224 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Z.; Wu, B.; Wang, R.; Li, H.; Gong, M. Surrogate-Assisted Evolutionary Multi-Objective Antenna Design. Electronics 2025, 14, 3862. https://doi.org/10.3390/electronics14193862
