1. Introduction

The smart grid is a concept that evolved from the combination of renewable energy integration, advances in digital communication, and increasing demand for reliable power supply [1]. Driven by continuous progress in science and technology, the smart grid has developed rapidly. The number of terminal devices and control units in the smart grid has greatly increased, enriching the functions and services of the power grid. With the help of communication technology, the smart grid not only interconnects the components of the power grid (as illustrated in Figure 1), but can also communicate with external users and distributed energy sources in diverse ways. The emergence and development of the smart grid has brought many opportunities for improving efficiency and overall performance [2]. Related studies also indicate that frequency stability control and orderly electricity market operation (for example, robust super-twisting load frequency control (LFC) and holistic risk-aware market design) are integral to reliable smart-grid operation [3,4].

However, with the growing adoption of the smart grid [5], its security risks have gradually become prominent. In the smart grid environment, business units are scattered and independent, creating a conflict between data privacy protection requirements and network security monitoring [6]. Crucially, widespread cyberattacks can undermine frequency-control loops such as LFC [3] and disrupt market clearing and price formation [4]. This reality highlights the urgent need for intrusion detection systems (IDS) that can continuously learn and adapt in real time to these evolving threats.

In recent years, several significant cyberattacks have targeted smart grids and energy infrastructures, highlighting the vulnerability of these critical systems. One of the most notable attacks occurred in 2015, when Ukraine’s power grid was hit by a cyberattack that caused a massive blackout. The attackers used spear-phishing emails to install malware (BlackEnergy3) on the network, granting them remote access to the control systems. This allowed them to shut down 30 substations, leaving over 230,000 customers without power for several hours [7]. Another high-profile attack was the 2012 breach of Saudi Aramco, in which the Shamoon malware wiped over 30,000 computers, disrupting operations without causing physical damage [8]. Similarly, the 2021 Colonial Pipeline attack underscored the growing threat to critical energy supply chains. Hackers infiltrated the company’s network using compromised employee credentials and deployed ransomware, halting fuel supplies to the U.S. East Coast. The company eventually paid a $4.4 million ransom [9].

These cases underscore the growing need for robust cybersecurity measures in smart grids. The attacks on Ukraine’s grid and Colonial Pipeline illustrate how cyber threats have evolved from targeting isolated industrial systems to infiltrating interconnected, complex infrastructures, affecting both public safety and economic stability. As attacks become more frequent and sophisticated, it is crucial to enhance the resilience of smart grid systems by developing adaptive, real-time cybersecurity solutions.

Moreover, the dependence of smart grid systems on communication networks makes them vulnerable to network attacks, posing significant risks to grid reliability [5]. As a network-embedded infrastructure, smart grids must be able to detect network attacks and respond appropriately in a timely manner [6].

The security of smart grids is increasingly challenged by sophisticated cyberattacks, making the design of robust IDS crucial for addressing these threats. In addition to real-world case studies on these attacks, Hardware-in-the-Loop (HIL) validation has become an essential tool for testing the resilience of IDS in smart grid environments. By combining real-time simulations with physical hardware components, HIL enables researchers to simulate diverse attack scenarios and evaluate IDS performance in realistic settings. For instance, HIL can be used to simulate various cyberattack strategies on power grid components, such as denial-of-service attacks or false data injections, to assess how well the IDS can detect and respond to these threats [10].

This validation method not only ensures that IDS perform under controlled conditions but also allows for the dynamic evaluation of how IDS models adapt to new, evolving attacks [11]. This is particularly valuable for testing IDS solutions in scenarios where new attack types may emerge or existing methods evolve. In fact, the application of HIL in smart grid cybersecurity has been demonstrated in several studies [12,13,14,15], where real-world attack data were fed into physical and virtual smart grid systems, allowing for real-time testing of IDS performance under attack conditions.

With the continuous evolution of attack methods, traditional IDS often struggle to cope with ever-changing network threats, especially previously unseen attacks. Due to the complexity and highly dynamic nature of smart grids, existing IDS solutions face significant limitations: they can handle known attack types but fail to adapt to new attacks in real time [16]. Additionally, the high requirements of smart grids for real-time processing, computing power, and storage resources make retraining models to handle new attacks a costly and time-consuming process. Many existing smart grid IDS methods have failed to address this issue effectively, leading to significant declines in efficiency when faced with new attack threats.

Unlike traditional static learning methods, incremental learning [17] offers an adaptive approach by updating the model step by step instead of retraining the entire system. This reduces computation costs and training time, making it especially suitable for new attack modes that frequently appear in the smart grid environment. Incremental learning can quickly adapt to new attack types without losing the ability to detect known attacks.

Given the continuous rise in the number of attack types in the smart grid, incorporating incremental learning into IDS design has become a necessity. This method allows the model to dynamically adapt to new attack types and provide continuous response capability for power grid security. However, with the continuous input of new data, the model is prone to catastrophic forgetting, where the ability to identify previously learned attack types declines significantly, and excessive parameter updates can lead to a sharp increase in computational overhead [18]. The experimental results of this paper show that this problem can be effectively alleviated by a structured design, where a tree structure shows unique advantages in balancing model updates and knowledge retention due to its modular expansion characteristics.

Therefore, this paper proposes a tree-based incremental learning method, which trains a new model with new attack type data and then connects it to the original model as a child node, realizing a tree structure. This incremental learning method effectively avoids catastrophic forgetting and increases the ability of the original model to identify new types of attacks, thus ensuring the continuous protection of the smart grid.

The remainder of this paper is organized as follows: Section 2 reviews the related works on IDS in smart grid scenarios. Section 3 highlights the main contributions of this study. Section 4 presents the overall system architecture, including data preprocessing, model training, and the incremental learning mechanism. Section 5 reports the experimental setup, results, and performance analysis of the proposed scheme. Finally, Section 6 concludes the paper and discusses potential future work.

2. Related Works

To protect the security of the smart grid, many scholars have focused on researching IDS in the smart grid scenario. By analyzing the information from the power grid network, IDS can detect whether there is an attack, monitor the running state of the grid in real time, and ensure its security. Currently, smart grid IDS methods are mainly divided into time-frequency-based IDS [19,20] and machine learning or deep learning-based IDS [21,22,23,24]. Among them, IDS based on deep learning can provide higher detection accuracy and robustness compared to other types. However, despite numerous innovative schemes, existing smart grid IDS still face key issues, such as insufficient response to diversified attack modes, catastrophic forgetting, and computational overhead.

Many schemes based on specific standards or models have limitations in handling diversified attack modes. Quincozes et al. [25] proposed a synthetic traffic generation framework based on the IEC-61850 standard [26] to address the lack of real data for training, testing, and evaluating IDS. However, this framework struggles to respond flexibly to evolving attack patterns and cannot effectively detect new attack behaviors. Similarly, the federated learning-based IDS proposed by Wen et al. [27] improves training efficiency but has limited detection capability when facing complex and evolving attacks. Basheer et al. [1] proposed a deep learning IDS based on a graph convolutional network (GCN) to identify complex threats and maintain the integrity and reliability of the power grid. While it has advantages in real-time detection, its feature extraction is not targeted enough to accurately capture the unique features of different attack types. Mohammed et al. [28] constructed a dual hybrid IDS for detecting false data injection in the smart grid. It combines feature selection and deep learning classifiers to improve detection accuracy and robustness, but it struggles to cover new injection methods when dealing with diverse false data injection attack modes.

In terms of catastrophic forgetting and computational cost, many schemes based on federated learning or deep learning face challenges. Hamdi [29] pointed out that the efficiency of federated learning IDS dropped significantly in unconventional scenarios where the distributions of training and test data were inconsistent. Although an IDS combining centralized learning and federated learning [30] was proposed to improve the ability to identify unknown attacks, learning new attack patterns weakened its ability to recognize previously learned ones. At the same time, computational cost increases sharply with model updates and data growth. For instance, the federated learning IDS for the smart grid based on fog-edge support vector machines proposed by Noshina et al. [31] shares learning parameters to ensure data privacy and collaborative learning, but it struggles to avoid catastrophic forgetting in continual learning and consumes substantial computational resources. Additionally, the fog computing-based AI integration model proposed by Alsirhani et al. [32] combines machine learning and deep learning to improve detection accuracy and address the class imbalance problem, but it does not avoid catastrophic forgetting or the high computational overhead caused by model integration. Pasumponthevar et al. [33] combined Kalman filtering with recurrent neural networks, achieving a high classification accuracy of 97.3%. However, the approach still faces catastrophic forgetting when continuing to learn and deal with complex attack scenarios, and its computational resource requirements are high. The challenges of the different intrusion detection methods are also outlined in Table 1.

Although research in recent years has significantly improved the performance of smart grid IDS, most existing schemes [1,25,27,28,29,30,31,32,33] still face the following challenges:

- (1) Insufficient response to diversified attack modes: Smart grids have a variety of communication modes [34], making them vulnerable to various attacks. Therefore, IDS need to detect a wide range of attacks comprehensively. However, most existing schemes focus on detecting known attack types and are not well equipped to handle new or unknown attack patterns, especially in environments where attack methods are constantly evolving.

- (2) Catastrophic forgetting and computational overhead: With the development of technology, new attack methods continue to emerge. If the model is retrained only with new attack samples, it can easily lead to catastrophic forgetting [35]. If all historical data are used for retraining, it incurs significant computational cost and time. Many existing IDS methods fail to resolve the dilemma of how to continue learning new attacks while retaining existing knowledge.

3. Contributions

To address the security threats and challenges faced by IDS in smart grid application scenarios, this paper proposes Grid-IDS, which is based on incremental learning. Although a variety of IDS approaches have achieved good results, obvious limitations remain, especially in handling new attacks, catastrophic forgetting, and data imbalance, for which no effective solution has yet been achieved. In view of these problems, the contributions of this paper are as follows:

Introduce class-incremental learning into the smart grid intrusion detection scenario: Unlike traditional machine learning models, which lack dynamic update capabilities, this work pioneers the application of class-incremental learning to smart grid detection. While conventional incremental learning primarily focuses on known attack types and struggles with catastrophic forgetting and concept drift, the proposed class-incremental mechanism can continuously learn new attack categories while retaining existing knowledge, significantly reducing the high costs associated with frequent complete retraining in traditional machine learning methods.

Propose a tree-structured class-incremental learning mechanism: This paper proposes a class-incremental learning mechanism based on a tree structure. By dynamically integrating models of new attack types as child nodes into the existing model, a hierarchically expandable detection structure is formed. This effectively adapts to novel attacks while avoiding the issues of catastrophic forgetting and the extensive retraining overhead required by traditional methods, significantly enhancing the system’s adaptability and efficiency in dynamic network environments.

Alleviate the data imbalance problem: In smart grid environments, normal traffic significantly outnumbers attack traffic, causing traditional IDS to be biased toward identifying normal traffic. To address this challenge, this paper employs SMOTE to oversample minority classes, successfully improving the model’s detection performance under imbalanced data conditions and further enhancing the model’s robustness and reliability.

Significantly improve detection performance and resource utilization: Through extensive experiments on the CICIDS2017 dataset, this study validates the exceptional performance of the Grid-IDS system across multiple evaluation metrics including precision, recall, and F1-Score. Compared with four baseline approaches (DNN-batch, Hoeffding Tree, ImFace and T-DFNN), the proposed system demonstrates superior performance across all metrics, achieving an average accuracy of 99.65%. Notably, it exhibits enhanced adaptability and stability when confronted with novel attack types. Furthermore, the system demonstrates significant advantages in inference efficiency. After three incremental learning cycles, the maximum classification time per network packet is only 1.5688 ms, underscoring its remarkable practical utility and scalability.

4. System Architecture

In this section, we first introduce the overall architecture of the proposed scheme, and then present the working principles and implementation steps of each module.

4.1. Overall Architecture

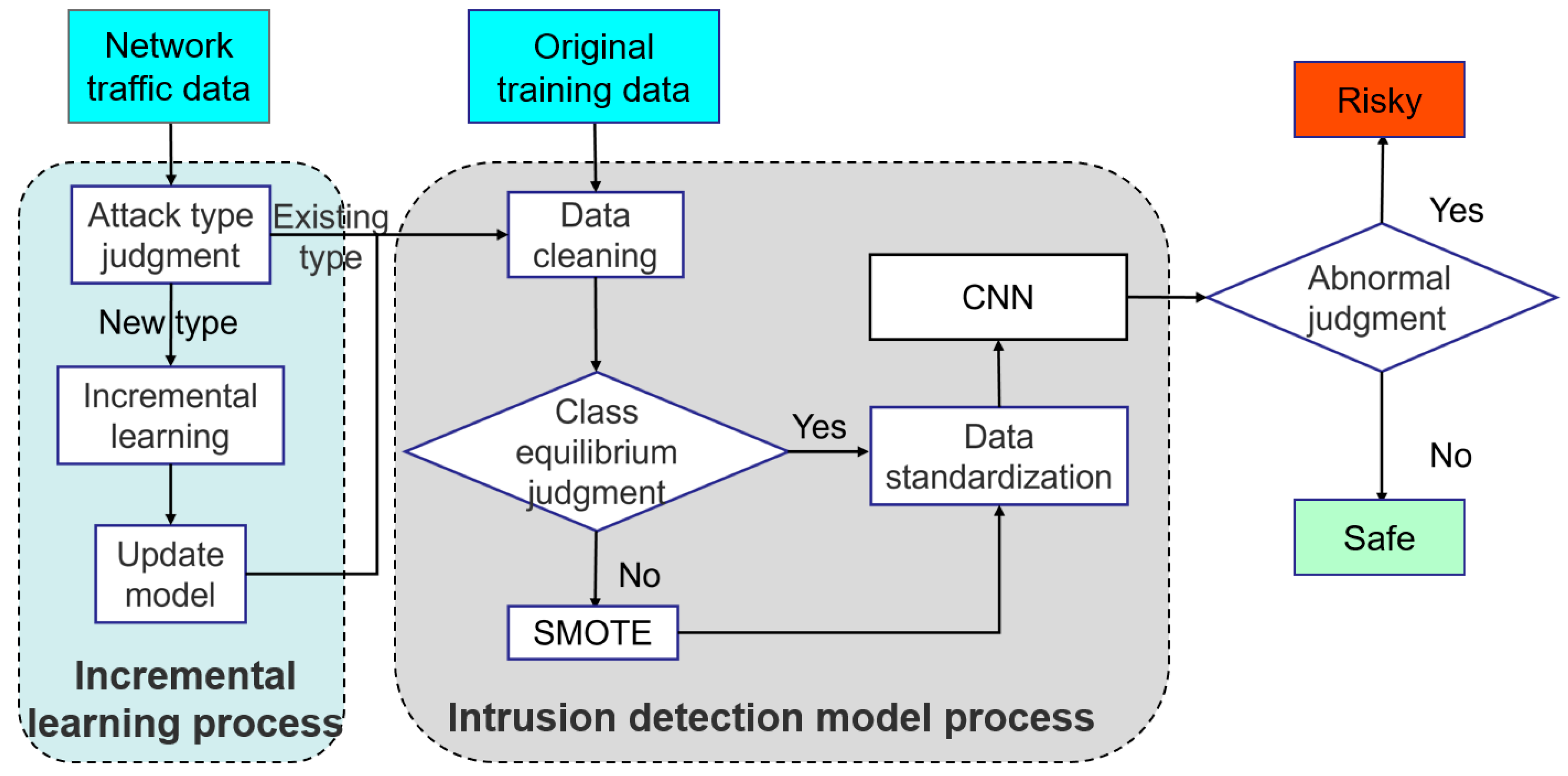

This paper focuses on building a smart grid intrusion detection system based on incremental learning, named Grid-IDS. The system has two core capabilities. On the one hand, it can accurately detect the various attacks that the smart grid suffers on the bus and network sides; on the other hand, it can dynamically adapt to and identify new attack categories through an incremental learning mechanism. As shown in Figure 2, the overall architecture is mainly composed of two core components: an intrusion detection model and an incremental learning module, where the intrusion detection model is divided into two submodules: data preprocessing and model training. Following the intrusion detection model flow in Figure 2, the existing raw training data of the smart grid are first collected and then passed to the data preprocessing pipeline, which consists of three steps.

The first step is data cleaning, which handles missing values in the training data either by deleting the affected rows or by imputing them (e.g., mean imputation). The second step is to determine whether the training data are balanced; if not, the SMOTE method [36] is used for oversampling to achieve better training results. The third step is to standardize the data to reduce the influence of differences in scale and distribution on the model. After preprocessing, the data are fed into a one-dimensional convolutional neural network (1D-CNN) for training.

However, in the actual operation of the smart grid, new types of attacks may emerge constantly. When Grid-IDS needs to identify these new attack categories, the system enters the incremental learning stage. At this stage, the system collects and processes the emerging attack data and integrates it into the existing learning process. Through incremental learning, the system can dynamically adjust its classification ability, not only accurately identifying the original attack types, but also effectively classifying new attack types.

Finally, when smart-grid data requires security inspection, the data to be inspected is input into the updated and optimized Grid-IDS. The system classifies the input data according to the learned knowledge and classification rules, and outputs the corresponding detection results, thus providing strong assurance for the safe operation of the smart grid. Next, the implementation details of each part will be further described based on the overall architecture of the system.

4.2. Data Preprocessing

It is essential to preprocess the training data before model training because the original training data may have problems such as incomplete data, inconsistent data types and unbalanced classes, which can seriously affect the model training performance. The data preprocessing process mainly consists of data cleaning, checking whether the training data is balanced, and data standardization. The details are as follows.

4.2.1. Data Cleaning

The first step in the data preprocessing stage is data cleaning, which aims to remove the noise and anomalies in the original training data and provide a high-quality data foundation for the subsequent model training. This process mainly covers two key steps: invalid value processing and string encoding.

- (1) Invalid value processing

The original data may contain null values and other invalid items, which degrade training performance if left unprocessed. Two strategies are available: removing rows with missing values, which ensures data integrity, or mean imputation, which preserves the number of samples and reduces the influence of missing values on subsequent analysis. Considering the stability and accuracy of training, this paper deletes the rows that contain null values.

- (2) String encoding

In the training data, attack types are usually labeled as strings, while model training requires numerical input, so these string labels must be converted into numeric values. Specifically, the normal type is assigned one numeric label, and the attack types are assigned distinct numeric labels in turn. With this encoding, the model can process the different attack classes as numeric targets, which supports its classification performance and detection accuracy.
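The two cleaning steps can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the `"BENIGN"` string for the normal class, and the choice to give the normal class label 0 and number attack types in order of first appearance are all assumptions made for illustration.

```python
def drop_invalid_rows(records):
    """Keep only rows whose features contain no missing (None or NaN) values."""
    clean = []
    for features, label in records:
        # v == v is False only for NaN, so this rejects both None and NaN.
        if all(v is not None and v == v for v in features):
            clean.append((features, label))
    return clean


def encode_labels(records, normal_label="BENIGN"):
    """Map string labels to integers.

    Assumption: the normal class gets 0 and each attack type gets the next
    unused integer in order of first appearance.
    """
    mapping = {normal_label: 0}
    encoded = []
    for features, label in records:
        if label not in mapping:
            mapping[label] = len(mapping)
        encoded.append((features, mapping[label]))
    return encoded, mapping


# Hypothetical records: (feature vector, string label).
raw = [
    ([1.0, 2.0], "BENIGN"),
    ([None, 3.0], "DoS"),             # dropped: missing value
    ([4.0, 5.0], "DoS"),
    ([6.0, float("nan")], "PortScan"),  # dropped: NaN
    ([7.0, 8.0], "PortScan"),
]
clean = drop_invalid_rows(raw)
encoded, mapping = encode_labels(clean)
```

Row deletion is used here rather than mean imputation, matching the choice stated in the text.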

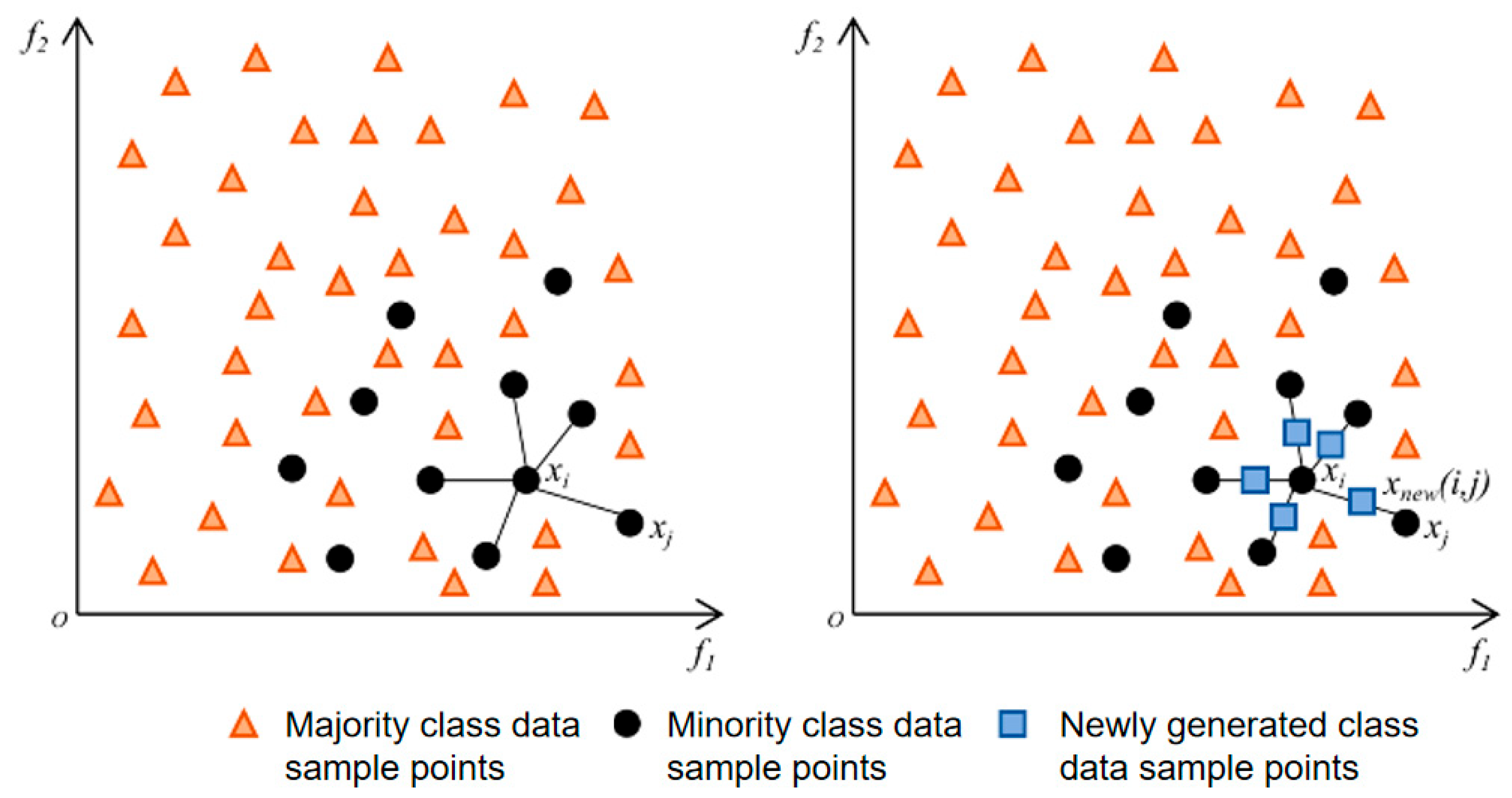

4.2.2. SMOTE

In practical network traffic, benign flows vastly outnumber attacks, yielding highly imbalanced training data and biasing classifiers toward majority classes. Among common remedies, we adopt SMOTE on the training split of each incremental batch. Undersampling removes a large portion of benign traffic and degrades the model’s calibration to the background distribution required at inference. Random oversampling duplicates minority instances and tends to overfit the 1D-CNN. Class weighting or focal losses can mitigate bias but require per-increment retuning as class priors shift in a class-incremental protocol, which destabilizes probability calibration and decision thresholds. By contrast, SMOTE synthesizes minority samples via linear interpolation among k-nearest neighbors, increasing diversity without discarding majority data while keeping the training recipe invariant across increments. In practice, SMOTE is applied only after data splitting, only to the training set, and after feature standardization, with sampling ratios set according to each class’s imbalance. We prefer vanilla SMOTE over boundary-focused variants (e.g., ADASYN [37], Borderline-SMOTE [38]) to avoid noisy synthetic points for extremely small classes. A schematic is shown in Figure 3. SMOTE is implemented as follows:

- (3) Calculation of k-nearest neighbors:

For each sample $x$ in the minority sample set, the Euclidean distance between it and all other samples in the minority sample set is calculated, and the k-nearest neighbors of the sample are determined.

- (4) Determine the sampling rate:

Set the sampling ratio according to the sample imbalance ratio, and then determine the sampling rate $N$. For each minority sample $x$, several samples are randomly selected from its k neighbors; each selected neighbor is denoted $\hat{x}$.

- (5) Constructing new samples:

For each randomly selected neighbor $\hat{x}$, a new sample is generated according to Formula (1):

$$x_{\text{new}} = x + \operatorname{rand}(0,1) \times (\hat{x} - x) \tag{1}$$

where $\operatorname{rand}(0,1)$ is a random number uniformly distributed in the range $(0, 1)$. This enriches the minority samples and improves the balance of the data distribution, which helps mitigate overfitting, provides better sample data for model training, and improves overall model performance.
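The three SMOTE steps above can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions, not the paper's code: the function names, the fixed `n_new_per_sample` sampling rate, and the seed are hypothetical, and a production system would more likely rely on an existing library implementation.

```python
import math
import random


def k_nearest(sample, minority, k):
    """Return the k minority samples closest to `sample` (Euclidean), excluding itself."""
    others = [m for m in minority if m is not sample]
    others.sort(key=lambda m: math.dist(sample, m))
    return others[:k]


def smote(minority, n_new_per_sample, k=5, seed=0):
    """Create synthetic minority samples by interpolating toward random neighbors.

    n_new_per_sample plays the role of the sampling rate: how many synthetic
    points are generated for each original minority sample.
    """
    rng = random.Random(seed)
    synthetic = []
    for x in minority:
        neighbors = k_nearest(x, minority, k)
        if not neighbors:  # a class with a single sample has nothing to interpolate with
            continue
        for _ in range(n_new_per_sample):
            x_hat = rng.choice(neighbors)
            gap = rng.random()  # uniform random number in [0, 1)
            # New point on the segment between x and its chosen neighbor.
            synthetic.append([xi + gap * (xh - xi) for xi, xh in zip(x, x_hat)])
    return synthetic


# Hypothetical minority class: the four corners of the unit square.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_points = smote(minority, n_new_per_sample=3, k=2, seed=42)
```

Because every synthetic point lies on a segment between two existing minority samples, the new points stay inside the region already occupied by the class.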

4.2.3. Data Standardization

The third step in the data preprocessing stage is data standardization, which brings features to a common scale and reduces the impact of scale differences on model training, thereby improving learning performance and generalization. In this paper, the Z-score standardization method is used, and the formula is as follows:

$$z = \frac{x - \mu}{\sigma} \tag{2}$$

where $x$ denotes a sample value, $\mu$ denotes the mean of the sample data, $\sigma$ represents the standard deviation of the sample data, and $z$ represents the standardized value. After Z-score standardization, each feature has a mean of $0$ and a standard deviation of $1$; the shape of its distribution is unchanged, so the result follows the standard normal distribution only if the original feature was normally distributed. The standardized data are then used for model training.
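A minimal sketch of Z-score standardization is shown below. The function names are hypothetical, and the handling of constant features (mapped to 0) and the use of the population standard deviation are assumptions not stated in the text. The statistics are fitted on training rows and then reused, so that held-out data are scaled with the same parameters.

```python
import statistics


def fit_standardizer(rows):
    """Compute the per-feature mean and population standard deviation."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) for col in columns]
    return means, stds


def standardize(rows, means, stds):
    """Apply z = (x - mean) / std per feature; constant features map to 0."""
    return [
        [(x - mu) / sd if sd > 0 else 0.0 for x, mu, sd in zip(row, means, stds)]
        for row in rows
    ]


# Hypothetical training rows with one varying and one constant feature.
train = [[1.0, 10.0], [2.0, 10.0], [3.0, 10.0]]
means, stds = fit_standardizer(train)
z_train = standardize(train, means, stds)
```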

4.3. Model Training

After data preprocessing, we use a 1D-CNN to carry out model training, thus constructing the original Grid-IDS. As a classic deep learning algorithm, CNN significantly reduces the number of parameters and shortens the calculation time by virtue of the shared convolutional kernel. This algorithm is not only widely used in the field of image recognition but has also achieved remarkable results in natural language processing. Specifically, while two-dimensional convolutional neural networks are suitable for image processing, 1D-CNNs are more appropriate for time-series data such as text and sensor data.

Because smart grid data are essentially time-series data, this paper selects a 1D-CNN for data classification. The 1D-CNN model consists of a one-dimensional convolution layer, a pooling layer, a dropout layer, and a fully connected layer [39]. The convolution layer is responsible for extracting key features from the input data; the pooling layer filters the features extracted by the convolution layer to reduce the data dimension; the dropout layer helps prevent overfitting by randomly ignoring some neurons; and the fully connected layer maps the outputs of the previous layers to the sample label space to achieve classification. The model trained at this stage can classify the existing attack types.

While 1D-CNNs have proven highly effective for time-series data classification, it is important to justify why they are preferred over other sequential models like RNNs and Transformers in the context of smart grid traffic. Unlike RNNs, which are specifically designed for sequential data and are capable of capturing temporal dependencies, 1D-CNNs excel at learning local patterns over fixed-length windows of data [40]. This makes 1D-CNNs particularly effective in environments like smart grid traffic, where local patterns (e.g., short-term fluctuations in traffic) play a key role in detecting intrusions. RNNs, on the other hand, often suffer from issues like vanishing gradients during training and can be computationally intensive when handling long sequences [41].

Furthermore, compared to Transformer models, which are powerful for capturing long-range dependencies in sequential data, 1D-CNNs offer a simpler architecture with fewer parameters, leading to reduced computational overhead. Transformers, though highly effective in sequence modeling, require significant computational resources and are often overkill for tasks where the temporal dependencies are short-range and local [42]. In contrast, 1D-CNNs offer a more efficient solution, as they are capable of extracting relevant features with a lower computational cost, making them better suited for real-time intrusion detection in smart grid systems where speed and efficiency are critical.

The following briefly introduces the implementation steps of the 1D-CNN:

Consider a set of 1-D input feature sequences $O_i$ ($i = 1, \ldots, I$). Each convolutional feature map $Q_j$ ($j = 1, \ldots, J$) is connected to multiple input feature sequences through a local weight matrix $w_{i,j}$. $w_{i,j}$ has size $F$ (where $F$ is the convolution kernel length), determining how many input units each output unit depends on. The corresponding mapping operation is called convolution in the field of signal processing.

The convolutional feature value of each unit can be obtained by the following formula:

$$q_{j,m} = \sigma\left(\sum_{i=1}^{I} \sum_{n=1}^{F} o_{i,n+m-1}\, w_{i,j,n}\right) \tag{3}$$

where $\sigma$ denotes the activation function, $q_{j,m}$ denotes the $m$-th unit in the $j$-th convolution feature map $Q_j$; $o_{i,m}$ is the $m$-th unit of the $i$-th input feature sequence $O_i$; and $w_{i,j,n}$ is the $n$-th coefficient of the kernel (weight matrix) $w_{i,j}$ that connects $O_i$ to $Q_j$. The final convolutional feature map can also be written as:

$$Q_j = \sigma\left(\sum_{i=1}^{I} O_i * w_{i,j}\right) \tag{4}$$

where $*$ denotes the convolution operator. Next, a max-pooling operation is applied to the feature maps. The purpose of pooling is to reduce the dimensionality of feature signals and enhance invariance to small perturbations. The formula of the pooling layer is as follows:

$$p_{j,m} = \max_{k = 1, \ldots, G} q_{j,(m-1) \times s + k} \tag{5}$$

where $G$ is the pooling window size and $s$ is the stride (the step size of the window) on the convolutional feature map. After pooling, a fully connected layer is typically used. The fully connected layer mainly acts as a classifier in convolutional neural networks, so its details are omitted here for brevity. Typically, the model uses multiple convolution layers, pooling layers, and fully connected layers, and the final output corresponds to a probability distribution over the output classes.
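The convolution and max-pooling operations described above can be written out directly as nested sums. The sketch below is only illustrative: the function names and the list-of-lists layout are assumptions, no bias term or padding is used, and a real model would use a deep learning framework rather than pure Python loops.

```python
def relu(value):
    """Rectified linear unit, used here as the activation function."""
    return max(0.0, value)


def conv1d(inputs, kernels, activation=relu):
    """Valid 1-D convolution with stride 1 and no bias.

    inputs:  list of I input sequences of equal length.
    kernels: kernels[j][i] is the length-F kernel linking input i to feature map j.
    Returns a list of J feature maps.
    """
    length = len(inputs[0])
    feature_maps = []
    for kernel_j in kernels:
        kernel_len = len(kernel_j[0])
        feature_map = []
        for m in range(length - kernel_len + 1):
            # Sum over all input sequences i and all kernel positions n.
            total = sum(
                inputs[i][m + n] * kernel_j[i][n]
                for i in range(len(inputs))
                for n in range(kernel_len)
            )
            feature_map.append(activation(total))
        feature_maps.append(feature_map)
    return feature_maps


def max_pool1d(feature_map, window, stride):
    """Take the maximum over each window, moving `stride` units at a time."""
    return [
        max(feature_map[start:start + window])
        for start in range(0, len(feature_map) - window + 1, stride)
    ]


# Hypothetical single input sequence and a single length-2 kernel.
sequence = [[1.0, 2.0, 3.0, 4.0]]
summed = conv1d(sequence, [[[1.0, 1.0]]])
pooled = max_pool1d(summed[0], window=2, stride=1)
```

With a kernel length of 1, as used by the model in this paper, each output unit depends on a single input position, so the sequence length is unchanged and only the number of channels varies.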

The model designed in this paper is shown in Figure 4. It comprises three convolution–pooling blocks followed by two fully connected layers. The first convolutional layer consists of 32 pointwise filters (kernel length 1) with ReLU activation, which maps the input to a $d \times 32$ representation, where $d$ is the input dimension; a max-pooling layer is then applied. The next two convolutional layers each use 64 filters with a kernel length of 1 and are paired with pooling. After three rounds of convolution and pooling, a Flatten layer converts the multidimensional features into a one-dimensional vector, and two fully connected layers with ReLU and Softmax activations produce the final predictions. Cross-entropy is used as the training loss.

We select three convolution layers to balance representational power and real-time latency. Pointwise convolutions perform channel lifting and nonlinear feature mixing without changing sequence length; stacking three such layers yields progressively more discriminative intermediate representations that help separate fine-grained attack patterns, while keeping the parameter count and computation modest. Under our hardware and latency budget, this depth maintains per-sample inference in the millisecond range and provides consistent accuracy gains over 1–2 layers, whereas going deeper to four layers brings diminishing returns and higher latency. The three-layer design also offers a stable intermediate feature space that facilitates knowledge retention and transfer in the subsequent tree-based incremental learning. For these reasons, three convolution layers are adopted as the default configuration.
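To make the shape flow through the three convolution–pooling blocks concrete, the following sketch traces the sequence length and channel count after each block. The pooling window of 2, the absence of padding, and the input dimension of 78 are assumptions chosen for illustration; the text does not specify them.

```python
def trace_shapes(input_len, filters=(32, 64, 64), kernel=1, pool=2):
    """Return the (length, channels) shape after each conv-pool block
    and the size of the flattened vector fed to the dense layers."""
    length = input_len
    shapes = []
    for channels in filters:
        length = length - kernel + 1  # valid convolution, stride 1
        length = length // pool       # non-overlapping max pooling
        shapes.append((length, channels))
    flat_size = shapes[-1][0] * shapes[-1][1]
    return shapes, flat_size


# Hypothetical input with 78 features per sample.
shapes, flat_size = trace_shapes(78)
```

Under these assumptions the pointwise convolutions leave the length untouched and only the pooling layers shorten the sequence, which keeps the flattened vector, and therefore the first fully connected layer, small.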

4.4. Incremental Learning

In the part of incremental learning, this paper refers to the incremental learning framework [

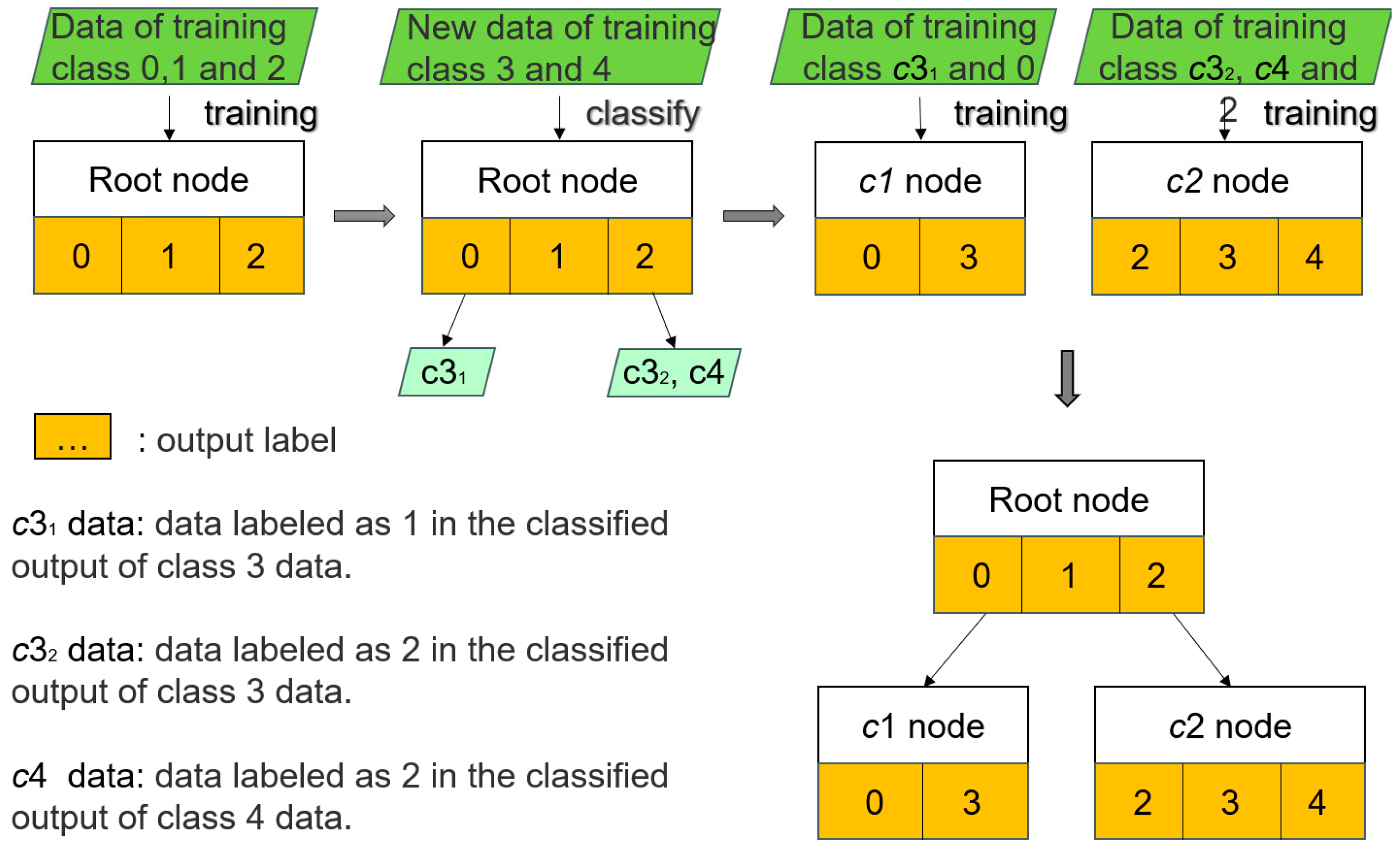

43] proposed by DATA and ARITSUGI, and improves upon it. Specifically, this paper selects 1D-CNN, which is more suitable for traffic data, as the benchmark classifier for model training and uses SMOTE technology to solve the class imbalance problem that may exist in the training data. This incremental learning method is also applied to the construction of Grid-IDS. The principle of the incremental learning part is shown in

Figure 5.

This study addresses catastrophic forgetting in incremental learning by employing a tree structure. The tree structure allows the model to add new classes at the leaf nodes of the existing model during each incremental learning phase, instead of retraining the entire network. By training only new data for each new class, the model retains the representations of previously learned classes, preventing forgetting. Compared to replay-based methods, the tree structure provides a more efficient solution.

In incremental learning, replay-based methods [44] mitigate catastrophic forgetting by storing historical data and continuously replaying it during subsequent learning stages. However, this approach has a significant drawback: as the dataset grows, past samples must be stored and repeatedly reused, which increases storage costs [45]. This issue becomes especially problematic for large-scale datasets and when class imbalance is severe. In contrast, the tree structure employed in this work learns new classes by performing local incremental updates and adding new nodes, avoiding the need to retrain the entire model. As a result, the tree structure reduces both storage and computational costs, providing a more efficient solution to catastrophic forgetting than traditional replay-based methods. Moreover, by maintaining independent nodes for each class and performing local incremental updates, the tree structure avoids the performance degradation typically caused by global updates in traditional incremental learning approaches. Therefore, the tree structure provides a more efficient and stable incremental learning mechanism, especially when dealing with concept drift and new attack types.

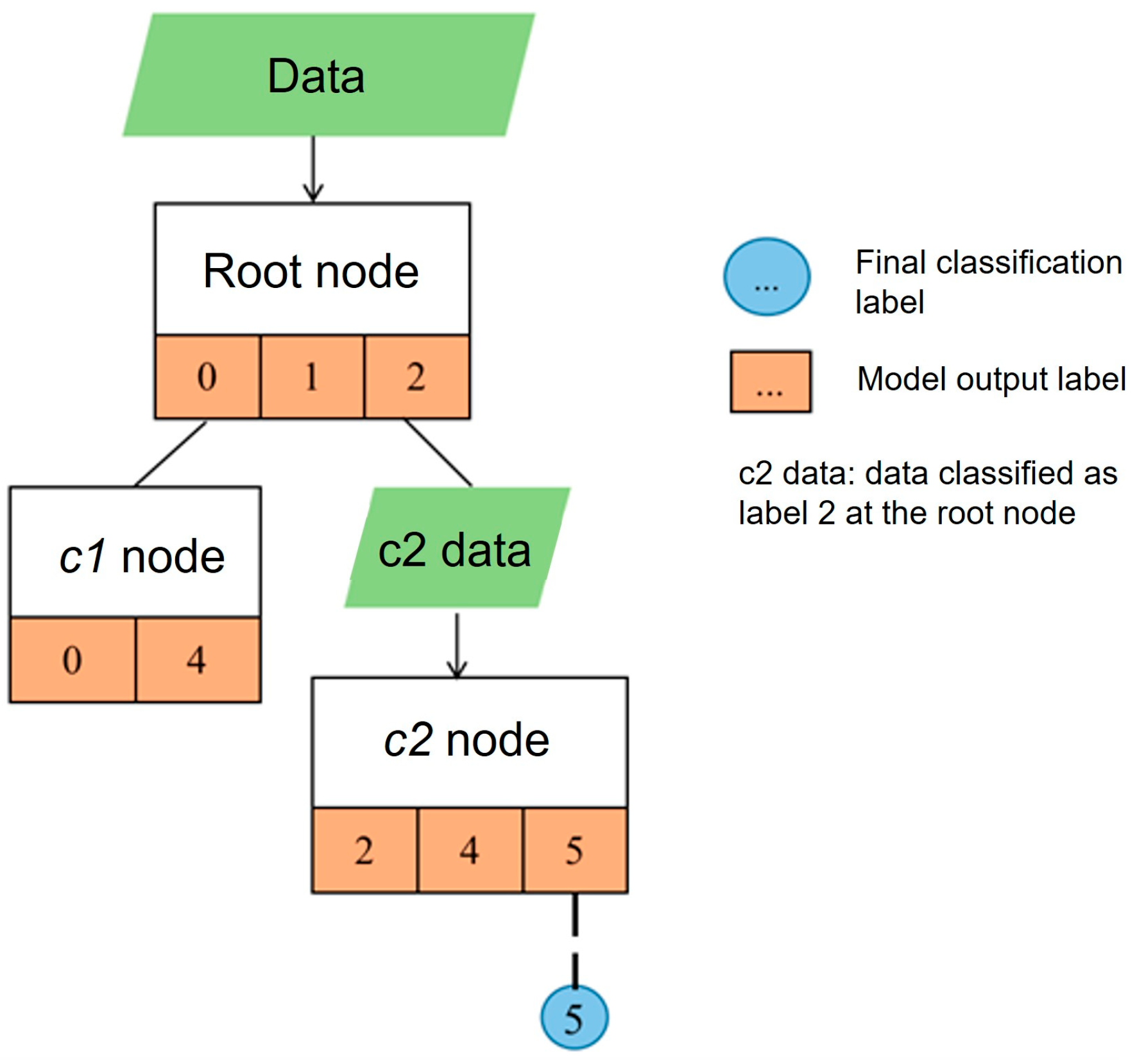

The most important part of the incremental learning method in this paper is the model node. The model node consists of two parts: the model and the model label mapping. The model classifies the input data into several labels, and these output labels are connected to other models through mapping. This mapping is realized by key-value pairs, where the output label is the key, and the value is the connected model or null, indicating that the label is not connected to any model.

Table 2 shows the implementation of model nodes.

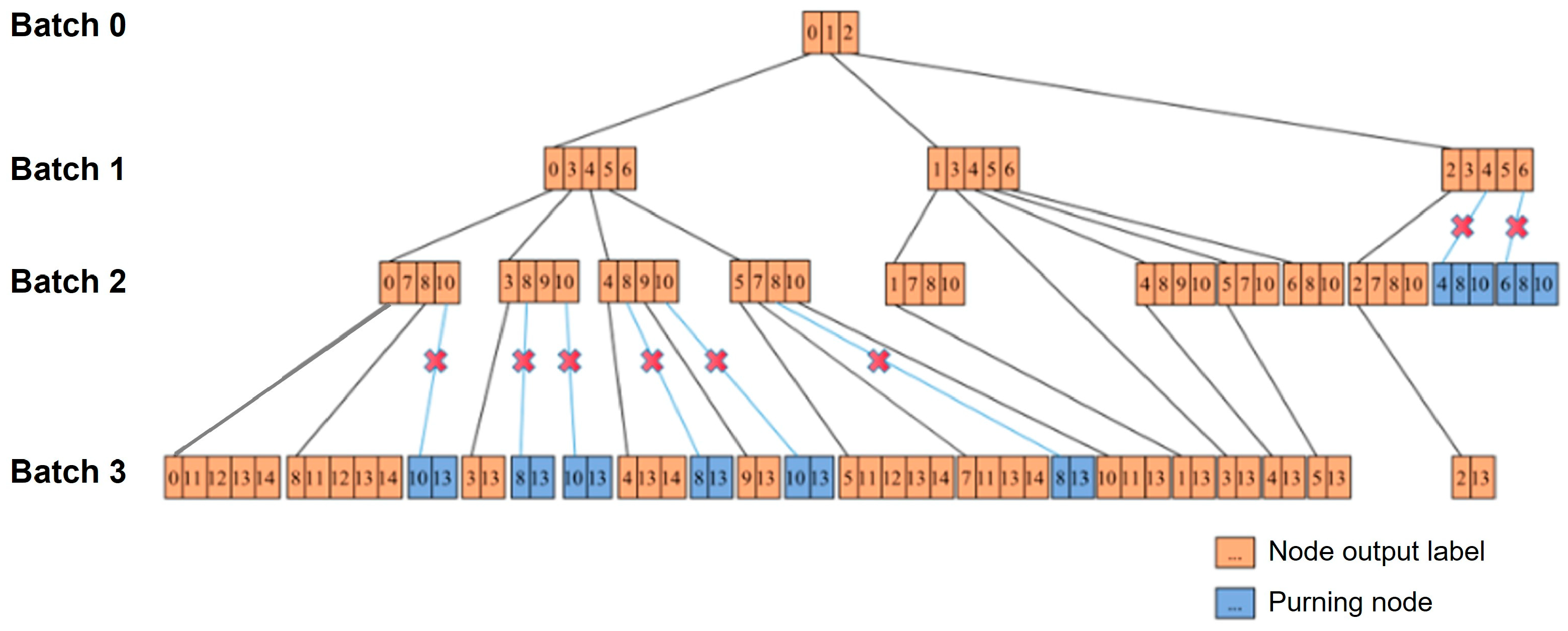
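The model node described above can be sketched as a small data structure. This is a minimal illustration, not the implementation in Table 2: the names `ModelNode`, `new_root`, `connect`, and `is_leaf_label` are hypothetical, and a plain callable stands in for the trained 1D-CNN.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Hashable, Optional, Sequence

Label = Hashable
Classifier = Callable[[Sequence[float]], Label]  # stand-in for a trained 1D-CNN


@dataclass
class ModelNode:
    """A classifier plus a label -> child mapping (None means 'not connected')."""
    model: Classifier
    label_map: Dict[Label, Optional["ModelNode"]] = field(default_factory=dict)

    @classmethod
    def new_root(cls, model: Classifier, labels: Sequence[Label]) -> "ModelNode":
        # A freshly trained node maps every output label to None (NULL).
        return cls(model=model, label_map={label: None for label in labels})

    def connect(self, label: Label, child: "ModelNode") -> None:
        # Attach a child model that refines samples predicted as `label`.
        if label not in self.label_map:
            raise KeyError(f"{label!r} is not an output label of this node")
        self.label_map[label] = child

    def is_leaf_label(self, label: Label) -> bool:
        # True when the label is final, i.e. not connected to any model.
        return self.label_map.get(label) is None


# Toy usage: threshold rules stand in for trained classifiers.
root = ModelNode.new_root(lambda x: 0 if x[0] < 0.5 else 1, labels=[0, 1])
child = ModelNode.new_root(lambda x: 0 if x[1] < 0.5 else 3, labels=[0, 3])
root.connect(0, child)
```

Storing the mapping as key-value pairs keeps the check "is this label final?" a single dictionary lookup, which is what the classification process relies on at every node.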

When input data needs to be classified, the root node of the original model is used for classification first, and whether to continue classification with child nodes or terminate is determined based on the mapping of the root node’s output labels. This process is similar to the decision tree algorithm. The following sections will introduce the specific details of the incremental process and classification process in the incremental learning method.

When the original training data is obtained, we build the first model using

Table 2, which will be the root node of the incremental tree. First, the original data is used to train the root node, and then the output labels of the root node are mapped to NULL to indicate that the current root is not connected to any child node.

As shown in

Figure 5, it is assumed that the initial training data includes three types of data with labels of

,

and

. We first use this dataset to train a 1D-CNN model as the root node of Grid-IDS. As the smart grid operates, new attack types may appear, such as classes

and

. When incremental learning is needed for these new classes, the specific steps are as follows:

Input the data of classes

and

into the root node classifier for classification. Because the root node classifier is trained based on old data, it will misclassify new data into one or more existing classes. For example, in the second part of

Figure 5, a part of data of class

is classified as

, and we mark this part of data as

; the other part is classified as

, labeled

; meanwhile, the data of class 4 are all classified as

and labeled

.

Retrain models using the partition produced above. As shown in the third part of

Figure 5,

classified as

is merged with the original class

data, and a new model is obtained by retraining. The

,

classified as

and the original class

data are merged and retrained to obtain another new model.

After retraining, the models form a tree structure, and each node consists of a 1D-CNN model, as shown in the fourth part of

Figure 5. When new data needs to be classified, it is first input to the root node for prediction. If the label output by the root node is not connected to any child node, then the label is the final classification result of the data; if the label is connected to another node, the data is passed to that node for prediction, and so on recursively until the predicted label is not connected to any node. For example, for the updated model, the input data

is fed into the root node for prediction. Assuming that the prediction result is

, since the label

is connected to another node

, the data

is put into

for prediction. If the prediction result is still

and label

is not connected to any node at this stage, then the category of data

is

.

The pseudo code of the incremental process is shown in

Table 3:

In addition, data screening is needed when training the new model, and the pseudo code of data screening is shown in

Table 4:

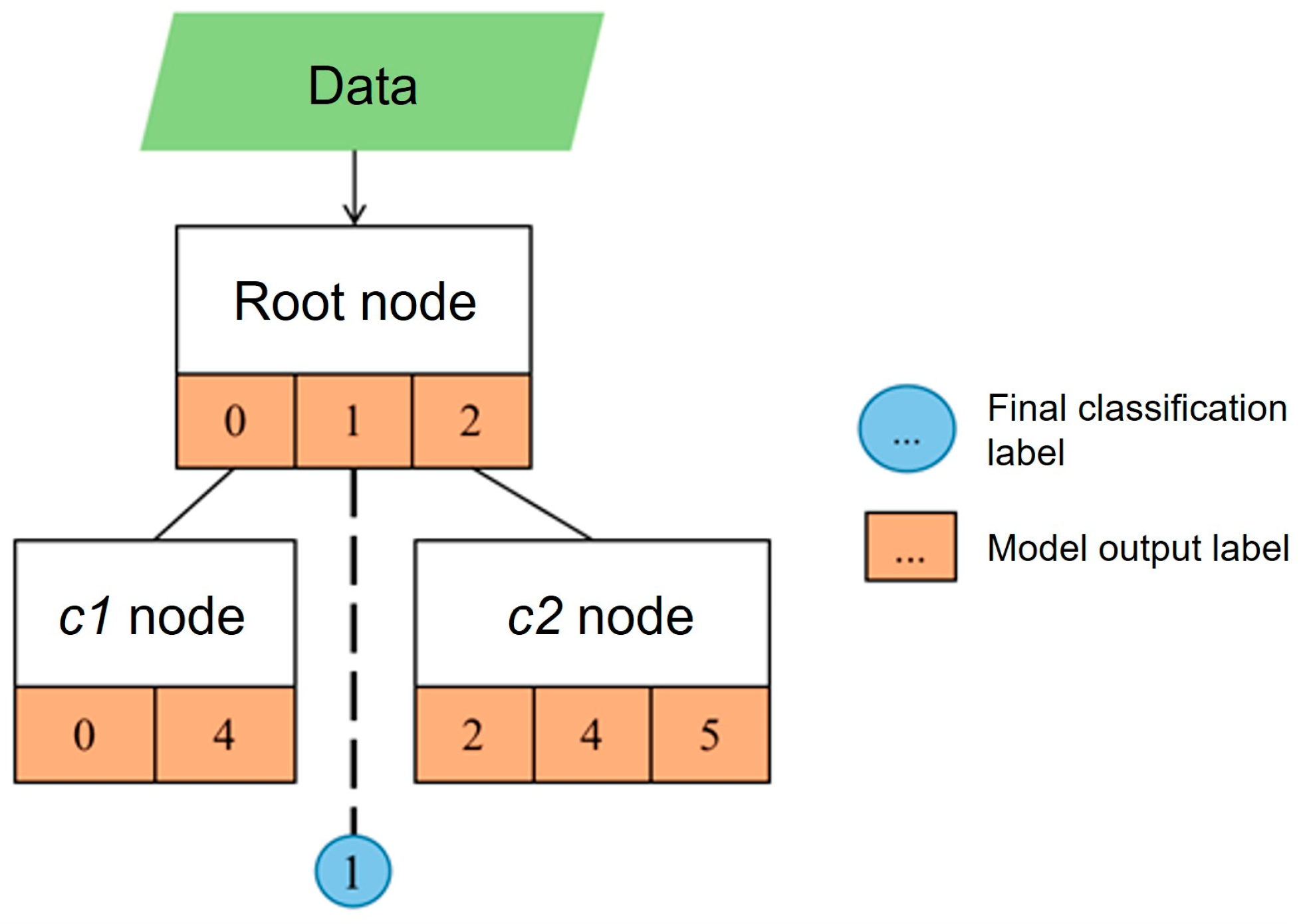
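One increment of the procedure above can be sketched as follows. This is an illustration under stated assumptions rather than the pseudocode of Tables 3 and 4: a nearest-centroid classifier (`CentroidModel`) replaces the 1D-CNN so the example runs without external libraries, the names `Node`, `add_classes`, and `classify` are hypothetical, the toy data are invented, and only a single increment at the root level is handled.

```python
from collections import defaultdict
from typing import Dict, List, Sequence

Sample = Sequence[float]


class CentroidModel:
    """Nearest-centroid classifier; a lightweight stand-in for the 1D-CNN."""

    def __init__(self, data: Dict[int, List[Sample]]):
        self.centroids = {
            label: [sum(col) / len(rows) for col in zip(*rows)]
            for label, rows in data.items()
        }

    def predict(self, x: Sample) -> int:
        def sq_dist(c):
            return sum((a - b) ** 2 for a, b in zip(x, c))
        return min(self.centroids, key=lambda lab: sq_dist(self.centroids[lab]))


class Node:
    def __init__(self, data: Dict[int, List[Sample]]):
        self.model = CentroidModel(data)
        self.children = {label: None for label in data}  # label -> Node or None


def add_classes(root: Node, old_data, new_data) -> None:
    """Route new-class samples through the root, then train one child per old
    label on (old samples of that label + new samples routed to that label)."""
    routed = defaultdict(lambda: defaultdict(list))  # old label -> new label -> rows
    for new_label, rows in new_data.items():
        for x in rows:
            routed[root.model.predict(x)][new_label].append(x)
    for old_label, parts in routed.items():
        root.children[old_label] = Node({old_label: old_data[old_label], **parts})


def classify(node: Node, x: Sample) -> int:
    label = node.model.predict(x)
    child = node.children[label]
    return label if child is None else classify(child, x)


# Toy data: three original classes, then two new classes (3 and 4).
old_data = {0: [(0, 0), (0, 1)], 1: [(5, 0), (5, 1)], 2: [(10, 0), (10, 1)]}
new_data = {3: [(1, 4), (1, 5), (4, 4), (4, 5)], 4: [(6, 4), (6, 5)]}
root = Node(old_data)
add_classes(root, old_data, new_data)
print(classify(root, (1, 4.5)), classify(root, (6, 4.5)), classify(root, (10, 0.5)))
# prints: 3 4 2
```

In this toy run, class 3 is split between root labels 0 and 1 and class 4 is routed entirely to label 1, so two child models are trained while label 2 stays unconnected and the root model itself is never retrained.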

4.5. Classification Process

After the incremental process, the model can classify both previously seen (old) types and newly added types. The classification is a recursive procedure. The first step is to classify the input at the root node and then check whether the output label is linked to another node. If the output label is linked to another node, the linked node is invoked recursively to classify the sample again, and this continues until the output label is not linked to any further node.

If the output label is not linked to any node, that label is taken as the final classification result. As shown in

Figure 6, the root node assigns the input to label

; because label

is not linked to any node, the final classification result is label

.

If the output label is linked to another node, recursive classification is required until the label reached is not linked to any node; that label is then the final result. As shown in

Figure 7, the root node assigns the input to label

; since label

is linked to node

, the samples classified as label

are recursively passed to node

for further classification. Node

assigns the input to label

; because label

is not linked to any node, the final classification result is label

.

In addition, the model may classify a given type of data via multiple nodes. As shown in

Figure 8, the root node assigns the input to labels

and

. At this point both label

and label

are linked to other nodes, so the data routed to label 0 are recursively classified at node

, yielding label

, and the data routed to label

are recursively classified at node

, also yielding label

. Since both instances of label

are not linked to any further node, the final classification result for this group of data is label

.
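The three cases above (an unlinked label, a single linked label, and one class reached through several nodes) follow from the same recursion, sketched below. The class `Node`, the function `classify`, the threshold rules, and the concrete label numbers are illustrative assumptions and do not come from Tables 5 to 7.

```python
from typing import Callable, Dict, List, Optional, Sequence, Tuple


class Node:
    """One tree node: a classifier and a label -> child mapping (None = unlinked)."""

    def __init__(self, model: Callable[[Sequence[float]], int],
                 links: Optional[Dict[int, Optional["Node"]]] = None):
        self.model = model
        self.links = links or {}


def classify(node: Node, x: Sequence[float]) -> Tuple[int, List[int]]:
    """Return the final label and the labels predicted along the path."""
    label = node.model(x)
    child = node.links.get(label)
    if child is None:                  # label not linked: final result
        return label, [label]
    final, path = classify(child, x)   # label linked: recurse into the child
    return final, [label] + path


# Hypothetical threshold rules stand in for trained classifiers.
node_a = Node(lambda x: 3 if x[1] > 0.5 else 0)   # refines root label 0
node_b = Node(lambda x: 3 if x[1] > 0.5 else 1)   # refines root label 1
root = Node(lambda x: 0 if x[0] < 0.5 else (1 if x[0] < 1.5 else 2),
            links={0: node_a, 1: node_b, 2: None})

print(classify(root, [2.0, 0.9]))  # (2, [2])     label not linked
print(classify(root, [0.2, 0.9]))  # (3, [0, 3])  one linked label
print(classify(root, [1.0, 0.9]))  # (3, [1, 3])  same class via another node
```

The length of the returned path equals the number of models evaluated for that input, so it is a direct proxy for per-sample inference cost.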

The pseudo code of the classification process is given in

Table 5.

During classification, data selection is required when connected nodes are traversed, and the pseudocode is shown in

Table 6.

In addition, the pseudocode of the function UpdateLabel, which updates the original classification result, is shown in

Table 7:

The classification process traverses nodes at different levels of the tree; thus, the inference time for one input equals the sum of per-node classification times along the path from root to leaf. As increments proceed, the tree can grow and reduce IDS throughput. We therefore employ two complementary measures: pruning and fallback full retraining when necessary.

(1) Pruning mechanism. For a node’s output label

, let

be the number of samples belonging to a new class

in the current increment, and

the number of those samples predicted as label

at this node. We define the misassignment ratio:

If the maximum misassignment ratio over all new classes is below the threshold,

, and the absolute count of new-class samples routed to label

is insufficient (

, to avoid unstable child nodes), we do not train a new child model for label

in this round (i.e., the branch is pruned). Concretely, as in

Figure 5, if the new class

has

samples and fewer than

are predicted as label

, then the child of label-0 is not trained in this increment. Importantly, the samples involved in pruning are not permanently discarded: they are buffered and revisited in later increments once their presence becomes significant (

) or their cumulative count reaches the minimum support (

). This lightweight rule limits the effective branching factor and average depth, thereby curbing latency growth while preserving accuracy.

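The ratio threshold controls whether the new-class presence at the label is significant enough to justify a new branch; the minimum-support threshold sets the smallest number of new-class samples needed to train a stable child node. A larger ratio threshold (or a larger minimum support) prunes more aggressively, reducing depth and latency but potentially causing a slight recall drop on rare classes; smaller values do the opposite.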
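The pruning decision can be written as a small predicate. The sketch below is one reading of the rule, not the authors' code: the names `should_train_child`, `tau` (ratio threshold), and `n_min` (minimum support) are hypothetical, the threshold values are invented for illustration, and the support is assumed to be the total number of new-class samples routed to the label.

```python
from typing import Dict


def should_train_child(routed: Dict[int, int], totals: Dict[int, int],
                       tau: float, n_min: int) -> bool:
    """Decide whether to grow a child under one output label of a node.

    routed[c]: new-class-c samples predicted as this label at this node.
    totals[c]: all new-class-c samples in the current increment.
    """
    ratios = [routed.get(c, 0) / n for c, n in totals.items() if n > 0]
    max_ratio = max(ratios, default=0.0)
    support = sum(routed.values())
    # Prune only when presence is insignificant AND support is insufficient.
    pruned = max_ratio < tau and support < n_min
    return not pruned


TAU, N_MIN = 0.05, 20   # illustrative values only
totals = {3: 1000}
print(should_train_child({3: 3}, totals, TAU, N_MIN))    # False (pruned)
print(should_train_child({3: 400}, totals, TAU, N_MIN))  # True  (ratio >= tau)
print(should_train_child({3: 30}, totals, TAU, N_MIN))   # True  (support >= n_min)

# Buffered samples are revisited: the cumulative count eventually reaches n_min.
# Holding the class total fixed across rounds is a simplification.
buffered, decisions = 0, []
for routed_now in (8, 8, 8):
    buffered += routed_now
    decisions.append(should_train_child({3: buffered}, totals, TAU, N_MIN))
print(decisions)  # [False, False, True]
```

Because the branch is kept when either condition holds, raising `tau` alone cannot prune a label that already has enough samples to train a stable child.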

If, despite pruning, the per-decision latency exceeds the real-time requirement of the smart grid scenario as increments accumulate, we trigger a full retraining on the aggregated dataset and then resume incremental updates on the refreshed model. With these mechanisms, learning new attack types reuses only a subset of the old data, which reduces training cost and mitigates catastrophic forgetting, enabling the model to adapt dynamically to emerging attacks while maintaining robust detection performance and practical real-time latency.

Let the output space consist of the benign (normal) class together with the set of attack types. For deployment, a binary alarm is obtained by collapsing all attack types into a single Attack label; otherwise we return the fine-grained label.
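The collapse from fine-grained labels to a binary alarm is a one-line mapping, sketched here under assumptions: the function name `deploy_label`, the `binary` switch, and the attack-type names are placeholders chosen for illustration, not labels taken from the paper.

```python
from typing import FrozenSet

# Placeholder attack-type names; the real set depends on the dataset in use.
ATTACK_TYPES: FrozenSet[str] = frozenset({"DoS", "PortScan", "Botnet"})


def deploy_label(predicted: str, binary: bool = True) -> str:
    """Collapse any attack type into 'Attack' in binary mode;
    otherwise return the fine-grained label unchanged."""
    if binary and predicted in ATTACK_TYPES:
        return "Attack"
    return predicted


print(deploy_label("PortScan"))                # Attack
print(deploy_label("PortScan", binary=False))  # PortScan
print(deploy_label("Normal"))                  # Normal
```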

6. Conclusions

Smart grid security is fundamental to a stable energy supply. This paper proposes Grid-IDS, an incremental intrusion detection system with three modules: data preprocessing, model training, and incremental learning. By introducing a tree-based incremental strategy, Grid-IDS monitors attacks in real time, learns new attack types while retaining prior knowledge, and mitigates catastrophic forgetting. On CICIDS2017, Grid-IDS attains an average detection accuracy of 99.65% and consistently outperforms baseline approaches including T-dfnn [

43], DNN-batch [

48], ImFace [

48], and Hoeffding Tree [

49]. On WUSTL-IIoT-2018, it maintains competitive accuracy and precision (comparable to Diaba et al. [

51] and higher than Ahakonye et al. [

52]) while achieving state-of-the-art F1 and perfect recall, indicating good generalization under heterogeneous traffic.

Despite these promising results, several limitations remain. First, our evaluation uses public datasets rather than live smart-grid traffic, which may constrain generalizability. Second, prolonged incremental updates could still degrade performance or increase computational overhead. Finally, although latency is acceptable in our testbed, real-time performance under resource-constrained deployments requires further validation.

Future work will integrate Grid-IDS with SCADA/PMU infrastructures to assess deployment feasibility, address concept drift in highly dynamic traffic, and incorporate multi-source data (e.g., device logs and control signals). We also plan to explore distributed or federated learning to enhance scalability, privacy, and adaptability, paving the way for real-world deployment in smart grids.