1. Introduction

The deployment of conditionally automated vehicles (SAE Level 2–3) poses new challenges to traffic safety, as drivers are no longer continuously engaged in vehicle control but must remain ready to intervene when automation reaches its operational limits [1,2,3]. In such contexts, effective driver state monitoring (DSM) is essential to detect distraction and ensure readiness for takeover, as delays in driver response may compromise safety [4,5,6].

Driver inattention recognition has been a subject of research for decades, with much of the earlier work targeting distraction during manual driving. A large proportion of these studies relied on driving performance measures, such as steering variability and lane deviation, or combined these measures with physiological and ocular indicators to infer workload and attention [7,8]. However, in conditionally automated driving, drivers are disengaged from continuous control, rendering performance-based indicators largely unavailable [9]. Accordingly, two main approaches have emerged to assess driver states: image-based methods and physiology-based methods [10,11].

Image-based approaches attempt to classify non-driving-related tasks (NDRTs) using in-vehicle cameras. Yang et al. developed a dual-camera monitoring system for detecting visual-related NDRTs in Level 3 automation, achieving 86.18% accuracy with a gaze-mapping algorithm and region-of-interest (ROI) optimization [12]. Yang et al. also proposed a lightweight temporal attention-based convolutional neural network (CNN) for low-latency inference on edge devices [13]. Ma et al. designed a lightweight Transformer with soft–hard feature constraints guided by visual priors, reaching 97.1% accuracy [14]. Knapik et al. combined far-infrared imaging with deep neural fusion modules to detect fatigue and distraction features such as yawning and head pose [15]. Shen et al. introduced StarDL-YOLO, improving distracted driving detection to 99.6% accuracy with fewer parameters and reduced computational load [16]. While such approaches are effective for detecting overt NDRTs (e.g., phone use, reading), they are limited in capturing internal cognitive distraction.

Physiological and ocular signals, in contrast, provide objective indicators of cognitive and visual processes that can reveal internal driver states [17,18]. Najafi et al. compared manual and automated driving using electroencephalography (EEG), electrocardiography (ECG), and electrodermal activity (EDA), showing higher engagement in manual driving and the utility of physiological signals as attention markers [19]. Yang et al. found that human-crafted glance features outperform machine-generated ones in manual driving but exhibit reduced sensitivity in Level 2 automation [20]. Lin et al. proposed HATNet, integrating temporal–spatial attention with calibration-based transfer learning, achieving up to 94.26% subject-dependent and 87.03% subject-independent accuracy [21]. Liu et al. introduced a diffusion-based encoder–decoder model for EEG-based driving behavior classification, achieving 83.65% accuracy and outperforming baselines through spectral features and synthetic data generation [22].

While prior studies demonstrate the promise of individual physiological or ocular modalities, systematic investigations of multimodal fusion under conditionally automated driving remain limited. To address this gap, the present study focuses on integrating physiological and ocular signals to capture both visual and cognitive distraction. The recently released TD2D dataset [23] was employed, providing synchronized multimodal signals from 50 participants across ten secondary tasks. A standardized preprocessing pipeline was developed to extract features from cardiovascular, electrodermal, and ocular modalities, and task labels were reassigned based on subjective workload ratings to better reflect cognitive demand. This design enables the classification of internal states such as cognitive distraction. The remainder of this paper is organized as follows:

Section 2 describes the dataset, preprocessing pipeline, feature extraction, and experimental design;

Section 3 presents the classification results, including feature selection, model comparison, modality contributions, temporal window analysis, and within versus cross-subject evaluation; and

Section 4 concludes with key findings and implications.

The main contributions of this study are summarized as follows: (1) a multimodal distraction detection framework integrating physiological and ocular signals under conditionally automated driving is proposed, enabling the detection of both visual and cognitive distraction; (2) a workload-based task reassignment strategy is introduced to re-categorize ten secondary tasks into three representative conditions, supported by statistical validation; (3) a comprehensive evaluation of temporal windowing and overlap settings is conducted, providing quantitative evidence for optimal feature extraction configurations in multimodal DSM; and (4) the cross-subject generalization challenge is highlighted, and potential solutions are discussed to provide practical insights for real-world deployment.

In summary, this study advances driver state monitoring in conditionally automated driving by introducing a workload-based task reassignment strategy and integrating ocular and physiological modalities for more accurate and interpretable distraction detection.

2. Methodology

2.1. Dataset Description

This study focuses on conditionally automated driving (SAE Level 2–3) in highway-like scenarios, where drivers were disengaged from continuous vehicle control but required to monitor the driving environment and remain ready for takeover. During the experiments, participants performed various secondary tasks (e.g., N-back, reading, audiobook) while the automation controlled longitudinal and lateral maneuvers. The TD2D dataset, collected by Hwang et al., provides synchronized multimodal recordings in such scenarios, enabling systematic evaluation of distraction states. The dataset was chosen because it uniquely combines takeover performance data with synchronized ocular and physiological signals across a broad range of secondary-task conditions. Its relatively large sample size, balanced demographics, and ecological validity of secondary tasks make it particularly suitable for multimodal driver state monitoring.

The dataset comprises 50 licensed drivers (25 female, 25 male) spanning five age groups (20s–60s, mean age ≈ 43.8 years), ensuring balanced sex distribution and broad demographic coverage. Each participant completed ten scenarios corresponding to distinct task conditions.

Scenarios followed a standardized L2 driving script: the ego vehicle cruised at 50 km/h in the right lane, maintaining a fixed 25 m headway behind a lead vehicle. A critical takeover event occurred at a random time between 50 s and 130 s (M = 90.9, SD = 18.2), triggered by either (i) a pedestrian jaywalking from the sidewalk or (ii) the lead vehicle’s sudden stop. The event was designed with a time-to-collision (TTC) of 2 s, requiring rapid takeover to avoid collision. Each scenario terminated after the driver’s intervention was recorded, ensuring consistent outcome measures across conditions.

2.2. Instrumentation and Signals

TD2D provides multimodal data streams synchronized via the Network Time Protocol (NTP), ensuring precise temporal alignment across all modalities. The dataset includes the following components:

Ocular signals from Pupil Labs’ Pupil Core headset (Pupil Labs GmbH, Berlin, Germany)—two eye cameras (200 Hz, 192 × 192) and one scene camera (60 Hz, 1280 × 720)—processed to derive pupil diameter, gaze angles/positions, and fixations;

Physiological signals from Polar H10 (Polar Electro Oy, Kempele, Finland) (ECG at 130 Hz; heart rate at 1 Hz) and Empatica E4 (Empatica Inc., Boston, MA, USA) (PPG/BVP at 64 Hz; EDA at 4 Hz);

Performance and workload measures, including takeover reaction time (from event onset to first control input) and takeover success (binary), and post-trial NASA-TLX workload ratings.

TD2D includes ten task conditions designed to elicit modality-specific distraction: one baseline with no secondary task; three visual tasks (e-book reading, visual texting, and a number-guessing game with on-screen interaction); three cognitive tasks (0-, 1-, and 2-back); and three auditory naturalistic tasks (audiobook listening, voice-based texting, and a voice-based number-guessing game).

The TD2D dataset was obtained from its official public repository on Zenodo (DOI: 10.5281/zenodo.14185964) under an open-access license on 15 July 2025. The dataset is distributed as CSV files containing synchronized physiological signals and workload/event metadata, along with MP4 recordings of eye videos.

2.3. Preprocessing and Feature Extraction

The raw TD2D dataset contains multimodal signals with heterogeneous sampling rates and asynchronous timestamps. To prepare the data for analysis, a unified preprocessing pipeline, as illustrated in Figure 1, was implemented, followed by systematic feature extraction to obtain robust representations of driver distraction states. Data preprocessing and analysis were implemented in Python 3.8 using PyTorch 2.4.1, NumPy 1.24.4, Pandas 2.0.3, and scikit-learn 1.3.2.

In this study, it was assumed that the automation provided reliable control of longitudinal and lateral maneuvers, ensuring that distraction was induced primarily by secondary tasks rather than by vehicle handling demands. All signals were resampled to 64 Hz to achieve synchronization across modalities, under the assumption that this sampling rate preserved relevant temporal dynamics while enabling multimodal integration. These assumptions allowed the experimental design to focus specifically on the detection of visual and cognitive distraction under conditionally automated driving.

2.3.1. Signal Resampling and Interpolation

The original dataset employed heterogeneous sampling rates: ECG (130 Hz), PPG (64 Hz), pupil diameter (200 Hz), gaze (200 Hz), EDA (4 Hz), and fixations (event-based). To enable synchronized feature extraction, all continuous signals were resampled to a common rate of 64 Hz using linear interpolation. This frequency matches the native PPG sampling, represents a practical compromise between low-frequency EDA and high-frequency ocular streams, and remains sufficient to capture the dynamics of pupil and gaze measures (<30 Hz).

For consistency, EDA was upsampled to 64 Hz (without adding new information but ensuring temporal alignment), while ocular signals were anti-aliased before downsampling to minimize spectral distortion. A supplementary validation using each channel’s native rate showed negligible performance differences (<2%), confirming that the unified 64 Hz representation preserved model fidelity without introducing bias.
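A minimal sketch of the linear-interpolation step is given below, under stated assumptions: the function name `resample_linear` and the toy 4 Hz ramp are illustrative only, values outside the recorded range are held constant, and only the Python standard library is used. The anti-aliasing filter applied before downsampling the 200 Hz ocular streams is not shown.

```python
from bisect import bisect_right

def resample_linear(times, values, rate_hz, t_start, t_end):
    """Linearly interpolate (times, values) onto a uniform grid at rate_hz."""
    n = int((t_end - t_start) * rate_hz) + 1
    grid = [t_start + k / rate_hz for k in range(n)]
    out = []
    for t in grid:
        j = bisect_right(times, t)          # index of first sample after t
        if j == 0:
            out.append(values[0])           # before first sample: hold value
        elif j == len(times):
            out.append(values[-1])          # at/after last sample: hold value
        else:
            t0, t1 = times[j - 1], times[j]
            w = (t - t0) / (t1 - t0)        # interpolation weight in [0, 1)
            out.append(values[j - 1] + w * (values[j] - values[j - 1]))
    return grid, out

# Toy EDA-like ramp sampled at 4 Hz for 1 s, upsampled to 64 Hz.
eda_t = [k / 4 for k in range(5)]           # 0.0, 0.25, ..., 1.0
eda_v = [0.0, 1.0, 2.0, 3.0, 4.0]
grid, eda_64 = resample_linear(eda_t, eda_v, 64, 0.0, 1.0)
```

Upsampling in this way adds intermediate points along straight lines between the original EDA samples, which is consistent with the remark that no new information is introduced.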

2.3.2. Fixation Data Transformation

Fixation information in the original dataset was stored as event-based annotations (onset, duration, dispersion). These were transformed into continuous time series at 64 Hz to match other modalities. During fixation intervals, corresponding spatial and temporal descriptors were assigned to each sampled time point, and a binary fixation flag was introduced to distinguish fixation from non-fixation periods. This conversion enabled fixation dynamics to be analyzed alongside continuous physiological and ocular signals.
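The event-to-series conversion can be sketched as follows. This is an illustration under assumptions rather than the exact procedure: the field names (`onset`, `duration`, `dispersion`) mirror the annotation types listed above, the helper `fixations_to_series` is hypothetical, and filling non-fixation samples with NaN is one possible convention that the text does not specify.

```python
import math

def fixations_to_series(events, n_samples, rate_hz=64):
    """Expand fixation events into per-sample flag and dispersion series."""
    flag = [0] * n_samples
    dispersion = [math.nan] * n_samples     # NaN outside fixations (assumed)
    for ev in events:
        first = max(0, math.ceil(ev["onset"] * rate_hz))
        end_t = ev["onset"] + ev["duration"]
        last = min(n_samples, math.floor(end_t * rate_hz) + 1)
        for k in range(first, last):
            flag[k] = 1                     # sample k lies inside a fixation
            dispersion[k] = ev["dispersion"]
    return flag, dispersion

# One hypothetical fixation from 0.25 s to 0.75 s within a 1 s trial.
events = [{"onset": 0.25, "duration": 0.5, "dispersion": 1.2}]
flag, disp = fixations_to_series(events, n_samples=64)
fixation_ratio = sum(flag) / len(flag)      # proportion of fixation time
```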

2.3.3. Temporal Synchronization

Although all signals were timestamped via NTP, minor offsets occurred due to differences in sensor initialization. To ensure consistency, the latest common start time across modalities was aligned as the trial onset, and the earliest common end time was set as the trial termination. This alignment guaranteed full overlap across modalities, thereby preserving the integrity of multimodal feature extraction.
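The alignment rule (latest common start, earliest common end) can be expressed compactly. The sketch below assumes each stream is available as a sorted list of timestamps in seconds; the function names and the example timestamps are hypothetical.

```python
def common_interval(streams):
    """streams: dict mapping stream name -> sorted list of timestamps (s)."""
    start = max(ts[0] for ts in streams.values())    # latest common start
    end = min(ts[-1] for ts in streams.values())     # earliest common end
    if start >= end:
        raise ValueError("streams do not overlap in time")
    return start, end

def crop(timestamps, values, start, end):
    """Keep only the samples that fall inside the common interval."""
    return [(t, v) for t, v in zip(timestamps, values) if start <= t <= end]

# Hypothetical sensor start and stop times for one trial.
streams = {
    "ecg": [0.0, 5.0, 10.0],
    "eda": [0.5, 4.5, 9.0],
    "gaze": [0.2, 5.2, 9.8],
}
start, end = common_interval(streams)
ecg_cropped = crop(streams["ecg"], ["a", "b", "c"], start, end)
```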

2.3.4. Final Dataset Structure

After preprocessing, each participant contributed up to ten trials, with synchronized signals across 10 channels: ECG, PPG, pupil diameter, gaze angle X/Y, EDA, fixation angle X/Y, fixation dispersion, and fixation flag. Quality control removed incomplete or corrupted sessions, yielding 444 valid trials (88.8% of the original 500).

At the task level, the numbers of retained trials were: 0-back (45), 1-back (43), 2-back (42), audiobook listening (46), auditory gaming (47), auditory texting (44), baseline (42), e-book reading (45), gaming (48), and texting (42). Excluded trials were primarily due to missing or corrupted signals. At the modality level, losses were largely caused by complete session failures (e.g., gaze missing in 57 trials, and ECG, PPG, EDA, pupil diameter, and fixation streams each missing in 56 trials). Trials with missing modalities were discarded entirely, and no interpolation was applied to reconstruct long gaps.

The final dataset comprised 2,584,261 time points, equivalent to 673 min (11.2 h) of synchronized multimodal data, providing sufficient statistical power for subsequent feature extraction and analysis.

2.3.5. Feature Extraction

To characterize driver states, multimodal features were extracted from synchronized physiological and ocular signals using a sliding-window approach. Common statistical descriptors, including standard deviation, mean, maximum, minimum, range, skewness, kurtosis, and coefficient of variation, were computed across relevant modalities to capture autonomic, sympathetic, and cognitive indicators of visual distraction, cognitive distraction, and normal attentive states. The feature set is derived from the following modalities:

ECG. Heart rate variability (HRV) metrics were calculated, including mean RR interval (average time between heartbeats), standard deviation of normal to normal intervals (SDNN), root mean square of successive differences (RMSSD), and mean heart rate. These features reflect cardiovascular responses to attentional states.

EDA. Twelve features were extracted, including statistical descriptors and four rate-of-change indices (mean and standard deviation of first-order differences, mean of positive changes, mean of negative changes). These capture sympathetic arousal and skin conductance dynamics.

PPG. Eight statistical descriptors were computed, characterizing cardiovascular variability complementary to ECG.

Ocular Features.

Gaze Features. Sixteen descriptors were derived from horizontal and vertical gaze angles, capturing spatial gaze dynamics related to attention allocation.

Pupil Features. Eight descriptors were computed, indexing cognitive load and attentional engagement.

Fixation-Derived Features. Twenty-five features were extracted from fixation angles (X/Y) and fixation dispersion, along with the normalized proportion of fixation time (sum of binary fixation flags per window). These quantify fixation stability and attentional focus.

In total, the final feature space comprised 73 features per temporal window, integrating cardiovascular, electrodermal, and ocular modalities (see Table 1).
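The window-level computations described above can be sketched as follows. This is a minimal illustration, not the exact feature code: the function names are hypothetical, the population standard deviation and excess kurtosis are assumed conventions, and mean heart rate is assumed to be derived from the mean RR interval.

```python
import math
import statistics as st

def descriptors(x):
    """The eight statistical descriptors named in the text."""
    n, mean, std = len(x), st.fmean(x), st.pstdev(x)
    m3 = sum((v - mean) ** 3 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    return {
        "mean": mean, "std": std, "max": max(x), "min": min(x),
        "range": max(x) - min(x),
        "skewness": m3 / std ** 3 if std else 0.0,
        "kurtosis": m4 / std ** 4 - 3.0 if std else 0.0,   # excess kurtosis
        "cv": std / mean if mean else 0.0,
    }

def rate_of_change(x):
    """The four first-difference indices described for EDA."""
    d = [b - a for a, b in zip(x, x[1:])]
    pos = [v for v in d if v > 0]
    neg = [v for v in d if v < 0]
    return {
        "diff_mean": st.fmean(d), "diff_std": st.pstdev(d),
        "pos_mean": st.fmean(pos) if pos else 0.0,
        "neg_mean": st.fmean(neg) if neg else 0.0,
    }

def hrv(rr_ms):
    """HRV metrics from a list of RR intervals in milliseconds."""
    succ = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    mean_rr = st.fmean(rr_ms)
    return {
        "mean_rr": mean_rr,
        "sdnn": st.stdev(rr_ms),
        "rmssd": math.sqrt(st.fmean([v * v for v in succ])),
        "mean_hr": 60000.0 / mean_rr,       # beats per minute
    }

def sliding_windows(x, length, overlap):
    """Split x into windows of `length` samples with fractional overlap."""
    step = round(length * (1 - overlap))
    return [x[i:i + length] for i in range(0, len(x) - length + 1, step)]

# Feature counts per modality as listed in the text; they sum to 73.
FEATURE_COUNTS = {"ecg": 4, "eda": 12, "ppg": 8,
                  "gaze": 16, "pupil": 8, "fixation": 25}
```

For example, a 10 s signal at 64 Hz (640 samples) split into 5 s windows with 50% overlap yields three windows, starting at samples 0, 160, and 320.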

2.3.6. Normalization and Validation

To address inter-individual variability, subject-specific z-score normalization was applied using baseline (no-task) trials as reference, with parameters learned only from the training subjects. This strategy preserved intra-individual dynamics while ensuring comparability across participants and avoided information leakage from the test data.
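A minimal sketch of baseline-referenced z-scoring for a single subject is shown below. The function name, the small constant `eps` guarding against zero variance, and the use of the population standard deviation are assumptions made for illustration.

```python
import statistics as st

def baseline_zscore(windows, baseline_windows, eps=1e-8):
    """Z-score one subject's feature vectors against that subject's baseline.

    windows, baseline_windows: lists of equal-length feature vectors.
    """
    n_feat = len(baseline_windows[0])
    mu = [st.fmean([w[j] for w in baseline_windows]) for j in range(n_feat)]
    sd = [st.pstdev([w[j] for w in baseline_windows]) for j in range(n_feat)]
    return [[(w[j] - mu[j]) / (sd[j] + eps) for j in range(n_feat)]
            for w in windows]

# Two hypothetical baseline windows and one task window with two features.
baseline = [[1.0, 10.0], [3.0, 14.0]]       # means (2, 12), stds (1, 2)
task = [[4.0, 12.0]]
z = baseline_zscore(task, baseline)
```

Because the reference statistics come only from the subject's own no-task trials, a value of zero means "at this driver's baseline level" regardless of absolute signal amplitude.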

2.4. Label Reassignment and Workload-Based Classification

To systematically evaluate driver distraction states and ensure ecological validity, the ten secondary task conditions were reorganized into three primary categories based on both task modality and perceived workload ratings from the NASA-TLX questionnaire. This label reassignment approach aligns with established driver distraction taxonomy [24,25].

The original ten task conditions were consolidated into three classes based on workload assessment and task modality:

Focused Driving (Baseline): Comprising the baseline condition (no secondary task), audiobook listening, and 0-back task, representing low cognitive load conditions that approximate normal driving states. Audiobook and 0-back were merged with the baseline category because their NASA-TLX workload scores were statistically close to the no-task condition, confirming that they impose minimal additional cognitive demand.

Cognitive Distraction: Including 1-back and 2-back tasks, auditory gaming, and auditory texting. These tasks primarily engage working memory and cognitive processing without requiring visual attention shifts from the driving scene.

Visual Distraction: Encompassing e-book reading, visual texting, and gaming tasks that require sustained visual attention to secondary displays, potentially compromising road monitoring and hazard detection.
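The reassignment amounts to a lookup from task condition to class. The sketch below uses hypothetical string keys for the tasks and classes; the per-task trial counts are those reported in Section 2.3.4, so the resulting class sizes follow directly from the mapping.

```python
from collections import Counter

# Task -> class mapping as described in the text (key names are illustrative).
TASK_TO_CLASS = {
    "baseline": "focused", "audiobook": "focused", "0-back": "focused",
    "1-back": "cognitive", "2-back": "cognitive",
    "auditory_gaming": "cognitive", "auditory_texting": "cognitive",
    "ebook": "visual", "texting": "visual", "gaming": "visual",
}

# Retained trials per task, as reported in Section 2.3.4.
TRIALS = {
    "0-back": 45, "1-back": 43, "2-back": 42, "audiobook": 46,
    "auditory_gaming": 47, "auditory_texting": 44, "baseline": 42,
    "ebook": 45, "gaming": 48, "texting": 42,
}

class_counts = Counter()
for task, n in TRIALS.items():
    class_counts[TASK_TO_CLASS[task]] += n
```

Under this mapping the three classes contain 133, 176, and 135 trials, respectively, so the cognitive class is moderately larger than the other two.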

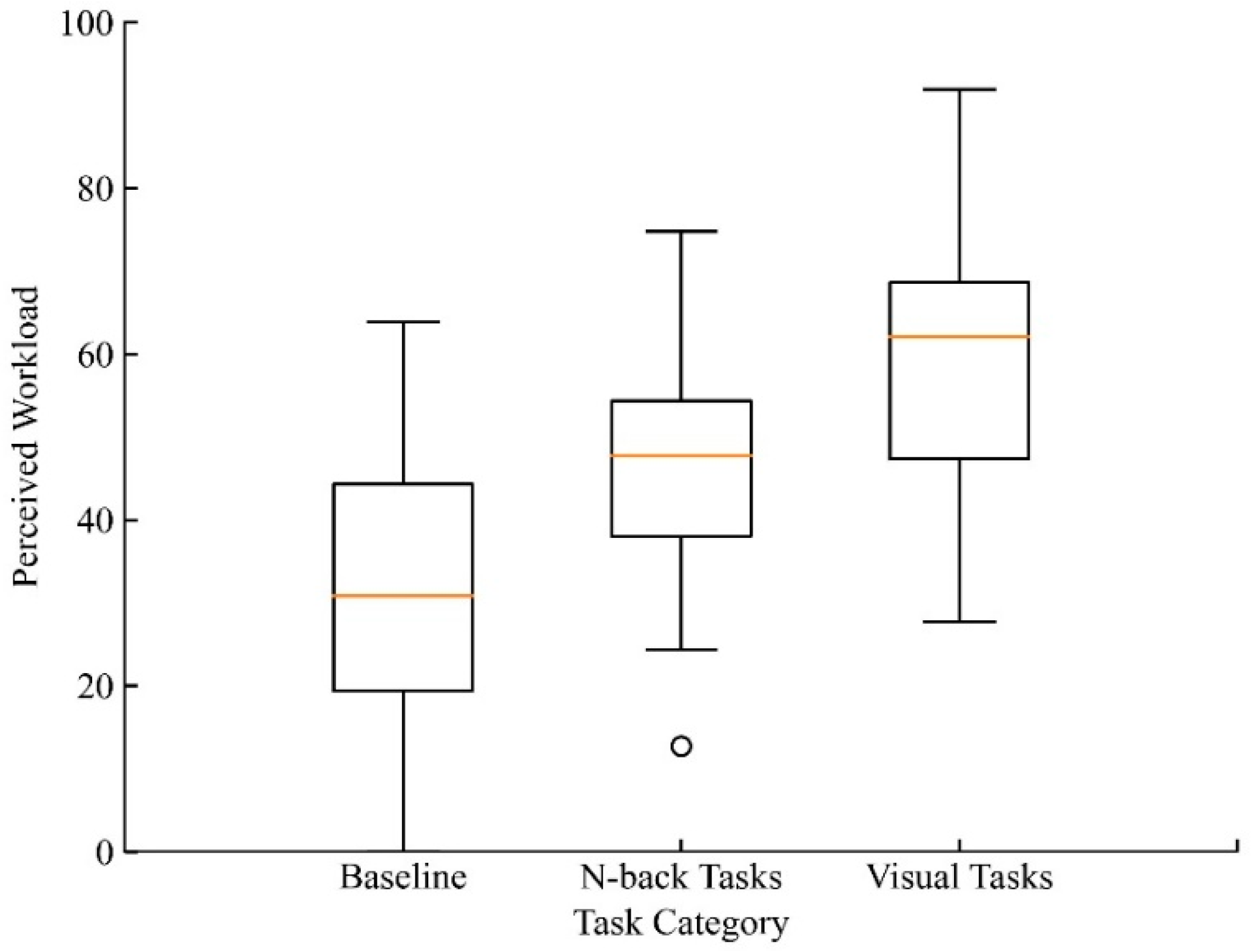

The NASA-TLX ratings were analyzed to validate the proposed label reassignment scheme using a repeated measures analysis of variance (ANOVA). Mauchly’s test of sphericity indicated that the sphericity assumption was violated (W = 0.274, p < 0.001). Therefore, Greenhouse–Geisser correction was applied to the degrees of freedom. The repeated measures ANOVA revealed a significant effect of task condition on perceived workload (F(2, 98) = 101.48, p < 0.001).

Figure 2 presents the distribution of workload ratings across conditions. Bonferroni-corrected post hoc comparisons showed that cognitive distraction tasks (M = 47.27, SD = 13.65) elicited significantly higher workload ratings than baseline (M = 31.16, SD = 16.34) (p < 0.001). Visual distraction tasks (M = 60.26, SD = 13.85) produced the highest workload, significantly exceeding both baseline (p < 0.001) and cognitive distraction conditions (p < 0.001). Importantly, audiobook listening (M = 38.28, SD = 18.95) and the 0-back task (M = 33.37, SD = 21.18) did not significantly differ from baseline (p > 0.05), although both were significantly lower than the 1-back, 2-back, and visual distraction tasks (all p < 0.01). These findings provide strong statistical justification for reassigning audiobook and 0-back into the baseline category.

Overall, the progressive increase in workload from baseline to cognitive distraction to visual distraction establishes a clear hierarchical pattern, thereby supporting the validity of the three-class taxonomy and reflecting meaningful differences in cognitive and visual resource demands imposed by secondary tasks. Such a categorization is not only theoretically meaningful but also practically relevant, as different levels of workload may exert distinct impacts on takeover safety. Consequently, the proposed taxonomy can serve as a foundation for designing adaptive takeover warning systems in conditionally automated driving.

2.5. Classification Framework

2.5.1. Classification Methods

Three representative machine learning models were implemented to classify the three driver states using the established dataset.

Random Forest (RF). A tree-based ensemble model chosen as the primary classifier due to its robustness against noisy features, ability to capture nonlinear dependencies, and built-in feature importance ranking. In this study, the RF model was configured with 300 trees, unrestricted maximum depth, and a fixed random seed.

Multilayer Perceptron (MLP). A feedforward neural network with two hidden layers (64 and 32 units) was used to examine whether nonlinear feature interactions could be better captured through learned representations. The MLP employed ReLU activations, Adam optimizer, and a maximum of 500 training iterations, with random initialization controlled by the same random seed as in the RF model.

Support Vector Machine (SVM). A classical margin-based classifier adopted as a baseline due to its effectiveness on high-dimensional, small-sample datasets. The SVM used a radial basis function (RBF) kernel with penalty parameter C = 1.0, kernel coefficient gamma = ‘scale’. The random seed was set identically to ensure consistency across models.

To ensure fairness, all models were trained and evaluated under identical preprocessing procedures, including the same temporal windowing, baseline-based normalization, and data splits, without applying any additional augmentation.
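The stated hyperparameters can be collected in a single configuration, sketched below. The keyword names follow the scikit-learn constructors `RandomForestClassifier`, `MLPClassifier`, and `SVC`; the seed value 42 is a placeholder, since the text only states that a fixed seed was shared across models, and the helper `build_models` is hypothetical.

```python
SEED = 42  # placeholder: the actual seed value is not reported

MODEL_PARAMS = {
    "RandomForestClassifier": {
        "n_estimators": 300, "max_depth": None, "random_state": SEED,
    },
    "MLPClassifier": {
        "hidden_layer_sizes": (64, 32), "activation": "relu",
        "solver": "adam", "max_iter": 500, "random_state": SEED,
    },
    "SVC": {
        "kernel": "rbf", "C": 1.0, "gamma": "scale", "random_state": SEED,
    },
}

def build_models(params=MODEL_PARAMS):
    """Instantiate the three estimators (requires scikit-learn)."""
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.neural_network import MLPClassifier
    from sklearn.svm import SVC
    classes = {
        "RandomForestClassifier": RandomForestClassifier,
        "MLPClassifier": MLPClassifier,
        "SVC": SVC,
    }
    return {name: classes[name](**kw) for name, kw in params.items()}
```

Keeping the parameters in one dictionary makes it straightforward to confirm that every model receives the same seed and that no model-specific preprocessing is introduced.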

2.5.2. Evaluation Metrics

To comprehensively evaluate the classification performances of different methods, the following metrics were adopted.

1. Accuracy

As illustrated in Equation (1), accuracy is the proportion of correctly classified samples among all samples. Accuracy reflects overall performance but may be biased toward majority classes in imbalanced datasets.

$$\mathrm{Accuracy} = \frac{1}{N}\sum_{i=1}^{C} TP_i \tag{1}$$

where $TP_i$ is the number of correctly classified samples in class $i$, $C$ is the total number of classes, and $N$ is the total number of samples.

2. Macro-averaged Recall

Recall for each class is defined as Equation (2) below.

$$\mathrm{Recall}_i = \frac{TP_i}{TP_i + FN_i} \tag{2}$$

where $FN_i$ is the number of false negatives for class $i$.

The macro-averaged recall, denoted as $\mathrm{Recall}_{\mathrm{macro}}$, is then computed as Equation (3).

$$\mathrm{Recall}_{\mathrm{macro}} = \frac{1}{C}\sum_{i=1}^{C} \mathrm{Recall}_i \tag{3}$$

Unlike micro-averaged recall, which is dominated by the majority class, the macro-average treats all classes equally by first computing recall per class and then averaging. This ensures that minority classes receive equal importance, making the metric more appropriate for imbalanced datasets and for evaluating overall model generalization across all driver-state categories.

3. Macro-averaged F1-score (F1)

For each class, F1 is the harmonic mean of precision and recall.

$$\mathrm{Precision}_i = \frac{TP_i}{TP_i + FP_i}, \qquad \mathrm{F1}_i = \frac{2 \cdot \mathrm{Precision}_i \cdot \mathrm{Recall}_i}{\mathrm{Precision}_i + \mathrm{Recall}_i}$$

where $FP_i$ is the number of false positives for class $i$.

The macro-averaged F1-score is then calculated as in the equation below.

$$\mathrm{F1}_{\mathrm{macro}} = \frac{1}{C}\sum_{i=1}^{C} \mathrm{F1}_i$$

Macro-F1 balances precision and recall across all classes while giving each class equal weight. This is particularly important in multi-class driver state classification, where both false negatives and false positives in minority classes need to be penalized equally to ensure fair and robust evaluation.
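As a worked illustration, the three metrics can be computed directly from a confusion matrix. The sketch below uses a hypothetical three-class matrix (rows are true classes, columns are predicted classes); the function name and the numbers are illustrative and are not results from this study.

```python
def macro_metrics(cm):
    """cm[i][j]: number of samples of true class i predicted as class j."""
    c = len(cm)
    n = sum(sum(row) for row in cm)
    tp = [cm[i][i] for i in range(c)]
    fn = [sum(cm[i]) - tp[i] for i in range(c)]                  # row minus TP
    fp = [sum(cm[r][i] for r in range(c)) - tp[i] for i in range(c)]
    recall = [tp[i] / (tp[i] + fn[i]) if tp[i] + fn[i] else 0.0
              for i in range(c)]
    precision = [tp[i] / (tp[i] + fp[i]) if tp[i] + fp[i] else 0.0
                 for i in range(c)]
    f1 = [2 * p * r / (p + r) if p + r else 0.0
          for p, r in zip(precision, recall)]
    return {
        "accuracy": sum(tp) / n,
        "macro_recall": sum(recall) / c,
        "macro_f1": sum(f1) / c,
    }

# Hypothetical confusion matrix for the three driver states.
cm = [[8, 1, 1],
      [2, 6, 2],
      [0, 2, 8]]
scores = macro_metrics(cm)
```

Here 22 of 30 samples lie on the diagonal, the per-class recalls are 0.8, 0.6, and 0.8, and the per-class F1 values are 0.8, 12/19, and 16/21, so the macro-averaged F1 is slightly lower than the accuracy.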

2.5.3. Dataset Partitioning Strategies

Two partitioning strategies were employed to evaluate both within-subject performance and cross-subject generalization.

1. Within-subject

To avoid potential information leakage caused by overlapping temporal windows, each trial was first segmented into five consecutive, non-overlapping blocks of equal duration. All windows extracted from the same block were kept together and assigned to the same fold during cross-validation. This block-wise partitioning ensured that temporally adjacent and highly correlated windows did not appear in both training and testing sets simultaneously [26]. The evaluation was conducted using five-fold cross-validation, where four blocks were used for training and the remaining block for testing in each fold. This strategy reflects an optimistic setting, as the model is trained and tested on data from the same individuals, thereby quantifying the upper-bound performance achievable when individual-specific patterns are available.
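The block-wise assignment can be sketched as follows, assuming that windows are extracted inside each block so that no window straddles a block boundary. The function name and the 90 s example trial are hypothetical.

```python
def blockwise_windows(n_samples, window, step, n_blocks=5):
    """Return (window_start, fold) pairs for one trial.

    The trial is cut into n_blocks equal, non-overlapping blocks, and a
    window is kept only if it lies entirely inside a single block.
    """
    block_len = n_samples // n_blocks
    assigned = []
    for fold in range(n_blocks):
        lo, hi = fold * block_len, (fold + 1) * block_len
        for start in range(lo, hi - window + 1, step):
            assigned.append((start, fold))
    return assigned

# Hypothetical 90 s trial at 64 Hz, 5 s windows (320 samples), 50% overlap.
WINDOW, STEP = 320, 160
trial = blockwise_windows(n_samples=90 * 64, window=WINDOW, step=STEP)
test_fold = 0
train = [s for s, f in trial if f != test_fold]
test = [s for s, f in trial if f == test_fold]
```

In this example each 18 s block yields six windows, and the last test window ends before the first training window begins, so overlapping windows never appear on both sides of a split.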

2. Cross-subject

This strategy corresponded to group k-fold cross-validation, in which all trials from selected participants were reserved for testing, while the remaining participants’ data were used for training. Two complementary approaches were adopted:

Random subject splits. Standard group five-fold cross-validation was applied by randomly partitioning subjects into disjoint training and testing folds. This procedure directly evaluates the model’s ability to generalize to previously unseen drivers.

Age-stratified splits. To further assess demographic robustness, participants were grouped into five age strata (20–29, 30–39, 40–49, 50–59, 60–69 years). Leave-group-out validation was then performed by holding out all subjects from one age group for testing while training on the remaining groups. This setting simulates external validation across demographics and evaluates whether the framework generalizes beyond the training cohort.
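Both cross-subject protocols reduce to splitting by subject identifier rather than by window. The sketch below is illustrative: the function names, the seed, and the example ages are hypothetical, and scikit-learn's `GroupKFold` and `LeaveOneGroupOut` offer equivalent functionality.

```python
import random

def subject_folds(subject_ids, k=5, seed=0):
    """Randomly partition subjects into k disjoint test folds."""
    subjects = sorted(set(subject_ids))
    random.Random(seed).shuffle(subjects)
    return [set(subjects[i::k]) for i in range(k)]

def age_group(age):
    """Map an age in years to one of the five strata 20-29 ... 60-69."""
    decade = (age // 10) * 10
    if not 20 <= decade <= 60:
        raise ValueError(f"age {age} outside the 20-69 range")
    return f"{decade}-{decade + 9}"

def leave_group_out(groups):
    """groups: dict subject -> group label; yields (held_out, train, test)."""
    for held_out in sorted(set(groups.values())):
        test = {s for s, g in groups.items() if g == held_out}
        yield held_out, set(groups) - test, test

folds = subject_folds(range(1, 51))                  # 50 participants
ages = {1: 24, 2: 37, 3: 43, 4: 58, 5: 61, 6: 29}    # hypothetical ages
groups = {s: age_group(a) for s, a in ages.items()}
splits = list(leave_group_out(groups))
```

Every window of a held-out subject goes to the test side, so no driver contributes data to both training and evaluation within a fold.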

2.5.4. Feature Selection Strategy

Given that the original feature space comprised 73 features per temporal window, retaining all features risked introducing noise, multicollinearity, and unnecessary computational overhead. Therefore, a systematic feature selection strategy was implemented. The following feature selection steps were applied:

Features with near-zero variance were removed, as such features contribute little information for classification;

Highly correlated features (Pearson’s r > 0.9) were eliminated to avoid redundancy and collinearity. The threshold was chosen in line with common practice (typically 0.85–0.95), striking a balance between removing redundancy and retaining informative signals;

The remaining features were ranked based on their importance scores derived from a Random Forest model. Feature importance was computed using the mean decrease in impurity (MDI) criterion, which quantifies the average reduction in Gini impurity contributed by each feature across all trees. This method was selected for its computational efficiency and suitability for high-dimensional spaces.

To determine the optimal subset size, a systematic search was conducted by incrementally selecting the top-k ranked features and evaluating their performance on the training set. Importantly, feature selection was performed independently within each training fold of cross-validation to avoid information leakage. The final feature subset yielding the highest cross-validation accuracy was chosen, ensuring an effective trade-off between dimensionality reduction and predictive performance.
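The correlation filter and top-k ranking can be sketched as follows. Several details are assumptions: the absolute value of Pearson's r is compared with the threshold, the first feature of each correlated pair is retained, and the importance scores in the example are placeholders rather than values from a fitted Random Forest.

```python
import math

def pearson(x, y):
    """Pearson correlation; returns 0.0 if either input is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy) if sxx and syy else 0.0

def drop_correlated(columns, threshold=0.9):
    """columns: dict name -> values. Keep the first of each correlated pair."""
    kept = []
    for name, values in columns.items():
        if all(abs(pearson(values, columns[k])) <= threshold for k in kept):
            kept.append(name)
    return kept

def top_k(importances, k):
    """Names of the k features with the largest importance scores."""
    ranked = sorted(importances.items(), key=lambda kv: kv[1], reverse=True)
    return [name for name, _ in ranked[:k]]

# Toy feature columns: "b" is nearly collinear with "a" and is removed.
columns = {"a": [1, 2, 3, 4], "b": [2, 4, 6, 8.1], "c": [4, 1, 3, 2]}
kept = drop_correlated(columns)
CANDIDATE_SIZES = list(range(5, 61, 5))     # subset sizes 5, 10, ..., 60
best_two = top_k({"a": 0.1, "c": 0.5, "d": 0.3}, 2)
```

In a leakage-free setup, both functions would be called on the training portion of each fold only, and the candidate subset sizes would be compared by inner cross-validation.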

Additionally, post hoc SHAP analysis was applied to interpret the relative contribution of the selected features.

3. Results and Discussion

This section reports the experimental results of driver state classification using the preprocessed multimodal dataset. Feature selection (Section 2.5.4) was consistently applied, and the optimized feature set was used in all experiments. Unless otherwise noted, Section 3.1, Section 3.2 and Section 3.3 were conducted under the 5 s window with 50% overlap, while Section 3.4 examines alternative window lengths and overlaps to identify the optimal temporal configuration. A threefold comparative analysis was conducted, focusing on: (1) the discriminative contribution of individual modalities (physiological features and ocular features) versus multimodal integration; (2) the impact of temporal window configurations (3 s, 5 s, 8 s with 0%, 25%, and 50% overlaps) on classification performance; and (3) the contrast between within-subject generalization and cross-subject transferability.

3.1. Feature Selection Results

The feature selection process began with the 73 extracted features. Since all features exhibited sufficient variance, none were removed at the near-zero variance stage. Subsequently, highly correlated features (Pearson’s r > 0.9) were eliminated, reducing the set to 62. Using Random Forest importance scores as the ranking criterion, a systematic search was then performed to determine the optimal number of features. Subsets ranging from 5 to 60 (in increments of 5) were evaluated using 5-fold cross-validation on the training set. For computational efficiency, the Random Forest was restricted to 100 trees, prioritizing speed over peak accuracy.

As shown in Figure 3, the best-performing subset contained 25 features, representing a 65.8% reduction relative to the original 73 while achieving the highest cross-validation accuracy. This optimized feature subset was therefore adopted in all subsequent experiments.

The optimal feature set, comprising 25 selected features, is summarized in Table 2, grouped by modality and feature type. Ocular metrics dominated the subset (21 out of 25), underscoring their discriminative strength for distraction detection. In contrast, only three features originated from EDA and one from PPG. This distribution suggests that ocular signals provide the most informative cues for capturing distraction-related behaviors, while physiological measures such as EDA and PPG contribute complementary robustness by indexing autonomic arousal and cardiovascular regulation. Notably, ECG and PPG features are known to exhibit high inter-subject variability, which may reduce their utility in cross-subject generalization and may explain why most were eliminated during feature selection.

To further interpret the contribution of the selected features, SHAP analysis was applied to the Random Forest model trained on the 25-feature subset. Table 3 lists the top ten features ranked by mean absolute SHAP value (±standard deviation). The results confirm that ocular measures account for most of the top-ranked features. Interestingly, EDA mean emerged as the single most influential feature, highlighting that tonic arousal provides complementary cues beyond ocular dynamics.

3.2. Model Comparison

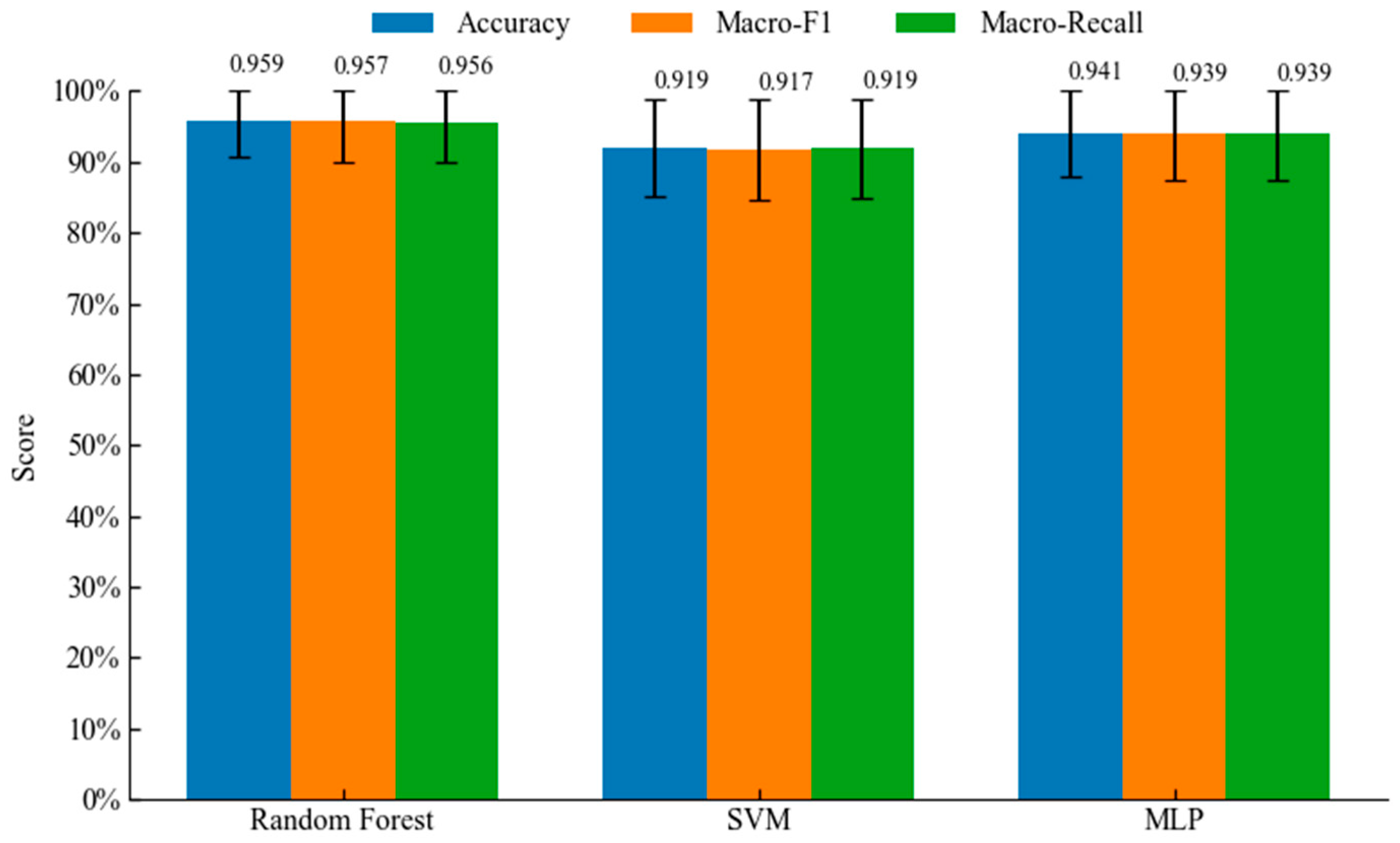

Figure 4 presents the within-subject classification performance of the three models evaluated using the selected 25 features. Across all three evaluation metrics, Random Forest consistently achieved the best performance, reaching about 0.96 with relatively low variance, which demonstrates both high accuracy and robustness.

SVM achieved slightly lower performance, with metrics around 0.92, indicating that kernel-based methods can capture multimodal feature patterns but are less effective than tree ensembles. MLP obtained intermediate-to-high results, with all metrics around 0.94, performing better than SVM but still slightly below Random Forest.

Overall, these findings confirm that tree-based ensemble methods are particularly well suited for driver state classification with the proposed feature set, and Random Forest was therefore selected as the primary model for subsequent analyses.

Under the 5 s window with 50% overlap, the average feature extraction time was 2.89 ms per window, computed over 15,192 windows. For the subsequent classification stage, the block-wise 5-fold cross-validation results showed that Random Forest, configured with 300 trees of average depth ≈ 6.83, required a moderate training time (≈325 ms per fold) and a prediction latency of 0.54 ms per sample, which is the highest of the three models but still below one millisecond. The MLP with two hidden layers (64 and 32 units) had the highest training cost (≈996 ms per fold) but provided extremely fast prediction (≈0.004 ms per sample). The SVM required about 121 support vectors, leading to the shortest training time (≈1.9 ms per fold) and fast inference (≈0.008 ms per sample), although its performance was consistently lower than that of RF and MLP. Overall, Random Forest offers the highest accuracy at a computational cost that remains compatible with real-time deployment in driver state monitoring.

3.3. Contribution of Modalities

Table 4 summarizes the classification performance under different modality and fusion strategies. In the unimodal setting, models trained only on ocular features consistently outperformed physiology-only models (ECG, PPG, EDA), achieving ≈0.93 across all metrics versus ≈0.85 for physiology. This confirms that ocular measures are the most informative single modality.

For multimodal integration, both early and late fusion improved performance over unimodal baselines. Early fusion with all 73 features reached ≈0.94, while applying feature selection (top-25 subset) further boosted accuracy, Macro-F1, and Macro-Recall to ≈0.96, demonstrating that removing redundant features enhances discriminative power. Late fusion also improved upon unimodal baselines (≈0.93) but remained slightly below early fusion.

Overall, these findings highlight three conclusions: (1) ocular features are the most informative single modality, (2) multimodal integration improves classification performance beyond any unimodal model, and (3) feature selection enhances early fusion by removing redundancy and yielding stronger generalization.
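The difference between the two fusion strategies can be illustrated with a short sketch. The late-fusion rule shown here (a weighted average of per-modality class probabilities) is only one common choice and is an assumption, since the combination rule is not specified in the text; all names and probability values are hypothetical.

```python
CLASSES = ["focused", "cognitive", "visual"]

def early_fusion(ocular_features, physio_features):
    """Concatenate modality features into one vector for a single model."""
    return list(ocular_features) + list(physio_features)

def late_fusion(probabilities, weights=None):
    """Combine per-modality class probabilities by weighted averaging.

    probabilities: dict modality -> list of class probabilities.
    Returns the fused probabilities and the index of the predicted class.
    """
    names = list(probabilities)
    weights = weights or {n: 1.0 for n in names}
    total = sum(weights[n] for n in names)
    n_classes = len(probabilities[names[0]])
    fused = [sum(weights[n] * probabilities[n][c] for n in names) / total
             for c in range(n_classes)]
    return fused, max(range(n_classes), key=fused.__getitem__)

# Hypothetical outputs of an ocular-only and a physiology-only model.
probs = {"ocular": [0.2, 0.5, 0.3], "physio": [0.5, 0.3, 0.2]}
fused, label = late_fusion(probs)
vector = early_fusion([0.1, 0.2], [0.3])
```

In early fusion a single classifier can exploit interactions between ocular and physiological features, whereas late fusion only combines decisions made independently per modality.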

3.4. Effect of Temporal Window Length and Overlap

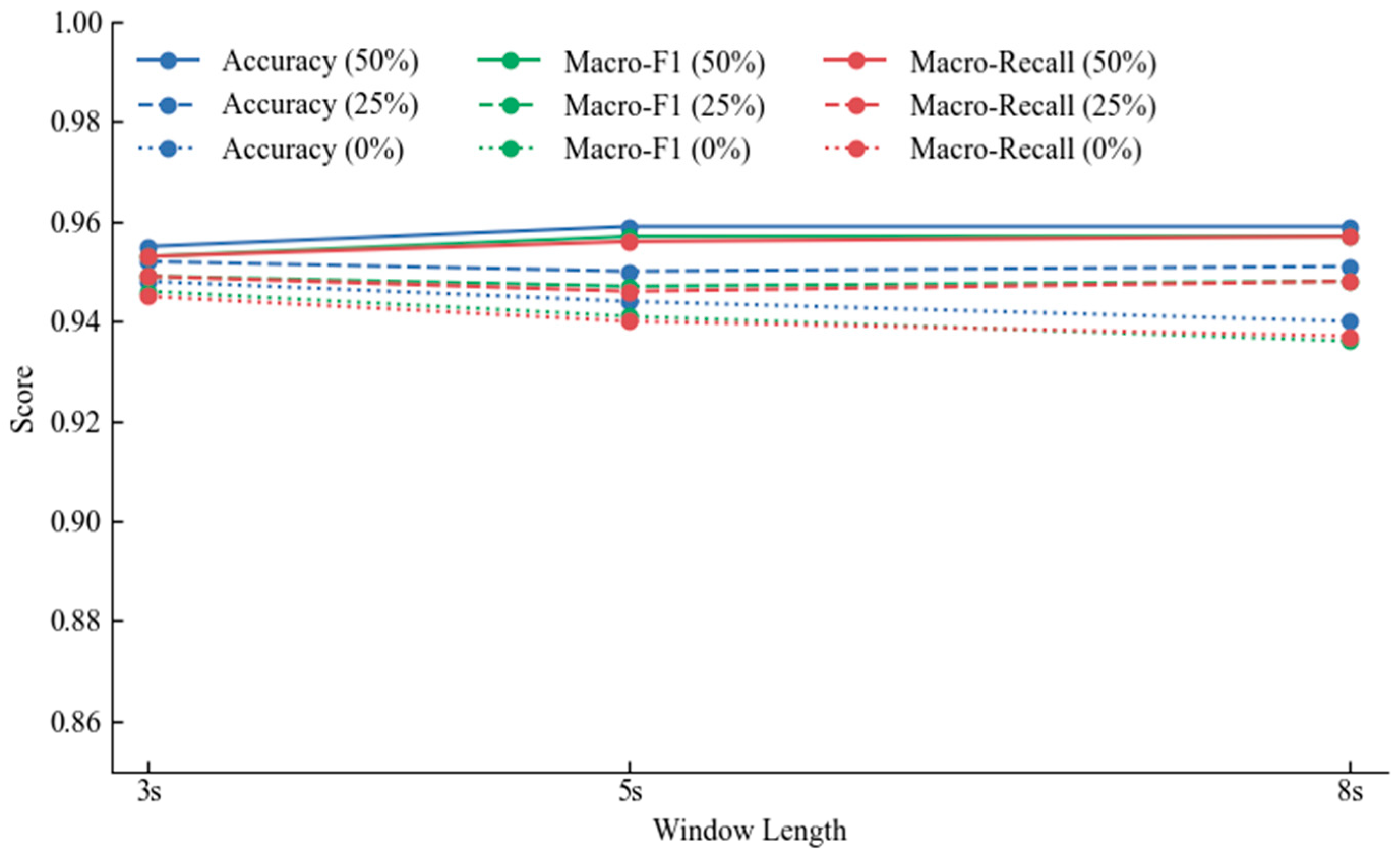

To investigate how temporal window configurations affect model performance, the window length (192, 320, and 512 samples, corresponding to 3.0 s, 5.0 s, and 8.0 s at 64 Hz) and the window overlap (50%, 25%, and 0%) were systematically varied. Figure 5 summarizes the results.
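The relation between window length, overlap, and the number of resulting windows can be sketched as below. This is a minimal illustration, assuming that only full windows are kept and that the step is the window length times one minus the overlap; the function name `window_starts` and the 60 s recording length are hypothetical.

```python
FS = 64  # sampling rate in Hz, as stated in the text

def window_starts(n_samples, win_len, overlap):
    """Start indices of full windows of `win_len` samples with fractional `overlap`."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    step = max(1, int(round(win_len * (1.0 - overlap))))
    return list(range(0, n_samples - win_len + 1, step))

# Hypothetical 60 s recording.
n_samples = 60 * FS

# Number of windows for each configuration examined in the text.
counts = {
    (win_len, overlap): len(window_starts(n_samples, win_len, overlap))
    for win_len in (192, 320, 512)
    for overlap in (0.5, 0.25, 0.0)
}
```

For a fixed recording, lower overlap yields fewer windows: in this example, 5 s windows produce 23 windows at 50% overlap but only 12 at 0% overlap.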

The findings reveal that overlap plays a more decisive role than window length. With 50% overlap, all window lengths (3 s, 5 s, and 8 s) achieved similarly high performance. However, when overlap decreased, performance dropped consistently across all window lengths, reaching as low as 0.940 at 8 s with 0% overlap. This suggests that insufficient overlap reduces the number and diversity of training windows and increases the risk of overfitting, whereas sufficient overlap helps stabilize temporal representations. Statistical analysis confirmed these trends: repeated-measures ANOVA showed a significant effect of overlap (p < 0.05), while the effect of window length was less pronounced. The best-performing configuration in this study was an 8 s window with 50% overlap, which yielded the highest classification results.

3.5. Within vs. Cross-Subject Evaluation

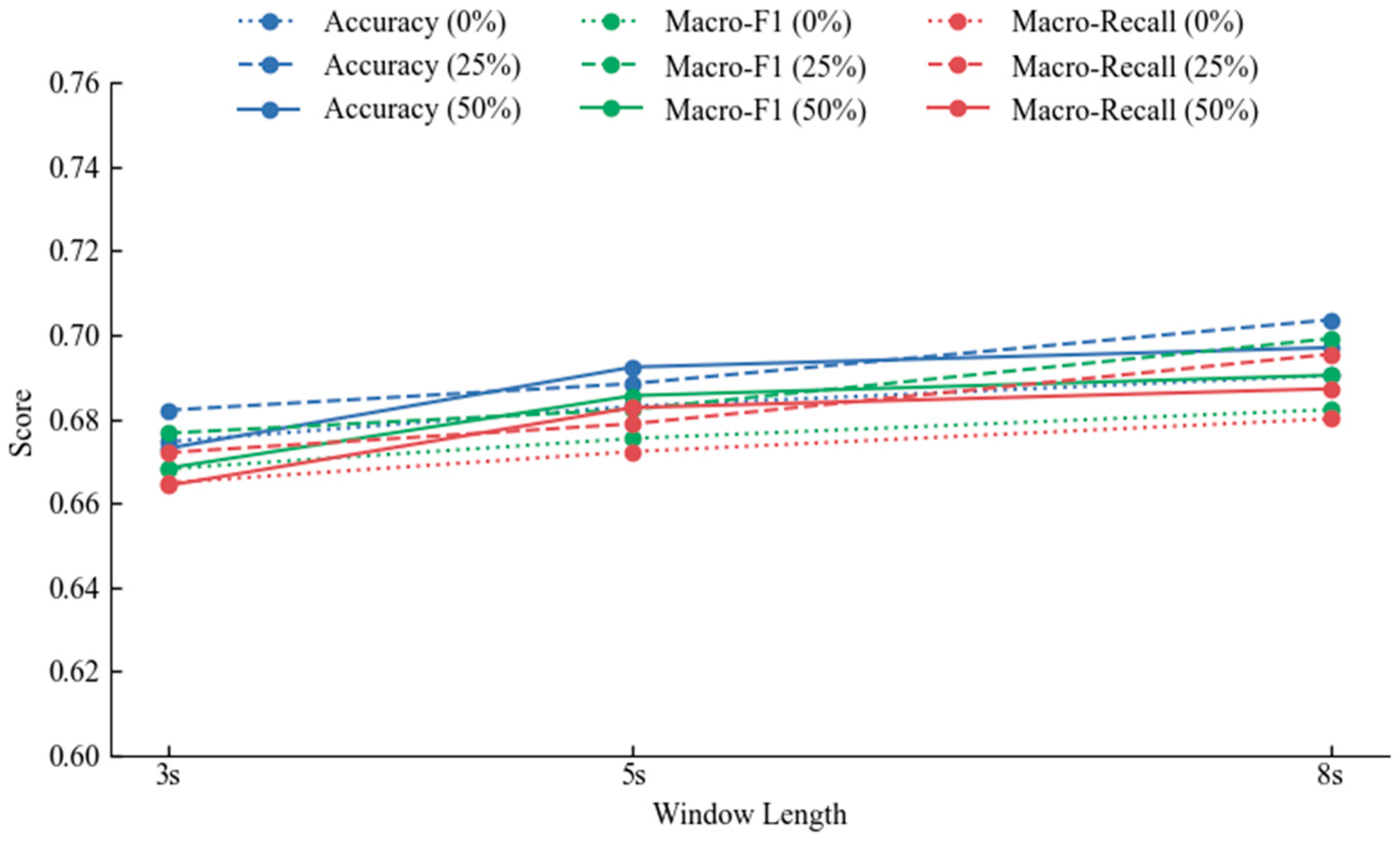

Before directly comparing within- and cross-subject protocols, a sensitivity analysis was first conducted under the cross-subject setting to examine robustness across temporal window configurations (Figure 6). Results showed that performance was relatively stable, with Accuracy and Macro-F1 ranging between 0.67 and 0.70. Among the tested settings, an 8 s window with 25% overlap achieved the highest performance, slightly outperforming 5 s windows with 50% overlap. Shorter 3 s windows consistently yielded lower results, suggesting that longer temporal coverage enhances cross-subject generalization. All subsequent analyses were therefore reported under the 8 s, 25% overlap configuration. Using this setting, Random Forest achieved Accuracy = 0.704 [0.666–0.741], Macro-F1 = 0.699 [0.664–0.735], and Macro-Recall = 0.695 [0.661–0.730] across subject-disjoint five-fold cross-validation. These results confirm that performance remains statistically stable across folds.
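A subject-disjoint split of the kind described above can be sketched as follows. This is a minimal illustration, assuming each window carries a subject identifier and that subjects are assigned to folds in round-robin order; the function name and the toy identifiers are hypothetical, and the source does not specify how subjects were allocated to folds.

```python
def subject_disjoint_folds(subject_ids, n_folds=5):
    """Yield (train_idx, test_idx) pairs so that no subject appears in both."""
    subjects = sorted(set(subject_ids))
    # Round-robin assignment of subjects to folds (an assumption).
    fold_of = {s: i % n_folds for i, s in enumerate(subjects)}
    for k in range(n_folds):
        test = [i for i, s in enumerate(subject_ids) if fold_of[s] == k]
        train = [i for i, s in enumerate(subject_ids) if fold_of[s] != k]
        yield train, test

# Toy example: 10 hypothetical subjects with 4 windows each.
subject_ids = ["s%02d" % (i // 4) for i in range(40)]
folds = list(subject_disjoint_folds(subject_ids, n_folds=5))
```

Because every window of a given driver falls on one side of the split, the test score reflects performance on unseen drivers rather than unseen windows of known drivers.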

To examine whether the performance gain in the three-class setting was primarily due to label coarsening from ten classes to three, an analysis was repeated using the original ten task categories. In the within-subject evaluation, performance remained high (RF: Accuracy ≈ 0.959, Macro-F1 ≈ 0.954, Macro-Recall ≈ 0.956), demonstrating that the features were discriminative even under fine-grained task distinctions. However, in the cross-subject evaluation, performance dropped markedly (Accuracy ≈ 0.287, Macro-F1 ≈ 0.276, Macro-Recall ≈ 0.296). This comparison indicates that the improvement observed with the three-class mapping partly reflects the reduction in task-specific noise through workload-based relabeling, while also confirming that workload levels constitute a more stable and generalizable representation across drivers.

The confusion matrix (Figure 7) highlights the class-wise performance. Baseline states were the most difficult to classify, with recall ≈ 0.52 and frequent confusion with cognitive distraction. Cognitive distraction achieved moderate recall (≈0.75), though many instances were mislabeled as baseline. Visual distraction was most robust (recall ≈ 0.83), with relatively few misclassifications. Error taxonomy analysis suggested that baseline–cognitive confusion was often linked to transient arousal fluctuations (e.g., short EDA bursts) or noisy gaze tracking, while visual distraction errors were primarily due to eye-tracking artifacts (e.g., unstable pupil tracking).
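For reference, per-class recall, Macro-Recall, and Macro-F1 can be derived from a confusion matrix as sketched below. Rows are assumed to be true classes and columns predicted classes. The counts are made up for illustration and are not the values behind Figure 7.

```python
def per_class_recall(cm):
    """Recall per class: diagonal count divided by the row (true-class) total."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]

def per_class_precision(cm):
    """Precision per class: diagonal count divided by the column (predicted) total."""
    n = len(cm)
    col_sums = [sum(cm[r][c] for r in range(n)) for c in range(n)]
    return [cm[c][c] / col_sums[c] if col_sums[c] else 0.0 for c in range(n)]

def macro_f1(cm):
    """Unweighted mean of the per-class F1 scores."""
    f1s = []
    for p, r in zip(per_class_precision(cm), per_class_recall(cm)):
        f1s.append(2 * p * r / (p + r) if (p + r) else 0.0)
    return sum(f1s) / len(f1s)

# Made-up counts; class order: baseline, cognitive, visual.
cm = [
    [50, 40, 10],
    [20, 70, 10],
    [5, 15, 80],
]
recalls = per_class_recall(cm)
macro_recall = sum(recalls) / len(recalls)
f1 = macro_f1(cm)
```

Macro averaging weights each class equally, so a weak class such as baseline lowers the score even when the other classes are detected well.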

Calibration analysis (Figure 8) showed that predicted probabilities were generally well aligned with observed correctness. Visual distraction was best calibrated, while baseline and cognitive distraction showed mild overconfidence in the mid-probability range (40–70%), consistent with the confusion patterns. Moreover, the cognitive distraction curve dropped in the high-probability region (≥0.9), which can be attributed to the small number of highly confident predictions combined with the systematic confusion between baseline and cognitive distraction. This pattern is consistent with the confusion matrix results, highlighting that distinguishing between baseline and cognitive distraction remains more challenging, while visual distraction is more robustly detected.
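A reliability curve of the kind discussed above can be computed by binning predicted probabilities and comparing the mean confidence in each bin with the observed accuracy. The sketch below assumes equal-width bins and handles one class at a time; the binning scheme used in the source is not stated, and the inputs are made up.

```python
def reliability_curve(confidences, correct, n_bins=10):
    """Return (mean confidence, observed accuracy, count) for each non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Clamp so that a confidence of exactly 1.0 falls in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    curve = []
    for items in bins:
        if items:
            mean_conf = sum(c for c, _ in items) / len(items)
            acc = sum(1 for _, ok in items if ok) / len(items)
            curve.append((mean_conf, acc, len(items)))
    return curve

# Made-up predictions: two mid-confidence and two high-confidence cases.
confidences = [0.55, 0.55, 0.95, 0.95]
correct = [True, False, True, True]
curve = reliability_curve(confidences, correct)
```

Returning the count per bin makes sparsely populated bins visible, which matters when interpreting a drop in the high-probability region that rests on few predictions.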

Table 5 summarizes the results of leave-group-out validation across five age groups. Overall, the framework achieved Accuracy = 0.694 (95% CI: 0.646–0.741), Macro-F1 = 0.687 (95% CI: 0.640–0.734), and Macro-Recall = 0.685 (95% CI: 0.635–0.735). Performance was broadly stable across folds, peaking in the 40–49 group and dropping in the 50–59 group, which indicates that age-related heterogeneity affects robustness.
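One common way to obtain a 95% confidence interval from a small number of fold scores is a Student t interval around the fold mean. The sketch below assumes this approach with five folds (critical value 2.776 for four degrees of freedom); the source does not state how its intervals were computed, and the fold scores are made up.

```python
import math
import statistics

# Two-sided 95% critical value of Student's t for 4 degrees of freedom (5 folds).
T_CRIT_DF4 = 2.776

def t_interval_5_folds(scores):
    """Mean and 95% t-interval for exactly five fold scores."""
    if len(scores) != 5:
        raise ValueError("this sketch hard-codes the critical value for 5 folds")
    mean = statistics.mean(scores)
    std_err = statistics.stdev(scores) / math.sqrt(len(scores))
    half_width = T_CRIT_DF4 * std_err
    return mean, mean - half_width, mean + half_width

# Made-up fold accuracies, for illustration only.
fold_scores = [0.60, 0.62, 0.64, 0.66, 0.68]
mean_acc, ci_low, ci_high = t_interval_5_folds(fold_scores)
```

With so few folds the interval is wide by construction, so differences between groups should be interpreted with caution.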

Together, these results demonstrate that while within-subject evaluation quantifies the upper bound of personalized performance, cross-subject and demographic-stratified evaluations provide more realistic estimates of deployment feasibility. Improving cross-driver robustness remains the key challenge, and future work should explore normalization, domain adaptation, and transfer learning to address inter-individual and demographic variability.

4. Conclusions

This study explored the use of multimodal physiological and ocular measures for distraction detection in conditionally automated driving. By analyzing synchronized ECG, PPG, EDA, and ocular data from the TD2D dataset, an optimized feature set was derived. Random Forest consistently outperformed the other models, confirming the suitability of tree-based ensemble models for heterogeneous multimodal signals.

The experimental results highlight two main findings. First, ocular signals are the most informative modality for capturing distraction, achieving the highest unimodal performance. Although physiological measures such as ECG, PPG, and EDA are less discriminative, they provide complementary cues that enhance robustness when integrated with ocular features. This finding aligns with prior evidence that glance-based metrics are critical for detecting cognitive distraction [20]. Second, while within-subject evaluation reached ≈95% accuracy as an optimistic upper bound, cross-subject evaluation achieved moderate yet stable results, confirming practical feasibility but underscoring inter-individual variability as the main deployment challenge. This is consistent with Chen et al. [27], who emphasized that robustness and transferability remain key challenges for real-life deployment of driver state monitoring systems. Additional analyses using the original ten task labels confirmed that the observed improvements under the three-class workload mapping are not an artifact of label coarsening but rather reflect a principled strategy that reduces sparsity and improves stability under cross-subject evaluation.

In addition, the present study contributes systematic evidence by integrating physiological and ocular modalities under conditionally automated driving, introducing workload-based task reassignment, and quantitatively analyzing temporal windowing and overlap effects. Together, these contributions provide a more comprehensive picture of multimodal driver state monitoring.

The proposed framework is sensitive to design choices such as window overlap, window length, feature selection, and signal quality. In practice, a 50% overlap proved most effective for within-subject evaluation, whereas an 8 s window with 25% overlap yielded the most stable cross-subject performance. Under these settings, detection remained accurate with low latency, and the required multimodal signals can be readily acquired from in-vehicle sensors, supporting the framework’s practical potential for integration into driver monitoring systems.

Future research will proceed in four directions. First, advanced domain adaptation and transfer learning techniques will be explored to enhance cross-subject generalization and mitigate inter-individual variability. Second, lightweight attention mechanisms will be considered to enable efficient modality-specific fusion beyond hand-crafted features, which is particularly relevant for edge-constrained in-vehicle computing environments [28,29]. Third, integration with autonomous driving decision-making and control modules represents a promising future direction, particularly in mixed traffic scenarios where human-driven and automated vehicles must interact [30]. Fourth, future work will explicitly investigate how predicted workload states relate to takeover performance (reaction time and intervention success), thereby linking multimodal driver monitoring with safety-critical outcomes.