Noisy Label Learning for Gait Recognition in the Wild

Abstract

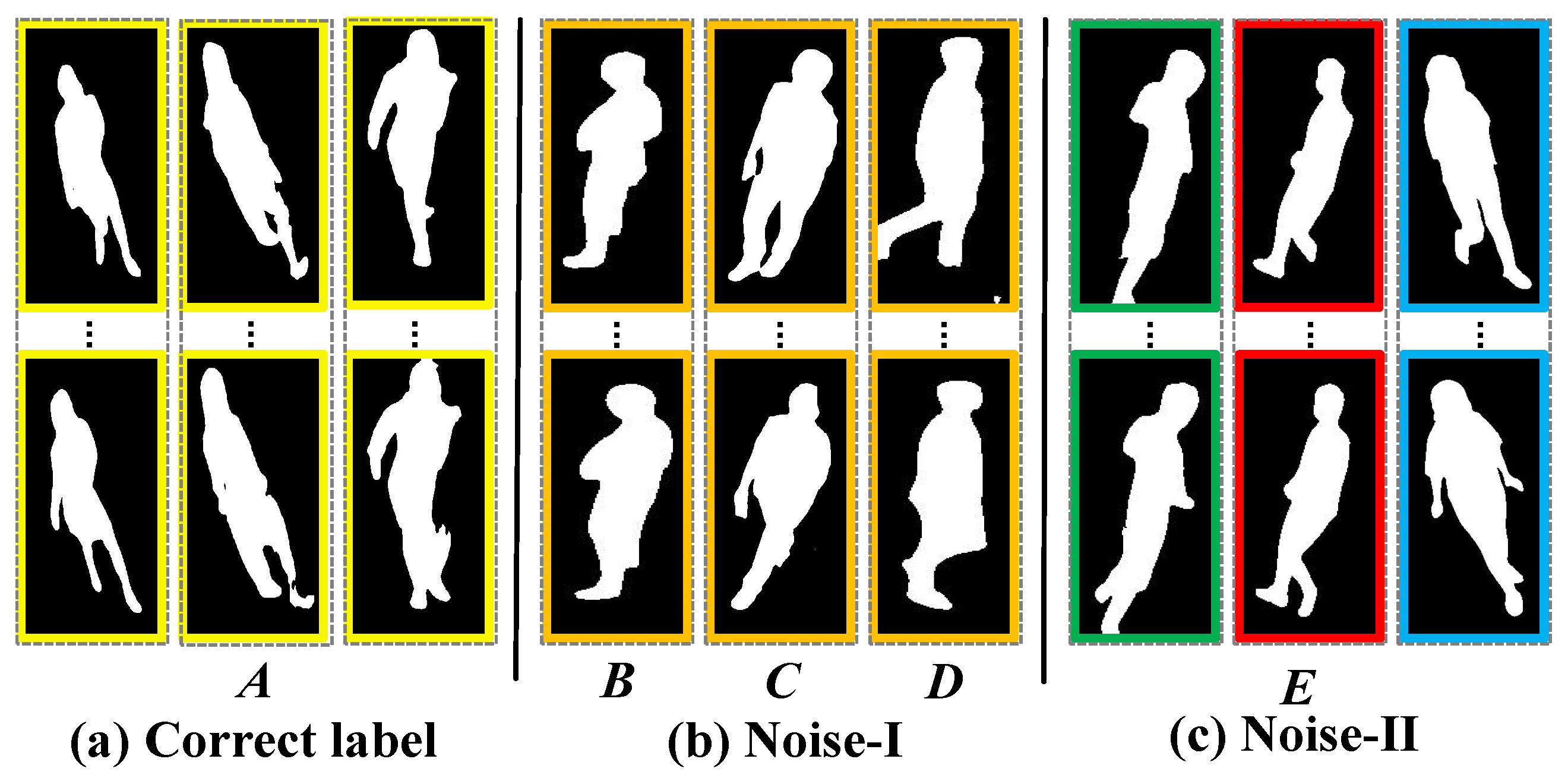

1. Introduction

- To the best of our knowledge, we are the first to explore noisy label learning for gait recognition in the wild.

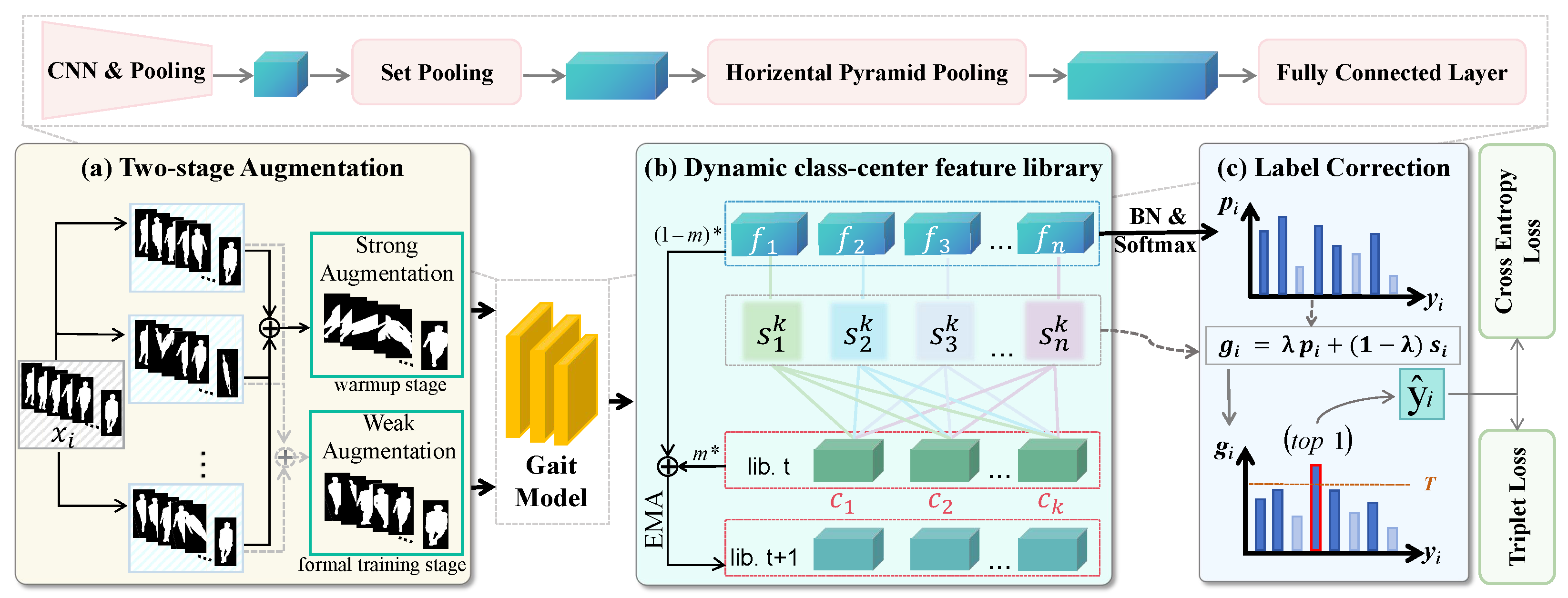

- We propose a plug-and-play gait framework, the Dynamic Noise Label Correction Network (DNLC), which automatically discovers and corrects noisy labels. It consists of a dynamic class-center feature library and a label correction module.

- We introduce a new two-stage augmentation strategy that helps the model learn robust gait features from noisily labeled data.

- Extensive experiments demonstrate that the proposed method improves the performance of existing gait recognition methods. As a plug-and-play solution, DNLC can be integrated into existing gait recognition systems without additional complex operations or techniques.
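The two DNLC components named in the contributions above, a dynamic class-center feature library and a label correction module, can be pictured with a minimal sketch. Everything in it is an assumption made for illustration rather than the authors' implementation: the names `ClassCenterLibrary` and `correct_label`, the use of cosine similarity with a temperature-scaled softmax, and the rule "relabel when the most likely class differs from the given label and its probability exceeds a threshold". The default values (momentum 0.6, temperature 0.2, threshold 0.5) are simply settings that appear in the sensitivity table, not confirmed defaults.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm > 0 else list(vector)


def cosine(a, b):
    """Cosine similarity between two feature vectors."""
    return sum(x * y for x, y in zip(l2_normalize(a), l2_normalize(b)))


class ClassCenterLibrary:
    """Hypothetical store of one feature center per class (assumed design)."""

    def __init__(self, momentum=0.6):
        self.momentum = momentum  # assumed role of the momentum parameter m
        self.centers = {}

    def update(self, label, feature):
        """Blend a new feature into the center of `label` with momentum."""
        feature = l2_normalize(feature)
        if label not in self.centers:
            self.centers[label] = feature
            return
        m = self.momentum
        blended = [m * c + (1 - m) * f
                   for c, f in zip(self.centers[label], feature)]
        self.centers[label] = l2_normalize(blended)


def softmax(scores, temperature):
    """Temperature-scaled softmax, shifted by the maximum for stability."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def correct_label(feature, given_label, library, temperature=0.2, threshold=0.5):
    """Return a corrected label if another class center is a confident match.

    Assumed rule: relabel only when the most probable class differs from the
    given label and its softmax probability exceeds `threshold`.
    """
    labels = sorted(library.centers)
    sims = [cosine(feature, library.centers[k]) for k in labels]
    probs = softmax(sims, temperature)
    best = max(range(len(labels)), key=lambda i: probs[i])
    if labels[best] != given_label and probs[best] > threshold:
        return labels[best]
    return given_label
```

In this sketch a sample whose feature sits close to the center of another class is relabeled, while an ambiguous sample keeps its given label. The label cleaning weight listed in the sensitivity table is not modeled here.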

2. Related Works

2.1. Gait Recognition

2.2. Noisy Label Learning

3. Method

3.1. Overview

3.2. The Dynamic Class-Center Feature Library

3.3. The Label Correction Module

3.4. The Two-Stage Augmentation Strategy

3.5. Training and Inference

4. Experiment

4.1. Dataset

4.2. Implementation Details

4.3. Experimental Results on Gait3D

4.4. Experimental Results on CCPG

4.5. Ablation Study

4.6. Sensitivity Analysis

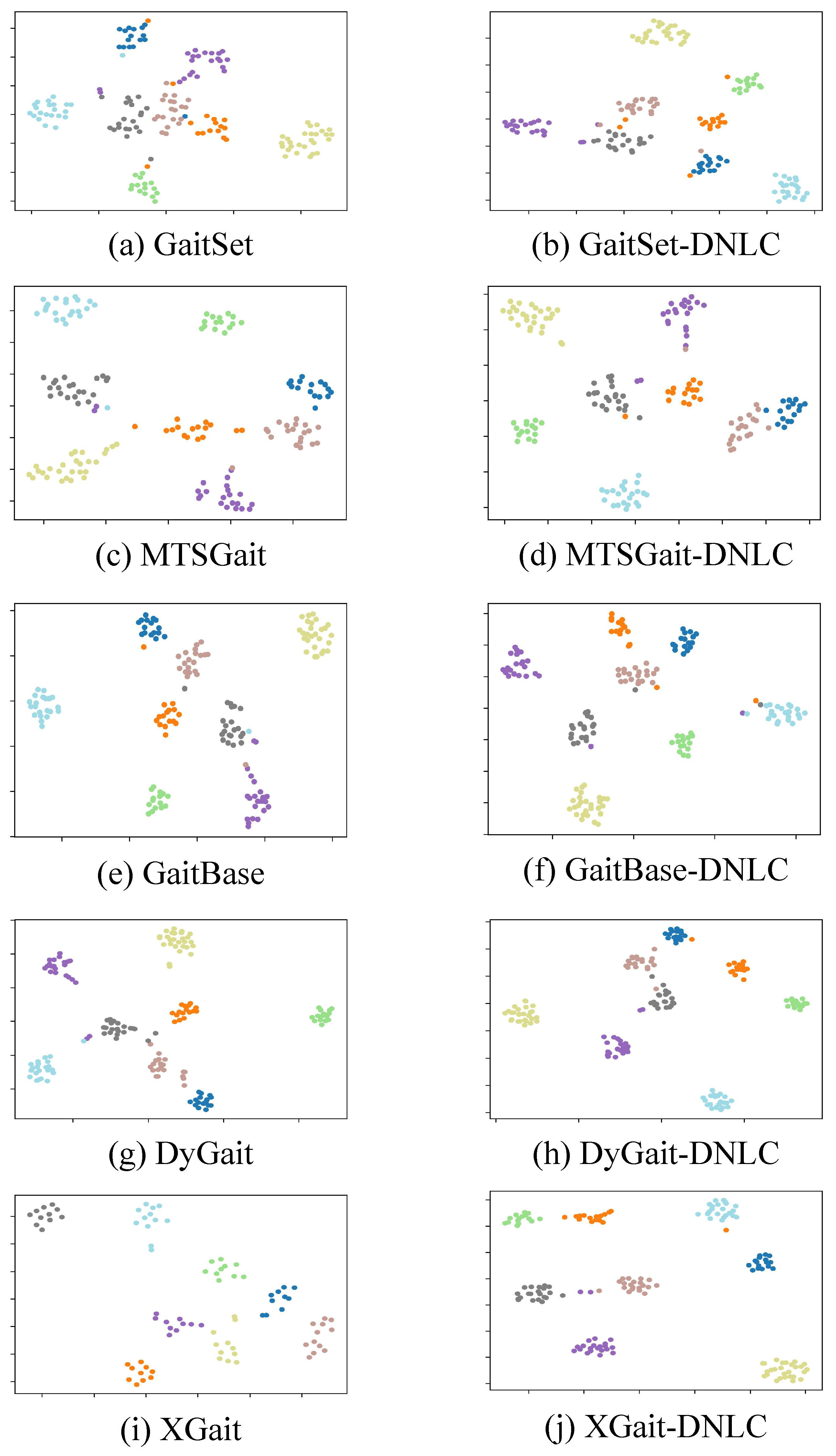

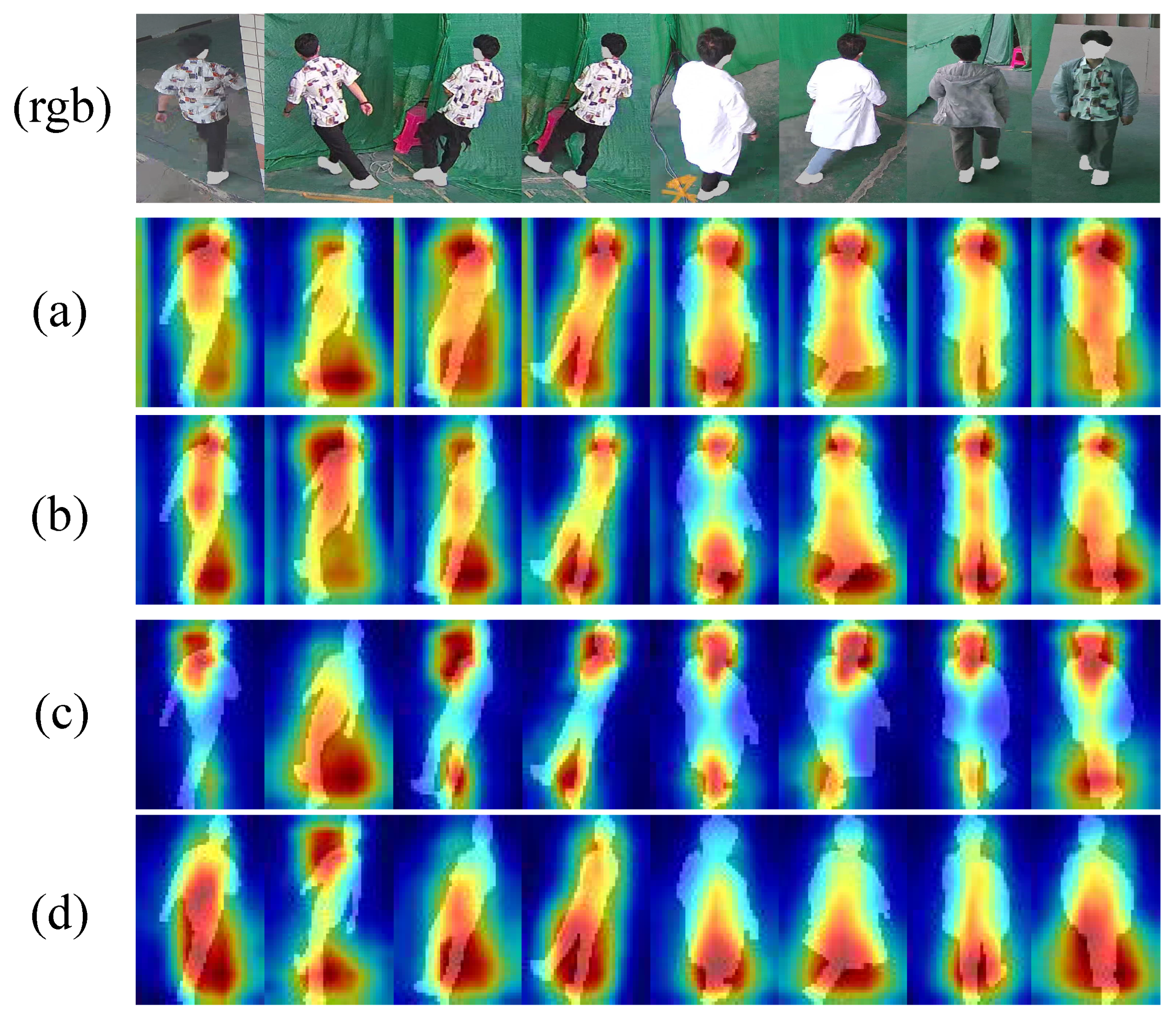

4.7. Visualization

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Wang, L.; Tan, T.; Ning, H.; Hu, W. Silhouette analysis-based gait recognition for human identification. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1505–1518.

2. Zou, Y.; He, N.; Sun, J.; Huang, X.; Wang, W. Occluded Gait Emotion Recognition Based on Multi-Scale Suppression Graph Convolutional Network. Comput. Mater. Contin. 2025, 82, 1255.

3. Harris, E.; Khoo, I.; Demircan, E. A Survey of Human Gait-Based Artificial Intelligence Applications. Front. Robot. AI Ed. Pick. 2023, 8, 17.

4. Wan, C.; Wang, L.; Phoha, V.V. A survey on gait recognition. ACM Comput. Surv. 2018, 51, 1–35.

5. Tan, D.; Huang, K.; Yu, S.; Tan, T. Efficient Night Gait Recognition Based on Template Matching. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; IEEE Computer Society: Washington, DC, USA, 2006; pp. 1000–1003.

6. Takemura, N.; Makihara, Y.; Muramatsu, D.; Echigo, T.; Yagi, Y. Multi-view large population gait dataset and its performance evaluation for cross-view gait recognition. IPSJ Trans. Comput. Vis. Appl. 2018, 10, 4.

7. Chao, H.; He, Y.; Zhang, J.; Feng, J. GaitSet: Regarding Gait as a Set for Cross-View Gait Recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 8126–8133.

8. Fan, C.; Peng, Y.; Cao, C.; Liu, X.; Hou, S.; Chi, J.; Huang, Y.; Li, Q.; He, Z. GaitPart: Temporal Part-Based Model for Gait Recognition. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 14213–14221.

9. Lin, B.; Zhang, S.; Yu, X.; Chu, Z.; Zhang, H. Learning Effective Representations from Global and Local Features for Cross-View Gait Recognition. arXiv 2020, arXiv:2011.01461.

10. Huang, X.; Zhu, D.; Wang, H.; Wang, X.; Yang, B.; He, B.; Liu, W.; Feng, B. Context-sensitive temporal feature learning for gait recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 12909–12918.

11. Zhu, Z.; Guo, X.; Yang, T.; Huang, J.; Deng, J.; Huang, G.; Du, D.; Lu, J.; Zhou, J. Gait recognition in the wild: A benchmark. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 14789–14799.

12. Zheng, J.; Liu, X.; Liu, W.; He, L.; Yan, C.; Mei, T. Gait Recognition in the Wild with Dense 3D Representations and A Benchmark. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 20196–20205.

13. Zheng, J.; Liu, X.; Gu, X.; Sun, Y.; Gan, C.; Zhang, J.; Liu, W.; Yan, C. Gait Recognition in the Wild with Multi-hop Temporal Switch. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 6136–6145.

14. Wang, M.; Guo, X.; Lin, B.; Yang, T.; Zhu, Z.; Li, L.; Zhang, S.; Yu, X. DyGait: Exploiting Dynamic Representations for High-performance Gait Recognition. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 13378–13387.

15. Zheng, J.; Liu, X.; Zhang, B.; Yan, C.; Zhang, J.; Liu, W.; Zhang, Y. It Takes Two: Accurate Gait Recognition in the Wild via Cross-granularity Alignment. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, VIC, Australia, 28 October–1 November 2024; pp. 8786–8794.

16. Chen, J.; Deng, S.; Teng, D.; Chen, D.; Jia, T.; Wang, H. APPN: An Attention-based Pseudo-label Propagation Network for few-shot learning with noisy labels. Neurocomputing 2024, 602, 128212.

17. He, Z.; Xu, J.; Huang, P.; Wang, Q.; Wang, Y.; Guo, Y. PLTN: Noisy label learning in long-tailed medical images with adaptive prototypes. Neurocomputing 2025, 645, 130514.

18. Zheng, J.; Liu, X.; Wang, S.; Wang, L.; Yan, C.; Liu, W. Parsing is All You Need for Accurate Gait Recognition in the Wild. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 116–124.

19. Yu, W.; Yu, H.; Huang, Y.; Cao, C.; Wang, L. CNTN: Cyclic Noise-tolerant Network for Gait Recognition. arXiv 2022, arXiv:2210.06910.

20. Liu, H.; Zeng, S.; Deng, L.; Liu, T.; Liu, X.; Zhang, Z.; Li, Y.F. HPCTrans: Heterogeneous Plumage Cues-Aware Texton Correlation Representation for FBIC via Transformers. IEEE Trans. Circuits Syst. Video Technol. 2025.

21. Liu, H.; Zhang, C.; Deng, Y.; Xie, B.; Liu, T.; Li, Y.F. TransIFC: Invariant cues-aware feature concentration learning for efficient fine-grained bird image classification. IEEE Trans. Multimed. 2023, 27, 1677–1690.

22. Liu, H.; Zhou, Q.; Zhang, C.; Zhu, J.; Liu, T.; Zhang, Z.; Li, Y.F. MMATrans: Muscle movement aware representation learning for facial expression recognition via transformers. IEEE Trans. Ind. Inform. 2024, 20, 13753–13764.

23. Garuda, N.; Prasad, G.; Dev, P.P.; Das, P.; Ghaderpour, E. CNNViT: A robust deep neural network for video anomaly detection. IET Conf. Proc. 2023, 2023, 13–22.

24. Zhang, Y.; Zhou, D.; Chen, S.; Gao, S.; Ma, Y. Single-image crowd counting via multi-column convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 589–597.

25. Wang, W.; Dou, S.; Jiang, Z.; Sun, L. A fast dense spectral–spatial convolution network framework for hyperspectral images classification. Remote Sens. 2018, 10, 1068.

26. Song, B.; Zhao, S.; Dang, L.; Wang, H.; Xu, L. A survey on learning from data with label noise via deep neural networks. Syst. Sci. Control Eng. 2025, 13, 2488120.

27. Zhang, C.; Bengio, S.; Hardt, M.; Recht, B.; Vinyals, O. Understanding deep learning requires rethinking generalization. In Proceedings of the ICLR, Toulon, France, 24–26 April 2017.

28. Xiao, T.; Xia, T.; Yang, Y.; Huang, C.; Wang, X. Learning from massive noisy labeled data for image classification. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 2691–2699.

29. Song, H.; Kim, M.; Lee, J. SELFIE: Refurbishing Unclean Samples for Robust Deep Learning. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 5907–5915.

30. Liu, H.; Song, Y.; Liu, T.; Chen, L.; Zhang, Z.; Yang, X.; Xiong, N.N. TransSIL: A Silhouette Cue-Aware Image Classification Framework for Bird Ecological Monitoring Systems. IEEE Internet Things J. 2025.

31. Liu, H.; Liu, T.; Chen, Y.; Zhang, Z.; Li, Y.F. EHPE: Skeleton cues-based gaussian coordinate encoding for efficient human pose estimation. IEEE Trans. Multimed. 2022, 26, 8464–8475.

32. Deng, Y.; Ma, J.; Wu, Z.; Wang, W.; Liu, H. DSR-Net: Distinct selective rollback queries for road cracks detection with detection transformer. Digit. Signal Process. 2025, 164, 105266.

33. Song, H.; Kim, M.; Park, D.; Shin, Y.; Lee, J. Learning From Noisy Labels with Deep Neural Networks: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 8135–8153.

34. Goldberger, J.; Ben-Reuven, E. Training deep neural-networks using a noise adaptation layer. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

35. Zhao, J.; Liu, X.; Zhao, W. Balanced and Accurate Pseudo-Labels for Semi-Supervised Image Classification. ACM Trans. Multim. Comput. Commun. Appl. 2022, 18, 145:1–145:18.

36. Zhang, J.; Song, B.; Wang, H.; Han, B.; Liu, T.; Liu, L.; Sugiyama, M. BadLabel: A Robust Perspective on Evaluating and Enhancing Label-Noise Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 4398–4409.

37. Rusiecki, A. Batch Normalization and Dropout Regularization in Training Deep Neural Networks with Label Noise. In Proceedings of the International Conference on Intelligent Systems Design and Applications, Online, 13–15 December 2021; Volume 418, pp. 57–66.

38. Chen, Y.; Hu, S.X.; Shen, X.; Ai, C.; Suykens, J.A.K. Compressing Features for Learning with Noisy Labels. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 2124–2138.

39. Chen, Y.; Jin, C.; Li, G.; Li, T.H.; Gao, W. Mitigating Label Noise in GANs via Enhanced Spectral Normalization. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 3924–3934.

40. An, W.; Tian, F.; Shi, W.; Lin, H.; Wu, Y.; Cai, M.; Wang, L.; Wen, H.; Yao, L.; Chen, P. DOWN: Dynamic Order Weighted Network for Fine-grained Category Discovery. Knowl. Based Syst. 2024, 293, 111666.

41. Zhang, L.; Wang, B.; Liang, P.; Yuan, X.; Li, N. Semi-supervised fault diagnosis of gearbox based on feature pre-extraction mechanism and improved generative adversarial networks under limited labeled samples and noise environment. Adv. Eng. Inform. 2023, 58, 102211.

42. Fang, C.; Cheng, L.; Mao, Y.; Zhang, D.; Fang, Y.; Li, G.; Qi, H.; Jiao, L. Separating Noisy Samples From Tail Classes for Long-Tailed Image Classification with Label Noise. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 16036–16048.

43. Chung, Y.; Lu, W.; Tian, X. Data Cleansing for Salt Dome Dataset with Noise Robust Network on Segmentation Task. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

44. Liu, D.; Zhao, J.; Wu, J.; Yang, G.; Lv, F. Multi-category classification with label noise by robust binary loss. Neurocomputing 2022, 482, 14–26.

45. Yu, X.; Zhang, S.; Jia, L.; Wang, Y.; Song, M.; Feng, Z. Noise is the fatal poison: A Noise-aware Network for noisy dataset classification. Neurocomputing 2024, 563, 126829.

46. Chen, G.; Qin, H.; Huang, L. Recursive noisy label learning paradigm based on confidence measurement for semi-supervised depth completion. Int. J. Mach. Learn. Cybern. 2024, 15, 3201–3219.

47. Zhang, Y.; Sugiyama, M. Approximating Instance-Dependent Noise via Instance-Confidence Embedding. arXiv 2021, arXiv:2103.13569.

48. Malach, E.; Shalev-Shwartz, S. Decoupling “when to update” from “how to update”. In Proceedings of the NIPS’17: 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 960–970.

49. Han, B.; Yao, Q.; Yu, X.; Niu, G.; Xu, M.; Hu, W.; Tsang, I.W.; Sugiyama, M. Co-teaching: Robust training of deep neural networks with extremely noisy labels. In Proceedings of the NIPS’18: 32nd International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 8536–8546.

50. Yu, X.; Han, B.; Yao, J.; Niu, G.; Tsang, I.W.; Sugiyama, M. How does Disagreement Help Generalization against Label Corruption? In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 7164–7173.

51. Wei, H.; Feng, L.; Chen, X.; An, B. Combating Noisy Labels by Agreement: A Joint Training Method with Co-Regularization. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 13723–13732.

52. Lin, J.; Zhao, Y.; Wang, S.; Tang, Y. A robust training method for object detectors in remote sensing image. Displays 2024, 81, 102618.

53. Fan, C.; Liang, J.; Shen, C.; Hou, S.; Huang, Y.; Yu, S. OpenGait: Revisiting Gait Recognition Toward Better Practicality. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 9707–9716.

54. Ye, M.; Shen, J.; Lin, G.; Xiang, T.; Shao, L.; Hoi, S.C.H. Deep Learning for Person Re-Identification: A Survey and Outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 2872–2893.

55. Li, W.; Hou, S.; Zhang, C.; Cao, C.; Liu, X.; Huang, Y.; Zhao, Y. An in-depth exploration of person re-identification and gait recognition in cloth-changing conditions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 13824–13833.

56. Nishi, K.; Ding, Y.; Rich, A.; Höllerer, T. Augmentation Strategies for Learning with Noisy Labels. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8022–8031.

| Method | Gait3D Total_Iter | Gait3D Warmup_Iter | Gait3D Milestone | Gait3D Batch_Size | CCPG Total_Iter | CCPG Warmup_Iter | CCPG Milestone | CCPG Batch_Size |
|---|---|---|---|---|---|---|---|---|
| GaitSet | 180 k | 6 k | [30 k, 90 k] | [32, 4, 30] | 80 k | 3 k | [30 k, 60 k] | [16, 16, 30] |
| MTSGait | 180 k | 6 k | [30 k, 90 k] | [32, 4, 30] | - | - | - | - |
| GaitBase | 60 k | 2 k | [20 k, 40 k, 50 k] | [32, 4, 30] | 80 k | 3 k | [30 k, 60 k] | [16, 16, 30] |
| DyGait | 160 k | 2 k | [60 k, 120 k] | [8, 16, 30] | 160 k | 6 k | [60 k, 120 k] | [8, 16, 30] |
| XGait | 120 k | 4 k | [40 k, 80 k, 100 k] | [32, 2, 30] | 120 k | 4 k | [40 k, 80 k, 100 k] | [8, 8, 30] |
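The Total_Iter, Warmup_Iter, and Milestone columns in the training-settings table suggest a learning-rate schedule with a warmup phase followed by step decay. The sketch below shows one common way such settings are interpreted. The linear warmup shape, the base learning rate of 0.1, and the decay factor `gamma=0.1` are assumptions for illustration and are not stated in the source; only the iteration counts (here the GaitBase settings on Gait3D) come from the table.

```python
def learning_rate(iteration, base_lr=0.1, warmup_iter=2_000,
                  milestones=(20_000, 40_000, 50_000), gamma=0.1):
    """Learning rate at a given iteration (0-indexed).

    Assumed behavior: linear warmup up to `base_lr` over `warmup_iter`
    iterations, then multiply by `gamma` at every milestone reached.
    """
    if iteration < warmup_iter:
        # Linear ramp that reaches base_lr on the last warmup iteration.
        return base_lr * (iteration + 1) / warmup_iter
    passed = sum(1 for m in milestones if iteration >= m)
    return base_lr * gamma ** passed


# Example: sample the schedule at a few points of a 60 k iteration run.
schedule = {it: learning_rate(it) for it in (0, 1_999, 20_000, 40_000, 59_999)}
```

Under these assumptions the rate ramps up during the first 2 k iterations, stays at the base value until 20 k, and is then reduced at each listed milestone.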

| Method | Rank-1 @ noise 0 | Rank-5 @ noise 0 | mAP @ noise 0 | mINP @ noise 0 | Rank-1 @ noise 0.1 | Rank-5 @ noise 0.1 | mAP @ noise 0.1 | mINP @ noise 0.1 | Rank-1 @ noise 0.2 | Rank-5 @ noise 0.2 | mAP @ noise 0.2 | mINP @ noise 0.2 | Rank-1 @ noise 0.3 | Rank-5 @ noise 0.3 | mAP @ noise 0.3 | mINP @ noise 0.3 | Rank-1 @ noise 0.4 | Rank-5 @ noise 0.4 | mAP @ noise 0.4 | mINP @ noise 0.4 | Rank-1 @ noise 0.5 | Rank-5 @ noise 0.5 | mAP @ noise 0.5 | mINP @ noise 0.5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GaitSet [7] | 39.00 | 59.90 | 31.52 | 18.61 | 28.50 | 49.30 | 22.64 | 12.38 | 27.70 | 45.90 | 20.50 | 11.20 | 25.30 | 45.50 | 19.83 | 11.03 | 24.20 | 44.9 | 19.48 | 10.85 | 24.70 | 44.00 | 19.10 | 10.56 |
| GaitSet-DNLC | 46.40 | 66.50 | 36.26 | 21.60 | 42.30 | 61.60 | 32.51 | 18.79 | 41.40 | 60.80 | 30.89 | 17.67 | 38.40 | 58.10 | 29.00 | 16.58 | 36.80 | 56.80 | 27.74 | 15.90 | 37.20 | 57.40 | 27.91 | 15.95 |
| MTSGait [13] | 48.70 | 67.10 | 37.63 | 21.93 | 38.50 | 56.40 | 28.51 | 15.66 | 36.80 | 56.50 | 28.24 | 16.12 | 35.40 | 52.90 | 26.16 | 14.54 | 32.10 | 52.70 | 24.94 | 14.17 | 33.00 | 52.60 | 24.46 | 13.50 |
| MTSGait-DNLC | 48.90 | 69.11 | 38.73 | 23.35 | 45.40 | 64.70 | 34.98 | 20.87 | 42.30 | 61.90 | 32.05 | 18.39 | 41.20 | 62.20 | 31.64 | 18.56 | 40.70 | 58.90 | 30.31 | 17.51 | 40.50 | 59.70 | 30.20 | 17.27 |
| GaitBase [53] | 47.20 | 67.40 | 38.21 | 23.38 | 36.50 | 58.10 | 28.83 | 17.06 | 30.60 | 51.60 | 24.74 | 14.27 | 30.90 | 49.10 | 23.31 | 13.67 | 28.40 | 47.70 | 22.34 | 13.32 | 28.60 | 50.40 | 22.71 | 13.10 |
| GaitBase-DNLC | 64.00 | 79.50 | 53.64 | 35.51 | 55.30 | 75.10 | 45.88 | 28.49 | 51.01 | 70.20 | 41.97 | 25.80 | 49.20 | 67.70 | 39.81 | 24.67 | 47.50 | 68.10 | 39.01 | 23.92 | 48.20 | 66.90 | 38.29 | 22.70 |
| DyGait [14] | 51.30 | 68.70 | 42.11 | 22.09 | 40.70 | 60.40 | 31.58 | 15.80 | 37.9 | 55.50 | 28.30 | 13.95 | 35.90 | 53.10 | 26.35 | 12.54 | 34.40 | 52.10 | 26.35 | 12.77 | 34.80 | 53.30 | 25.55 | 12.51 |
| DyGait-DNLC | 60.60 | 78.40 | 52.07 | 29.12 | 51.20 | 70.70 | 42.23 | 22.18 | 47.90 | 67.00 | 39.11 | 20.50 | 46.90 | 66.00 | 37.37 | 19.72 | 44.60 | 66.20 | 34.85 | 17.85 | 46.30 | 63.50 | 35.91 | 18.79 |
| XGait [15] | 80.50 | 91.90 | 73.30 | 55.40 | 69.90 | 86.80 | 62.21 | 42.95 | 65.90 | 83.20 | 56.63 | 37.95 | 61.30 | 80.00 | 52.75 | 34.37 | 58.80 | 79.70 | 51.50 | 33.56 | 59.20 | 77.90 | 49.75 | 31.50 |
| XGait-DNLC | 81.30 | 93.10 | 74.12 | 56.63 | 72.20 | 88.20 | 64.65 | 45.68 | 67.60 | 85.50 | 59.54 | 40.85 | 65.80 | 84.30 | 56.63 | 38.07 | 63.20 | 81.30 | 56.79 | 36.24 | 63.33 | 80.89 | 53.49 | 34.73 |
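The Rank-1, Rank-5, mAP, and mINP columns above are standard retrieval metrics. As a rough illustration of how they are commonly computed, the sketch below takes, for each query, a list of booleans that marks which gallery items in its ranked list share the query's identity. The function names and the input format are hypothetical, and the sketch assumes every query has at least one true match in the gallery (queries without one score 0 here, whereas evaluation protocols usually exclude them).

```python
def rank_k(matches, k):
    """1.0 if a correct match appears within the top-k results, else 0.0."""
    return 1.0 if any(matches[:k]) else 0.0


def average_precision(matches):
    """Mean of the precision values measured at each correct match."""
    hits, precisions = 0, []
    for position, is_match in enumerate(matches, start=1):
        if is_match:
            hits += 1
            precisions.append(hits / position)
    return sum(precisions) / hits if hits else 0.0


def inverse_negative_penalty(matches):
    """Number of correct matches divided by the rank of the hardest one."""
    positions = [p for p, is_match in enumerate(matches, start=1) if is_match]
    return len(positions) / positions[-1] if positions else 0.0


def evaluate(ranked_matches, ks=(1, 5)):
    """Average the per-query metrics and report them as percentages."""
    n = len(ranked_matches)
    result = {f"Rank-{k}": 100 * sum(rank_k(m, k) for m in ranked_matches) / n
              for k in ks}
    result["mAP"] = 100 * sum(average_precision(m) for m in ranked_matches) / n
    result["mINP"] = 100 * sum(inverse_negative_penalty(m)
                               for m in ranked_matches) / n
    return result


# Two toy queries: each list marks correct matches in ranked gallery order.
toy_queries = [[True, False, True, False], [False, True, False, False]]
toy_scores = evaluate(toy_queries)
```

mINP penalizes a method when its hardest correct match is ranked late, which is why it tends to sit well below mAP in the table.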

| Method | CL-FULL @ noise 0 | CL-UP @ noise 0 | CL-DN @ noise 0 | CL-BG @ noise 0 | CL-FULL @ noise 0.1 | CL-UP @ noise 0.1 | CL-DN @ noise 0.1 | CL-BG @ noise 0.1 | CL-FULL @ noise 0.2 | CL-UP @ noise 0.2 | CL-DN @ noise 0.2 | CL-BG @ noise 0.2 | CL-FULL @ noise 0.3 | CL-UP @ noise 0.3 | CL-DN @ noise 0.3 | CL-BG @ noise 0.3 | CL-FULL @ noise 0.4 | CL-UP @ noise 0.4 | CL-DN @ noise 0.4 | CL-BG @ noise 0.4 | CL-FULL @ noise 0.5 | CL-UP @ noise 0.5 | CL-DN @ noise 0.5 | CL-BG @ noise 0.5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GaitSet [7] | 57.006 | 60.737 | 61.628 | 64.857 | 42.579 | 48.379 | 47.985 | 51.505 | 34.447 | 37.866 | 39.274 | 44.392 | 28.951 | 33.549 | 34.940 | 37.913 | 26.720 | 30.337 | 31.635 | 34.599 | 25.417 | 30.173 | 30.094 | 34.069 |
| GaitSet-DNLC | 63.497 | 70.681 | 71.795 | 77.715 | 52.479 | 61.222 | 61.846 | 70.094 | 45.226 | 52.676 | 54.725 | 64.549 | 43.212 | 49.572 | 52.931 | 62.496 | 40.488 | 47.089 | 49.175 | 59.648 | 39.721 | 47.075 | 49.232 | 58.715 |
| GaitBase [53] | 65.245 | 69.260 | 71.857 | 73.574 | 51.082 | 55.708 | 59.009 | 63.225 | 42.744 | 46.457 | 51.727 | 56.329 | 38.318 | 42.517 | 43.722 | 51.467 | 34.649 | 39.353 | 41.247 | 47.174 | 35.085 | 39.046 | 42.016 | 47.072 |
| GaitBase-DNLC | 70.408 | 77.1336 | 76.970 | 81.561 | 55.331 | 60.443 | 63.860 | 70.937 | 46.063 | 52.039 | 55.267 | 62.689 | 41.918 | 47.207 | 51.037 | 58.676 | 37.556 | 44.126 | 46.634 | 53.647 | 37.363 | 42.356 | 46.207 | 52.104 |
| DyGait [14] | 40.575 | 48.085 | 47.116 | 56.249 | 31.895 | 38.966 | 38.015 | 46.786 | 29.139 | 33.790 | 34.640 | 43.552 | 16.790 | 22.670 | 20.712 | 28.795 | 16.644 | 120.668 | 20.993 | 26.804 | 17.260 | 21.720 | 22.314 | 29.542 |
| DyGait-DNLC | 37.630 | 47.518 | 47.964 | 61.361 | 29.390 | 40.287 | 38.970 | 52.605 | 26.871 | 34.749 | 35.862 | 48.671 | 23.318 | 31.329 | 32.208 | 44.580 | 22.695 | 28.805 | 30.157 | 41.206 | 21.279 | 28.213 | 29.270 | 40.547 |
| XGait [15] | 72.500 | 76.723 | 78.990 | 79.989 | 53.799 | 55.750 | 62.628 | 62.732 | 42.560 | 43.542 | 51.440 | 53.061 | 36.576 | 39.116 | 45.768 | 46.604 | 33.920 | 37.079 | 43.056 | 46.426 | 32.670 | 34.815 | 43.352 | 46.92 |
| XGait-DNLC | 73.200 | 77.616 | 80.723 | 81.855 | 52.531 | 57.101 | 63.415 | 65.208 | 42.817 | 45.252 | 53.269 | 55.709 | 37.235 | 40.318 | 47.519 | 50.829 | 35.692 | 38.920 | 45.028 | 48.051 | 33.773 | 36.158 | 45.009 | 48.717 |
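The results tables report performance at noise rates from 0 to 0.5. One common way to simulate such a setting is symmetric label noise, where a fixed fraction of training labels is replaced by a different, uniformly chosen identity. The sketch below illustrates that procedure only. Whether the experiments used symmetric noise, and the names `inject_symmetric_noise`, `num_classes`, and `seed`, are assumptions not stated in the source.

```python
import random


def inject_symmetric_noise(labels, noise_rate, num_classes, seed=0):
    """Return a copy of `labels` with a `noise_rate` fraction corrupted.

    Assumed protocol: exactly round(noise_rate * len(labels)) samples are
    picked at random, and each receives a uniformly sampled wrong class.
    Requires num_classes >= 2 so that a wrong class always exists.
    """
    rng = random.Random(seed)  # local generator keeps the result reproducible
    noisy = list(labels)
    num_noisy = round(noise_rate * len(labels))
    for index in rng.sample(range(len(labels)), num_noisy):
        candidates = [c for c in range(num_classes) if c != labels[index]]
        noisy[index] = rng.choice(candidates)
    return noisy


# Example: corrupt half of 1000 labels drawn from 10 identities.
clean = [i % 10 for i in range(1000)]
noisy = inject_symmetric_noise(clean, noise_rate=0.5, num_classes=10)
```

Because every corrupted sample is forced to a class other than its original one, the number of changed labels equals the requested fraction exactly.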

| Baseline | TAS | LCM | GaitSet Rank-1 | GaitSet Rank-5 | GaitSet mAP | GaitSet mINP | XGait Rank-1 | XGait Rank-5 | XGait mAP | XGait mINP |
|---|---|---|---|---|---|---|---|---|---|---|
| ✓ | | | 24.70 | 44.00 | 19.10 | 10.56 | 59.20 | 77.90 | 49.75 | 31.50 |
| ✓ | ✓ | | 31.50 | 50.90 | 24.39 | 13.94 | 60.11 | 78.56 | 50.70 | 32.36 |
| ✓ | | ✓ | 33.90 | 54.70 | 26.09 | 14.86 | 62.50 | 80.10 | 52.62 | 33.78 |
| ✓ | ✓ | ✓ | 37.20 | 57.40 | 27.91 | 15.95 | 63.33 | 80.89 | 53.49 | 34.73 |

| Hyperparameter | Value | Rank-1 | Rank-5 | mAP | mINP |
|---|---|---|---|---|---|
| Original | | 49.20 | 67.7 | 39.81 | 24.67 |
| Temperature Coefficient: | 0.01 | 49.09 | 67.53 | 39.77 | 24.54 |
| Temperature Coefficient: | 0.2 | 49.17 | 67.72 | 39.90 | 24.48 |
| Temperature Coefficient: | 0.5 | 49.11 | 67.80 | 39.79 | 24.68 |
| Label Correction Threshold: T | 0.5 | 49.15 | 67.75 | 39.85 | 24.60 |
| Label Cleaning Weight: | 0.6 | 49.11 | 67.65 | 39.80 | 24.75 |
| Momentum Parameter: m | 0.6 | 49.18 | 67.81 | 39.84 | 24.70 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yuan, S.; Zheng, J.; Li, X.; Sun, Y.; Li, W.; Gao, R.; Omar, M.H.; Zhang, J. Noisy Label Learning for Gait Recognition in the Wild. Electronics 2025, 14, 3752. https://doi.org/10.3390/electronics14193752
