DVIOR: Dynamic Vertical and Low-Intensity Outlier Removal for Efficient Snow Noise Removal from LiDAR Point Clouds in Adverse Weather

Abstract

1. Introduction

- (1) Existing LiDAR snow noise removal methods struggle to balance snow noise elimination against the preservation of environmental features, a critical bottleneck for autonomous driving perception accuracy in adverse weather. To meet the resulting demand for robust dynamic filtering, this study establishes a targeted technical framework that integrates snow noise characteristics with the spatial attributes of the point cloud;

- (2) Based on the characteristics of snow noise points, a dynamic filter, DVIOR, is proposed that combines the distance, height, and intensity of each point;

- (3) Experiments on the MSP (WADS, CADC, RADIATE) datasets demonstrate that DVIOR outperforms DDIOR, with F1-scores higher by 10.20, 19.82, and 36.90 points, respectively.

2. Related Works

2.1. Datasets

2.2. Filter

3. Method

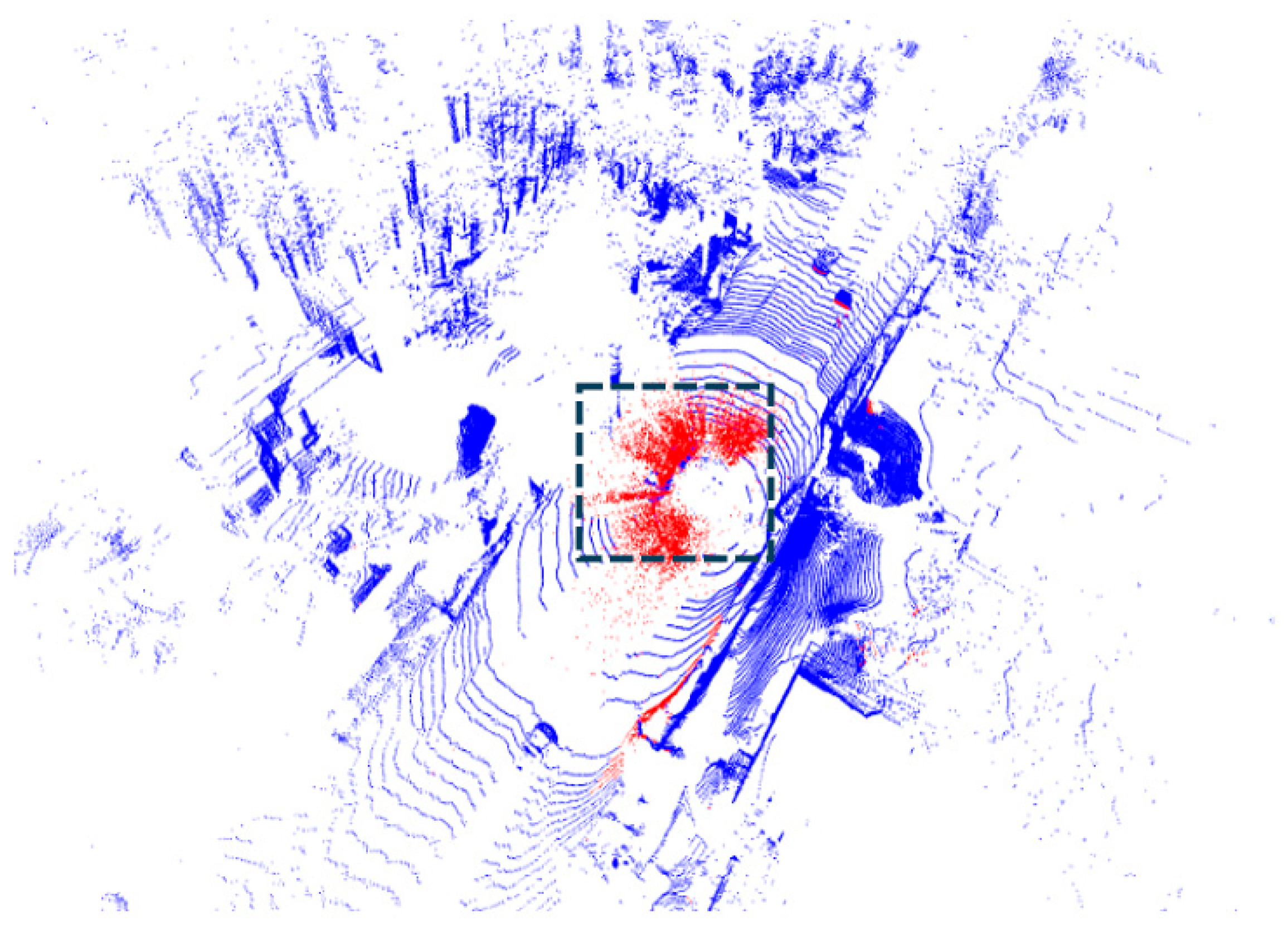

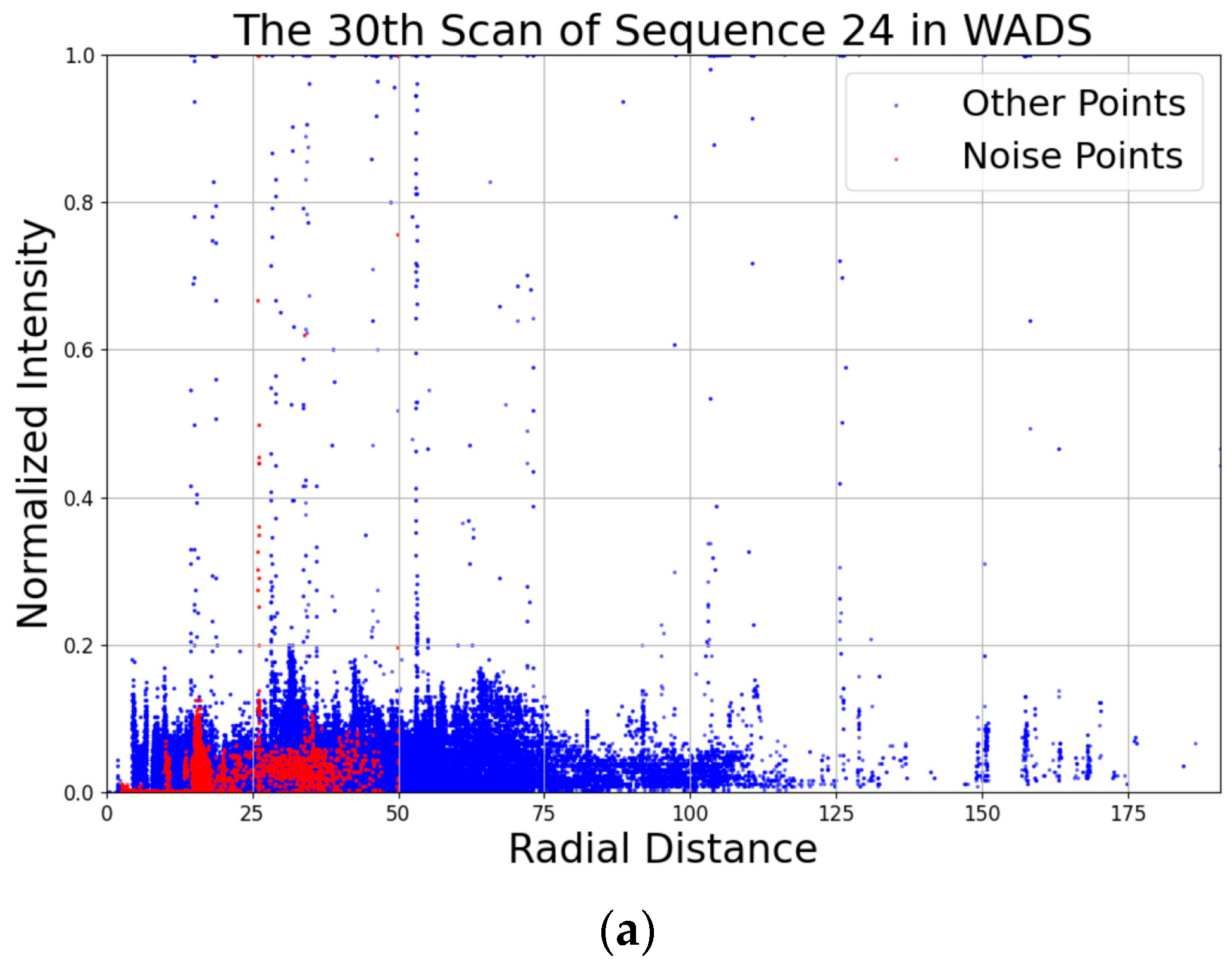

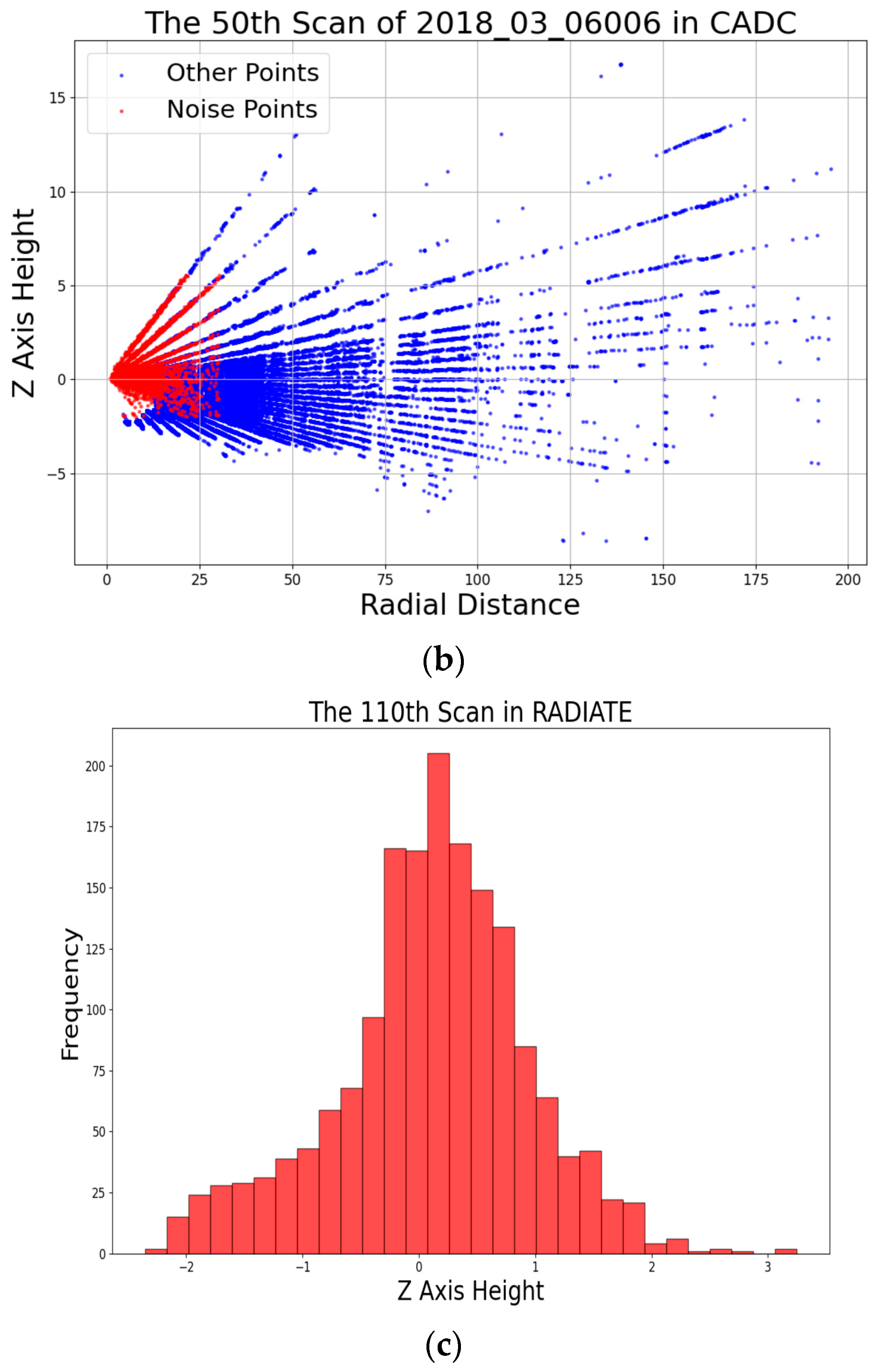

3.1. Characteristics of Snow Noise

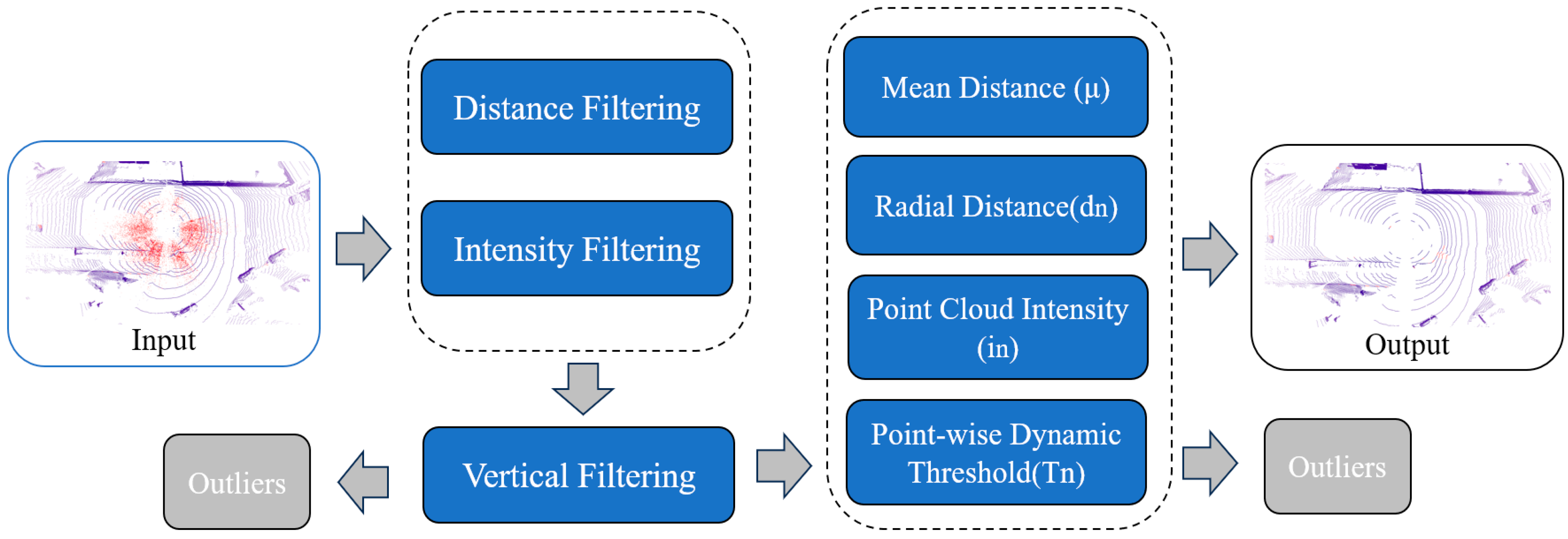

3.2. DVIOR

| Algorithm 1: Dynamic Vertical and Low-Intensity Outlier Removal |
|---|
| Input: Point cloud. Output: Outliers (O); filtered point cloud (F) |
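
Algorithm 1 takes a point cloud and returns the outliers (O) and the filtered point cloud (F). The Python sketch below illustrates one way a filter with that input/output shape could combine range, height, and intensity. It is not the authors' implementation: the function names, the `range_factor` and `height_factor` parameters, and the way height scales the threshold are assumptions made purely for illustration. Only `k = 5` and the intensity threshold of 1.0 are taken from the parameter table.

```python
import numpy as np


def knn_mean_distance(xyz: np.ndarray, k: int = 5) -> np.ndarray:
    """Mean distance from each point to its k nearest neighbours.

    Brute force, O(N^2) memory; fine for a demo, not for full LiDAR scans.
    Assumes the cloud holds more than k points.
    """
    diff = xyz[:, None, :] - xyz[None, :, :]
    dist = np.linalg.norm(diff, axis=2)
    np.fill_diagonal(dist, np.inf)  # a point is not its own neighbour
    nearest = np.partition(dist, k - 1, axis=1)[:, :k]
    return nearest.mean(axis=1)


def snow_filter_sketch(
    cloud: np.ndarray,
    k: int = 5,                        # from the parameter table
    intensity_threshold: float = 1.0,  # from the parameter table
    range_factor: float = 0.05,        # hypothetical
    height_factor: float = 0.1,        # hypothetical
) -> tuple[np.ndarray, np.ndarray]:
    """Split an (N, 4) cloud of x, y, z, intensity into (outliers, filtered).

    A point is flagged only if it is BOTH sparse (mean kNN distance above a
    threshold that grows with horizontal range and height) AND low-intensity.
    The threshold formula is an assumption, not the published one.
    """
    xyz, intensity = cloud[:, :3], cloud[:, 3]
    mean_d = knn_mean_distance(xyz, k)
    horizontal_range = np.linalg.norm(xyz[:, :2], axis=1)
    height = np.abs(xyz[:, 2])
    threshold = mean_d.mean() * (
        1.0 + range_factor * horizontal_range + height_factor * height
    )
    is_outlier = (mean_d > threshold) & (intensity <= intensity_threshold)
    return cloud[is_outlier], cloud[~is_outlier]
```

Requiring both sparsity and low intensity means an isolated but strongly reflecting return is kept, while scaling the threshold with range avoids penalising distant surfaces, which are naturally sparser in a LiDAR scan.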

4. Experimental Results and Analysis

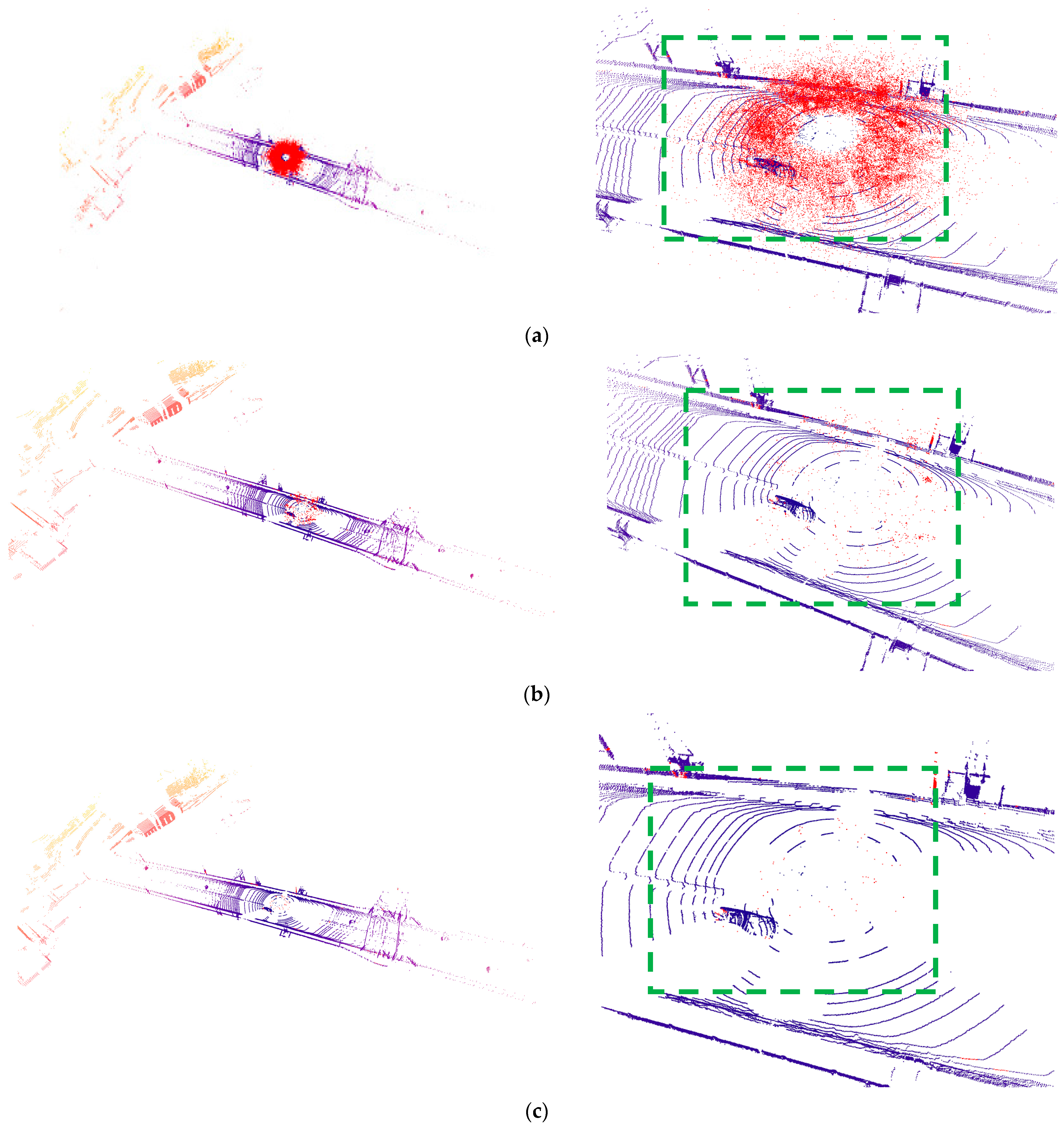

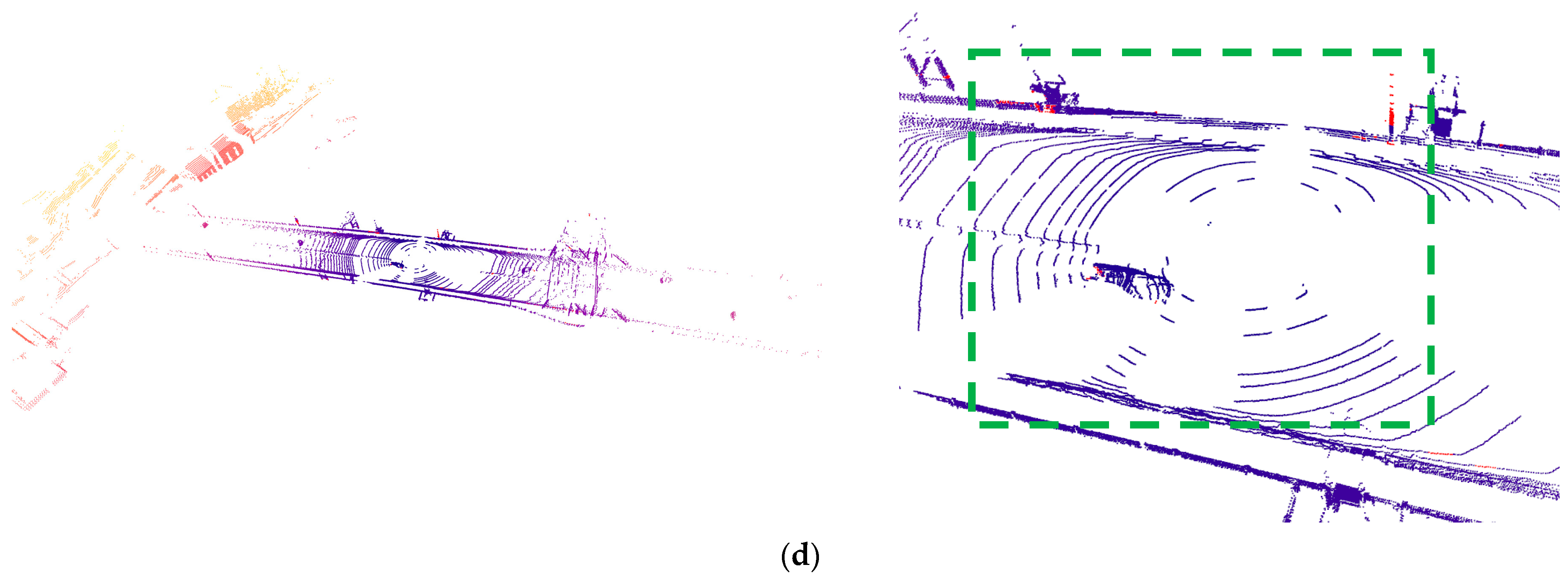

4.1. Qualitative Evaluation

4.2. Quantitative Evaluation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Liu, S.; Liu, L.; Tang, J.; Yu, B.; Wang, Y.; Shi, W. Edge computing for autonomous driving: Opportunities and challenges. Proc. IEEE 2019, 107, 1697–1716.

2. Li, Y.; Ma, L.; Zhong, Z.; Liu, F.; Chapman, M.A.; Cao, D.; Li, J. Deep learning for lidar point clouds in autonomous driving: A review. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 3412–3432.

3. Abbasi, R.; Bashir, A.K.; Alyamani, H.J.; Amin, F.; Doh, J.; Chen, J. Lidar point cloud compression, processing and learning for autonomous driving. IEEE Trans. Intell. Transp. Syst. 2022, 24, 962–979.

4. Heinzler, R.; Schindler, P.; Seekircher, J.; Ritter, W.; Stork, W. Weather influence and classification with automotive lidar sensors. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; IEEE: New York, NY, USA, 2019; pp. 1527–1534.

5. Yoneda, K.; Suganuma, N.; Yanase, R.; Aldibaja, M. Automated driving recognition technologies for adverse weather conditions. IATSS Res. 2019, 43, 253–262.

6. Wu, J.; Xu, H.; Zheng, J.; Zhao, J. Automatic vehicle detection with roadside LiDAR data under rainy and snowy conditions. IEEE Intell. Transp. Syst. Mag. 2020, 13, 197–209.

7. Royo, S.; Ballesta-Garcia, M. An overview of lidar imaging systems for autonomous vehicles. Appl. Sci. 2019, 9, 4093.

8. Michaud, S.; Lalonde, J.F.; Giguere, P. Towards characterizing the behavior of LiDARs in snowy conditions. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 21–25.

9. Jokela, M.; Kutila, M.; Pyykönen, P. Testing and validation of automotive point-cloud sensors in adverse weather conditions. Appl. Sci. 2019, 9, 2341.

10. Park, J.I.; Park, J.; Kim, K.S. Fast and accurate desnowing algorithm for LiDAR point clouds. IEEE Access 2020, 8, 160202–160212.

11. Zhou, S.; Xu, H.; Zhang, G.; Ma, T.; Yang, Y. DCOR: Dynamic channel-wise outlier removal to de-noise LiDAR data corrupted by snow. IEEE Trans. Intell. Transp. Syst. 2024, 25, 7017–7028.

12. Han, H.; Jin, X.; Li, Z. Denoising point clouds with intensity and spatial features in rainy weather. In Proceedings of the 2023 IEEE International Conference on Image Processing (ICIP), Kuala Lumpur, Malaysia, 8–11 October 2023; pp. 3015–3019.

13. Kurup, A.; Bos, J. DSOR: A scalable statistical filter for removing falling snow from lidar point clouds in severe winter weather. arXiv 2021, arXiv:2109.07078.

14. Pitropov, M.; Garcia, D.E.; Rebello, J.; Smart, M.; Wang, C.; Czarnecki, K.; Waslander, S. Canadian adverse driving conditions dataset. Int. J. Robot. Res. 2021, 40, 681–690.

15. Sheeny, M.; De Pellegrin, E.; Mukherjee, S.; Ahrabian, A.; Wang, S.; Wallace, A. RADIATE: A radar dataset for automotive perception in bad weather. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: New York, NY, USA, 2021; pp. 1–7.

16. Bijelic, M.; Gruber, T.; Mannan, F.; Kraus, F.; Ritter, W.; Dietmayer, K.; Heide, F. Seeing through fog without seeing fog: Deep multimodal sensor fusion in unseen adverse weather. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11682–11692.

17. Sun, C.; Sun, P.; Wang, J.; Guo, Y.; Zhao, X. Understanding LiDAR Performance for Autonomous Vehicles Under Snowfall Conditions. IEEE Trans. Intell. Transp. Syst. 2024, 25, 16462–16472.

18. Rusu, R.B.; Cousins, S. 3D is here: Point Cloud Library (PCL). In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; IEEE: New York, NY, USA, 2011; pp. 1–4.

19. Prio, M.H.; Patel, S.; Koley, G. Implementation of dynamic radius outlier removal (DROR) algorithm on lidar point cloud data with arbitrary white noise addition. In Proceedings of the 2022 IEEE 95th Vehicular Technology Conference (VTC2022-Spring), Helsinki, Finland, 19–22 June 2022; IEEE: New York, NY, USA, 2022; pp. 1–7.

20. Wang, W.; You, X.; Chen, L.; Tian, J.; Tang, F.; Zhang, L. A scalable and accurate de-snowing algorithm for LiDAR point clouds in winter. Remote Sens. 2022, 14, 1468.

21. Ibrahim, M.R.; Haworth, J.; Cheng, T. WeatherNet: Recognising weather and visual conditions from street-level images using deep residual learning. ISPRS Int. J. Geo-Inf. 2019, 8, 549.

22. Wichmann, M.; Kamil, M.; Frederiksen, A.; Kotzur, S.; Scherl, M. Long-term investigations of weather influence on direct time-of-flight LiDAR at 905 nm. IEEE Sens. J. 2021, 22, 2024–2036.

23. Linnhoff, C.; Hofrichter, K.; Elster, L.; Rosenberger, P.; Winner, H. Measuring the influence of environmental conditions on automotive lidar sensors. Sensors 2022, 22, 5266.

24. Zhang, Y.; Carballo, A.; Yang, H.; Takeda, K. Perception and sensing for autonomous vehicles under adverse weather conditions: A survey. ISPRS J. Photogramm. Remote Sens. 2023, 196, 146–177.

25. Servomaa, H.; Muramoto, K.I.; Shiina, T. Snowfall characteristics observed by weather radars, an optical lidar and a video camera. IEICE Trans. Inf. Syst. 2002, 85, 1314–1324.

26. Hahner, M.; Sakaridis, C.; Bijelic, M.; Heide, F.; Yu, F.; Dai, D.; Van Gool, L. Lidar snowfall simulation for robust 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16364–16374.

27. Wang, W.; Yang, T.; Du, Y.; Liu, Y. Snow Removal for LiDAR Point Clouds with Spatio-Temporal Conditional Random Fields. IEEE Robot. Autom. Lett. 2023, 8, 6739–6746.

28. Han, S.-J.; Lee, D.; Min, K.-W.; Choi, J. RGOR: De-noising of LiDAR point clouds with reflectance restoration in adverse weather. In Proceedings of the 2023 14th International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 11–13 October 2023; pp. 1844–1849.

29. Park, J.-I.; Jo, S.; Seo, H.-T.; Park, J. LiDAR Denoising Methods in Adverse Environments: A Review. IEEE Sens. J. 2025, 25, 7916–7932.

| Dataset | LiDAR Channels | Features | Sensors | Weather Conditions | Annotations | Data Size |
|---|---|---|---|---|---|---|
| DENSE | 32 | Focus on enhancing perception systems for autonomous driving in severe conditions | LiDAR, Radar, Cameras | Snow, Rain, Fog, Low Light | Primarily bounding boxes for object detection | Approx. 8 TB |
| CADC | 32 | Canadian winter conditions, urban/rural driving environments | LiDAR, Radar, Cameras | Snow, Rain | Bounding boxes for object detection | 200 GB+ |
| RADIATE | 64 | Adverse weather focus, complex environments | LiDAR, Radar, Cameras | Rain, Snow, Fog, Low Light | Bounding boxes for object detection | Approx. 400 GB |
| WADS | 32 | Collected in extreme winter conditions in Michigan’s Upper Peninsula | LiDAR, Radar, Cameras | Heavy Snowfall, Icy Roads, Snow-Covered Roads | Point-level LiDAR annotations | 26 TB (7 GB LiDAR, 3.6 billion points) |

| Algorithm | Parameter | Value |
|---|---|---|
| DSOR | k for KNN search | 5 |
| DSOR | Global threshold constant | 0.01 |
| DSOR | Range multiplicative factor | 0.05 |
| LIOR | Search radius | 0.1 m |
| LIOR | Min. number of neighbors | 3 |
| LIOR | Snow detection range | 71.235 m |
| LIOR | Intensity threshold constant | 0.066 |
| DDIOR | k for KNN search | 5 |
| DVIOR | k for KNN search | 5 |
| DVIOR | Distance threshold | |
| DVIOR | Intensity threshold | 1.0 |

| Dataset | Method | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| WADS [13] | DSOR | 65.07 | 95.60 | 77.43 |
| WADS [13] | LIOR | 80.90 | 78.10 | 79.00 |
| WADS [13] | DDIOR | 69.87 | 95.23 | 80.60 |
| WADS [13] | WeatherNet | 94.88 | 85.13 | 89.74 |
| WADS [13] | DVIOR | 86.02 | 96.25 | 90.80 |
| WADS [13] | DVIOR (no z-axis) | 64.73 | 98.33 | 78.07 |
| CADC [14] | DSOR | 71.55 | 96.01 | 81.74 |
| CADC [14] | LIOR | 76.64 | 89.75 | 82.68 |
| CADC [14] | DDIOR | 51.96 | 98.60 | 67.53 |
| CADC [14] | DVIOR | 83.92 | 91.07 | 87.35 |
| CADC [14] | DVIOR (no z-axis) | 75.72 | 91.65 | 82.92 |
| RADIATE [15] | DSOR | 29.43 | 99.19 | 45.39 |
| RADIATE [15] | LIOR | 56.73 | 86.66 | 68.73 |
| RADIATE [15] | DDIOR | 36.04 | 80.40 | 49.78 |
| RADIATE [15] | DVIOR | 78.93 | 96.11 | 86.68 |
| RADIATE [15] | DVIOR (no z-axis) | 59.33 | 96.88 | 73.60 |
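
The F1-score is the harmonic mean of precision and recall, so the table entries can be cross-checked with a few lines of Python. The sketch below recomputes F1 for some rows and the DVIOR-over-DDIOR gaps quoted in the contributions. The helper name `f1_score` and the dictionary layout are illustrative choices, and the numbers are copied from the results table. Recomputed values can differ from the reported ones in the second decimal, for instance if the reported metrics were averaged per frame (an assumption; the source does not say how they were aggregated).

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the results table
rows = {
    ("WADS", "DSOR"): (65.07, 95.60, 77.43),
    ("CADC", "DVIOR"): (83.92, 91.07, 87.35),
    ("RADIATE", "DVIOR"): (78.93, 96.11, 86.68),
}

# Reported F1 of DVIOR and DDIOR per dataset, also from the table
reported_f1 = {
    "WADS": {"DVIOR": 90.80, "DDIOR": 80.60},
    "CADC": {"DVIOR": 87.35, "DDIOR": 67.53},
    "RADIATE": {"DVIOR": 86.68, "DDIOR": 49.78},
}

# Gap between the two methods, rounded to two decimals
f1_gap = {
    name: round(scores["DVIOR"] - scores["DDIOR"], 2)
    for name, scores in reported_f1.items()
}

if __name__ == "__main__":
    for (dataset, method), (p, r, reported) in rows.items():
        print(f"{dataset:8s} {method:6s} recomputed={f1_score(p, r):.2f} reported={reported:.2f}")
    for name, gap in f1_gap.items():
        print(f"{name:8s} DVIOR - DDIOR = {gap:.2f}")
```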

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ruan, G.; Kong, F.; Ding, C.; Yang, K.; Hu, T.; Yan, R. DVIOR: Dynamic Vertical and Low-Intensity Outlier Removal for Efficient Snow Noise Removal from LiDAR Point Clouds in Adverse Weather. Electronics 2025, 14, 3662. https://doi.org/10.3390/electronics14183662
