YOLOv11-Based UAV Foreign Object Detection for Power Transmission Lines

Abstract

1. Introduction

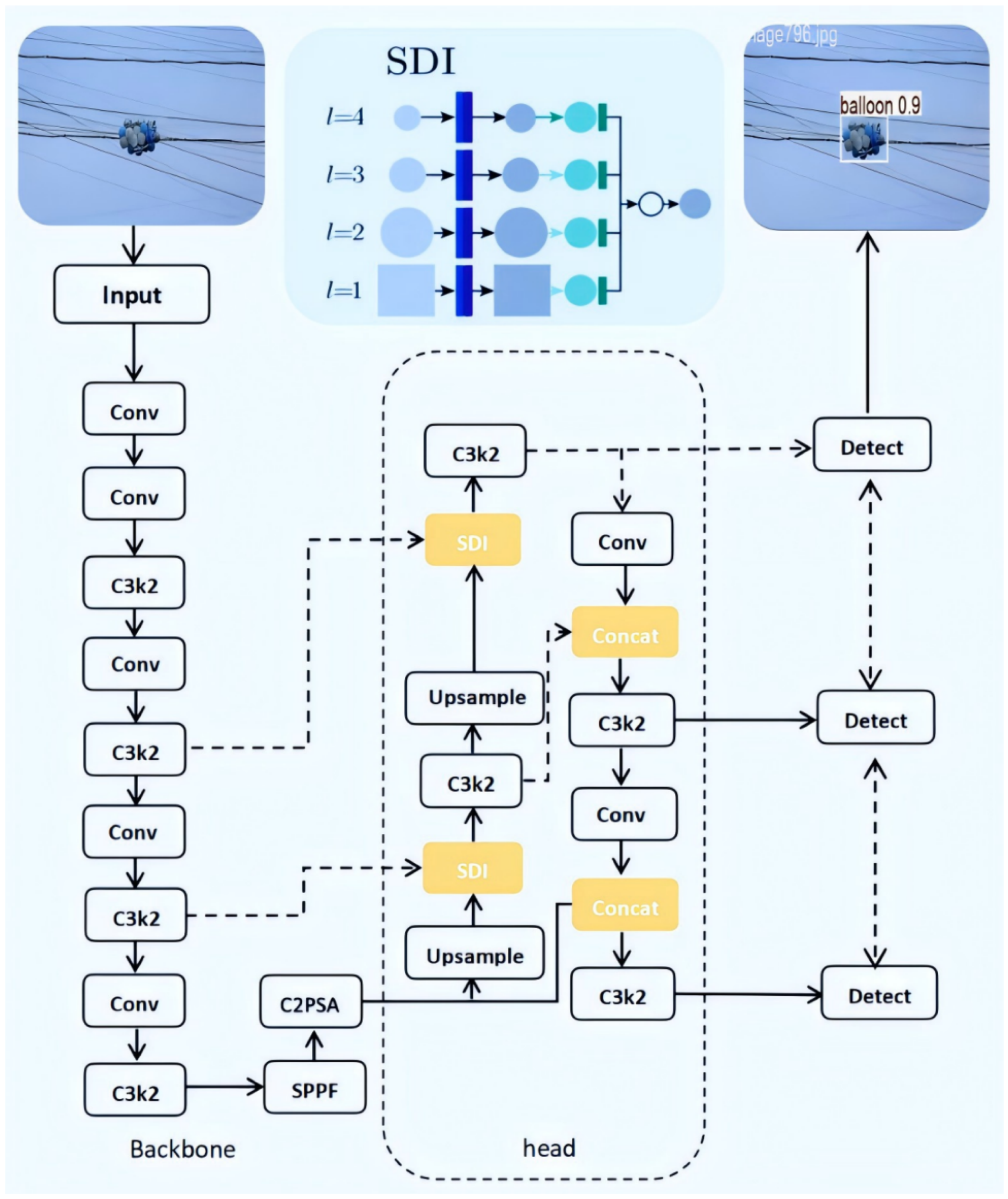

- This paper proposes YOLOv11_SDI, an end-to-end detector for foreign object detection on transmission lines. Its novelty lies in integrating the Spatial-channel Dynamic Inference (SDI) module into the YOLOv11 network.

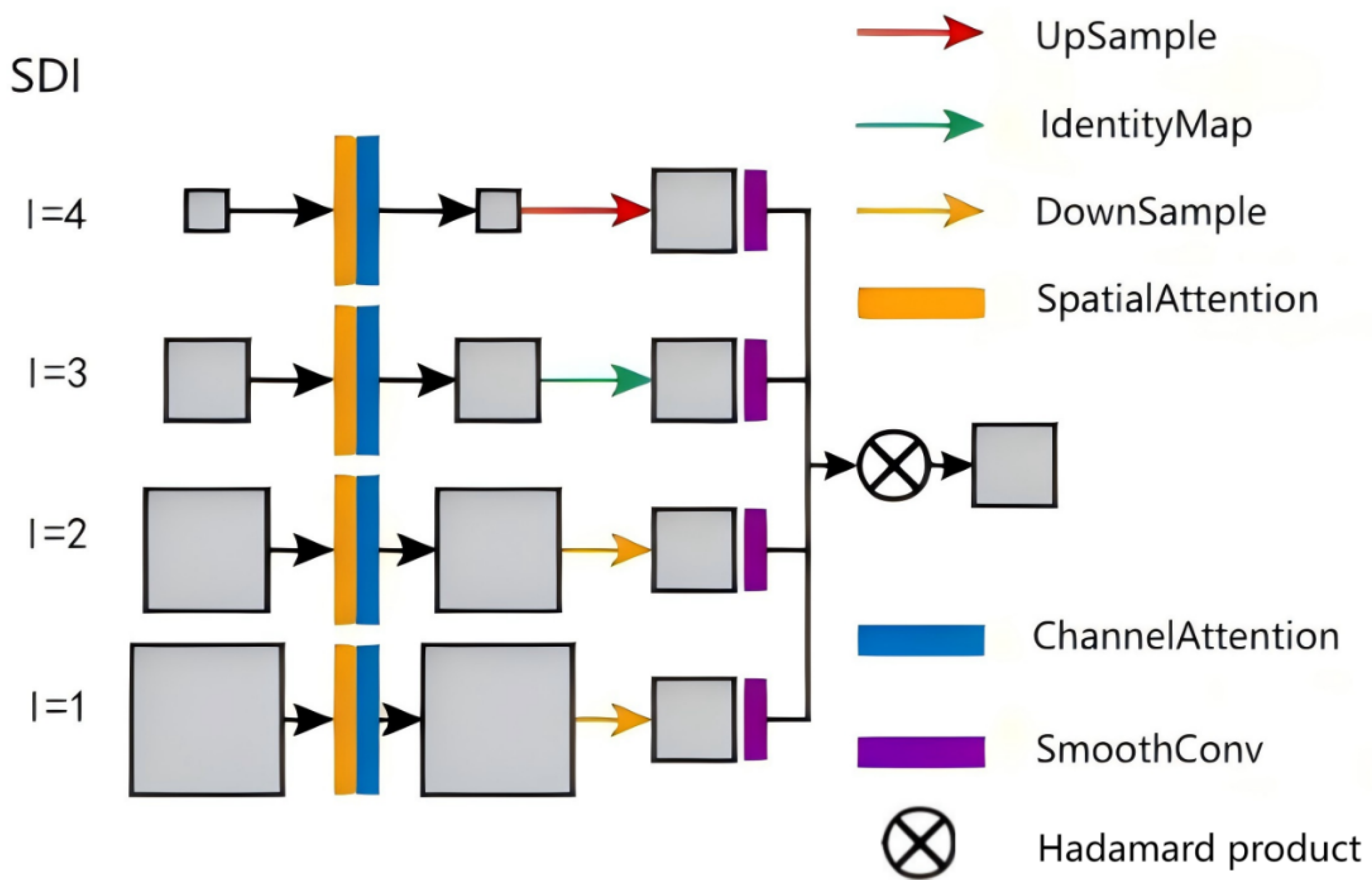

- This paper incorporates a spatial attention unit that jointly optimizes multi-layer features, achieving adaptive fusion of high-level semantic information and low-level detail features. This efficiently strengthens the model’s attention on critical regions.

- Experiments on the EFOD_Drone dataset demonstrate the effectiveness of the proposed YOLOv11_SDI model, which achieves a precision of 94.1%, a recall of 94.4%, and a mAP@0.50 of 95.2%, outperforming existing mainstream methods.

2. Related Work

2.1. Foreign Object Detection Methods

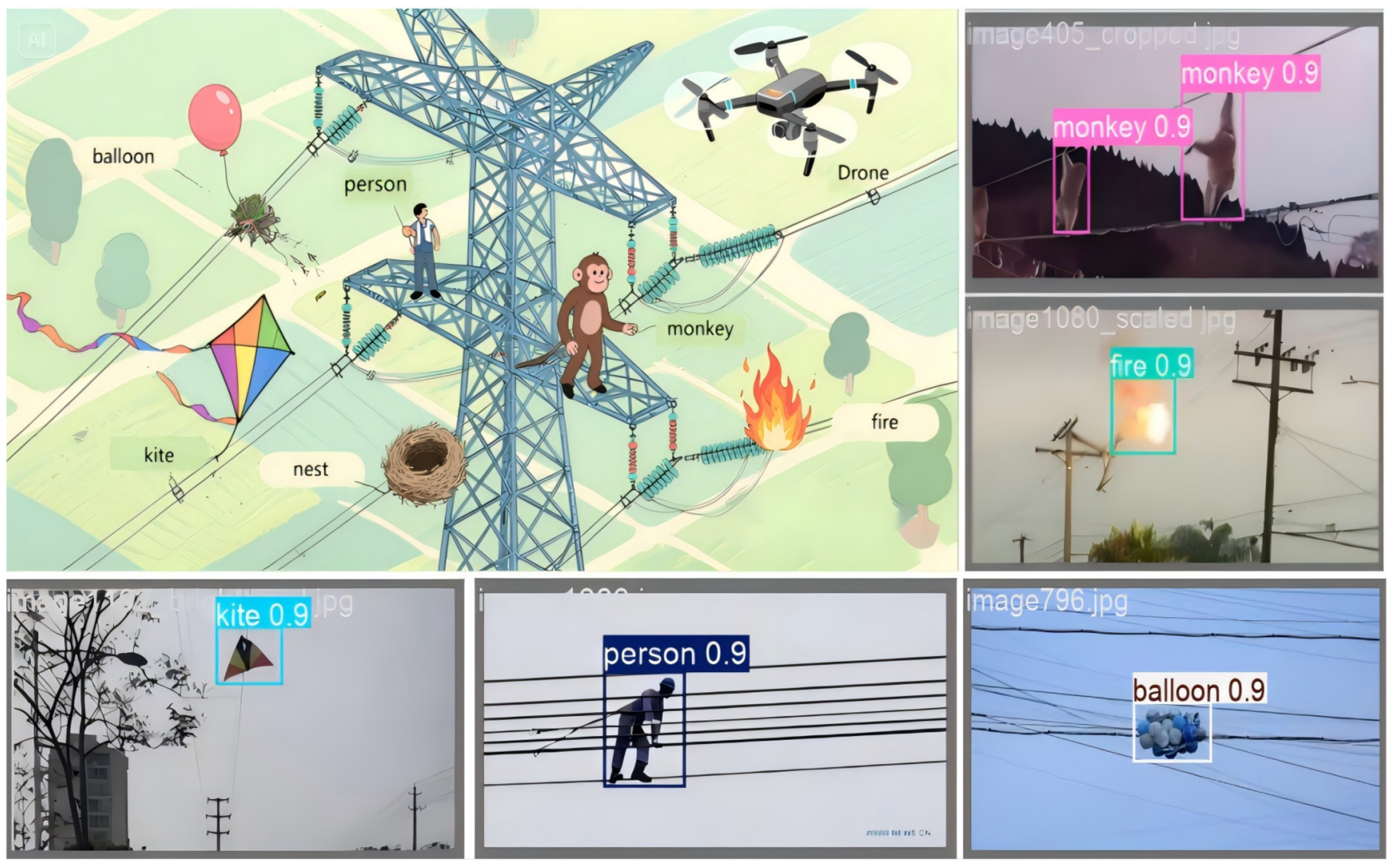

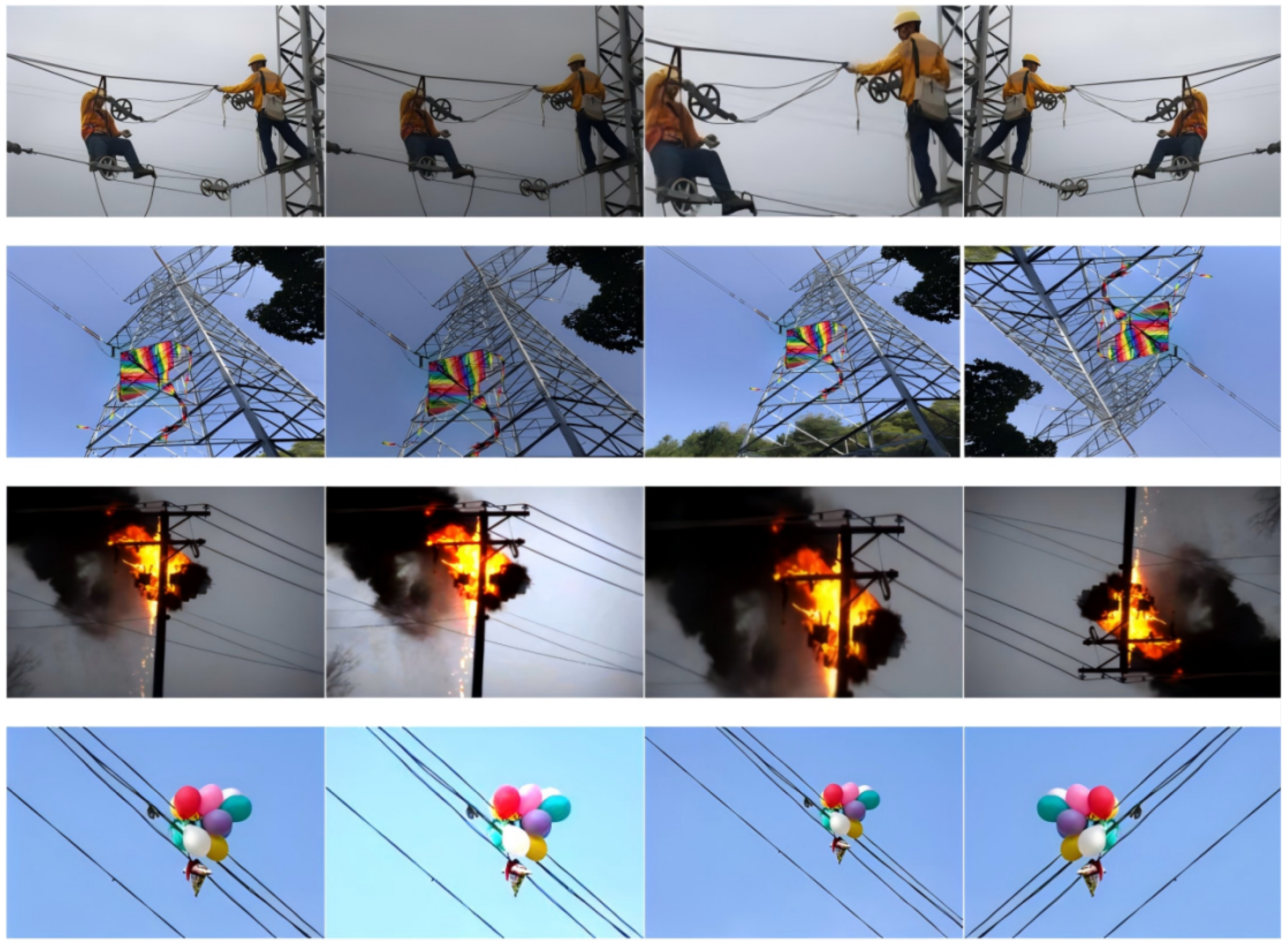

2.2. Foreign Object Detection Datasets

3. Methodology

3.1. The Enhanced YOLOv11 with Spatial-Channel Dynamic Inference

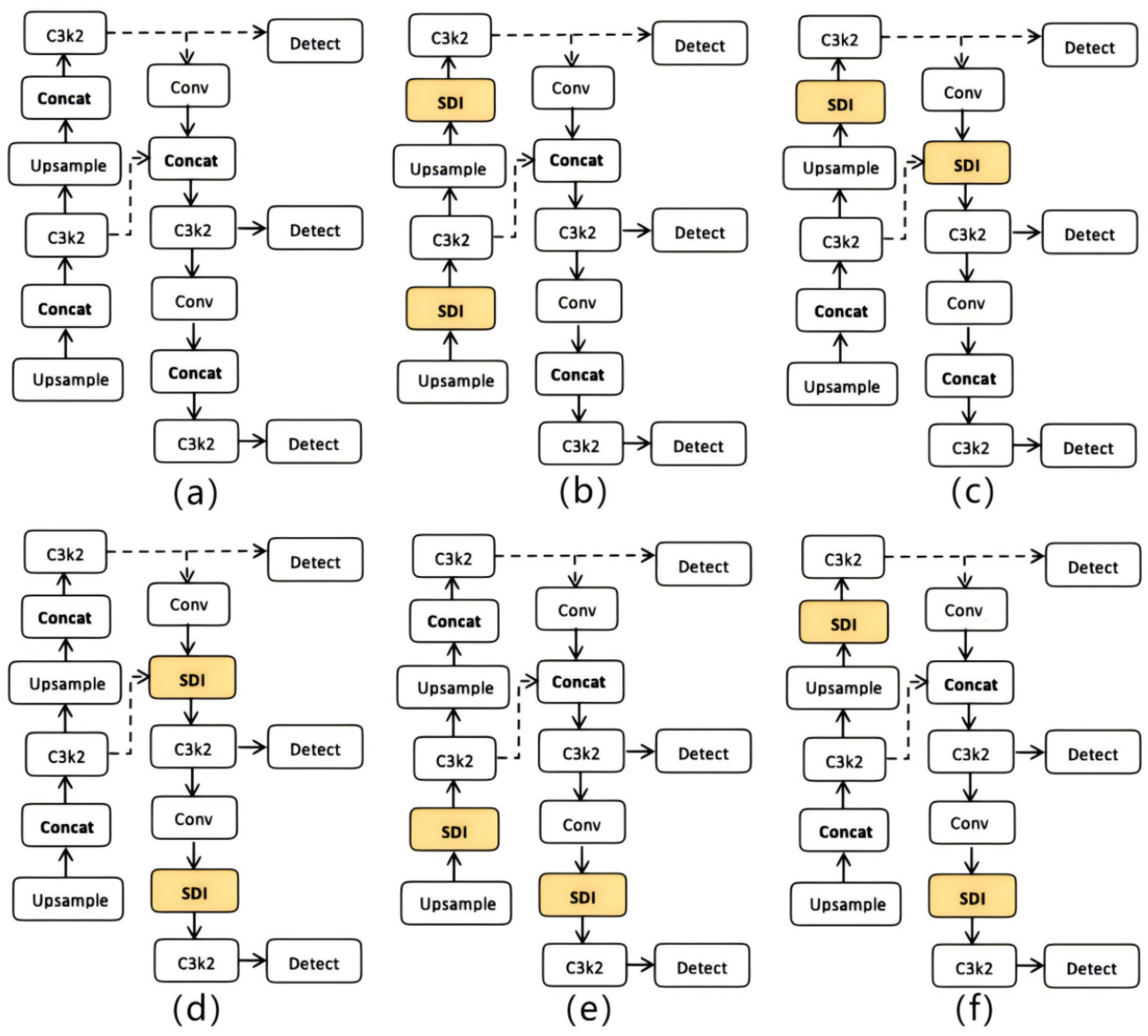

3.2. Variants of YOLOv11_SDI

4. Experiments

4.1. Evaluation Metrics and Dataset

4.2. Selection of the Feature Enhancement Module

4.3. Model Selection

4.4. Ablation Study

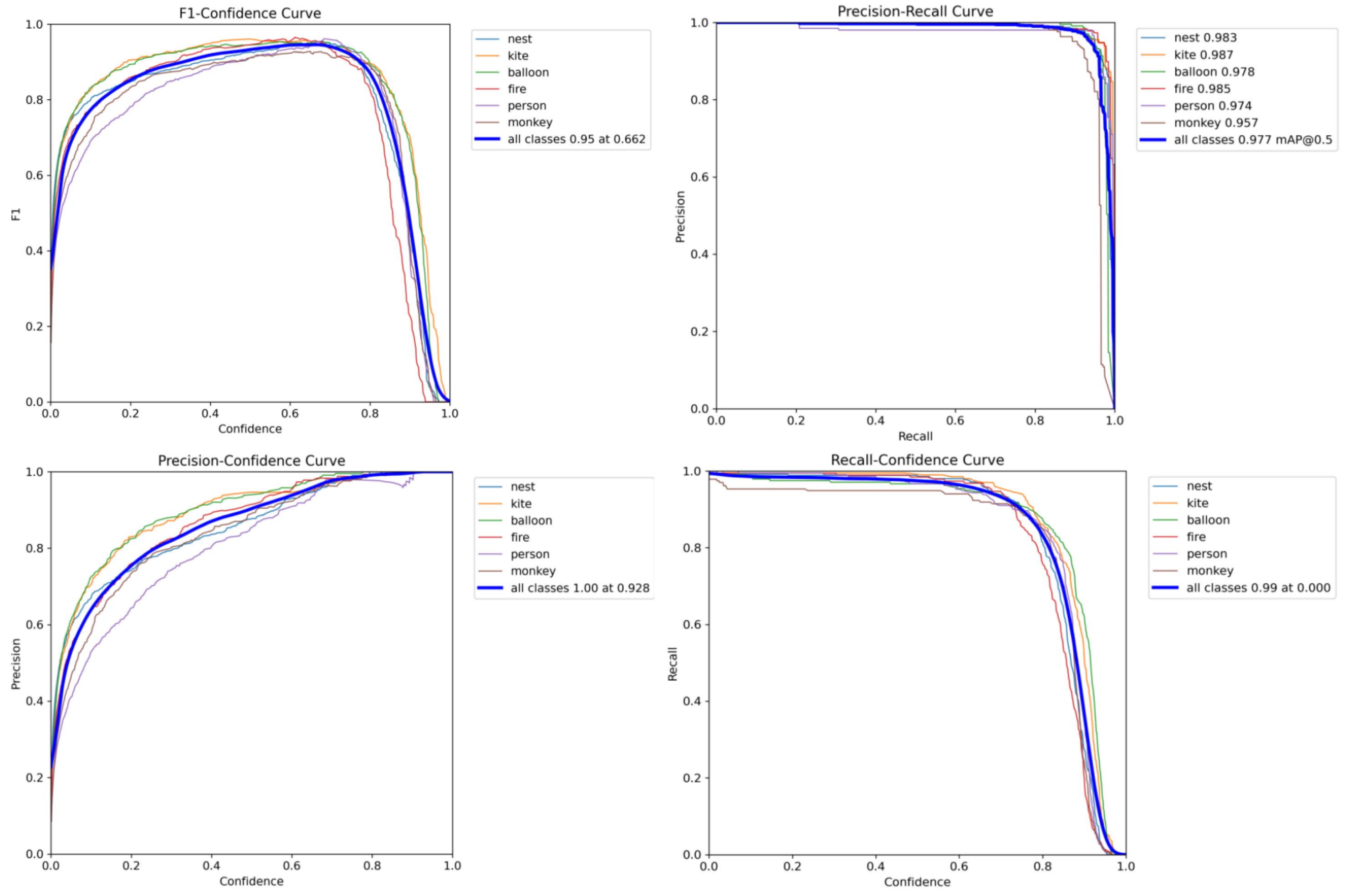

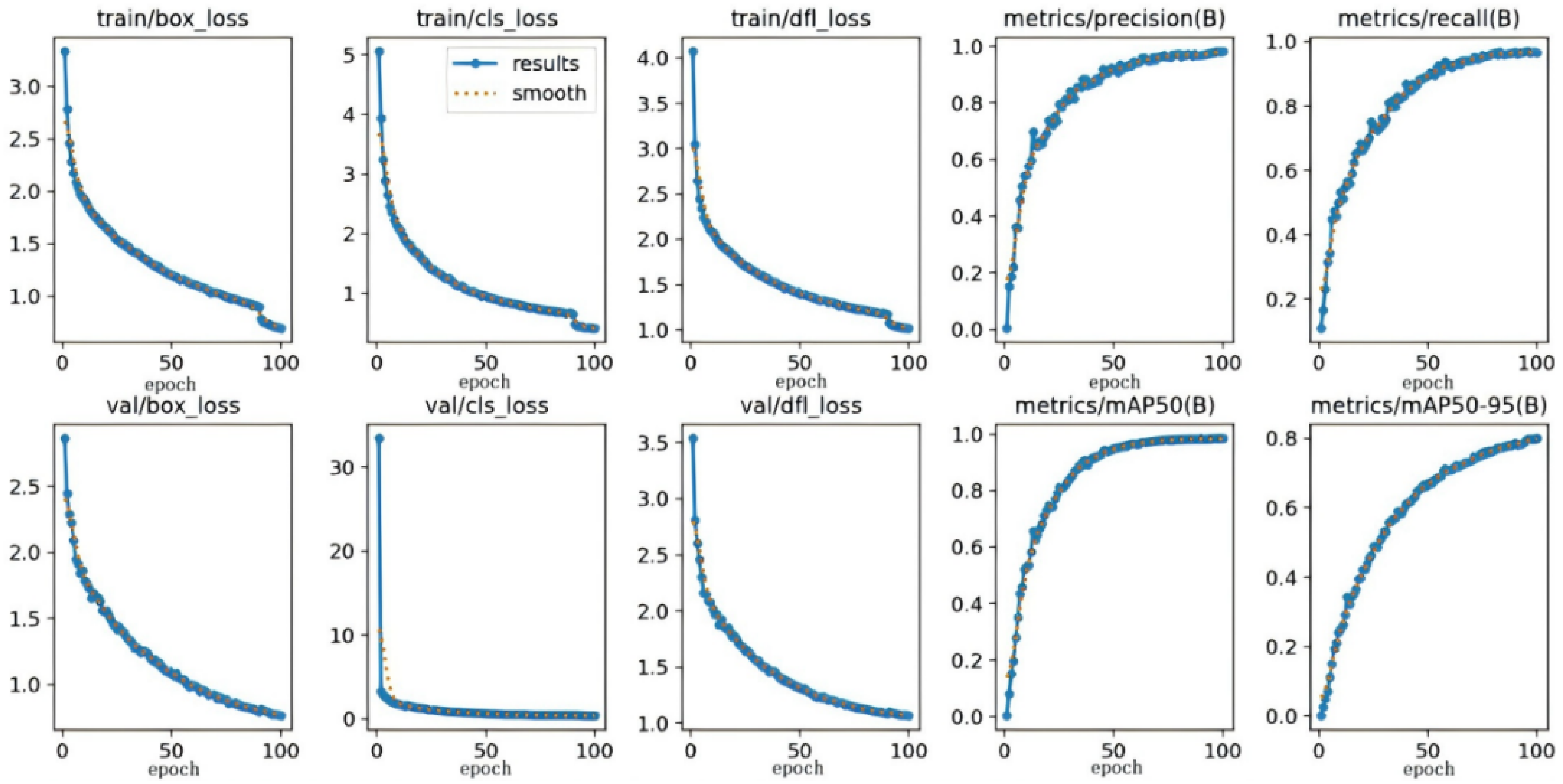

4.5. Our Model’s Training

4.6. Quantitative Comparison with Other Approaches

- Superior Accuracy: Achieves the highest mAP@0.50 (95.2%), outperforming all competitors, including YOLOv8n (92.4%) and YOLOX (91.2%).

- Optimal Efficiency: With only 3.74 M parameters, our model strikes a better accuracy–efficiency balance than larger models (e.g., YOLOv7, 37.2 M) while surpassing lighter models (e.g., YOLOv8n, 3.2 M) in performance.

- Robust Feature Learning: The highest precision (94.1%) and recall (94.4%) indicate robustness against false positives and missed detections, critical for drone-based inspections in complex environments.

- Precision and Recall Gains: Our method improves precision by 1.4 percentage points over YOLOv8n and 2.0 points over YOLOX, reducing false alarms. At the same time, it raises recall by 3.9 points over YOLOv8n and 3.4 points over YOLOX, improving object coverage.

- mAP@0.50 Dominance: The 95.2% mAP@0.50 represents an absolute gain of 2.8 percentage points over YOLOv8n (92.4%) and 4.0 points over YOLOX (91.2%), despite comparable parameter counts. Notably, our model outperforms YOLOv7 (85.3% mAP) and RetinaNet (87.1% mAP) by 9.9 and 8.1 points, respectively, even though both are roughly 10× larger.

- Efficiency–Accuracy Pareto Frontier: As illustrated in Table 8, our method lies on the parameter–accuracy Pareto front: it achieves the highest mAP@0.50 of all compared models, and only YOLOv8n (3.2 M) uses fewer parameters. For instance, compared to Gold_YOLO (5.6 M, 87.5% mAP), our model reduces parameters by 33% while improving mAP by 7.7 percentage points. Against YOLOv11 (7.5 M, 92.2% mAP), it uses 50% fewer parameters yet delivers 3.0 points higher mAP. Although RT-DETR achieves competitive performance (88.2% mAP), its parameter count (41.96 M) is 11.2 times that of our model, making it impractical for resource-constrained UAV deployments.
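The gains and the Pareto claim in these bullets can be re-derived from Table 8. The sketch below hand-copies the table values, reports differences in percentage points and parameter ratios, and computes a two-objective Pareto front over parameter count and mAP@0.50 only; GFLOPs and latency are deliberately ignored. The function names are illustrative, not part of any library. Under this definition the front contains both our model and YOLOv8n, the only compared model with fewer parameters.

```python
# Values from Table 8: (parameters in M, precision %, recall %, mAP@0.50 %)
MODELS = {
    "YOLOv5": (7.0, 91.4, 90.9, 91.8),
    "YOLOv7": (37.2, 87.2, 81.2, 85.3),
    "YOLOX": (5.02, 92.1, 91.0, 91.2),
    "YOLOv8n": (3.2, 92.7, 90.5, 92.4),
    "YOLOv11": (7.5, 90.7, 91.0, 92.2),
    "RetinaNet": (37.7, 85.8, 85.6, 87.1),
    "Gold_YOLO": (5.6, 87.5, 82.1, 87.5),
    "RT-DETR": (41.96, 87.9, 86.3, 88.2),
    "Ours": (3.74, 94.1, 94.4, 95.2),
}


def pp_gain(ours: float, other: float) -> float:
    """Absolute difference in percentage points, rounded to one decimal."""
    return round(ours - other, 1)


def pareto_front(models: dict) -> list:
    """Names not dominated on (fewer parameters, higher mAP@0.50)."""
    front = []
    for name, (params, _, _, m) in models.items():
        dominated = any(
            p2 <= params and m2 >= m and (p2 < params or m2 > m)
            for other, (p2, _, _, m2) in models.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front


ours_params, ours_prec, ours_rec, ours_map = MODELS["Ours"]
for name in ("YOLOv8n", "YOLOX", "YOLOv11", "Gold_YOLO", "RetinaNet", "YOLOv7", "RT-DETR"):
    params, prec, rec, m = MODELS[name]
    print(f"{name:>9}: P {pp_gain(ours_prec, prec):+.1f} pp, R {pp_gain(ours_rec, rec):+.1f} pp, "
          f"mAP {pp_gain(ours_map, m):+.1f} pp, size ratio {params / ours_params:.2f}x")
print(pareto_front(MODELS))  # ['YOLOv8n', 'Ours']
```

Reporting absolute differences as percentage points avoids confusing them with relative gains (for example, 2.8 points over YOLOv8n is about a 3% relative improvement).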

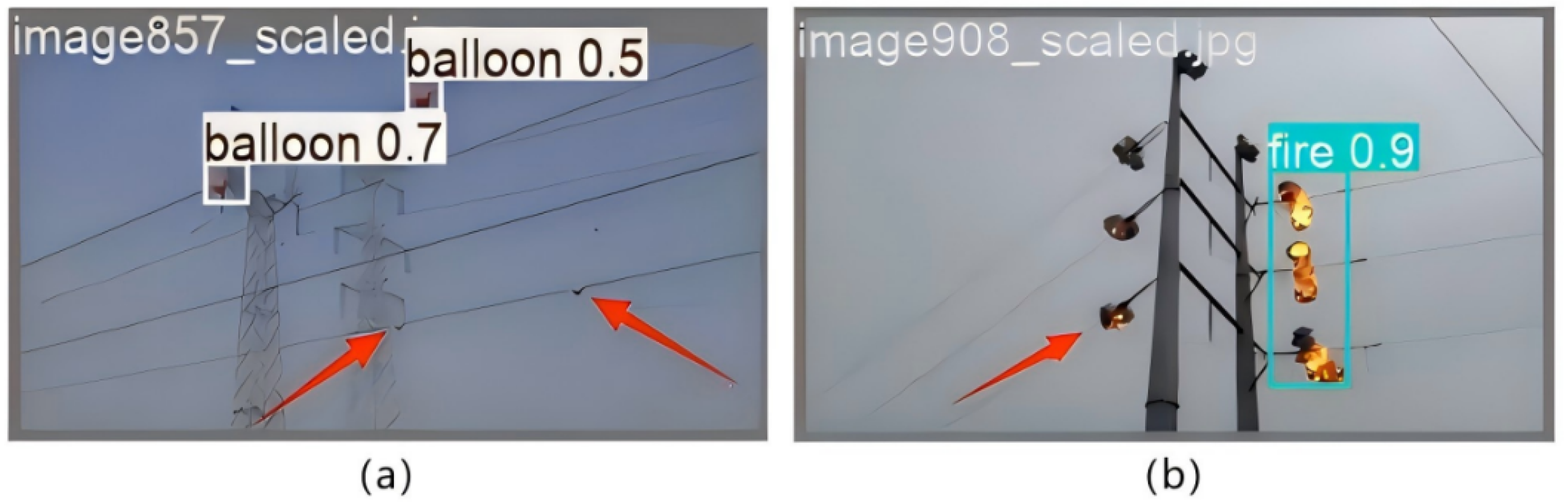

4.7. Visual Results

- Low-Contrast Targets: Objects such as personnel and fires (e.g., eighth row) appear dark against bright, sky-dominated backgrounds, yet remain detectable.

- Occlusion Handling: The model copes with obstructions caused by transmission infrastructure (e.g., towers and wires), demonstrating resilience to partial occlusion.

- Small-Scale Fire Detection: Critically, the system accurately identifies even small-scale fire incidents (e.g., drone-induced fires), which are vital for early hazard prevention.

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SDI | Spatial-channel Dynamic Inference |
| FOTL_Drone | Foreign Object detection on Transmission Lines from a Drone-view |
| EFOD_Drone | Foreign Object detection on Transmission Lines from a Drone-view |

References

1. Zhang, S.; Li, H.C.; Song, Y.; Yan, C.; Wang, J. YOLOv5-Based Foreign Object Detection Algorithm for Transmission Lines. In Proceedings of the 2024 6th Asia Symposium on Image Processing (ASIP), Tianjin, China, 13–15 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 40–46.

2. Wang, G. FMH-YOLO: Detecting Foreign Objects on Transmission Lines via Enhanced Yolov8. Acad. J. Eng. Technol. Sci. 2025, 8, 8–17.

3. Lu, Y.; Li, D.; Li, D.; Li, X.; Gao, Q.; Yu, X. A Lightweight Insulator Defect Detection Model Based on Drone Images. Drones 2024, 8, 431.

4. Li, Z.; Zhang, Y.; Wu, H.; Suzuki, S.; Namiki, A.; Wang, W. Design and Application of a UAV Autonomous Inspection System for High-Voltage Power Transmission Lines. Remote Sens. 2023, 15, 865.

5. Faisal, M.A.A.; Mecheter, I.; Qiblawey, Y.; Fernandez, J.H.; Chowdhury, M.E.; Kiranyaz, S. Deep Learning in Automated Power Line Inspection: A Review. Appl. Energy 2025, 385, 125507.

6. Wang, J.; Jin, L.; Li, Y.; Cao, P. Application of End-to-End Perception Framework Based on Boosted DETR in UAV Inspection of Overhead Transmission Lines. Drones 2024, 8, 545.

7. Su, J.; Su, Y.; Zhang, Y.; Yang, W.; Huang, H.; Wu, Q. EpNet: Power Lines Foreign Object Detection with Edge Proposal Network and Data Composition. Knowl.-Based Syst. 2022, 249, 108857.

8. Dai, L.; Zhang, X.; Gardoni, P.; Lu, H.; Liu, X.; Krolczyk, G.; Li, Z. A New Machine Vision Detection Method for Identifying and Screening Out Various Large Foreign Objects on Coal Belt Conveyor Lines. Complex Intell. Syst. 2023, 9, 5221–5234.

9. Li, J.; Nie, Y.; Cui, W.; Liu, R.; Zheng, Z. Transmission Line Foreign Object Detection Based on Improved YOLOv3 and Deployed to the Chip. In Proceedings of the 2020 3rd International Conference on Machine Learning and Machine Intelligence, Hangzhou, China, 18–20 September 2020; pp. 100–104.

10. Xu, L.; Song, Y.; Zhang, W.; An, Y.; Wang, Y.; Ning, H. An Efficient Foreign Objects Detection Network for Power Substation. Image Vis. Comput. 2021, 109, 104159.

11. Chen, B.; Liu, L.; Zou, Z.; Shi, Z. Target Detection in Hyperspectral Remote Sensing Image: Current Status and Challenges. Remote Sens. 2023, 15, 3223.

12. Hao, J.; Yan, G.; Wang, L.; Pei, H.; Xiao, X.; Zhang, B. A Lightweight Transmission Line Foreign Object Detection Algorithm Incorporating Adaptive Weight Pooling. Electronics 2024, 13, 4645.

13. Yao, N.; Zhu, L.F. A Novel Foreign Object Detection Algorithm Based on GMM and K-Means for Power Transmission Line Inspection. J. Phys. Conf. Ser. 2020, 1607, 012014.

14. Benelmostafa, B.-E.; Medromi, H. PowerLine-MTYOLO: A Multitask YOLO Model for Simultaneous Cable Segmentation and Broken Strand Detection. Drones 2025, 9, 505.

15. Wu, Y.; Zhao, S.; Xing, Z.; Wei, Z.; Li, Y.; Li, Y. Detection of Foreign Objects Intrusion into Transmission Lines Using Diverse Generation Model. IEEE Trans. Power Deliv. 2023, 38, 3551–3560.

16. Zhang, X.; Li, J. A Survey on Detecting Foreign Objects on Transmission Lines Based on UAV Images. In Proceedings of the International Conference on Intelligent Robotics and Applications, Xi’an, China, 31 July–2 August 2024; Springer Nature: Singapore, 2024; pp. 388–402.

17. Jiang, T.; Li, C.; Yang, M.; Wang, Z. Improved YOLOv5s Algorithm for Object Detection with an Attention Mechanism. Electronics 2022, 11, 2494.

18. Li, Z.; Chen, G.; Zhang, T. A CNN-Transformer Hybrid Approach for Crop Classification Using Multi-Temporal Multisensor Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 847–858.

19. Guan, F.; Zhang, H.; Wang, X. An Improved YOLOv8 Model for Prohibited Item Detection with Deformable Convolution and Dynamic Head. J. Real-Time Image Process. 2025, 22, 84.

20. Yu, Y.; Lv, H.; Chen, W.; Wang, Y. Research on Defect Detection for Overhead Transmission Lines Based on the ABG-YOLOv8n Model. Energies 2024, 17, 5974.

21. Su, Y.; Sun, R.; Lin, G.; Wu, Q. Context Decoupling Augmentation for Weakly Supervised Semantic Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 7004–7014.

22. Su, Y.; Deng, J.; Sun, R.; Lin, G.; Wu, Q. A Unified Transformer Framework for Group-Based Segmentation: Co-Segmentation, Co-Saliency Detection and Video Salient Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 5879–5895.

23. Guo, X.; Bao, Y.; Jiang, H.; Feng, Z.; Sun, Y. RDBL-Net: Detection of Foreign Objects on Transmission Lines Based on Positional Encoding Multiscale Feature Fusion. Int. J. Sens. Netw. 2025, 47, 61–71.

24. Ji, C.; Jia, X.; Huang, X.; Zhou, S.; Chen, G.; Zhu, Y. FusionNet: Detection of Foreign Objects in Transmission Lines During Inclement Weather. IEEE Trans. Instrum. Meas. 2024, 73, 5021218.

25. Sun, H.; Shen, Q.; Ke, H.; Duan, Z.; Tang, X. Power Transmission Lines Foreign Object Intrusion Detection Method for Drone Aerial Images Based on Improved YOLOv8 Network. Drones 2024, 8, 346.

26. Wang, B.; Li, C.; Zou, W.; Zheng, Q. Foreign Object Detection Network for Transmission Lines from Unmanned Aerial Vehicle Images. Drones 2024, 8, 361.

27. Han, G.; Wang, R.; Yuan, Q.; Zhao, L.; Li, S.; Zhang, M.; He, M.; Qin, L. Typical Fault Detection on Drone Images of Transmission Lines Based on Lightweight Structure and Feature-Balanced Network. Drones 2023, 7, 638.

28. Bae, M.H.; Park, S.W.; Park, J.; Jung, S.H.; Sim, C.B. YOLO-RACE: Reassembly and Convolutional Block Attention for Enhanced Dense Object Detection. Pattern Anal. Appl. 2025, 28, 90.

29. Wang, J.; Guo, Y.; Tan, X.; Lan, Y.; Han, Y. Enhancing Green Guava Segmentation with Texture Consistency Loss and Reverse Attention Mechanism Under Complex Background. Comput. Electron. Agric. 2025, 216, 110308.

30. Alamri, F.; Pugeault, N. Improving Object Detection Performance Using Scene Contextual Constraints. IEEE Trans. Cogn. Dev. Syst. 2020, 14, 1320–1330.

31. Wang, S.; Jiang, H.; Yang, J.; Ma, X.; Chen, J. AMFEF-DETR: An End-to-End Adaptive Multiscale Feature Extraction and Fusion Object Detection Network Based on UAV Aerial Images. Drones 2024, 8, 523.

32. Mao, Q.; Li, Q.; Wang, B.; Zhang, Y.; Dai, T.; Chen, C.P. SpirDet: Towards Efficient, Accurate and Lightweight Infrared Small Target Detector. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5006912.

33. Li, H.; Dong, Y.; Liu, Y.; Ai, J. Design and Implementation of UAVs for Bird’s Nest Inspection on Transmission Lines Based on Deep Learning. Drones 2022, 6, 252.

34. Chen, Z.; Yang, J.; Feng, Z.; Zhu, H. RailFOD23: A Dataset for Foreign Object Detection on Railroad Transmission Lines. Sci. Data 2024, 11, 72.

35. Wang, S.; Tan, W.; Yang, T.; Zeng, L.; Hou, W.; Zhou, Q. High-Voltage Transmission Line Foreign Object and Power Component Defect Detection Based on Improved YOLOv5. J. Electr. Eng. Technol. 2024, 19, 851–866.

36. Zhu, J.; Guo, Y.; Yue, F.; Yuan, H.; Yang, A.; Wang, X.; Rong, M. A Deep Learning Method to Detect Foreign Objects for Inspecting Power Transmission Lines. IEEE Access 2020, 8, 94065–94075.

37. Wang, Z.; Yuan, G.; Zhou, H.; Ma, Y.; Ma, Y. Foreign-Object Detection in High-Voltage Transmission Line Based on Improved YOLOv8m. Appl. Sci. 2023, 13, 12775.

38. Yue, G.; Liu, Y.; Niu, T.; Liu, L.; An, L.; Wang, Z.; Duan, M. Glu-YOLOv8: An Improved Pest and Disease Target Detection Algorithm Based on YOLOv8. Forests 2024, 15, 1486.

39. Buhari, A.M.; Ooi, C.P.; Baskaran, V.M.; Phan, R.C.; Wong, K.; Tan, W.H. Invisible Emotion Magnification Algorithm (IEMA) for Real-Time Micro-Expression Recognition with Graph-Based Features. Multimed. Tools Appl. 2022, 81, 9151–9176.

40. Jordan, S. Using E-Assessment to Learn About Learning. In Proceedings of the CAA 2013 International Conference, Southampton, UK, 9–10 July 2013; pp. 1–12.

41. Jocher, G.; Stoken, A.; Borovec, J.; Liu, C.; Hogan, A.; Diaconu, L.; Poznanski, J.; Yu, L.; Rai, P.; Ferriday, R.; et al. Ultralytics/yolov5: V3.0. Zenodo. 2020. Available online: https://zenodo.org/records/3983579 (accessed on 31 August 2025).

42. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 7464–7475.

43. Zhang, Y.; Xu, W.; Yang, S.; Xu, Y.; Yu, X. Improved YOLOX Detection Algorithm for Contraband in X-Ray Images. Appl. Opt. 2022, 61, 6297–6310.

44. Wang, Z.; Hua, Z.; Wen, Y.; Zhang, S.; Xu, X.; Song, H. E-YOLO: Recognition of Estrus Cow Based on Improved YOLOv8n Model. Expert Syst. Appl. 2024, 238, 122212.

45. Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725.

46. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2980–2988.

47. Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Wang, Y.; Han, K. Gold-YOLO: Efficient Object Detector via Gather-and-Distribute Mechanism. Adv. Neural Inf. Process. Syst. 2024, 36, 51094–51112.

48. Li, T.; Zhu, C.; Wang, Y.; Li, J.; Cao, H.; Yuan, P.; Gao, Z.; Wang, S. LMFC-DETR: A Lightweight Model for Real-Time Detection of Suspended Foreign Objects on Power Lines. IEEE Trans. Instrum. Meas. 2025, 74, 2539319.

49. Xiao, C.; Xiao, C.; An, W.; Zhang, Y.; Su, Z.; Li, M.; Sheng, W.; Pietikäinen, M.; Liu, L. Highly Efficient and Unsupervised Framework for Moving Object Detection in Satellite Videos. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 11532–11539.

50. Kavukcuoglu, K.; Ranzato, M.A.; LeCun, Y. Fast Inference in Sparse Coding Algorithms with Applications to Object Recognition. arXiv 2010, arXiv:1010.3467.

51. Yang, C.; Huang, Z.; Wang, N. QueryDet: Cascaded Sparse Query for Accelerating High-Resolution Small Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13668–13677.

52. Wu, S.; Xiao, C.; Wang, Y.; Yang, J.; An, W. Sparsity-Aware Global Channel Pruning for Infrared Small-target Detection Networks. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5615011.

| Component | New Configuration (SDI) |
|---|---|
| Operating system | Windows 11 |
| GPU | NVIDIA GeForce RTX 3080 (12 GB) |
| GPU accelerator | CUDA v11.6 |
| Programming language | Python v3.8 |
| Framework | PyTorch v2.0 |
| Development environment | Anaconda3 (v2023.09-0, 64-bit), PyCharm Community Edition v2023.3.2 |
| Object detection algorithm | YOLOv11 |
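When reproducing the experiments, it helps to record the same fields as the setup table on the target machine. The following is a small, hypothetical helper (not from the paper) that uses only the standard library and reports PyTorch, CUDA, and GPU details only if PyTorch happens to be installed; `torch.version.cuda` is `None` on CPU-only builds.

```python
import platform
import sys


def environment_report() -> dict:
    """Collect the versions that the experimental-setup table pins down."""
    report = {
        "os": f"{platform.system()} {platform.release()}",
        "python": platform.python_version(),
    }
    try:
        import torch  # optional: only present if PyTorch is installed
        report["torch"] = torch.__version__
        report["cuda"] = torch.version.cuda  # None on CPU-only builds
        report["gpu"] = torch.cuda.get_device_name(0) if torch.cuda.is_available() else None
    except ImportError:
        report["torch"] = None
    return report


if __name__ == "__main__":
    rep = environment_report()
    for key, value in rep.items():
        print(f"{key:>6}: {value}")
    if sys.version_info < (3, 8):
        print("warning: the setup table lists Python 3.8")
```

Version mismatches (for example, a different CUDA or PyTorch release) can shift inference times noticeably, so logging them alongside results makes timing comparisons easier to interpret.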

| Class | Nest | Kite | Balloon | Fire | Person | Monkey |
|---|---|---|---|---|---|---|
| Proportion | 23.2% | 13.8% | 15.2% | 15.4% | 16.3% | 16.1% |

| Class | Model | Precision (%) | Recall (%) | mAP@0.50 | mAP@0.50:0.95 | Inference (ms) | GFLOPS |
|---|---|---|---|---|---|---|---|
| Nest | SDI | 93.3 | 93.0 | 95.9 | 76.7 | 120.33 | 11.5 |
|  | BiFPN | 93.6 | 92.2 | 94.3 | 71.6 | 106.28 | 10.8 |
|  | GoldYOLO | 93.0 | 93.4 | 94.8 | 74.1 | 136.08 | 12.5 |
|  | iEMA | 91.1 | 92.3 | 95.5 | 71.1 | 122.71 | 11.8 |
|  | GAM | 94.4 | 90.9 | 93.1 | 76.4 | 129.12 | 12.0 |
| Kite | SDI | 95.0 | 94.1 | 96.3 | 77.7 | 107.56 | 11.5 |
|  | BiFPN | 91.1 | 90.8 | 93.7 | 75.7 | 101.10 | 10.8 |
|  | GoldYOLO | 92.6 | 93.8 | 95.5 | 73.4 | 126.62 | 12.5 |
|  | iEMA | 93.2 | 87.0 | 95.3 | 75.5 | 110.98 | 11.8 |
|  | GAM | 93.5 | 91.4 | 94.1 | 76.2 | 112.25 | 12.0 |
| Balloon | SDI | 96.2 | 91.6 | 95.7 | 77.3 | 147.38 | 11.5 |
|  | BiFPN | 93.1 | 92.7 | 95.4 | 74.3 | 136.80 | 10.8 |
|  | GoldYOLO | 95.3 | 91.2 | 94.5 | 75.3 | 154.16 | 12.5 |
|  | iEMA | 93.1 | 88.7 | 94.2 | 74.1 | 152.91 | 11.8 |
|  | GAM | 94.5 | 89.9 | 92.1 | 76.3 | 139.81 | 12.0 |
| Fire | SDI | 93.8 | 93.8 | 95.8 | 70.1 | 133.13 | 11.5 |
|  | BiFPN | 91.0 | 91.5 | 95.9 | 70.8 | 135.93 | 10.8 |
|  | GoldYOLO | 90.7 | 93.3 | 94.5 | 67.8 | 143.16 | 12.5 |
|  | iEMA | 89.7 | 90.1 | 94.8 | 64.3 | 147.36 | 11.8 |
|  | GAM | 92.1 | 90.7 | 93.7 | 69.3 | 145.94 | 12.0 |
| Person | SDI | 89.7 | 90.9 | 94.2 | 74.2 | 65.78 | 11.5 |
|  | BiFPN | 87.4 | 90.8 | 94.0 | 70.1 | 57.6 | 10.8 |
|  | GoldYOLO | 86.3 | 91.3 | 93.4 | 73.0 | 66.29 | 12.5 |
|  | iEMA | 89.1 | 87.1 | 92.4 | 69.2 | 52.80 | 11.8 |
|  | GAM | 88.9 | 87.2 | 91.7 | 68.5 | 70.83 | 12.0 |
| Monkey | SDI | 94.4 | 88.9 | 93.3 | 71.4 | 68.08 | 11.5 |
|  | BiFPN | 90.3 | 88.6 | 93.6 | 69.7 | 58.81 | 10.8 |
|  | GoldYOLO | 94.2 | 87.1 | 92.5 | 72.1 | 70.31 | 12.5 |
|  | iEMA | 91.6 | 88.1 | 91.9 | 67.2 | 67.2 | 11.8 |
|  | GAM | 92.5 | 89.9 | 92.1 | 68.92 | 69.73 | 12.0 |
| Average | SDI | 93.7 | 92.1 | 95.2 | 74.6 | 107.04 | 11.5 |
|  | BiFPN | 91.1 | 91.1 | 94.5 | 72.0 | 99.42 | 10.8 |
|  | GoldYOLO | 92.0 | 91.7 | 94.2 | 72.6 | 116.10 | 12.5 |
|  | iEMA | 91.3 | 88.9 | 94.0 | 70.2 | 113.98 | 11.8 |
|  | GAM | 92.4 | 89.9 | 92.8 | 70.6 | 119.54 | 12.0 |
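The Average rows appear to be unweighted (macro) means over the six classes, so each class counts equally even though, for example, Nest accounts for 23.2% of the data. The sketch below checks this for the SDI rows, using values copied by hand from the table; the helper name is illustrative.

```python
# Per-class SDI rows from the table:
# (precision, recall, mAP@0.50, mAP@0.50:0.95, inference ms)
SDI_ROWS = {
    "Nest": (93.3, 93.0, 95.9, 76.7, 120.33),
    "Kite": (95.0, 94.1, 96.3, 77.7, 107.56),
    "Balloon": (96.2, 91.6, 95.7, 77.3, 147.38),
    "Fire": (93.8, 93.8, 95.8, 70.1, 133.13),
    "Person": (89.7, 90.9, 94.2, 74.2, 65.78),
    "Monkey": (94.4, 88.9, 93.3, 71.4, 68.08),
}


def macro_average(rows: dict) -> list:
    """Unweighted mean of each column across classes."""
    n = len(rows)
    return [sum(column) / n for column in zip(*rows.values())]


avg = macro_average(SDI_ROWS)
print([round(v, 2) for v in avg])
```

Each resulting value matches the reported SDI Average row (93.7, 92.1, 95.2, 74.6, 107.04) to within the table's rounding, which is consistent with macro-averaging.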

| Model | Precision (%) | Recall (%) | mAP@0.50 | mAP@0.50:0.95 | Inference (ms) | GFLOPS |
|---|---|---|---|---|---|---|
| YOLOv11 | 88.9 | 89.2 | 89.2 | 56.1 | 87.15 | 8.2 |
| 1-YOLOv11_SDI | 92.1 | 89.8 | 91.0 | 65.1 | 107.04 | 11.5 |
| 2-YOLOv11_SDI | 91.3 | 87.6 | 89.5 | 63.4 | 104.44 | 11.2 |
| 3-YOLOv11_SDI | 89.9 | 84.7 | 83.4 | 54.3 | 97.56 | 10.5 |
| 4-YOLOv11_SDI | 90.6 | 89.8 | 89.7 | 59.6 | 102.03 | 10.9 |
| 5-YOLOv11_SDI | 87.1 | 84.6 | 85.9 | 52.1 | 88.83 | 9.1 |

| Model | Precision (%) | Recall (%) | mAP@0.50 | mAP@0.50:0.95 | Inference (ms) | GFLOPS |
|---|---|---|---|---|---|---|
| YOLOv11 | 90.7 | 91.0 | 92.2 | 70.1 | 87.15 | 8.2 |
| 1-YOLOv11_SDI | 94.1 | 94.4 | 95.2 | 74.4 | 107.04 | 11.5 |
| 2-YOLOv11_SDI | 93.2 | 92.3 | 95.5 | 72.3 | 104.44 | 11.2 |
| 3-YOLOv11_SDI | 93.1 | 89.4 | 93.9 | 72.4 | 97.56 | 10.5 |
| 4-YOLOv11_SDI | 94.3 | 92.8 | 94.9 | 72.7 | 102.03 | 10.9 |
| 5-YOLOv11_SDI | 92.7 | 91.2 | 94.7 | 71.6 | 88.83 | 9.1 |
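The tables report both mAP@0.50 and mAP@0.50:0.95. Following the COCO convention, the latter averages AP over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05, so it rewards tight localization: a box with IoU 0.72 counts as correct at only five of those ten thresholds. The sketch below illustrates this under simplifying assumptions: a single class, each detection already paired with its best-overlapping ground truth (no duplicate matches), and all-point interpolation. COCO's evaluator uses 101-point interpolation and greedy per-threshold matching, so its numbers can differ slightly; this is not the evaluation code used in the paper.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: List[Tuple[float, float]], n_gt: int, thr: float) -> float:
    """AP for one class at one IoU threshold, using all-point interpolation.

    dets holds (confidence, IoU with the matched ground truth) per detection;
    for simplicity, each ground truth is assumed to be matched at most once.
    """
    tp = fp = 0
    points = []  # (recall, precision) after each detection, highest confidence first
    for _, overlap in sorted(dets, key=lambda d: d[0], reverse=True):
        if overlap >= thr:
            tp += 1
        else:
            fp += 1
        points.append((tp / n_gt, tp / (tp + fp)))
    ap, prev_recall = 0.0, 0.0
    for k, (recall, _) in enumerate(points):
        # Interpolated precision: the best precision at any recall >= this one.
        best = max(p for _, p in points[k:])
        ap += (recall - prev_recall) * best
        prev_recall = recall
    return ap


# COCO-style thresholds 0.50, 0.55, ..., 0.95 (rounded to avoid float drift).
THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def ap_50_95(dets: List[Tuple[float, float]], n_gt: int) -> float:
    """Mean of AP over the ten IoU thresholds."""
    return sum(average_precision(dets, n_gt, t) for t in THRESHOLDS) / len(THRESHOLDS)


# Usage: two ground-truth objects, three detections.
dets = [(0.9, 0.92), (0.8, 0.72), (0.6, 0.30)]
print(average_precision(dets, 2, 0.50))  # 1.0
print(ap_50_95(dets, 2))                 # 0.7
```

In this toy case both objects are found at IoU 0.50, giving a perfect AP, but the looser box (IoU 0.72) stops counting from 0.75 upward, pulling the 0.50:0.95 average down to 0.7. This is why the mAP@0.50:0.95 columns sit well below the mAP@0.50 columns throughout.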

| Model | Category | Precision (%) | Recall (%) | mAP@0.50 | mAP@0.50:0.95 | Inference (ms) |
|---|---|---|---|---|---|---|
| YOLOv11 | Nest | 90.6 | 91.0 | 93.3 | 70.8 | 105.93 |
|  | Kite | 91.9 | 92.8 | 92.8 | 69.6 | 100.78 |
|  | Balloon | 90.2 | 89.2 | 91.3 | 76.0 | 94.56 |
|  | Fire | 89.2 | 90.9 | 89.8 | 65.4 | 106.78 |
|  | Person | 86.5 | 89.7 | 88.7 | 69.2 | 54.86 |
|  | Monkey | 90.7 | 87.4 | 90.4 | 65.1 | 59.96 |
| 1-YOLOv11_SDI | Nest | 93.3 | 93.0 | 95.9 | 76.7 | 120.33 |
|  | Kite | 95.0 | 94.1 | 96.3 | 77.7 | 107.56 |
|  | Balloon | 96.2 | 91.6 | 95.7 | 77.3 | 147.38 |
|  | Fire | 93.8 | 93.8 | 95.8 | 70.1 | 133.13 |
|  | Person | 89.7 | 90.9 | 94.2 | 74.2 | 65.78 |
|  | Monkey | 94.4 | 88.9 | 93.3 | 71.4 | 68.08 |
| 2-YOLOv11_SDI | Nest | 91.6 | 91.6 | 95.0 | 72.9 | 122.84 |
|  | Kite | 92.5 | 91.2 | 94.7 | 77.1 | 114.74 |
|  | Balloon | 94.6 | 89.5 | 94.8 | 76.2 | 139.91 |
|  | Fire | 93.8 | 90.7 | 95.5 | 68.3 | 133.05 |
|  | Person | 89.6 | 91.8 | 94.1 | 71.9 | 57.26 |
|  | Monkey | 91.9 | 87.6 | 92.3 | 69.8 | 58.86 |
| 3-YOLOv11_SDI | Nest | 91.0 | 91.3 | 94.8 | 70.3 | 117.34 |
|  | Kite | 90.7 | 90.0 | 94.5 | 75.1 | 100.69 |
|  | Balloon | 92.3 | 89.2 | 93.7 | 73.3 | 141.69 |
|  | Fire | 93.4 | 89.6 | 95.4 | 64.8 | 123.93 |
|  | Person | 88.0 | 91.3 | 93.9 | 69.7 | 47.38 |
|  | Monkey | 94.7 | 85.4 | 92.1 | 67.2 | 53.75 |
| 4-YOLOv11_SDI | Nest | 93.2 | 93.3 | 96.0 | 75.0 | 120.03 |
|  | Balloon | 93.9 | 89.7 | 94.3 | 75.9 | 140.01 |
|  | Fire | 96.1 | 92.4 | 95.2 | 69.4 | 128.333 |
|  | Person | 91.5 | 91.3 | 94.5 | 74.5 | 58.74 |
|  | Monkey | 93.7 | 89.3 | 93.1 | 71.1 | 60.22 |
| 5-YOLOv11_SDI | Nest | 90.6 | 93.6 | 94.3 | 71.6 | 88.16 |
|  | Kite | 90.2 | 92.8 | 95.1 | 75.5 | 95.42 |
|  | Balloon | 95.2 | 89.9 | 94.4 | 74.4 | 132.48 |
|  | Fire | 90.7 | 90.7 | 94.9 | 67.4 | 109.53 |
|  | Person | 87.1 | 89.2 | 92.5 | 69.3 | 49.98 |
|  | Monkey | 89.2 | 87.1 | 91.7 | 67.7 | 57.41 |

| Model | Precision (%) | Recall (%) | mAP@0.50 | mAP@0.50:0.95 | Inference (ms) | GFLOPS |
|---|---|---|---|---|---|---|
| YOLOv11 | 90.7 | 91.0 | 92.2 | 70.1 | 87.15 | 8.2 |
| Spatial attention | 92.9 | 92.6 | 93.5 | 72.8 | 100.56 | 10.3 |
| Channel attention | 92.6 | 91.8 | 93.1 | 71.3 | 102.18 | 10.8 |
| Channel–spatial order | 93.6 | 93.8 | 94.1 | 73.5 | 107.04 | 11.5 |
| Spatial–channel order (Ours) | 94.1 | 94.4 | 95.2 | 74.4 | 107.04 | 11.5 |

| Model | Parameters (M) | Precision (%) | Recall (%) | mAP@0.50 (%) | GFLOPS |
|---|---|---|---|---|---|
| YOLOv5 [41] | 7.0 | 91.4 | 90.9 | 91.8 | 16.5 |
| YOLOv7 [42] | 37.2 | 87.2 | 81.2 | 85.3 | 105.3 |
| YOLOX [43] | 5.02 | 92.1 | 91.0 | 91.2 | 12.8 |
| YOLOv8n [44] | 3.2 | 92.7 | 90.5 | 92.4 | 8.3 |
| YOLOv11 [45] | 7.5 | 90.7 | 91.0 | 92.2 | 15.2 |
| RetinaNet [46] | 37.7 | 85.8 | 85.6 | 87.1 | 98.7 |
| Gold_YOLO [47] | 5.6 | 87.5 | 82.1 | 87.5 | 13.5 |
| RT-DETR [48] | 41.96 | 87.9 | 86.3 | 88.2 | 112.4 |
| Ours | 3.74 | 94.1 | 94.4 | 95.2 | 11.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gao, D.; Yin, Y.; Zhang, H.; Li, C.; Wang, B. YOLOv11-Based UAV Foreign Object Detection for Power Transmission Lines. Electronics 2025, 14, 3577. https://doi.org/10.3390/electronics14183577

Gao D, Yin Y, Zhang H, Li C, Wang B. YOLOv11-Based UAV Foreign Object Detection for Power Transmission Lines. Electronics. 2025; 14(18):3577. https://doi.org/10.3390/electronics14183577

Chicago/Turabian Style: Gao, Depeng, Yihan Yin, Han Zhang, Changping Li, and Bingshu Wang. 2025. "YOLOv11-Based UAV Foreign Object Detection for Power Transmission Lines" Electronics 14, no. 18: 3577. https://doi.org/10.3390/electronics14183577

APA Style: Gao, D., Yin, Y., Zhang, H., Li, C., & Wang, B. (2025). YOLOv11-Based UAV Foreign Object Detection for Power Transmission Lines. Electronics, 14(18), 3577. https://doi.org/10.3390/electronics14183577