Federated Multi-Agent DRL for Task Offloading in Vehicular Edge Computing

Abstract

1. Introduction

- For vehicular edge computing (VEC) scenarios, we build communication, computation, and system cost models that account for task processing deadlines and energy constraints, and we formulate the task offloading problem as a Markov decision process (MDP). The formulation captures the dynamic workloads of RSUs and MEC servers and defines a reward function aimed at improving MEC system performance.

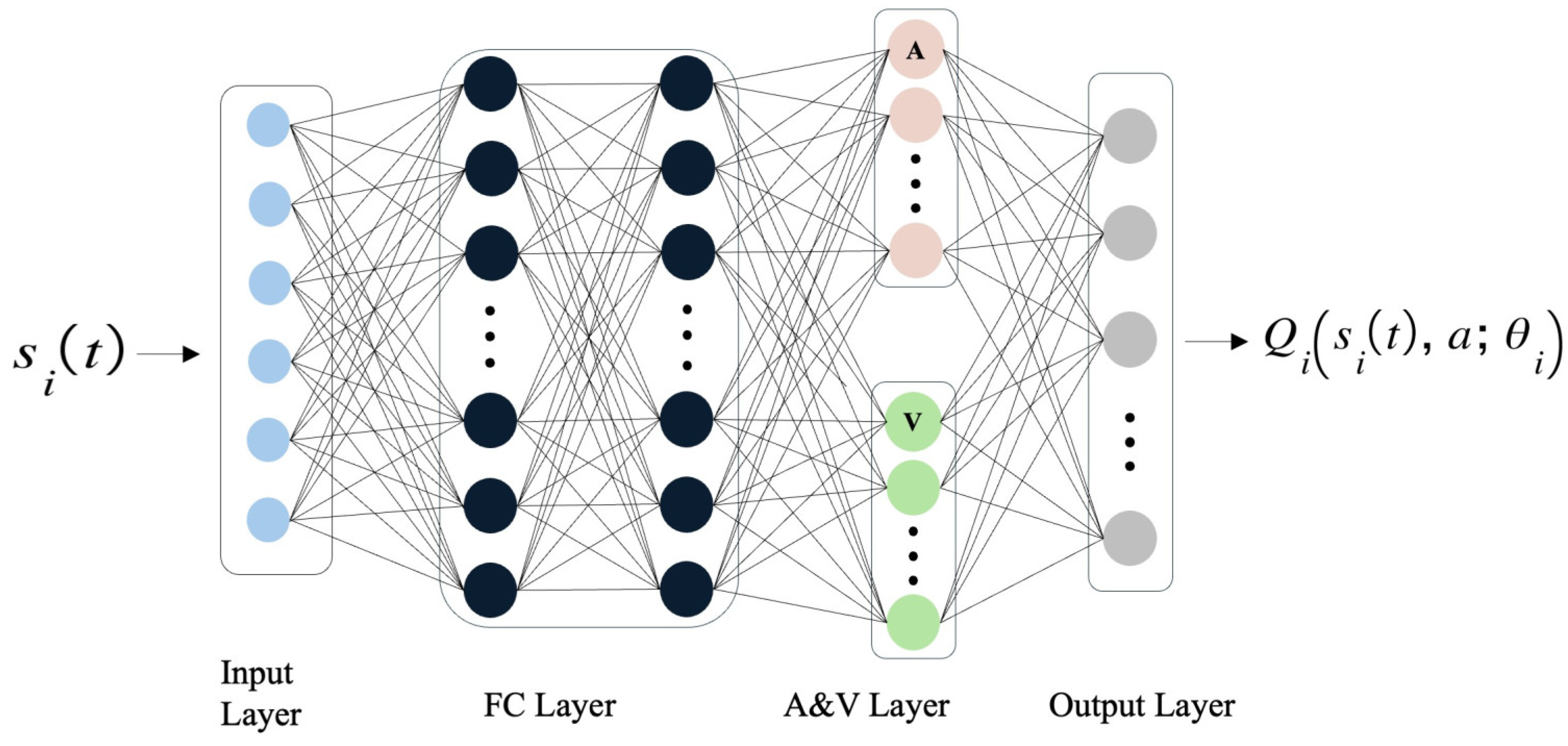

- To reduce the long-term cost of task processing, we design a task offloading method based on multi-agent deep reinforcement learning. The method uses a dueling network architecture that decomposes the Q-function into a state value function and an action advantage function, which improves learning efficiency in complex environments and helps each agent make better offloading decisions.

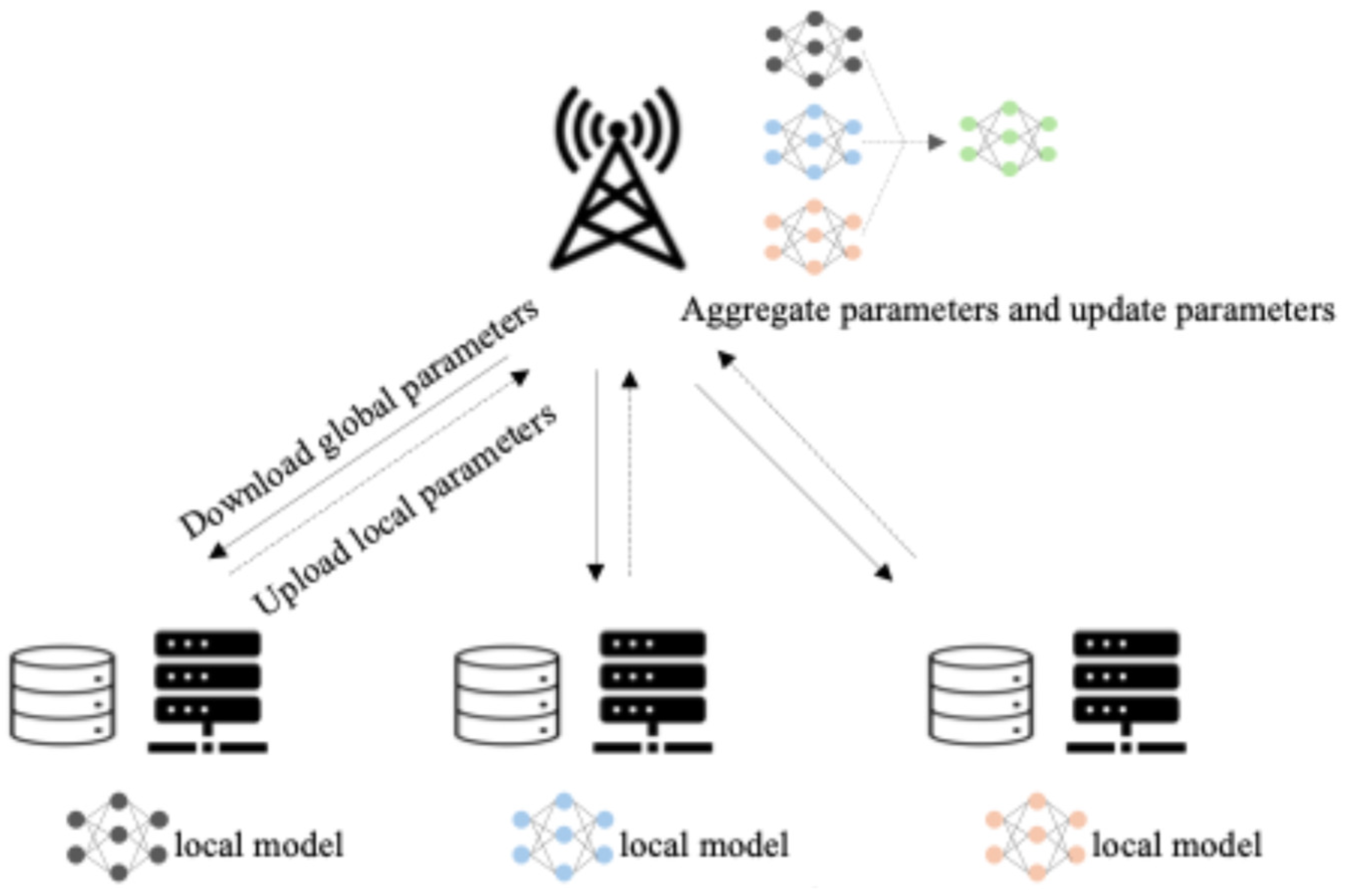

- The MAD3QN algorithm is trained in a distributed manner, which involves substantial data transfer. We therefore introduce federated learning to improve training efficiency. Agents exchange training parameters after model aggregation and updates, which mitigates the effects of non-IID data, protects data privacy and security, and improves the convergence rate and optimization performance of the model.

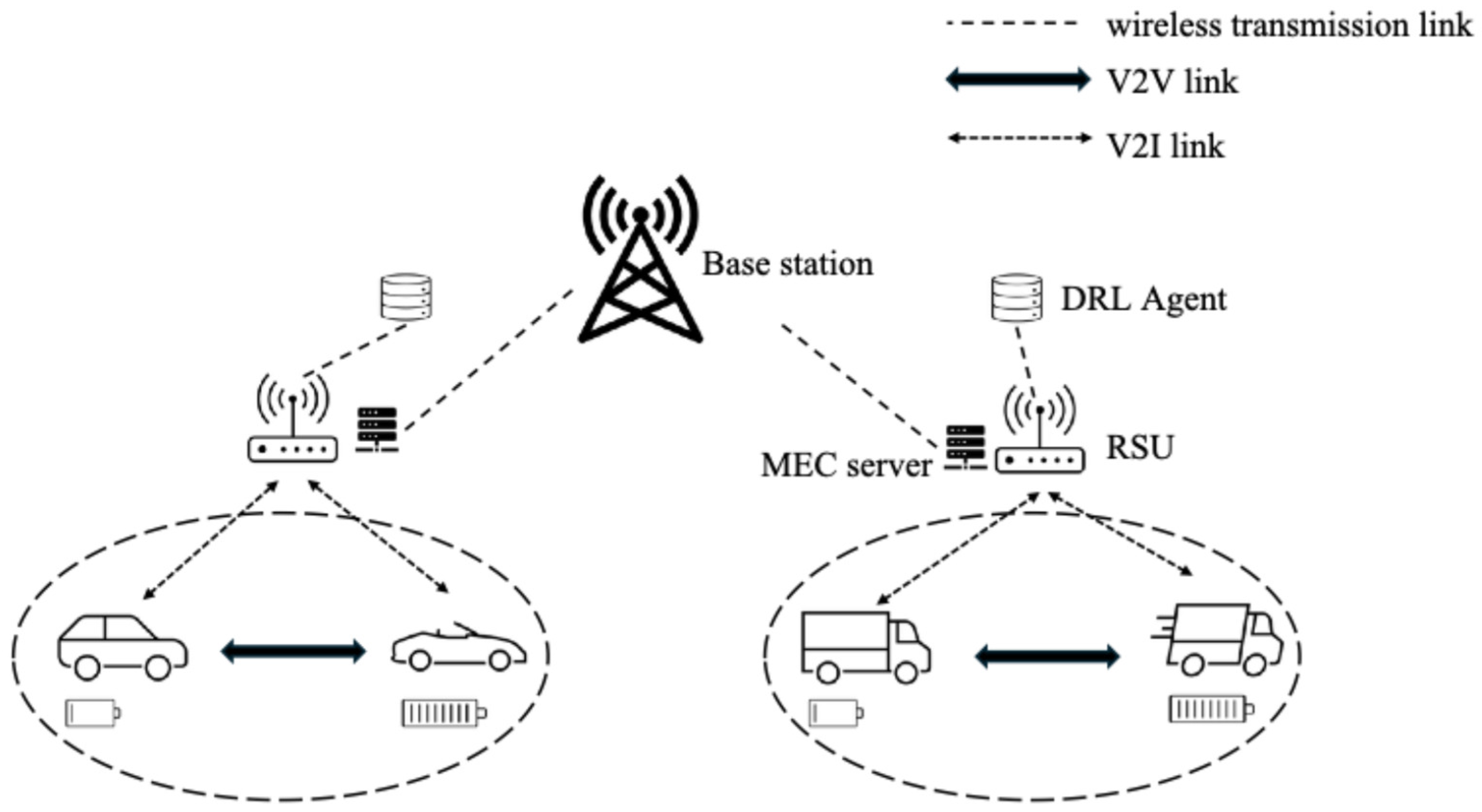
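The value/advantage decomposition named in the second contribution can be illustrated with a minimal sketch. It assumes the commonly used mean-advantage baseline for dueling networks; the function name `dueling_q_values` and the sample numbers are hypothetical and are not taken from the paper.

```python
import numpy as np


def dueling_q_values(state_value, advantages):
    """Combine V(s) and A(s, a) into Q(s, a) for one state.

    Assumes the mean-advantage baseline: Q = V + (A - mean(A)).
    """
    advantages = np.asarray(advantages, dtype=float)
    # Subtracting the mean advantage keeps V and A identifiable.
    centered = advantages - advantages.mean(axis=-1, keepdims=True)
    return state_value + centered


# Hypothetical numbers: one state value and three candidate offloading actions.
q = dueling_q_values(2.0, [1.0, 0.0, -1.0])
best_action = int(np.argmax(q))
```

With this baseline, the mean of the Q-values equals the state value, so the value stream carries how good the state is while the advantage stream only ranks the actions.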

2. System Model

2.1. Communication Model

2.2. Computation Model

2.2.1. Local Execution

2.2.2. Edge Execution

3. Problem Formulation and Algorithm Design

3.1. Problem Formulation

3.2. Markov Decision Process

3.3. MAD3QN Optimization with Enhanced Q-Values

| Algorithm 1: MAD3QN training process |
|---|
| 1: Input: observed environmental state; Output: network parameters |
| 2: Initialization: experience replay buffer D, evaluation network and target network, and their parameters |
| 3: for episode i = 1, 2, …, M do |
| 4: Initialize the state |
| 5: for each agent in a time slot do |
| 6: According to the current state, randomly select an action or choose the action with the highest Q-value |
| 7: Perform the action to obtain the next state and the reward |
| 8: Store the experience (state, action, reward, next state) in D |
| 9: for each agent do |
| 10: Obtain a set of experience samples from D |
| 11: for each experience do |
| 12: Get the experience |
| 13: Calculate according to Equation (29) |
| 14: end for |
| 15: Setting |
| 16: Update parameters by minimizing the loss function (Equation (31)) |
| 17: Update parameters for each agent |
| 18: end for |
| 19: if end of episode then |
| 20: Update target network parameters |
| 21: Perform model aggregation |
| 22: end if |
| 23: end for |
| 24: end for |
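The building blocks of Algorithm 1 (action selection, experience storage, and target computation) can be sketched as follows. This is a minimal illustration, not the authors' implementation: Equation (29) is not reproduced in this text, so the target below assumes the standard double-DQN form, and terminal-state handling is omitted. All class and function names are hypothetical.

```python
import random
from collections import deque

import numpy as np


class ReplayBuffer:
    """Fixed-capacity experience store; the oldest entries are dropped first."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def store(self, state, action, reward, next_state):
        self.buffer.append((state, action, reward, next_state))

    def sample(self, batch_size, rng):
        # Sample without replacement, capped at the current buffer size.
        return rng.sample(list(self.buffer), min(batch_size, len(self.buffer)))


def epsilon_greedy(q_values, epsilon, rng):
    """Explore with probability epsilon, otherwise take the greedy action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return int(np.argmax(q_values))


def double_dqn_target(reward, next_q_eval, next_q_target, gamma):
    """Assumed target: the evaluation network picks the next action,
    the target network scores it."""
    best_next = int(np.argmax(next_q_eval))
    return reward + gamma * next_q_target[best_next]


rng = random.Random(0)
buffer = ReplayBuffer(capacity=2000)
buffer.store(state=0, action=1, reward=-0.5, next_state=1)
batch = buffer.sample(batch_size=32, rng=rng)
action = epsilon_greedy(np.array([0.1, 0.7, 0.2]), epsilon=0.0, rng=rng)
target = double_dqn_target(-0.5, np.array([1.0, 3.0]), np.array([2.0, 0.5]), gamma=0.9)
```

Separating action selection (evaluation network) from action scoring (target network) is the usual way to reduce the overestimation bias of a single-network maximum.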

3.4. Complexity Analysis

3.5. FL-Based Optimization Method for MAD3QN Models

| Algorithm 2: FL-based MAD3QN model training |
|---|
| 1: Input: global model parameters; local model parameters |
| 2: Output: updated global model parameters |
| 3–4: Initialization: in a time slot, the base station initializes the MAD3QN model; each agent initializes its local MAD3QN model |
| 5: for round i = 1, 2, …, M do |
| 6: for each agent do |
| 7: Download global parameters from the base station |
| 8: Update local parameters according to Equation (32) |
| 9: Perform local training based on local data |
| 10: Update the trained parameters and upload them to the base station |
| 11: end for |
| 12: The base station aggregates the parameters uploaded by the agents according to Equation (33) |
| 13: Each agent receives the updated weighted-average parameters |
| 14: end for |
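One round of Algorithm 2 can be sketched as a weighted parameter average at the base station followed by a local blend at each agent. Equations (32) and (33) are not reproduced in this text, so both formulas below are assumptions: `aggregate` is a plain weighted average, and `blend_local` mixes local and global parameters with a hypothetical coefficient `mix`. The names and sample values are illustrative only.

```python
import numpy as np


def aggregate(local_params, weights):
    """Weighted average of per-agent parameter dicts (assumed aggregation rule)."""
    total = float(sum(weights))
    keys = local_params[0].keys()
    return {
        k: sum(w * p[k] for p, w in zip(local_params, weights)) / total
        for k in keys
    }


def blend_local(local, global_params, mix):
    """Move local parameters toward the global model (assumed local update rule)."""
    return {k: (1.0 - mix) * local[k] + mix * global_params[k] for k in local}


# Two hypothetical agents, each holding a single parameter vector "w".
agent_a = {"w": np.array([1.0, 2.0])}
agent_b = {"w": np.array([3.0, 4.0])}

# Weights could reflect, for example, how many samples each agent trained on.
global_params = aggregate([agent_a, agent_b], weights=[1, 3])
blended = blend_local(agent_a, global_params, mix=0.4)
```

Only parameter dictionaries cross the network in this sketch; the experience collected by each agent stays local.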

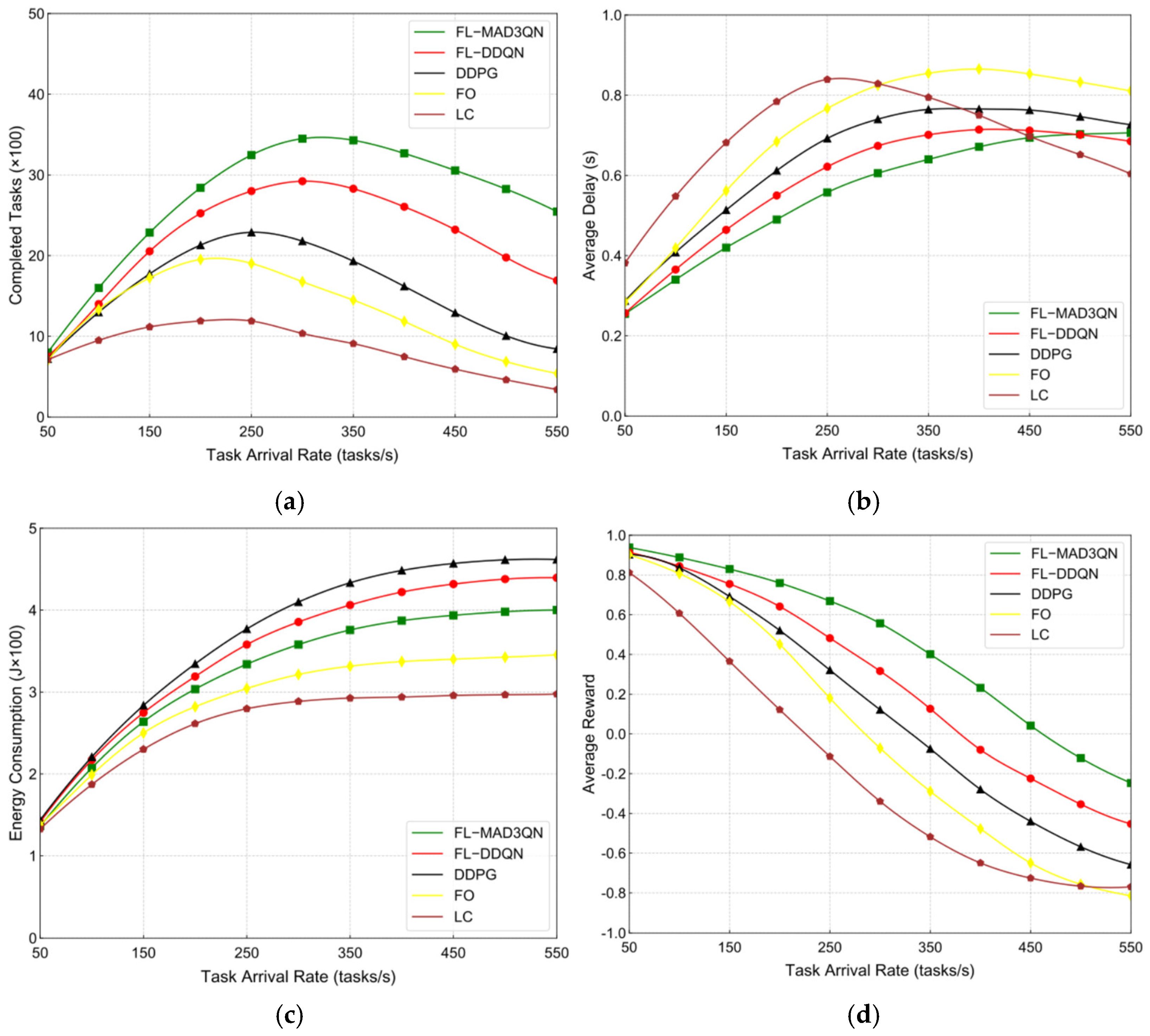

4. Experimental Results

4.1. Parameter Settings

- Local computation (LC): Vehicles execute all tasks with their own computing resources.

- Full offloading (FO): All vehicle tasks are transferred to the RSU for remote execution.

- FL-DQN: A DQN-based task offloading method trained with federated learning.

- DDPG: A task offloading algorithm based on deep deterministic policy gradient, which uses an evaluation network and a target network.

4.2. Convergence Analysis and Performance Comparison

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Parameter | Value |
|---|---|
| Learning rate | 0.001 |
| Discount factor | 0.9 |
| Initial epsilon | 1.0 |
| Epsilon decay rate | 0.99 |
| Batch size | 32 |
| Buffer size D | 2000 |
| Soft update weight | 0.995 |
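To show how the exploration and target-update settings in the table interact, here is a minimal sketch. It assumes that epsilon decays multiplicatively once per episode and that the soft update weight is the fraction of the target network retained at each update; the paper's text does not confirm either convention here, and the function names are hypothetical.

```python
def epsilon_at(episode, initial=1.0, decay=0.99):
    """Exploration rate after a number of episodes (assumed multiplicative decay)."""
    return initial * decay ** episode


def soft_update(target, evaluation, keep=0.995):
    """Blend evaluation parameters into the target parameters.

    Assumes `keep` is the share of the old target value that is retained.
    """
    return [keep * t + (1.0 - keep) * e for t, e in zip(target, evaluation)]


eps_start = epsilon_at(0)
eps_after_100 = epsilon_at(100)
new_target = soft_update([0.0, 1.0], [1.0, 1.0])
```

Under these assumptions, exploration falls to roughly a third of its initial value after 100 episodes, while the target network moves only 0.5% of the way toward the evaluation network per update.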

| Parameter | Value |
|---|---|
| Radio frequency | 2 GHz |
| Vehicle computing resources | [0.1–1] GHz |
| Number of vehicles | [10, 50] |
| Vehicle speed | 10 m/s |
| Noise power | −120 dBm |
| MEC server computing resources | 40 GHz |
| RSU coverage | [100–300] m² |
| Number of RSUs | 6, 8, 10 |
| Task size | [1.0, 1.1, …, 10.0] Mbits |
| Task deadline | 10 time slots (1 s) |
| Vehicle power status | [20, 50, 80] |
| RSU computation power | 10 W |
| RSU bandwidth | 100 MHz |
| Vehicle transmission power | 0.5 W |
| Vehicle standby power | 0.1 W |
| Federated learning rate | 0.4 |
| V2I/V2V transmit power | 0.2 W |
| V2I/V2V communication bandwidth | 10 MHz |
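As a rough sense of scale for these settings, the sketch below estimates an upload rate from the V2I/V2V bandwidth, transmit power, and noise power in the table. It assumes a Shannon-capacity rate model, which this text does not confirm as the paper's communication model, and the channel gain is a made-up illustrative value. The function names are hypothetical.

```python
import math


def dbm_to_watts(dbm):
    """Convert a power level from dBm to watts."""
    return 10 ** ((dbm - 30) / 10)


def shannon_rate(bandwidth_hz, tx_power_w, channel_gain, noise_w):
    """Achievable rate in bit/s under the assumed Shannon-capacity model."""
    snr = tx_power_w * channel_gain / noise_w
    return bandwidth_hz * math.log2(1 + snr)


noise_w = dbm_to_watts(-120)   # noise power from the table
channel_gain = 1e-10           # hypothetical value, not from the paper
rate = shannon_rate(10e6, 0.2, channel_gain, noise_w)

# Time to upload the smallest task in the table (1.0 Mbit) at this rate.
upload_time = 1.0e6 / rate
```

With the table's deadline of 10 time slots per second, one slot lasts 0.1 s, so under this assumed channel gain the smallest task uploads well within a single slot.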


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, H.; Li, Y.; Pang, Z.; Ma, Z. Federated Multi-Agent DRL for Task Offloading in Vehicular Edge Computing. Electronics 2025, 14, 3501. https://doi.org/10.3390/electronics14173501