A High-Payload Data Hiding Method Utilizing an Optimized Voting Strategy and Dynamic Mapping Table

Abstract

1. Introduction

2. Related Works in Neighborhood Pixel Technique

- First, the original image I is downscaled to a smaller image.

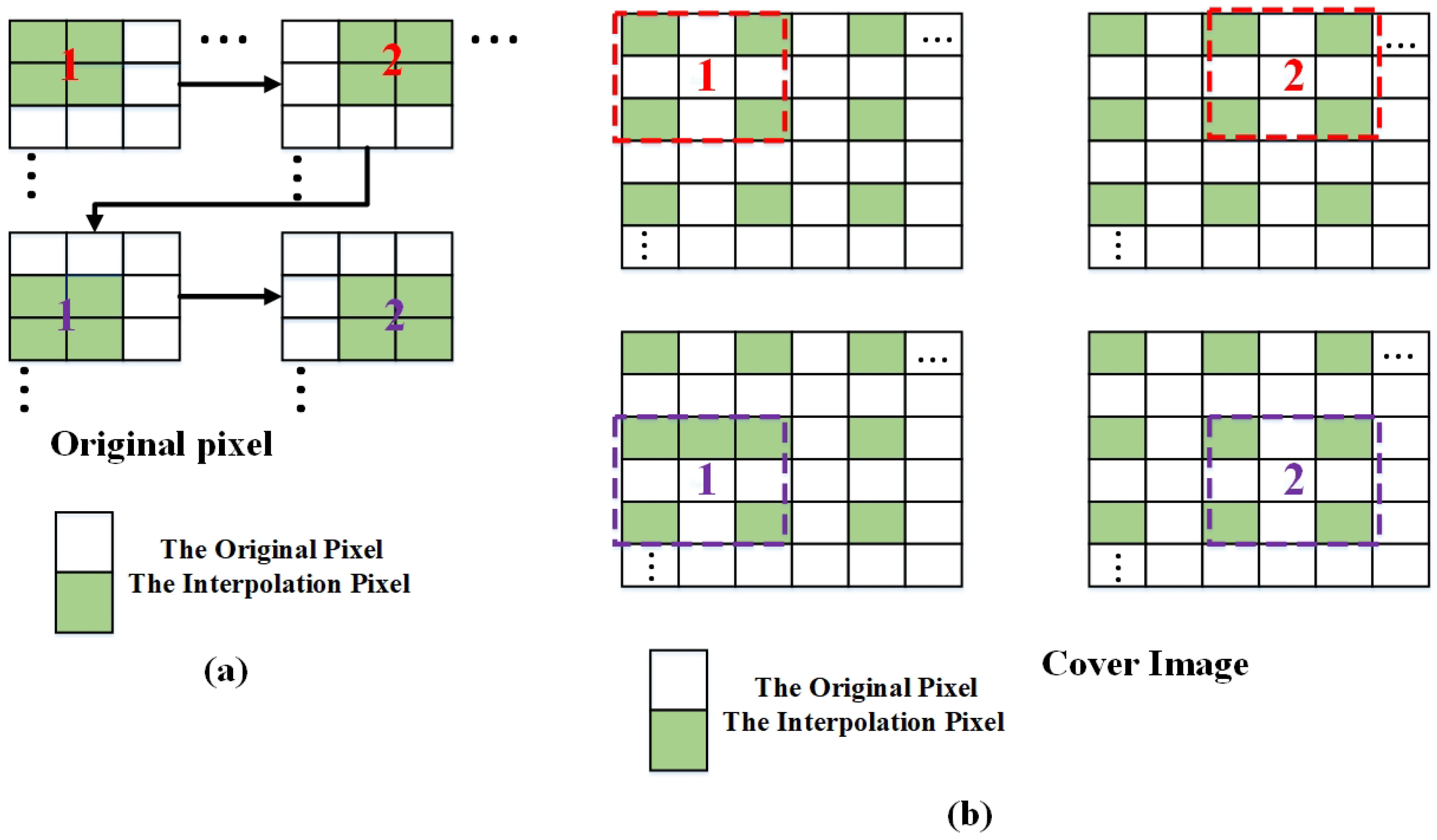

- Next, this reduced image is divided into overlapping blocks. These blocks are then processed sequentially in a raster scan order, which is similar to reading text from left to right and top to bottom, as shown in Figure 1a.

- Following the downscaling and block segmentation, each block is expanded into a larger overlapping block. In this enlarged block, the pixel positions that were omitted during downscaling are reconstructed by interpolation. Figure 1b illustrates this concept: the original seed pixels from the block are shown in green, while the white regions mark the positions where new, interpolated pixel values must be computed.
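The three steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the interpolation rule of any cited scheme: it assumes a downscaling factor of 2 (every second pixel is kept), 2×2 seed blocks that expand to 3×3 blocks, and plain floor-averaging of the adjacent seeds for the missing positions. The function names `downscale` and `expand` are hypothetical.

```python
def downscale(image):
    """Keep every second pixel in both directions (assumed factor of 2)."""
    return [row[::2] for row in image[::2]]


def expand(seeds):
    """Expand an h x w grid of seeds to (2h-1) x (2w-1).

    Seeds land on even (row, col) positions. Every other position is filled
    with the floor-average of the seeds that surround it (assumed rule).
    """
    h, w = len(seeds), len(seeds[0])
    out = [[0] * (2 * w - 1) for _ in range(2 * h - 1)]
    for r in range(2 * h - 1):
        for c in range(2 * w - 1):
            r0, c0 = r // 2, c // 2          # seed above/left (or the seed itself)
            r1, c1 = r0 + r % 2, c0 + c % 2  # seed below/right (same seed if even)
            corners = {(r0, c0), (r0, c1), (r1, c0), (r1, c1)}
            values = [seeds[i][j] for i, j in corners]
            out[r][c] = sum(values) // len(values)
    return out


# One 2x2 seed block becomes one 3x3 block; the corners are the seeds.
block = expand([[10, 20], [30, 40]])
# block == [[10, 15, 20], [20, 25, 30], [30, 35, 40]]
```

Because neighboring 2×2 blocks share a row or column of seeds, expanding the whole seed grid at once gives the same result as expanding the overlapping blocks one by one in raster-scan order.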

3. The Proposed Scheme

- Step 1: The original image, I, is segmented into a checkerboard pattern in which white pixels serve as unaltered reference seeds and blue pixels are designated for embedding secret information. The value of each blue pixel (P) is predicted solely from its four neighboring white pixels: the left, upper, right, and lower neighbors of P.

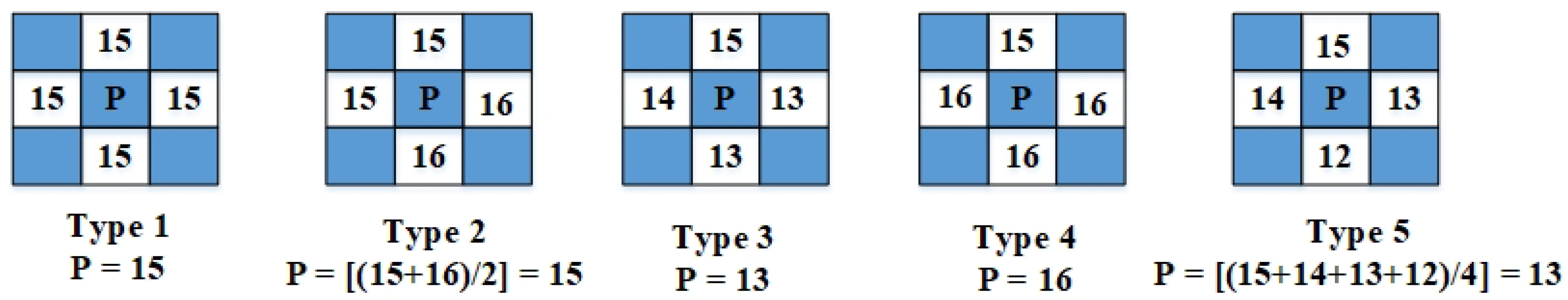

- Step 2: The value of a central pixel P is determined from the values of its four surrounding pixels. This prediction process has five scenarios, which are shown in Figure 2:

  - Scenario 1 (uniform neighbors): If all four neighboring pixels have the exact same value, then P is assigned that value.

  - Scenario 2 (two matching pairs): If the four neighbors form two pairs of identical values, then P is predicted as the average of one of these pairs.

  - Scenario 3 (one matching pair): If only one pair of neighboring pixels has the same value, then P is assigned the value that appears most frequently among the four neighbors.

  - Scenario 4 (three identical neighbors): If three of the four neighbors share the same value, then P takes on that majority value.

  - Scenario 5 (all different neighbors): If all four neighboring pixels have distinct values, then P is calculated as the average of these four values.

- Step 3: Pixels along the edges or at the corners of an image have fewer neighboring (seed) pixels to reference, so the prediction approach adapts accordingly:

  - (1) For edge pixels (where three seed pixels are available): if all three seed pixels have the same value, use Prediction Type 1; if any two of the seed pixels have the same value, use Prediction Type 4; if all three seed pixels have different values, use Prediction Type 5.

  - (2) For corner pixels (where two seed pixels are available): if the two seed pixels are identical, use Prediction Type 1; if the two seed pixels have different values, use Prediction Type 5.
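The voting rules in Steps 1 to 3 can be sketched in a few lines. This is an illustrative reading, not the authors' implementation, and it rests on three assumptions that the text does not settle: averages are rounded down, Scenario 2 averages the two distinct pair values, and the embeddable (blue) pixels are those where row + column is odd. The helper names `is_embeddable`, `seed_neighbors`, and `predict` are hypothetical.

```python
from collections import Counter


def is_embeddable(r, c):
    """ASSUMPTION: blue (embeddable) pixels sit where row + col is odd."""
    return (r + c) % 2 == 1


def seed_neighbors(image, r, c):
    """Return the left, upper, right, and lower neighbors that lie inside the image."""
    h, w = len(image), len(image[0])
    coords = [(r, c - 1), (r - 1, c), (r, c + 1), (r + 1, c)]
    return [image[i][j] for i, j in coords if 0 <= i < h and 0 <= j < w]


def predict(seeds):
    """Predict P from 2 (corner), 3 (edge), or 4 (interior) seed values."""
    counts = Counter(seeds)
    if len(counts) == len(seeds):
        # Scenario 5 / Prediction Type 5: all values differ, use the mean
        # (ASSUMPTION: floor division).
        return sum(seeds) // len(seeds)
    if len(seeds) == 4 and sorted(counts.values()) == [2, 2]:
        # Scenario 2: two matching pairs
        # (ASSUMPTION: average of the two distinct pair values).
        a, b = counts
        return (a + b) // 2
    # Scenarios 1, 3, 4 / Prediction Types 1 and 4: most frequent value wins.
    return counts.most_common(1)[0][0]


image = [[10, 0, 12],
         [0, 11, 0],
         [10, 0, 10]]
top_edge = predict(seed_neighbors(image, 0, 1))   # seeds 10, 12, 11 -> mean 11
left_edge = predict(seed_neighbors(image, 1, 0))  # seeds 10, 11, 10 -> majority 10
```

Handling edge and corner pixels through the same function works because their rules are the interior rules applied to a shorter list of seeds.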

3.1. Data Embedding Phase

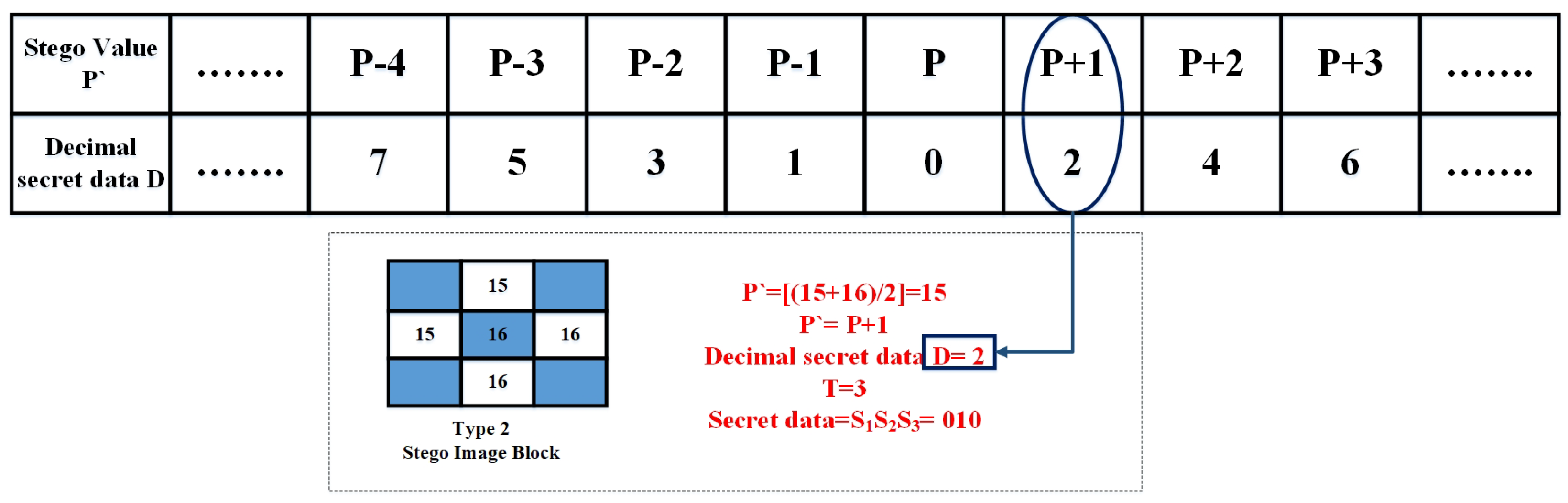

- Step 1: Begin by calculating the estimated value, P, of the pixel. For the Scenario 2 prediction, P is determined by taking the average of two adjacent pixels that have similar values. In this example, the neighboring pixel values are 15 and 16, so P is obtained as their average.

- Step 2: A secret message consisting of T bits is prepared to be embedded into the pixel.

- Step 3: The mapping table in Table 1 determines how much the initial prediction, P, must be adjusted. First, the group of T secret bits is translated into its decimal equivalent, D, a compact integer representation of the hidden data. For example, the bit sequence “010” becomes D = 2. Next, D is mapped to an offset using the predefined lookup table. Each value of D has a unique corresponding offset, so every possible secret value leads to a specific pixel modification. Finally, the predicted pixel, P, is modified by the chosen offset to create the stego-pixel, denoted as P’. For instance, in the example of Figure 3, the secret bits “010” have the decimal form D = 2; the offset that Table 1 assigns to this value is applied to P to obtain the stego-pixel P’, and the modified block is updated in the stego-image. Because each group of secret bits produces a unique alteration of the predicted pixel value, embedding is consistent and extraction is exact.
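A minimal sketch of the embedding step follows. The order of the decimal values D is taken from Table 1, but the offsets themselves are an assumption: the sketch supposes that the ten columns correspond to consecutive offsets from −5 to +4, which the table as reproduced here does not confirm. The names `D_ORDER`, `ASSUMED_OFFSETS`, and `embed` are hypothetical.

```python
# Decimal secret values D in the left-to-right order listed in Table 1.
D_ORDER = [9, 7, 5, 3, 1, 0, 2, 4, 6, 8]

# ASSUMPTION: the columns correspond to consecutive offsets -5 .. +4,
# so that D = 0 leaves the predicted pixel unchanged.
ASSUMED_OFFSETS = range(-5, 5)

D_TO_OFFSET = dict(zip(D_ORDER, ASSUMED_OFFSETS))


def embed(p, bits):
    """Return the stego-pixel P' for predicted value p and a string of secret bits."""
    d = int(bits, 2)              # e.g. "010" -> 2
    return p + D_TO_OFFSET[d]     # raises KeyError if d is not in the table


stego = embed(15, "010")  # D = 2 maps to offset +1 under the assumed table
```

With this ten-entry table only values of D up to 9 can be embedded, so the sketch covers T ≤ 3; larger T would need a larger table.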

3.2. Data Extraction Phase

- Step 1: The stego-image is split into two categories, as in the embedding process. Blue pixels hold the concealed secret information, while white pixels remain unchanged from the original image and serve as reference (seed) pixels. The pixel P’ belongs to the blue category, meaning it contains embedded secret data.

- Step 2: To retrieve the hidden secret data, we start by estimating P, which represents the pixel’s original value before the data were embedded. The method of prediction is selected based on the values of the four adjacent seed pixels.

- Step 3: We estimate the original value of the current pixel as P. Then, using the difference between P’ and P, we look up the corresponding decimal secret value D in the mapping table. Finally, D is converted into a T-bit binary number, which gives the extracted secret bits.

- Step 4: Once the secret data have been extracted, we reverse the modification and obtain the original pixel value, P.
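The extraction steps can be sketched as the inverse lookup. As before, the order of D follows Table 1, while the offsets (−5 to +4, left to right) are an assumption that the table as reproduced here does not confirm. The names `OFFSET_TO_D` and `extract` are hypothetical.

```python
# Decimal secret values D in the left-to-right order listed in Table 1.
D_ORDER = [9, 7, 5, 3, 1, 0, 2, 4, 6, 8]

# ASSUMPTION: the columns correspond to consecutive offsets -5 .. +4.
OFFSET_TO_D = dict(zip(range(-5, 5), D_ORDER))


def extract(p_stego, p, t):
    """Recover the T secret bits and the restored pixel value.

    p_stego is the stego-pixel P', p is the value predicted from the
    unchanged seed pixels, and t is the number of bits per pixel.
    """
    d = OFFSET_TO_D[p_stego - p]   # difference -> decimal secret value D
    bits = format(d, f"0{t}b")     # D -> T-bit binary string
    return bits, p                 # Step 4: the pixel is restored to P


bits, restored = extract(16, 15, 3)  # difference +1 -> D = 2 -> "010"
```

Extraction needs no side information beyond T and the table, because the seed pixels are untouched and the receiver can therefore recompute the same prediction P.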

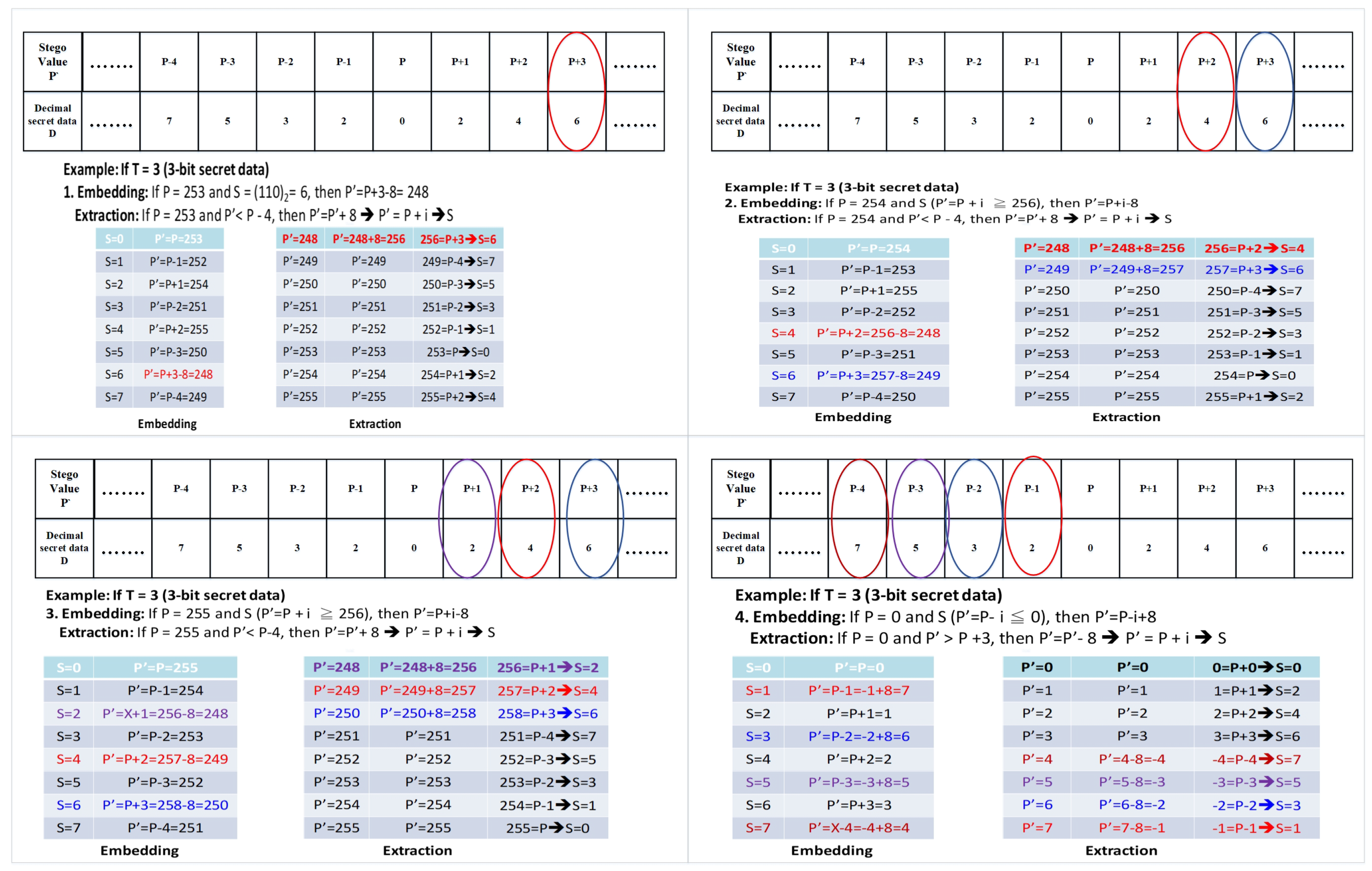

3.3. Overflow and Underflow

- Case 1: The embedding and extraction methods are the same as the method of Chi et al. [17].

- Case 2:

  - Embedding: If S (), then .

  - Extraction: If , then .

- Case 3:

  - Embedding: If S (), then .

  - Extraction: If , then .

4. Evaluation Metrics

4.1. Embedding Capacity

4.2. Peak Signal-to-Noise Ratio (PSNR)

4.3. Security of Proposed Scheme

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Fang, G.; Wang, F.; Zhao, C.; Chang, C.C.; Lyu, W.L. Reversible data hiding based on Chinese remainder theorem for polynomials and secret sharing. Int. J. Netw. Secur. 2025, 27, 4.

- Ren, F.; Zeng, M.M.; Wang, Z.F.; Shi, X. Reversible data hiding in encrypted binary images based on improved prediction methods. Int. J. Netw. Secur. 2025. Available online: http://ijns.jalaxy.com.tw/contents/ijns-v99-n6/ijns-2099-v99-n6-p28-0.pdf (accessed on 28 August 2025).

- Ragab, H.; Shaban, H.; Ahmed, K.; Ali, A.-E. Digital image steganography and reversible data hiding: Algorithms, applications and recommendations. J. Image Graph. 2025, 13, 1.

- Johnson, N.F.; Jajodia, S. Exploring steganography: Seeing the unseen. Computer 1998, 31, 26–34.

- Du, Y.; Yin, Z.; Zhang, X. High capacity lossless data hiding in JPEG bitstream based on general VLC mapping. IEEE Trans. Dependable Secur. Comput. 2020, 19, 1420–1433.

- Xian, Y.; Wang, X.; Teng, L. Double parameters fractal sorting matrix and its application in image encryption. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 4028–4037.

- Liang, X.; Tang, Z.; Wu, J.; Li, Z.; Zhang, X. Robust image hashing with isomap and saliency map for copy detection. IEEE Trans. Multimed. 2021, 25, 1085–1097.

- Yan, X.; Lu, Y.; Yang, C.-N.; Zhang, X.; Wang, S. A common method of share authentication in image secret sharing. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 2896–2908.

- Chen, Y.-Y.; Hsia, C.-H.; Kao, H.-Y.; Wang, Y.-A.; Hu, Y.-C. An image authentication method for secure internet-based communication in human-centric computing. J. Internet Technol. 2020, 21, 1893–1903.

- Liu, L.; Wang, A.; Chang, C.-C. Separable reversible data hiding in encrypted images with high capacity based on median-edge detector prediction. IEEE Access 2020, 8, 29639–29647.

- He, W.; Cai, Z.; Wang, Y. Flexible spatial location-based PVO predictor for high-fidelity reversible data hiding. Inf. Sci. 2020, 520, 431–444.

- Geetha, R.; Geetha, S. Efficient rhombus mean interpolation for reversible data hiding. In Proceedings of the 2018 3rd IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT), Bangalore, India, 18–19 May 2018; pp. 1007–1012.

- Huang, C.-T.; Lin, C.-Y.; Weng, C.-Y. Dynamic information-hiding method with high capacity based on image interpolating and bit flipping. Entropy 2023, 25, 744.

- Yavuz, E. Reversible data hiding in encrypted images using chaos theory and Chinese Remainder Theorem. Pattern Anal. Appl. 2025, 28, 120.

- Sanjalawe, Y.; Al-E’mari, S.; Fraihat, S.; Abualhaj, M.; Alzubi, E. A deep learning-driven multi-layered steganographic approach for enhanced data security. Sci. Rep. 2025, 15, 4761.

- Yao, Y.; Wang, J.; Chang, Q.; Ren, Y.; Meng, W. High invisibility image steganography with wavelet transform and generative adversarial network. Expert Syst. Appl. 2024, 249, 123540.

- Chi, H.; Chang, C.-C.; Lin, C.-C. Data hiding methods using voting strategy and mapping table. J. Internet Technol. 2024, 25, 365–377.

- Jung, K.-H.; Yoo, K.-Y. Data hiding method using image interpolation. Comput. Stand. Interfaces 2009, 31, 465–470.

- Lee, C.-F.; Huang, Y.-L. An efficient image interpolation increasing payload in reversible data hiding. Expert Syst. Appl. 2012, 39, 6712–6719.

| Stego value P’ | P | |||||||||

| Decimal secret data D | 9 | 7 | 5 | 3 | 1 | 0 | 2 | 4 | 6 | 8 |

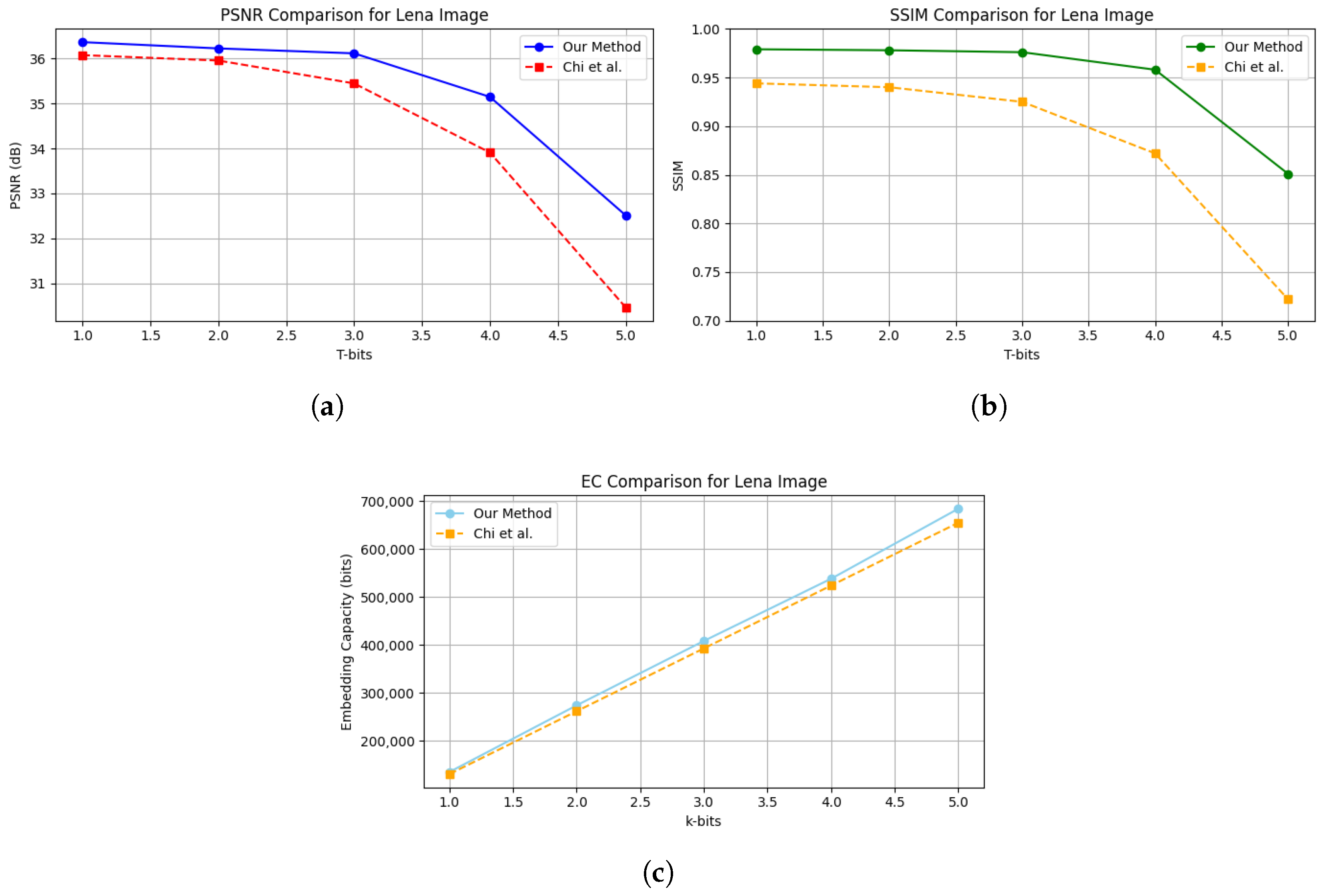

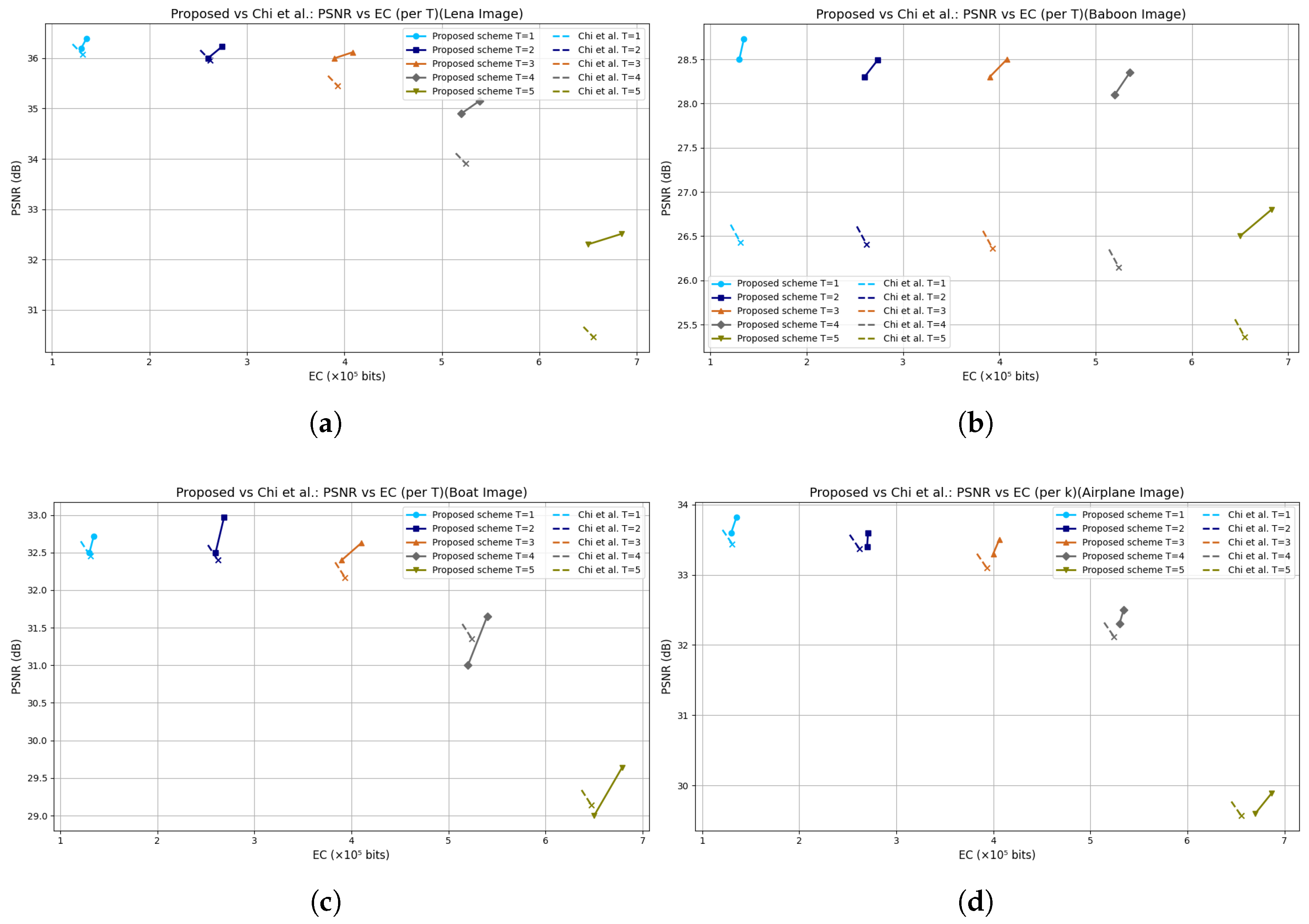

| Image | Metric | T = 2 | T = 3 | T = 4 | T = 5 | T = 2 | T = 3 | T = 4 | T = 5 |

|---|---|---|---|---|---|---|---|---|---|

| Chi et al. [17] | Proposed Method | ||||||||

| Lena | EC | 262,145 | 393,217 | 524,289 | 655,361 | 274,399 | 408,708 | 538,493 | 684,538 |

| PSNR | 35.96 | 35.45 | 33.91 | 30.46 | 36.23 | 36.12 | 35.15 | 32.51 | |

| SSIM | 0.9403 | 0.9252 | 0.8720 | 0.7223 | 0.9784 | 0.9765 | 0.9581 | 0.8512 | |

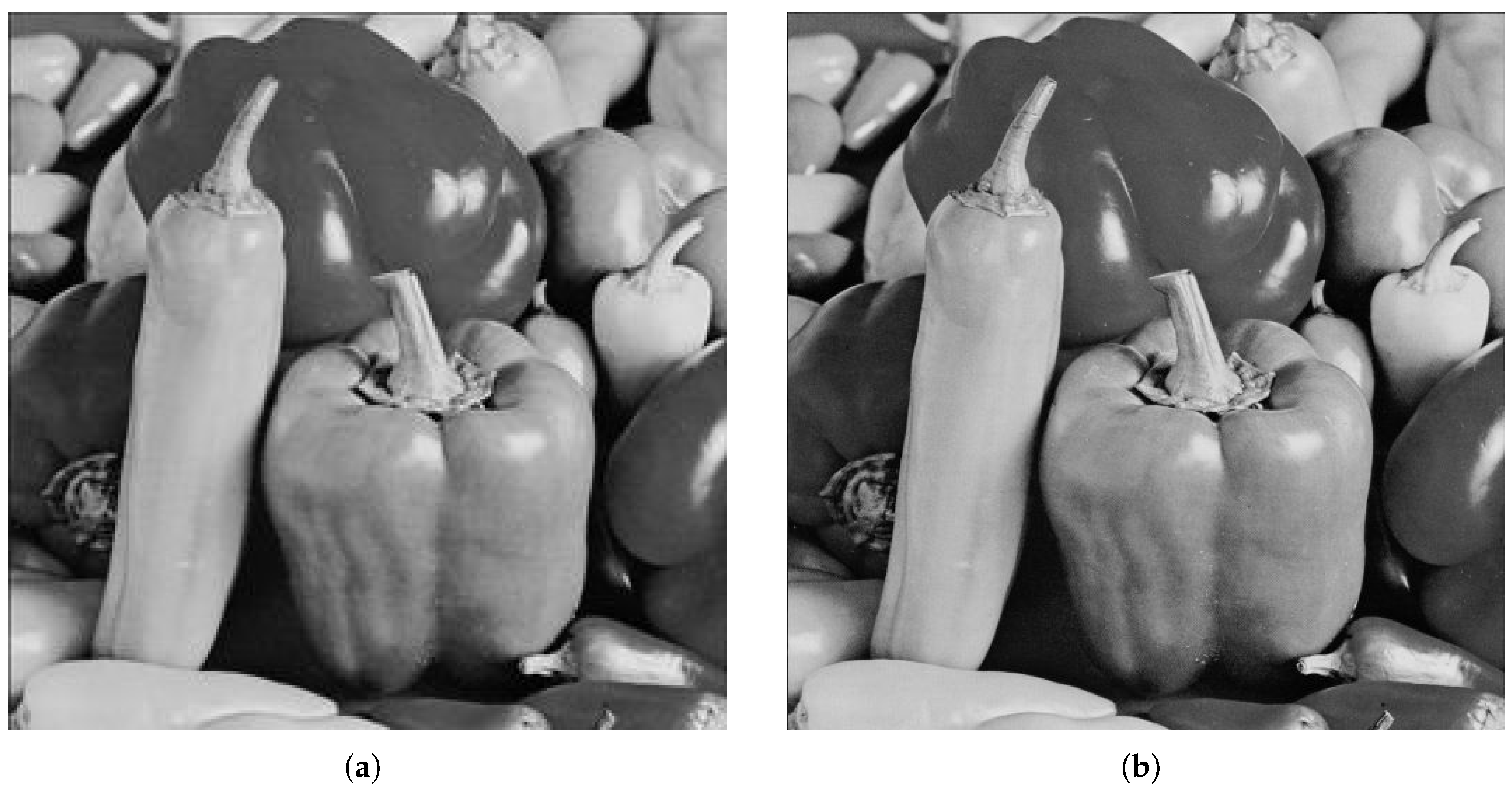

| Peppers | EC | 262,139 | 393,118 | 521,709 | 634,751 | 268,346 | 407,293 | 538,802 | 683,234 |

| PSNR | 33.96 | 33.64 | 32.56 | 29.90 | 32.69 | 32.61 | 31.28 | 28.01 | |

| SSIM | 0.9038 | 0.8903 | 0.8423 | 0.7098 | 0.9647 | 0.9618 | 0.8824 | 0.7656 | |

| Baboon | EC | 262,083 | 393,052 | 524,033 | 654,811 | 273,577 | 407,988 | 535,518 | 682,674 |

| PSNR | 26.41 | 26.37 | 26.15 | 25.36 | 28.49 | 28.50 | 28.35 | 26.80 | |

| SSIM | 0.8648 | 0.8600 | 0.8426 | 0.7831 | 0.9936 | 0.9928 | 0.9898 | 0.9779 | |

| Boat | EC | 262,145 | 393,217 | 524,177 | 647,081 | 268,687 | 410,362 | 539,779 | 678,808 |

| PSNR | 32.40 | 32.18 | 31.36 | 29.14 | 32.97 | 32.63 | 31.65 | 29.64 | |

| SSIM | 0.9025 | 0.8924 | 0.8540 | 0.7467 | 0.9586 | 0.8995 | 0.8609 | 0.7551 | |

| Barbara | EC | 262,145 | 393,217 | 524,289 | 655,331 | 270,583 | 408,793 | 538,716 | 683,462 |

| PSNR | 28.22 | 28.12 | 27.79 | 26.67 | 35.23 | 35.15 | 34.23 | 31.30 | |

| SSIM | 0.9033 | 0.8928 | 0.8553 | 0.7470 | 0.9835 | 0.9824 | 0.9676 | 0.8549 | |

| Couple | EC | 262,031 | 392,839 | 522,269 | 646,126 | 274,005 | 406,291 | 548,023 | 680,247 |

| PSNR | 32.53 | 32.29 | 31.47 | 29.25 | 28.49 | 28.50 | 28.35 | 26.80 | |

| SSIM | 0.9237 | 0.9140 | 0.8790 | 0.7747 | 0.9936 | 0.9928 | 0.9898 | 0.9779 | |

| Airplane | EC | 262,145 | 393,217 | 524,289 | 655,346 | 270974 | 406149 | 534210 | 686874 |

| PSNR | 33.38 | 33.10 | 32.12 | 29.58 | 33.60 | 33.50 | 32.50 | 29.89 | |

| SSIM | 0.9567 | 0.9402 | 0.8816 | 0.7249 | 0.964 | 0.947 | 0.851 | 0.732 | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fatima, K.; Wu, N.-I.; Chan, C.-S.; Hwang, M.-S. A High-Payload Data Hiding Method Utilizing an Optimized Voting Strategy and Dynamic Mapping Table. Electronics 2025, 14, 3498. https://doi.org/10.3390/electronics14173498
