1. Introduction

Healthcare providers frequently encounter conditions demanding instantaneous decisions in emergency and critical care settings [1]. Traditional information retrieval techniques, including exploring textbooks, searching internet databases, or consulting colleagues, are unsuitable for these settings [2]. Rapid access to specific medical information has a substantial impact on patient outcomes [3]. In this context, question-answering systems (QASs) serve as crucial tools, delivering prompt and precise answers to queries and enabling faster and more informed decision-making [3]. In addition, amidst the transition towards patient-centered care models, there is an increasing focus on patient education and engagement [4]. Individuals are highly interested in learning about their health conditions and available treatment alternatives. By using QASs integrated with patient portals or mobile health applications, patients can access personalized health information. QASs enhance patient engagement and compliance with treatment strategies and reduce the informational burden on healthcare practitioners by responding to typical patient queries [4].

The introduction of artificial intelligence (AI) in healthcare has provided an opportunity to enhance patient care and medical research [5]. However, the growth of structured and unstructured medical data poses challenges in developing an automated patient care system [6]. Unstructured data, such as medical texts, research papers, and clinical reports, offers context and information, whereas structured data, like the Resource Description Framework (RDF), standardizes data interchange [7,8,9]. The recent advancements in AI provide a platform for handling vast amounts of healthcare data, supporting medical professionals in treating and managing complex diseases [10].

Natural language processing (NLP) technologies, including generative pre-trained transformers (GPT), retrieval-augmented generation (RAG), and SPARQL, have been especially significant among the notable advancements [11]. The attention mechanisms enable GPT models to focus on the relevant part of the input sequence, capturing long-range dependencies and providing context-rich representations for each token [12,13]. However, modern NLP models encounter challenges in dealing with the complexities of medical terminology, resulting in inaccurately generated content [14]. There is a lack of context-aware models that recognize and evaluate the intricacies and unpredictability of clinical communication [14]. Additionally, adaptable medical NLP systems for regional- or specialty-specific word and phrase usage are currently in their early stages of development [14]. The performance of SPARQL relies on the RDF triples [15]. This dependency restricts it from providing valuable insights contained in unstructured data sources. In addition, a deep understanding of the schema is crucial in formulating SPARQL queries. Due to these limitations, SPARQL queries on their own are unsuitable in clinical decision-making environments. The RAG model’s performance depends on the retrieval phase: suboptimal information retrieval can lead to irrelevant responses. Similarly, inaccuracies and biases in the dataset can affect the performance of the GPT model [16]. These issues can lead to hallucinations, i.e., the generation of factually incorrect information. The impact of hallucinations can be detrimental in healthcare settings, leading to serious consequences. Mitigating hallucinations is therefore essential to developing reliable and accurate medical QASs.

The capabilities of QASs can be considerably improved through the integration of SPARQL, RAG, and GPT. GPT models can provide thoughtfully designed, contextually relevant responses to multiple queries due to their proficiency in deep learning (DL) and natural language processing. Medical RDF databases typically contain clinical recommendations, research results, and pharmacological information. By leveraging SPARQL’s potential in retrieving precise information and RAG’s capabilities of generating nuanced text from unstructured data, comprehensive, accurate, and contextually relevant responses can be generated. This synergy enables QASs to generate detailed explanations or essential context for user queries. In this study, we aim to harness the synergistic potentials of SPARQL and GPT, augmented by the RAG technique, to address the demands of medical data handling and query answering.

The study contributions are outlined as follows:

1. The novelty lies in integrating structured and unstructured data processing within a single framework. This strategy addresses a critical gap in leveraging RDF data for NLP. It enhances the reliability of generated texts by grounding them in verifiable data sources.

2. Enhancement of Data Retrieval and Processing. By integrating sophisticated NLP techniques, this study advances the efficiency and accuracy of medical data retrieval. The innovative aspect of this contribution is the dynamic retrieval capability, extracting data based on the evolving context of an individual’s health or specific clinical queries. This approach allows the proposed QAS to adapt to real-time settings, improving on static retrieval methods.

3. Generation of a Synthetic Dataset. In order to enable the safe and ethical training and validation of AI-driven QASs, we construct a high-quality dataset mimicking real-world medical environments. This dataset serves as a benchmarking tool for evaluating QASs without risking patient confidentiality.

4. Contribution to Medical Research and Patient Care. The proposed QAS enhances clinical decision-making and supports medical education. The integration of advanced AI techniques provides rapid, accurate medical information, aiding personalized medicine and evidence-based practice.

The study is structured as follows:

Section 2 covers existing studies on SPARQL, large language models (LLMs), and the applications of RAG in various domains. It outlines the strengths and limitations of current information retrieval systems. Section 3 details the experimental design, data sources, and technical frameworks. It describes the creation of the synthetic dataset used for training, validating, and testing the proposed model. The quantitative outcomes, including accuracy, precision, recall, F1-score, and the rate of hallucinations, are presented in Section 4. Finally, Section 5 summarizes the key findings of the study, emphasizing the potential of the proposed QAS in handling medical queries. It reflects on the broader impact of the research in improving clinical decision-making and patient care.

2. Literature Review

Advancements in QASs have been greatly facilitated by the rapid development of AI and NLP [16]. Accurate conversion of natural language queries to structured queries is essential in order to retrieve information from knowledge graphs (KGs) [17,18,19,20,21]. The integration of LLMs with RAG techniques has been the focus of current studies [22,23,24]. The primary objective of these studies is to improve the efficiency of SPARQL query generation and reduce hallucinations, i.e., the generation of non-factual information [25,26,27].

Ji et al. [28] enhance complex QASs through the integration of KGs into LLMs. They emphasize retrieval and reasoning capabilities, aiding precise query generation. Muqtadir et al. [29] employ ensemble learning integrating KG and vector storage in order to mitigate hallucinations in a mental health support system. Liang et al. [30] explore approaches aligning LLMs with domain-specific KGs. They enhance the natural language to graph query conversion process. Nassiri and Akhloufi [31] highlight the applications of transformers in text-based QASs. They outline the effectiveness and adaptability of transformers across multiple contexts. Badenes-Olmedo and Corcho [32] present a zero-shot QAS framework using heterogeneous knowledge bases to enhance the model’s adaptability. Pusch and Conrad [33] mitigate the occurrence of hallucinations in QASs by combining LLMs with KGs. Tian et al. [34] build a dual approach to refine response accuracy and relevance. They apply RAG utilizing external knowledge.

Wu et al. [35] develop a framework based on chain-of-thought processes for better reasoning. De Bellis et al. [36] explore the integration of ontologies with LLMs in order to implement effective RAG systems. Zhan et al. [37] propose an approach for retrieval-augmented reasoning on KGs to enhance QAS performance in the healthcare domain. Doan et al. [38] build a hybrid retrieval approach, focusing on scalability and efficiency. Xu et al. [39] introduce a unified framework leveraging LLMs with KGs to improve QAS performance. Kim (2025) [40] fine-tunes LLMs with RAG to generate responses to medical queries. Shi et al. (2025) [41] introduce retrieval algorithms to extract medical information from structured and unstructured databases. Alonso et al. (2024) [42] train LLMs on multilingual datasets to handle medical queries across multiple languages.

Table 1 highlights the characteristics of existing studies.

The findings of these studies underscore the importance of integrating LLMs with RAG approaches and structured data retrieval methods, including SPARQL, in order to improve the accuracy and reliability of QASs in specialized domains. The current QASs using SPARQL, RAG, and LLMs exhibit significant progress. However, future enhancement is required to address gaps in integrating structured and unstructured data sources. Although SPARQL is capable of effectively querying structured databases, its integration with deep learning models to improve the accuracy and relevance of results obtained from unstructured data is underexplored. In healthcare settings, existing models face challenges in retrieving relevant data and providing contextually accurate responses. As a result, challenges like information retrieval bottlenecks and retrieving irrelevant data arise, leading to user dissatisfaction. There has been a lack of effective strategies to identify and reduce hallucinations in generative models. There is a demand for extensive research on the interaction between hallucination tendencies, training data quality, and model architecture.

3. Materials and Methods

In this study, we enhance the functionality and efficiency of medical information systems through the integration of NLP and advanced AI techniques. The study focuses on the transformative potential of RAG and the sophisticated querying potential of SPARQL within RDF-based knowledge graphs. The transformation of natural language queries to SPARQL serves as the gateway to interact with structured knowledge bases. The application of state-of-the-art transformer architectures can improve the accuracy and reliability of medical information systems. This approach minimizes hallucination effects in order to generate factually correct information.

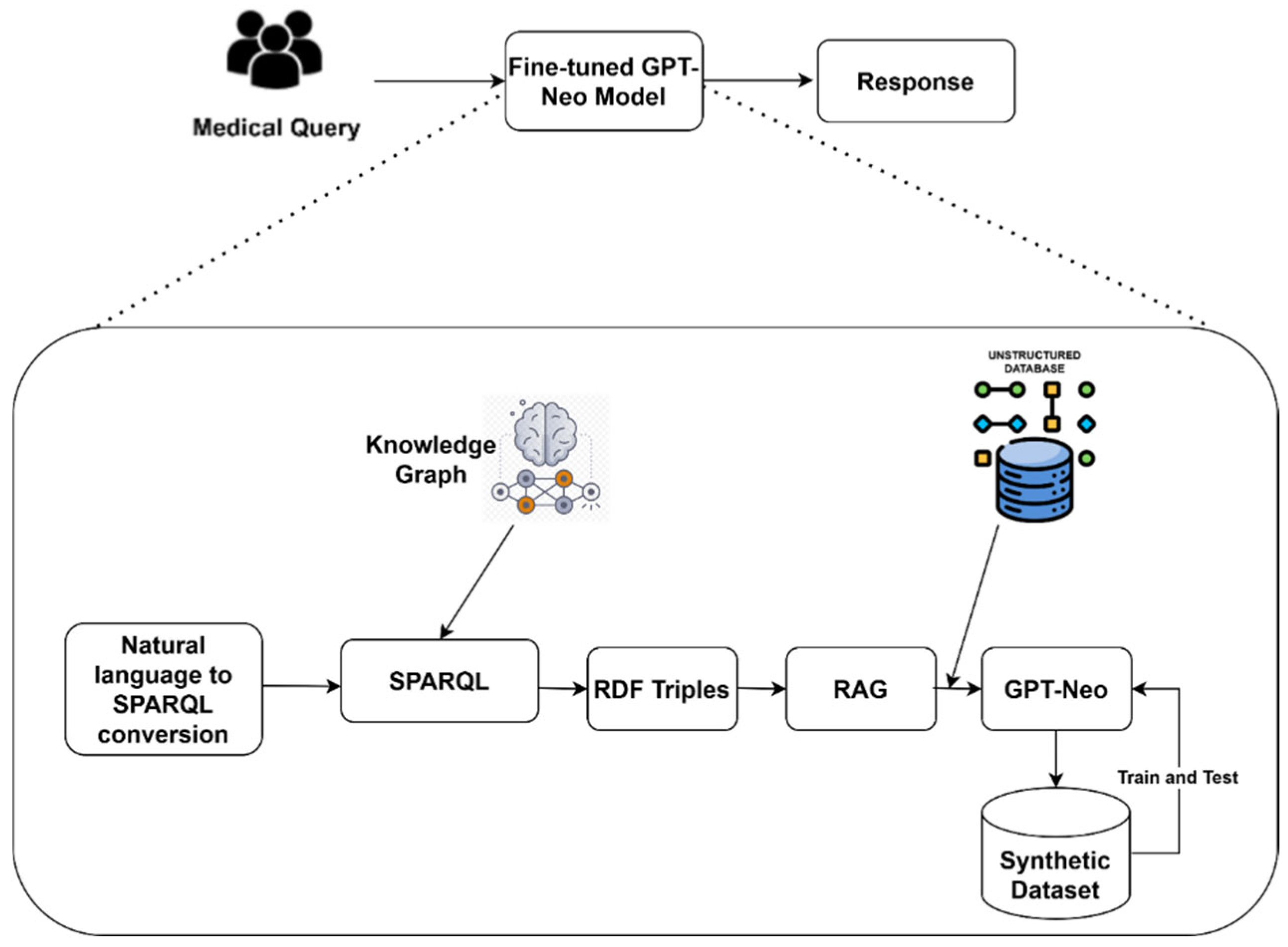

Figure 1 shows the synergy of RDF triples, RAG-based document retrieval, and synthetic data generation.

3.1. Data Acquisition

In this study, we employ medical ontologies, including SNOMED CT [43], LOINC [44], MeSH [45], RdfNorm [46], UMLS [47], and Gene Ontology [48], to retrieve RDF triples, providing the foundational data needed for accurate responses. These ontologies ensure that the information is comprehensive and standardized across diverse platforms and systems. To retrieve unstructured data, we utilize key repositories, including PubMed [49], ClinicalTrials.gov [50], RadioPedia [51], and Open-I [52]. In addition, to evaluate the model’s generalization capability, we use the MEDQA dataset [53] covering 766 question–answer pairs.

3.2. Natural Language to SPARQL Transformation

The conversion of natural language queries to SPARQL plays a crucial role in performing semantic searches on RDF data stores. It retrieves semantically relevant information, addressing the challenges in understanding the relationship between symptoms, diseases, and interventions. To ensure the compatibility between the query and the structure and semantics of data in the knowledge graph, we apply query optimization and validation strategies. SPARQL is a standardized query language, facilitating simplified data sharing and interpretability. It can extract specific data relevant to patient characteristics, contributing to personalized treatment plans. Equation (1) highlights the suggested transformation strategy.

$$Q_{SPARQL} = f_{NLP}(U, S) \qquad (1)$$

where $Q_{SPARQL}$ is the SPARQL query, and $f_{NLP}$ is the NLP function.

The function interprets and maps user queries ($U$) based on the defined schema ($S$). The SPARQL formulation leverages the underlying ontology of the knowledge graph, providing a solid foundation for the subsequent processes in the proposed medical information retrieval pipeline.
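As a minimal sketch of this mapping, the following Python snippet converts a question into a SPARQL string through a keyword lookup against a schema. The `ex:` prefix, the `SCHEMA` dictionary, the predicate names, and the `to_sparql` helper are hypothetical and serve only as an illustration; the study's NLP function is not specified at this level of detail.

```python
import re

# Hypothetical schema: maps an intent keyword to a predicate of the graph.
SCHEMA = {
    "symptom": "ex:hasSymptom",
    "treatment": "ex:hasTreatment",
}

# Hypothetical namespace used only for this illustration.
PREFIX = "PREFIX ex: <http://example.org/med#>"


def to_sparql(question: str, schema: dict) -> str:
    """Map a natural language question to a SPARQL SELECT query string."""
    text = question.lower()
    # Pick the first schema keyword mentioned in the question.
    predicate = next((p for k, p in schema.items() if k in text), None)
    if predicate is None:
        raise ValueError("no schema predicate matches the question")
    # Naive entity extraction: the word following 'of' or 'for'.
    match = re.search(r"\b(?:of|for)\s+([A-Za-z]+)", question)
    if match is None:
        raise ValueError("no entity found in the question")
    entity = match.group(1).capitalize()
    return f"{PREFIX}\nSELECT ?o WHERE {{ ex:{entity} {predicate} ?o . }}"


query = to_sparql("What are the symptoms of diabetes?", SCHEMA)
print(query)
```

A production system would replace the keyword lookup with entity linking against the ontology and would validate the generated query against the schema before execution.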

3.3. RDF Triple Retrieval

In the proposed methodology, the RDF triple retrieval serves as the bridge between structured knowledge representation and practical information application. It supports the accuracy and reliability of the extracted responses. Equation (2) reveals the process of executing the query to retrieve appropriate RDF triples from the knowledge graph.

$$T = f_{exec}(Q_{SPARQL}, KG) \qquad (2)$$

where $T$ is the set of RDF triples extracted from the knowledge graph ($KG$).

The function $f_{exec}$ uses the SPARQL query $Q_{SPARQL}$ to extract the RDF triples from the knowledge graph. Each extracted RDF triple comprises a subject–predicate–object construct, encapsulating structured information. For instance, the query “diabetic symptoms” may return a triple like (Diabetes, hasSymptom, FrequentUrination). The RDF triples enrich the context generation process. The query optimization and validation steps support the proposed approach in mapping SPARQL queries to the relevant triples, reducing the risk of irrelevant or inaccurate data.
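The subject–predicate–object lookup can be illustrated with a small in-memory triple store. This is a sketch under stated assumptions: the `KG` list and the `match` helper are hypothetical stand-ins for a real RDF store and SPARQL engine, and every triple other than (Diabetes, hasSymptom, FrequentUrination) is invented for illustration.

```python
from typing import Optional

Triple = tuple[str, str, str]

# Toy knowledge graph; only the first triple comes from the text above.
KG: list[Triple] = [
    ("Diabetes", "hasSymptom", "FrequentUrination"),
    ("Diabetes", "hasSymptom", "IncreasedThirst"),
    ("Hypertension", "hasSymptom", "Headache"),
]


def match(kg: list[Triple],
          s: Optional[str] = None,
          p: Optional[str] = None,
          o: Optional[str] = None) -> list[Triple]:
    """Return triples matching the pattern; None acts as a wildcard."""
    return [
        t for t in kg
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    ]


# Equivalent in spirit to: SELECT ?o WHERE { Diabetes hasSymptom ?o }
diabetes_symptoms = match(KG, s="Diabetes", p="hasSymptom")
print(diabetes_symptoms)
```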

3.4. RAG-Based Document Retrieval

To enrich the structured data points (RDF triples), we employ the RAG framework. By capitalizing on the semantic richness embedded within the RDF triples, RAG conducts targeted searches across document repositories, including research papers, clinical guidelines, and comprehensive medical articles. Equation (3) presents the mathematical expression of the RAG-based document retrieval.

$$D = f_{RAG}(T, C) \qquad (3)$$

where $D$ is the set of documents retrieved using the function ($f_{RAG}$) from the corpus ($C$), given the RDF triples ($T$).

The retrieval process is fine-tuned to the semantic nuances of the RDF data, guiding the retrieval mechanism towards documents containing relevant information. The function generates a semantic query set. To populate this set, we compose canonical labels and synonyms for the subject/object entities, relation-aware cues derived from the predicate, and salient qualifiers present in the graph. The semantic query set is transformed into dense vectors with Sentence-BERT. To minimize the computational costs, principal component analysis is used to reduce the dimensionality of the vectors. Cosine similarity is used to evaluate the similarity between the query and the extracted documents’ vectors. The extracted documents are re-ranked by combining similarity with structured features, including entity coverage, predicate alignment, qualifier match, and source trust. We de-duplicate near-duplicates, cap per-publisher dominance, and retain provenance for auditability. The final set of documents is passed to the generator for evidence-gated answering. During response generation, we employ the permutation importance technique to compute the importance value of each feature for a query.
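The re-ranking step can be sketched as a weighted combination of cosine similarity and the structured features. The weights `w_sim` and `w_feat`, the simple averaging of features, and the toy vectors are assumptions made for illustration; the study does not state how the two signals are combined.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def rerank(query_vec, docs, w_sim=0.7, w_feat=0.3):
    """Order document ids by a weighted sum of similarity and feature score."""
    scored = []
    for doc in docs:
        sim = cosine(query_vec, doc["vec"])
        # Hypothetical aggregation: plain mean of the structured features.
        feat = sum(doc["features"].values()) / len(doc["features"])
        scored.append((w_sim * sim + w_feat * feat, doc["id"]))
    return [doc_id for _, doc_id in sorted(scored, reverse=True)]


docs = [
    {"id": "A", "vec": [1.0, 0.0],
     "features": {"entity_coverage": 0.2, "source_trust": 0.2}},
    {"id": "B", "vec": [0.8, 0.6],
     "features": {"entity_coverage": 1.0, "source_trust": 1.0}},
    {"id": "C", "vec": [0.0, 1.0],
     "features": {"entity_coverage": 0.5, "source_trust": 0.5}},
]
ranking = rerank([1.0, 0.0], docs)
print(ranking)
```

In this toy case, document B overtakes the more similar document A because its structured features are stronger, which is the effect that re-ranking is meant to capture.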

3.5. GPT-Neo Outcome Generation

GPT-Neo [54] provides a robust, scalable, and adaptable solution, capable of handling complex queries and mitigating the risk of hallucinations. It is an open-source model, optimized for democratized access. Researchers and developers can tweak GPT-Neo’s architecture, training procedure, and data processing pipelines. GPT-Neo’s scalability enables developers to manage trade-offs between performance and computational costs. By leveraging a highly curated dataset, the occurrence of hallucinations can be decreased. These features motivate us to employ GPT-Neo to generate an outcome using RDF triples and unstructured data. GPT-Neo integrates and synthesizes RDF triples and unstructured data into an informative outcome. Equation (4) presents the process of output generation.

$$O = f_{GPT}(D; \theta) \qquad (4)$$

where $O$ is GPT-Neo’s output using the function ($f_{GPT}$) and $\theta$ is the set of GPT-Neo’s parameters.

The function transforms the retrieved documents ($D$) into an outcome ($O$). The generated outcomes ($O$) are based on the combined information from RDF triples and associated unstructured documents.

3.6. Synthetic Dataset Generation

The pre-trained GPT-Neo may face challenges in handling real-time medical data. It may generate misleading information due to the complexities of medical queries. To overcome these shortcomings, high-quality annotated synthetic data is required. Generating synthetic data exposes GPT-Neo to a diverse range of scenarios. The trained GPT-Neo can support evidence-based medicine due to its capability of synthesizing datasets containing the latest research and clinical findings. We generated queries using medical ontologies (SNOMED CT, MeSH, UMLS, and Gene Ontology) by sampling entities/relations and composing SPARQL to retrieve RDF triples. These triples seed RAG, which retrieves the top-K passages from PubMed, ClinicalTrials.gov, RadioPedia, and Open-i. GPT-3 generates question–answer pairs conditioned on the triples and retrieved evidence. We apply pre-processing techniques, including tokenization, normalization, and data encoding into an appropriate format. GPT-Neo uses the pre-processed dataset to generate detailed and contextually informed answers. These outcomes are paired with the original query to form the synthetic dataset, comprising paired question–answer entries. Equation (5) outlines the computational form of synthetic dataset generation.

$$SD = \{(U_i, O_i)\}_{i=1}^{N} \qquad (5)$$

where $SD$ is the synthetic dataset, $N$ is the total number of queries and outcomes, $U_i$ is the user query, and $O_i$ is the generated outcome.

We used scheduled scripts to automatically update the dataset without manual intervention, ensuring seamless integration of novel data. Using this approach, the dataset remains up-to-date with the latest medical advancements and discoveries. In addition, we enable the model to gather feedback from users through user correction inputs and analysis of follow-up questions, which indicate confusion or dissatisfaction with previous responses. The synthetic dataset is balanced, audited, and validated in order to enable reliable use of the dataset in the healthcare domain. To balance the dataset at generation time, we stratify along three dimensions: disease families (mapped to ICD/SNOMED chapters), question intent (diagnostic, treatment, medication, risk, prognosis, prevention, monitoring, complications, lifestyle, education), and difficulty tier. Each item’s difficulty depends on SPARQL graph depth, number of joins, and RAG’s document volume and heterogeneity. Using a stratified approach, two domain experts examined and annotated the synthetic dataset for factual correctness, clinical appropriateness, clarity, and safety. Reviewers are blinded to the generator and the prompts in order to reduce the possibility of bias. In the event that no agreement can be achieved following a series of brief adjudication sessions, a third senior clinician makes the final decision. We measure consistency through inter-rater agreement and break down revision types: minor language modifications, evidence updates, clinical corrections, and discards. Additionally, we normalize entities to SNOMED/MeSH and drugs to generics in order to prevent brand or region effects. To evaluate the model’s generalization on an external dataset, we utilize the MEDQA dataset [53].
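Inter-rater agreement between two reviewers is often summarized with Cohen's kappa. The sketch below assumes kappa as the agreement statistic, which the text does not specify, and uses invented labels for six hypothetical items.

```python
from collections import Counter


def cohen_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty item set")
    n = len(rater_a)
    # Observed agreement: share of items with identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement under independent labelling.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label
    return (observed - expected) / (1.0 - expected)


# Invented annotations for six synthetic question-answer items.
expert_1 = ["accept", "accept", "revise", "revise", "accept", "revise"]
expert_2 = ["accept", "revise", "revise", "revise", "accept", "accept"]
kappa = cohen_kappa(expert_1, expert_2)
print(round(kappa, 3))
```

Here the raters agree on four of six items (observed agreement 0.667) against a chance level of 0.5, giving a kappa of one third.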

3.7. Fine-Tuning GPT-Neo

We train GPT-Neo using the synthetic dataset with Bayesian Optimization and Hyperband (BOHB) optimization. The model learns the nuances of medical terms and the structure of medical knowledge. BOHB determines the optimal learning rates, batch sizes, and other key parameters influencing the model’s performance. It helps GPT-Neo understand and integrate the context, minimizing the risk of hallucinations. During fine-tuning, the loss function is used to measure the discrepancy between the generated responses and the actual responses in the dataset. Let $x_i$ be the tokenized input for the $i$th instance in the synthetic dataset. This input includes the query and relevant context. Equation (6) represents the operational mechanism of GPT-Neo during the fine-tuning process.

$$z_i = f_{GPT}(x_i; \theta) \qquad (6)$$

where $\theta$ is the set of GPT-Neo’s parameters.

The $f_{GPT}$ function produces a sequence of logits ($z_i$) over the possible output tokens in the model’s vocabulary. Equation (7) computes the cross-entropy loss between the predicted probability distribution and the actual distribution of the subsequent tokens in the training set.

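$$\mathcal{L}(\theta) = -\sum_{i=1}^{N} \sum_{j} \log P\left(y_{i,j} \mid y_{i,<j}, x_i; \theta\right) \qquad (7)$$

where $\mathcal{L}$ is the loss function, $P$ is the probability distribution, $y_{i,j}$ is the output token (response), $y_{i,<j}$ is the sequence of the actual tokens before position $j$, and $i$ is the input instance.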
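The token-level cross-entropy in Equation (7) can be illustrated for a single instance in plain Python. The sketch sums the negative log-probabilities of the target tokens; whether the loss is summed or averaged over tokens is an assumption here, and the four-token vocabulary is a toy example.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax over one position's logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logit_seq: list[list[float]], target_ids: list[int]) -> float:
    """Sum of -log P(target token) over all positions of one sequence."""
    return -sum(
        math.log(softmax(logits)[target])
        for logits, target in zip(logit_seq, target_ids)
    )


# Uniform logits over a 4-token vocabulary at two positions:
# each target token has probability 1/4, so the loss is 2 * ln(4).
uniform_loss = cross_entropy([[0.0] * 4, [0.0] * 4], [1, 3])

# A confident, correct prediction yields a much smaller loss.
confident_loss = cross_entropy([[10.0, 0.0, 0.0, 0.0]], [0])
print(uniform_loss, confident_loss)
```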

We employ a top-k sampling approach to enhance the quality of the generated text during the inference phase. This approach restricts sampling to the k tokens with the highest probability. It introduces randomness into the response generation, producing diverse and meaningful responses. Equation (8) presents the generated response.

$$\hat{y} \sim P(w), \quad w \in \operatorname{top\text{-}k}(P) \qquad (8)$$

where $\hat{y}$ is the generated outcome during the inference phase, $P$ is the probability distribution over the vocabulary, and $w$ is a token in the vocabulary.
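Top-k sampling for a single decoding step can be sketched as follows. The logits, the value of k, and the fixed random seed are illustrative assumptions; the function keeps the k highest-scoring token ids, renormalizes their probabilities, and draws one of them.

```python
import math
import random


def top_k_sample(logits: list[float], k: int, rng: random.Random) -> int:
    """Sample one token id from the k highest-scoring tokens."""
    # Indices of the k largest logits.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax restricted to the top-k tokens (renormalization is implicit
    # because random.choices normalizes the weights).
    peak = max(logits[i] for i in top)
    weights = [math.exp(logits[i] - peak) for i in top]
    return rng.choices(top, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed only to make the sketch repeatable
logits = [2.0, 0.5, 1.5, -1.0]
samples = [top_k_sample(logits, k=2, rng=rng) for _ in range(200)]
print(sorted(set(samples)))
```

With k = 2, only token ids 0 and 2 can ever be drawn, while the choice between them stays random.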

3.8. Performance Evaluation

The evaluation metrics, including accuracy, precision, recall, and F1-score, are employed to evaluate the effectiveness of the proposed model. These metrics assess the quality of the generated responses, supporting the model’s reliability. There is no standard hallucination metric for evaluating the generated text [55,56,57]. Equation (9) is computed as the mean of a binary indicator over the evaluation set. Averaging these indicators yields a proportion between 0 and 1, quantifying the prevalence of hallucinated answers across the corpus. We break down the text into checkable claims and assemble an evidence pool for each model response. The evidence pool comprises SPARQL-verified RDF triples and the top-K passages retrieved by the RAG component. The model evaluates each claim against this pool, yielding an outcome. Using a clinician-adjudicated set, decision thresholds for support and contradiction are calibrated. Evaluation reports the answer-level hallucination rate over the number of retrieved documents, aligning this metric with the safety requirements for reliable medical QASs.

where

is the rate of hallucination rate;

is the total number of evaluated answers;

is a claim;

is the

-th atomic claim extracted from answer

i;

is the best maximum score over all evidence items;

is the function that returns probabilities between 0 and 1;

are the thresholds for support, contradictions, and knowledge graph (KG); and

is the logical OR function.
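The answer-level rate described above, the mean of a binary indicator over the evaluated answers, can be sketched as follows. The decision rule (an answer counts as hallucinated if any of its claims is insufficiently supported or is contradicted), the threshold values, and the scores are assumptions for illustration and may differ from the calibrated rule used in the study.

```python
def is_hallucinated(claims: list[dict],
                    tau_support: float = 0.5,
                    tau_contra: float = 0.5) -> bool:
    """Flag an answer if any claim lacks support OR is contradicted."""
    return any(
        c["support"] < tau_support or c["contradiction"] > tau_contra
        for c in claims
    )


def hallucination_rate(answers: list[list[dict]]) -> float:
    """Mean of the binary hallucination indicator over all answers."""
    flags = [is_hallucinated(claims) for claims in answers]
    return sum(flags) / len(flags)


# Invented best-evidence scores for the claims of four answers.
answers = [
    [{"support": 0.90, "contradiction": 0.05}],
    [{"support": 0.80, "contradiction": 0.10},
     {"support": 0.30, "contradiction": 0.10}],  # weakly supported claim
    [{"support": 0.70, "contradiction": 0.20}],
    [{"support": 0.95, "contradiction": 0.00},
     {"support": 0.60, "contradiction": 0.10}],
]
rate = hallucination_rate(answers)
print(rate)
```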

We employ statistical methods, including 95% confidence intervals (CI) and p-values, to support the model’s outcomes. The 95% CI expresses the uncertainty around the estimated metrics (accuracy and hallucination rate). Practically, 95% corresponds to ±1.96 standard errors in normal-based intervals and to the common p < 0.05 significance level.
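A normal-based 95% interval for a proportion such as accuracy can be computed as below. This is a sketch that assumes the normal (Wald) approximation; the inputs reuse the average test accuracy (85.7%) and the internal test size (1300) reported in this paper purely as a worked example, not as a reproduction of the reported intervals.

```python
import math


def normal_ci(p: float, n: int, z: float = 1.96) -> tuple[float, float]:
    """Normal-approximation confidence interval for a proportion."""
    standard_error = math.sqrt(p * (1.0 - p) / n)
    return p - z * standard_error, p + z * standard_error


low, high = normal_ci(0.857, 1300)
print(round(low, 3), round(high, 3))
```

For these inputs the standard error is roughly 0.0097, so the interval spans about 0.838 to 0.876.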

4. Results and Discussion

We utilize Windows 11 Pro with an Intel i9 processor (Intel, Santa Clara, CA, USA) and an NVIDIA H100 GPU (NVIDIA, Santa Clara, CA, USA) to ensure compatibility and stability throughout the model implementation process. The synthetic dataset contains 6500 question–answer pairs, while the MEDQA dataset covers 766 question–answer pairs. The synthetic dataset is divided into training (60%), validation (20%), and testing (20%) subsets. In addition, a portion (20%) of the MEDQA dataset is used to evaluate the model’s generalization on novel data. The internal and external evaluation comprises 1453 inputs and outputs (1300 internal test and 153 external test). Python 3.10.0 is used for the implementation of the model, integrating SPARQL, GPT-Neo, and the RAG framework. The key Python libraries include Transformers 4.10.0, RDFlib 5.0.0, Pandas 1.3.3, AllenNLP 2.9.3, Matplotlib 3.4.3, Seaborn 0.11.2, TensorFlow 2.6.0, and Optuna 2.10.0. Table 2 offers the details of the computational strategies for deploying the proposed model.
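The split sizes quoted above follow from simple arithmetic, reproduced here as a check. The `split_sizes` helper is hypothetical, and rounding to the nearest integer is an assumption needed to obtain 153 from 20% of 766.

```python
def split_sizes(n: int, percents: tuple[int, ...]) -> tuple[int, ...]:
    """Number of items per subset for integer percentage shares."""
    return tuple(round(n * pct / 100) for pct in percents)


# Synthetic dataset: 60% training, 20% validation, 20% testing.
train, validation, internal_test = split_sizes(6500, (60, 20, 20))

# MEDQA: 20% of 766 pairs used for external evaluation (153.2 -> 153).
(external_test,) = split_sizes(766, (20,))

total_evaluated = internal_test + external_test
print(train, validation, internal_test, external_test, total_evaluated)
```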

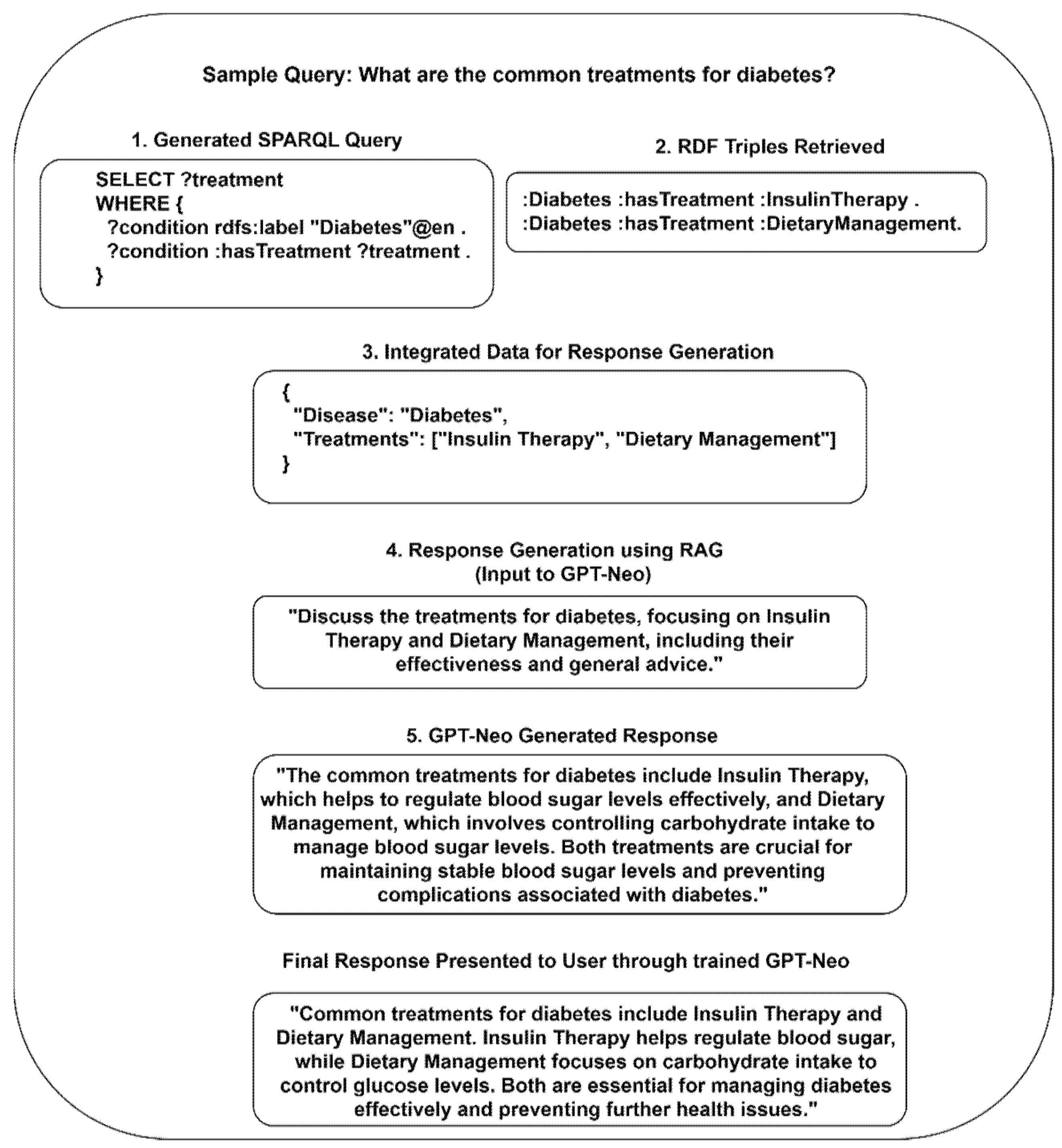

Figure 2 illustrates an end-to-end instance of transforming a medical query into a detailed and useful response, aiding patients and healthcare providers in comprehending diabetes treatment options. Each step builds upon the previous one in order to ensure that the outcome is reliable and grounded in verified medical knowledge.

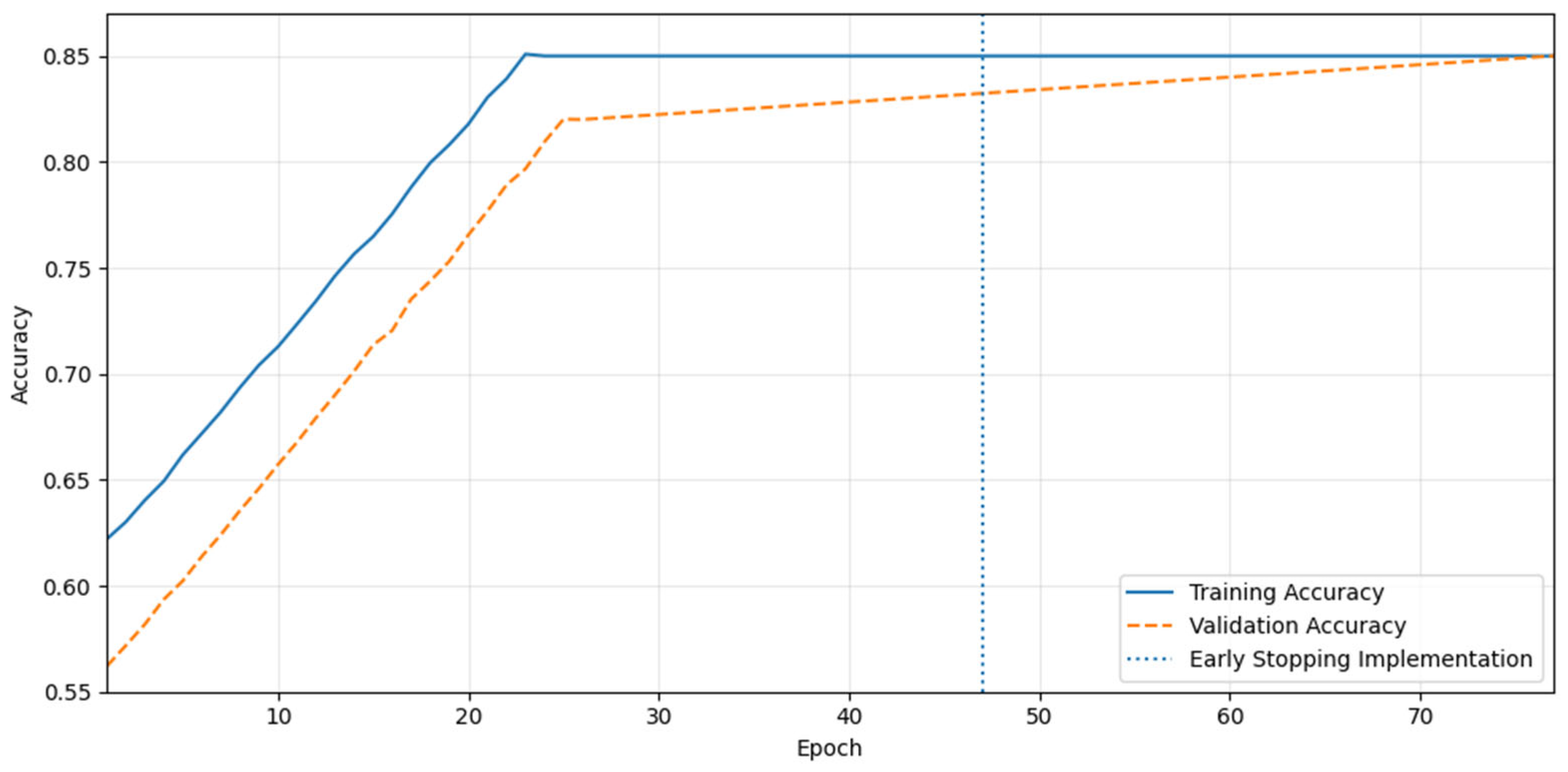

Figure 3 shows the training and validation accuracy of the proposed model across epochs. Using parameter-efficient tuning, we fine-tuned the GPT-Neo model with the base model weights frozen. During the training phase, we focus the LoRA adapters on the transformer blocks, LayerNorm gains, biases, and the LM head. BOHB optimization searched the hyperparameters, including learning rate, weight decay, warmup ratio, LoRA rank/α/dropout, batch size, maximum sequence length, and scheduler. An early stopping strategy is used to ensure that the model maintains its generalizability to unseen data. The training accuracy improves steadily from the first epoch until it plateaus around the 25th epoch. This increasing trend shows that the model learns from the training data efficiently and adjusts its parameters to generate the outcomes. The training accuracy reaches its maximum level (85%), implying a robust fit to the training data with no apparent indicators of underfitting. Early stopping is applied at the 47th epoch: training halts when validation accuracy no longer improves, avoiding overfitting. The early stopping point is selected to maximize validation accuracy while retaining model generality.
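The early stopping rule can be sketched with a patience counter over the validation accuracy history. The patience value and the accuracy curve are invented for illustration; the study does not report the patience it used.

```python
def early_stopping_epoch(val_acc: list[float], patience: int) -> tuple[int, int]:
    """Return (epoch at which training stops, epoch of the best checkpoint)."""
    best = float("-inf")
    best_epoch = 0
    waited = 0
    for epoch, acc in enumerate(val_acc, start=1):
        if acc > best:
            # New best validation accuracy: keep this checkpoint.
            best, best_epoch, waited = acc, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(val_acc), best_epoch


history = [0.70, 0.78, 0.82, 0.84, 0.84, 0.83, 0.84]
stop_epoch, best_epoch = early_stopping_epoch(history, patience=3)
print(stop_epoch, best_epoch)
```

In this toy run the best checkpoint is at epoch 4 and training stops at epoch 7, after three epochs without a strict improvement.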

A comprehensive performance analysis of the proposed model on the synthetic dataset is presented in Table 3. The analysis covers five distinct sets and includes metrics such as accuracy, precision, recall, F1-score, and hallucination rate. The consistency in accuracy and precision throughout the majority of the sets indicates stable performance. With a larger number of documents retrieved, recall increases to 90.2% in Set 5. The model retrieves relevant documents with high accuracy and few false positives, which is crucial for QAS dependability. The F1-score, which balances precision and recall, peaks at 90.3% in Set 5, underlining the model’s efficiency at larger document retrieval volumes. In Set 5, the hallucination rate of 0.25 indicates that the system becomes more effective as more documents are retrieved. Due to the early stopping strategy, the average test accuracy (85.7%) of the proposed model is slightly higher than the training accuracy (85%). This trend indicates the potential of the proposed QAS in generating more accurate and less hallucinated responses.
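Because the F1-score is the harmonic mean of precision and recall, any two of the three values determine the third. The sketch below uses the Set 5 recall (90.2%) and F1-score (90.3%) quoted above and derives the precision they imply, assuming the rounded figures are exact; the implied value is a derived quantity, not a number taken from Table 3.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def implied_precision(f1: float, recall: float) -> float:
    """Solve F1 = 2PR / (P + R) for P."""
    return f1 * recall / (2 * recall - f1)


precision_set5 = implied_precision(0.903, 0.902)
print(round(precision_set5, 3))
print(round(f1_score(precision_set5, 0.902), 3))
```

The implied precision is about 0.904, and substituting it back reproduces the F1-score of 0.903.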

Table 4 demonstrates the individual and combined contributions of various components in the proposed model. This analysis is used to benchmark the efficacy of each module within the model’s architecture. The baseline model achieves an accuracy of 85.7% with an F1-score of 87.5%. The relatively low hallucination rate of 0.25 underscores the model’s reliability in generating accurate and relevant responses. Excluding SPARQL retrieval increases the hallucination rate, suggesting the significance of SPARQL retrieval in producing factual content. The removal of the RAG component reduces the model’s accuracy to 78.4% with a hallucination rate of 0.45. This outcome indicates RAG’s role in enhancing the quality and depth of the generated answers. The inherent features, including parametric knowledge and template/lexical regularities, enable the model to achieve a considerable outcome without SPARQL and RAG. Without hallucination checks, the model obtains 89.8%, suggesting that hallucination checks prevent the generation of incorrect information. Finally, the model’s performance is significantly reduced without SPARQL, RAG, and hallucination checks. Thus, the absence of SPARQL and LLMs with RAG leads to a substantial increase in error rates and ineffective handling of queries.

Table 5 presents a comparative performance analysis of various transformer-based QASs against the proposed QAS on the synthetic dataset. The baseline models underperform due to the lack of KG-aware re-ranking and evidence-gated decoding. T5 demands more context or longer outputs to resolve contraindication details. The compressed representation affects the performance of DistilBERT, which yields the highest hallucination rate (0.67 ± 0.018) and a modest F1-score. With fixed prompt templates, XLNet produces generic responses, and the lack of KG-aware generation restricts its performance. The low hallucination rate of 0.25 underscores the proposed model’s ability to generate responses with minimal errors. The superior performance can be credited to the recommended SPARQL and GPT-Neo integration. The findings highlight the effectiveness of the proposed model in producing meaningful responses to user queries.

Figure 4 illustrates the model’s performance on the MEDQA dataset for different volumes of documents. It indicates that the number of retrieved documents influences the model’s performance. The high precision value suggests the effectiveness of the model in identifying relevant responses. The high F1-score highlights an overall improvement in balanced performance, indicating robust scalability in handling an increasing number of documents.

Table 6 outlines a comparative analysis of the proposed QAS against well-known transformers using the MEDQA dataset. The proposed model shows substantial improvements compared to its counterparts. It identifies relevant information and minimizes incorrect information, which is a key factor in medical contexts. We used bucketing/padding to a constant sequence length. In addition, the caches are warmed for SPARQL and the vector store. Under this environment, the GPU time is nearly identical across datasets. The proposed model reports a hallucination rate of 0.31 ± 0.020, significantly lower than other models, highlighting its superiority in generating reliable responses. We report 95% CI and evaluate pairwise gaps at a significance level of p < 0.05. Paired significance tests on per-question outcomes confirm that the proposed model surpasses the baseline models with p < 0.05. We adjust for multiple comparisons, and the outcomes remain significant at p < 0.05. The superior performance implies the model’s potential for integration into clinical practice, supporting clinical decision-making, patient education, and engagement.

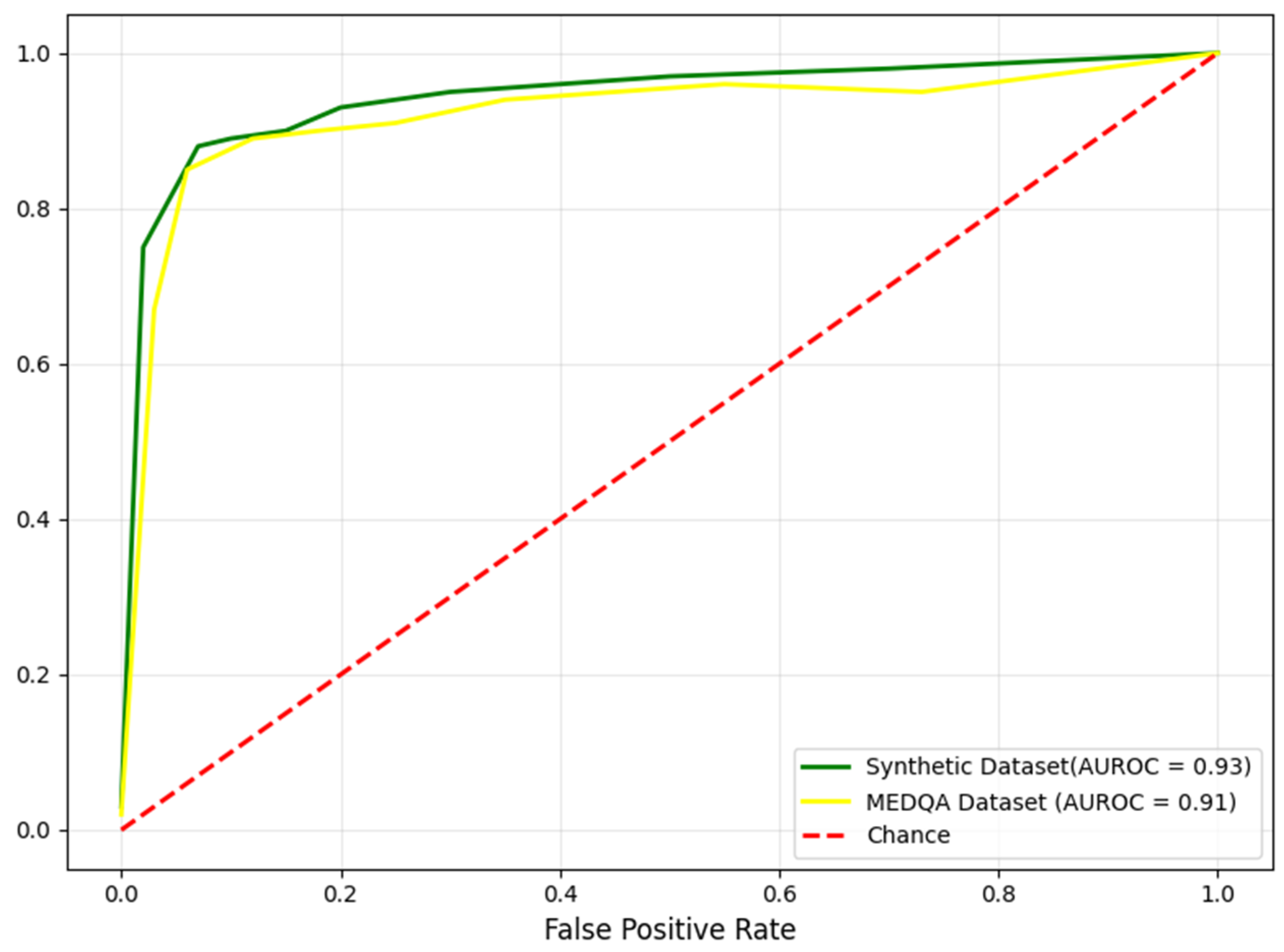

Figure 5 illustrates the area under the receiver operating characteristics (AUROC) curves for the model on the synthetic and MEDQA datasets. An AUROC of 0.93 on the synthetic data represents the model’s discriminatory ability. The high AUROC of 0.91 on the MEDQA dataset indicates the model’s robust performance on real-world data. The outcomes present compelling evidence to show the capabilities of the proposed QAS in generating accurate and reliable responses to medical queries.
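AUROC can be read as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one. The sketch below computes it by pairwise comparison on invented labels and scores; it illustrates the metric only and does not reproduce the values in Figure 5.

```python
def auroc(labels: list[int], scores: list[float]) -> float:
    """AUROC as the fraction of positive/negative pairs ranked correctly."""
    positives = [s for l, s in zip(labels, scores) if l == 1]
    negatives = [s for l, s in zip(labels, scores) if l == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    # A tie between a positive and a negative score counts as half a win.
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


labels = [1, 1, 0, 0]
scores = [0.9, 0.4, 0.5, 0.1]
area = auroc(labels, scores)
print(area)
```

Three of the four positive/negative pairs are ordered correctly, so the area is 0.75.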

Table 7 reveals a comparative analysis of various models, including the proposed QAS, across different datasets. Shi et al. [41] and Alonso et al. [42] generate responses with high hallucination rates, reflecting limited grounding or weaker evidence controls. Kim (2025) [40] exhibits higher accuracy with a substantially higher hallucination rate. In contrast, the proposed model generates responses with the minimum hallucination rate of 0.31 ± 0.020 on the MEDQA dataset, demonstrating strong generalizability and effectiveness in real-world settings. The incorporation of adaptive learning techniques refines the proposed model’s performance on novel data, maintaining its reliability with the latest medical information and user interaction trends.

The experimental findings indicate that the proposed model outperforms current QASs and transformer-based information retrieval systems. Compared to individual transformers, the proposed QAS generates responses with a lower rate of hallucinations. By leveraging the hybrid SPARQL–RAG–GPT-Neo approach, the model handles complex language patterns and long-range dependencies within texts. The integration of these technologies improves information retrieval, decision-making, patient interaction, and clinical operations. By training on a broad spectrum of medical texts and datasets, we enabled the model to comprehend both standard medical queries and lesser-known medical terminology. This extensive training approach enables the model to manage a wide variety of medical queries, reducing biases and improving generalization.

To improve the accuracy of patient diagnosis and treatment, the proposed model can assist healthcare professionals by interpreting complicated medical queries and retrieving relevant information from extensive healthcare databases. For instance, a clinician may retrieve recent treatment procedures for chronic conditions such as diabetes or hypertension. To deliver evidence-based suggestions, the model can process the latest research, clinical guidelines, and patient data. This reduces the time needed to find information and helps ensure that treatment is based on the latest medical knowledge, improving patient outcomes. As a virtual health assistant, the model can respond to health-related queries, clarify prescription regimens, and provide guidance on minor ailments. It empowers users to manage their health, improving health outcomes and increasing their satisfaction. The model can identify a patient’s requirements and provide recommendations for treatment and prevention based on their medical history, genetic profile, and lifestyle habits. By recommending lifestyle adjustments and preventative care targeted to individual health requirements, it can improve treatment effectiveness and disease prevention. Through improved diagnostic accuracy and treatment effectiveness, the model can contribute to lowering overall healthcare costs. Avoiding misdiagnoses and unsuitable treatments reduces costs and lessens the financial burden on patients and healthcare providers. This approach can also assist researchers in accelerating medical research by facilitating the development of hypotheses, the design of experiments, and the analysis of study data.

Despite its strengths, the study has certain limitations that restrict its generalizability and scalability. A synthetic dataset used for controlled experiments may not adequately replicate the complexity and diversity of real-time data, especially in medical settings where queries can be unpredictable. Although the model performs well overall, its accuracy decreases as query complexity increases, indicating the need for further enhancements to the model’s architecture. Machine learning methods such as meta-learning and few-shot learning may enable the model to adapt rapidly to complex queries without extensive retraining. The clinical relevance and usefulness of the model can be improved by iterative refinement in collaboration with medical experts based on practical input. This would help ensure that the model aligns with clinical goals and priorities in addition to performing effectively. Investigating additional factors, including more sophisticated methods of reducing hallucinations and recent NLP advancements, can further improve the model’s outcomes and reliability.

Prioritizing regulatory, ethical, and patient-safety measures is essential for validating the proposed model using real-time clinical data. Essential controls include evidence-gated generation (answers traceable to referenced knowledge-graph triples and retrieved sources); rigorous safety checks for dosages, contraindications, and black-box warnings; and conservative abstention with explicit uncertainty messaging when evidence is inadequate. Privacy measures should adhere to existing health data regulations, including data minimization and the implementation of robust access controls. Lifecycle governance should include post-release bias and safety monitoring, incident reporting, and restricted knowledge graph and model updates. Prior to any clinical review, human-factors testing, risk management aligned with medical device practices, and evaluation of AI applications are essential. These strategies will provide a visible, auditable path from research prototype to clinically suitable applications.
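The evidence-gating and abstention controls described above could take a form like the following minimal sketch. It is not the implementation used in this study: the `Evidence` type, the `gate_answer` function, the threshold values, and the abstention message are all hypothetical and would need to be set and validated with clinical experts.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    source_id: str  # hypothetical ID of a knowledge-graph triple or document
    score: float    # assumed retrieval relevance in [0, 1]


ABSTAIN = "Insufficient evidence to answer reliably; please consult a clinician."


def gate_answer(draft, evidence, min_score=0.7, min_sources=2):
    """Release a drafted answer only if enough strong evidence supports it.

    min_score and min_sources are illustrative thresholds, not values
    taken from the study.
    """
    supporting = [e for e in evidence if e.score >= min_score]
    if len(supporting) < min_sources:
        # Conservative abstention with an explicit uncertainty message
        return {"answer": ABSTAIN, "sources": [], "abstained": True}
    # Attach source IDs so the answer stays traceable and auditable
    return {
        "answer": draft,
        "sources": [e.source_id for e in supporting],
        "abstained": False,
    }
```

Returning the supporting source identifiers alongside the answer keeps each response traceable, while the abstention branch makes the uncertainty explicit rather than returning an ungrounded answer.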