IntegraPSG: Integrating LLM Guidance with Multimodal Feature Fusion for Single-Stage Panoptic Scene Graph Generation

Abstract

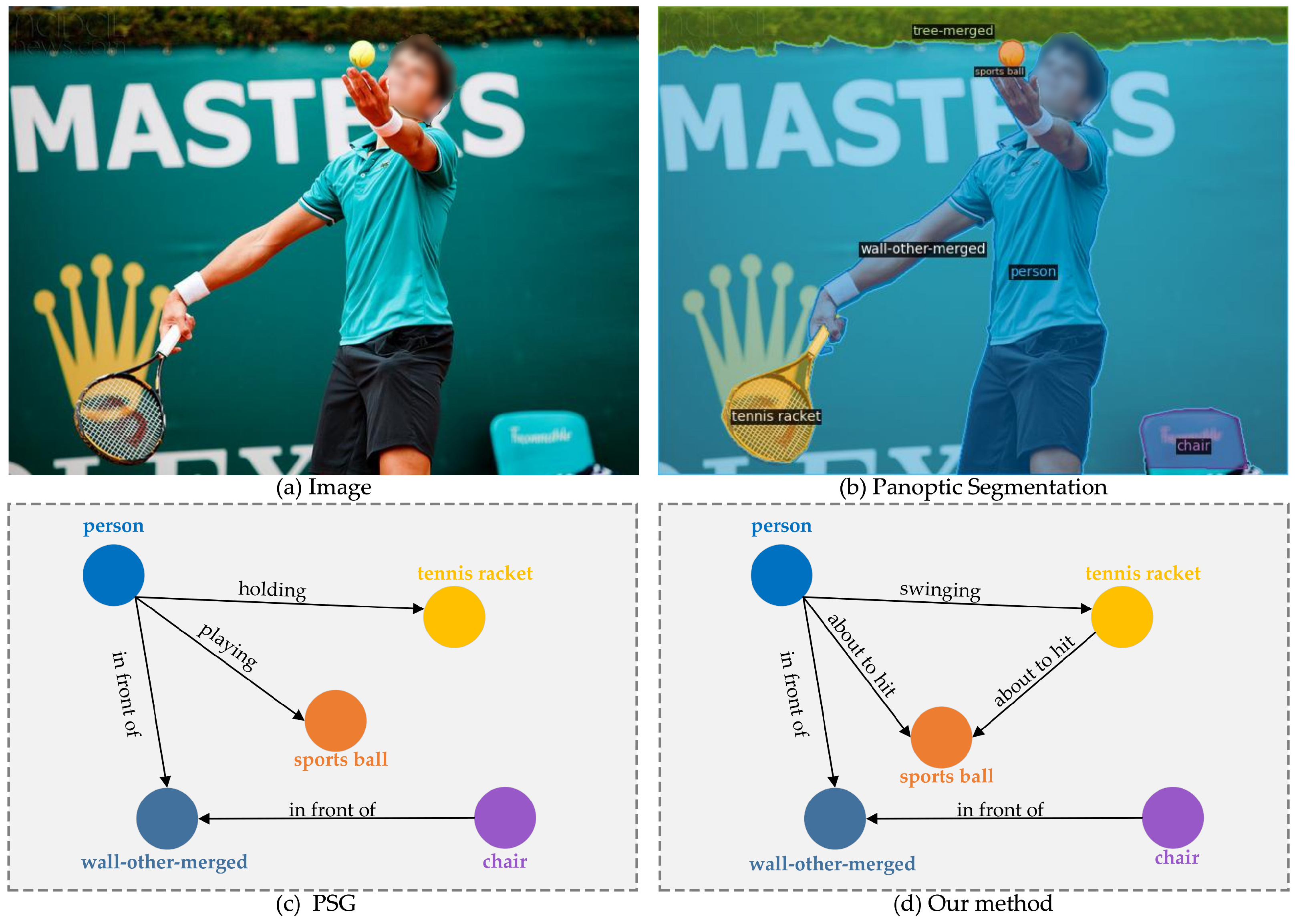

1. Introduction

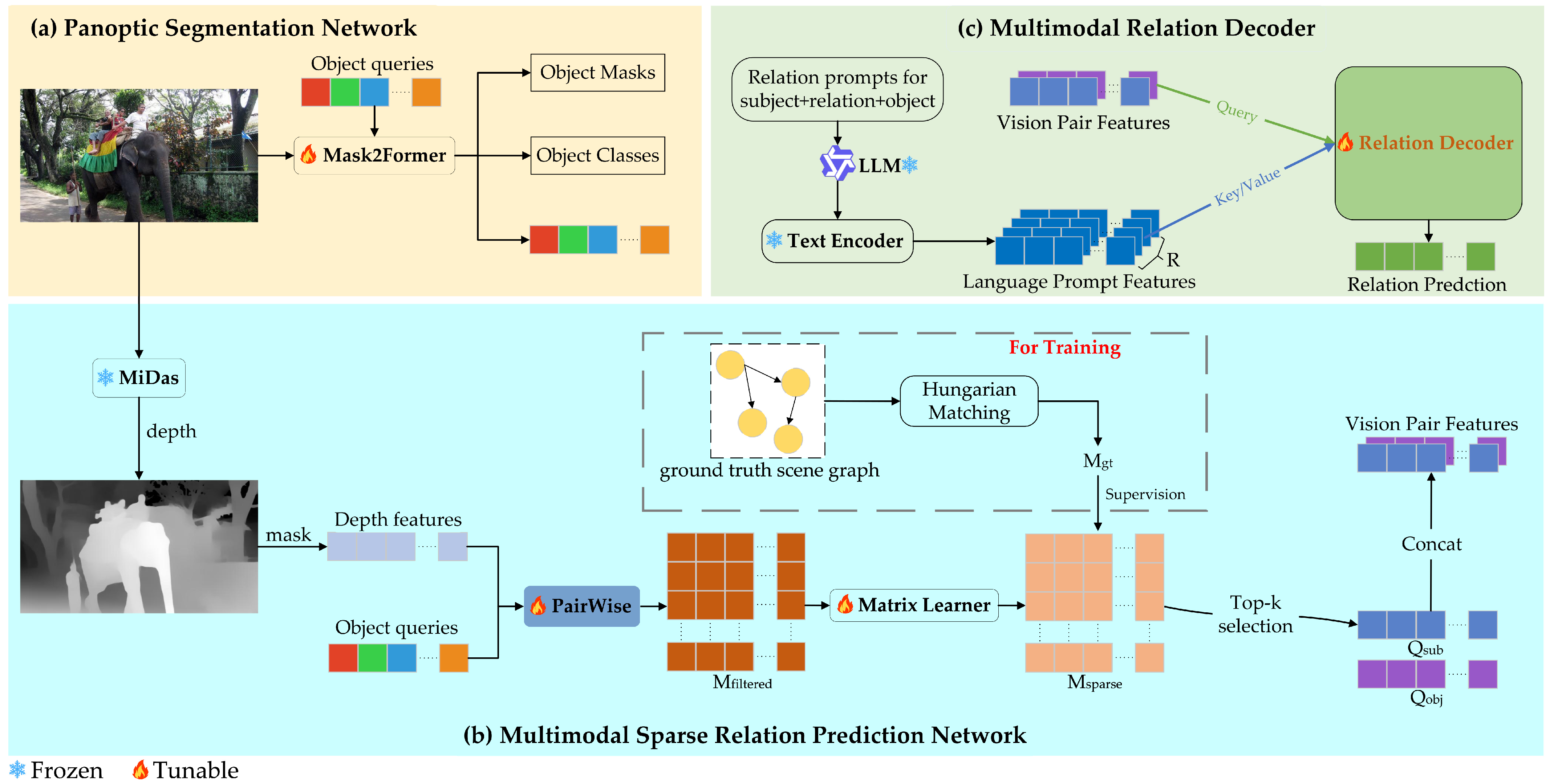

- We propose IntegraPSG, a unified single-stage framework that addresses two challenges in PSG, spatial reasoning and the long-tailed relation distribution, by integrating multimodal features at the subject–object pairing stage and introducing semantic guidance from an LLM for relation prediction. The method establishes a collaborative “multimodal refinement–language-guided reasoning” mechanism that targets both challenges.

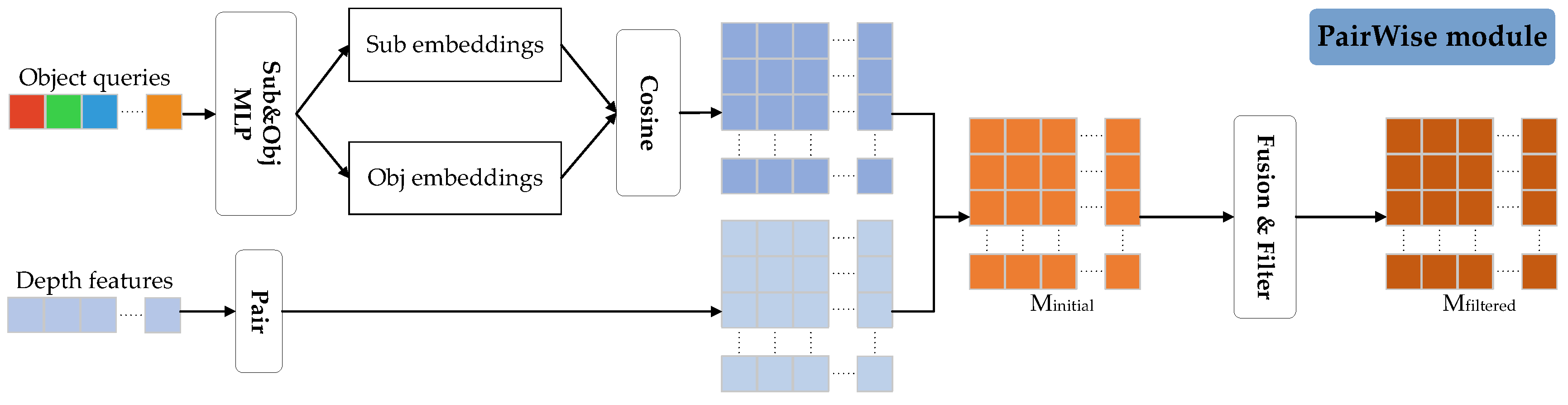

- We design a multimodal sparse relation prediction network that constructs a visual–language relation proposal matrix and a depth-aware spatial relation proposal matrix and optimizes them jointly. This design strengthens the screening of subject–object pairs, improving the accuracy of candidate-pair selection while reducing redundant pairings.

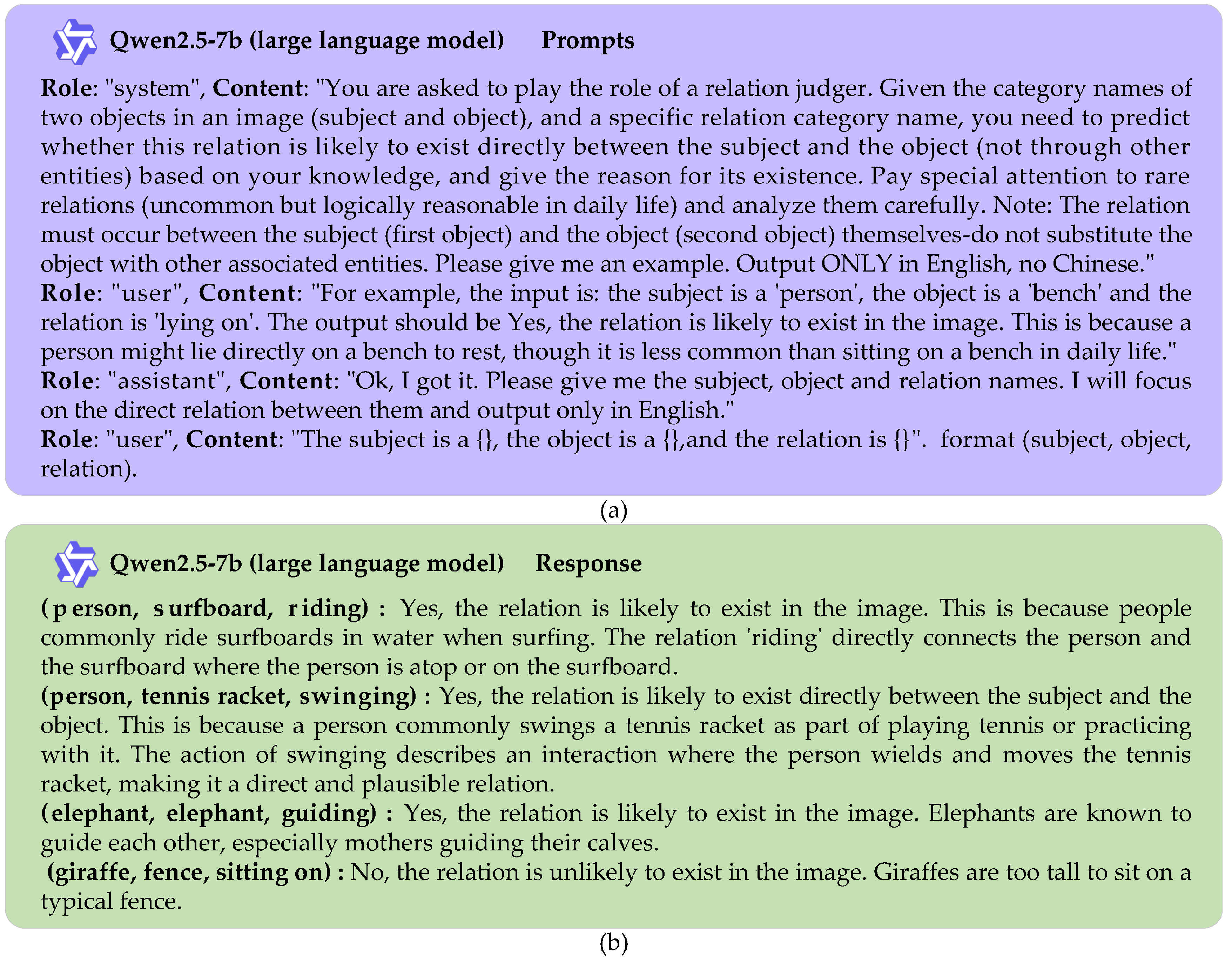

- We propose a language-guided multimodal relation decoder in which language descriptions generated by an LLM interact cross-modally with visual features. This design strengthens the discrimination of rare relations and improves prediction performance on long-tail samples.
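The third contribution has LLM-generated descriptions interact with visual features. A common way to realize such an interaction is scaled dot-product cross-attention (Vaswani et al. [41]), with subject–object pair features as queries and embedded relation descriptions as keys and values. The sketch below assumes exactly that setup and is not the paper's decoder: the single head, the absence of learned query/key/value projections, the residual connection, and the names `cross_attend` and `softmax` are illustrative assumptions.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries: List[Vector], keys: List[Vector], values: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product cross-attention (illustrative sketch).

    Assumed setup: each query is a visual subject-object pair feature and each
    key/value is an embedded LLM relation description. No learned projections
    are applied, and queries must have the same width as values so that the
    residual addition is defined.
    """
    scale = 1.0 / math.sqrt(len(keys[0]))
    width = len(values[0])
    outputs = []
    for q in queries:
        # Similarity between this pair feature and every description embedding.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        # Attention-weighted sum of the description embeddings.
        attended = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(width)]
        # Residual connection keeps the original visual evidence.
        outputs.append([qi + ai for qi, ai in zip(q, attended)])
    return outputs


# Toy usage: two pair features attending over two description embeddings.
pair_feats = [[1.0, 0.0], [0.0, 1.0]]
desc_embs = [[1.0, 0.0], [0.0, 1.0]]
fused = cross_attend(pair_feats, desc_embs, desc_embs)
```

Each pair feature is pulled toward the descriptions it is most similar to, so language evidence is mixed in per pair rather than applied uniformly.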

2. Related Work

2.1. Panoptic Scene Graph Generation

2.2. Panoptic Segmentation

2.3. Large Language Model for Visual Relation Understanding

3. Method

3.1. Problem Definition

3.2. Overall Architecture

3.3. Panoptic Segmentation Network

3.4. Multimodal Sparse Relation Prediction Network

3.4.1. Multimodal Relation Proposal Matrix Construction

3.4.2. Multimodal Sparse Relation Matrix Refinement

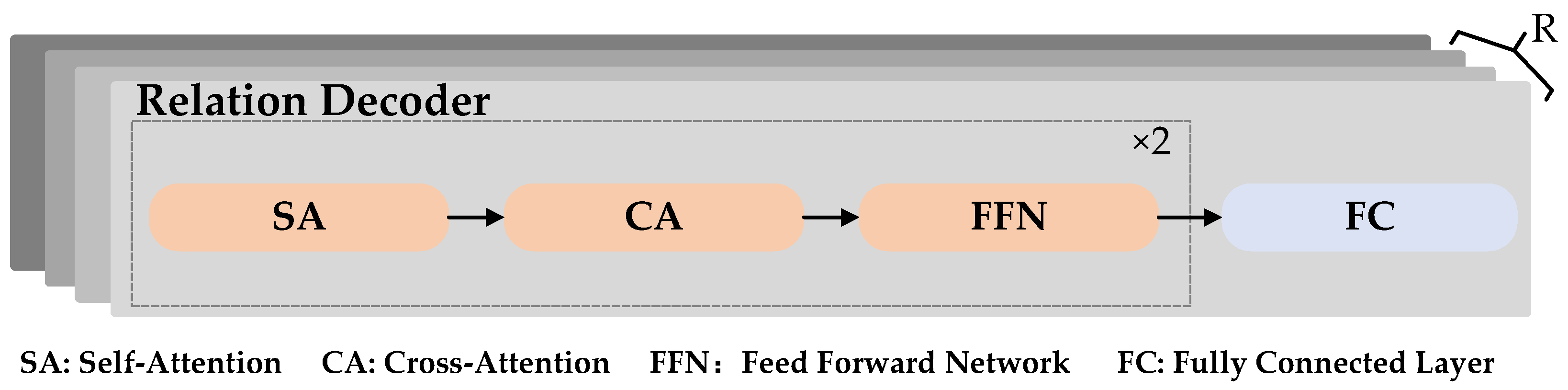
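Section 3.4 builds on the second contribution: relation proposals from visual–language cues and from spatial cues (here assumed to be depth-aware, in line with the Depth column of the ablation study) are combined and refined into a sparse set of subject–object pairs. The fusion and refinement rules are not reproduced in this text, so the sketch below assumes a convex combination of two N × N pair-score matrices (weight `alpha`) followed by top-`k` selection with self-pairs masked out. The names `fuse_proposals` and `select_pairs`, and the toy scores, are hypothetical.

```python
import heapq
from typing import List, Tuple

Matrix = List[List[float]]


def fuse_proposals(vl_scores: Matrix, spatial_scores: Matrix, alpha: float = 0.5) -> Matrix:
    """Hypothetical fusion: convex combination of two N x N pair-score matrices.

    vl_scores[i][j]      visual-language score for subject i and object j
    spatial_scores[i][j] depth-aware spatial score for subject i and object j
    """
    n = len(vl_scores)
    return [
        [alpha * vl_scores[i][j] + (1.0 - alpha) * spatial_scores[i][j] for j in range(n)]
        for i in range(n)
    ]


def select_pairs(scores: Matrix, k: int) -> List[Tuple[int, int]]:
    """Keep the k highest-scoring (subject, object) pairs, excluding self-pairs."""
    n = len(scores)
    candidates = ((scores[i][j], i, j) for i in range(n) for j in range(n) if i != j)
    return [(i, j) for _, i, j in heapq.nlargest(k, candidates)]


# Toy usage with three entities (scores are made up for illustration).
vl = [[0.0, 0.9, 0.1], [0.2, 0.0, 0.3], [0.8, 0.4, 0.0]]
sp = [[0.0, 0.7, 0.5], [0.1, 0.0, 0.9], [0.6, 0.2, 0.0]]
fused = fuse_proposals(vl, sp, alpha=0.5)
pairs = select_pairs(fused, k=3)  # sparse candidate set passed on to relation decoding
```

Selecting a small `k` from the N × (N − 1) ordered pairs is what keeps the later relation decoding sparse; in a trained model both score matrices would be learned rather than fixed.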

3.5. Multimodal Relation Decoder

3.5.1. Vision Pair Features Extraction

3.5.2. Language Prompt Features Extraction

3.5.3. Relation Prediction

3.6. Model Training

4. Experiments

4.1. Experimental Settings

4.2. Implementation Details

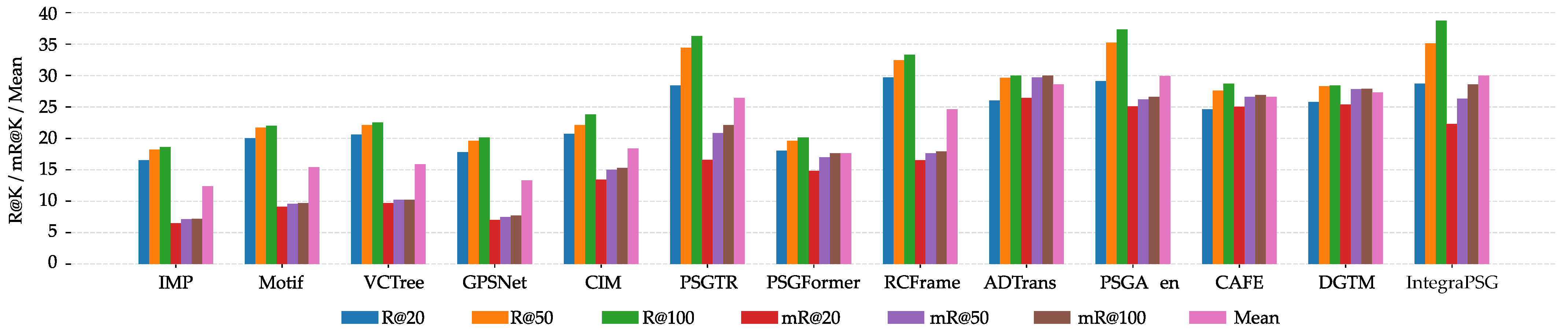

4.3. Experimental Results

4.4. Ablation Study

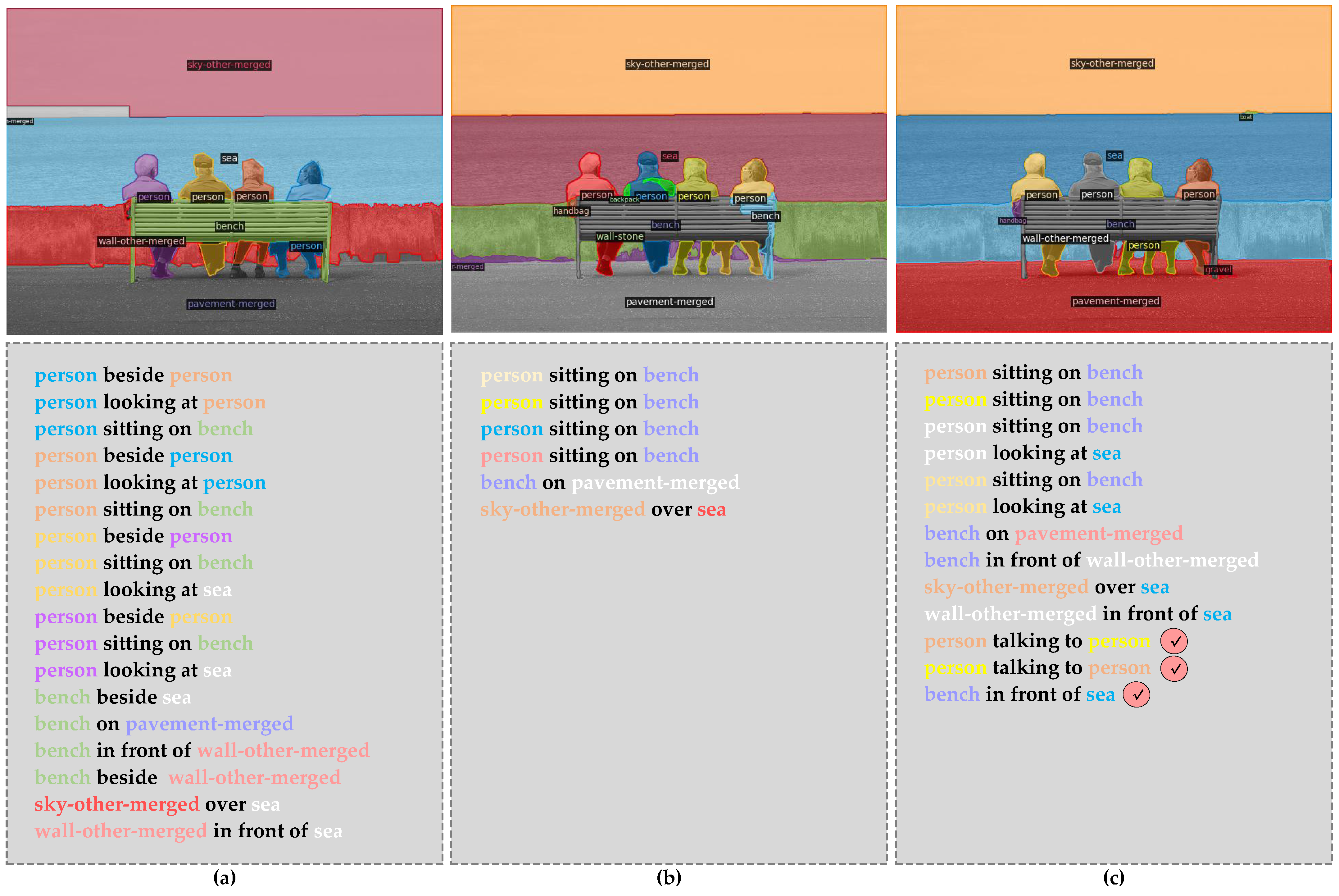

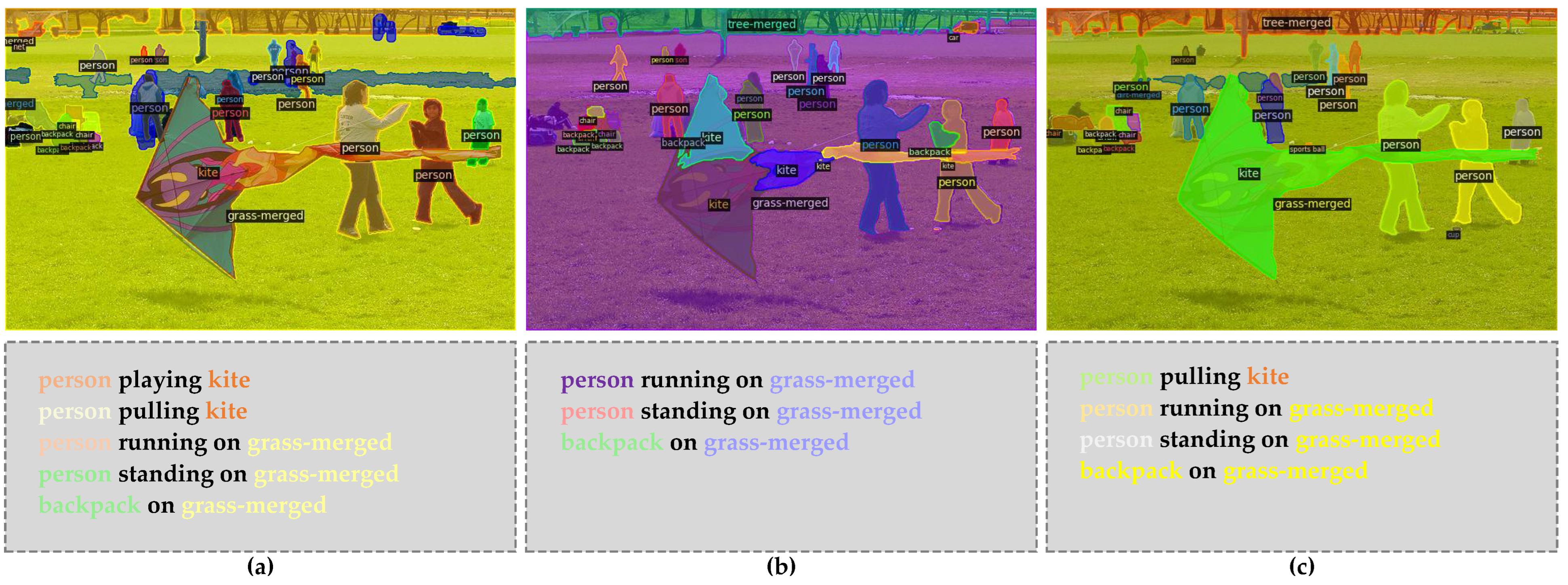

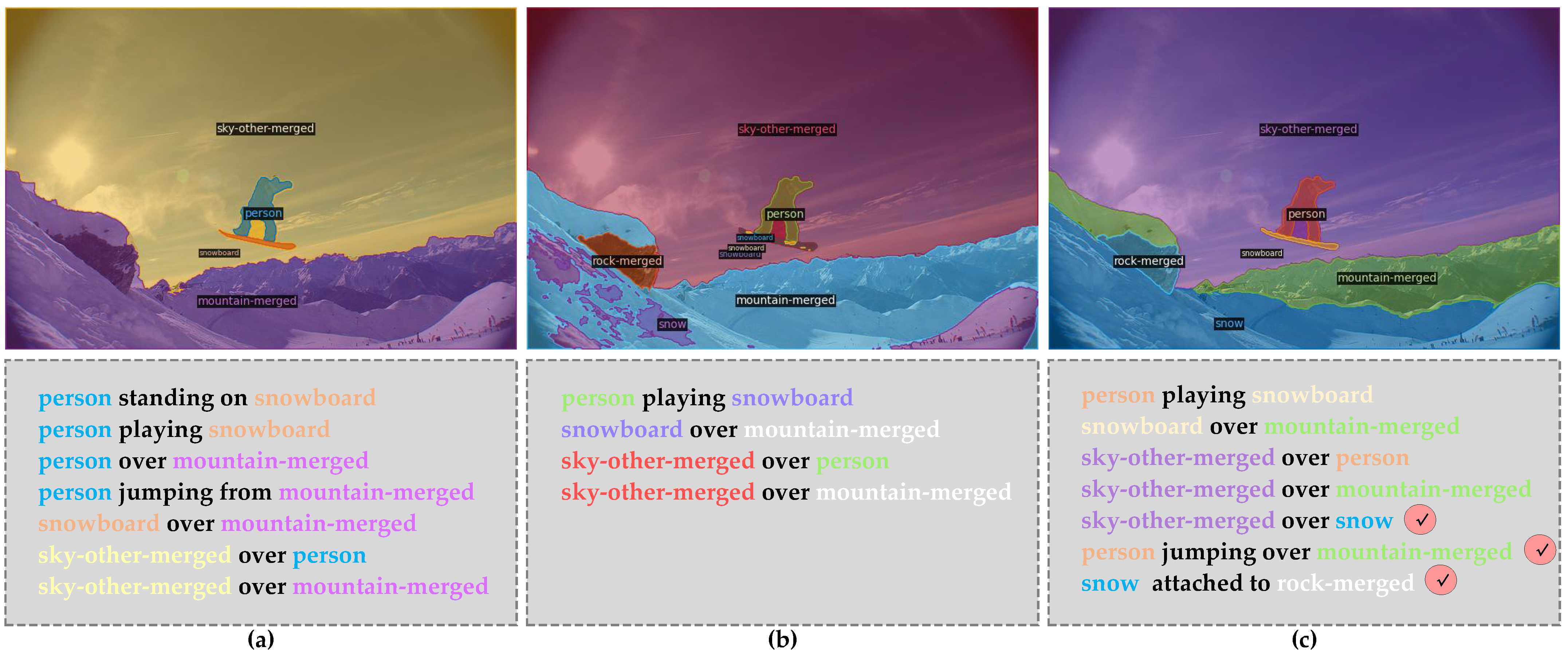

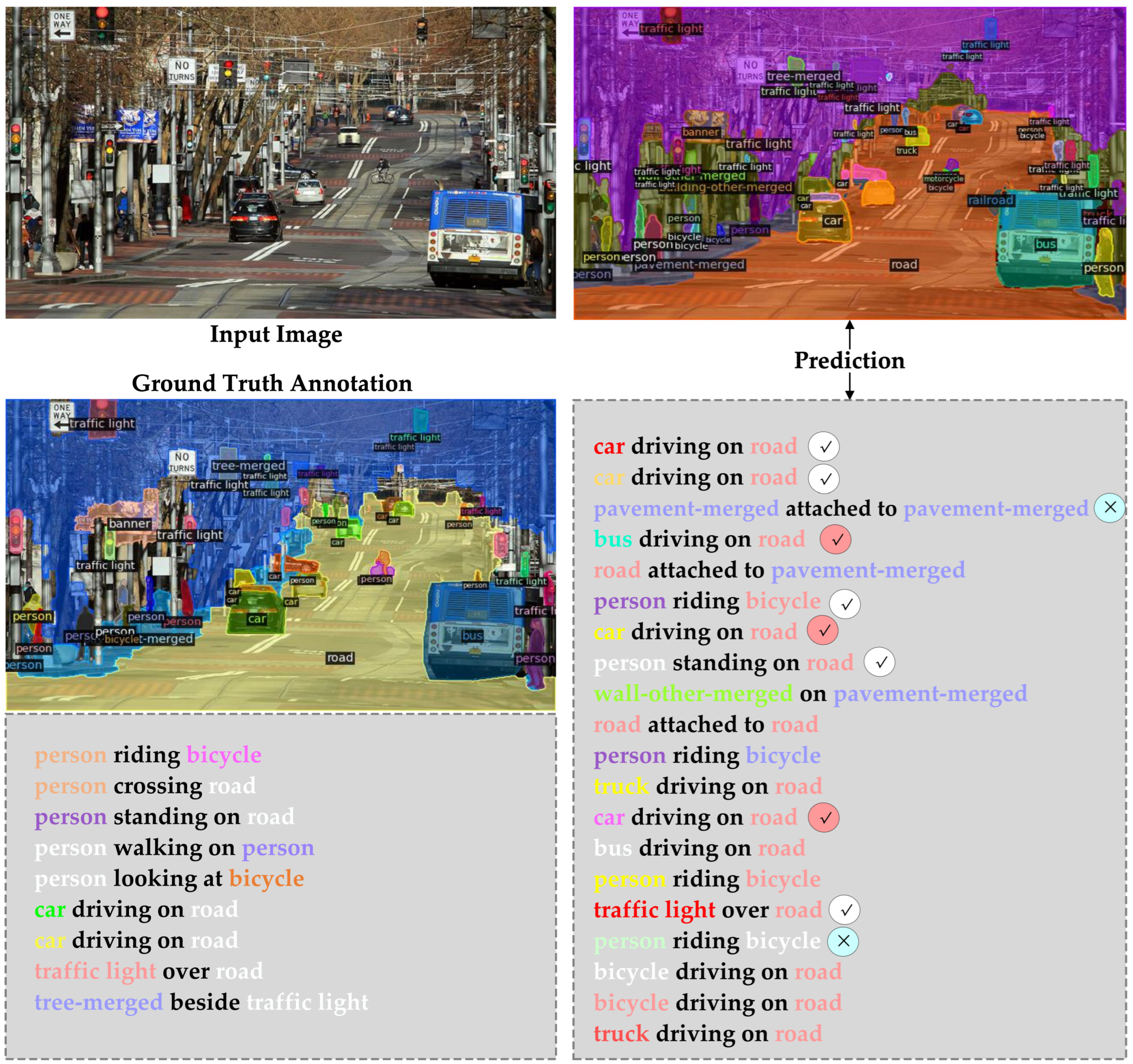

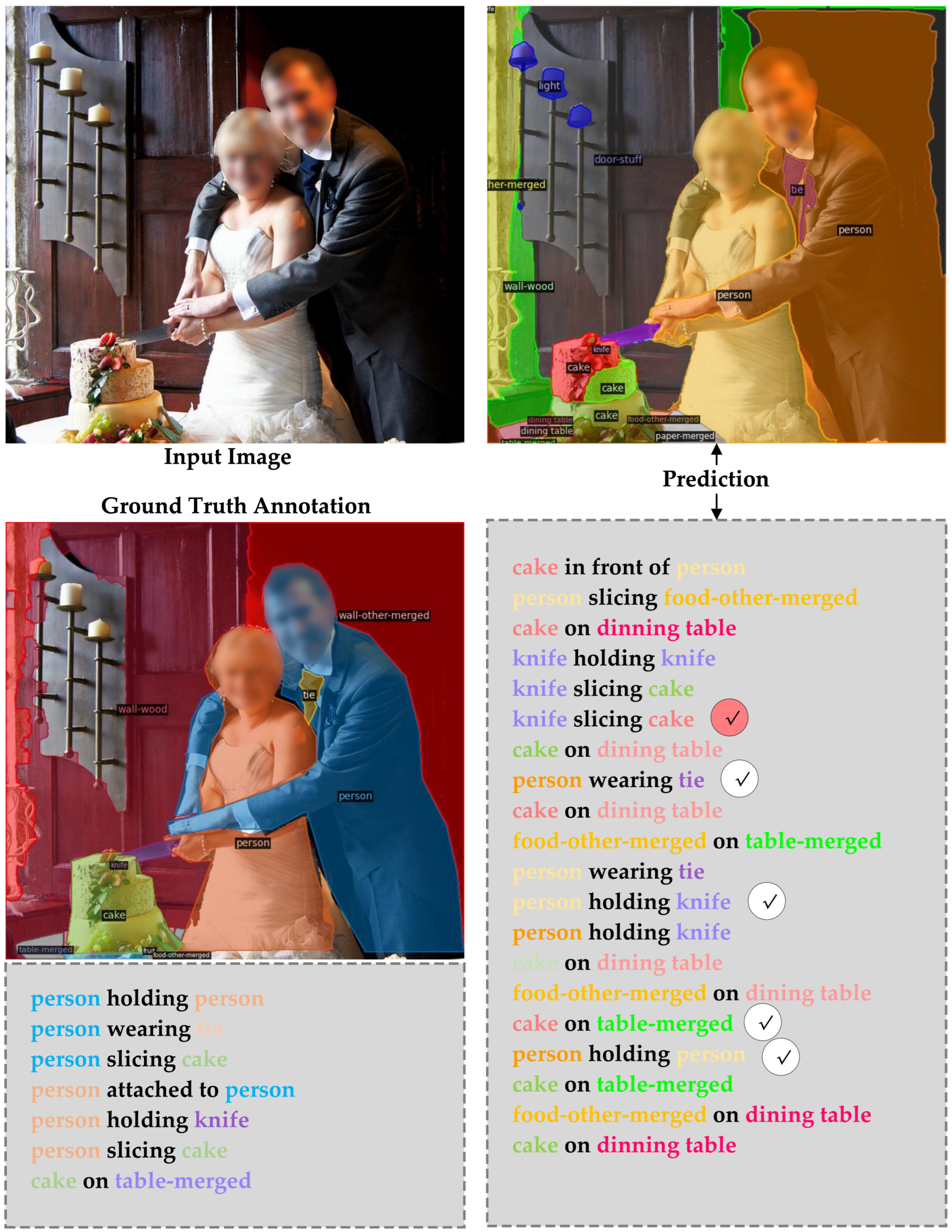

4.5. Qualitative Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Gu, J.; Zhao, H.; Lin, Z.; Li, S.; Cai, J.; Ling, M. Scene graph generation with external knowledge and image reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1969–1978.

2. Li, H.; Zhu, G.; Zhang, L.; Jiang, Y.; Dang, Y.; Hou, H.; Shen, P.; Zhao, X.; Shah, S.A.A.; Bennamoun, M. Scene graph generation: A comprehensive survey. Neurocomputing 2024, 566, 127052.

3. Schroeder, B.; Tripathi, S. Structured query-based image retrieval using scene graphs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 178–179.

4. Qian, T.; Chen, J.; Chen, S.; Wu, B.; Jiang, Y.G. Scene graph refinement network for visual question answering. IEEE Trans. Multimed. 2022, 25, 3950–3961.

5. Chen, S.; Jin, Q.; Wang, P.; Wu, Q. Say as you wish: Fine-grained control of image caption generation with abstract scene graphs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9962–9971.

6. Zhong, Y.; Wang, L.; Chen, J.; Yu, D.; Li, Y. Comprehensive image captioning via scene graph decomposition. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 211–229.

7. Li, W.; Zhang, H.; Bai, Q.; Zhao, G.; Jiang, N.; Yuan, X. PPDL: Predicate probability distribution based loss for unbiased scene graph generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 19447–19456.

8. Herzig, R.; Bar, A.; Xu, H.; Chechik, G.; Darrell, T.; Globerson, A. Learning canonical representations for scene graph to image generation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 210–227.

9. Amiri, S.; Chandan, K.; Zhang, S. Reasoning with scene graphs for robot planning under partial observability. IEEE Robot. Autom. Lett. 2022, 7, 5560–5567.

10. Jiang, B.; Zhuang, Z.; Shivakumar, S.S.; Taylor, C.J. Enhancing scene graph generation with hierarchical relationships and commonsense knowledge. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 26 February–6 March 2025; pp. 8883–8894.

11. Liu, H.; Yan, N.; Mortazavi, M.; Bhanu, B. Fully convolutional scene graph generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11546–11556.

12. Wang, L.; Yuan, Z.; Chen, B. Multi-granularity sparse relationship matrix prediction network for end-to-end scene graph generation. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 105–121.

13. Yang, J.; Ang, Y.Z.; Guo, Z.; Zhou, K.; Zhang, W.; Liu, Z. Panoptic scene graph generation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 178–196.

14. Caesar, H.; Uijlings, J.; Ferrari, V. COCO-Stuff: Thing and stuff classes in context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1209–1218.

15. Kirillov, A.; He, K.; Girshick, R.; Rother, C.; Dollár, P. Panoptic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9404–9413.

16. Yang, J.; Wang, C.; Liu, Z.; Wu, J.; Wang, D.; Yang, L.; Cao, X. Focusing on flexible masks: A novel framework for panoptic scene graph generation with relation constraints. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 4209–4218.

17. Shi, H.; Li, L.; Xiao, J.; Zhuang, Y.; Chen, L. From easy to hard: Learning curricular shape-aware features for robust panoptic scene graph generation. Int. J. Comput. Vis. 2025, 133, 489–508.

18. Liang, S.; Zhang, L.; Xie, C.; Chen, L. Causal intervention for panoptic scene graph generation. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo (ICME), Niagara Falls, ON, Canada, 15–19 July 2024; pp. 1–6.

19. Xu, J.; Chen, J.; Yanai, K. Contextual associated triplet queries for panoptic scene graph generation. In Proceedings of the 5th ACM International Conference on Multimedia in Asia, Tainan, Taiwan, 6–8 December 2023; pp. 1–5.

20. Sun, Y.; Chen, Y.; Huang, X.; Wang, Y.; Chen, S.; Yao, K.; Yang, A. DGTM: Deriving graph from transformer with Mamba for panoptic scene graph generation. Array 2025, 26, 100394.

21. Wang, J.; Wen, Z.; Li, X.; Guo, Z.; Yang, J.; Liu, Z. Pair then relation: Pair-Net for panoptic scene graph generation. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 10452–10465.

22. Naveed, H.; Khan, A.U.; Qiu, S.; Saqib, M.; Anwar, S.; Usman, M.; Akhtar, N.; Barnes, N.; Mian, A. A comprehensive overview of large language models. ACM Trans. Intell. Syst. Technol. 2025, 16, 1–72.

23. Min, B.; Ross, H.; Sulem, E.; Veyseh, A.P.B.; Nguyen, T.H.; Sainz, O.; Agirre, E.; Heintz, I.; Roth, D. Recent advances in natural language processing via large pre-trained language models: A survey. ACM Comput. Surv. 2023, 56, 1–40.

24. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. In Proceedings of the Ninth International Conference on Learning Representations, Vienna, Austria, 3–7 May 2021; pp. 1–16.

25. Li, L.; Ji, W.; Wu, Y.; Li, M.; Qin, Y.; Wei, L.; Zimmermann, R. Panoptic scene graph generation with semantics-prototype learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 3145–3153.

26. Kuang, X.; Che, Y.; Han, H.; Liu, Y. Semantic-enhanced panoptic scene graph generation through hybrid and axial attentions. Complex Intell. Syst. 2025, 11, 110.

27. Kirillov, A.; Girshick, R.; He, K.; Dollár, P. Panoptic feature pyramid networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6399–6408.

28. Xiong, Y.; Liao, R.; Zhao, H.; Hu, R.; Bai, M.; Yumer, E.; Urtasun, R. UPSNet: A unified panoptic segmentation network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8818–8826.

29. Cheng, B.; Schwing, A.; Kirillov, A. Per-pixel classification is not all you need for semantic segmentation. Adv. Neural Inf. Process. Syst. 2021, 34, 17864–17875.

30. Zhang, W.; Pang, J.; Chen, K.; Loy, C.C. K-Net: Towards unified image segmentation. Adv. Neural Inf. Process. Syst. 2021, 34, 10326–10338.

31. Li, Z.; Wang, W.; Xie, E.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P.; Lu, T. Panoptic SegFormer: Delving deeper into panoptic segmentation with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1280–1289.

32. Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1290–1299.

33. Abdullah, M.; Madain, A.; Jararweh, Y. ChatGPT: Fundamentals, applications and social impacts. In Proceedings of the 2022 Ninth International Conference on Social Networks Analysis, Management and Security (SNAMS), Milan, Italy, 29 November–1 December 2022; pp. 1–8.

34. Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971.

35. Anthropic. Claude. 2023. Available online: https://claude.ai/chat (accessed on 29 July 2025).

36. Google. Bard. 2023. Available online: https://bard.google.com/chat (accessed on 29 July 2025).

37. Alibaba. Qwen. 2023. Available online: https://chat.qwen.ai/ (accessed on 29 July 2025).

38. Li, L.; Xiao, J.; Chen, G.; Shao, J.; Zhuang, Y.; Chen, L. Zero-shot visual relation detection via composite visual cues from large language models. Adv. Neural Inf. Process. Syst. 2023, 36, 50105–50116.

39. Ranftl, R.; Lasinger, K.; Hafner, D.; Schindler, K.; Koltun, V. Towards robust monocular depth estimation: Mixing datasets for zero-shot cross-dataset transfer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 1623–1637.

40. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 3–5 June 2019; pp. 4171–4186.

41. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

42. Wang, J.; Zhang, W.; Zang, Y.; Cao, Y.; Pang, J.; Gong, T.; Chen, K.; Liu, Z.; Loy, C.C.; Lin, D. Seesaw loss for long-tailed instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9695–9704.

43. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

44. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. In Proceedings of the Seventh International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019; pp. 1–18.

45. Xu, D.; Zhu, Y.; Choy, C.B.; Fei-Fei, L. Scene graph generation by iterative message passing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5410–5419.

46. Zellers, R.; Yatskar, M.; Thomson, S.; Choi, Y. Neural motifs: Scene graph parsing with global context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5831–5840.

47. Tang, K.; Zhang, H.; Wu, B.; Luo, W.; Liu, W. Learning to compose dynamic tree structures for visual contexts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6619–6628.

48. Lin, X.; Ding, C.; Zeng, J.; Tao, D. GPS-Net: Graph property sensing network for scene graph generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3746–3753.

49. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2999–3007.

| Method | Core Architecture | Relation Modeling | Strengths and Limitations |
|---|---|---|---|
| PSGTR [13] | Single-Decoder Transformer | Triplet Query Decoding | (+) Simple one-stage baseline that serves as the most direct benchmark. (−) Redundant segmentation; non-trivial re-identification (Re-ID). |
| PSGFormer [13] | Dual-Decoder Transformer | Object and Relation Query Matching | (+) Unbiased prediction; excels on novel relations. (−) Limited peak recall (R@K). |
| ADTrans [25] | Plug-and-Play Dataset Enhancement Framework | Annotation Debiasing via Prototype Learning | (+) Alleviates the long-tail problem through annotation correction. (−) Reduces recall (R@K) on frequent predicates. |
| PSGAtten [26] | Two-Stage Attention-Based Structure | Visual–Semantic Fusion via Hybrid Attention | (+) Achieves superior recall through enhanced visual–semantic fusion. (−) High complexity; conservative predictions. |
| DGTM [20] | Single-Stage Structure Integrating Mamba and KAN | Deriving Relations from Self-Attention via Mamba | (+) Improves mean recall (mR@K) by exploiting attention by-products. (−) Overall recall is constrained by segmentation performance. |
| IntegraPSG | LLM-Integrated Single-Stage Framework | Synergistic Multimodal Pairing and LLM-Guided Decoding | (+) Balances recall and long-tail performance through a “refine-then-reason” synergy. (−) Under-calibrated confidence in its long-tail predictions. |

| Relationship Predicate Vocabulary | | | | | | | |
|---|---|---|---|---|---|---|---|
| over | in front of | beside | on | in | attached to | hanging from | on back of |
| falling off | going down | painted on | walking on | running on | crossing | standing on | lying on |
| sitting on | flying over | jumping over | jumping from | wearing | holding | carrying | looking at |
| guiding | kissing | eating | drinking | feeding | biting | catching | picking |
| playing with | chasing | climbing | cleaning | playing | touching | pushing | pulling |
| opening | cooking | talking to | throwing | slicing | driving | riding | parked on |
| driving on | about to hit | kicking | swinging | entering | exiting | enclosing | leaning on |

Scene Graph Detection (SGDet)

| Method | R@20 | R@50 | R@100 | mR@20 | mR@50 | mR@100 | Mean | Inference Time |
|---|---|---|---|---|---|---|---|---|
| IMP [45] | 16.5 | 18.2 | 18.6 | 6.5 | 7.1 | 7.2 | 12.4 | - |
| Motif [46] | 20.0 | 21.7 | 22.0 | 9.1 | 9.6 | 9.7 | 15.4 | - |
| VCTree [47] | 20.6 | 22.1 | 22.5 | 9.7 | 10.2 | 10.2 | 15.9 | 0.132 * |
| GPSNet [48] | 17.8 | 19.6 | 20.1 | 7.0 | 7.5 | 7.7 | 13.3 | - |
| CIM [18,26] | 20.7 | 22.1 | 23.8 | 13.4 | 15.0 | 15.3 | 18.4 | - |
| PSGTR [13] | 28.4 | 34.4 | 36.3 | 16.6 | 20.8 | 22.1 | 26.4 | 0.230 * |
| PSGFormer [13] | 18.0 | 19.6 | 20.1 | 14.8 | 17.0 | 17.6 | 17.6 | 0.175 * |
| RCFrame [16] | 29.7 | 32.4 | 33.3 | 16.5 | 17.6 | 17.9 | 24.6 | 0.155 |
| ADTrans [25] | 26.0 | 29.6 | 30.0 | 26.4 | 29.7 | 30.0 | 28.6 | - |
| PSGAtten [26] | 29.1 | 35.2 | 37.3 | 25.1 | 26.2 | 26.6 | 29.9 | - |
| CAFE [17] | 24.6 | 27.6 | 28.7 | 25.0 | 26.6 | 26.9 | 26.6 | - |
| DGTM [20] | 25.79 | 28.26 | 28.42 | 25.38 | 27.8 | 27.9 | 27.3 | - |
| IntegraPSG (Ours) | 28.7 | 35.1 | 38.7 | 22.3 | 26.3 | 28.6 | 30.0 | 0.183 |
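The Mean values appear to be the arithmetic mean of the six recall columns; for IntegraPSG, (28.7 + 35.1 + 38.7 + 22.3 + 26.3 + 28.6) / 6 = 29.95, listed as 30.0. The sketch below recomputes such entries directly from the table's strings. Half-up rounding to one decimal is an assumption, chosen because it maps 29.95 to the listed 30.0; `Decimal` is used so that binary floating-point error cannot flip a value sitting exactly on a rounding boundary. The name `mean_recall` is illustrative.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import List


def mean_recall(values: List[str]) -> Decimal:
    """Mean of R@20/50/100 and mR@20/50/100, rounded half-up to one decimal."""
    nums = [Decimal(v) for v in values]
    avg = sum(nums) / len(nums)
    return avg.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)


# Rows copied from the SGDet table (R@20, R@50, R@100, mR@20, mR@50, mR@100).
integra = ["28.7", "35.1", "38.7", "22.3", "26.3", "28.6"]
dgtm = ["25.79", "28.26", "28.42", "25.38", "27.8", "27.9"]
integra_mean = mean_recall(integra)
dgtm_mean = mean_recall(dgtm)
```

Because R@K and mR@K are weighted equally, this Mean rewards methods that balance head-class recall and long-tail recall rather than maximizing either one alone.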

Scene Graph Detection (SGDet)

| Vision | Depth | Prior | R@20 | R@50 | mR@20 | mR@50 |
|---|---|---|---|---|---|---|
| ✓ | × | × | 27.0 | 33.6 | 21.0 | 26.3 |
| ✓ | ✓ | × | 28.4 | 34.8 | 22.0 | 25.7 |
| ✓ | × | ✓ | 28.6 | 33.9 | 22.2 | 26.0 |
| ✓ | ✓ | ✓ | 28.7 | 35.1 | 22.3 | 26.3 |

Scene Graph Detection (SGDet)

| Method | R@20 | R@50 | R@100 | mR@20 | mR@50 | mR@100 |
|---|---|---|---|---|---|---|
| Add | 27.2 | 33.4 | 36.9 | 20.5 | 24.4 | 26.6 |
| Concat | 28.7 | 35.1 | 38.7 | 22.3 | 26.3 | 28.6 |
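The Add and Concat rows compare two ways of merging features. Which features are merged at this point, and how the concatenated vector is mapped back to the model width, are not stated here, so the sketch below assumes two equal-width vectors and a hypothetical linear projection `proj` applied after concatenation. The names `fuse_add`, `fuse_concat`, and `SUM_PROJ` are illustrative.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def fuse_add(a: Vector, b: Vector) -> Vector:
    """Element-wise sum: width stays d, and both sources share every channel."""
    if len(a) != len(b):
        raise ValueError("additive fusion needs equal widths")
    return [x + y for x, y in zip(a, b)]


def fuse_concat(a: Vector, b: Vector, proj: Matrix) -> Vector:
    """Concatenate to width len(a) + len(b), then apply a linear map.

    `proj` has one row per output channel; it stands in for a learned
    projection layer (hypothetical, not taken from the paper).
    """
    joint = a + b  # list concatenation
    for row in proj:
        if len(row) != len(joint):
            raise ValueError("projection rows must match the concatenated width")
    return [sum(w * x for w, x in zip(row, joint)) for row in proj]


a = [1.0, 2.0]    # e.g. a visual feature (toy values)
b = [10.0, 20.0]  # e.g. a depth or prior feature (toy values)
# The fixed projection [I I]: concatenation followed by it equals addition.
SUM_PROJ = [[1.0, 0.0, 1.0, 0.0],
            [0.0, 1.0, 0.0, 1.0]]
added = fuse_add(a, b)
concatenated = fuse_concat(a, b, SUM_PROJ)
```

Since addition equals concatenation followed by the fixed map [I I], a learned projection after concatenation can represent additive fusion as a special case while also weighting each source independently. This is one plausible reading of why Concat scores higher above, not an explanation stated in the text.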

Scene Graph Detection (SGDet)

| Method | LLM | R@20 | R@50 | R@100 | mR@20 | mR@50 | mR@100 |
|---|---|---|---|---|---|---|---|
| Re-sampling | × | 26.7 | 33.7 | 37.9 | 18.0 | 21.7 | 23.4 |
| Loss re-weighting | × | 27.7 | 34.8 | 38.5 | 20.8 | 24.9 | 27.2 |
| IntegraPSG (Ours) | ✓ | 28.7 | 35.1 | 38.7 | 22.3 | 26.3 | 28.6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, Y.; Zhang, Q.; Sun, X.; Liu, G. IntegraPSG: Integrating LLM Guidance with Multimodal Feature Fusion for Single-Stage Panoptic Scene Graph Generation. Electronics 2025, 14, 3428. https://doi.org/10.3390/electronics14173428
