1. Introduction

Instance segmentation is one of the core tasks in computer vision. It combines the characteristics of object detection and semantic segmentation: it must not only identify the category of each object but also generate a pixel-level mask for each instance [1]. When deployed in critical domains such as autonomous driving, medical imaging, and remote sensing, instance segmentation is typically subject to stringent requirements on both accuracy and processing speed [2]. These metrics are not merely key indicators of system performance but also critical determinants of operational stability and safety.

Traditional instance segmentation can be traced back to Rother et al. [3], who proposed an interactive graph cut method for extracting foreground objects from images, which suits the foreground extraction step of instance segmentation. Traditional methods mainly rely on classical image processing and hand-crafted feature extraction. However, they face several limitations: insufficient feature representation capability, difficulty in handling complex scenes, low accuracy, and a tendency to generate substantial redundant information. With the advancement of deep learning, significant breakthroughs have been achieved in instance segmentation. Among these, convolutional neural networks (CNNs) have been widely adopted, spawning a series of methods with enhanced performance. Current deep learning instance segmentation methods fall into two categories according to their detection structure: one-stage methods and two-stage methods.

The two-stage approach is represented by Faster R-CNN [4] and Cascade R-CNN [5], and the one-stage approach by the YOLO series [6,7,8,9] and SSD [10]. Two-stage methods first generate candidate regions and then classify and segment each region, which yields higher precision, while one-stage methods skip the candidate box proposal step and perform dense prediction directly on the feature map, which yields higher speed. Mask R-CNN, the most classical two-stage model, was proposed by He et al. [11]. By adding a segmentation mask branch to Faster R-CNN, it outputs a pixel-level mask for each candidate region and realizes multi-task learning of classification, bounding-box regression, and mask prediction. Cascade Mask R-CNN [12] is an instance segmentation model extended from Cascade R-CNN; it achieves instance segmentation by adding a mask branch to the cascade structure. Compared with Mask R-CNN, such extensions achieve higher accuracy but suffer from slower inference. Other notable examples include PointRend, proposed by Kirillov et al. [13], and RefineMask, developed by Zhang et al. [14]. PointRend prioritizes high-uncertainty regions to sharpen the focus on object edges, while RefineMask explicitly targets boundary areas for edge refinement. Both approaches optimize the mask edges generated by Mask R-CNN through cascaded upsampling (from coarse to fine resolution), effectively addressing the subpar edge prediction of the original Mask R-CNN outputs.

One-stage real-time segmentation began to rise in 2018. Feature pyramid enhancement methods focus on improving multi-scale representation, with PANet [15] pioneering bidirectional feature pyramid networks that enhance multi-scale object segmentation capabilities and subsequently being adopted as the neck network in YOLOv4/v5 for improved small object segmentation. Prototype-based approaches revolutionized real-time segmentation efficiency, exemplified by YOLACT [16], the first real-time one-stage instance segmentation framework, which generates masks as linear combinations of shared prototypes, with subsequent frameworks such as EmbedMask [17] and CondInst [18] building upon this foundation.
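The prototype idea can be illustrated with a small sketch. It assumes the commonly described formulation in which an instance mask is the sigmoid of a linear combination of shared prototype maps weighted by per-instance coefficients; the function name, the toy prototypes, and the 0.5 threshold are illustrative choices, not taken from any of the cited implementations.

```python
import math


def assemble_mask(prototypes, coefficients, threshold=0.5):
    """Combine K prototype maps (each H x W) with K per-instance coefficients."""
    if len(prototypes) != len(coefficients):
        raise ValueError("one coefficient is needed per prototype")
    height, width = len(prototypes[0]), len(prototypes[0][0])
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Linear combination of all prototypes at this pixel.
            logit = sum(c * p[y][x] for c, p in zip(coefficients, prototypes))
            prob = 1.0 / (1.0 + math.exp(-logit))  # sigmoid
            row.append(1 if prob > threshold else 0)
        mask.append(row)
    return mask


# Two toy 2 x 2 prototypes shared by every instance (hypothetical values).
prototypes = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 1.0]],
]
# Coefficients predicted for one instance (hypothetical values).
mask = assemble_mask(prototypes, coefficients=[2.0, -2.0])
```

Because the prototypes are computed once per image and only a short coefficient vector is predicted per instance, the per-instance cost stays small, which is what makes this family of methods attractive for real-time use.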

Dynamic kernel prediction methods address mask quality issues, as demonstrated by SOLOv2 [19], which improved upon SOLOv1 [20] by introducing dynamic convolution kernel prediction to solve YOLACT’s mask coarseness problem, while BlendMask [21] further enhanced instance discrimination in complex scenes through attention-guided feature fusion. Contour-based segmentation approaches offer an alternative to traditional mask-level characterization, with PolarMask [22] extending the FCOS detection framework to unify object detection and instance segmentation architectures, and AdaptIS [23] enabling instance mask generation from single-point annotations, closely mimicking manual annotation processes.

Unified architecture methods demonstrate versatility across segmentation tasks, with K-Net [24] handling semantic, instance, and panoptic segmentation simultaneously through learnable dynamic kernels without relying on traditional region proposals. Transformer-based approaches have significantly advanced the field, with Swin Transformer [25] introducing hierarchical window self-attention mechanisms widely adopted in segmentation backbones, Mask DINO [26] enhancing DETR-like models through dynamic matching and mask decoding modules, and YOLO-SegNet, proposed by Yang et al. [27], fusing YOLOv8 with SegFormer for refined spatio-temporal feature expression. Real-time optimization methods balance performance with efficiency, as exemplified by RTMDet-Ins [28], which maintains strong feature representation while achieving excellent real-time performance on COCO benchmarks, and by YOLO-CORE, proposed by Liu et al. [29], which improves boundary description and segmentation precision through contour modeling within the YOLO framework. One-stage instance segmentation methods continue to evolve toward high efficiency, deployment-friendly architectures, end-to-end inference, and decoupled mask generation. Combined with the expanding YOLO ecosystem and the integration of dynamic masks, lightweight backbones, and Transformer modules, this evolution promises more practical solutions for high-performance instance segmentation systems in real-world applications.

In recent years, with the continuous development of autonomous driving technology, the application scenarios of visual perception systems have become increasingly diverse. Real-time feedback in complex street scenes with a large number of dense targets has become a major difficulty. Instance segmentation can separate each target object and delineate its boundaries, which is key to semantic understanding, path planning, and obstacle avoidance. However, although advanced instance segmentation models such as Mask R-CNN have high precision, they are difficult to deploy on resource-constrained platforms such as on-board GPUs or edge devices. Reducing the complexity of the instance segmentation model while preserving segmentation performance is therefore a current research hotspot.

To this end, this paper proposes a dual-path enhanced lightweight scheme based on YOLO11. The contributions of this paper are as follows:

A dual-path (YOLO-SA and YOLO-SD) collaborative lightweight enhancement scheme is proposed. Without changing the main structure or the decoding heads of YOLO11, two enhancement modules are designed to improve segmentation precision while controlling model complexity.

The SimAM attention mechanism is introduced into the C3k2 module (forming C3k2SA). With almost no increase in parameters or computational cost, it effectively enhances the model's perception of small targets and locally salient regions.

The DySample and SPDConv modules are combined to optimize the upsampling and convolution paths. This improves the accuracy of feature reconstruction without introducing large-scale structures, suppresses interference from redundant information, and improves the overall segmentation performance.

Multiple sets of ablation and comparative experiments are carried out. The empirical results show that YOLO-SA improves segmentation performance at near-zero additional computational cost, while YOLO-SD maintains excellent segmentation precision with a controllable parameter overhead.

The dual-path enhancement models achieve a good trade-off between model size and precision and provide a practical reference solution for single-stage instance segmentation in edge deployment or real-time applications.

The remainder of this paper is organized as follows. Section 2 introduces the network structure of YOLO11 and the basic principles of the SimAM, SPD-Conv, and DySample modules. Section 3 describes the fundamental framework and specific procedures of the proposed method. Comparative experimental results on the datasets and their analysis are reported in Section 4. Finally, Section 5 summarizes the algorithm and points to further work.

3. Architecture

To balance segmentation precision against the difficulty of deploying instance segmentation models in autonomous driving, we choose YOLO11 as the base model. While preserving the basic structure of the model, two lightweight and efficient enhancements, SimAM and DySample + SPDConv, are added to improve its performance.

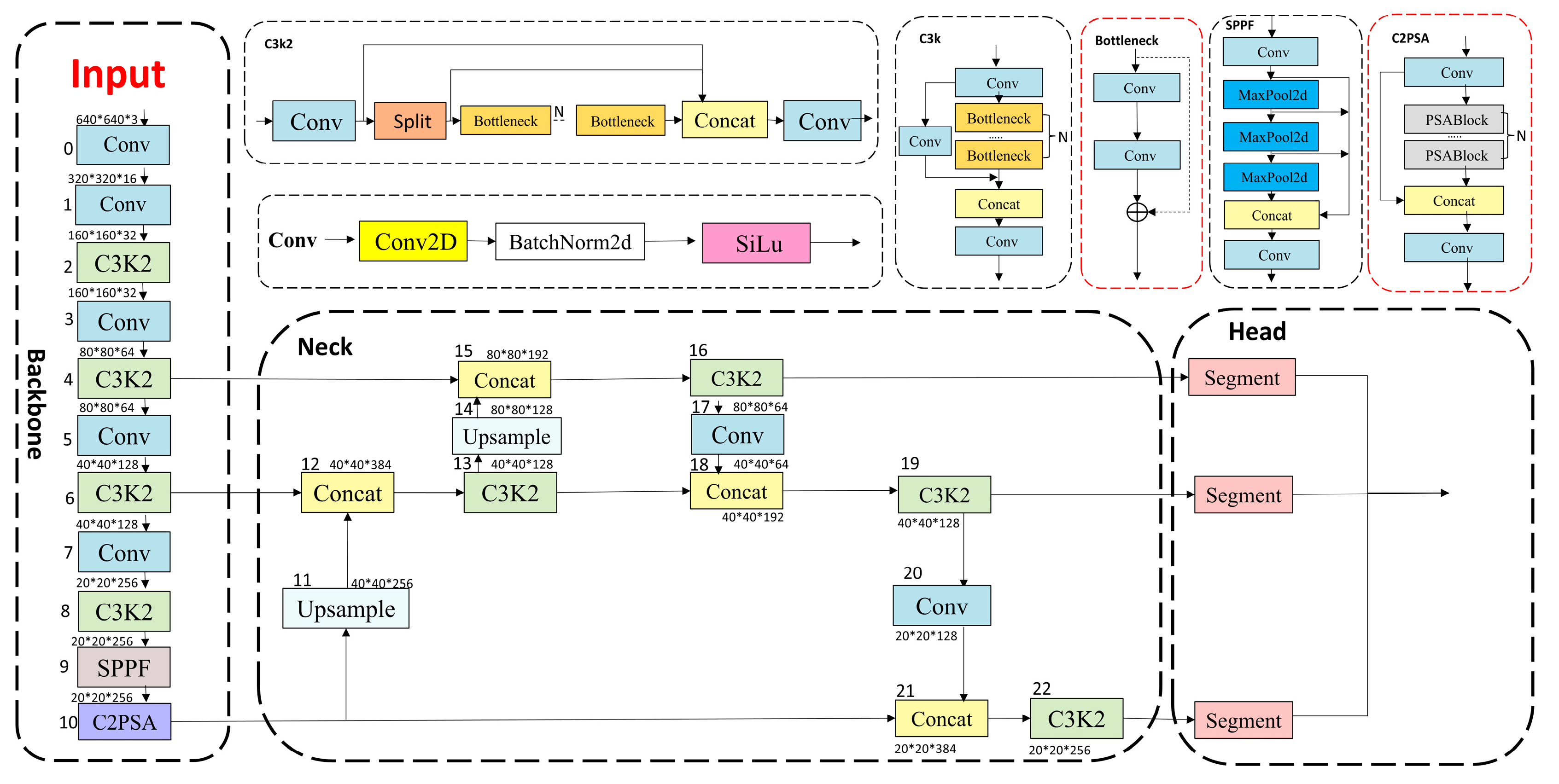

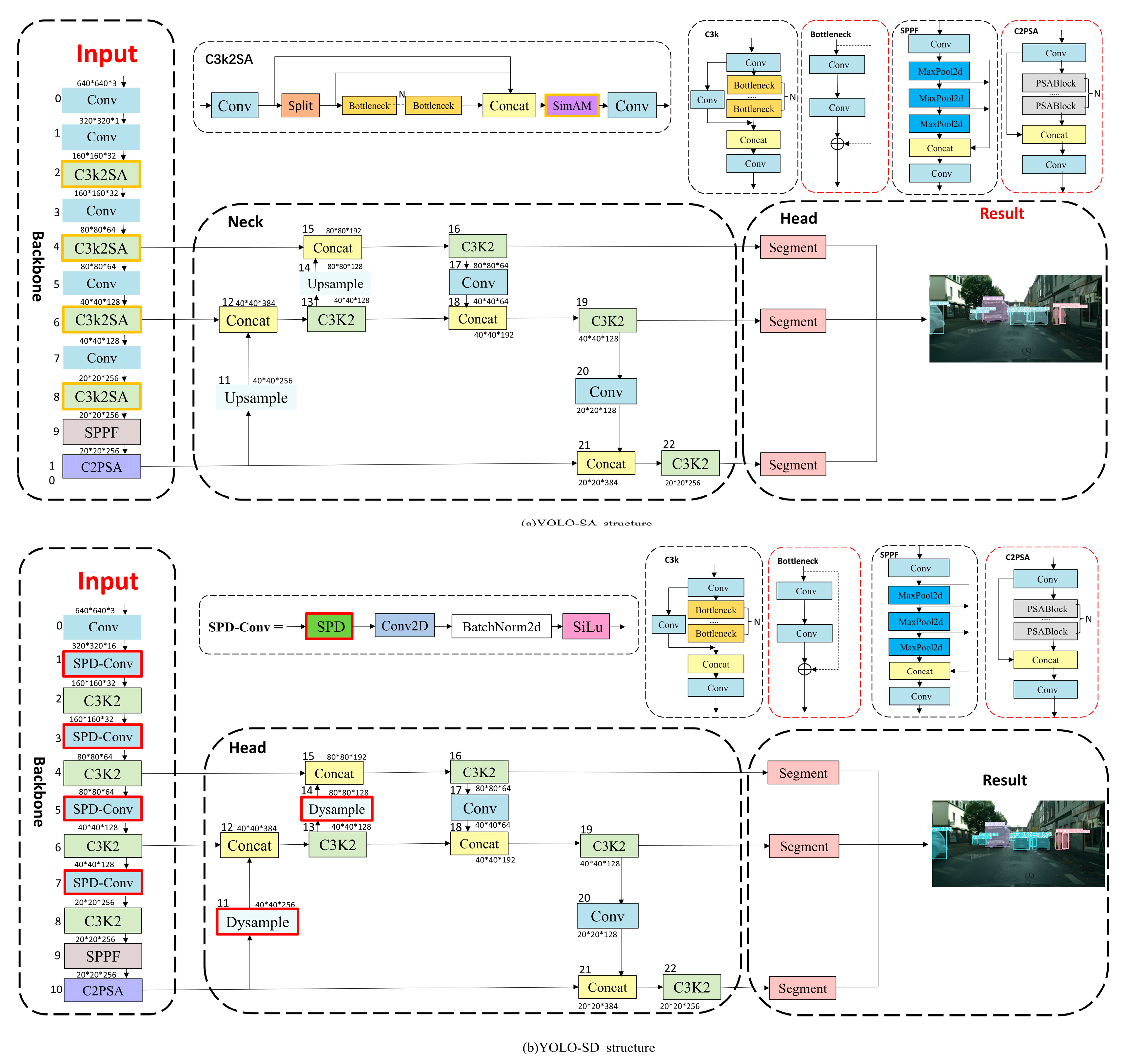

Figure 5 illustrates the YOLO-SA and YOLO-SD architecture.

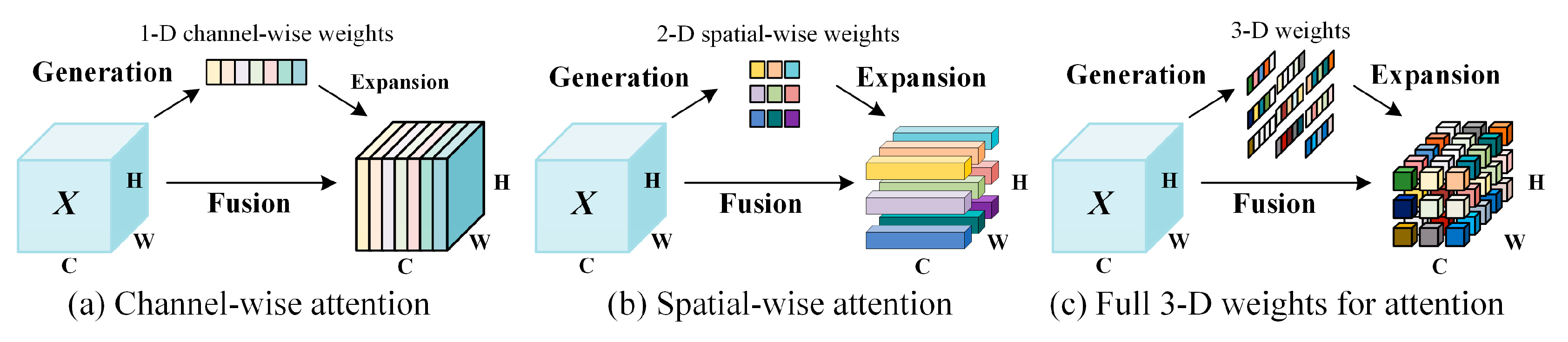

The C3k2 structure is widely used as a feature extraction module in YOLO11. To strengthen the model's response to target edge regions and small target regions, a lightweight attention mechanism, SimAM (Simple Attention Module) [31], is introduced into the original C3k2 module to construct the C3k2SA module, as illustrated in Figure 5a. SimAM is a parameter-free attention mechanism that weights each spatial position according to neuron activity, which strengthens the response at important positions and improves the model's ability to represent salient visual regions. SimAM adds no extra weights or convolution operations and can directly replace the original activation function at the corresponding position without affecting the original structure. Applied to the C2f output, SimAM re-weights the feature information after the split-and-merge fusion, so that the model attends to useful information earlier, a large amount of useless or redundant information is kept out of the early layers, and features from different levels are fused in a more targeted way, which improves model performance. C3k2SA adds almost no model parameters, yet it better exploits the model's selective response to key features. The benefit is most visible in complex backgrounds and boundary regions, where the mask quality is significantly higher than that of the other models.
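The weighting described above can be sketched for a single channel. This is a minimal illustration, assuming the closed-form energy commonly associated with SimAM [31], in which each position is scaled by a sigmoid of its normalized squared deviation from the channel mean; the function name and the regularizer value `e_lambda` are assumptions, and the sketch is not the C3k2SA implementation used in this paper.

```python
import math


def simam_channel(channel, e_lambda=1e-4):
    """Re-weight one H x W channel (list of rows) without learnable parameters."""
    values = [v for row in channel for v in row]
    if len(values) < 2:
        raise ValueError("SimAM needs at least two positions per channel")
    n = len(values) - 1                      # the other positions in the channel
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / n
    weighted = []
    for row in channel:
        new_row = []
        for v in row:
            # Positions that deviate more from the mean get a larger weight.
            inv_energy = (v - mean) ** 2 / (4.0 * (variance + e_lambda)) + 0.5
            attention = 1.0 / (1.0 + math.exp(-inv_energy))  # sigmoid
            new_row.append(v * attention)
        weighted.append(new_row)
    return weighted


channel = [[1.0, 1.0], [1.0, 5.0]]           # one distinctive activation
out = simam_channel(channel)
```

In this toy channel the distinctive activation (5.0) receives a larger attention weight than the three uniform ones, and no weights are stored anywhere, which is consistent with the near-zero parameter increase reported for C3k2SA.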

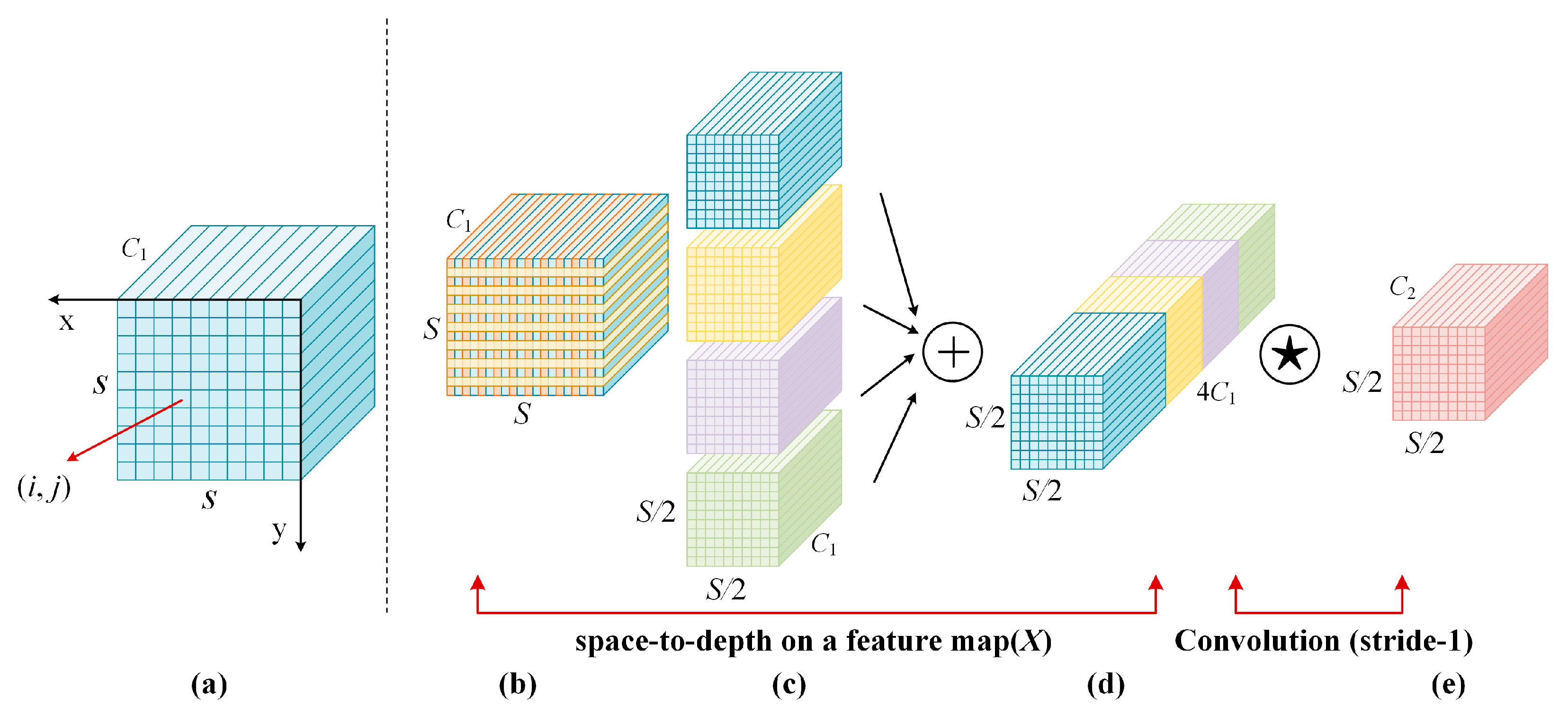

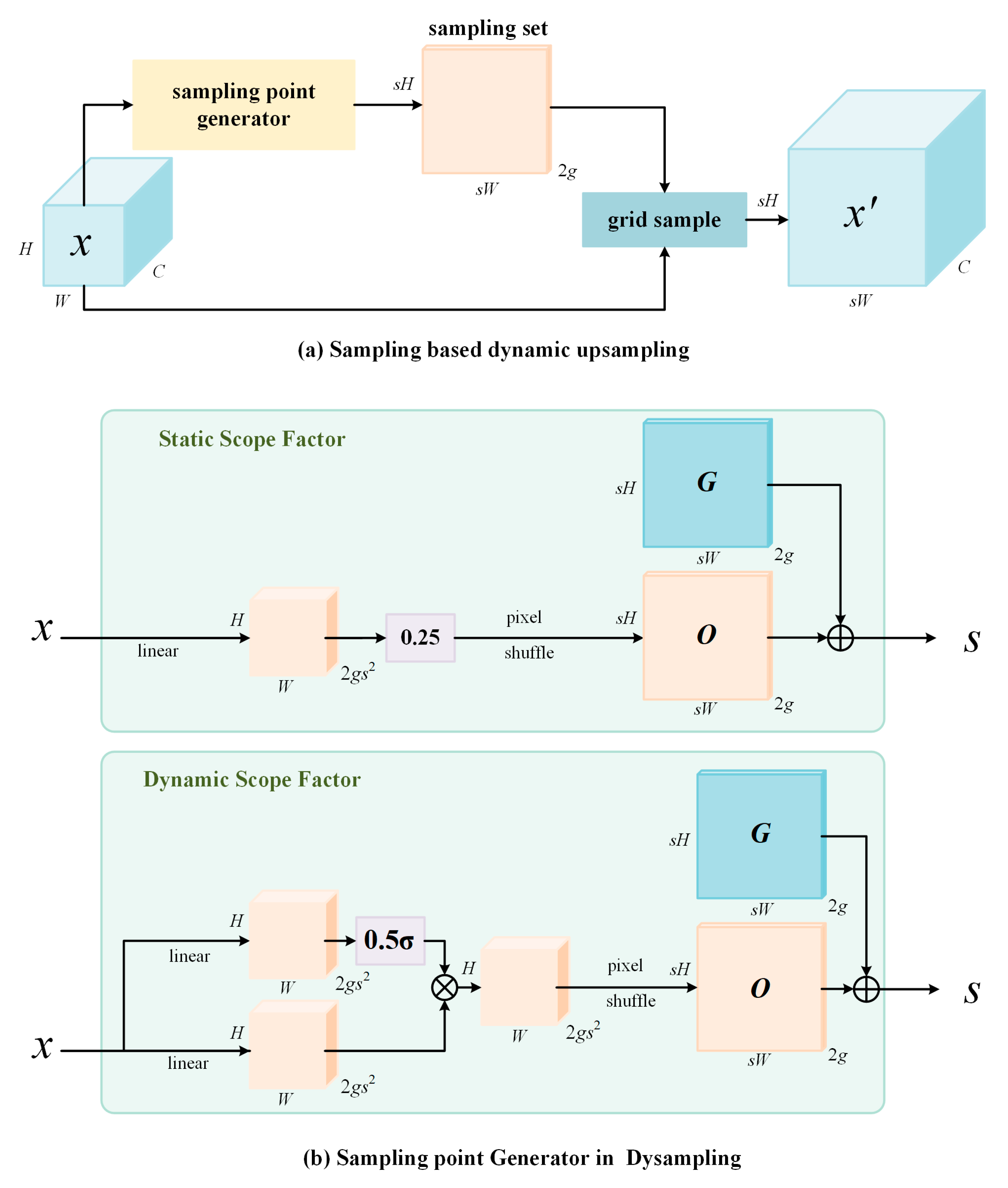

The original YOLO11 structure uses nearest-neighbor interpolation for upsampling. Although this method is computationally cheap, it cannot accurately recover the detailed and semantic information of the features, which degrades the model in dense prediction tasks. YOLO-SD replaces the four standard convolutions (Conv) of each stage of the original backbone network with the lightweight, spatially aware convolution SPDConv to improve parameter utilization and spatial perception in the feature extraction stage. Guided by local spatial information, SPDConv performs convolution effectively, avoids many repeated convolution operations, and models complex targets at low cost across the P2–P5 scales. The C3k2 module, SPPF, and C2PSA in the original structure of YOLO11 are retained to preserve the ability to express deep semantic information. Moreover, the original Upsample operation in the neck is replaced with DySample upsampling, which reconstructs features using local dynamic weights. This method effectively alleviates the spatial information loss introduced by interpolation and is better suited to recovering small targets, as shown in Figure 5b. The two DySample modules act on the two upsampling paths P5→P4 and P4→P3, respectively, and cross-layer feature fusion is then completed through Concat. The three-scale output mechanism of YOLO11 is retained, so the instance segmentation masks are finally output on the three scales P3, P4, and P5. This keeps the same effective layer depth as the original backbone. In this way, the model size can be compressed and the perception of small targets in dense scenes can be enhanced.
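The text does not spell out the internals of SPDConv. The sketch below assumes the usual space-to-depth reading of SPD-Conv, in which a strided convolution is replaced by a lossless rearrangement of each 2 × 2 spatial block into channels, followed by a non-strided convolution (omitted here). The function name and the channel ordering are illustrative choices.

```python
def space_to_depth(feature, scale=2):
    """Rearrange C x H x W (nested lists) into (C*scale^2) x H/scale x W/scale."""
    height, width = len(feature[0]), len(feature[0][0])
    if height % scale or width % scale:
        raise ValueError("height and width must be divisible by scale")
    out = []
    for col_off in range(scale):
        for row_off in range(scale):
            for channel in feature:
                # Keep every scale-th row and column, starting at the offsets.
                sub = [row[col_off::scale] for row in channel[row_off::scale]]
                out.append(sub)
    return out


# One 4 x 4 channel holding the values 1..16.
feature = [[[1, 2, 3, 4],
            [5, 6, 7, 8],
            [9, 10, 11, 12],
            [13, 14, 15, 16]]]
packed = space_to_depth(feature)   # 4 channels of size 2 x 2
```

Unlike a stride-2 convolution or pooling, this downsampling discards no values: all 16 inputs survive in the four output channels, which is the property that makes the approach attractive for small targets.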

4. Experimental Results

In this study, YOLO11l is selected as the baseline architecture because it achieves the best precision among the YOLO11 variants during initial training, which makes it a suitable candidate for demonstrating the effectiveness of the proposed enhancement modules. Although our method is compatible with other versions such as YOLO11s and YOLO11m, the large variant provides a better foundation for performance improvement analysis due to its superior baseline accuracy.

4.1. Dataset

The Cityscapes dataset [35] holds significant value for computer vision research. Primarily designed for semantic segmentation, it also supports the development of application systems such as autonomous driving and ADAS. The dataset comprises 5000 images captured from a driver’s perspective in urban driving scenarios, divided into 2975 training images, 500 validation images, and 1525 test images. These three subsets are densely annotated at the pixel level (fine annotations) and further subdivided into eight categories at the instance level, including specific objects such as pedestrians and vehicles. Cityscapes data was collected from street views of approximately 50 cities in Germany and neighboring European countries across three seasons (spring, summer, and autumn). The collection primarily covers large-scale, road-centered urban scenes, encompassing traffic flow on ordinary urban roads, street-side restaurants and shops, and road infrastructure such as trees and transit stops. Each image has a resolution of 2048 × 1024, with annotations for up to 19 object classes (with labels covering over half of each image area) and 8 classes with precise instance-level annotations. Cityscapes provides two annotation qualities: “fine” and “coarse.” The fine subset includes 5000 meticulously annotated images, while the coarse subset combines these 5000 fine annotations with 20,000 coarsely annotated images.

The COCO dataset is a large-scale, comprehensive resource for object detection, segmentation, and captioning tasks [36]. Focused on scene understanding, it draws primarily from complex daily-life scenarios, with objects in images annotated via accurate segmentation. The dataset includes 91 object categories, 328,000 images, and 2.5 million labels. As one of the largest segmentation datasets to date, it provides annotations for 80 categories across over 330,000 images, of which 200,000 are labeled. The total number of individual instances in the dataset exceeds 1.5 million.

In this work, although all experiments are conducted on the Cityscapes dataset, the backbone networks of all models are initialized with pretrained weights from COCO2017. This leverages the diverse annotations in COCO to improve generalization and stability during fine-tuning.

4.2. Training Details of Model

The experiments are carried out in an environment with Python 3.8, Torch 2.0.0, CUDA 11.8, and Torchvision 0.15.1. The machine was equipped with an Intel Core i9-12900K CPU (Intel, Santa Clara, CA, USA) with 16 GB of RAM and an NVIDIA GeForce RTX 3090 Ti GPU (NVIDIA, Santa Clara, CA, USA) with 24 GB of video memory. The equipment was provided by the laboratory of Jiangsu University of Science and Technology, China. The specific parameters are shown in Table 1.

All experiments use a transfer learning strategy: model weights pre-trained on a large-scale dataset serve as initialization and are then fine-tuned on the target task. To improve training stability and efficiency, model parameters are initialized with pre-trained weights obtained from the COCO2017 dataset. This strategy helps the model converge quickly in the early stage and also enhances its generalization ability, showing stronger robustness on non-ideal data such as small samples and pseudo-labels. All models are trained under a unified training strategy to ensure fair and comparable results. Training images are uniformly scaled to a resolution of 640 × 640, the optimizer is AdamW, the batch size is 16, and the maximum number of training epochs is 100. Early stopping is not enabled; the full schedule is run so that the model converges fully. Automatic mixed precision (AMP) training was enabled in all experiments to improve computational efficiency. Apart from loading the COCO pre-trained weights into the backbone, all other training parameters were kept consistent across experiments.
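For reference, the stated settings can be collected in one place. The dictionary below is a hypothetical summary: the key names are illustrative and do not correspond to any particular training framework, and only values given in the text are filled in.

```python
# Hypothetical summary of the training settings stated in this section.
# Key names are illustrative, not the arguments of a specific library.
train_config = {
    "pretrained_weights": "COCO2017",  # initialization source for all models
    "image_size": (640, 640),          # training images are rescaled to this size
    "optimizer": "AdamW",
    "batch_size": 16,
    "max_epochs": 100,                 # full schedule is always run
    "early_stopping": False,
    "mixed_precision": True,           # AMP enabled in all experiments
}

for name, value in train_config.items():
    print(f"{name}: {value}")
```

Keeping these values identical across the baseline, YOLO-SA, and YOLO-SD runs is what allows the differences in Tables 2 to 4 to be attributed to the architectural changes rather than to the training recipe.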

4.3. Evaluation Indicators of the Model

Four evaluation metrics are adopted to compare and evaluate different segmentation models: mean average precision (mAP), FLOPs, model parameters, and FPS. Among them, mAP@0.5 is the mean average precision at an intersection over union (IoU) threshold of 0.5 and measures the basic segmentation ability of the model. In contrast, mAP@0.5:0.95 averages over IoU thresholds from 0.5 to 0.95 (step size 0.05); it reflects more strictly how accurately the model fits target boundaries and is the more authoritative evaluation standard in current instance segmentation work. The calculation of mAP relies on two basic concepts: precision and recall. Precision is the fraction of samples predicted as positive that are truly positive, defined as follows:

$$P = \frac{TP}{TP + FP}$$

Recall is the fraction of all true positive samples that are correctly predicted by the model, defined as:

$$R = \frac{TP}{TP + FN}$$

On this basis, the precision and recall curves under different IoU thresholds are calculated, and the average precision ($AP$) can be obtained. The integral form is defined as follows:

$$AP = \int_{0}^{1} P(R)\, dR$$

The mean average precision ($mAP$) is the average of the APs of all categories, expressed as:

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where $TP$ (True Positive) refers to the number of correctly predicted positive instances; $FP$ (False Positive) denotes the number of negative instances incorrectly predicted as positive; $FN$ (False Negative) represents the number of positive instances incorrectly predicted as negative; $P(R)$ stands for the precision value at a specific recall level $R$; $AP$ is the area under the precision–recall curve for a single class; $N$ indicates the total number of object categories; and $mAP$ (Mean Average Precision) refers to the average of $AP$ across all classes.
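The definitions above can be made concrete with a short sketch. It assumes that predictions for one class have already been matched to ground truth at a fixed IoU threshold and sorted by descending confidence, and it uses the common monotone precision envelope before integrating; the function names and this interpolation choice are assumptions, not necessarily the exact evaluation protocol used in the experiments.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def average_precision(hits, num_ground_truth):
    """Area under the precision-recall curve for one class at one IoU threshold.

    hits: booleans for predictions sorted by descending confidence,
          True when the prediction matches a ground-truth instance.
    """
    if num_ground_truth == 0:
        return 0.0
    tp = fp = 0
    precisions, recalls = [], []
    for hit in hits:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Make precision non-increasing in recall (precision envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum precision over each increase in recall.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class):
    """mAP: mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# IoU thresholds averaged by mAP@0.5:0.95 (0.50, 0.55, ..., 0.95).
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

ap = average_precision([True, False, True], num_ground_truth=2)
```

For mAP@0.5:0.95, the same per-class computation is repeated at each value in `IOU_THRESHOLDS` and the results are averaged, so a mask must overlap its target tightly to count as a hit at the stricter thresholds.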

FLOPs quantify the computational resources required by the model in the inference stage: the smaller the FLOPs, the higher the computational efficiency. The parameter count reflects the storage requirements and size of the model, which is particularly critical for edge device deployment.

4.4. Experiment Results and Analysis

4.4.1. Experiments on the Improved Models

The experimental results are presented in Table 2. The YOLO-SA model achieves 0.410 on the mAP(mask)@0.5 indicator, a relative improvement of 2.2% over the baseline model's 0.401. The model also achieves 0.208 under the stricter mAP(mask)@0.5:0.95 criterion, which is 2.0% higher than the baseline's 0.204. The YOLO-SD model also shows competitive performance. It reaches 0.406 on mAP(mask)@0.5, which is 1.2% higher than the baseline and slightly lower than YOLO-SA. However, on the comprehensive mAP(mask)@0.5:0.95 index, YOLO-SD and YOLO-SA reach the same level of 0.208, indicating that the two models have stable detection and segmentation capabilities across IoU thresholds. The floating-point operations (FLOPs) of the YOLO-SA model are 142.5 G, only 0.4% higher than the baseline model’s 142.0 G, an acceptable increase in computational overhead. The parameter count remains at 27.6 M, almost identical to the baseline model, indicating that the SimAM attention mechanism does not significantly increase the storage requirements of the model. In contrast, the YOLO-SD model performs better in efficiency optimization. Its FLOPs are reduced to 140.5 G, a decrease of 1.1% compared to the baseline, and its parameter count is reduced to 26.0 M, a decrease of 5.8%. This shows that the design of YOLO-SD not only maintains accuracy but also effectively reduces the computational complexity and storage requirements of the model, making actual deployment more feasible.
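The percentages quoted above are relative changes with respect to the baseline, not absolute differences in mAP. The short sketch below recomputes them from the values stated in the text; the helper name is illustrative.

```python
def relative_change_percent(baseline, value):
    """Relative change of value with respect to baseline, in percent."""
    return 100.0 * (value - baseline) / baseline


# (baseline, improved) pairs taken from the numbers quoted in the text.
reported = {
    "YOLO-SA mAP@0.5": (0.401, 0.410),
    "YOLO-SA mAP@0.5:0.95": (0.204, 0.208),
    "YOLO-SD mAP@0.5": (0.401, 0.406),
    "YOLO-SA FLOPs (G)": (142.0, 142.5),
    "YOLO-SD FLOPs (G)": (142.0, 140.5),
    "YOLO-SD params (M)": (27.6, 26.0),
}

for name, (baseline, value) in reported.items():
    print(f"{name}: {relative_change_percent(baseline, value):+.1f}%")
```

Read this way, a gain from 0.401 to 0.410 is 0.9 percentage points of mAP, or about 2.2% relative to the baseline.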

Considering both accuracy and efficiency, both improved schemes outperform the baseline model YOLO11l-seg. YOLO-SA balances accuracy and speed and obtains a stable gain without major modification of the model structure. YOLO-SD is better suited to lightweight deployment: although its accuracy gain is modest, it achieves good operator-level savings. Combining the two schemes requires further optimization; adding feature alignment and designing a more reasonable fusion mechanism leave considerable room for improvement.

4.4.2. Comparison with Other Models

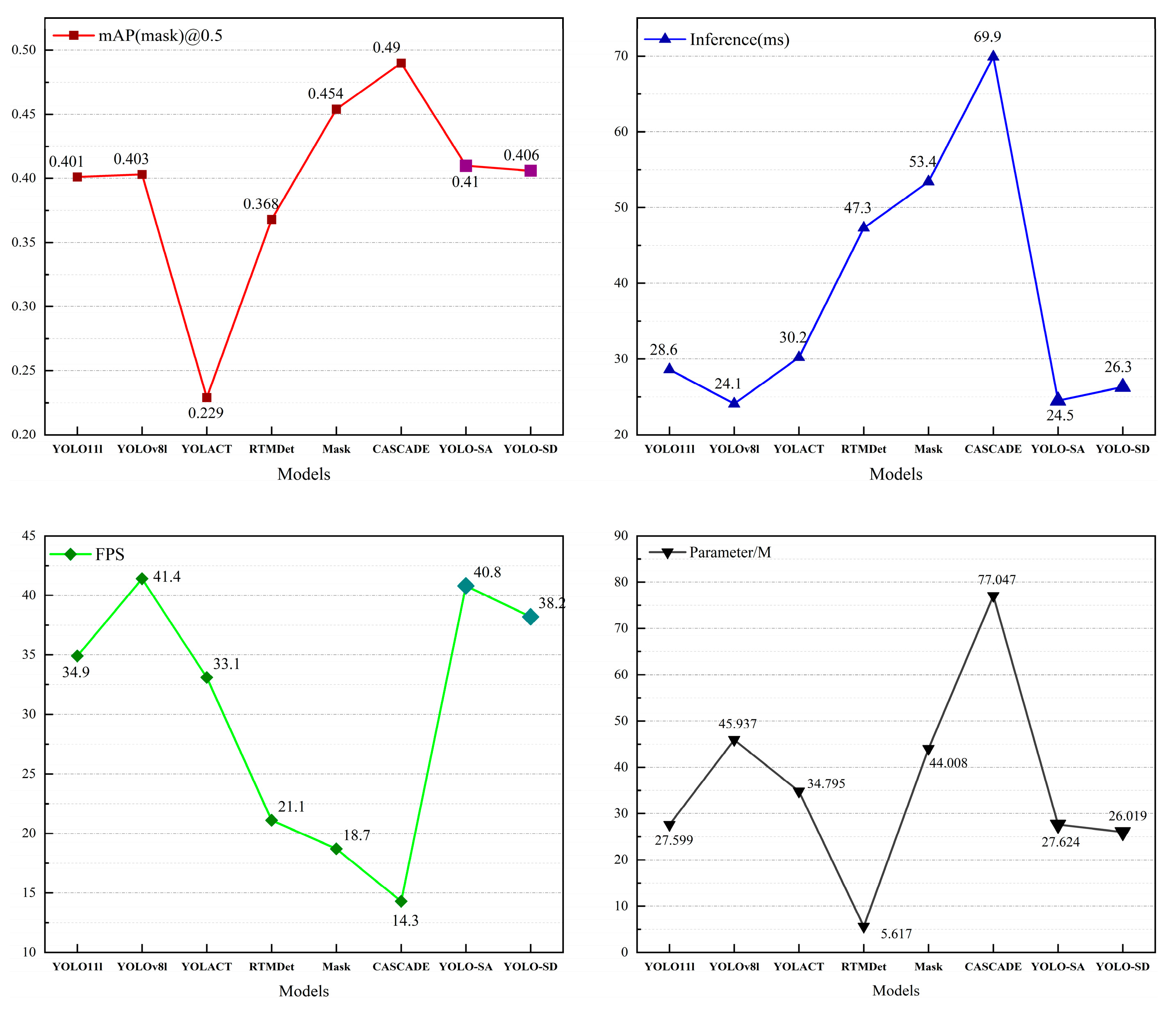

To further validate the effectiveness of the proposed method, this study compares the improved YOLO11 model with state-of-the-art instance segmentation algorithms, including YOLOv8l, YOLACT, RTMDet-ins-tiny, Mask R-CNN, Cascade Mask R-CNN, and the original YOLO11 baseline. The evaluation metrics include mAP@0.5, inference time, FPS, FLOPs, and model parameters, with detailed results presented in Table 3 and Figure 6.

The comparative results reveal that YOLO-SA achieves the highest segmentation accuracy among the one-stage models tested, with a mean average precision of 0.410, an improvement of approximately 2.24% over YOLO11l. YOLO-SD also performs favorably, attaining a mean average precision of 0.406, which is 1.25% higher than the baseline. In terms of inference speed, YOLO-SA reduces latency from 28.6 ms to 24.5 ms, a 14.3% reduction, while YOLO-SD achieves a latency of 26.3 ms, an 8% reduction. Notably, YOLO-SD has the smallest parameter count and the lowest computational cost among the YOLO-based models. Compared to YOLOv8l, YOLO-SA improves accuracy by 1.74%, reduces parameters by about 40%, and lowers FLOPs by about 35%. Although the segmentation accuracy of YOLO-SD is slightly lower than that of YOLO-SA, it is still higher than that of YOLOv8l. Importantly, compared with the 45.94 M parameters of YOLOv8l, YOLO-SD requires only 26.02 M parameters, and its computational cost is also lower: 140.5 GFLOPs versus 220.7 GFLOPs. These results highlight the advantages of YOLO-SD in efficiency and accuracy, making it a more balanced and practical model for real-time deployment. Among models designed for real-time scenarios, YOLACT and RTMDet-ins-tiny achieve mean average precisions of only 0.229 and 0.368, respectively, significantly lower than the proposed models. In contrast, while Mask R-CNN and Cascade Mask R-CNN attain higher accuracies of 0.454 and 0.490, this comes with a substantial increase in model size and computational cost. These two models also exhibit much longer inference times (53.4 ms and 69.9 ms) and parameter scales exceeding 44 M and 77 M, respectively.
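The latency figures can be related to throughput with a small sketch. It assumes single-image inference, so that FPS is simply 1000 divided by the latency in milliseconds, and it treats 28.6 ms as the YOLO11l baseline latency; the FPS values in Table 3 may differ if they were measured under other conditions. The helper names are illustrative.

```python
def fps_from_latency(latency_ms):
    """Frames per second for single-image inference (assumed batch size 1)."""
    return 1000.0 / latency_ms


def latency_reduction_percent(baseline_ms, new_ms):
    """Reduction in latency relative to the baseline, in percent."""
    return 100.0 * (baseline_ms - new_ms) / baseline_ms


# Latencies quoted in the text, in milliseconds.
latencies_ms = {"YOLO11l": 28.6, "YOLO-SA": 24.5, "YOLO-SD": 26.3}

baseline = latencies_ms["YOLO11l"]
for name, latency in latencies_ms.items():
    reduction = latency_reduction_percent(baseline, latency)
    print(f"{name}: {fps_from_latency(latency):.1f} FPS, "
          f"{reduction:.1f}% lower latency than baseline")
```

Under this assumption, all three YOLO11-based models run above 30 FPS, while the 53.4 ms and 69.9 ms latencies of the two-stage models correspond to fewer than 20 FPS.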

To provide a more intuitive performance comparison, line plots are visualized for key evaluation metrics including accuracy (mAP), inference latency, FPS, and parameter count. These visualizations clearly demonstrate that YOLO-SA and YOLO-SD consistently achieve strong segmentation accuracy while maintaining low latency, high throughput, and compact model size. Compared to mainstream real-time and high-accuracy models, our proposed methods offer a favorable trade-off between precision and efficiency, making them well-suited for practical deployment.

Figure 7 shows the instance segmentation results of multiple models on complex urban street scenes in the Cityscapes dataset. To show the performance differences between models more clearly, yellow arrows are added to the figure to point out common types of errors such as instance fragmentation and missed detection. In the predictions of YOLACT, RTMDet-ins-tiny, and Mask R-CNN, some objects (such as vehicles or pedestrians) are wrongly segmented into multiple discontinuous masks. This shows that these models have difficulty maintaining the integrity of instances when dealing with occluded or dense targets. In the detection results of YOLOv8 and YOLO11, redundant detection boxes appear in the yellow box area. The model sometimes generates two repeated boxes for the same object, and this redundancy not only affects the interpretability of the model but also reduces the efficiency of subsequent processing.

In contrast, YOLO-SA and YOLO-SD show more stable segmentation performance. They produce a single coherent mask for each object, avoid duplicate boxes, and maintain high accuracy, especially in crowded and occluded scenes.

4.4.3. Ablation Experiment

To verify the effectiveness of individual components and their synergistic performance, we conducted ablation experiments by incrementally incorporating SimAM, DySample, and SPDConv into the baseline model. The resulting performance metrics (based on the baseline YOLO11l) are summarized in Table 4.

As presented in Table 4, integrating SimAM alone elevates the mask mAP@0.5 to 0.410, demonstrating that this module enhances the model’s ability to focus on critical features and precisely localize object boundaries. Adding DySample independently yields a mask mAP@0.5 of 0.405, indicating its utility in improving segmentation accuracy with minimal additional computational overhead. In contrast, introducing SPDConv alone leads to a noticeable drop in mask mAP@0.5 to 0.365, suggesting that SPDConv may compromise the model’s capacity to capture local edge information during feature extraction, resulting in accuracy degradation.

When combining DySample and SPDConv (YOLO11 + DS + SPD), the mask mAP@0.5 rebounds to 0.406, with parameters reduced to 26.0 M (from 27.5 M) and FLOPs slightly lowered to 140.5 G, confirming that their joint application achieves a degree of lightweight optimization without sacrificing accuracy. However, integrating all three modules (SimAM, DySample, and SPDConv) does not yield additive performance gains; instead, the mask mAP@0.5 drops to 0.405, indicating suboptimal synergy between the components. This suggests that further refinement of the fusion strategy is required to leverage the strengths of each module effectively.