Research on Knowledge Graph Construction and Application for Online Emergency Load Transfer in Power Systems

Abstract

1. Introduction

2. Fault Disposal Plan Information Extraction Technique

2.1. Text Content of Power Grid Fault Disposal Plan

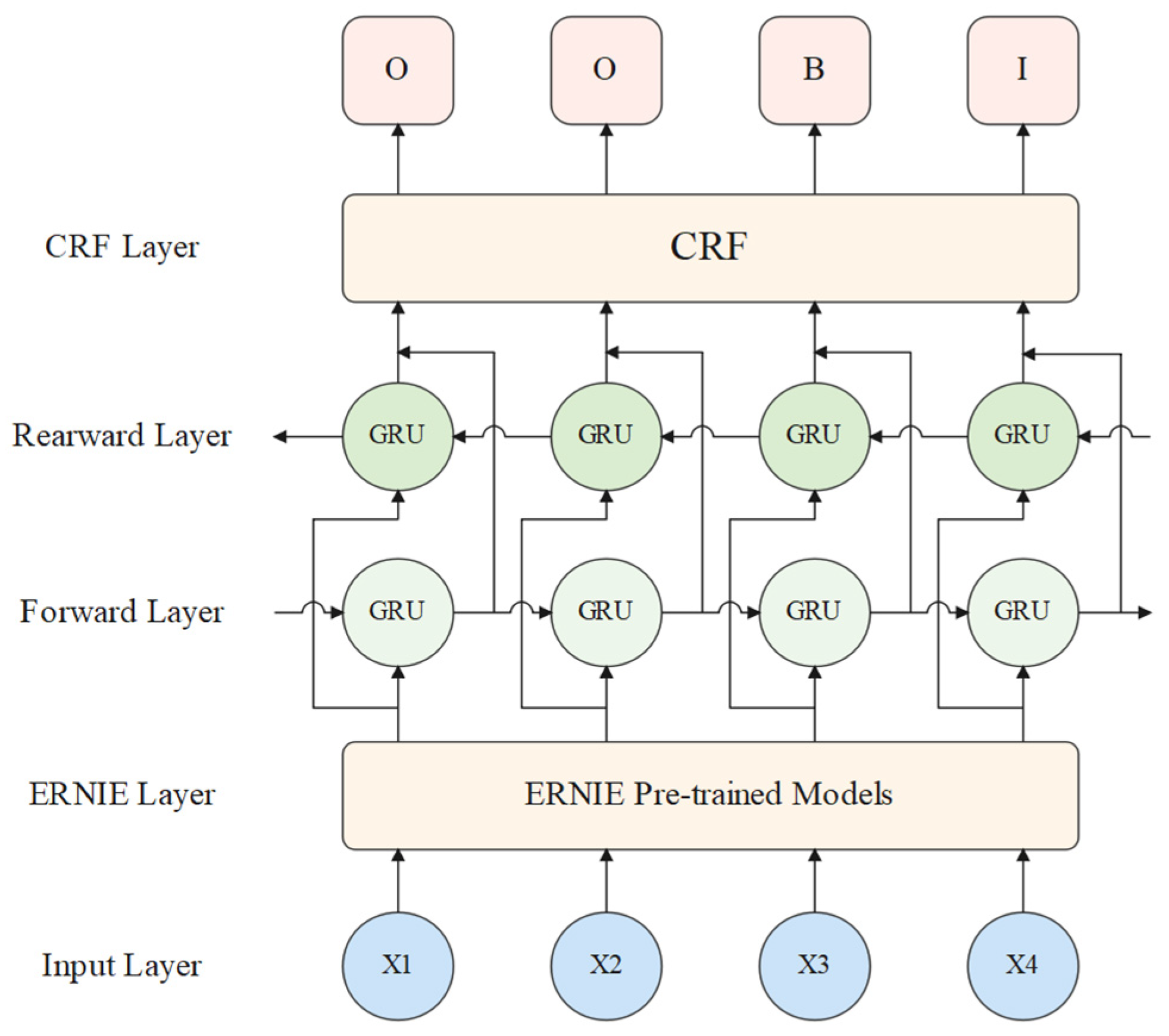

2.2. ERNIE-BiGRU-CRF Named Entity Recognition Model

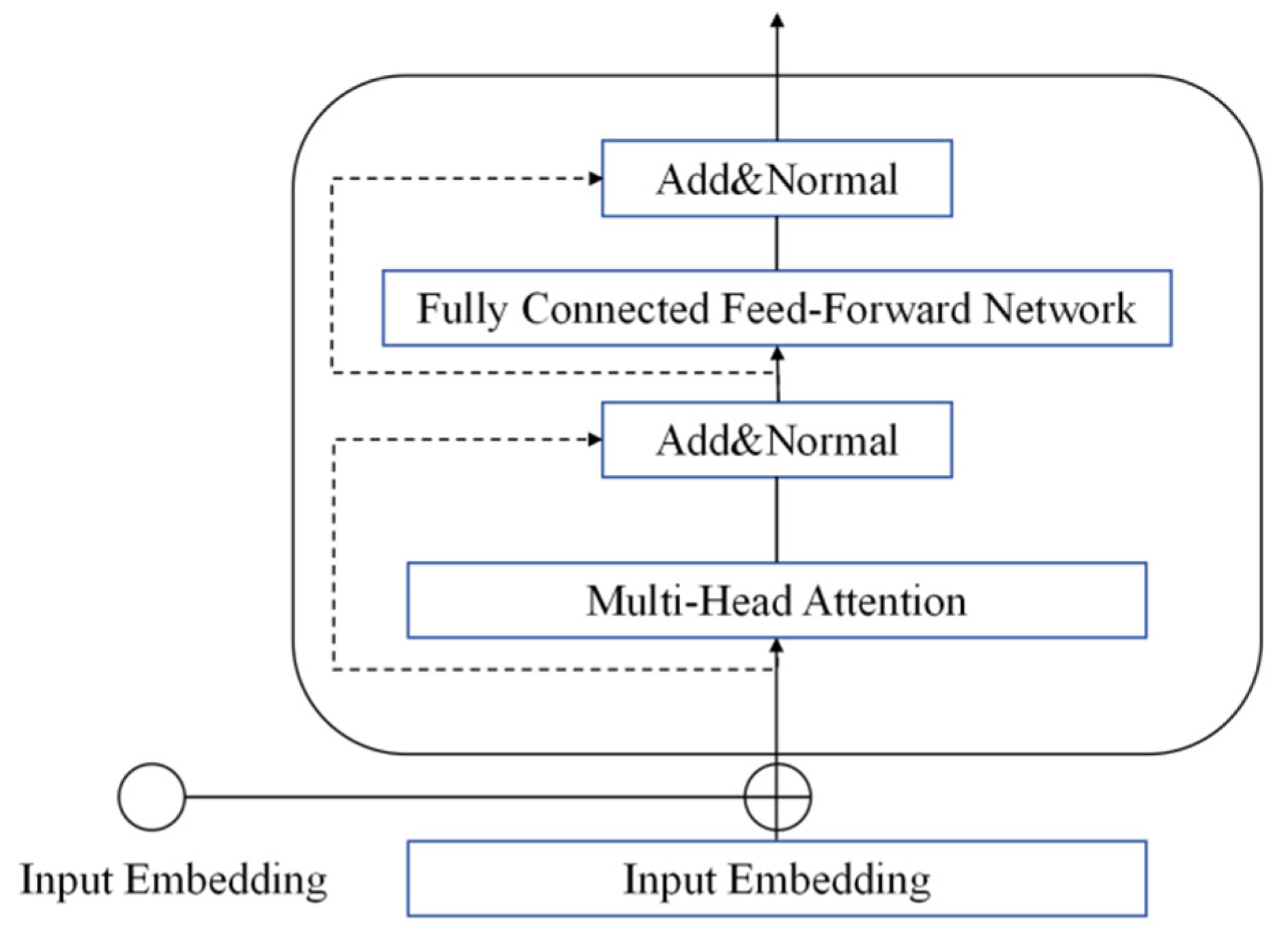

2.2.1. ERNIE Pre-Training Model

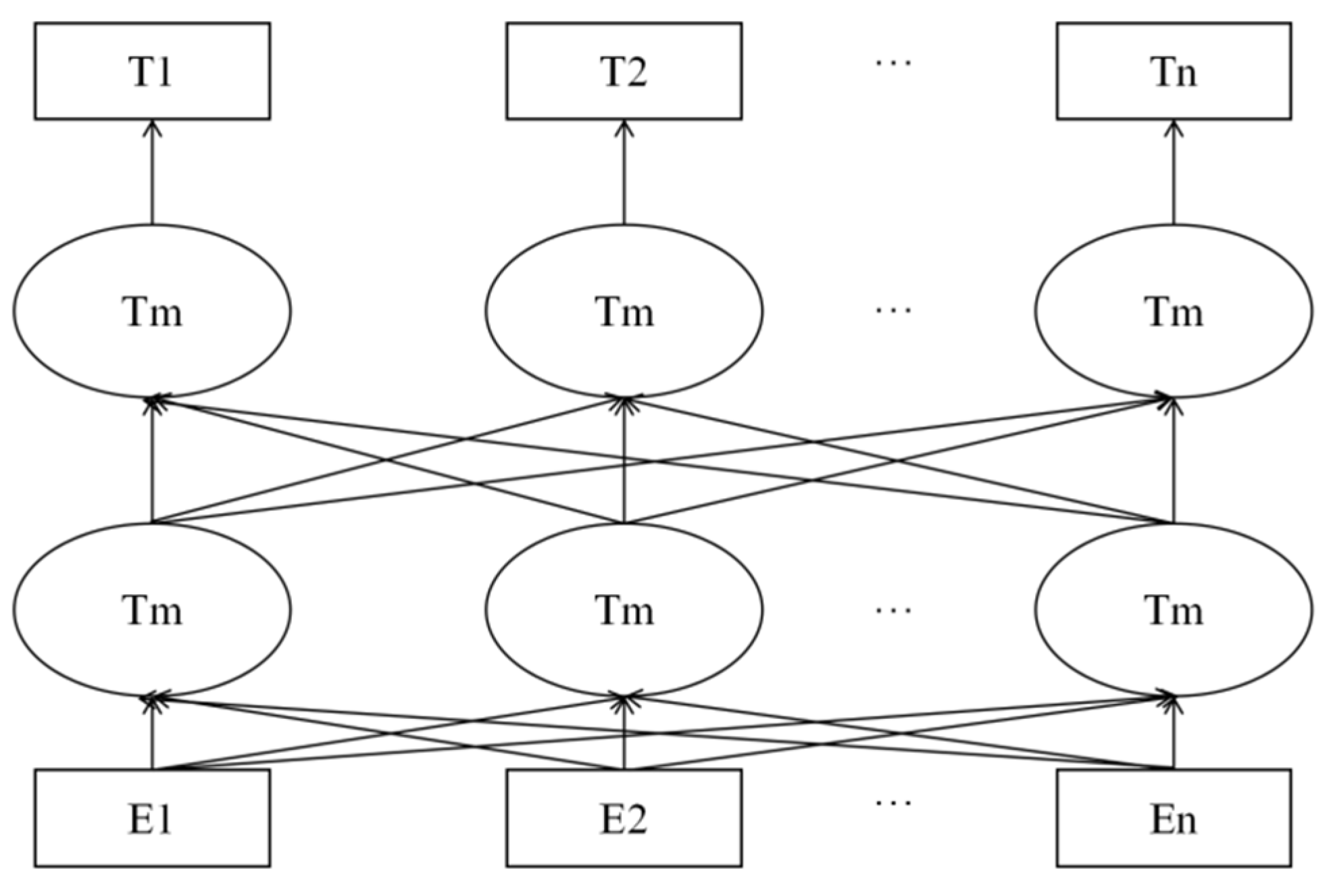

2.2.2. BiGRU Model

2.2.3. CRF Layer

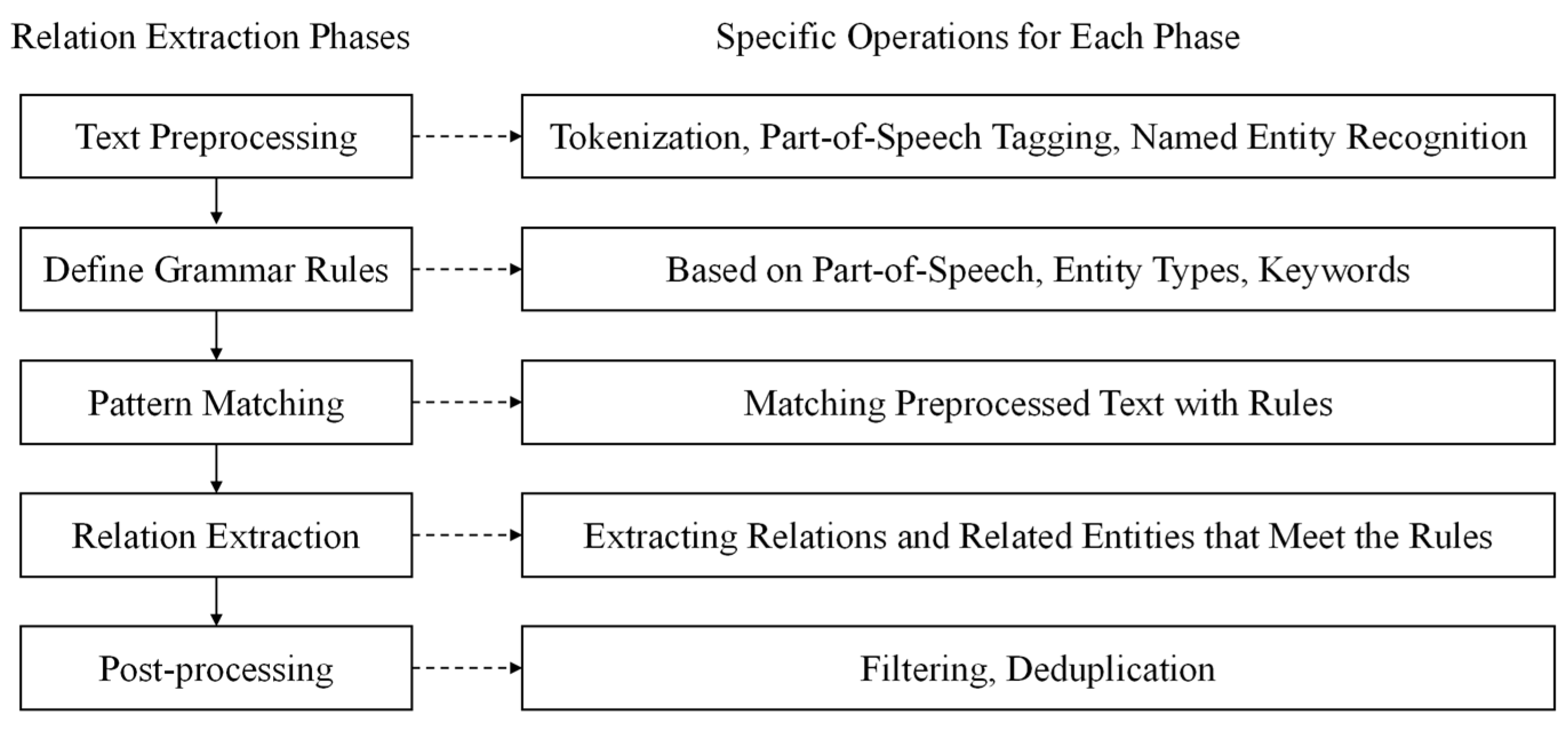

2.3. Grammatical Rule-Based Relational Extraction

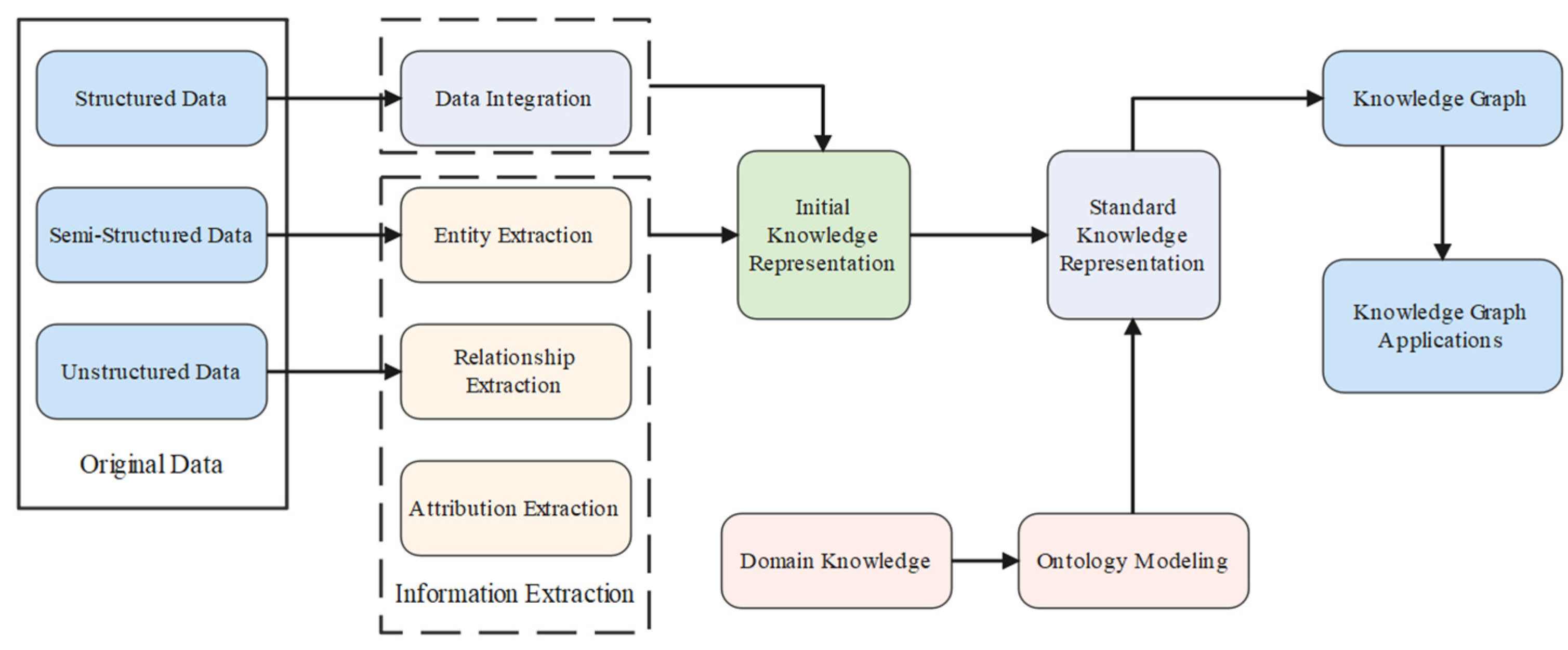

3. Construction of an Electronic Library of Fault Disposal Plans Based on Knowledge Graphs

3.1. Domain Knowledge Ontology Modeling

3.2. Domain Knowledge Graph Construction

4. Case Study Analysis

4.1. Dataset and Experimental Parameter Settings

4.2. Analysis of the Effectiveness of ERNIE-BiGRU-CRF

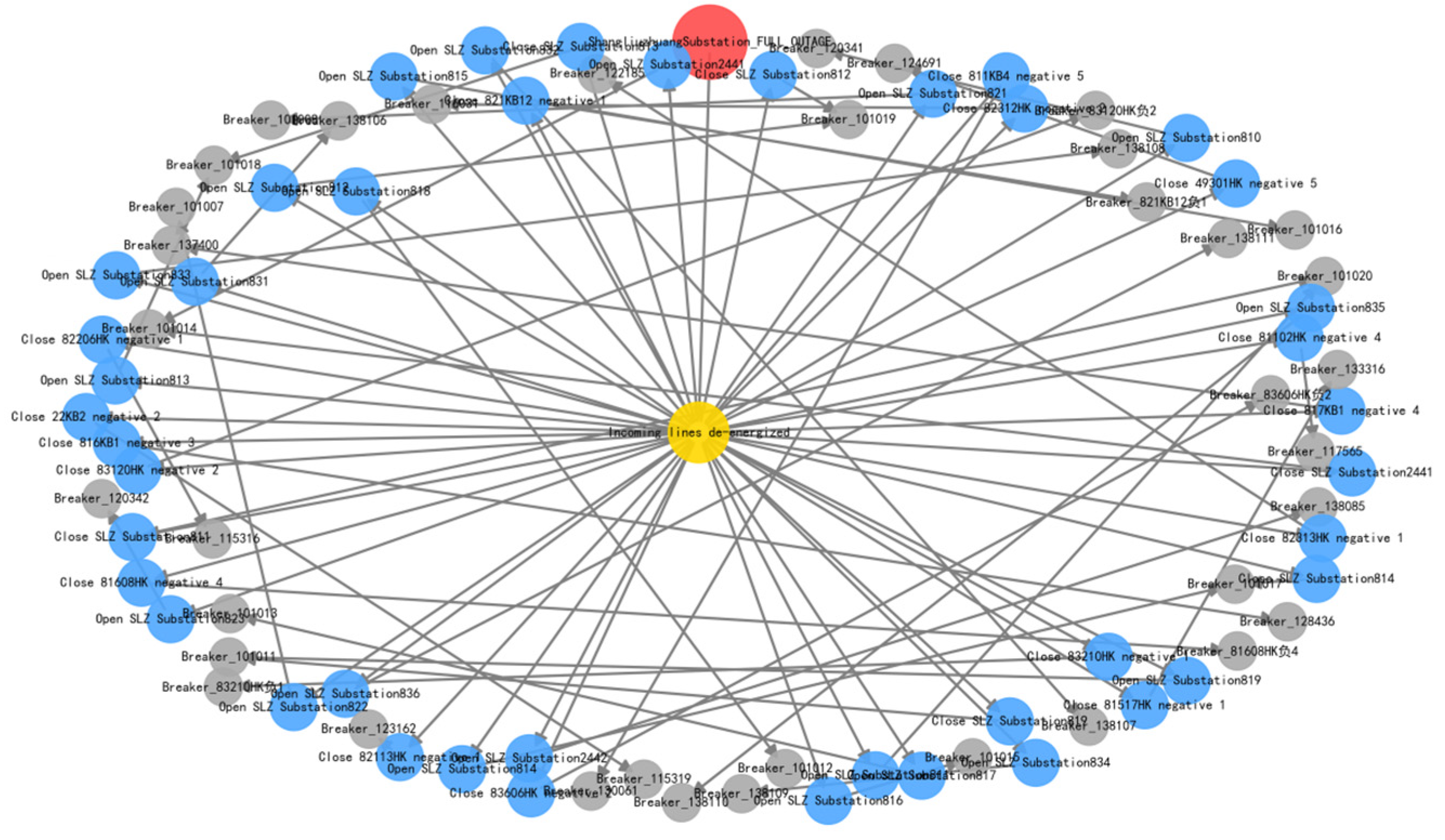

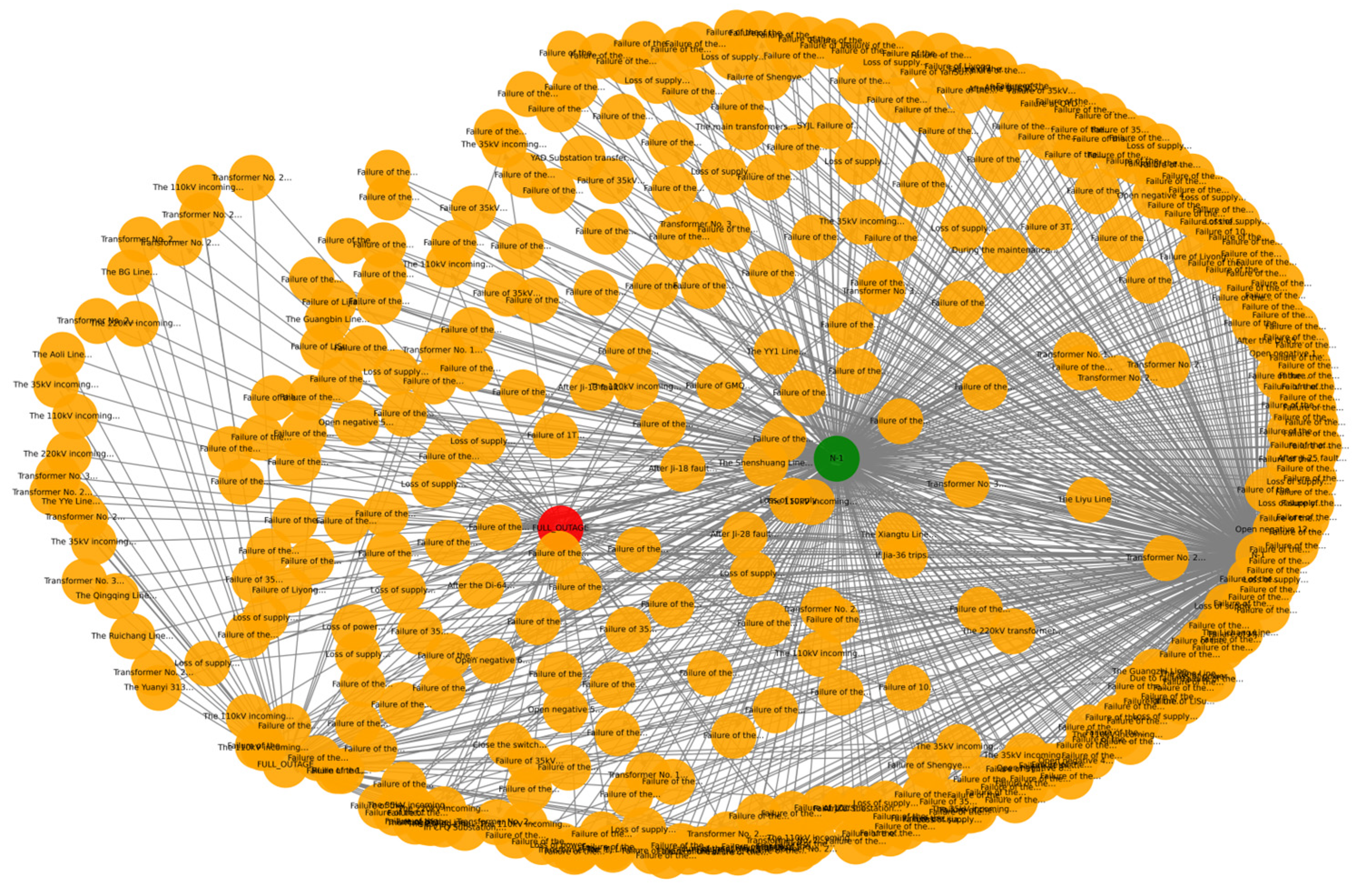

4.3. Knowledge Graph Construction for Power Grid Fault Disposal

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Entity Category | Entity Description | Entity Example |
|---|---|---|
| Fault Type | Classification of the fault | N-1, full shutdown |
| Fault Name | Name describing the fault | Ji-13 fault at JZZ Substation |
| Fault Cause | Specific circumstances that caused the fault | The fault caused the Ji-13 circuit breaker at JZZ Substation to trip |
| Operational Steps | Operational steps to take after the fault occurs | Open NK979 Negative 1, attempt to reclose the Ji-13 circuit breaker. If reclosing fails, close 87808 Station Internal Negative 2 |
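The entity categories above are the labels the named entity recognition model has to predict for each token. As a minimal sketch, assuming a BIO tagging scheme (not stated in this text), each category could be expanded into B- and I- labels plus a shared O label. The short codes FT, FN, FC and OS, the helpers `build_label_set` and `bio_tags`, and the English word-level tokens are all hypothetical; the real plan text would likely be tokenized differently.

```python
# Hypothetical mapping from the table's entity categories to short tag codes.
ENTITY_CODES = {
    "Fault Type": "FT",
    "Fault Name": "FN",
    "Fault Cause": "FC",
    "Operational Steps": "OS",
}


def build_label_set(codes):
    """Return the tag inventory: 'O' plus B-/I- tags for every entity code."""
    labels = ["O"]
    for code in codes.values():
        labels += [f"B-{code}", f"I-{code}"]
    return labels


def bio_tags(tokens, spans):
    """Tag tokens from entity spans given as (start, end_exclusive, code)."""
    tags = ["O"] * len(tokens)
    for start, end, code in spans:
        tags[start] = f"B-{code}"  # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{code}"  # remaining tokens inside the entity
    return tags


LABELS = build_label_set(ENTITY_CODES)

# Illustrative sentence built from the table's examples (hypothetical tokens).
tokens = ["N-1", "fault", ":", "Ji-13", "fault", "at", "JZZ", "Substation"]
tags = bio_tags(tokens, [(0, 1, "FT"), (3, 8, "FN")])

print(len(LABELS))
print(list(zip(tokens, tags)))
```

With four categories this yields nine labels, which is the output size a CRF layer would score at each position under this assumed scheme.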

| Parameter | Value |
|---|---|
| Transformer layers | 12 |
| Hidden dimension | 768 |
| GRU_dim | 128 |
| Learning rate | 5 × 10⁻⁵ |
| max_seq_len | 128 |
| batch_size | 16 |
| Dropout | 0.5 |
| Epochs | 25 |
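The experimental settings can be collected into a single configuration object. This is a minimal sketch, not the authors' code. It assumes GRU_dim is the hidden size of each GRU direction, so the BiGRU concatenates forward and backward states into 2 × 128 = 256 features per token. It also assumes a hypothetical tag set of 9 labels (O plus B-/I- tags for four entity categories). The class `NerConfig`, its field names and `emission_shape` are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NerConfig:
    # Values copied from the parameter table; field names are hypothetical.
    transformer_layers: int = 12  # ERNIE encoder layers
    hidden_dim: int = 768         # ERNIE hidden size
    gru_dim: int = 128            # assumed: hidden units per GRU direction
    max_seq_len: int = 128
    batch_size: int = 16
    learning_rate: float = 5e-5
    dropout: float = 0.5
    epochs: int = 25

    @property
    def bigru_output_dim(self) -> int:
        # Forward and backward hidden states are concatenated per token.
        return 2 * self.gru_dim


def emission_shape(cfg: NerConfig, num_tags: int) -> tuple:
    """Shape of the per-token tag scores handed to the CRF for one batch."""
    return (cfg.batch_size, cfg.max_seq_len, num_tags)


cfg = NerConfig()
print(cfg.bigru_output_dim)
print(emission_shape(cfg, num_tags=9))  # 9 = assumed BIO tag count
```

A frozen dataclass keeps the reported settings in one immutable place, so they cannot silently change between training and evaluation runs.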

| Model | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| ERNIE-BiGRU-CRF | 76.63 | 88.89 | 82.30 |
| ERNIE-BiLSTM-CRF | 39.23 | 92.59 | 55.11 |
| BiGRU | 39.68 | 11.11 | 17.36 |
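Because the F1-score is the harmonic mean of precision and recall, F1 = 2PR / (P + R), the last column can be checked against the first two. The sketch below recomputes it from the reported values; `f1_score` and `REPORTED` are illustrative names.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given in percent."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %, reported F1 %) taken from the results table.
REPORTED = {
    "ERNIE-BiGRU-CRF": (76.63, 88.89, 82.30),
    "ERNIE-BiLSTM-CRF": (39.23, 92.59, 55.11),
    "BiGRU": (39.68, 11.11, 17.36),
}

for model, (p, r, f1_reported) in REPORTED.items():
    f1 = f1_score(p, r)
    print(f"{model}: computed F1 = {f1:.3f}%, reported {f1_reported:.2f}%")
```

All three recomputed values agree with the table to within 0.01 percentage points. For ERNIE-BiGRU-CRF the exact value is about 82.306%, so the reported 82.30 appears to be truncated rather than rounded.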

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lou, N.; Liu, S.; Yan, R.; Si, R.; Yu, W.; Wang, K.; Fan, Z.; Shan, Z.; Zhang, H.; Yu, X.; Wang, D.; Zhang, J. Research on Knowledge Graph Construction and Application for Online Emergency Load Transfer in Power Systems. Electronics 2025, 14, 3370. https://doi.org/10.3390/electronics14173370