1. Introduction

Rice, a staple food for over half of the world’s population, is threatened by diseases and pests that can cause substantial economic losses [1]. Rapid identification and treatment of these diseases is therefore crucial. Traditional methods rely on visual identification by experts, which is subjective and time-consuming. The recent application of artificial intelligence, particularly machine learning, offers a more objective and efficient approach to rice disease recognition [2]. Current recognition methods fall into two groups: traditional machine learning methods and deep learning methods, the latter being a branch of machine learning. Traditional machine learning recognition typically proceeds in four stages: data preprocessing, image segmentation, feature extraction, and classification. Pritlmoy constructed a multilayer perceptron neural network based on color and texture features [3]. Chao Ma trained a Support Vector Machine (SVM) classifier on Histogram of Oriented Gradients (HOG) features to obtain a disease classifier [4]. Bikash proposed a rice disease image recognition technique based on the Twin Support Vector Machine (TSVM) [5]. Although traditional machine learning has achieved some success in rice disease recognition, its feature extraction step requires features to be extracted manually from the samples. Researchers usually select these features based on their own experience, which makes the process subjective, and large-scale manual extraction is cumbersome and time-consuming.

Deep learning methods, which are becoming increasingly sophisticated [6,7], have an advantage over traditional machine learning: they replace the cumbersome and subjective manual feature extraction step with features learned by the model itself, which greatly improves both recognition accuracy and efficiency. Mohapatra et al. proposed an AlexNet-based model for the automatic recognition and diagnosis of three rice diseases using transfer learning [8]. Dengshan Li et al. proposed a video recognition system based on Fast-RCNN [9]. Rahman et al. proposed a CNN architecture called Simple CNN [10]. Saleem et al. used the Mutant Particle Swarm Optimization (MUT-PSO) algorithm to search for the optimal CNN structure [11]. Sathya et al. proposed a novel reconstructed disease awareness convolutional neural network (RDA-CNN) [12].

Deep learning methods are developing rapidly, and many researchers have begun to combine various techniques with backbone networks to further improve model performance. The attention mechanism is one highly effective technique. Refs. [13,14,15] used channel attention, adding modules such as Efficient Channel Attention (ECA) and Squeeze-and-Excitation (SE) to the backbone network to learn features along the channel dimension. However, channel information alone may not be sufficient; the location of features is also important information that should not be ignored. Refs. [16,17] used attention mechanisms that encode spatial information, such as Coordinate Attention (CA) and the Convolutional Block Attention Module (CBAM). These methods capture the location of key features and suppress the weight of background locations, which improves model performance. Although spatial attention captures the positional information of features, it cannot establish relationships between different features; self-attention effectively addresses this problem. Self-attention (SA), multi-head self-attention (MHSA), and window-based self-attention obtain the strength of association between different patches by comparing the patches with one another, and Refs. [18,19,20] demonstrate the effectiveness of self-attention. Beyond conventional supervised deep learning, recent research has explored few-shot and semi-supervised learning to address the scarcity of labeled agricultural data. For example, Ref. [21] proposes a few-shot plant disease recognition framework that leverages meta-learning and attention mechanisms to achieve robust performance even with scarce training samples. Such approaches are particularly valuable in real-world agricultural scenarios, where obtaining large-scale labeled datasets is often infeasible.

In addition to the attention mechanism, convolution is another component that can be improved. Ref. [22] used transposed convolution and dilated convolution as the up-sampling and down-sampling operations in the feature pyramid, achieving better performance than standard convolution. Ref. [23] replaced standard convolution with depthwise separable convolution and used deformable convolution as the feature extraction layer, achieving better detection performance than ordinary convolution. The flexibility of deformable convolution allows it to adapt to the shape of features. Ref. [24] proposed Dynamic Snake Convolution, which exploits a slender and variable kernel shape and achieved outstanding results when segmenting a cardiac vascular dataset and a remote sensing road dataset.

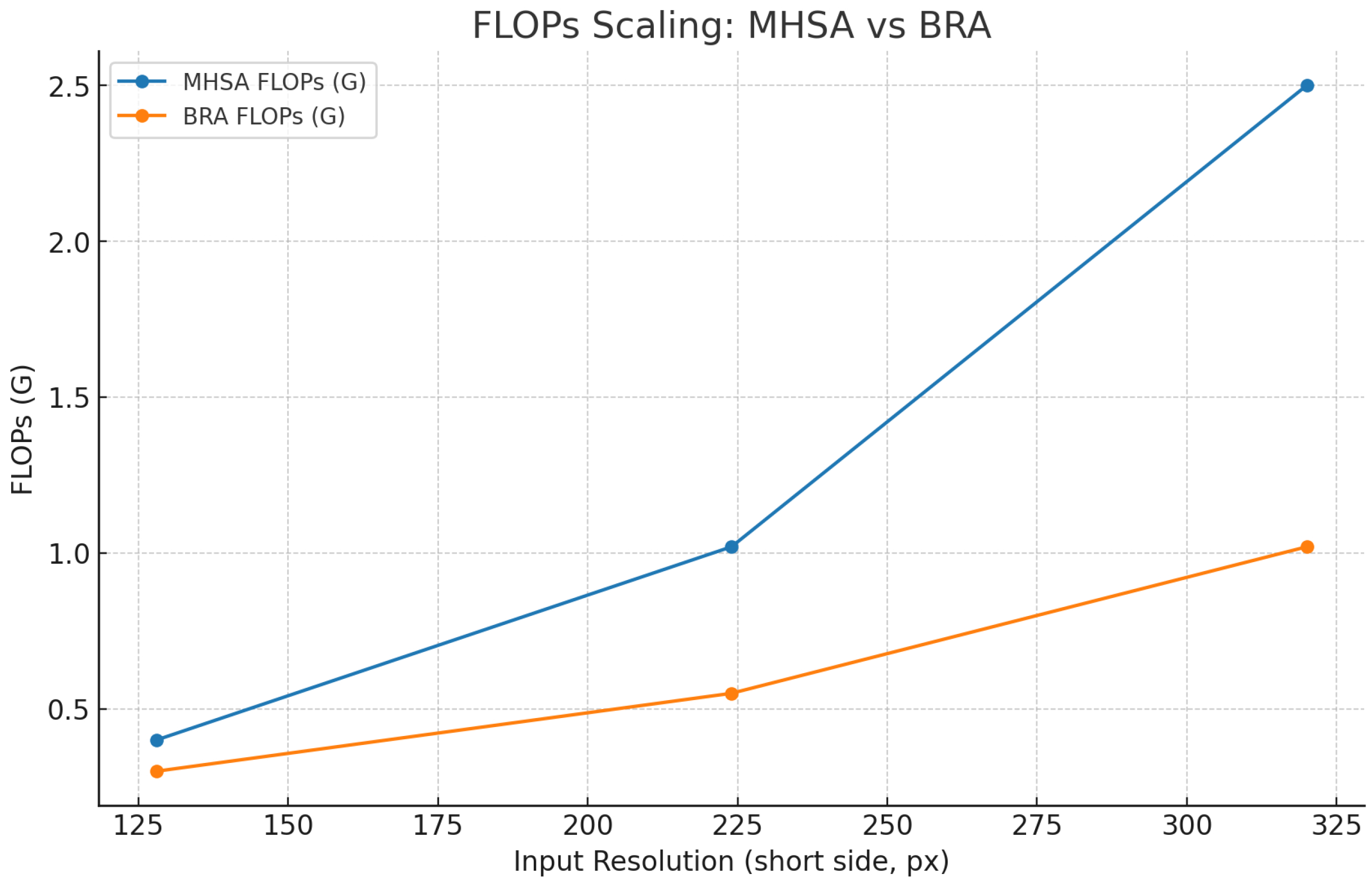

The methods described above add an attention mechanism or replace standard convolution to further improve recognition accuracy. However, they still have some drawbacks. The computational cost of multi-head self-attention is too large; although some works [19,25,26,27] sparsify multi-head attention, they still do not solve this problem well. Depthwise separable convolution greatly reduces the number of parameters without decreasing accuracy, but its fixed kernel shape makes it ineffective at extracting disease features of varying shapes. Dynamic convolutions such as deformable convolution can adapt to feature shapes through learned offsets, but their number of kernel parameters still grows quadratically with kernel size, as in standard convolution. There is therefore still room to reduce the number of model parameters.

Although the above methods have achieved some success, they still have flaws and shortcomings. First, many methods do not construct multi-category disease datasets and therefore cannot identify multiple rice diseases under real conditions. Second, some of these methods focus only on local regions of the image and cannot capture the global dependencies needed to form contextual associations. Self-attention solves this problem, but rice paddies are usually located in remote areas with complex terrain, and deploying Transformers, with their large parameter counts and slow inference, on computationally limited devices remains a major challenge. Motivated by this, this paper proposes MobileViT_BiAK, an improved hybrid CNN–Transformer model designed to be both lightweight and highly accurate. The model adopts Alterable Kernel Convolution (AKConv), which adapts to different disease features by changing the shape of its convolution kernels; because its number of parameters grows only linearly, it stays lightweight and does not add heavy computation. Meanwhile, Bi-level Routing Attention (BRA) splits attention into two phases: a coarse-grained filtering phase that retains the k most relevant regions, followed by a fine-grained attention phase over the tokens of those regions, which alleviates the heavy computational cost of multi-head self-attention. Overall, our contributions are threefold:

A two-stage attention mechanism for capturing global dependencies is proposed, performing attention calculations at both coarse-grained and fine-grained levels. This approach reduces computational cost while maintaining accuracy, keeping the model lightweight.

Alterable Kernel Convolution is employed for feature extraction. Its dynamic nature allows it to better adapt to features of varying shapes, while its linearly growing parameter count effectively controls model complexity.

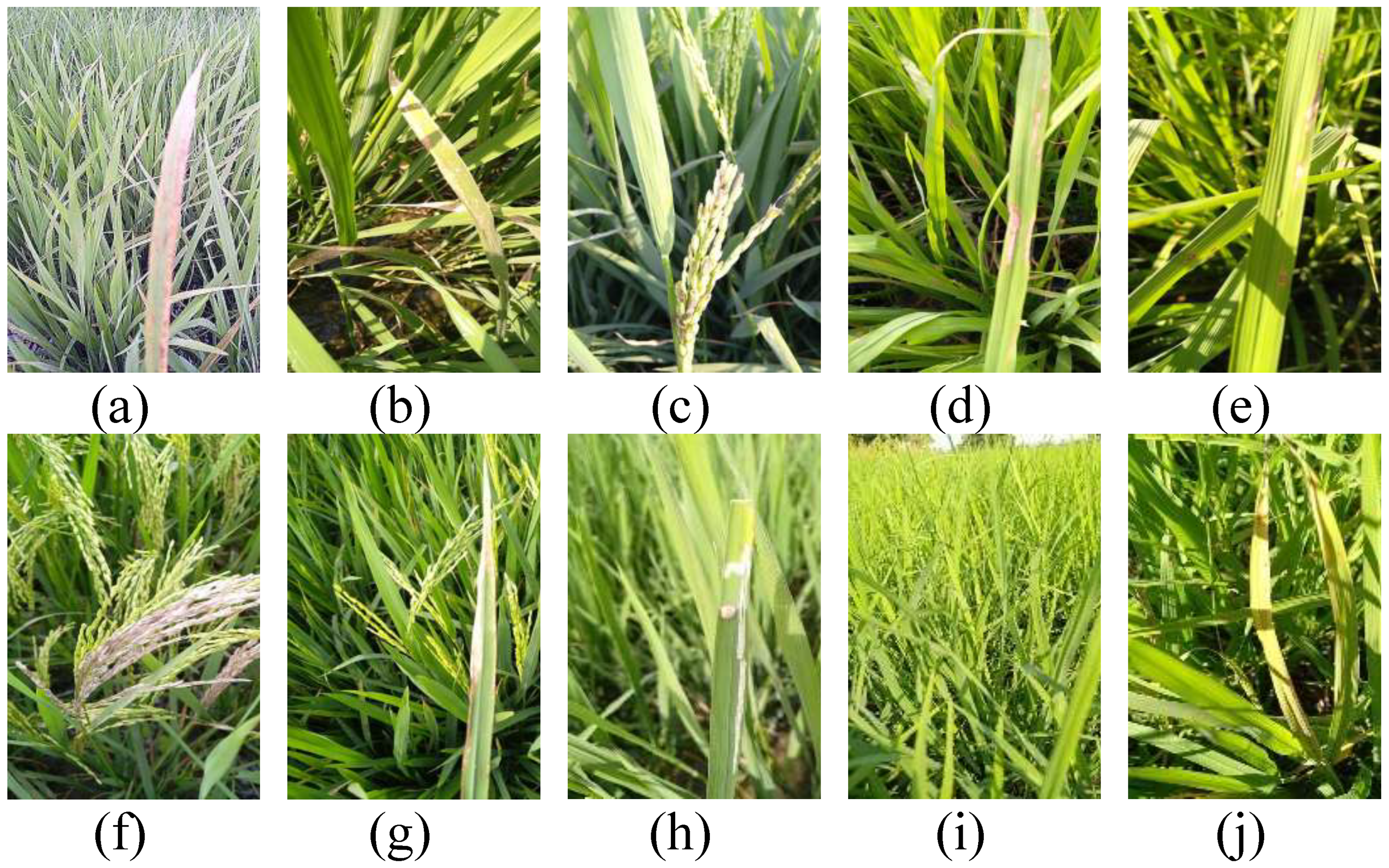

The proposed method performs well on a rice disease dataset containing 10 categories, accurately identifying targets even in complex background environments.

In this work, we selected MobileViT as the backbone because it uniquely integrates the advantages of CNNs for local feature extraction and Transformers for global dependency modeling, while maintaining a compact model size suitable for deployment on resource-limited agricultural devices. Compared with other lightweight architectures such as MobileNetV3, EfficientNet, and ShuffleNet, MobileViT demonstrated superior accuracy–efficiency trade-offs in our preliminary experiments on agricultural image datasets. Moreover, its modular architecture facilitates the seamless integration of enhanced convolution and attention modules, making it an ideal foundation for our proposed AKConv–BRA framework.

3. Methods

3.1. MobileViT

MobileViT [38] is a lightweight model released by Apple in 2021 that achieves competitive performance on various computer vision tasks while maintaining low computational complexity. In our method, MobileViT serves as the backbone network, efficiently combining convolutional layers for local feature extraction with transformer blocks [39] for capturing long-range dependencies. The processing pipeline follows four main steps: (1) input image preprocessing and patch embedding; (2) local feature extraction with the initial convolutional layers; (3) global context modeling via transformer blocks; and (4) classification through a global pooling layer and a fully connected layer. As shown in Table 1, the MobileViT model consists of multiple layers, including convolution operations, MV2 (MobileNetV2 inverted residual) modules, and MobileViT blocks. This breakdown highlights the architecture’s efficiency for rice disease classification. To enhance the baseline MobileViT, we integrate Alterable Kernel Convolution (AKConv) into the convolutional stages to improve adaptability to disease features of varying shapes, and we replace the standard multi-head self-attention in the transformer stages with Bi-level Routing Attention (BRA) to reduce computational cost while maintaining global feature modeling capability. The three main components contribute as follows: (1) MobileViT backbone: provides a compact yet powerful architecture capable of efficient local–global feature integration; (2) Alterable Kernel Convolution: dynamically adapts receptive field shapes to better match the morphological diversity of rice disease symptoms; (3) Bi-level Routing Attention: selectively focuses on the most relevant spatial regions, achieving sub-quadratic complexity and improving inference efficiency on high-resolution images.

3.2. The Attention Mechanism

In our method, the original Multi-Head Self-Attention (MHSA) modules within the MobileViT blocks are replaced by Bi-level Routing Attention (BRA). This modification introduces a two-stage attention process: (1) coarse-grained region selection, which identifies the top-k most relevant spatial regions for each query region, and (2) fine-grained token-level attention applied only to the selected regions. This design reduces the computational complexity from $\mathcal{O}((HW)^2)$ in MHSA to approximately $\mathcal{O}((HW)^{4/3})$ while maintaining global feature modeling capability, where H and W denote the spatial height and width of the feature map, respectively. By embedding BRA into the MobileViT backbone, the model achieves improved efficiency and scalability on high-resolution agricultural images.

Similar to human perception, the attention mechanism in deep learning processes input data faster and more accurately by focusing on the most important parts, achieving better performance and generalization. The Transformer replaces convolution with multi-head attention for global context modeling. However, multi-head attention computes correlations between all pairs of tokens into which the input is divided. This cost has attracted considerable research, and many works propose sparse key-value pairs so that each query only needs to attend to a few strongly correlated key-value pairs while still obtaining a global representation, for example with local windows [26], axial stripes [25], and dilated windows [27]. However, these methods rely on manually designed windows, whereas Bi-level Routing Attention (BRA) [35] locates the few most relevant key-value pairs dynamically. The mechanism consists of two steps: finding the most relevant regions and token-to-token attention.

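Finding the most relevant regions. Given a 2D input feature map $X \in \mathbb{R}^{H \times W \times C}$, we divide it into $S \times S$ non-overlapping regions, each containing $HW/S^2$ feature vectors, resulting in the reshaped feature map $X^r \in \mathbb{R}^{S^2 \times \frac{HW}{S^2} \times C}$. We then derive the query, key, and value tensors $Q, K, V \in \mathbb{R}^{S^2 \times \frac{HW}{S^2} \times C}$ with linear projections, as shown in Equation (1):

$$Q = X^r W^q, \quad K = X^r W^k, \quad V = X^r W^v, \tag{1}$$

where $W^q, W^k, W^v \in \mathbb{R}^{C \times C}$ are the projection weights for the query, key, and value, respectively. The tokens within each region are then averaged to derive the region-level queries and keys $Q^r, K^r \in \mathbb{R}^{S^2 \times C}$.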

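Next, the region-to-region semantic adjacency matrix $A^r \in \mathbb{R}^{S^2 \times S^2}$ is computed by multiplying $Q^r$ with the transpose of $K^r$, as shown in Equation (2):

$$A^r = Q^r \left(K^r\right)^{\top}. \tag{2}$$

Each entry of $A^r$ measures the semantic relevance between a pair of regions. For each region, only its top-k most relevant regions are retained by applying a row-wise top-k operator to $A^r$. This gives the routing index matrix $I^r \in \mathbb{N}^{S^2 \times k}$, defined in Equation (3):

$$I^r = \operatorname{topkIndex}\left(A^r\right). \tag{3}$$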
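The region-level routing of Equations (1)–(3) can be sketched in a few lines of NumPy. This is a minimal, non-authoritative illustration: the projection weights are random stand-ins for the learned $W^q, W^k, W^v$, the function name `region_routing` is hypothetical, and H and W are assumed to be divisible by S.

```python
import numpy as np


def region_routing(x, S, k, rng=None):
    """Sketch of BRA region-level routing (Eqs. 1-3).

    x: feature map of shape (H, W, C); H and W must be divisible by S.
    Returns (Ir, Q, K, V): Ir has shape (S*S, k); Q, K, V have shape (S*S, HW/S^2, C).
    """
    H, W, C = x.shape
    assert H % S == 0 and W % S == 0, "H and W must be divisible by S"
    h, w = H // S, W // S
    # Partition into S*S non-overlapping regions of h*w tokens: X^r with shape (S^2, hw, C)
    xr = x.reshape(S, h, S, w, C).transpose(0, 2, 1, 3, 4).reshape(S * S, h * w, C)
    rng = np.random.default_rng(0) if rng is None else rng
    # Hypothetical random weights standing in for the learned projections
    Wq, Wk, Wv = (rng.standard_normal((C, C)) / np.sqrt(C) for _ in range(3))
    Q, K, V = xr @ Wq, xr @ Wk, xr @ Wv          # Eq. (1)
    Qr, Kr = Q.mean(axis=1), K.mean(axis=1)      # region-level queries and keys, (S^2, C)
    Ar = Qr @ Kr.T                               # Eq. (2): (S^2, S^2) adjacency matrix
    # Eq. (3): column indices of the k largest entries in each row
    Ir = np.argpartition(-Ar, k - 1, axis=1)[:, :k]
    return Ir, Q, K, V
```

`np.argpartition` does not sort the selected indices, so the k routed regions in each row appear in arbitrary order; this is harmless because the subsequent attention treats the gathered tokens as an unordered set.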

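Token-to-token attention. With the region-to-region routing index matrix $I^r$, we can filter out the least relevant tokens: for each query region, we focus on the k routed regions indexed by $I^r$ and gather all of their key-value pairs, as shown in Equation (4):

$$K^g = \operatorname{gather}\left(K, I^r\right), \quad V^g = \operatorname{gather}\left(V, I^r\right), \tag{4}$$

where $K^g, V^g \in \mathbb{R}^{S^2 \times \frac{kHW}{S^2} \times C}$ are the gathered key and value tensors.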

Finally, attention is applied to the gathered key-value pairs, with an additional Local Context Enhancement (LCE) term [40], as shown in Equation (5):

$$O = \operatorname{Attention}\left(Q, K^g, V^g\right) + \operatorname{LCE}(V). \tag{5}$$
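The gather and attention steps of Equations (4) and (5) can be sketched as follows. This is an illustrative assumption-laden version, not the authors' code: it uses single-head scaled dot-product attention with a $\sqrt{C}$ scale, the function name `routed_attention` is hypothetical, and the LCE term is omitted entirely.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def routed_attention(Q, K, V, Ir):
    """Sketch of Eqs. (4)-(5) without the LCE term.

    Q, K, V: (S2, n, C) region-partitioned tensors, n = HW / S^2 tokens per region.
    Ir: (S2, k) integer routing indices from the region-level top-k step.
    Returns O with shape (S2, n, C).
    """
    S2, n, C = K.shape
    k = Ir.shape[1]
    # Eq. (4): K[Ir] has shape (S2, k, n, C); merge the k routed regions into one token axis
    Kg = K[Ir].reshape(S2, k * n, C)
    Vg = V[Ir].reshape(S2, k * n, C)
    # Each query token attends only to the k * n tokens gathered for its region
    scores = Q @ Kg.transpose(0, 2, 1) / np.sqrt(C)  # (S2, n, k * n)
    return softmax(scores) @ Vg                       # (S2, n, C)
```

A useful sanity check of this design: if every region routes to all $S^2$ regions (k = S²), the result coincides with ordinary full self-attention over all HW tokens, so the savings come entirely from choosing k much smaller than S².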

3.3. The Convolution

In the convolutional component of our architecture, we replace the standard convolution layers in MobileViT with Alterable Kernel Convolution (AKConv). Unlike standard convolution, which uses fixed square kernels whose parameter count increases quadratically with kernel size, AKConv allows dynamic, irregular kernel shapes whose parameter count grows linearly with the number of sampling points. This adaptability enables the kernels to better match the morphological diversity of rice disease symptoms (e.g., circular lesions and elongated streaks) while keeping the model lightweight. Compared with deformable convolution, AKConv achieves similar receptive-field flexibility without incurring significant computational overhead, making it better suited to real-time agricultural applications. Standard convolution, by contrast, suffers from several limitations. Specifically, it uses $k \times k$ kernels, so as the kernel size k increases, the number of convolutional parameters increases quadratically.

Moreover, standard convolution has a fixed kernel shape, which cannot adapt well to the input samples of varying shapes and structures. This rigidity affects the accurate extraction of local features and ultimately affects overall performance.
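The difference between quadratic and linear parameter growth can be made concrete with a small calculation. This sketch counts only the main kernel weights (no bias); the offset-prediction branch that AKConv also uses is deliberately excluded, and the function names are hypothetical.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a k x k standard convolution, bias ignored: grows as k**2."""
    return c_in * c_out * k * k


def akconv_kernel_params(c_in: int, c_out: int, n: int) -> int:
    """Weights of an N-point AKConv kernel, bias and offset branch ignored: grows as N."""
    return c_in * c_out * n


if __name__ == "__main__":
    c_in = c_out = 64
    # Standard kernels can only grow in square steps: 3x3 -> 5x5 -> 7x7.
    for k in (3, 5, 7):
        print(f"standard {k}x{k}: {standard_conv_params(c_in, c_out, k):,} weights")
    # An AKConv kernel can use any number of points, adding c_in * c_out weights per point.
    for n in (5, 6, 7, 9, 11):
        print(f"AKConv N={n}: {akconv_kernel_params(c_in, c_out, n):,} weights")
```

With 64 input and output channels, moving from a 3×3 to a 5×5 standard kernel jumps from 9 to 25 weights per channel pair, whereas an AKConv kernel can grow one sampling point at a time.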

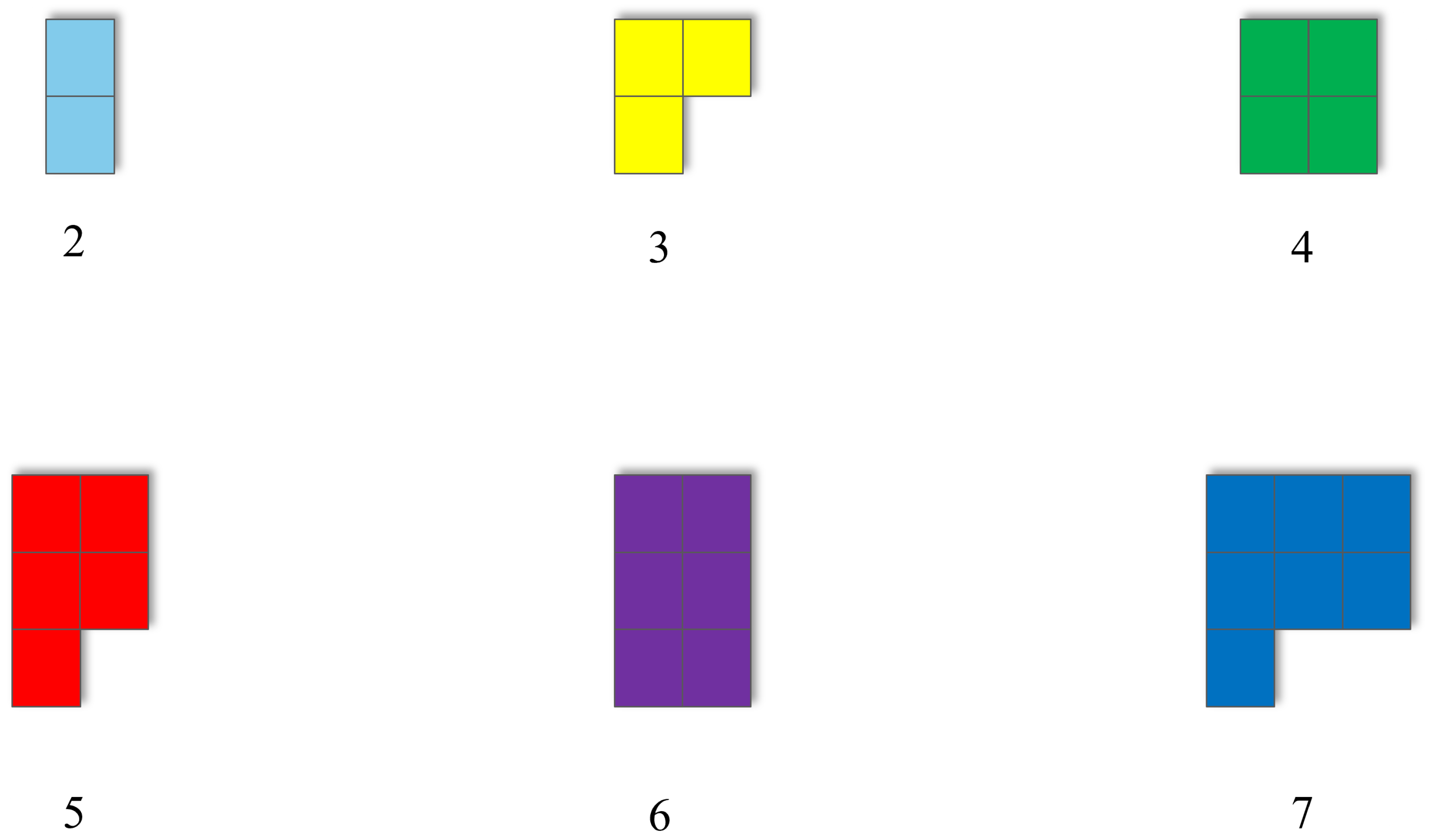

To overcome these issues, we introduce a more flexible convolution, Alterable Kernel Convolution (AKConv) [41], whose parameter count grows linearly and whose kernel shape can adapt to the input. An illustration of AKConv is shown in Figure 2. AKConv takes the upper-left corner $(0, 0)$ as the initial sampling coordinate. The convolution operation at position $P_0$ is defined as shown in Equation (6):

$$\operatorname{Conv}(P_0) = \sum_{n=1}^{N} w_n \cdot x\left(P_0 + P_n\right). \tag{6}$$

Here, w denotes the convolutional parameters ($w_n$ being the weight of the n-th sampling point), $P_n$ is the n-th initial sampling offset relative to $P_0$, N is the number of sampling points, and $x(\cdot)$ is the input feature at the sampled location. AKConv extracts features from the resampled points in one of the following ways: stacking the features along a spatial dimension and then extracting them with a convolution with a stride of 3; transforming the features into four dimensions and then extracting them with a Conv3d whose stride equals its kernel size; or stacking the features along the channel dimension and then reducing the channel dimension with a convolution.
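The initial sampling layout and Equation (6) can be illustrated on a single-channel input. This is a hypothetical sketch based on our reading of [41]: it arranges N points as a near-square grid starting at the upper-left corner (0, 0) and places any leftover points in a partial extra row; treat that exact layout rule, and the helper names, as assumptions. The learned offsets and interpolation that AKConv applies to these positions are omitted, so only integer positions are sampled.

```python
import math


def initial_sampling_offsets(n):
    """Hypothetical layout of N sampling points starting from the upper-left corner (0, 0):
    a near-square grid of full rows, plus a partial extra row for any remainder."""
    base = round(math.sqrt(n))          # points per full row
    rows, rem = divmod(n, base)
    pts = [(r, c) for r in range(rows) for c in range(base)]
    pts += [(rows, c) for c in range(rem)]
    return pts


def akconv_at(x, p0, weights, offsets):
    """Eq. (6) at integer position p0, i.e. sum_n w_n * x(p0 + p_n).
    x is a 2D list (single channel); learned offsets and interpolation are omitted."""
    r0, c0 = p0
    return sum(w * x[r0 + dr][c0 + dc] for w, (dr, dc) in zip(weights, offsets))


# Example: a 5-point kernel covers a 2 x 2 block plus one extra point below it.
grid = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(initial_sampling_offsets(5))
print(akconv_at(grid, (0, 0), [1] * 5, initial_sampling_offsets(5)))
```

Because N can be any positive integer, the kernel is not restricted to square sizes such as 3×3 or 5×5, which is the source of the linear parameter growth discussed above.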

AKConv has a dynamic size of the convolution kernel, so that the parameter growth trend of this convolution is linear, and the number of parameters can be controlled within a small range. Moreover, the shape of the convolution kernel is not constrained to the square structure of standard convolutions, allowing it to dynamically adapt to the size of different features. Based on these advantages, it can be effectively applied in rice disease recognition.

AKConv was chosen over other adaptive convolution variants, such as deformable convolution, because it adjusts its receptive field adaptively while its parameter count grows only linearly, avoiding the significant computational overhead often introduced by deformable kernels. This property is especially critical for real-time agricultural disease monitoring, where computational resources are limited. A detailed empirical comparison of AKConv, standard convolution, depthwise separable convolution, and DCNv2 in terms of parameter efficiency, accuracy, and inference time is provided in Section 5.3, further supporting the choice of AKConv.

3.4. Proposed Methods

Current rice disease recognition systems suffer from limited dataset variety, low recognition accuracy, and poor portability. MobileViT is a lightweight model that draws on the spatial inductive bias of convolutional neural networks (CNNs), which helps the model adapt well to different applications through learnable weights and accelerates convergence. At the same time, it integrates the transformer’s ability to capture global information, allowing the model to attend not only to local content but also to strongly related information at different spatial positions. This dual capability makes it well suited to addressing the low accuracy and weak portability of current rice disease recognition.

Our idea is to improve the feature extraction component of the network, which specifically refers to the convolutional and attention modules responsible for learning low- and mid-level representations from the input images, rather than the full encoder. Rice disease symptoms manifest in diverse forms; for instance, brown spot lesions are circular, whereas bacterial leaf blight exhibits elongated, striped patterns. Therefore, by using Alterable Kernel Convolution (AKConv) we can better extract such shape-varied disease features. Unlike standard convolution, AKConv has a linear parameter growth trend, which enables control over the parameter count while enhancing model performance. This makes it possible to improve the recognition accuracy of rice diseases with only a small increase in the model size.

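Consider the Multi-Head Self-Attention (MHSA) mechanism in MobileViT. “Multi-head” indicates that the query, key, and value projections are split into n blocks along the channel dimension, and each block (head) has its own set of projection weights. MHSA is defined as shown in Equation (7):

$$\operatorname{MHSA}(Q, K, V) = \operatorname{Concat}\left(\text{head}_1, \ldots, \text{head}_n\right) W^O, \quad \text{head}_i = \operatorname{softmax}\left(\frac{Q_i K_i^{\top}}{\sqrt{d}}\right) V_i, \tag{7}$$

where $\text{head}_i$ denotes the output of the i-th attention head; $Q_i$, $K_i$, and $V_i$ are the corresponding query, key, and value projections; $d = C/n$ is the channel dimension of each head; and $W^O$ is the weight matrix used to combine all heads. MHSA has a complexity of $\mathcal{O}((HW)^2 C)$, which may result in high computational cost, overfitting, and weak generalization.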
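Equation (7) can be sketched directly in NumPy to make the quadratic cost visible: each head builds an N×N score matrix over all N = HW tokens. The function and argument names below are illustrative assumptions, not MobileViT's actual implementation.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def mhsa(x, Wq, Wk, Wv, Wo, n_heads):
    """Eq. (7) for a token matrix x of shape (N, C); all weights are (C, C)."""
    N, C = x.shape
    assert C % n_heads == 0, "C must be divisible by n_heads"
    d = C // n_heads

    def split_heads(t):
        # (N, C) -> (n_heads, N, d): head i takes channels i*d .. (i+1)*d - 1
        return t.reshape(N, n_heads, d).transpose(1, 0, 2)

    Q, K, V = split_heads(x @ Wq), split_heads(x @ Wk), split_heads(x @ Wv)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d)  # (n_heads, N, N): quadratic in N
    heads = softmax(scores) @ V                      # (n_heads, N, d)
    concat = heads.transpose(1, 0, 2).reshape(N, C)  # Concat(head_1, ..., head_n)
    return concat @ Wo
```

The `scores` tensor holds n·N² entries, which is exactly the term BRA avoids by restricting each query to the tokens of its routed regions.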

To address these issues, we replace MHSA with Bi-level Routing Attention (BRA), which first filters out irrelevant regions at a coarse level and then applies fine-grained attention to the remaining relevant regions. BRA extracts globally correlated features more efficiently, with a computational complexity of approximately $\mathcal{O}((HW)^{4/3})$.

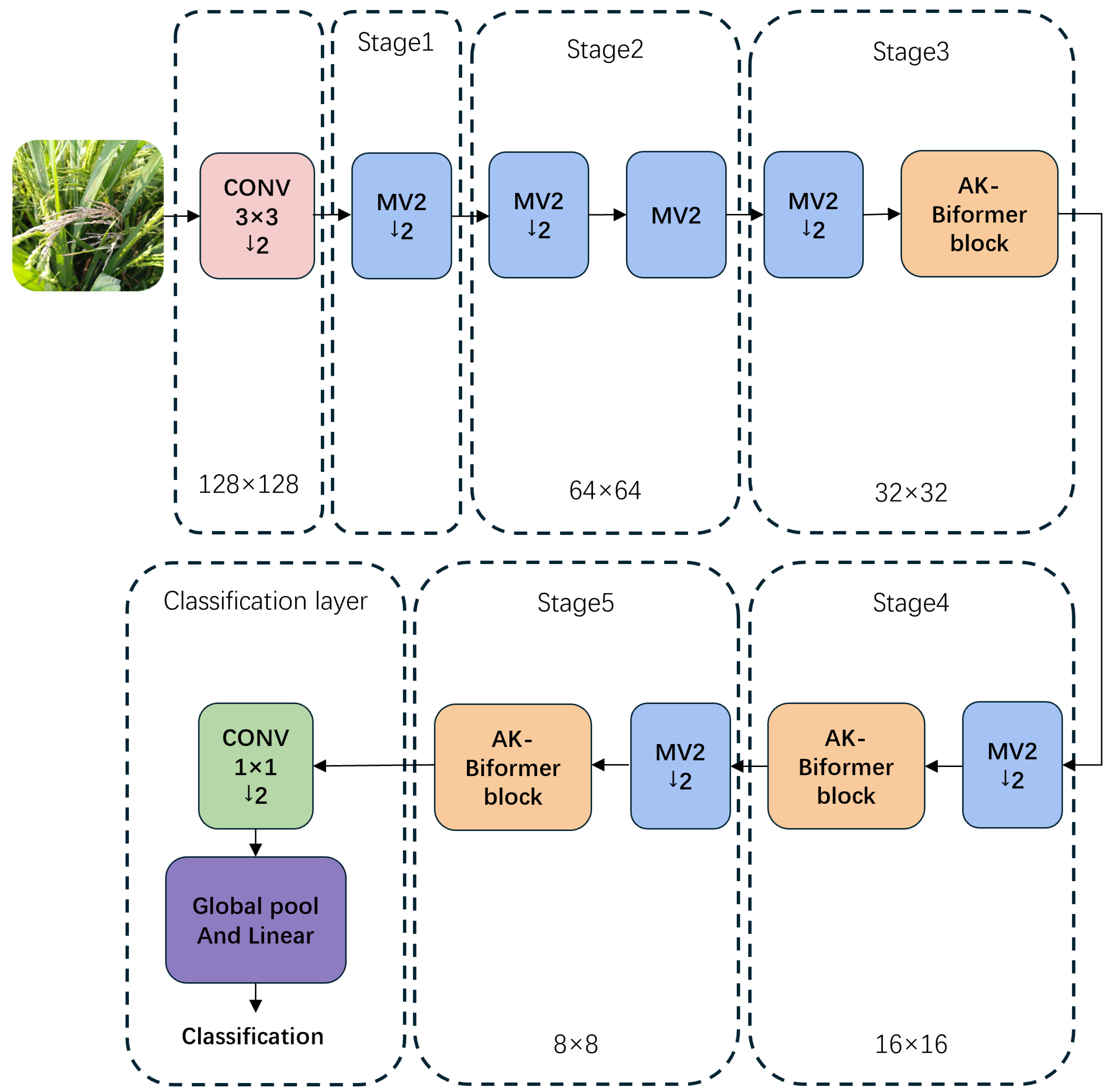

We name the proposed architecture MobileViT_BiAK. The overall architecture of the improved model is illustrated in Figure 3, and its detailed structure is shown in Figure 4.

Each block in Figure 3 is annotated with the resolution of its feature map (height × width), the number of channels, and the operation type (e.g., standard convolution, inverted residual block, AKConv, BRA), so that readers can trace how spatial resolution and channel depth evolve from the raw input image to the final classification layer. In Figure 4, both the AK-Biformer block (a) and the original Biformer block (b) are labeled with tensor dimensions at each stage, arrows indicating the direction of feature propagation, and the transformations applied in each sub-module. For the AK-Biformer block, we additionally highlight the dual-branch design: the AKConv branch for adaptive local feature extraction and the BRA branch for efficient global dependency modeling. Together, these annotations give a step-by-step view of the internal processing flow and show how the proposed architecture remains lightweight while improving feature expressiveness and classification performance.

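For an input feature map of size $H \times W$ with C channels, the standard multi-head self-attention (MHSA) has the following complexity:

$$\Omega(\text{MHSA}) = \mathcal{O}\left(HWC^2 + (HW)^2 C\right),$$

where the first term corresponds to the linear projections for Q, K, and V, and the second term to the dot-product attention across all tokens.

In BRA, coarse region-level routing partitions the feature map into $S^2$ regions, each with $HW/S^2$ tokens. Fine-grained attention is then applied only to the $kHW/S^2$ tokens of the top-k routed regions of each query, where $k \ll S^2$. This reduces the attention cost from $\mathcal{O}((HW)^2 C)$ to the following:

$$\Omega(\text{BRA}) = \mathcal{O}\left(HWC^2 + S^4 C + k\frac{(HW)^2}{S^2} C\right),$$

where the three terms correspond to the linear projections, the region-to-region routing of Equation (2), and the token-to-token attention, respectively. Choosing $S^2 \propto (HW)^{2/3}$ balances the last two terms, each of which then scales as $(HW)^{4/3}$. Thus, BRA achieves sub-quadratic complexity in the number of tokens, enabling more efficient scaling to high-resolution inputs while preserving global context modeling.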
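The complexity terms for MHSA and BRA can be compared numerically. The sketch below counts approximate multiply-accumulate operations under our own assumptions: projections cost 3·HW·C², computing QKᵀ and multiplying by V each cost one operation per token pair per channel, region averaging and softmax are ignored, and the example sizes (a 32×32 map, C = 64, S = 8, k = 4) are hypothetical.

```python
def mhsa_cost(H, W, C):
    """Approximate multiply-accumulates of MHSA: Q/K/V projections + QK^T and AV."""
    n = H * W
    return {"proj": 3 * n * C * C, "attn": 2 * n * n * C}


def bra_cost(H, W, C, S, k):
    """Approximate multiply-accumulates of BRA: projections + region routing + routed attention."""
    n = H * W
    assert n % (S * S) == 0 and 1 <= k <= S * S
    tokens_per_region = n // (S * S)
    return {
        "proj": 3 * n * C * C,
        "routing": (S * S) ** 2 * C,                # A^r over all S^2 x S^2 region pairs
        "attn": 2 * n * k * tokens_per_region * C,  # each query sees k * HW / S^2 tokens
    }


if __name__ == "__main__":
    m = mhsa_cost(32, 32, 64)
    b = bra_cost(32, 32, 64, S=8, k=4)
    print("MHSA:", m, sum(m.values()))
    print("BRA: ", b, sum(b.values()))
```

With these settings, the attention term drops from 134,217,728 to 8,388,608 operations, while the projection term is unchanged; setting k = S² recovers the MHSA attention cost, which is consistent with the formulas above.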

BRA was selected instead of alternative efficient global attention mechanisms, such as the window attention of Swin Transformer, Linformer, and Performer, because of its two-level routing strategy: coarse region-level routing followed by fine token-level attention. This design reduces the theoretical complexity from $\mathcal{O}((HW)^2)$ to approximately $\mathcal{O}((HW)^{4/3})$, striking a better balance between efficiency and accuracy on high-resolution plant disease images.

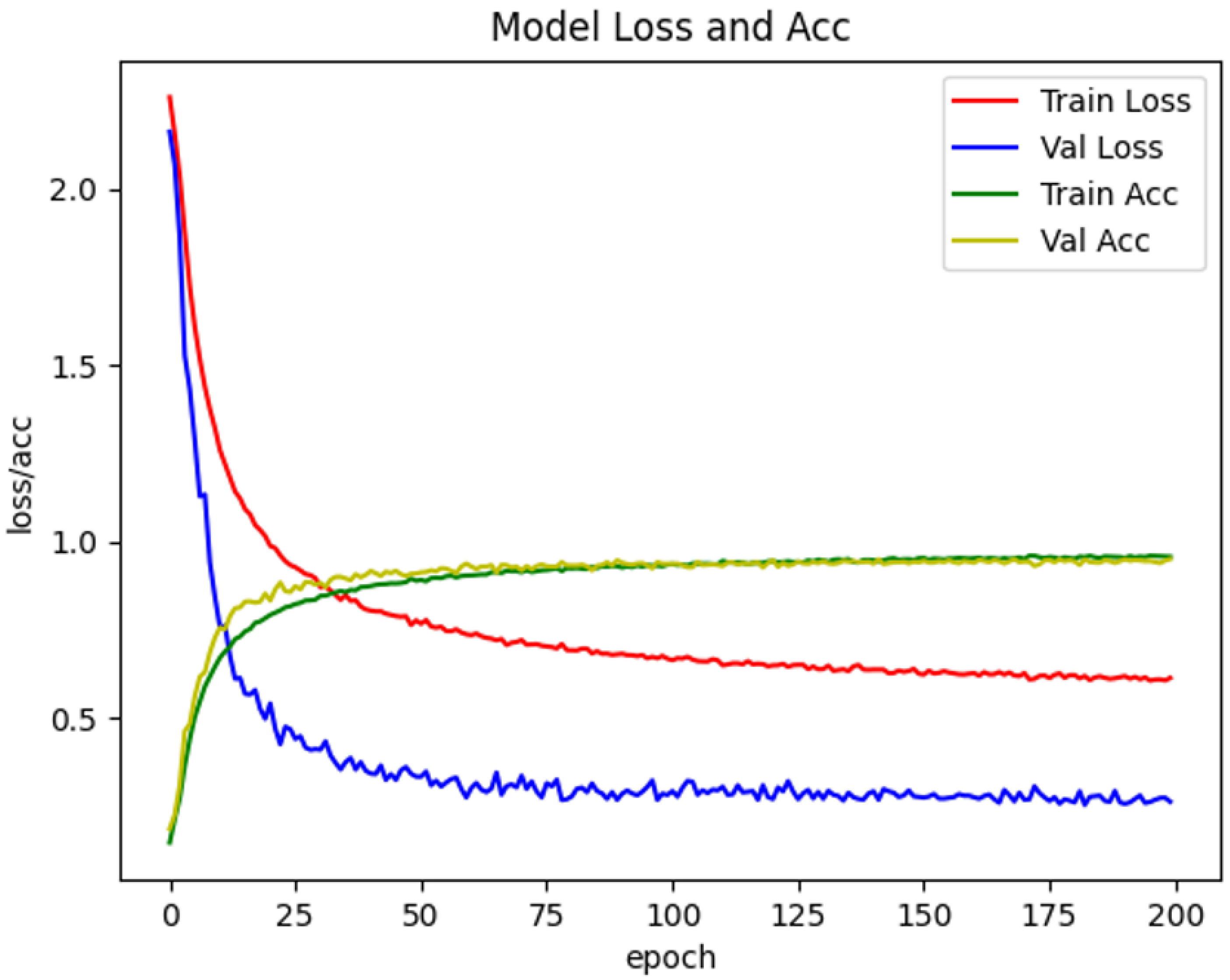

6. Conclusions

In this paper, we proposed a lightweight and high-accuracy rice disease classification framework, MobileViT_BiAK, which enhances both local and global representation learning by replacing the original standard convolution and multi-head self-attention with the adaptive Alterable Kernel Convolution (AKConv) and Bi-level Routing Attention (BRA). This design preserves a compact model size and efficient inference speed, making it suitable for deployment on mobile and edge devices in agricultural scenarios.

The ablation study verifies that AKConv effectively captures diverse lesion morphologies through dynamically shaped kernels with linear parameter growth, while BRA efficiently models global dependencies via a hierarchical two-stage attention process that reduces computational complexity. Together, these components achieve a strong balance between recognition performance and computational efficiency.

Although the proposed method demonstrates robust overall performance, the analysis reveals challenges in distinguishing diseases with highly similar visual symptoms. Future work will explore the integration of additional spectral cues, multi-scale feature enhancement, and fine-grained texture modeling to address this limitation.

Overall, MobileViT_BiAK provides a practical and portable solution for intelligent, field-deployable plant disease monitoring systems, contributing to the broader development of smart and digital agriculture.