Steel Defect Detection with YOLO-RSD: Integrating Texture Feature Enhancement and Environmental Noise Exclusion

Abstract

1. Introduction

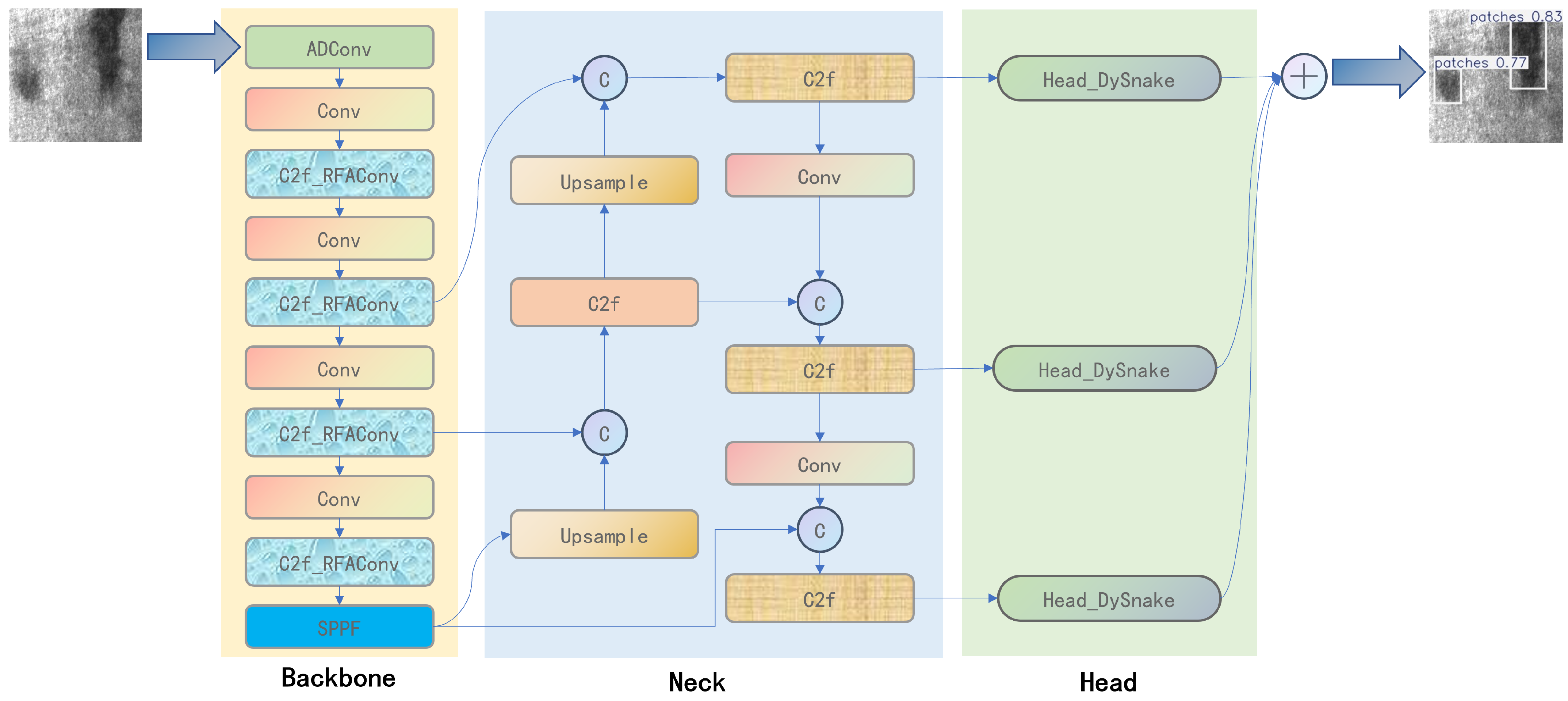

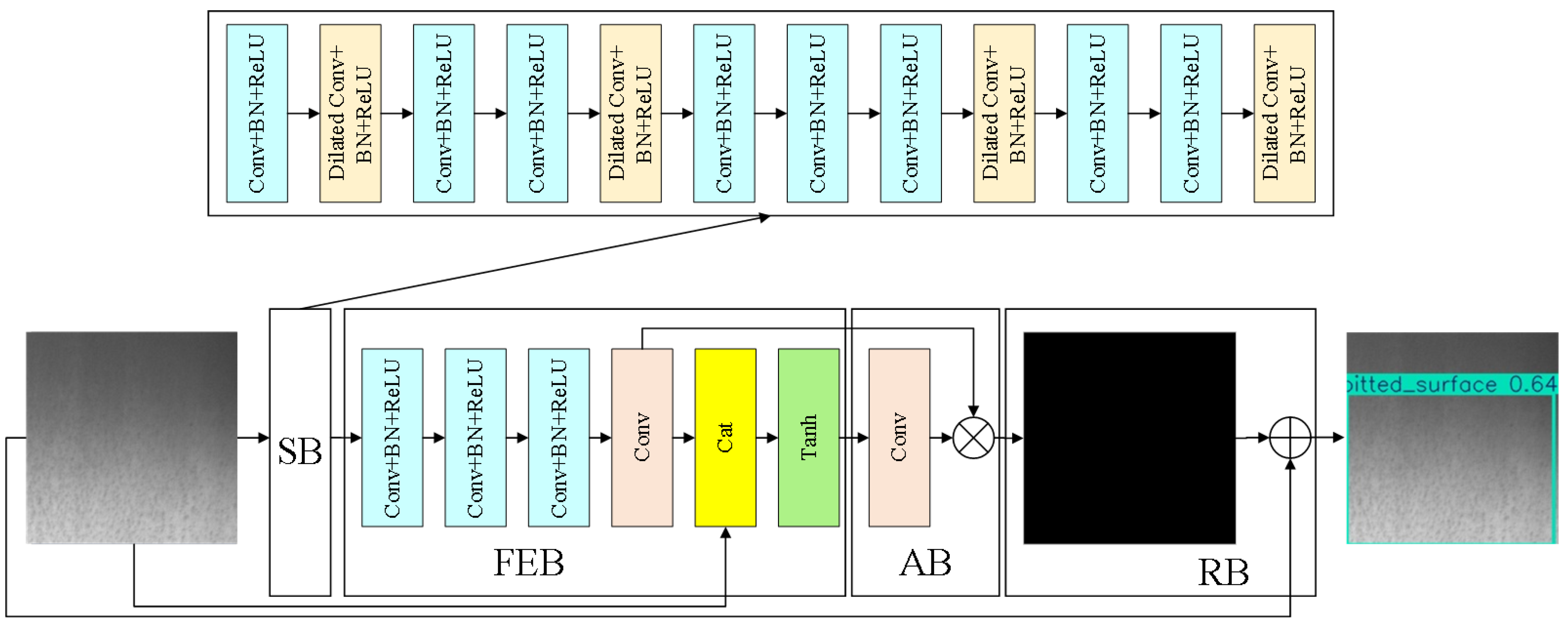

- Robust Environmental Denoising: We introduce a module that suppresses noise from complex industrial environments. It improves the model’s ability to separate defects from visual interference, which makes detection more reliable.

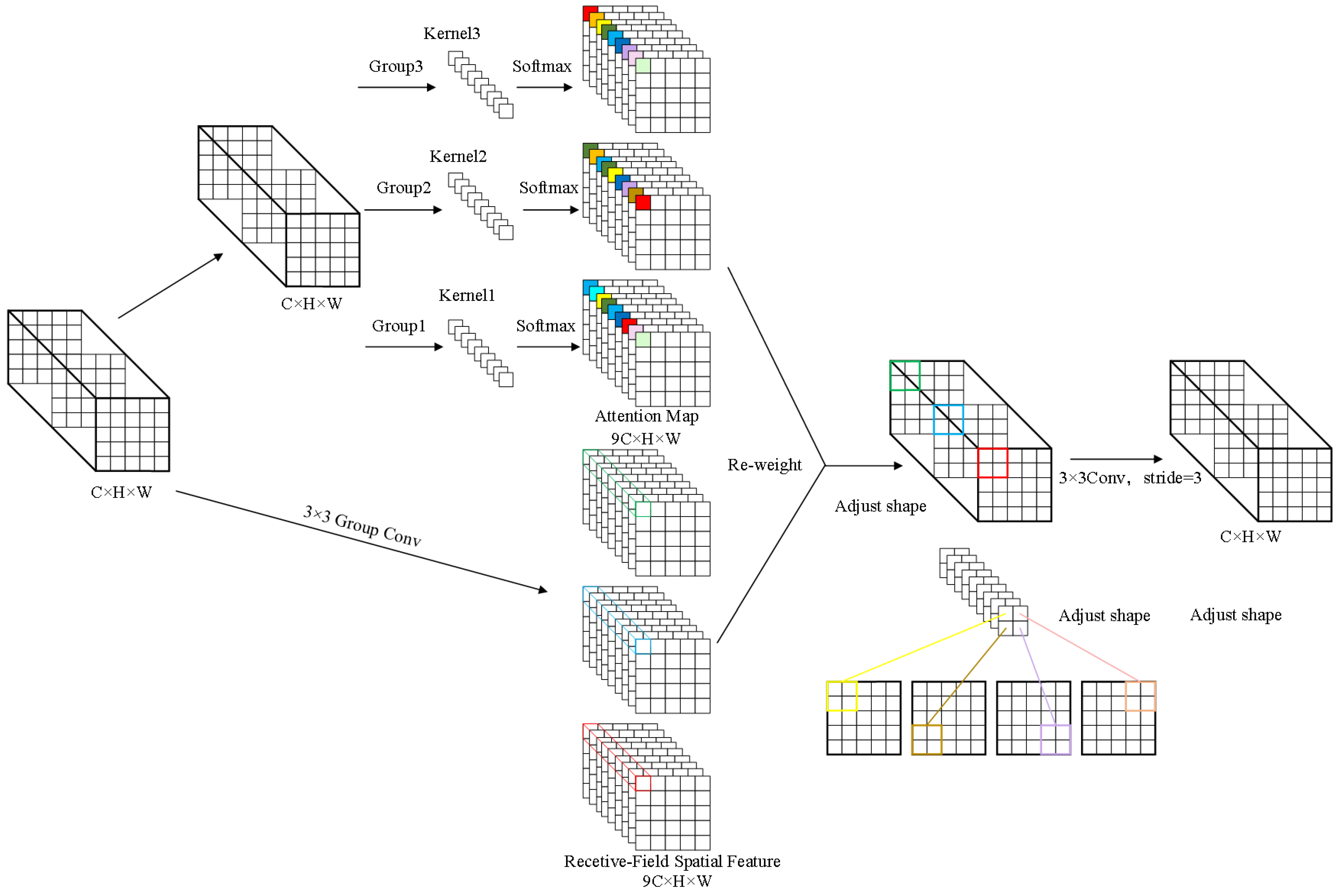

- Enhanced Contextual Understanding for Clustered Defects: Defects frequently appear in groups, so our model incorporates a mechanism that enlarges the receptive field. The wider context improves detection accuracy for such clustered defects.

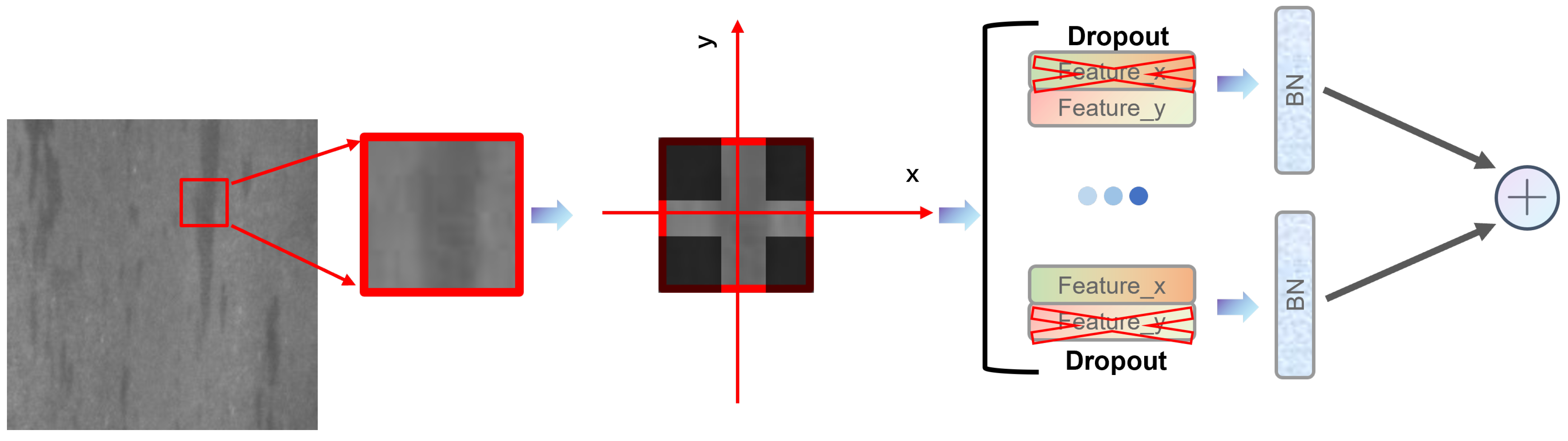

- Head_DySnake for Adaptive Texture Structure Recognition: Our model integrates the Head_DySnake component, which adaptively captures and refines texture structures. This improves recognition of defects defined by distinct textural patterns, including subtle or intricate structural variations.
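
The second contribution relies on enlarging the receptive field. As a rough illustration of what that means numerically, the sketch below applies the standard receptive-field recurrence for a stack of convolution layers. It is generic arithmetic, not the paper's RFAConv module, and the layer lists are made-up examples.

```python
from typing import Iterable, Tuple


def receptive_field(layers: Iterable[Tuple[int, int, int]]) -> int:
    """Receptive field (in input pixels) of a stack of conv layers.

    Each layer is given as (kernel_size, stride, dilation).
    """
    rf = 1    # a single input pixel sees itself
    jump = 1  # distance in input pixels between adjacent output positions
    for kernel_size, stride, dilation in layers:
        rf += (kernel_size - 1) * dilation * jump
        jump *= stride
    return rf


# Hypothetical layer stacks, chosen only to show the effect.
plain = [(3, 1, 1), (3, 1, 1), (3, 1, 1)]    # three plain 3x3 convs
dilated = [(3, 1, 1), (3, 1, 2), (3, 1, 4)]  # same depth, growing dilation

print(receptive_field(plain))    # 7
print(receptive_field(dilated))  # 15
```

With the same number of layers, the dilated stack covers roughly twice the spatial extent, which is the kind of additional context that helps when several defects sit close together.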

2. Related Work

2.1. YOLO

2.2. Steel Defect Detection

3. Proposed Method

3.1. ADConv

3.2. RFAConv

3.3. Head_DySnake

4. Experiment

4.1. Experimental Environment and Parameters

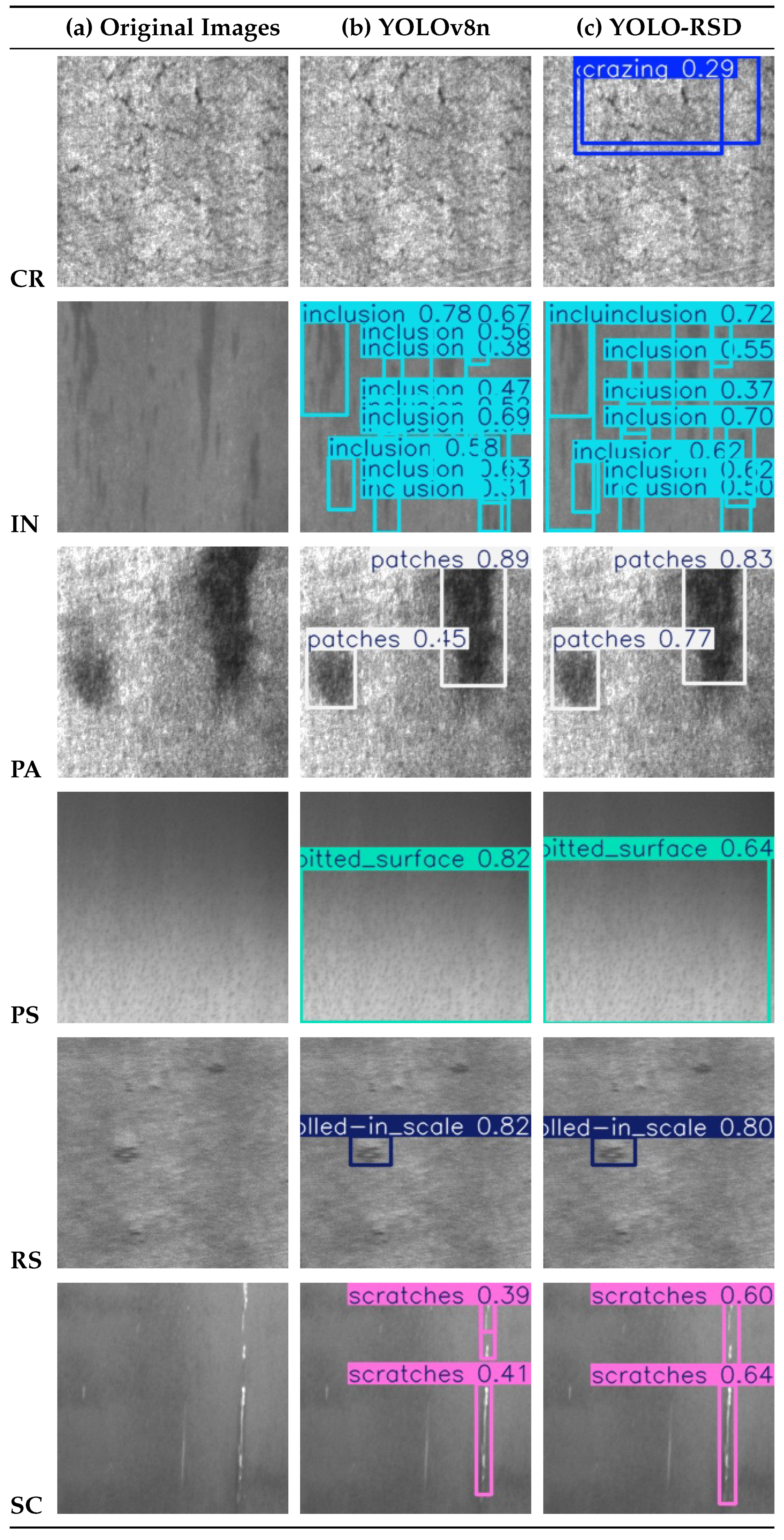

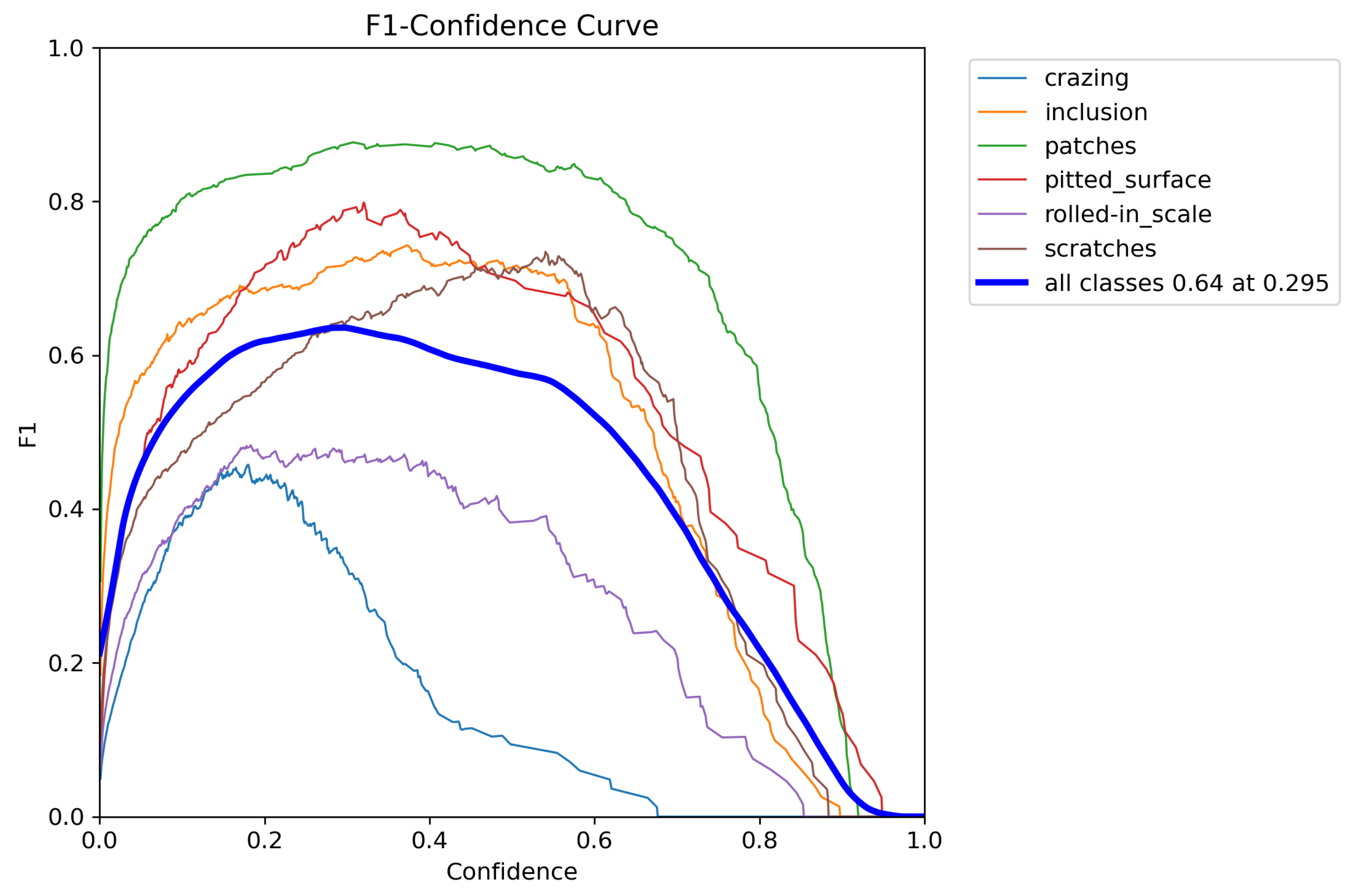

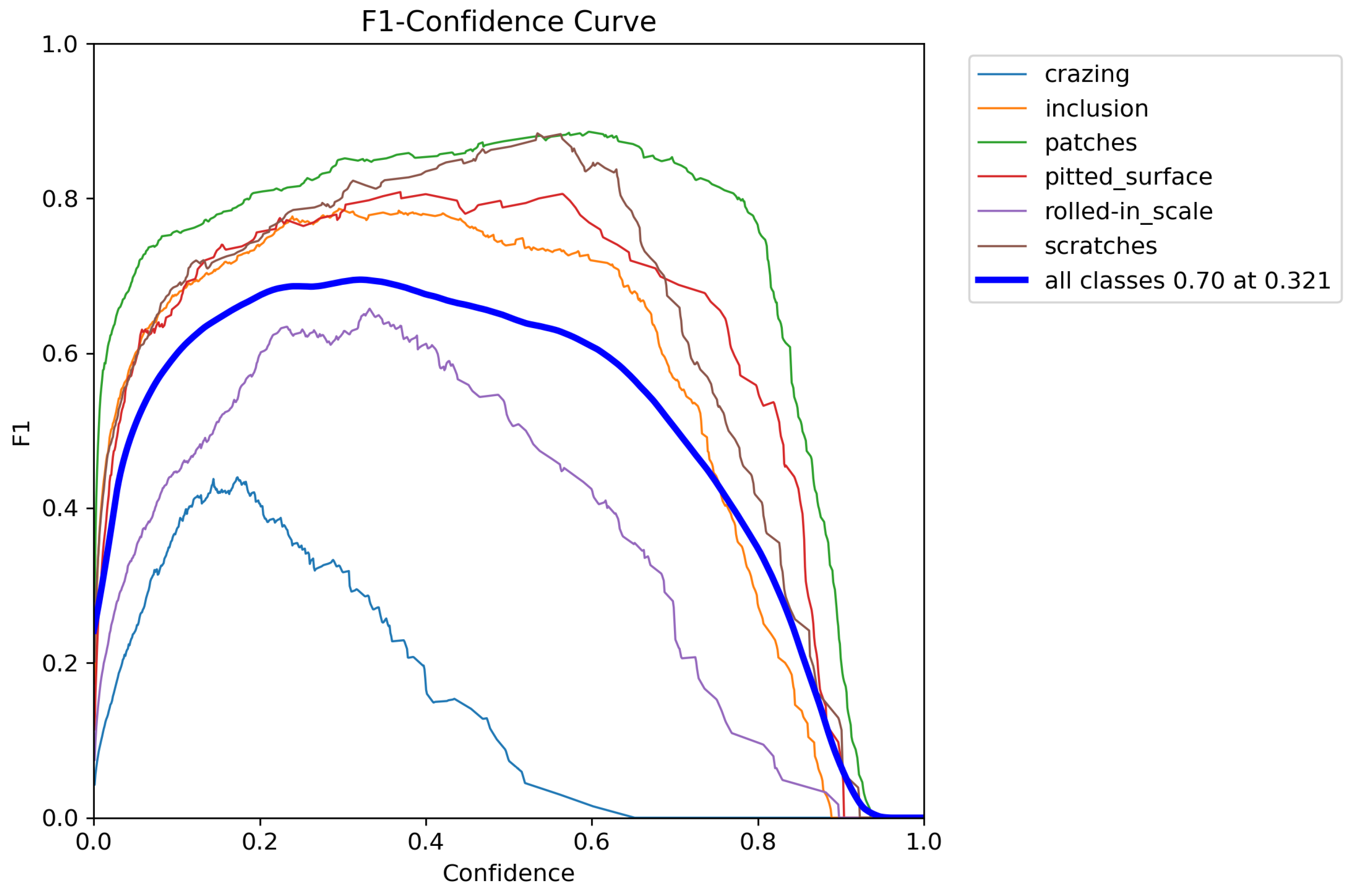

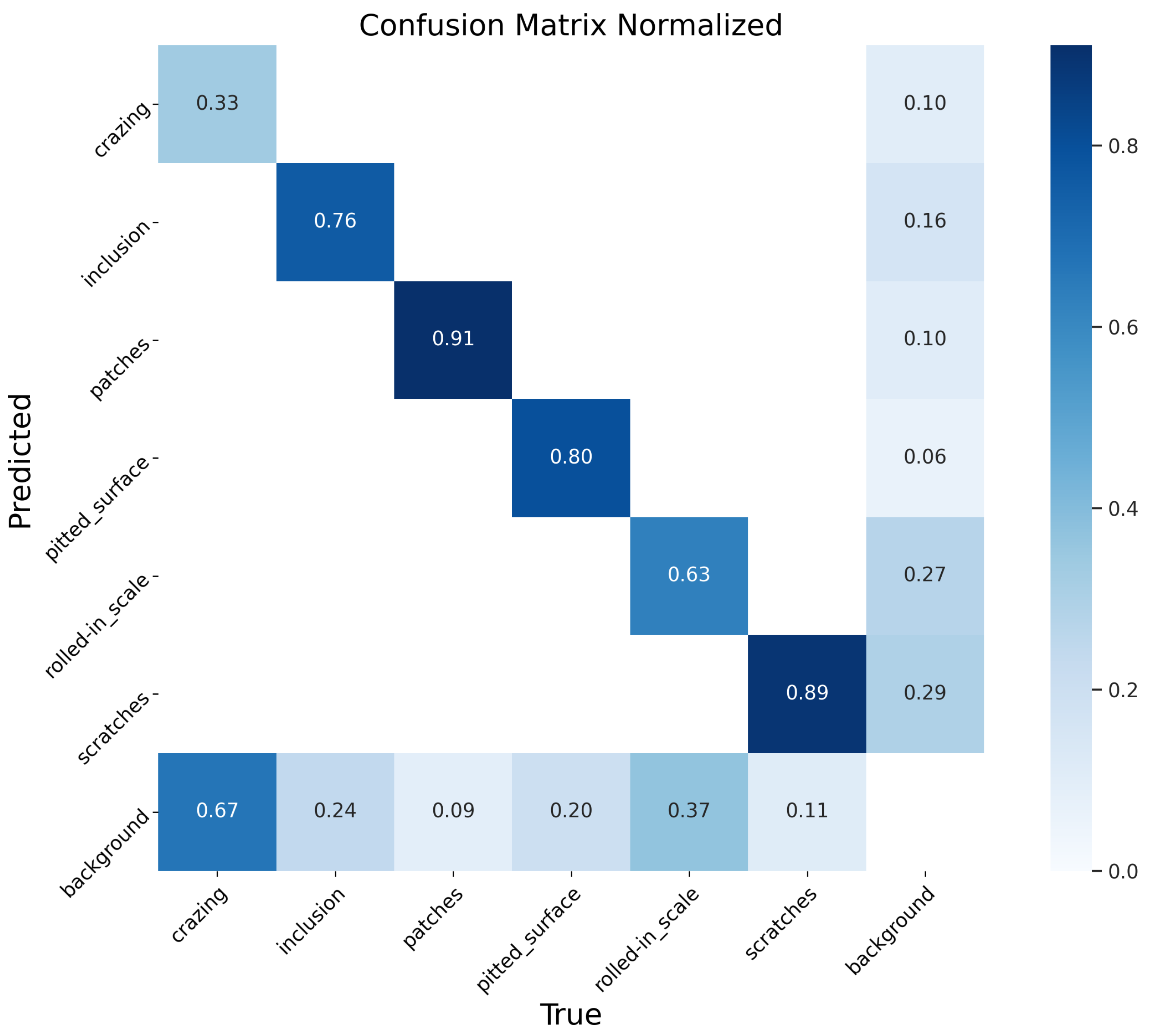

4.2. Model Performance Analysis Across Datasets

4.3. Module Ablation Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Luo, Q.; Fang, X.; Liu, L.; Yang, C.; Sun, Y. Automated visual defect detection for flat steel surface: A survey. IEEE Trans. Instrum. Meas. 2020, 69, 626–644.

2. Mordia, R.; Verma, A.K. Visual techniques for defects detection in steel products: A comparative study. Eng. Fail. Anal. 2022, 134, 106047.

3. Li, Z.; Wei, X.; Hassaballah, M.; Li, Y.; Jiang, X. A deep learning model for steel surface defect detection. Complex Intell. Syst. 2024, 10, 885–897.

4. Song, C.; Chen, J.; Lu, Z.; Li, F.; Liu, Y. Steel Surface Defect Detection via Deformable Convolution and Background Suppression. IEEE Trans. Instrum. Meas. 2023, 72, 1–9.

5. Xia, K.; Lv, Z.; Zhou, C.; Gu, G.; Zhao, Z.; Liu, K.; Li, Z. Mixed Receptive Fields Augmented YOLO with Multi-Path Spatial Pyramid Pooling for Steel Surface Defect Detection. Sensors 2023, 23, 5114.

6. Ultralytics. Ultralytics YOLO. Available online: https://docs.ultralytics.com/zh/ (accessed on 17 August 2025).

7. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2015, arXiv:1506.02640.

8. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

9. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

10. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

11. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. arXiv 2022, arXiv:2207.02696.

12. Varghese, R.; M, S. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

13. Hussain, M. YOLO-v1 to YOLO-v8, the Rise of YOLO and Its Complementary Nature toward Digital Manufacturing and Industrial Defect Detection. Machines 2023, 11, 677.

14. Singlor, T.; Thawatdamrongkit, P.; Techa-Angkoon, P.; Suwannajak, C.; Bootkrajang, J. Globular Cluster Detection in M33 Using Multiple Views Representation Learning. In Proceedings of the International Conference on Intelligent Data Engineering and Automated Learning, Évora, Portugal, 22–24 November 2023; pp. 323–331.

15. Liu, S.; Huang, D.; Wang, Y. Learning Spatial Fusion for Single-Shot Object Detection. arXiv 2019, arXiv:1911.09516.

16. Qiao, Q.; Hu, H.; Ahmad, A.; Wang, K. A Review of Metal Surface Defect Detection Technologies in Industrial Applications. IEEE Access 2025, 13, 48380–48400.

17. Mao, W.L.; Chiu, Y.Y.; Lin, B.H.; Wang, C.C.; Wu, Y.T.; You, C.Y.; Chien, Y.R. Integration of deep learning network and robot arm system for rim defect inspection application. Sensors 2022, 22, 3927.

18. Semitela, Â.; Pereira, M.; Completo, A.; Lau, N.; Santos, J.P. Improving Industrial Quality Control: A Transfer Learning Approach to Surface Defect Detection. Sensors 2025, 25, 527.

19. Saberironaghi, A.; Ren, J.; El-Gindy, M. Defect Detection Methods for Industrial Products Using Deep Learning Techniques: A Review. Algorithms 2023, 16, 95.

20. Sun, X.; Liu, T.; Liu, C.; Dong, W. FCNN: Simple neural networks for complex code tasks. J. King Saud Univ.-Comput. Inf. Sci. 2024, 36, 101970.

21. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

22. Ma, Y.; Yin, J.; Huang, F.; Li, Q. Surface defect inspection of industrial products with object detection deep networks: A systematic review. Artif. Intell. Rev. 2024, 57, 333.

23. Wang, B.; Wang, M.; Yang, J.; Luo, H. YOLOv5-CD: Strip steel surface defect detection method based on coordinate attention and a decoupled head. Meas. Sens. 2023, 30, 100909.

24. Li, J.; Chen, M. DEW-YOLO: An Efficient Algorithm for Steel Surface Defect Detection. Appl. Sci. 2024, 14, 5171.

25. Tian, C.; Xu, Y.; Li, Z.; Zuo, W.; Fei, L.; Liu, H. Attention-guided CNN for image denoising. Neural Netw. 2020, 124, 117–129.

26. Zhang, X.; Liu, C.; Yang, D.; Song, T.; Ye, Y.; Li, K.; Song, Y. RFAConv: Innovating spatial attention and standard convolutional operation. arXiv 2023, arXiv:2304.03198.

27. Qi, Y.; He, Y.; Qi, X.; Zhang, Y.; Yang, G. Dynamic snake convolution based on topological geometric constraints for tubular structure segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 6070–6079.

28. Bao, Y.; Song, K.; Liu, J.; Wang, Y.; Yan, Y.; Yu, H.; Li, X. Triplet-graph reasoning network for few-shot metal generic surface defect segmentation. IEEE Trans. Instrum. Meas. 2021, 70, 1–11.

29. Song, K.; Yan, Y. A noise robust method based on completed local binary patterns for hot-rolled steel strip surface defects. Appl. Surf. Sci. 2013, 285, 858–864.

30. He, Y.; Song, K.; Meng, Q.; Yan, Y. An end-to-end steel surface defect detection approach via fusing multiple hierarchical features. IEEE Trans. Instrum. Meas. 2019, 69, 1493–1504.

31. Lv, X.; Duan, F.; Jiang, J.J.; Fu, X.; Gan, L. Deep metallic surface defect detection: The new benchmark and detection network. Sensors 2020, 20, 1562.

32. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

33. Guo, Z.; Wang, C.; Yang, G.; Huang, Z.; Li, G. MSFT-YOLO: Improved YOLOv5 based on transformer for detecting defects of steel surface. Sensors 2022, 22, 3467.

34. Guo, D.; Zhang, C.; Yang, G.; Xue, T.; Ma, J.; Liu, L.; Ren, J. Siamese-RCNet: Defect Detection Model for Complex Textured Surfaces with Few Annotations. Electronics 2024, 13, 4873.

35. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

36. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

37. Lipton, Z.C. The mythos of model interpretability: In machine learning, the concept of interpretability is both important and slippery. Queue 2018, 16, 31–57.

38. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

39. Liang, Y.; Xu, K.; Zhou, P.; Zhou, D. Automatic defect detection of texture surface with an efficient texture removal network. Adv. Eng. Inform. 2022, 53, 101672.

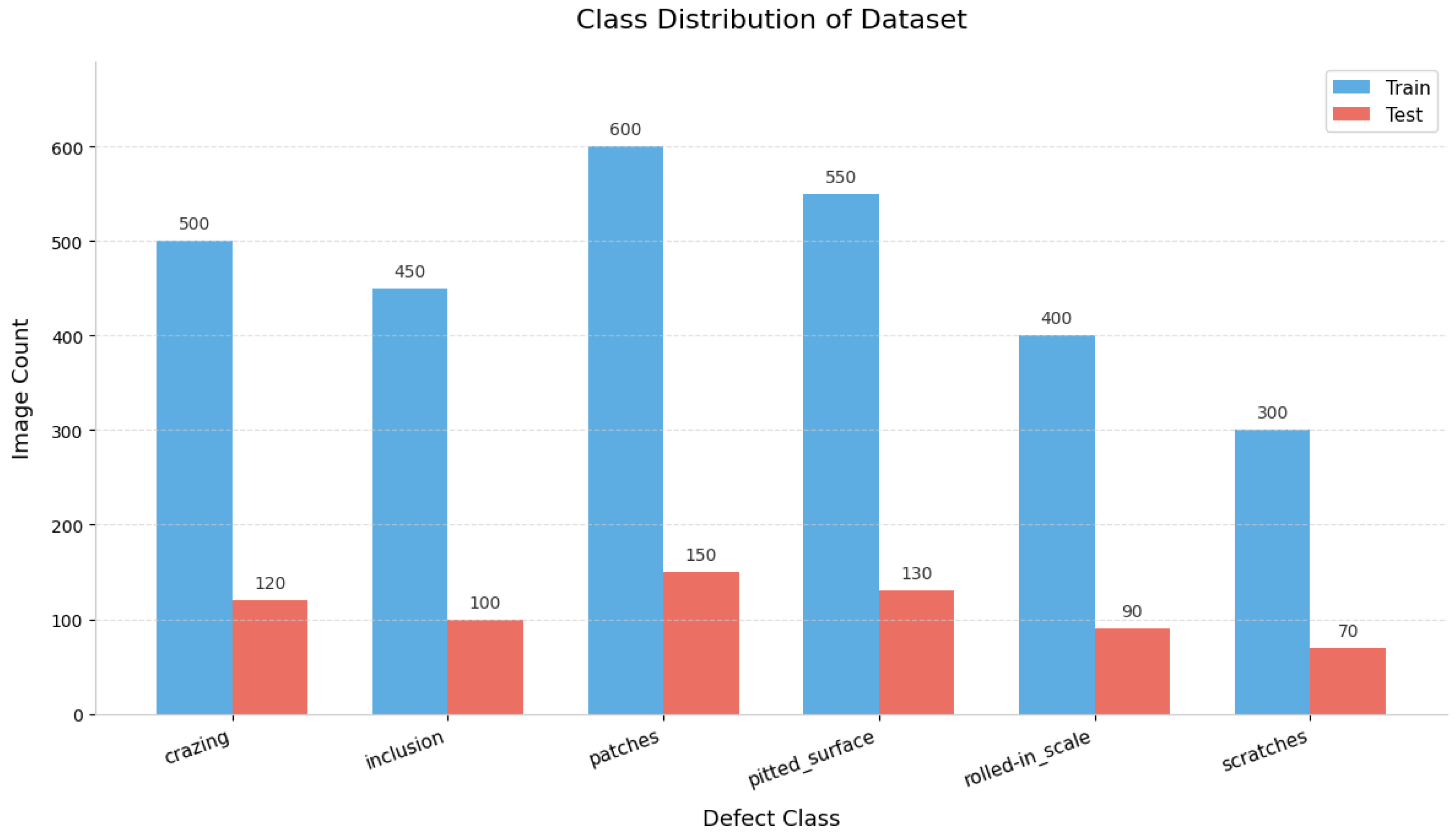

| Attribute | NEU-DET | GC10-DET |
|---|---|---|
| Primary Purpose | Widely recognized benchmark for steel surface defect detection | Utilized for cross-dataset evaluation to substantiate model reliability and robustness |
| Image Type | Grayscale images | High-resolution images |
| Image Resolution | pixels | pixels |
| Number of Images | 1800 | 2300 |
| Defect Categories | 6 common types | 10 typical categories |
| Specific Defect Types | Crazing, Inclusion, Patches, Pitted Surface, Rolled-in Scale, Scratches | Punching, Weld Line, Crescent Gap, Water Spot, Oil Spot, Silk Spot, Inclusion, Rolled Pit, Crease, Waist Folding |

| Parameter | Value/Description |
|---|---|
| Input Image Size | pixels |
| Optimizer | AdamW |
| Initial Learning Rate | 0.01 |
| Weight Decay | 0.0005 |
| Training Epochs | 200 |
| Batch Size | 16 |
| GPU | NVIDIA GeForce RTX 3090 |
| CUDA Version | 12.4 |
| PyTorch Version | 2.3.0 |
| Data Augmentation | Mosaic (merging 4 images into 1) |
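
For readers who want to reproduce a comparable setup, the training parameters above could be expressed as keyword arguments for the Ultralytics `train` call, as in the minimal sketch below. That the authors trained through the Ultralytics API is an assumption here, as are the file names in the usage comment and the mosaic probability of 1.0 (the table only says that Mosaic is used). The input image size is omitted because its value is not given in the table.

```python
# Hyperparameters copied from the training-parameter table. The keys are the
# corresponding Ultralytics `train` argument names.
TRAIN_ARGS = {
    "epochs": 200,           # Training Epochs
    "batch": 16,             # Batch Size
    "optimizer": "AdamW",    # Optimizer
    "lr0": 0.01,             # Initial Learning Rate
    "weight_decay": 0.0005,  # Weight Decay
    "mosaic": 1.0,           # Mosaic augmentation; the probability is an assumption
}


def train(model_cfg: str, data_cfg: str):
    """Train a model with the settings above (requires `pip install ultralytics`)."""
    from ultralytics import YOLO  # imported lazily so the config can be inspected without it

    model = YOLO(model_cfg)
    return model.train(data=data_cfg, **TRAIN_ARGS)


# Hypothetical usage; "neu-det.yaml" is a placeholder dataset description file:
# train("yolov8n.yaml", "neu-det.yaml")
```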

| Method | mAP | CR | IN | PA | PS | RS | SC | GFLOPs |
|---|---|---|---|---|---|---|---|---|
| DDN [30] | 72.6 | 49.8 | 67.2 | 89.3 | 85.2 | 63.1 | 87.2 | - |
| SSD [32] | 65.3 | 48.2 | 66.4 | 87.1 | 69.4 | 54.7 | 59.0 | 281.9 |
| MSFT-YOLO [33] | 75.2 | 56.9 | 80.8 | 93.5 | 82.1 | 52.7 | 83.5 | - |
| Siamese-RCNet [34] | 76.1 | 58.0 | 82.5 | 93.0 | 82.5 | 58.0 | 88.0 | - |
| YOLOv8n | 71.3 | 45.8 | 77.6 | 91.4 | 81.9 | 55.4 | 75.8 | 8.1 |
| YOLOv11n | 71.2 | 49.2 | 81.5 | 92.3 | 81.9 | 56.3 | 66.3 | 6.3 |
| YOLOv8l | 72.3 | 46.7 | 79.1 | 92.4 | 81.9 | 52.7 | 80.9 | 164.8 |
| YOLOv11l | 68.2 | 50.0 | 81.8 | 90.8 | 81.5 | 56.2 | 49.0 | 86.6 |
| YOLO-RSD | 77.6 | 33.2 | 85.5 | 92.5 | 82.7 | 64.8 | 94.6 | 9.8 |
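
The mAP column is, by the usual definition, the mean of the per-class AP values. The short sketch below shows that relationship using the YOLOv8n row of the table above; the two-letter keys are the table's class abbreviations (presumably crazing, inclusion, patches, pitted surface, rolled-in scale, and scratches).

```python
from typing import Dict


def mean_ap(per_class_ap: Dict[str, float]) -> float:
    """Mean average precision as the unweighted mean of per-class AP values."""
    return sum(per_class_ap.values()) / len(per_class_ap)


# Per-class AP (%) for YOLOv8n on NEU-DET, copied from the table above.
yolov8n_ap = {"CR": 45.8, "IN": 77.6, "PA": 91.4, "PS": 81.9, "RS": 55.4, "SC": 75.8}

print(round(mean_ap(yolov8n_ap), 1))  # 71.3
```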

| Method | mAP | PU | WL | CG | WS | OS | SS | IN | RP | CR | WF |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Faster R-CNN [21] | 59.3 | 83.4 | 72.4 | 80.0 | 70.3 | 55.2 | 73.1 | 22.5 | 12.9 | 45.8 | 63.7 |
| RetinaNet [21] | 65.3 | 78.6 | 90.5 | 94.7 | 78.9 | 61.3 | 66.4 | 30.7 | 32.9 | 35.2 | 77.1 |
| RT-DETR [35] | 64.7 | 93.1 | 94.6 | 90.5 | 64.4 | 65.8 | 49.8 | 24.4 | 33.1 | 53.8 | 77.8 |
| YOLOv8n | 62.0 | 98.5 | 92.1 | 90.2 | 83.1 | 61.5 | 63.5 | 27.7 | 9.4 | 24.3 | 69.7 |
| YOLOv8l | 66.3 | 94.0 | 94.3 | 87.4 | 85.4 | 68.4 | 61.4 | 41.6 | 19.3 | 39.6 | 71.9 |
| YOLOv11n | 61.7 | 97.8 | 94.2 | 90.5 | 75.8 | 64.8 | 60.3 | 21.5 | 9.5 | 29.3 | 73.0 |
| YOLOv11l | 64.4 | 95.9 | 95.2 | 91.5 | 82.6 | 69.9 | 60.7 | 36.9 | 20.4 | 21.7 | 69.7 |
| YOLO-RSD | 67.9 | 96.7 | 95.5 | 91.0 | 86.2 | 67.3 | 57.1 | 43.3 | 24.9 | 34.8 | 72.4 |

| Model Configuration | Precision (P) | Recall (R) | mAP@0.5 | mAP@0.5:0.95 |
|---|---|---|---|---|
| YOLOv8n (Baseline) | 0.646 | 0.678 | 0.712 | 0.373 |
| +RFAConv | 0.698 | 0.715 | 0.755 | 0.439 |
| +ADConv | 0.714 | 0.695 | 0.752 | 0.439 |
| +Head_DySnake | 0.681 | 0.722 | 0.752 | 0.449 |
| +RFAConv + ADConv | 0.725 | 0.720 | 0.762 | 0.455 |
| +RFAConv + Head_DySnake | 0.710 | 0.730 | 0.765 | 0.460 |
| +ADConv + Head_DySnake | 0.720 | 0.735 | 0.760 | 0.458 |
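
Precision and recall move in different directions across some of the ablation rows, so a single combined score can make the comparison easier to read. The sketch below derives the F1 score from the precision and recall columns of the ablation table. F1 is not reported in the source; it is computed here only as a reading aid.

```python
from typing import Dict, Tuple


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


# (precision, recall) pairs copied from the ablation table.
ablation: Dict[str, Tuple[float, float]] = {
    "YOLOv8n (Baseline)": (0.646, 0.678),
    "+RFAConv": (0.698, 0.715),
    "+ADConv": (0.714, 0.695),
    "+Head_DySnake": (0.681, 0.722),
    "+RFAConv + ADConv": (0.725, 0.720),
    "+RFAConv + Head_DySnake": (0.710, 0.730),
    "+ADConv + Head_DySnake": (0.720, 0.735),
}

baseline_f1 = f1_score(*ablation["YOLOv8n (Baseline)"])
for name, (p, r) in ablation.items():
    f1 = f1_score(p, r)
    # Show each configuration's F1 and its change relative to the baseline.
    print(f"{name:<26} F1 = {f1:.3f}  (delta vs. baseline: {f1 - baseline_f1:+.3f})")
```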

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pan, H.; Zou, Y.; Song, J.; Xu, H. Steel Defect Detection with YOLO-RSD: Integrating Texture Feature Enhancement and Environmental Noise Exclusion. Electronics 2025, 14, 3302. https://doi.org/10.3390/electronics14163302
