MA-HRL: Multi-Agent Hierarchical Reinforcement Learning for Medical Diagnostic Dialogue Systems

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. Overview

3.2. Hierarchical Reinforcement Learning Architecture

3.3. Hierarchical Disease Classifier

3.4. Reward Design Based on Information Entropy Differential

3.5. Design of the Knowledge Graph for the Dialogue Management Module

4. Experiments

4.1. Dataset

4.2. Experimental Configuration

4.3. Evaluation Metrics

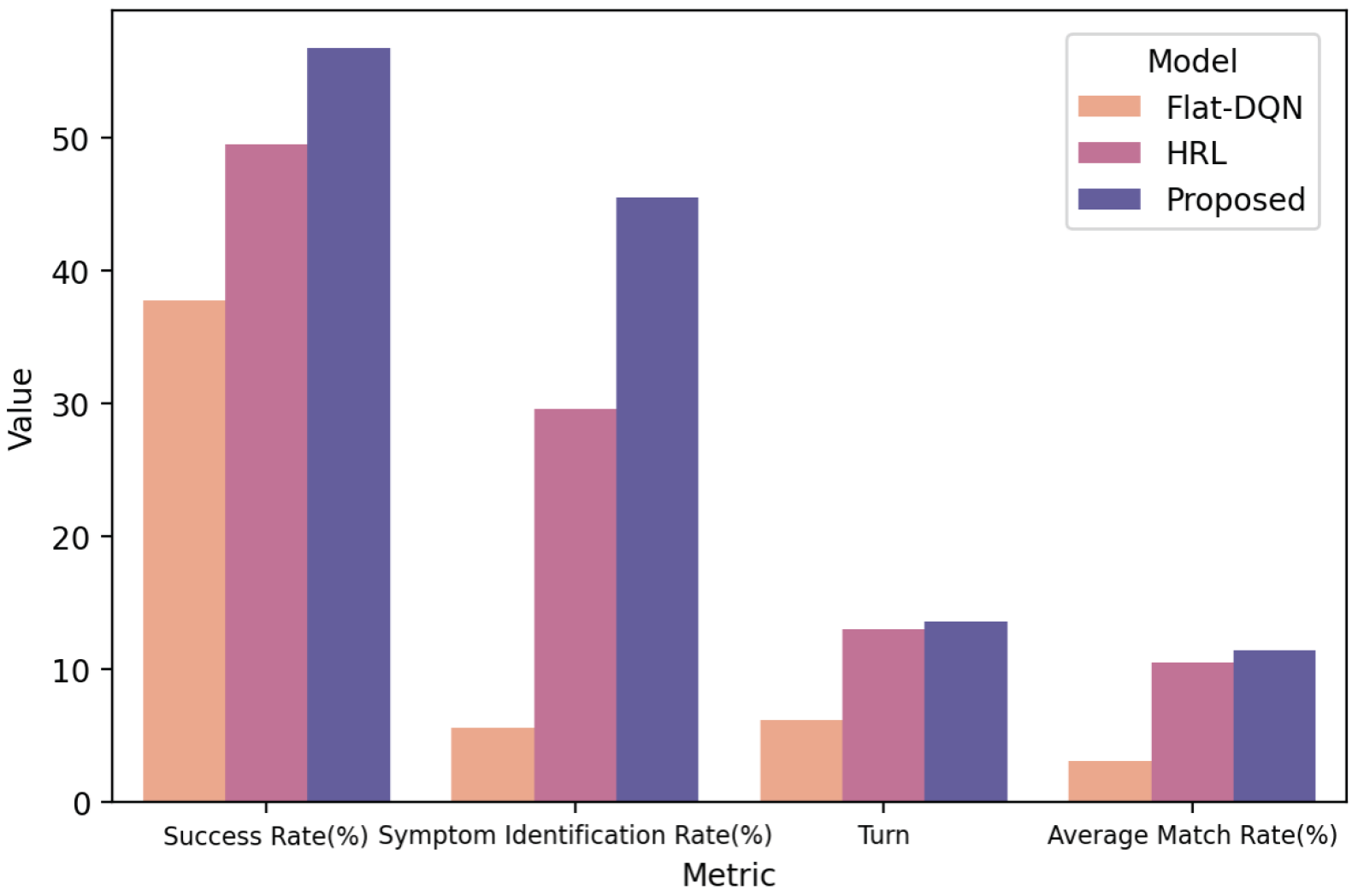

4.4. Performance Analysis

- SVM-ex: This model applies a multi-class Support Vector Machine (SVM) [48] and treats intelligent medical consultation as a supervised classification task: the input is the patient’s explicit symptoms, and the output is the diagnosed disease. Because it never sees the implicit symptoms, its accuracy is generally regarded as the lower bound of diagnostic accuracy for intelligent medical consultation tasks.

- SVM-ex&im: This model is identical to SVM-ex except that its input includes both explicit and implicit symptoms. Because it has access to all of the patient’s symptoms without any inquiry, its accuracy is generally regarded as the upper bound of diagnostic accuracy for intelligent medical consultation tasks.

- Flat-DQN [21]: This model makes all decisions with a single agent whose action space contains every disease and every symptom, so each action either inquires about a symptom or announces a diagnosis. Its neural network structure is the same as that of the agent in this study.

- HRL [31]: HRL is the state-of-the-art baseline; its authors introduced the SD dataset and trained and validated their model on it. HRL adopts a hierarchical reinforcement learning architecture whose main agent and worker agents have the same neural network structure as those in this study. However, its disease classifier is not hierarchical: it is a single three-layer neural network with 256 neurons in the hidden layer. HRL uses neither information entropy nor a knowledge graph.
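The baselines differ mainly in what they observe and what actions they can take. The sketch below, a minimal illustration rather than the baselines' actual code, builds a binary symptom vector from explicit symptoms only (as in SVM-ex) or from explicit plus implicit symptoms (as in SVM-ex&im), and enumerates a Flat-DQN-style action space with one inquiry action per symptom and one diagnosis action per disease. The helper names, the toy symptom names, and the 1/0 encoding are hypothetical; only the sizes 266 and 90 come from the dataset statistics.

```python
from typing import Dict, List, Sequence


def encode_symptoms(
    vocab: Sequence[str],
    explicit: Dict[str, bool],
    implicit: Dict[str, bool],
    use_implicit: bool,
) -> List[int]:
    """Hypothetical binary encoding: 1 = symptom present, 0 = absent or unknown."""
    observed = dict(explicit)
    if use_implicit:  # SVM-ex&im additionally sees the implicit symptoms
        observed.update(implicit)
    return [1 if observed.get(name, False) else 0 for name in vocab]


def flat_action_space(symptoms: Sequence[str], diseases: Sequence[str]) -> List[str]:
    """Flat-DQN style: one 'request' action per symptom plus one 'inform' action per disease."""
    return [f"request:{s}" for s in symptoms] + [f"inform:{d}" for d in diseases]


vocab = ["cough", "fever", "headache", "rash"]
goal_explicit = {"cough": True}
goal_implicit = {"fever": True, "rash": False}

x_ex = encode_symptoms(vocab, goal_explicit, goal_implicit, use_implicit=False)
x_ex_im = encode_symptoms(vocab, goal_explicit, goal_implicit, use_implicit=True)
print(x_ex)     # [1, 0, 0, 0]
print(x_ex_im)  # [1, 1, 0, 0]

# With 266 symptoms and 90 diseases, the flat action space has 356 actions.
actions = flat_action_space([f"s{i}" for i in range(266)], [f"d{j}" for j in range(90)])
print(len(actions))  # 356
```

The classifier itself (a multi-class SVM in the two SVM baselines) would be trained on such vectors; it is omitted to keep the sketch dependency-free.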

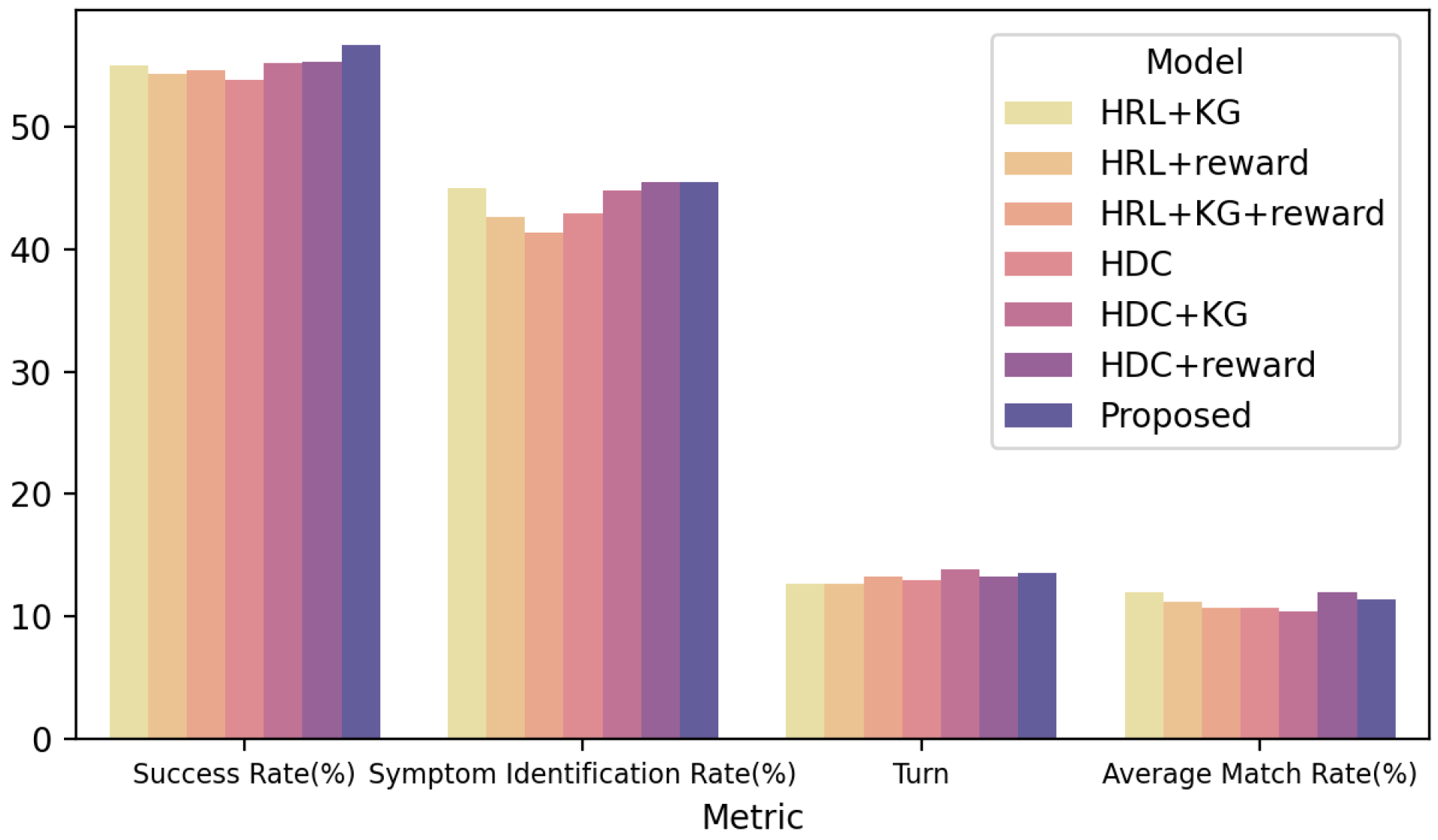

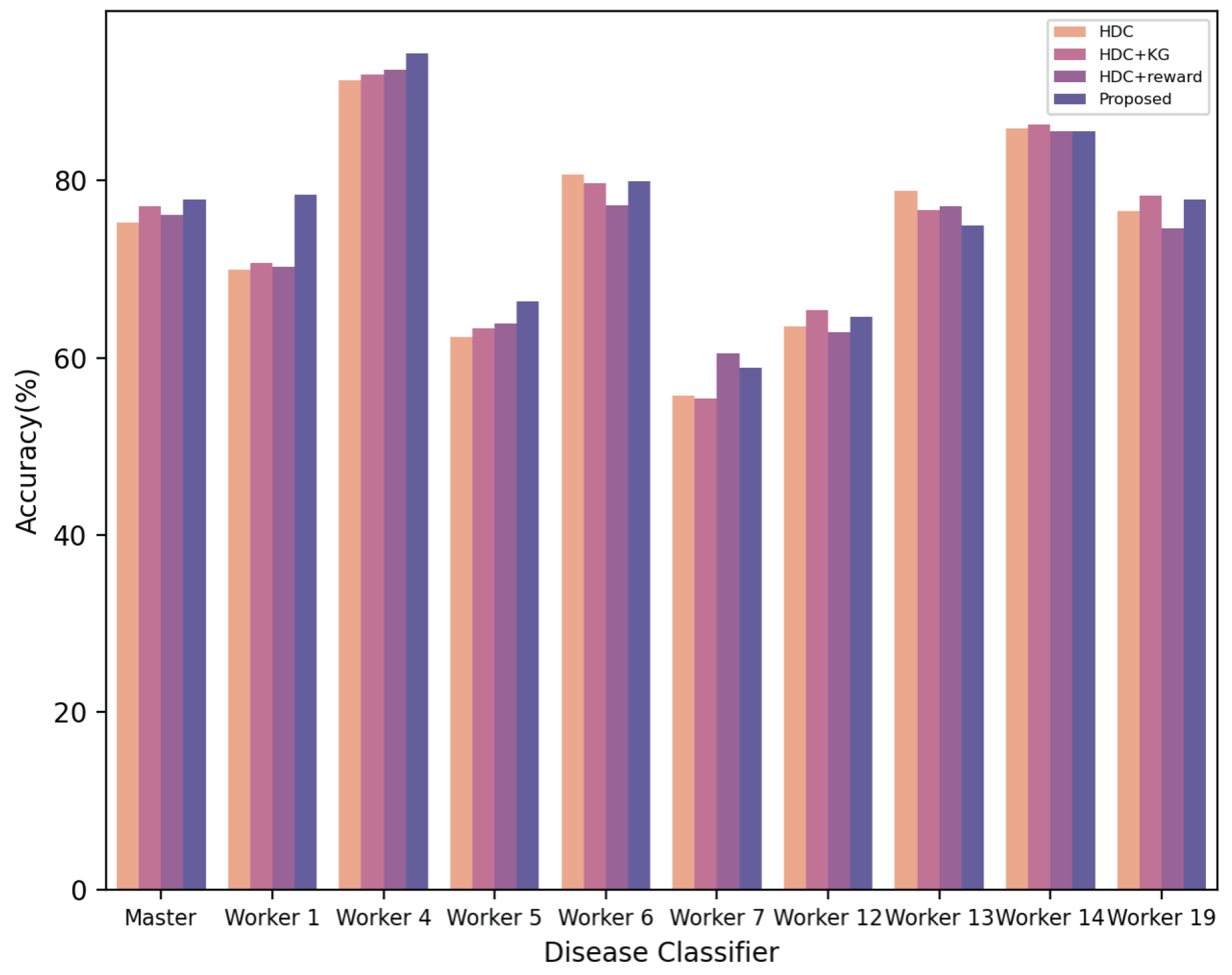

4.5. Ablation Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wang, X.; Wang, S.; Liang, X.; Zhao, D.; Huang, J.; Xu, X.; Dai, B.; Miao, Q. Deep reinforcement learning: A survey. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 5064–5078.

2. Moerland, T.M.; Broekens, J.; Plaat, A.; Jonker, C.M. Model-based reinforcement learning: A survey. Found. Trends® Mach. Learn. 2023, 16, 1–118.

3. Matsuo, Y.; LeCun, Y.; Sahani, M.; Precup, D.; Silver, D.; Sugiyama, M.; Uchibe, E.; Morimoto, J. Deep learning, reinforcement learning, and world models. Neural Netw. 2022, 152, 267–275.

4. Vrba, P.; Mařík, V.; Siano, P.; Leitão, P.; Zhabelova, G.; Vyatkin, V.; Strasser, T. A review of agent and service-oriented concepts applied to intelligent energy systems. IEEE Trans. Ind. Inform. 2014, 10, 1890–1903.

5. Marinakis, V.; Doukas, H.; Tsapelas, J.; Mouzakitis, S.; Sicilia, Á.; Madrazo, L.; Sgouridis, S. From big data to smart energy services: An application for intelligent energy management. Future Gener. Comput. Syst. 2020, 110, 572–586.

6. Sutton, R.S.; Barto, A.G. Reinforcement learning. J. Cogn. Neurosci. 1999, 11, 126–134.

7. Kaelbling, L.P.; Littman, M.L.; Moore, A.W. Reinforcement learning: A survey. J. Artif. Intell. Res. 1996, 4, 237–285.

8. Calinescu, R.; Grunske, L.; Kwiatkowska, M.; Mirandola, R.; Tamburrelli, G. Dynamic QoS management and optimization in service-based systems. IEEE Trans. Softw. Eng. 2010, 37, 387–409.

9. Pinson, P.; Madsen, H. Benefits and challenges of electrical demand response: A critical review. Renew. Sustain. Energy Rev. 2014, 39, 686–699.

10. Li, S.E. Deep reinforcement learning. In Reinforcement Learning for Sequential Decision and Optimal Control; Springer Nature: Singapore, 2023; pp. 365–402.

11. Desolda, G.; Ardito, C.; Matera, M. Empowering end users to customize their smart environments: Model, composition paradigms, and domain-specific tools. ACM Trans. Comput.-Hum. Interact. (TOCHI) 2017, 24, 1–52.

12. Aiello, M. A challenge for the next 50 years of automated service composition. In Proceedings of the International Conference on Service-Oriented Computing, Seville, Spain, 29 November–2 December 2022; Springer Nature: Cham, Switzerland, 2022; pp. 635–643.

13. Levene, M.; Poulovassilis, A.; Abiteboul, S.; Benjelloun, O.; Manolescu, I.; Milo, T.; Weber, R. Active XML: A data-centric perspective on web services. In Web Dynamics: Adapting to Change in Content, Size, Topology and Use; Springer: Berlin/Heidelberg, Germany, 2004; pp. 275–299.

14. Cuayáhuitl, H.; Lee, D.; Ryu, S.; Cho, Y.; Choi, S.; Indurthi, S.; Yu, S.; Choi, H.; Hwang, I.; Kim, J. Ensemble-based deep reinforcement learning for chatbots. Neurocomputing 2019, 366, 118–130.

15. Mo, K.; Zhang, Y.; Li, S.; Li, J.; Yang, Q. Personalizing a dialogue system with transfer reinforcement learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

16. Chen, Z.; Chen, L.; Zhou, X.; Yu, K. Deep reinforcement learning for on-line dialogue state tracking. In Proceedings of the National Conference on Man-Machine Speech Communication, Hefei, China, 15–18 December 2022; Springer Nature: Singapore, 2022; pp. 278–292.

17. Kwan, W.C.; Wang, H.R.; Wang, H.M.; Wong, K.F. A survey on recent advances and challenges in reinforcement learning methods for task-oriented dialogue policy learning. Mach. Intell. Res. 2023, 20, 318–334.

18. Zhou, H.; Young, T.; Huang, M.; Zhao, H.; Xu, J.; Zhu, X. Commonsense knowledge aware conversation generation with graph attention. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; Volume 18, pp. 4623–4629.

19. Moon, S.; Shah, P.; Kumar, A.; Subba, R. OpenDialKG: Explainable conversational reasoning with attention-based walks over knowledge graphs. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 845–854.

20. Kao, H.C.; Tang, K.F.; Chang, E. Context-aware symptom checking for disease diagnosis using hierarchical reinforcement learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

21. Wei, Z.; Liu, Q.; Peng, B.; Tou, H.; Chen, T.; Huang, X.; Wong, K.-F.; Dai, X. Task-oriented dialogue system for automatic diagnosis. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Volume 2: Short Papers, pp. 201–207.

22. Xu, L.; Zhou, Q.; Gong, K.; Liang, X.; Tang, J.; Lin, L. End-to-end knowledge-routed relational dialogue system for automatic diagnosis. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 7346–7353.

23. Milanovic, N.; Malek, M. Current solutions for web service composition. IEEE Internet Comput. 2004, 8, 51–59.

24. Ardagna, D.; Pernici, B. Adaptive service composition in flexible processes. IEEE Trans. Softw. Eng. 2007, 33, 369–384.

25. Rao, J.; Su, X. A survey of automated web service composition methods. In Proceedings of the International Workshop on Semantic Web Services and Web Process Composition, San Diego, CA, USA, 6 July 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 43–54.

26. Kona, S.; Bansal, A.; Blake, M.B.; Gupta, G. Generalized semantics-based service composition. In Proceedings of the 2008 IEEE International Conference on Web Services, Beijing, China, 23–26 September 2008; pp. 219–227.

27. Weise, T.; Blake, M.B.; Bleul, S. Semantic web service composition: The web service challenge perspective. In Web Services Foundations; Springer: New York, NY, USA, 2014; pp. 161–187.

28. Weise, T.; Bleul, S.; Geihs, K. Web Service Composition Systems for the Web Service Challenge – A Detailed Review; University of Kassel: Kassel, Germany, 2007.

29. Grattafiori, A.; Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Vaughan, A.; et al. The Llama 3 herd of models. arXiv 2024, arXiv:2407.21783.

30. Dankwa, S.; Zheng, W. Twin-delayed DDPG: A deep reinforcement learning technique to model a continuous movement of an intelligent robot agent. In Proceedings of the 3rd International Conference on Vision, Image and Signal Processing, Singapore, 27–29 July 2019; pp. 1–5.

31. Liao, K.; Zhong, C.; Chen, W.; Liu, Q.; Wei, Z.; Peng, B.; Huang, X. Task-oriented dialogue system for automatic disease diagnosis via hierarchical reinforcement learning. In Proceedings of the Tenth International Conference on Learning Representations (ICLR 2022), Virtual, 25 April 2022.

32. Tang, K.F.; Kao, H.C.; Chou, C.N.; Chang, E.Y. Inquire and diagnose: Neural symptom checking ensemble using deep reinforcement learning. In Proceedings of the NIPS Workshop on Deep Reinforcement Learning, Barcelona, Spain, 5–10 December 2016.

33. Nesterov, A.; Ibragimov, B.; Umerenkov, D.; Shelmanov, A.; Zubkova, G.; Kokh, V. NeuralSympCheck: A symptom checking and disease diagnostic neural model with logic regularization. In Proceedings of the International Conference on Artificial Intelligence in Medicine, Halifax, NS, Canada, 14–17 June 2022; Springer International Publishing: Cham, Switzerland, 2022; pp. 76–87.

34. Liu, W.; Tang, J.; Liang, X.; Cai, Q. Heterogeneous graph reasoning for knowledge-grounded medical dialogue system. Neurocomputing 2021, 442, 260–268.

35. Lin, S.; Zhou, P.; Liang, X.; Tang, J.; Zhao, R.; Chen, Z.; Lin, L. Graph-evolving meta-learning for low-resource medical dialogue generation. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 13362–13370.

36. Zhao, X.; Chen, L.; Chen, H. A weighted heterogeneous graph-based dialog system. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 5212–5217.

37. Zhang, H.; Liu, M.; Gao, Z.; Lei, X.; Wang, Y.; Nie, L. Multimodal dialog system: Relational graph-based context-aware question understanding. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual, 20–24 October 2021; pp. 695–703.

38. Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

39. Chen, W.; Feng, F.; Wang, Q.; He, X.; Song, C.; Ling, G.; Zhang, Y. CatGCN: Graph convolutional networks with categorical node features. IEEE Trans. Knowl. Data Eng. 2021, 35, 3500–3511.

40. Jin, W.; Derr, T.; Wang, Y.; Ma, Y.; Liu, Z.; Tang, J. Node similarity preserving graph convolutional networks. In Proceedings of the 14th ACM International Conference on Web Search and Data Mining, Virtual, 8–12 March 2021; pp. 148–156.

41. Liu, W.; Cheng, Y.; Wang, H.; Tang, J.; Liu, Y.; Zhao, R.; Li, W.; Zheng, Y.; Liang, X. “My nose is running.” “Are you also coughing?”: Building A Medical Diagnosis Agent with Interpretable Inquiry Logics. arXiv 2022, arXiv:2204.13953.

42. Fansi Tchango, A.; Goel, R.; Martel, J.; Wen, Z.; Marceau Caron, G.; Ghosn, J. Towards trustworthy automatic diagnosis systems by emulating doctors’ reasoning with deep reinforcement learning. Adv. Neural Inf. Process. Syst. 2022, 35, 24502–24515.

43. Lin, J.; Xu, L.; Chen, Z.; Lin, L. Towards a reliable and robust dialogue system for medical automatic diagnosis. In Proceedings of the International Conference on Learning Representations (ICLR 2021), Vienna, Austria, 4 May 2021.

44. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

45. Al-Ars, Z.; Agba, O.; Guo, Z.; Boerkamp, C.; Jaber, Z.; Jaber, T. NLICE: Synthetic Medical Record Generation for Effective Primary Healthcare Differential Diagnosis. In Proceedings of the 2023 IEEE 23rd International Conference on Bioinformatics and Bioengineering (BIBE), Dayton, OH, USA, 4–6 December 2023; pp. 397–402.

46. Cartwright, D.J. ICD-9-CM to ICD-10-CM codes: What? why? how? Adv. Wound Care 2013, 2, 588–592.

47. Sievert, D.M.; Boulton, M.L.; Stoltman, G.; Johnson, D.; Stobierski, M.G.; Downes, F.P.; Somsel, P.A.; Rudrik, J.T.; Brown, W.; Hafeez, W.; et al. From the centers for disease control and prevention. JAMA 2002, 288, 824.

48. Wang, Z.; Xue, X. Multi-class support vector machine. Support Vector Mach. Appl. 2014, 23–48.

| Vector | Meaning |
|---|---|
|  | No inquiry about this symptom |
|  | Patient responds “Uncertain” |
|  | Patient responds “No” |
|  | Patient responds “Yes” |
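The concrete vectors in the table above were lost in extraction. Purely as an assumption, the sketch below gives each of the four answer states its own position in a 4-dimensional one-hot code and concatenates the per-symptom codes into a dialogue-state vector; the ordering of the states, the enum, and the function names are hypothetical and may differ from the paper's encoding.

```python
from enum import Enum
from typing import Dict, List, Sequence


class SymptomStatus(Enum):
    # Hypothetical one-hot positions; the paper's actual vectors are not recoverable here.
    NOT_ASKED = 0
    UNCERTAIN = 1
    NO = 2
    YES = 3


def one_hot(status: SymptomStatus) -> List[int]:
    """Return a 4-dim one-hot vector with a 1 at the position of `status`."""
    vec = [0] * len(SymptomStatus)
    vec[status.value] = 1
    return vec


def dialogue_state(vocab: Sequence[str], answers: Dict[str, SymptomStatus]) -> List[int]:
    """Concatenate one 4-dim code per symptom; symptoms not yet asked default to NOT_ASKED."""
    state: List[int] = []
    for name in vocab:
        state.extend(one_hot(answers.get(name, SymptomStatus.NOT_ASKED)))
    return state


vocab = ["cough", "fever", "rash"]
state = dialogue_state(vocab, {"cough": SymptomStatus.YES, "rash": SymptomStatus.UNCERTAIN})
print(state)  # [0, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0, 0]
```

Under this assumed encoding, the full state for the 266 symptoms in the dataset would have 4 × 266 = 1064 entries.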

| Category | Quantity |
|---|---|
| Patient goals | 30,000 |
| Diseases | 90 |
| Disease groups | 9 |
| Symptoms | 266 |
| Average prominent symptoms | 2.6 |

| Disease Group | Disease Group Name | Number of Disease Categories | Number of Patient Goals | Number of Associated Symptoms | Number of Unique Symptoms |
|---|---|---|---|---|---|
| 1 | Infectious diseases and parasitic diseases | 10 | 3371 | 65 | 9 |
| 4 | Endocrine, nutritional and metabolic diseases | 10 | 3348 | 89 | 16 |
| 5 | Psychiatric and behavioral disorders | 10 | 3355 | 68 | 10 |
| 6 | Neurological disorders | 10 | 3380 | 58 | 7 |
| 7 | Ocular and adnexal diseases | 10 | 3286 | 46 | 10 |
| 12 | Skin and subcutaneous tissue diseases | 10 | 3303 | 51 | 18 |
| 13 | Musculoskeletal system and connective tissue diseases | 10 | 3249 | 62 | 24 |
| 14 | Diseases of the genitourinary system | 10 | 3274 | 69 | 26 |
| 19 | Injuries, poisonings, and certain other external causes | 10 | 3389 | 73 | 6 |
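The per-group table can be cross-checked against the summary statistics with a few lines of arithmetic. It lists nine groups of ten diseases each, which accounts for the 90 diseases, and its patient-goal counts sum to 29,955, consistent with the rounded figure of 30,000 in the summary table.

```python
# (group id, number of diseases, number of patient goals) copied from the disease-group table.
GROUPS = [
    (1, 10, 3371), (4, 10, 3348), (5, 10, 3355), (6, 10, 3380), (7, 10, 3286),
    (12, 10, 3303), (13, 10, 3249), (14, 10, 3274), (19, 10, 3389),
]

num_groups = len(GROUPS)
num_diseases = sum(diseases for _, diseases, _ in GROUPS)
num_goals = sum(goals for _, _, goals in GROUPS)
print(num_groups, num_diseases, num_goals)  # 9 90 29955
```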

| Hyperparameter | Value |
|---|---|
| Training epochs | 5000 |
| Number of dialogues per epoch | 100 |
| Batch size | 100 |
| Learning rate | 0.005 |
|  | 0.1 |
| Maximum dialogue turns | 28 |
| Maximum dialogue turns for subtask N | 5 |
| Discount factor | 0.95 |
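The hyperparameters can be collected into a single configuration object. The sketch below does so with a Python dataclass whose field names are hypothetical, leaves out the 0.1 row because its name did not survive extraction, and, assuming the agents optimize the standard discounted return, shows how the discount factor of 0.95 enters it: a reward received at the 28th (last allowed) turn is weighted by 0.95^27 ≈ 0.25.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class TrainingConfig:
    # Values from the hyperparameter table; the field names are hypothetical.
    epochs: int = 5000
    dialogues_per_epoch: int = 100
    batch_size: int = 100
    learning_rate: float = 0.005
    max_turns: int = 28
    max_subtask_turns: int = 5
    gamma: float = 0.95  # discount factor
    # The table row whose name was lost (value 0.1) is deliberately not modeled here.


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Standard discounted return G_0 = sum_t gamma**t * r_t, computed by a backward pass."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


cfg = TrainingConfig()
print(cfg.epochs * cfg.dialogues_per_epoch)  # 500000 dialogues over the whole run
# Weight of a reward received at the last allowed turn (turn index max_turns - 1).
print(round(cfg.gamma ** (cfg.max_turns - 1), 4))  # 0.2503
```

A frozen dataclass keeps the configuration immutable during training, which makes accidental in-place changes to hyperparameters raise an error instead of silently altering a run.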

| Model | Diagnostic Accuracy (%) | Number of Dialogue Turns | Symptom Matching Rate (%) | Symptom Recognition Rate (%) |
|---|---|---|---|---|
| SVM-ex | 32.2 | N/A | N/A | N/A |
| SVM-ex&im | 73.1 | N/A | N/A | N/A |
| Flat-DQN | 37.7 | 6.2 | 3.1 | 5.6 |
| HRL | 49.5 | 12.95 | 10.49 | 29.56 |
| MA-HRL (Ours) | 56.7 | 13.55 | 11.4 | 45.5 |
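The headline comparison can be restated as absolute and relative gains. The snippet below recomputes them from the diagnostic accuracies in the table: MA-HRL improves on HRL by 7.2 percentage points (about 14.5% relative) and on Flat-DQN by 19.0 points. Treating SVM-ex and SVM-ex&im as the lower and upper bounds described in Section 4.4, MA-HRL closes roughly 60% of the gap between them; this reading is an illustration, not a metric reported by the authors.

```python
from typing import Tuple

# Diagnostic accuracy (%) from the main results table.
ACCURACY = {
    "SVM-ex": 32.2,
    "SVM-ex&im": 73.1,
    "Flat-DQN": 37.7,
    "HRL": 49.5,
    "MA-HRL": 56.7,
}


def gain(model: str, baseline: str) -> Tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in %)."""
    a, b = ACCURACY[model], ACCURACY[baseline]
    return round(a - b, 1), round(100 * (a - b) / b, 1)


print(gain("MA-HRL", "HRL"))       # (7.2, 14.5)
print(gain("MA-HRL", "Flat-DQN"))  # (19.0, 50.4)

# Share of the SVM-ex (lower bound) to SVM-ex&im (upper bound) gap closed by MA-HRL.
lower, upper = ACCURACY["SVM-ex"], ACCURACY["SVM-ex&im"]
print(round(100 * (ACCURACY["MA-HRL"] - lower) / (upper - lower), 1))  # 59.9
```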

| Model | Diagnostic Accuracy (%) | Number of Dialogue Turns | Symptom Matching Rate (%) | Symptom Recognition Rate (%) |
|---|---|---|---|---|
| HRL + KG | 55.0 | 12.64 | 12.0 | 45.0 |
| HRL + reward | 54.4 | 12.62 | 11.2 | 42.6 |
| HDC | 53.9 | 12.94 | 10.7 | 42.9 |
| HRL + KG + reward | 54.6 | 13.20 | 10.7 | 41.4 |
| HDC + KG | 55.2 | 13.84 | 10.4 | 44.8 |
| HDC + reward | 55.3 | 13.23 | 12.0 | 45.5 |
| MA-HRL (Ours) | 56.7 | 13.55 | 11.4 | 45.5 |
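The ablation results can be summarized as each variant's gain over HRL (49.5%) and its remaining gap to the full model (56.7%). The snippet below computes both from the table. Every variant improves on HRL by 4.4 to 5.8 points and stays 1.4 to 2.8 points below MA-HRL; the gains are not simply additive, since HRL + KG + reward (54.6%) scores slightly below HRL + KG (55.0%).

```python
HRL_BASELINE = 49.5  # HRL diagnostic accuracy (%) from the main results table
FULL_MODEL = 56.7    # MA-HRL (Ours)

# Diagnostic accuracy (%) of each ablation variant, copied from the table.
ABLATION = {
    "HRL + KG": 55.0,
    "HRL + reward": 54.4,
    "HDC": 53.9,
    "HRL + KG + reward": 54.6,
    "HDC + KG": 55.2,
    "HDC + reward": 55.3,
}

# (gain over HRL, remaining gap to MA-HRL), both in percentage points.
deltas = {
    name: (round(acc - HRL_BASELINE, 1), round(FULL_MODEL - acc, 1))
    for name, acc in ABLATION.items()
}

# Print variants from largest to smallest gain over HRL.
for name, (gain, gap) in sorted(deltas.items(), key=lambda kv: kv[1][0], reverse=True):
    print(f"{name:<18} +{gain:.1f} over HRL, {gap:.1f} below MA-HRL")
```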

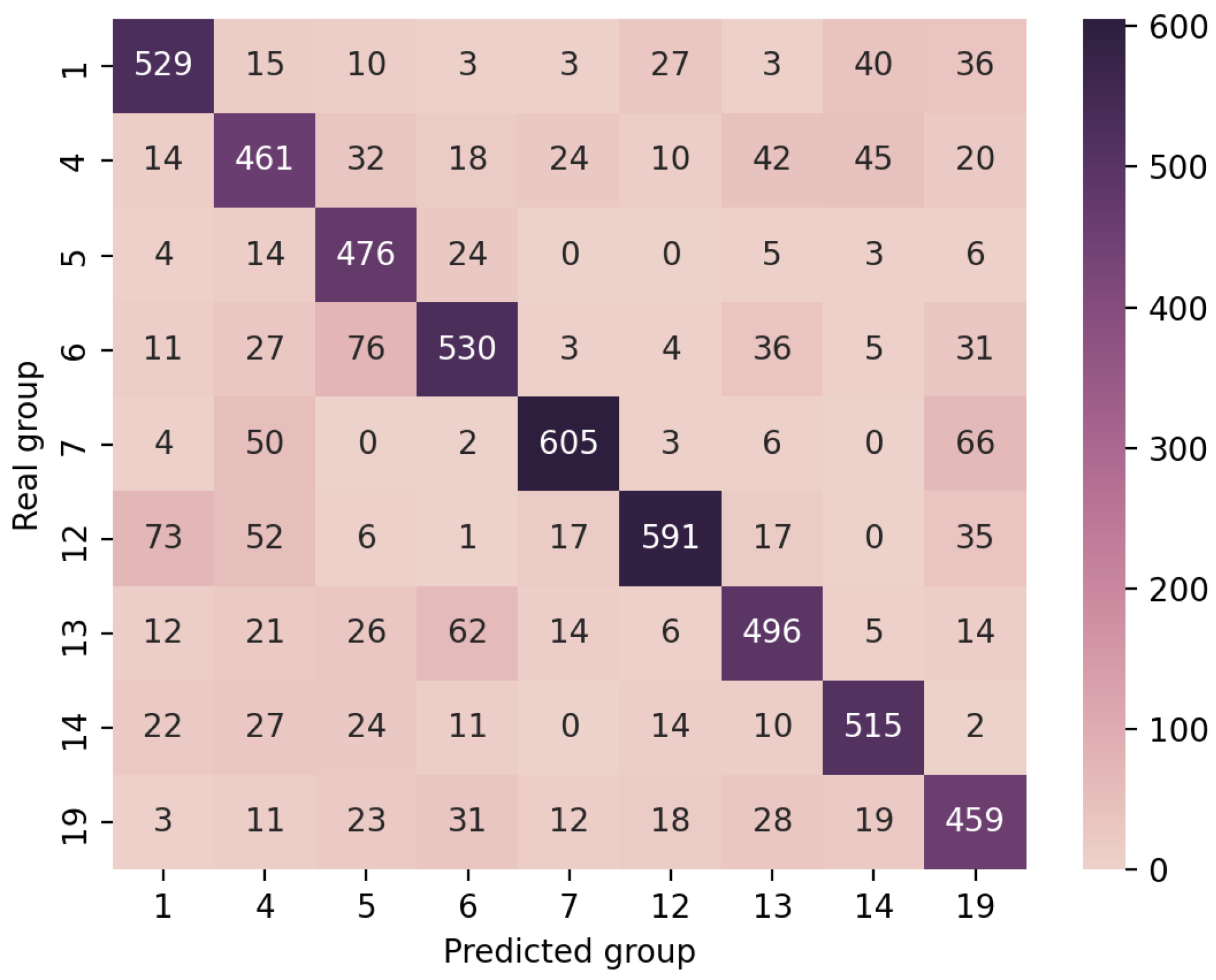

| Disease Classifier | HDC | HDC + KG | HDC + Reward | MA-HRL (Ours) |
|---|---|---|---|---|
| Primary disease classifier | 75.3% | 77.1% | 76.1% | 77.9% |
| Secondary disease classifier 1 | 69.94% | 70.68% | 70.24% | 78.42% |
| Secondary disease classifier 4 | 91.3% | 92.04% | 92.48% | 94.4% |
| Secondary disease classifier 5 | 62.36% | 63.3% | 63.9% | 66.42% |
| Secondary disease classifier 6 | 80.65% | 79.77% | 77.27% | 79.91% |
| Secondary disease classifier 7 | 55.75% | 55.46% | 60.47% | 58.85% |
| Secondary disease classifier 12 | 63.6% | 65.38% | 62.85% | 64.64% |
| Secondary disease classifier 13 | 78.85% | 76.67% | 77.14% | 74.96% |
| Secondary disease classifier 14 | 85.92% | 86.39% | 85.6% | 85.6% |
| Secondary disease classifier 19 | 76.53% | 78.33% | 74.59% | 77.88% |
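The hierarchical disease classifier routes a prediction through two stages: a primary classifier picks a disease group, and the secondary classifier for that group picks the disease. The sketch below shows this routing with toy rule-based classifiers standing in for the trained networks; the function names, group labels, and disease labels are hypothetical. It also gives a back-of-envelope estimate under simplifying assumptions (a wrong group always yields a wrong disease, the two stages err independently, and groups are weighted equally since they are of similar size): 77.9% × the mean MA-HRL secondary accuracy (about 75.7%) ≈ 59%, in the same range as the 56.7% diagnostic accuracy in the main results table. The assumptions are simplifications, so the two figures need not coincide.

```python
from typing import Callable, Dict, Sequence, Tuple

# A classifier maps a dialogue-state vector to a label (a trained network in practice).
Classifier = Callable[[Sequence[int]], str]


def hierarchical_predict(
    x: Sequence[int],
    primary: Classifier,
    secondary: Dict[str, Classifier],
) -> Tuple[str, str]:
    """Stage 1 picks a disease group; stage 2 picks a disease within that group."""
    group = primary(x)
    disease = secondary[group](x)
    return group, disease


# Hypothetical toy classifiers standing in for the trained networks.
def toy_primary(x: Sequence[int]) -> str:
    return "1" if x[0] else "4"


def toy_secondary_1(x: Sequence[int]) -> str:
    return "disease_1a" if x[1] else "disease_1b"


def toy_secondary_4(x: Sequence[int]) -> str:
    return "disease_4a"


toy_secondary: Dict[str, Classifier] = {"1": toy_secondary_1, "4": toy_secondary_4}
print(hierarchical_predict([1, 0, 1], toy_primary, toy_secondary))  # ('1', 'disease_1b')

# Back-of-envelope end-to-end estimate from the MA-HRL column (all values in %).
PRIMARY_ACC = 77.9
SECONDARY_ACC = [78.42, 94.4, 66.42, 79.91, 58.85, 64.64, 74.96, 85.6, 77.88]
mean_secondary = sum(SECONDARY_ACC) / len(SECONDARY_ACC)
# Assumes independent stage errors, equal group weights, and no recovery from a wrong group.
estimate = PRIMARY_ACC * mean_secondary / 100
print(round(mean_secondary, 2), round(estimate, 1))  # 75.68 59.0
```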

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liao, X.; Qin, Y.; Fan, Z.; Yu, X.; Yang, J.; Shi, R.; Wu, W. MA-HRL: Multi-Agent Hierarchical Reinforcement Learning for Medical Diagnostic Dialogue Systems. Electronics 2025, 14, 3001. https://doi.org/10.3390/electronics14153001


Chicago/Turabian Style: Liao, Xingchuang, Yuchen Qin, Zhimin Fan, Xiaoming Yu, Jingbo Yang, Rongye Shi, and Wenjun Wu. 2025. "MA-HRL: Multi-Agent Hierarchical Reinforcement Learning for Medical Diagnostic Dialogue Systems" Electronics 14, no. 15: 3001. https://doi.org/10.3390/electronics14153001
