Abstract

Accurately detecting lane lines is a hot topic in computer vision. How to effectively utilize the relationship between lane features for detection is still an open question. In this paper, we propose a novel lane detection model based on convolutional neural network (CNN), namely, the BSCNNLaneNet (Bidirectional Spatial CNN Lane Detection Network). The proposed model is based on the spatial CNN method and incorporates a bidirectional recurrent neural network (BRNN) block to learn the spatial relationships between slice features. Additionally, a convolutional block attention mechanism is introduced to gain global features, which enhance the global connection between slice features in different directions. We conduct extensive experiments on the TuSimple dataset. The results demonstrate that the proposed method surpasses the original spatial CNN method, achieving an increase in accuracy from 96.53% to 96.86%.

1. Introduction

Lane detection has gained significant attention in the research and industrial communities due to its wide range of real-world applications, such as autonomous driving, intelligent transportation, and robot navigation systems. As a critical component of autonomous driving, lane detection has become a key issue in intelligent transportation. To accurately and efficiently detect lane information, researchers have proposed numerous methods [1,2,3,4,5]. One popular approach is the spatial CNN [6], which introduces slice-by-slice convolutional operations to capture spatial relationship information between the rows and columns of feature maps. In this way, slender structural targets, such as lane lines, can be identified more effectively [7,8].

Although spatial CNN has achieved advanced performance, it still encounters several limitations when identifying complex or curved lanes. First, due to the fact that the slice-by-slice convolutional operation is conducted separately along the row and column directions of a feature map, it is difficult to capture spatial information effectively when the local features of a lane line are unreliable or obscured. Second, as spatial CNN only involves a unidirectional stacking operation, it is difficult to find the corresponding relationship within the features.

For these reasons, Wang et al. [9] proposed a method based on the keypoint global association network and introduced the Lane-Aware Feature Aggregator (LFA) to adaptively capture the local correlation among adjacent keypoints. Zou et al. [10] proposed a hybrid network architecture by combining the convolutional neural network (CNN) with the recurrent neural network (RNN), although the issue of the gradient explosion of RNNs adversely affects feature learning. To effectively capture local features, Qu et al. [11] proposed a two-stage approach for detecting keypoint information of lane lines. Yoo et al. [12] devised a novel module for horizontal reduction, which can more effectively extract features and compress the horizontal representation of each lane line in an end-to-end manner. Although significant advancements have been made by the aforementioned methods, they still encounter some technical challenges. In particular, effectively capturing the features of lane lines occluded by vehicles and pedestrians presents a challenge. Additionally, detecting lane lines at a distance remains difficult.

In this paper, a novel network model based on spatial CNN, the BSCNNLaneNet (Bidirectional Spatial CNN Lane Detection Network), is proposed for feature extraction in complex scenes, which is beneficial for improving the overall performance of lane detection. First, a bidirectional recurrent neural network (BRNN) is put forward to enhance the correlation between learned slice features. Second, to more efficiently extract feature information, a convolutional attention block is proposed to refine the slice features and strengthen the global relationships between slice features in different directions.

The main contributions of this paper are outlined below:

(1) Based on the spatial CNN method, the proposed network model introduces the bidirectional recurrent neural network (BRNN) to effectively learn spatial relationships among slice features.

(2) In addition, our method utilizes the convolutional block attention module to refine the slice features output by the BRNN, which can strengthen the global relationships between the features in different directions.

The remainder of this article is structured as follows: In Section 2, a brief review of lane detection methods is summarized. Then, the proposed method is described in Section 3. After that, experiments and results are shown in Section 4. Finally, Section 5 concludes our work and briefly discusses the possible future work.

2. Related Work

In this section, we briefly review the related work of lane detection. Lane detection is a computer vision task that involves identifying the boundaries of driving lanes in a video or image of a road scene. Based on different lane representations, most of the lane detection models can be sorted into three categories: segmentation-based methods, anchor-based methods, and curve-based methods.

Segmentation-based Methods: Segmentation-based methods treat lane detection as a pixel classification problem, which classifies each pixel and assigns it to a specific lane class. For this issue, Pan et al. [6] utilized slice-by-slice convolution within feature maps and allowed for message-passing between adjacent pixels. In order to reduce the computation cost and learn more information from context, Hou et al. [13] introduced a novel knowledge distillation approach, i.e., self-attention distillation (SAD), which allows the model to learn from rich contextual information through top-down and layer-wise attention distillation within the network. Li et al. [14] proposed the line proposal unit (LPU) to locate accurate traffic curves, which forced the system to learn the global feature representation of all traffic lines. For reducing extra annotation and retraining costs, Li et al. [15] proposed the multi-level domain adaptation (MLDA) framework, which can process lane detection at three complementary levels, i.e., pixel level, instance level, and category level. In order to catch subtle lane features, RESA [16] is proposed to enrich lane features after CNN feature extraction, which is able to take advantage of strong shape priors of lanes and capture the spatial relationships of pixels across rows and columns. In addition, Qiu et al. [17] proposed the PriorLane framework, which can enhance the segmentation performance of the full vision transformer by introducing low-cost local prior knowledge. In contrast to the abovementioned methods, our approach utilizes the bidirectional recurrent neural network and attention mechanism to gather spatial information and context features, respectively.

Anchor-Based Methods: These methods involve predefining a set of anchor points on the input image and then using a neural network to predict the offsets between these anchors and the actual lane markings, which converts the lane detection problem into a regression task. Qin et al. [18] proposed a novel formulation to select the locations of lanes from predefined rows in the image using global features and treated the process of lane detection as a row-based selection problem. In LaneATT [19], the authors proposed an anchor-based attention model that focuses on capturing relevant features around the anchor points to increase the detection accuracy in conditions with occlusions. In addition, Zheng et al. [20] proposed a cross-layer refinement method that leverages the high-level semantic features and low-level texture features to enhance the proposal representations, which can minimize localization errors and improve the detection results. Su et al. [21] proposed a structure-guided framework for lane detection, which can utilize a novel vanishing point guided anchoring mechanism to generate anchors and multi-level structure constraints to improve lane perception. In ObliqueConv [22], the authors proposed a new idea of oblique convolution that can break through the limitation that prevents the traditional convolution kernel from effectively extracting the lane of the inclined cross region. In order to solve the problem of fixed-shape anchors being difficult to use for modeling complex lane-line shapes, Zhang et al. [23] proposed the ECPNet (Enhanced Curve Perception Network), which can exploit high-level features to predict lanes while leveraging local-detailed features to improve localization accuracy. Although these anchor-based approaches directly introduce geometric knowledge of the lanes into the model, which enables robust prediction for heavily occluded lanes, their precision still depends on the design and placement of the anchors.

Curve-Based Methods: Curve-based methods for lane detection fit polynomial or spline curves to the lane markings by predicting the parameters of these curves using a neural network. This approach transforms lane detection into a curve fitting problem. The pioneering work [24] proposed a method to train a lane detector in an end-to-end manner, which is able to estimate lane curvature parameters by solving a weighted least-squares problem. PolyLaneNet [25] takes as input the images from a forward-looking camera mounted in the vehicle and estimates polynomials that represent each lane marking in the image. In order to fit irregular lines well, Han and Shen [26] proposed a novel framework for lane detection, which decomposes the detection process into two parts: curve modeling and ground height regression. Recently, LSTR [27] used transformer blocks to predict polynomials, in which the structures for lanes and the global context can be captured efficiently. Although curve-based methods are more robust due to the smoothness of the fitting curve, their accuracy still lags behind that of other methods.

3. Method

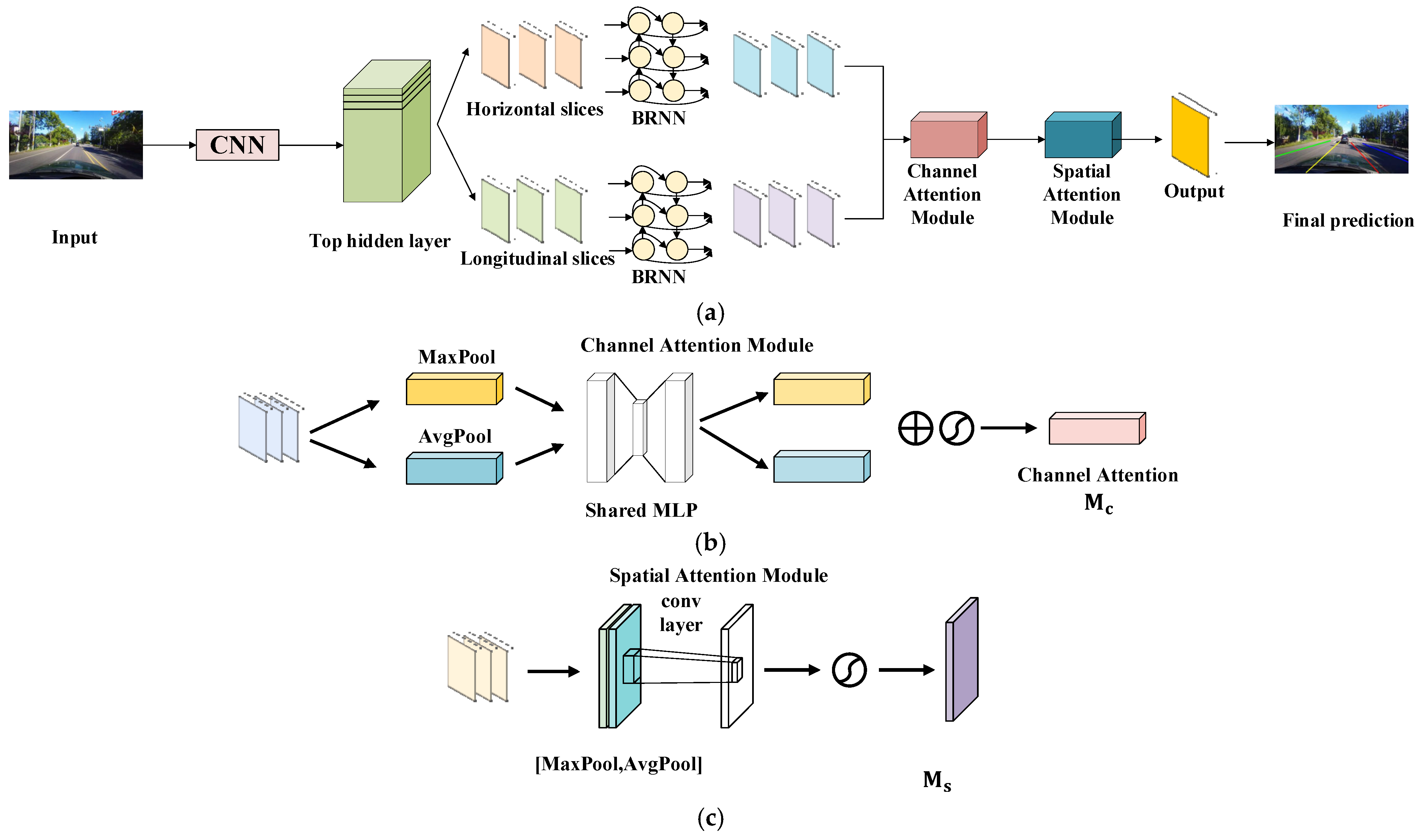

In this section, we describe the proposed BSCNNLaneNet network model in detail. The workflow of BSCNNLaneNet is shown in Figure 1. First, ResNet was chosen as BSCNNLaneNet’s backbone, and then a neck module was employed to capture spatial information. After that, the BRNN and the convolutional block attention module (CBAM) were used to extract weighted features.

Figure 1. Overall framework of the BSCNNLaneNet: (a) network schematic of the BSCNNLaneNet, (b) channel attention module, and (c) spatial attention module.

3.1. BRNN-Based Serialized Feature Learning Method

In order to efficiently locate continuous lane lines, Pan et al. [6] proposed the spatial CNN method to capture the relationships among features by utilizing four-directional slices and convolution stacking. However, this method only obtains basic correlation information among the slice features. In contrast, in our method, the features are extracted in both horizontal and vertical directions. In addition, instead of performing stacked convolutional operations, our method uses a bidirectional recurrent neural network (BRNN) to gain more correlations among slice features. The forward propagation RNN transfers information from the first slice feature $x_1$ to the last slice feature $x_T$, and the backward counterpart performs the same operations in the opposite direction. In this way, our approach is able to capture more contextual information.

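In our method, the formulas used for learning the BRNN serialization feature are as follows [28,29,30]:

$$\overrightarrow{h}_t = f\left(\overrightarrow{W}\,[x_t;\ \overrightarrow{h}_{t-1}]\right)$$

$$\overleftarrow{h}_t = f\left(\overleftarrow{W}\,[x_t;\ \overleftarrow{h}_{t+1}]\right)$$

$$y_t = [\overrightarrow{h}_t;\ \overleftarrow{h}_t]$$

In these formulas, $\overrightarrow{h}_t$ represents the hidden state of RNN forward propagation, while $\overleftarrow{h}_t$ represents the hidden state of RNN backward propagation. $\overrightarrow{h}_t$ is related to the information before the $t$th sequence, and $\overleftarrow{h}_t$ is the information after the $t$th sequence. $\overrightarrow{W}$ and $\overleftarrow{W}$ are the weights of the forward and backward fully connected layers, respectively. $x_t$ is the input of BRNN, which represents the feature sequence of the output slice feature map. $y_t$ is the output of BRNN at time $t$. Here, $f$ denotes the activation function and $[\cdot\,;\cdot]$ denotes concatenation.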
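The forward and backward passes described above can be illustrated with a small NumPy sketch. This is not the authors' implementation: the tanh cell, the choice of treating each row of the feature map as one sequence step, the separate input and recurrent weight matrices, and all names (`rnn_pass`, `brnn_over_slices`, the toy sizes) are assumptions made only for illustration.

```python
import numpy as np

def rnn_pass(seq, w_in, w_rec, reverse=False):
    """Simple tanh RNN over seq of shape (T, D); returns hidden states (T, H)."""
    num_steps, hidden = seq.shape[0], w_rec.shape[0]
    h = np.zeros(hidden)
    states = np.zeros((num_steps, hidden))
    # Forward pass visits slices 0..T-1; backward pass visits T-1..0.
    order = range(num_steps - 1, -1, -1) if reverse else range(num_steps)
    for t in order:
        h = np.tanh(seq[t] @ w_in + h @ w_rec)
        states[t] = h
    return states

def brnn_over_slices(feature_map, w_in_f, w_rec_f, w_in_b, w_rec_b):
    """feature_map: (C, H, W). Each row slice (flattened to C*W) is one step."""
    c, h, w = feature_map.shape
    seq = feature_map.transpose(1, 0, 2).reshape(h, c * w)
    forward = rnn_pass(seq, w_in_f, w_rec_f)
    backward = rnn_pass(seq, w_in_b, w_rec_b, reverse=True)
    # Output at each slice concatenates both directions.
    return np.concatenate([forward, backward], axis=1)

# Toy sizes and random weights (hypothetical, for demonstration only).
rng = np.random.default_rng(0)
C, H, W, HIDDEN = 2, 5, 3, 4
weights = [0.3 * rng.normal(size=s)
           for s in [(C * W, HIDDEN), (HIDDEN, HIDDEN)] * 2]
features = rng.normal(size=(C, H, W))
out = brnn_over_slices(features, *weights)
print(out.shape)  # (5, 8)
```

In this sketch, the first half of each output row depends only on the current and earlier slices, and the second half only on the current and later slices, which is the property that lets each slice see context from both directions.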

3.2. CBAM-Based Feature Association Method

In our method, we introduce the convolution block attention module to strengthen the global associations among slice features. In this way, the proposed method can gain attention mapping based on both channel and spatial order, which makes it possible to focus on pertinent information and disregard irrelevant information [31,32,33,34].

Thus, in our method, CBAM is adopted, which generates a one-dimensional channel attention map $M_c$ and a two-dimensional spatial attention map $M_s$. Given an intermediate feature map $F$, the whole attention process can be summarized as follows:

$$F' = M_c(F) \otimes F$$

$$F'' = M_s(F') \otimes F'$$

where $\otimes$ denotes the element-wise multiplication operation. During multiplication, the channel attention weights are broadcast across the spatial dimensions, and the spatial attention weights are broadcast across the channel dimension. The details of the channel attention module and spatial attention module can be described as follows.

First, for the channel attention module, an intermediate feature map $F \in \mathbb{R}^{C \times H \times W}$ is obtained. $F^{c}_{avg}$ and $F^{c}_{max}$ are the results of the average and maximum pooling operations conducted on $F$. Then, $F^{c}_{avg}$ and $F^{c}_{max}$ are input into a shared network that consists of a multi-layer perceptron with one hidden layer. To minimize the parameter overhead, the hidden activation size can be configured as $\mathbb{R}^{C/r \times 1 \times 1}$, where $r$ is the compression ratio. Finally, the merge and sigmoid function operations are applied to the output of the shared network. The whole computation process can be summarized as follows:

$$M_c(F) = \sigma\big(MLP(AvgPool(F)) + MLP(MaxPool(F))\big) = \sigma\big(W_1(W_0(F^{c}_{avg})) + W_1(W_0(F^{c}_{max}))\big)$$

where $\sigma$ represents the sigmoid function, and $W_0$ and $W_1$ are the weights of the multilayer perceptron.

For the spatial attention module, first, maximum pooling and average pooling operations are performed along the channel axis of the input $F'$. Then, the pooled feature maps are concatenated and fed into a convolutional layer to generate a 2D spatial attention map. Finally, the sigmoid function is applied to the output of the convolution layer. This computation process can be summarized as follows:

$$M_s(F') = \sigma\big(f^{k \times k}([AvgPool(F');\ MaxPool(F')])\big) = \sigma\big(f^{k \times k}([F^{s}_{avg};\ F^{s}_{max}])\big)$$

where $k \times k$ denotes the size of the convolution kernel.

By applying the channel attention module and the spatial attention module on sequence splicing, the network is able to effectively identify the key features and capture the spatial information of the lane lines.
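The channel-then-spatial attention process described in this subsection can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: the ReLU in the hidden layer of the shared perceptron, the zero padding, the toy tensor sizes, the 3 × 3 kernel, and all function names are hypothetical choices.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_attention(feat, w0, w1):
    """feat: (C, H, W); w0: (C//r, C); w1: (C, C//r). Returns (C, 1, 1)."""
    avg = feat.mean(axis=(1, 2))          # average pooling over space
    mx = feat.max(axis=(1, 2))            # max pooling over space
    def shared_mlp(v):                    # one hidden layer (ReLU assumed)
        return w1 @ np.maximum(w0 @ v, 0.0)
    return sigmoid(shared_mlp(avg) + shared_mlp(mx))[:, None, None]

def conv2d_same(x, kernel):
    """x: (C_in, H, W); kernel: (C_in, k, k), k odd; zero padded -> (H, W)."""
    k = kernel.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    height, width = x.shape[1:]
    out = np.zeros((height, width))
    for i in range(height):
        for j in range(width):
            out[i, j] = np.sum(xp[:, i:i + k, j:j + k] * kernel)
    return out

def spatial_attention(feat, kernel):
    """feat: (C, H, W); kernel: (2, k, k). Returns (1, H, W)."""
    pooled = np.stack([feat.mean(axis=0), feat.max(axis=0)])  # pool over channels
    return sigmoid(conv2d_same(pooled, kernel))[None]

def cbam(feat, w0, w1, kernel):
    refined = channel_attention(feat, w0, w1) * feat      # broadcast over H, W
    return spatial_attention(refined, kernel) * refined   # broadcast over C

# Toy example with random weights (hypothetical sizes).
rng = np.random.default_rng(0)
C, H, W, R, K = 8, 4, 6, 4, 3
feat = rng.normal(size=(C, H, W))
w0 = rng.normal(size=(C // R, C))
w1 = rng.normal(size=(C, C // R))
kernel = rng.normal(size=(2, K, K))
refined = cbam(feat, w0, w1, kernel)
print(refined.shape)  # (8, 4, 6)
```

Because both attention maps lie between 0 and 1, the refined features never exceed the input features in magnitude; the module only re-weights what the BRNN already produced.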

3.3. Loss Function

In our detection model, the general cross-entropy loss is adopted, which is defined in Formula (8):

$$L = -\sum_{i=0}^{4} y_i \log(p_i) \tag{8}$$

Here, $y_i$ is the true value, and $p_i$ is the predicted probability value obtained from the softmax logistic regression. $i = 0$ represents the background. $i = 1$, $2$, $3$, and $4$ represent the four lane lines, respectively. To address the issue of imbalance between the positive and negative samples, we have implemented the concept of focal loss and incorporated it into our classification loss function. Specifically, we have set the , while setting , respectively.
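To make the combination of cross entropy and focal loss concrete, the following is a small NumPy sketch of a focal-weighted cross entropy over five classes (background plus four lanes). The focusing parameter `gamma=2.0` and the optional `class_weights` are placeholder assumptions; they are not the values used in this paper, and the function names are hypothetical.

```python
import numpy as np

def softmax(logits):
    z = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def focal_cross_entropy(logits, labels, gamma=2.0, class_weights=None):
    """logits: (N, 5); labels: (N,) integers in {0..4}, with 0 = background.

    gamma and class_weights are illustrative assumptions, not paper values.
    """
    probs = softmax(logits)
    p_true = probs[np.arange(len(labels)), labels]
    if class_weights is None:
        weights = np.ones(logits.shape[1])
    else:
        weights = np.asarray(class_weights, dtype=float)
    # (1 - p)^gamma down-weights pixels that are already classified well.
    loss = -weights[labels] * (1.0 - p_true) ** gamma * np.log(p_true + 1e-12)
    return loss.mean()

logits = np.array([[2.0, 0.5, 0.1, 0.0, -1.0],
                   [0.0, 3.0, 0.2, 0.1, 0.0]])
labels = np.array([0, 1])
plain_ce = focal_cross_entropy(logits, labels, gamma=0.0)  # reduces to cross entropy
focal = focal_cross_entropy(logits, labels, gamma=2.0)
```

With `gamma=0.0` the expression reduces to the ordinary cross entropy, so the focal term can be seen as a modulation of the base loss rather than a replacement.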

The learning rate formula is shown in Formula (9).

4. Experiments and Results

4.1. Datasets

The TuSimple dataset [35] is commonly used in the field of lane detection. It is a challenging dataset that comprises 3626 images in the training set and 2782 images in the test set, each with a consistent image resolution of 1280 × 720. Lane lines are represented by equally spaced points, and their two-dimensional coordinates are stored in a corresponding JSON file. The JSON file for each image includes information on all four lane lines.
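As an illustration of how such annotations can be read, the sketch below parses one label record into per-lane point lists. It assumes the commonly distributed TuSimple label layout, in which each line of the label file is a JSON object with the keys `lanes`, `h_samples`, and `raw_file`, and a negative x value marks a row where the lane is absent; the sample record and the function name are made up for the example.

```python
import json

def parse_tusimple_line(line):
    """Return a list of lanes, each a list of (x, y) points, from one record."""
    record = json.loads(line)
    lanes = []
    for xs in record["lanes"]:
        # Pair every x with the shared row coordinate; skip absent points.
        points = [(x, y) for x, y in zip(xs, record["h_samples"]) if x >= 0]
        if points:
            lanes.append(points)
    return lanes

# Hypothetical record with two lanes sampled at three rows.
sample = json.dumps({
    "lanes": [[-2, 500, 480], [700, 720, -2]],
    "h_samples": [240, 250, 260],
    "raw_file": "clips/example/20.jpg",
})
lanes = parse_tusimple_line(sample)
print(lanes)  # [[(500, 250), (480, 260)], [(700, 240), (720, 250)]]
```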

The TuSimple dataset provides a clear and relatively simple depiction of high-speed driving with sparse traffic flow and distinct road markings, while real-world driving scenarios can be more complex, with challenges like heavy traffic and obscured lane markings.

4.2. Evaluation Metric

In this experiment, we used the official TuSimple metrics [35] to evaluate our method, including the accuracy rate, the false positive (FP) and false negative (FN) rates, and the F1-measure. The accuracy rate is the ratio of correctly predicted points to labeled points, accumulated over all images, and is calculated with Formula (10):

$$Accuracy = \frac{\sum_{clip} C_{clip}}{\sum_{clip} S_{clip}} \tag{10}$$

where $C_{clip}$ denotes the count of accurately predicted points, and $S_{clip}$ denotes the overall count of actual label points. When the coordinate difference between the predicted and actual lane point coordinates is below the threshold (which has been set to 40 pixels in this paper), the lane point prediction is counted as correct.
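The point-level accuracy can be sketched as follows. This is an illustrative reading of the description above rather than the official evaluation script: it assumes that predictions and labels are x-coordinates sampled at the same image rows, that a negative value means "no point", and it uses the 40-pixel threshold stated in the text. Function names are hypothetical.

```python
import numpy as np

def count_correct_points(pred_xs, gt_xs, threshold=40.0):
    """Return (number of correct points, number of labeled points)."""
    pred = np.asarray(pred_xs, dtype=float)
    gt = np.asarray(gt_xs, dtype=float)
    labeled = gt >= 0                      # rows that carry a ground-truth point
    correct = labeled & (pred >= 0) & (np.abs(pred - gt) < threshold)
    return int(correct.sum()), int(labeled.sum())

def dataset_accuracy(clips, threshold=40.0):
    """clips: iterable of (pred_xs, gt_xs) pairs; sums counts before dividing."""
    total_correct = total_labeled = 0
    for pred_xs, gt_xs in clips:
        c, s = count_correct_points(pred_xs, gt_xs, threshold)
        total_correct += c
        total_labeled += s
    return total_correct / total_labeled if total_labeled else 0.0

clips = [
    ([100, 210, -2, 400], [110, 260, 300, -2]),  # 1 of 3 labeled points correct
    ([50, 60], [55, 65]),                        # 2 of 2 labeled points correct
]
acc = dataset_accuracy(clips)
print(acc)  # 0.6
```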

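The FP and FN rates are defined as follows:

$$FP = \frac{F_{pred}}{N_{pred}}$$

$$FN = \frac{M_{pred}}{N_{gt}}$$

where $F_{pred}$ is the number of wrongly predicted lanes, $N_{pred}$ is the total number of predicted lanes, $M_{pred}$ is the number of ground-truth lanes missed in the predictions, and $N_{gt}$ is the number of all ground-truth lanes.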

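Additionally, the F1-score, a combination metric of precision and recall, is reported as in previous works [6]. The F1-score is defined as follows:

$$F1 = \frac{2 \times Precision \times Recall}{Precision + Recall}$$

where

$$Precision = \frac{TP}{TP + FP}, \qquad Recall = \frac{TP}{TP + FN}$$

and TP (true positive) is the number of lane points correctly predicted.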
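For readers who want to check the arithmetic, the rate and F1 definitions above translate directly into a few lines of Python. The counts in the example are invented for illustration and are not results from this paper; the function names are hypothetical.

```python
def lane_rates(num_wrong_pred, num_pred, num_missed_gt, num_gt):
    """Return (FP rate, FN rate) from lane-level counts."""
    fp_rate = num_wrong_pred / num_pred if num_pred else 0.0
    fn_rate = num_missed_gt / num_gt if num_gt else 0.0
    return fp_rate, fn_rate

def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall from raw counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)

# Invented counts, for illustration only.
fp_rate, fn_rate = lane_rates(num_wrong_pred=2, num_pred=100,
                              num_missed_gt=3, num_gt=120)
f1 = f1_score(tp=90, fp=10, fn=10)
```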

4.3. Implementation Details

4.3.1. Experiment Settings

We chose ResNet18, ResNet34, and ResNet101 as our pre-trained backbones for different computational requirements: small, medium, and large. In the training process, we adopted the Adam optimizer and a cosine decay learning-rate strategy. The settings, as described in [6], along with the specific hyperparameter configurations used during training, are detailed in Table 1. All experiments were implemented in TensorFlow on one NVIDIA 3090 GPU.

Table 1. Hyperparameter configurations in the training process.
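The cosine decay learning-rate strategy mentioned in this subsection is commonly written as a half-cosine interpolation between an initial and a minimum learning rate. The sketch below shows that common form; it is an assumption about the general technique, not a reproduction of the schedule or hyperparameter values used in this paper, and `base_lr`, `min_lr`, and `total_steps` are hypothetical names and values.

```python
import math

def cosine_decay_lr(step, total_steps, base_lr, min_lr=0.0):
    """Learning rate at `step`, decaying from base_lr to min_lr along a half cosine."""
    progress = min(max(step / total_steps, 0.0), 1.0)  # clamp to [0, 1]
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

# Hypothetical settings, for illustration only.
schedule = [cosine_decay_lr(s, total_steps=10, base_lr=1e-3) for s in range(11)]
```

The rate starts at `base_lr`, falls slowly at first, fastest around the midpoint, and flattens out as it approaches `min_lr`.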

4.3.2. Data Preprocessing

In the experiment, the data preprocessing was mainly divided into three parts:

(1) Assign a grayscale image with one channel as the label for the TuSimple dataset. The grayscale value of the label corresponds to the sequence number of the lane line from left to right, with 1, 2, 3, and 4 representing the four lane lines, respectively.

(2) Because the uppermost portion of the picture mostly consists of buildings, trees, sky, and other non-lane elements, this area is removed so that the lane-line area covers the remaining picture. All the input images are then resized to 320 × 800. This reduces the image size and the computation cost, which leads to faster training and lower computational resource requirements.

(3) Data augmentation, including random scaling and random rotation, is applied in the training phase.
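The first two preprocessing steps can be sketched in NumPy as follows. This is a simplified illustration, not the authors' pipeline: the number of cropped rows (`crop_top=160`), the reading of 320 × 800 as height × width, the nearest-neighbour resize, and the way each labeled point is painted as a short horizontal run (rather than a connected line) are all assumptions, and the function names are hypothetical.

```python
import numpy as np

def make_label_mask(lanes, height=720, width=1280, half_width=2):
    """lanes: lists of (x, y) points ordered left to right; grey value = lane index."""
    mask = np.zeros((height, width), dtype=np.uint8)
    for index, points in enumerate(lanes, start=1):
        for x, y in points:
            x, y = int(round(x)), int(round(y))
            if 0 <= y < height and 0 <= x < width:
                mask[y, max(x - half_width, 0):min(x + half_width + 1, width)] = index
    return mask

def crop_and_resize(image, crop_top, out_h=320, out_w=800):
    """Drop the top rows, then nearest-neighbour resize to (out_h, out_w)."""
    cropped = image[crop_top:]
    rows = np.arange(out_h) * cropped.shape[0] // out_h
    cols = np.arange(out_w) * cropped.shape[1] // out_w
    # Nearest-neighbour sampling keeps label values as exact integers.
    return cropped[rows][:, cols]

# Hypothetical example: two lanes on a blank 1280 x 720 frame.
lanes = [[(100, 300), (120, 310)], [(600, 300)]]
mask = make_label_mask(lanes)
image = np.zeros((720, 1280, 3), dtype=np.uint8)
small_image = crop_and_resize(image, crop_top=160)
small_mask = crop_and_resize(mask, crop_top=160)
print(small_image.shape, small_mask.shape)  # (320, 800, 3) (320, 800)
```

Applying the same crop and resize to the image and its label keeps the two aligned, and nearest-neighbour sampling avoids blending lane indices into meaningless intermediate grey values.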

4.4. Main Results

We compared our approach with recent state-of-the-art methods on the TuSimple benchmark. As shown in Table 2, our method achieves a comparable performance, with a 96.87% F1@50 measure using the ResNet34 backbone. In addition, we analyzed the FP and FN rates of each method. The FP rate of our method is far below that of the other algorithms, which means that BSCNNLaneNet attains higher precision on the lane detection task, contributing to its higher accuracy. It is worth noting that BSCNNLaneNet reaches 150+ FPS (frames per second) and is much faster than most other methods, which makes it suitable for real-time applications.

Table 2. Performance of the different methods for the TuSimple dataset.

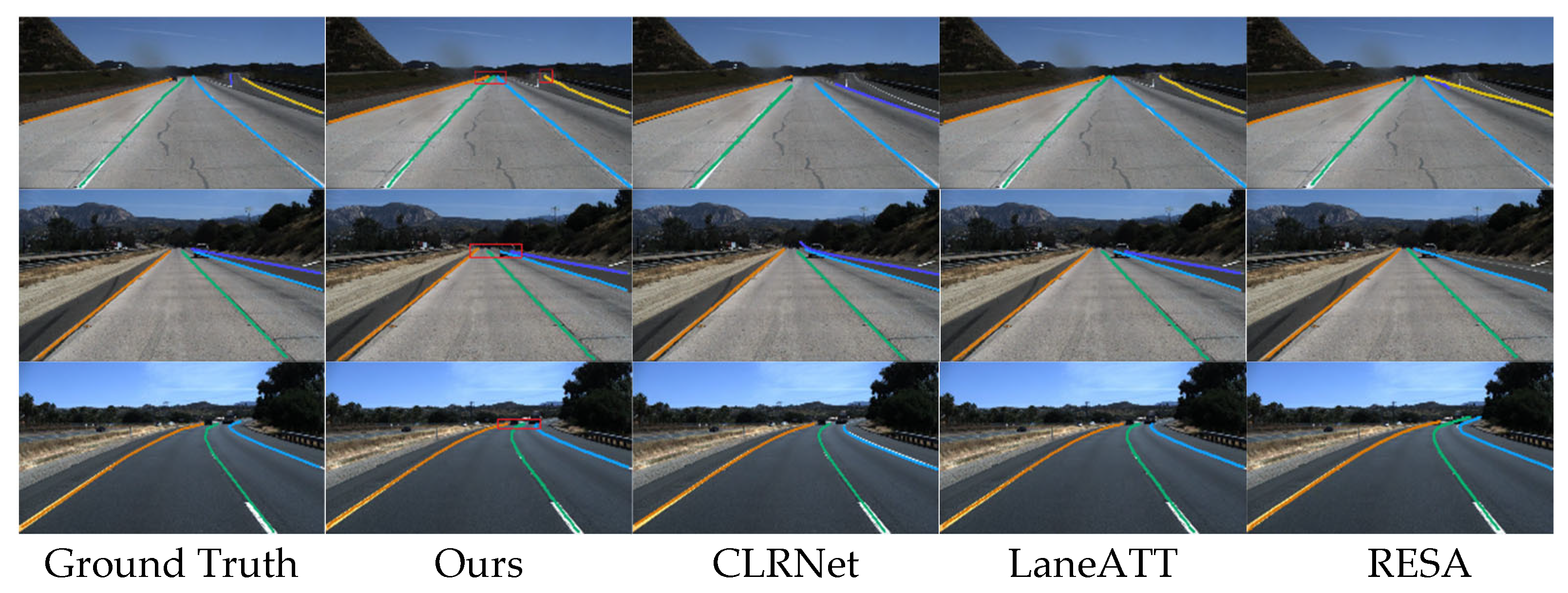

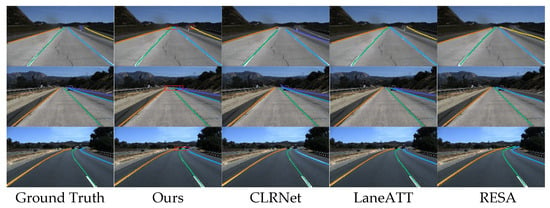

Additionally, Figure 2 presents the visualization results of other approaches, in which different lane marking instances are represented by different colors. In contrast, our method demonstrates a greater ability to precisely detect distant lane lines, avoiding the missed detections in far-off regions that are common in other approaches (shown in the red rectangle box in the second column).

Figure 2. Visualization results for the TuSimple dataset. Different lane marking instances are represented by different colors.

These experimental findings serve as compelling evidence of the viability of and advancements achieved by introducing bidirectional spatial information and associating slice features globally.

4.5. Ablation Study

In this section, we conducted an ablation study to evaluate the impact of the bidirectional recurrent neural network module and the CBAM module on our proposed method with the ResNet18, ResNet34, and ResNet101 backbones. Unless otherwise stated, the settings described in Section 4.3.1 were adopted. The component-level evaluation results are shown in Table 3, and the first row reports the baseline results of the original SCNN with the different ResNet backbones.

Table 3. Ablation study of the components in BSCNNLaneNet on TuSimple.

The second row of Table 3 summarizes the performance of our method based on different backbones. By adding the RNN module, we found that all three network models outperform their original counterparts.

From the results in the third row of Table 3, we can see that the CAM module yields an improvement over the baseline of the original SCNN by adding spatial and channel attention. SCNN+CAM achieves accuracy scores of 96.62, 96.66, and 96.68 when ResNet18, ResNet34, and ResNet101, respectively, are used as the backbones.

Finally, the results in the fourth row demonstrate that the proposed network model, BSCNNLaneNet, achieves better recognition accuracy. The improvement indicates that it is valid to add spatial information and attention mechanisms for the lane line detection method.

4.6. Cross-Dataset Generalization Evaluation

In order to estimate the generalization ability of our method, a cross-dataset evaluation was conducted. We used the model trained on the TuSimple training set to run inference on the CULane testing set. Table 4 shows that the proposed method achieves a comparable result with an accuracy of 35.32%, which surpasses the SCNN method by a significant margin of nearly 30%. This result supports the stable generalization capability of our method.

Table 4. Evaluation of the generalization ability of different methods from the TuSimple training set to the CULane testing set.

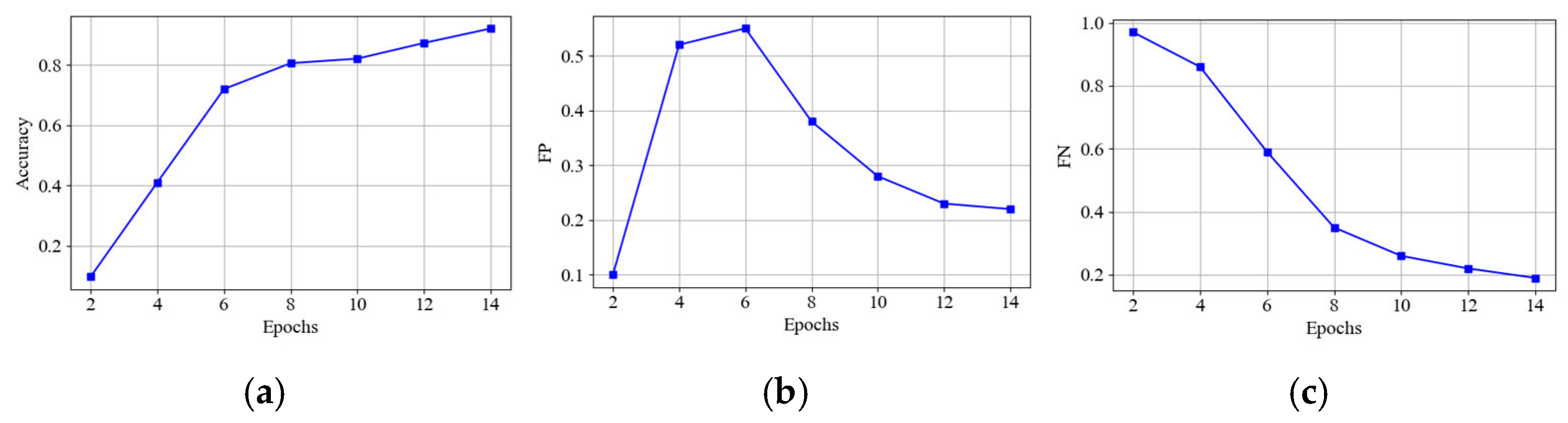

4.7. Study on Training Dynamics

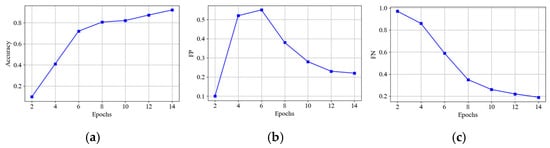

In this section, we analyzed the training dynamics of BSCNNLaneNet. To this end, we trained our model on the TuSimple dataset for 14 epochs and evaluated its performance every two epochs. As depicted in Figure 3, the accuracy score increases as the number of epochs increases, whereas the values of FP and FN decrease. BSCNNLaneNet converges well at about the sixth epoch, which indicates that our method achieves a fast convergence rate. In contrast, CLRNet needs about 50 training epochs. This suggests that our method not only achieves a comparable result in the test process but can also save computation costs in the training phase.

Figure 3. Change trends of the evaluation index: (a) the change trend of accuracy, (b) the change trend of FP, and (c) the change trend of FN.

5. Conclusions

In this paper, we present an enhanced Spatial CNN algorithm designed for detecting lane lines, which can assist in creating intelligent transportation systems for autonomous driving, auxiliary driving, etc. This model is capable of extracting more comprehensive spatial correlation information, improving the spatial correlation among slice features in multiple directions, and extending the correlation ranges among slice features, all while minimizing computational complexity. The detectors yield precise and valuable metrics for lane detection systems, including lane-line contour and area. Our experiments demonstrate that incorporating a bidirectional recurrent neural network (BRNN) and a convolutional attention module into the spatial convolutional neural network (CNN) leads to significant improvement in lane detection. Specifically, our approach based on ResNet34 achieves a high accuracy of 96.86%. Compared with the spatial CNN, the algorithm presented in this paper achieves a reduction in both the FP and FN to 2.26% and 1.99%, respectively. Though the results demonstrate the superiority of our approach compared with existing methods, there also exist some limitations, i.e., how to improve its performance in low-light conditions and further enhance its adaptability for the issue of partially missing lane markings.

Author Contributions

Conceptualization, G.W.; Software, X.L.; Validation, X.L.; Resources, X.L.; Data curation, Y.G.; Writing—original draft, M.Z.; Writing—review & editing, Z.J.; Supervision, L.W.; Critical revision, G.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (NSFC) under grant no. 62176113; the Key Technologies R&D program of Henan Province under grants no. 242102211024, 222102210013, and 252102221019; the Key Research and Development Projects of Henan Province under grant no. 241111221700; the Longmen Laboratory Frontier Exploration Project of Henan Province under Grant No. LMQYTSKT035.

Data Availability Statement

The data underlying this article are available, and the hyperlink address is as follows: https://www.kaggle.com/datasets/manideep1108/tusimple?resource=download (accessed on 1 March 2024).

Conflicts of Interest

There are no conflicts of interest regarding the publication of this paper.

References

1. Zhao, L.; Zheng, W.; Zhang, Y.; Zhou, J.; Lu, J. StructLane: Leveraging Structural Relations for Lane Detection. IEEE Trans. Image Process. 2024, 33, 3692–3706.

2. Feng, Z.; Guo, S.; Tan, X.; Xu, K.; Wang, M.; Ma, L. Rethinking efficient lane detection via curve modeling. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17062–17070.

3. Liu, B.; Feng, L.; Zhao, Q.; Li, G.; Chen, Y. Improving the Accuracy of Lane Detection by Enhancing the Long-Range Dependence. Electronics 2023, 12, 2518.

4. Xie, J.; Han, J.; Qi, D.; Chen, F.; Huang, K.; Shuai, J. Lane detection with position embedding. In Proceedings of the Fourteenth International Conference on Digital Image Processing (ICDIP 2022), Wuhan, China, 20–23 May 2022; Volume 12342, pp. 78–86.

5. Deng, L.; Liu, X.; Jiang, M.; Li, Z.; Ma, J.; Li, H. Lane Detection Based on Adaptive Cross-Scale Region of Interest Fusion. Electronics 2023, 12, 4911.

6. Pan, X.; Shi, J.; Luo, P.; Wang, X.; Tang, X. Spatial as deep: Spatial cnn for traffic scene understanding. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

7. Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

8. Narote, S.P.; Bhujbal, P.N.; Narote, A.S.; Dhane, D.M. A review of recent advances in lane detection and departure warning system. Pattern Recognit. 2018, 73, 216–234.

9. Wang, J.; Ma, Y.; Huang, S.; Hui, T.; Wang, F.; Qian, C.; Zhang, T. A keypoint-based global association network for lane detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 1392–1401.

10. Zou, Q.; Jiang, H.; Dai, Q.; Yue, Y.; Chen, L.; Wang, Q. Robust lane detection from continuous driving scenes using deep neural networks. IEEE Trans. Veh. Technol. 2019, 69, 41–54.

11. Qu, Z.; Jin, H.; Zhou, Y.; Yang, Z.; Zhang, W. Focus on local: Detecting lane marker from bottom up via key point. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 14122–14130.

12. Yoo, S.; Lee, H.S.; Myeong, H.; Yun, S.; Park, H.; Cho, J.; Kim, D.H. End-to-end lane marker detection via row-wise classification. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 1006–1007.

13. Hou, Y.; Ma, Z.; Liu, C.; Loy, C.C. Learning lightweight lane detection cnns by self attention distillation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1013–1021.

14. Li, X.; Li, J.; Hu, X.; Yang, J. Line-cnn: End-to-end traffic line detection with line proposal unit. IEEE Trans. Intell. Transp. Syst. 2019, 21, 248–258.

15. Li, C.; Zhang, B.; Shi, J.; Cheng, G. Multi-level domain adaptation for lane detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 4380–4389.

16. Zheng, T.; Fang, H.; Zhang, Y.; Tang, W.; Yang, Z.; Liu, H.; Cai, D. Resa: Recurrent feature-shift aggregator for lane detection. In Proceedings of the Thirty-Fifth AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 3547–3554.

17. Qiu, Q.; Gao, H.; Hua, W.; Huang, G.; He, X. Priorlane: A prior knowledge enhanced lane detection approach based on transformer. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 5618–5624.

18. Qin, Z.; Wang, H.; Li, X. Ultra fast structure-aware deep lane detection. In Computer Vision–ECCV 2020, Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part XXIV 16; Springer International Publishing: Cham, Switzerland, 2020; pp. 276–291.

19. Tabelini, L.; Berriel, R.; Paixao, T.M.; Badue, C.; De Souza, A.F.; Oliveira-Santos, T. Keep your eyes on the lane: Real-time attention-guided lane detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 294–302.

20. Zheng, T.; Huang, Y.; Liu, Y.; Tang, W.; Yang, Z.; Cai, D.; He, X. CLRNET: Cross layer refinement network for lane detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 898–907.

21. Su, J.; Chen, C.; Zhang, K.; Luo, J.; Wei, X.; Wei, X. Structure Guided Lane Detection. arXiv 2021, arXiv:2105.05403.

22. Zhang, X.; Gong, Y.; Lu, J.; Li, Z.; Li, S.; Wang, S.; Liu, W.; Wang, L.; Li, J. Oblique Convolution: A Novel Convolution Idea for Redefining Lane Detection. IEEE Trans. Intell. Veh. 2023, 9, 4025–4039.

23. Zhang, Y.; Zheng, Y.; Wu, C.; Zhang, T.; Liu, Y. ECPNet: An Enhanced Curve Perception Network for Lane Detection. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 7380–7384.

24. Van Gansbeke, W.; De Brabandere, B.; Neven, D.; Proesmans, M.; Van Gool, L. End-to-end lane detection through differentiable least-squares fitting. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019.

25. Tabelini, L.; Berriel, R.; Paixao, T.M.; Badue, C.; De Souza, A.F.; Oliveira-Santos, T. Polylanenet: Lane estimation via deep polynomial regression. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 6150–6156.

26. Han, W.; Shen, J. Decoupling the Curve Modeling and Pavement Regression for Lane Detection. arXiv 2023, arXiv:2309.10533.

27. Liu, R.; Yuan, Z.; Liu, T.; Xiong, Z. End-to-end lane shape prediction with transformers. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV 2021), Online, 5–9 January 2021; pp. 3694–3702.

28. Rassabin, M.; Yagfarov, R.; Gafurov, S. Approaches for Road Lane Detection. In Proceedings of the 2019 3rd School on Dynamics of Complex Networks and their Application in Intellectual Robotics, Innopolis, Russia, 9–11 September 2019.

29. Satzoda, R.K.; Sathyanarayana, S.; Srikanthan, T. Hierarchical additive Hough transform for lane detection. IEEE Embed. Syst. Lett. 2010, 2, 23–26.

30. Abegaz, B.W.; Shah, N. Sensors based Lane Keeping and Cruise Control of Self Driving Vehicles. In Proceedings of the 2020 11th IEEE Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York City, NY, USA, 28–31 October 2020; pp. 0486–0491.

31. Gong, Y.; Jiang, X.; Wang, L.; Xu, L.; Lu, J.; Liu, H.; Lin, L.; Zhang, X. TCLaneNet: Task-Conditioned Lane Detection Network Driven by Vibration Information. IEEE Trans. Intell. Veh. 2024, 1–14.

32. Zhang, X.; Gong, Y.; Li, Z.; Liu, X.; Pan, S.; Li, J. Multi-modal attention guided real-time lane detection. In Proceedings of the 2021 6th IEEE International Conference on Advanced Robotics and Mechatronics (ICARM), Chongqing, China, 3–5 July 2021; pp. 146–153.

33. Liu, P.; Yang, M.; Wang, C.; Wang, B. Multi-lane detection via multi-task network in various road scenes. In Proceedings of the 2018 Chinese Automation Congress (CAC), Xi’an, China, 30 November–2 December 2018; pp. 2750–2755.

34. Ling, J.; Chen, Y.; Cheng, Q.; Huang, X. Zigzag Attention: A Structural Aware Module For Lane Detection. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 4175–4179.

35. TuSimple: TuSimple Benchmark. Available online: https://www.kaggle.com/datasets/manideep1108/tusimple?resource=download (accessed on 1 March 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).