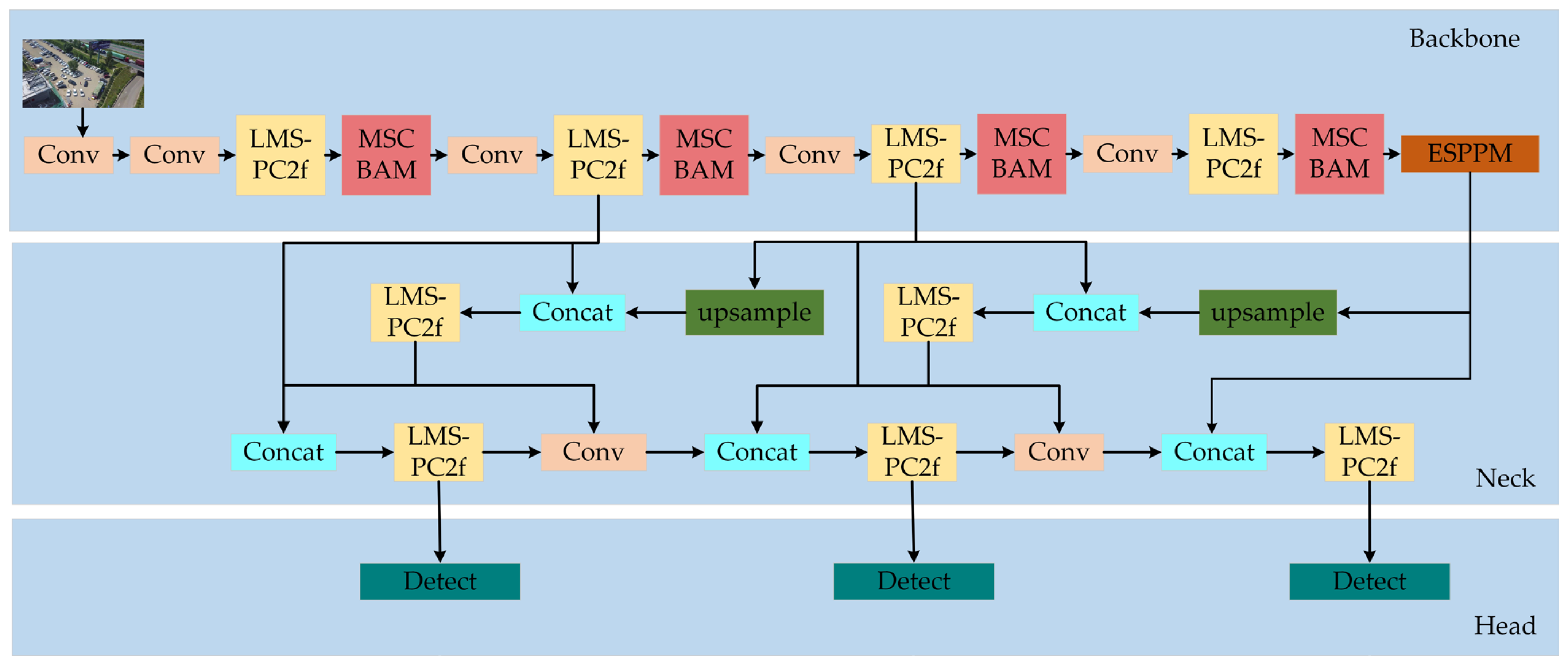

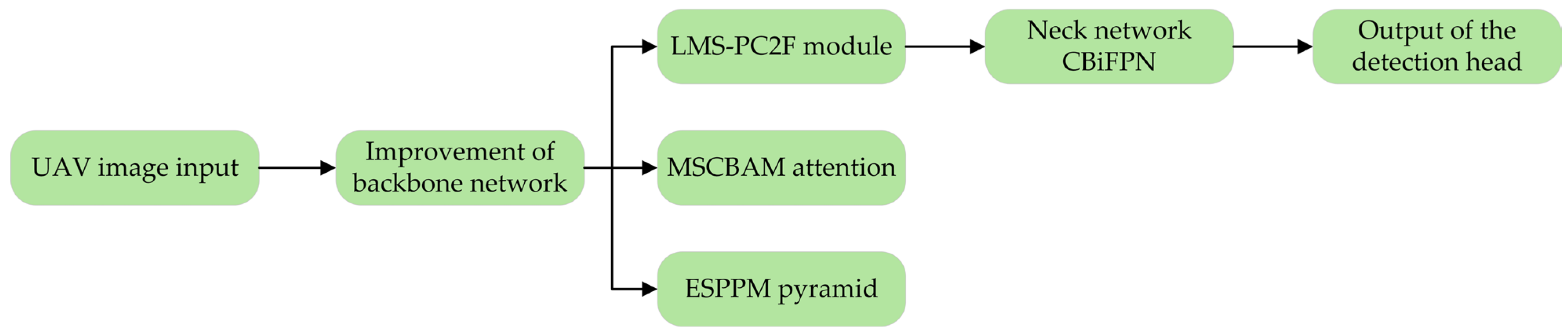

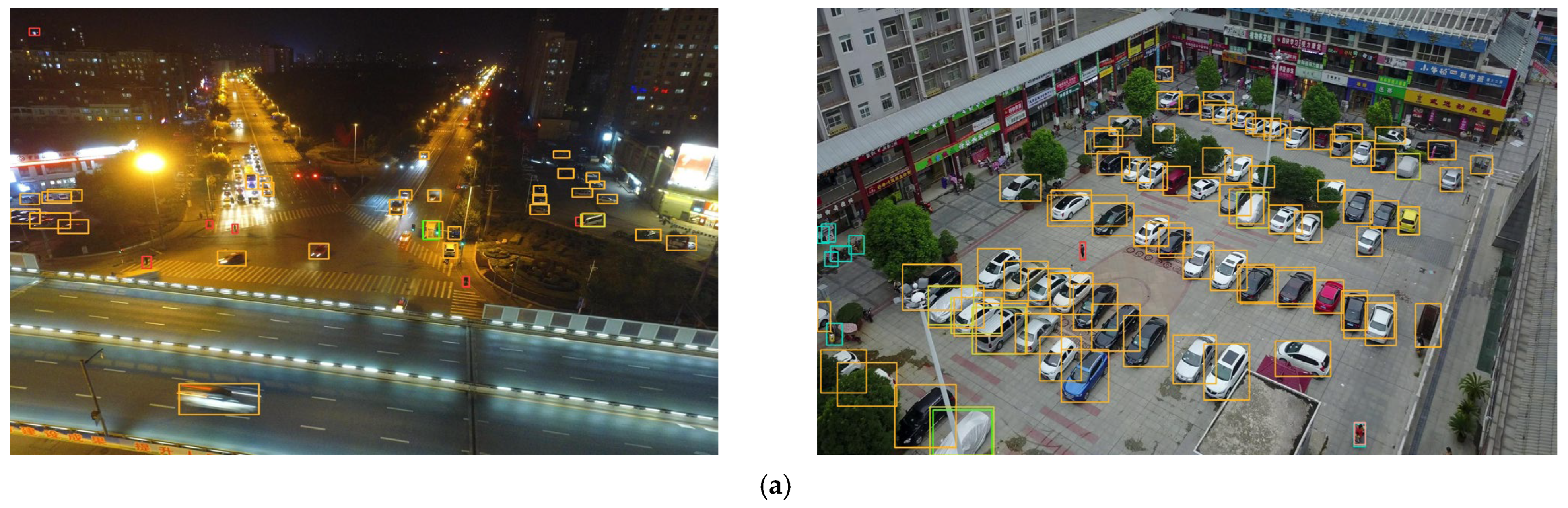

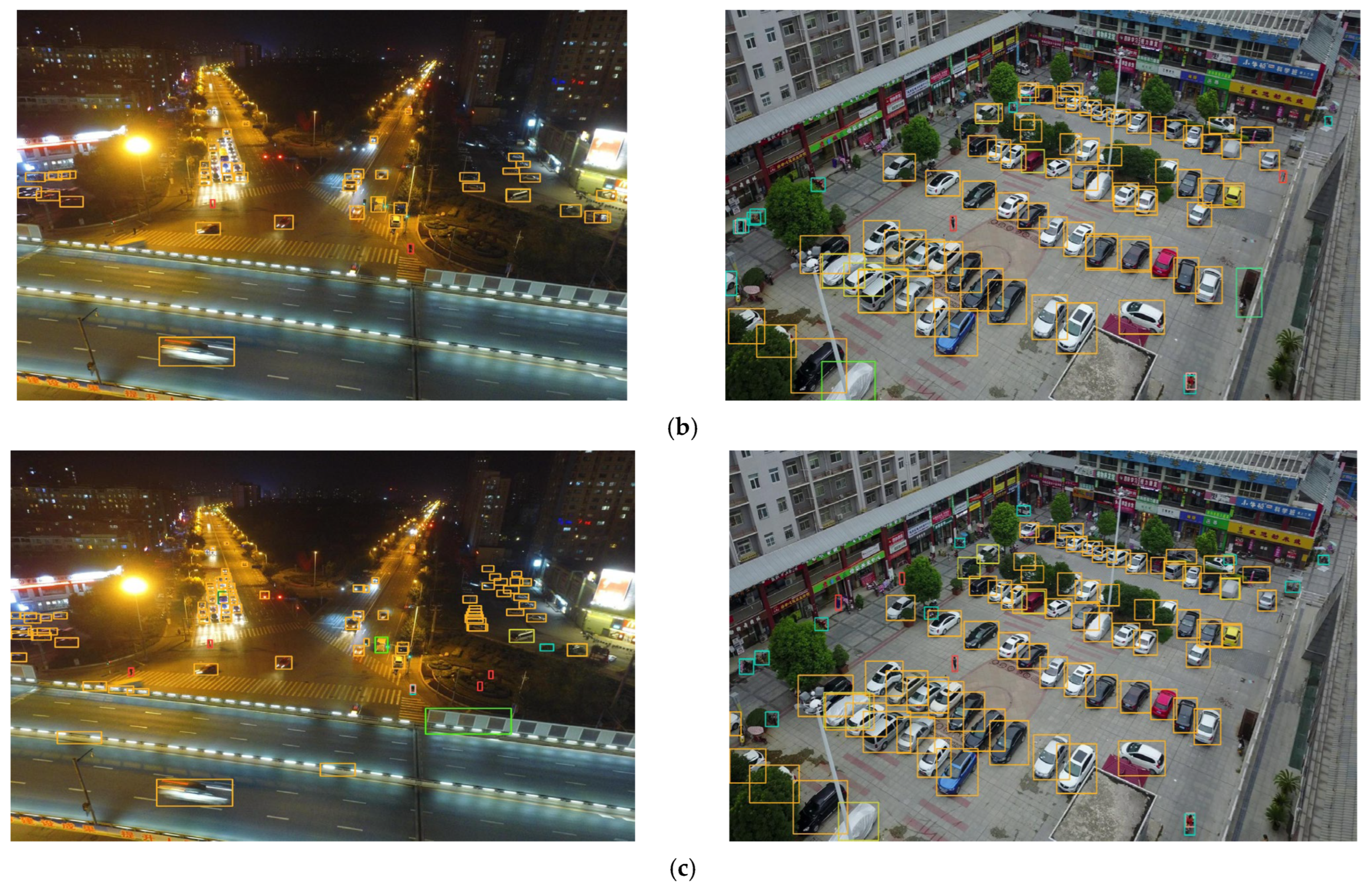

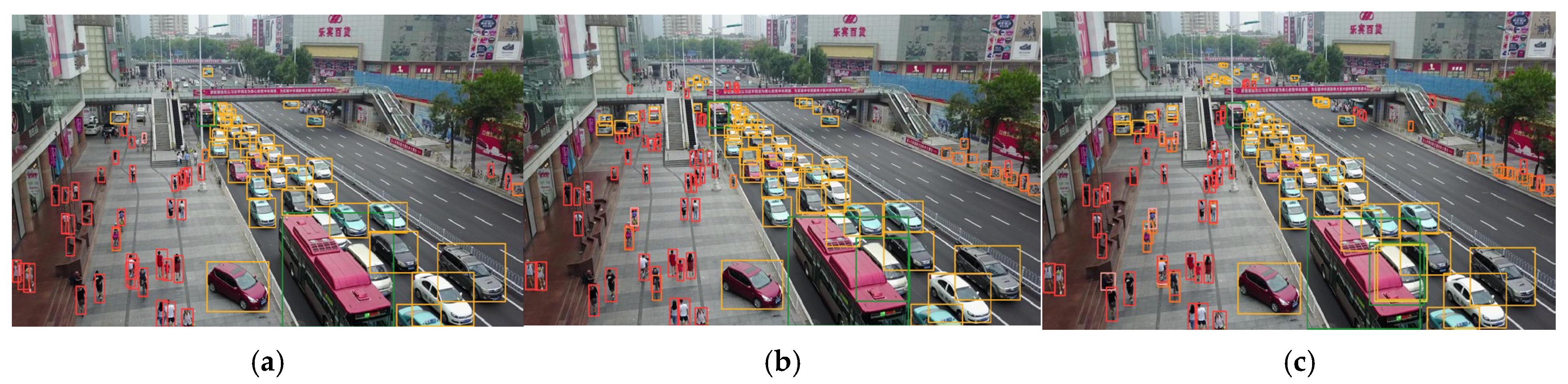

To address inaccurate localization, missed detections, and false positives when the YOLOv8n baseline model is applied to drone imagery, this paper proposes LMEC-YOLOv8, an enhanced YOLOv8n-based model for UAV target detection whose architecture is depicted in Figure 2. First, a Lightweight Multi-scale Convolution Module (LMSCM) is designed to replace the second convolution in the C2F bottleneck and is combined with Partial Convolution (PConv) to form the Lightweight Multi-scale Fusion of PConv-based C2F (LMS-PC2F), improving the accuracy of feature representation. Next, a Multi-scale Convolutional Block Attention Module (MSCBAM) is developed by enhancing CBAM and is embedded into the backbone network to improve detection precision, robustness, and multi-scale feature extraction. Then, an Enhanced Spatial Pyramid Pooling Module (ESPPM) is designed by introducing adaptive average pooling and a Large Separable Kernel Residual Attention Mechanism into the SPPF structure, preserving fine-grained image features while enabling efficient multi-scale feature fusion. Finally, the original neck network is replaced with a Cross-layer Bidirectional Feature Pyramid Network (CBiFPN), which enables hierarchical semantic interaction through weighted cross-level feature fusion and addresses the challenges posed by scale variation and occlusion. The research process is shown in Figure 3.

The four components of LMEC-YOLOv8 complement one another: (1) LMS-PC2F captures multi-scale features with fewer parameters; (2) MSCBAM suppresses noise through multi-scale attention; (3) ESPPM strengthens long-range dependency modeling; and (4) CBiFPN mitigates occlusion through cross-layer fusion. Together, this design addresses UAV-specific challenges as a whole.

3.2.1. LMS-PC2F Module

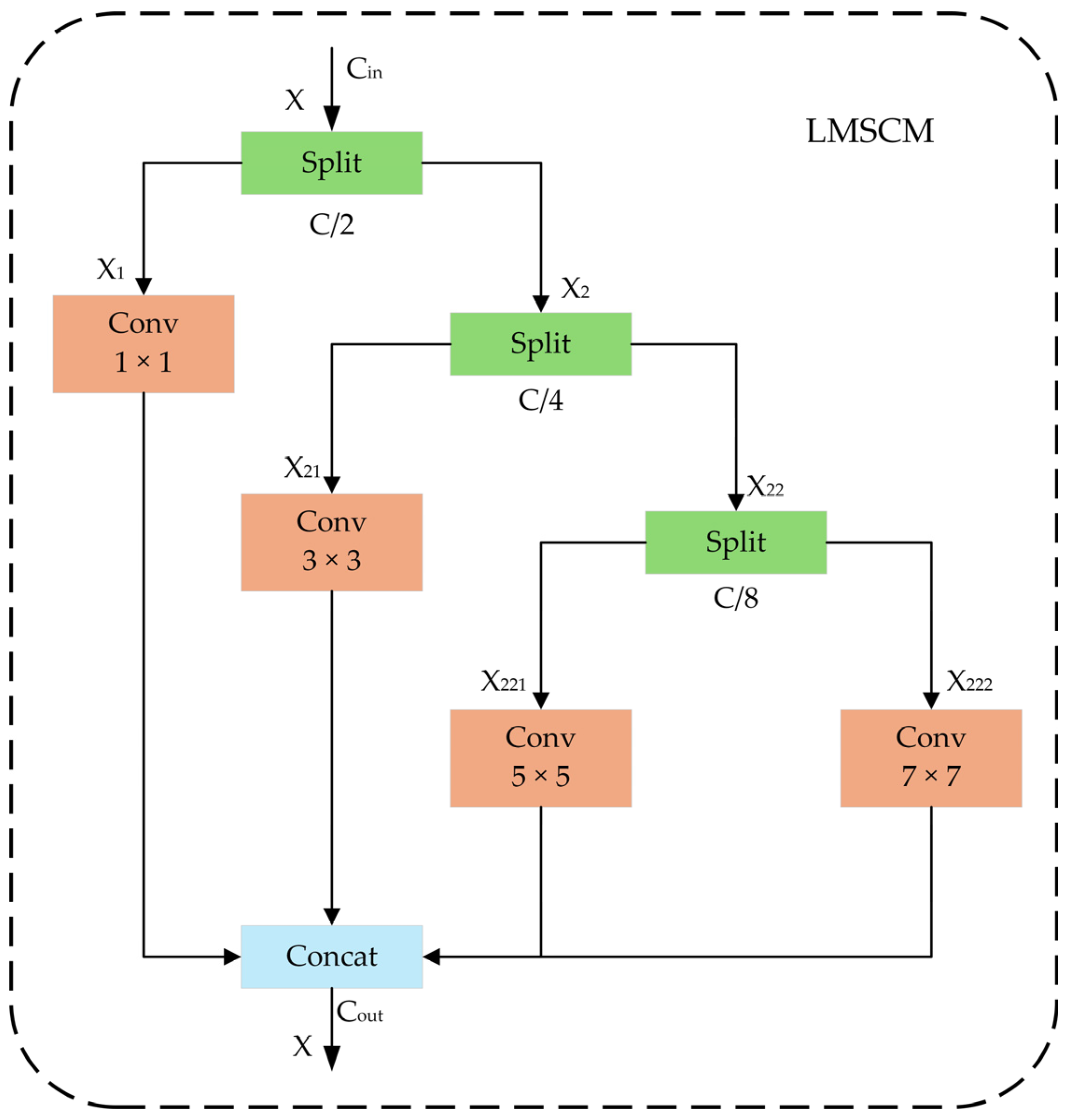

The C2F module in the YOLOv8n model improves feature fusion, which strengthens detection in complex backgrounds and of targets with rich details. However, because edge devices such as UAVs have limited storage and computing capacity, detection models must be lightweight to remain efficient under constrained hardware resources. To this end, we propose the Lightweight Multi-Scale Convolution Module (LMSCM), illustrated in Figure 4.

The overall structure of the LMSCM resembles the pyramid architecture of SPPELAN [6]. Let $C$ denote the number of input channels and $X$ the input feature map. Within this module, $C$ is divided into three components, $C_1$, $C_2$, and $C_3$, and the feature map is hierarchically partitioned three times. First, $X$ is split into $X_1$ and $X_2$, and $X_1$ is fed into a 1 × 1 convolution to extract local features. Second, $X_2$ is further divided into $X_{21}$ and $X_{22}$; $X_{21}$ undergoes a 3 × 3 convolution to capture mid-scale features, balancing broader contextual information with fine local details. Finally, $X_{22}$ is subdivided into $X_{221}$ and $X_{222}$, which are processed by 5 × 5 and 7 × 7 convolutions, respectively, to extract larger-scale features suited to detecting large or blurry targets.

This pyramid structure applies convolutions of several kernel sizes to extract features over different receptive fields at the same time, improving the recognition of diverse targets. Although the module contains several convolutional layers, each branch processes only a slice of the channels and the branches can run in parallel, which limits computational redundancy, improves overall efficiency, and keeps the module adaptable to complex and variable environments.
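To make the channel bookkeeping concrete, the sketch below counts convolution weights for one LMSCM under two assumptions that the text does not state: every split halves its input, and each branch maps its channel slice to the same number of output channels (so the concatenated output again has C channels). The helper names are hypothetical, biases are ignored, and the result is compared against a single standard 3 × 3 convolution with C input and output channels.

```python
def conv_params(c_in: int, c_out: int, kh: int, kw: int) -> int:
    """Weight count of a dense kh x kw convolution (bias ignored)."""
    return c_in * c_out * kh * kw


def lmscm_split(c: int) -> dict:
    """Hypothetical hierarchical halving: X -> (X1, X2), X2 -> (X21, X22), X22 -> (X221, X222)."""
    c1 = c // 2                      # X1   -> 1 x 1 branch
    c21 = (c - c1) // 2              # X21  -> 3 x 3 branch
    c221 = (c - c1 - c21) // 2       # X221 -> 5 x 5 branch
    c222 = c - c1 - c21 - c221       # X222 -> 7 x 7 branch
    return {1: c1, 3: c21, 5: c221, 7: c222}


def lmscm_params(c: int) -> int:
    """Sum over the four branches; each branch keeps its slice width (assumption)."""
    return sum(conv_params(ch, ch, k, k) for k, ch in lmscm_split(c).items())


if __name__ == "__main__":
    c = 64
    print(lmscm_split(c))            # {1: 32, 3: 16, 5: 8, 7: 8}
    print(lmscm_params(c))           # 8064
    print(conv_params(c, c, 3, 3))   # 36864
```

Under these assumptions the four branches use about 22% of the weights of one dense 3 × 3 convolution while covering receptive fields up to 7 × 7; a different split ratio would change the numbers but not the trend.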

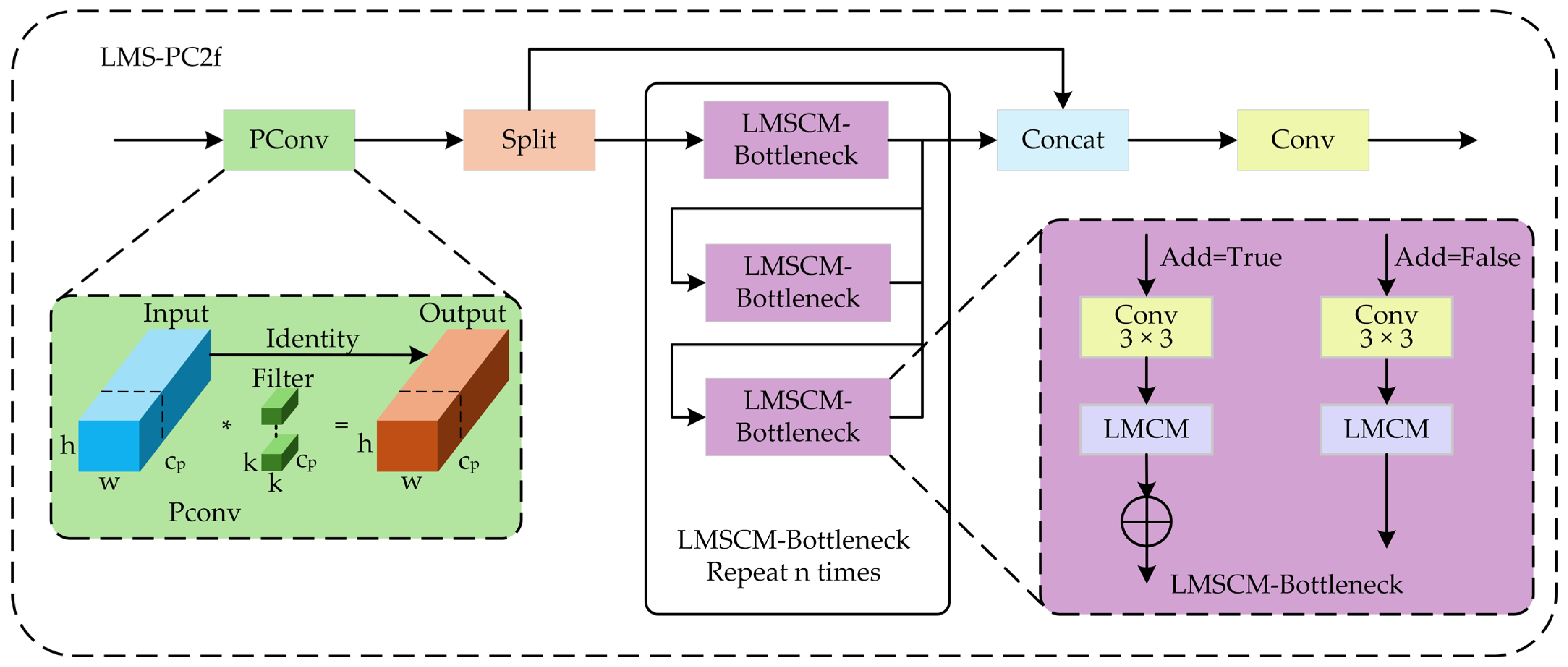

To enhance the detection performance of the C2F module, two key improvements are introduced. First, the second standard convolutional layer in the original bottleneck structure is replaced with the Lightweight Multi-Scale Convolution Module (LMSCM), forming the LMSCM-Bottleneck. This design uses multi-scale convolutional kernels to capture rich spatial-contextual information, improving the model’s adaptability to variations in target scale while keeping the parameter count low. It strengthens feature extraction without exceeding the computational budget of edge devices. Second, because UAV imaging environments are complex, detected targets are often occluded. To address this, Partial Convolution (PConv) [17] is integrated into the C2F module. PConv optimizes feature extraction by focusing on meaningful regions and ignoring sparse or noisy data (e.g., invalid zero-value areas), thereby eliminating redundant computation. This not only enhances robustness against occluded targets but also improves overall detection accuracy in UAV-based scenarios.
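If PConv here is the channel-partial convolution popularized by FasterNet, which applies a regular convolution to only the first $c_p$ channels and passes the remaining channels through unchanged (an assumption, since the text does not specify the variant), its cost saving can be estimated as below. The function names are hypothetical and the counts ignore biases; with a partial ratio of 1/4, a PConv needs 1/16 of the multiply-accumulates of a regular convolution with the same kernel.

```python
def conv_macs(h: int, w: int, k: int, c_in: int, c_out: int) -> int:
    """Multiply-accumulates of a dense k x k convolution on an h x w map (stride 1, 'same' padding)."""
    return h * w * k * k * c_in * c_out


def pconv_macs(h: int, w: int, k: int, c: int, ratio: float = 0.25) -> int:
    """FasterNet-style PConv (assumed): convolve c_p = ratio * c channels, copy the rest for free."""
    c_p = int(c * ratio)
    return conv_macs(h, w, k, c_p, c_p)


if __name__ == "__main__":
    full = conv_macs(80, 80, 3, 64, 64)
    partial = pconv_macs(80, 80, 3, 64)
    print(full, partial, full // partial)  # 235929600 14745600 16
```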

The improved LMS-PC2F module is shown in Figure 5. Combining the advantages of LMSCM-Bottleneck and PConv, it can capture and fuse multi-scale features more efficiently, ensure that the output of each feature block is fully integrated, and further enhance the expressive ability and feature learning ability of the model. Additionally, the module optimizes occlusion handling in UAV imagery, improves detection precision, and reduces parameter count, making it highly suitable for deployment on storage-constrained edge devices.

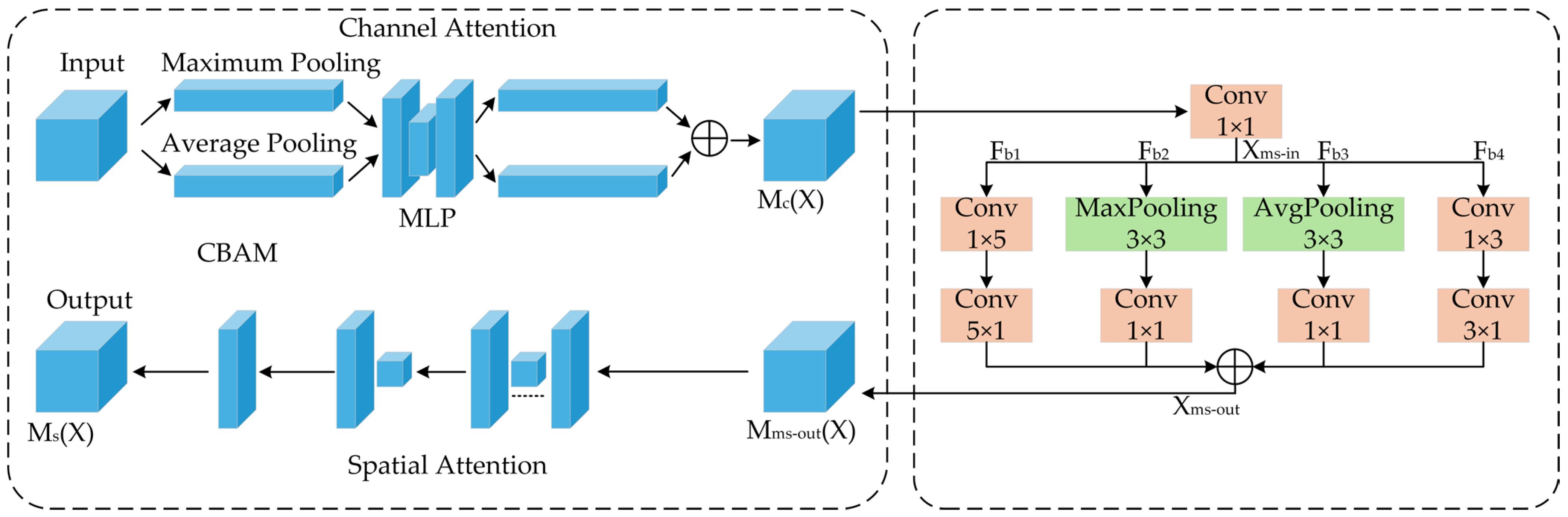

3.2.2. Lightweight Multi-Scale Attention Mechanism MSCBAM

In UAV-based object detection tasks, the complex environment and overlapping targets significantly increase the difficulty of the task. Although high-precision detection models can improve detection performance, they often come with a large number of parameters. This leads to slower computation speeds, prolonged processing times, and increased detection latency, imposing additional burdens on edge detection devices like UAVs. To address this, Wu et al. [18] incorporated the CBAM attention mechanism into the backbone network to capture critical feature information and enhance the expressive power of the convolutional neural network. This approach effectively reduces parameter size while directing the model’s focus to essential information.

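The CBAM [19] attention mechanism consists of two sequential submodules: a channel attention module and a spatial attention module. Its basic framework can be formulated as follows: the input feature map $X$ is first processed by the channel attention module to obtain $X'$, which is then passed through the spatial attention module to obtain $X''$:

$$X' = M_c(X) \otimes X, \qquad X'' = M_s(X') \otimes X'$$

where $\otimes$ denotes element-wise multiplication (with broadcasting), $M_c(X)$ represents the generated channel attention weights, and $M_s(X')$ denotes the spatial attention weights.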
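The two-step CBAM computation above can be sketched in plain Python on a tiny C × H × W tensor stored as nested lists. It follows the original CBAM design, in which the channel weights come from a shared two-layer MLP applied to average- and max-pooled channel descriptors and the spatial weights come from a convolution over the channel-wise average and max maps; the weight shapes and function names are illustrative assumptions, not this paper's code.

```python
import math


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(x, w1, w2):
    """M_c(X) = sigmoid(MLP(AvgPool(X)) + MLP(MaxPool(X))); x is C x H x W, w1 is (C/r) x C, w2 is C x (C/r)."""
    def mlp(v):
        hidden = [max(0.0, sum(a * b for a, b in zip(row, v))) for row in w1]  # shared layer 1 + ReLU
        return [sum(a * b for a, b in zip(row, hidden)) for row in w2]         # shared layer 2
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]  # global average pooling per channel
    mx = [max(map(max, ch)) for ch in x]                            # global max pooling per channel
    return [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]


def spatial_attention(x, kernel):
    """M_s(X) = sigmoid(conv([AvgPool_c(X); MaxPool_c(X)])); kernel is 2 x k x k, zero padding keeps H x W."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    avg = [[sum(x[n][i][j] for n in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(x[n][i][j] for n in range(c)) for j in range(w)] for i in range(h)]
    k = len(kernel[0])
    p = k // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            s = 0.0
            for m, fmap in enumerate((avg, mx)):
                for u in range(k):
                    for v in range(k):
                        ii, jj = i + u - p, j + v - p
                        if 0 <= ii < h and 0 <= jj < w:
                            s += kernel[m][u][v] * fmap[ii][jj]
            row.append(sigmoid(s))
        out.append(row)
    return out


def cbam(x, w1, w2, kernel):
    mc = channel_attention(x, w1, w2)
    x1 = [[[mc[n] * val for val in row] for row in ch] for n, ch in enumerate(x)]  # X' = M_c(X) ⊗ X
    ms = spatial_attention(x1, kernel)
    return [[[ms[i][j] * val for j, val in enumerate(row)] for i, row in enumerate(ch)] for ch in x1]  # X'' = M_s(X') ⊗ X'
```

Because both attention maps lie in (0, 1), CBAM can only rescale features; with all weights set to zero, every attention value is 0.5 and the output is exactly 0.25 times the input.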

While CBAM adds few parameters, it computes spatial attention with a single-scale convolution. This confines feature extraction to a fixed receptive field and limits its performance in complex scenarios that require multi-scale information, which ultimately leads to suboptimal results in UAV-based object detection. To address this, we propose MSCBAM, a lightweight multi-scale attention mechanism developed as an improvement on CBAM. It captures multi-scale information effectively, enhances model performance across varying target sizes and complex environments, improves robustness to diverse inputs, and reduces reliance on network depth for performance gains, thereby further minimizing parameter size. The MSCBAM architecture is illustrated in Figure 6.

After channel attention produces $X_c$, a 1 × 1 convolution, which acts as a fully connected layer applied at every spatial position, is used to enable cross-channel interaction and fusion. The resulting channels are then split into four branches. Two branches apply sequential factorized convolutions, 1 × 3 followed by 3 × 1 and 1 × 5 followed by 5 × 1, respectively; these horizontal and vertical convolutions capture fine-grained local features and broader contextual information, with the larger kernels better suited to global features. In the max-pooling branch, a 3 × 3 max pooling layer extracts the strongest local feature responses, highlighting critical edge and corner information, and a subsequent 1 × 1 convolution adjusts the channel dimensions and integrates the salient features, enriching the discriminative information available for final fusion. In the average-pooling branch, average pooling aggregates local details at smaller scales while suppressing noise, producing smoother features, and a follow-up 1 × 1 convolution refines channel relationships, extracts essential local patterns, and compresses the output channels.

The multi-scale module in MSCBAM strengthens the model’s representation of multi-scale features by capturing and fusing feature information at different scales, improving its adaptability to complex scenes and diverse targets. This enables MSCBAM to perform well on images with varying scales and complex backgrounds. In summary, the multi-scale module applies a 1 × 1 convolution to $X_c$, passes the four channel groups of the result through the four branches described above, and concatenates the branch outputs; $X''$ denotes the final output obtained by applying spatial attention to this concatenated feature map.

MSCBAM reduces parameters while retaining multi-scale information through a parallel, lightweight multi-branch design (for example, a 1 × 5 convolution followed by a 5 × 1 convolution replaces a standard 5 × 5 convolution). Splitting the channels into four groups (C → C/4 per branch) further reduces the computational load.
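The savings described above can be checked with a small weight count. The sketch assumes, for illustration only, a 64-channel input, four branches of C/4 = 16 channels each, and branch convolutions that keep their slice width; biases are ignored and the helper name is hypothetical.

```python
def conv_params(c_in: int, c_out: int, kh: int, kw: int) -> int:
    """Weight count of a kh x kw convolution (bias ignored)."""
    return c_in * c_out * kh * kw


C = 64
CB = C // 4  # channels per branch after splitting C -> C/4

dense_full = conv_params(C, C, 5, 5)                                        # 5x5 over all C channels
dense_branch = conv_params(CB, CB, 5, 5)                                    # 5x5 over one C/4 branch
factorized_branch = conv_params(CB, CB, 1, 5) + conv_params(CB, CB, 5, 1)   # 1x5 then 5x1

print(dense_full, dense_branch, factorized_branch)  # 102400 6400 2560
```

Splitting the channels into quarters cuts this layer's weights by 16×, and factorizing 5 × 5 into 1 × 5 and 5 × 1 cuts the remainder by a further 2.5× (2K instead of K² weights per input-output channel pair, with K = 5).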

3.2.3. ESPPM Module

- (1)

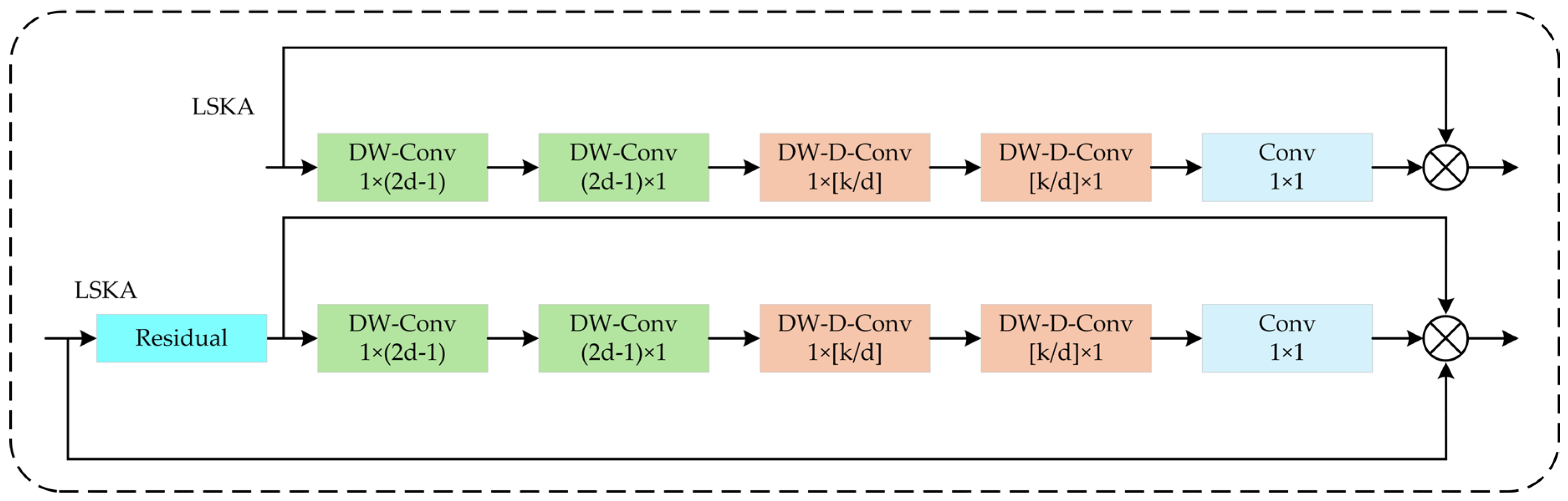

Large Separable Kernel Residual Attention Mechanism (LSKR)

Large Separable Kernel Attention (LSKA) [20] is a technique that achieves efficient feature capture and long-range dependency modeling by decomposing large-kernel convolution operations. It splits traditional convolution into smaller kernels while retaining the advantages of large-kernel convolution in capturing global features, making it particularly effective for processing sequential data.

In Figure 7, the left side illustrates the LSKA module, while the right side depicts the LSKR (Large Separable Kernel Residual) module. The latter combines large-kernel decomposition with residual connections to mitigate the loss of critical information in long sequences.

Although LSKA excels at capturing long-range dependencies, its feature representation capability remains limited in complex scenarios, while residual connections have proven effective in mitigating the vanishing gradient problem in deep neural networks. Building on this, we propose the Large Separable Kernel Residual Attention Mechanism (LSKR). By integrating a residual structure [21] into LSKA, LSKR maintains a direct link between the original input and output, addressing the limitations of neural networks in processing long-sequence data.

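The LSKR module is defined as

$$\mathrm{LSKR}(X) = \mathrm{Conv}_{1\times 1}\big(F(X) + X\big)$$

where $F(\cdot)$ represents the large separable kernel decomposition operation. Specifically, for a given large kernel of size $K \times K$ (e.g., 15 × 15), we decompose it into two smaller kernels of size $K \times 1$ and $1 \times K$ (e.g., 15 × 1 and 1 × 15). The operation can be expressed as

$$F(X) = \mathrm{Conv}_{1\times K}\big(\mathrm{Conv}_{K\times 1}(X)\big)$$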

Here, the two consecutive convolutions (first a vertical convolution and then a horizontal convolution) approximate the effect of a full K × K convolution but with reduced computational complexity. The output of F is then added to the original input X (residual connection), and a 1 × 1 convolution is applied to adjust the channel dimensions and integrate features.

This design allows LSKR to efficiently capture long-range dependencies while preserving the original feature information through the residual connection.
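The separable decomposition can be checked numerically: when a K × K kernel is the outer product of a vertical and a horizontal vector, a K × 1 pass followed by a 1 × K pass reproduces the full convolution exactly while using 2K instead of K² weights (a general K × K kernel is only approximated). The single-channel sketch below uses K = 5 in its check for brevity, reduces the 1 × 1 convolution to a scalar, and uses hypothetical helper names; it is not the authors' implementation.

```python
def conv2d_same(x, kernel):
    """Zero-padded 2-D cross-correlation (as in CNN layers); odd kernel sides keep the H x W size."""
    h, w = len(x), len(x[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for u in range(kh):
                for v in range(kw):
                    ii, jj = i + u - ph, j + v - pw
                    if 0 <= ii < h and 0 <= jj < w:
                        s += kernel[u][v] * x[ii][jj]
            out[i][j] = s
    return out


def separable(x, col, row):
    """F(X): a K x 1 (vertical) convolution followed by a 1 x K (horizontal) convolution."""
    vertical = [[c] for c in col]    # K rows, 1 column
    horizontal = [list(row)]         # 1 row, K columns
    return conv2d_same(conv2d_same(x, vertical), horizontal)


def lskr(x, col, row, scale=1.0):
    """Single-channel LSKR sketch: Conv_1x1(F(X) + X), with the 1 x 1 convolution reduced to a scalar."""
    f = separable(x, col, row)
    return [[scale * (f[i][j] + x[i][j]) for j in range(len(x[0]))] for i in range(len(x))]


if __name__ == "__main__":
    k = 15
    print(2 * k, k * k)  # weights per channel pair: 30 (15x1 + 1x15) vs 225 (15x15)
```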

- (2)

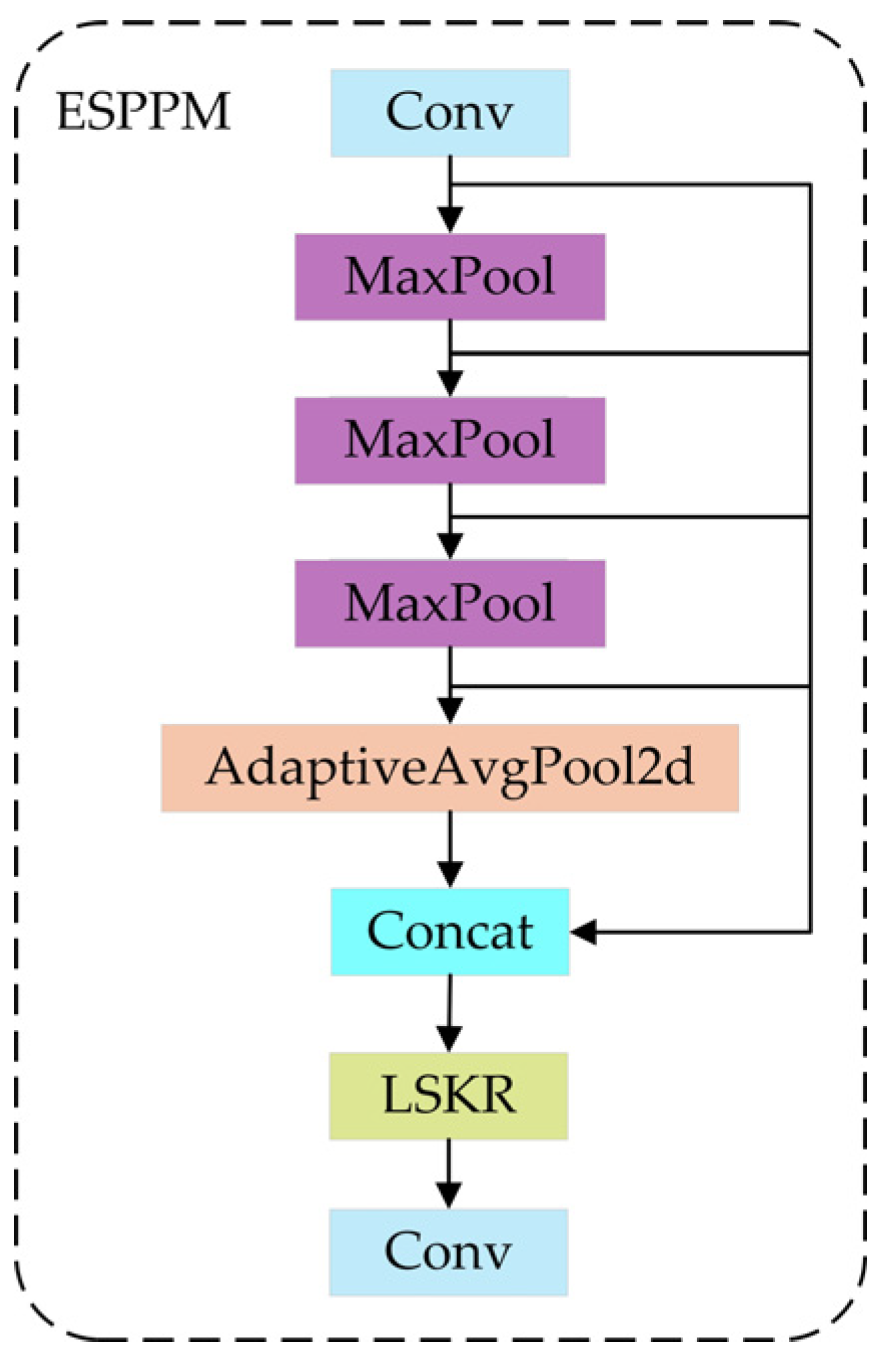

ESPPM module

The Spatial Pyramid Pooling Fast module (SPPF) in YOLOv8 captures multi-scale spatial information by applying 5 × 5 max pooling three times in sequence, which yields the same receptive fields as parallel 5 × 5, 9 × 9, and 13 × 13 pooling windows. The input and the three pooled feature maps are then concatenated and fused to generate multi-scale representations.
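In YOLOv8's SPPF, the three max-pooling layers are applied one after another with a 5 × 5 window, stride 1, and padding 2. The single-channel sketch below (hypothetical helper names, omitting the 1 × 1 convolutions that surround the pooling) checks that two and three such passes equal single 9 × 9 and 13 × 13 windows, which is why the sequential layout reproduces the receptive fields of the older parallel SPP design at lower cost.

```python
def max_pool_same(x, k):
    """Stride-1 k x k max pooling with k // 2 padding (padding never wins a max, as with -inf)."""
    h, w, p = len(x), len(x[0]), k // 2
    return [
        [
            max(
                x[ii][jj]
                for ii in range(max(0, i - p), min(h, i + p + 1))
                for jj in range(max(0, j - p), min(w, j + p + 1))
            )
            for j in range(w)
        ]
        for i in range(h)
    ]


def sppf(x, k=5):
    """SPPF-style pooling on one channel: returns [x, y1, y2, y3] for channel-wise concatenation."""
    y1 = max_pool_same(x, k)
    y2 = max_pool_same(y1, k)
    y3 = max_pool_same(y2, k)
    return [x, y1, y2, y3]


if __name__ == "__main__":
    grid = [[(i * 7 + j) * 37 % 50 for j in range(9)] for i in range(9)]
    _, y1, y2, y3 = sppf(grid)
    print(y2 == max_pool_same(grid, 9), y3 == max_pool_same(grid, 13))  # True True
```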

To address the challenges of UAV imagery (high resolution, complex background textures, and multi-scale targets), we integrate the Large Separable Kernel Residual Attention Mechanism (LSKR) into SPPF and combine it with adaptive average pooling (AdaptiveAvgPool), forming the Enhanced Spatial Pyramid Pooling Module (ESPPM), as illustrated in Figure 8.

The adaptive pooling branch generates multi-scale features by applying adaptive average pooling with different output sizes. For each scale $k \in S$, the operation is

$$Z_k = \mathrm{Conv}_{1\times 1}\big(\mathrm{AdaptiveAvgPool}_{k\times k}(X)\big)$$

where $\mathrm{AdaptiveAvgPool}_{k\times k}$ resizes the input feature map to $k \times k$ spatial dimensions by averaging over each region. The subsequent 1 × 1 convolution projects the pooled features to a lower-dimensional space with $C'$ channels. To restore the spatial resolution for concatenation, each $Z_k$ is upsampled to the original size $H \times W$ using bilinear interpolation, denoted as $Z'_k$:

$$Z'_k = \mathrm{Upsample}_{H\times W}(Z_k)$$
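Adaptive average pooling can be sketched as follows for a single channel. The bin edges follow the rule start = ⌊iH/k⌋, end = ⌈(i+1)H/k⌉, which to our understanding matches PyTorch's `nn.AdaptiveAvgPool2d`; bins may overlap when H is not divisible by k, and the subsequent 1 × 1 convolution and bilinear upsampling are omitted. The function name is hypothetical.

```python
def adaptive_avg_pool2d(x, k):
    """Average-pool an H x W map to k x k; output bin i covers rows floor(i*H/k) .. ceil((i+1)*H/k) - 1."""
    h, w = len(x), len(x[0])
    out = []
    for i in range(k):
        r0, r1 = (i * h) // k, ((i + 1) * h + k - 1) // k      # floor / ceil bin edges for rows
        row = []
        for j in range(k):
            c0, c1 = (j * w) // k, ((j + 1) * w + k - 1) // k  # floor / ceil bin edges for columns
            vals = [x[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


if __name__ == "__main__":
    fmap = [[4 * i + j for j in range(4)] for i in range(4)]   # values 0..15
    for k in (1, 3, 5, 7):                                      # S = {1, 3, 5, 7}
        pooled = adaptive_avg_pool2d(fmap, k)
        print(k, len(pooled), len(pooled[0]))
    print(adaptive_avg_pool2d(fmap, 2))  # [[2.5, 4.5], [10.5, 12.5]]
```

Because the output size is fixed rather than the window size, the same branch works for any input resolution, which is what lets ESPPM use S = {1, 3, 5, 7} regardless of the feature-map size.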

The ESPPM module can be expressed mathematically as follows. Let $X \in \mathbb{R}^{C\times H\times W}$ denote the input feature map, where $C$ is the number of channels and $H$ and $W$ are the height and width, respectively. ESPPM processes $X$ through two parallel branches, the adaptive pooling branch and the LSKR branch, and concatenates their outputs along the channel dimension:

$$Y = \mathrm{Concat}\big(Z'_1, Z'_3, Z'_5, Z'_7, \mathrm{LSKR}(X)\big), \qquad Z'_k = \mathrm{Upsample}_{H\times W}\Big(\mathrm{Conv}_{1\times 1}\big(\mathrm{AdaptiveAvgPool}_{k\times k}(X)\big)\Big), \quad k \in S$$

where $S = \{1, 3, 5, 7\}$ is the set of pooling scales (the output sizes of the adaptive pooling), $\mathrm{AdaptiveAvgPool}_{k\times k}(\cdot)$ performs adaptive average pooling to a fixed size $k \times k$ and is followed by a 1 × 1 convolution that adjusts the channels to $C'$ (typically $C' = C/4$ for each scale), $\mathrm{Upsample}_{H\times W}(\cdot)$ denotes bilinear upsampling to $H \times W$, $\mathrm{LSKR}(\cdot)$ denotes the Large Separable Kernel Residual Attention Mechanism (detailed in Equation (12) above), and $\mathrm{Concat}$ concatenates feature maps along the channel dimension. A 1 × 1 convolution then fuses the concatenated features and reduces the channel dimension to match the input channel count $C$:

$$Y_{\mathrm{out}} = \mathrm{Conv}_{1\times 1}(Y)$$

where $Y_{\mathrm{out}}$ is the final output of the ESPPM module.

The Enhanced Spatial Pyramid Pooling Module (ESPPM) leverages the advantages of the Large Separable Kernel Residual (LSKR) mechanism in long-sequence modeling. By decomposing large-kernel convolution operations, ESPPM maintains efficient feature extraction. The large-kernel convolution provides a broad receptive field, enabling the capture of long-range dependencies and global information, such as extensive backgrounds and multi-scale targets in UAV imagery. Small-kernel convolutions efficiently extract local features while reducing computational complexity. By splitting large kernels into smaller ones, LSKR achieves high precision while improving computational efficiency. The residual structure enhances model stability during the processing of long-sequence data and multi-scale feature aggregation. Adaptive average pooling operations perform pooling at different scales on the feature map, generating multi-scale information to ensure comprehensive representation of features across varying resolutions.

Within the ESPPM module, the multi-scale feature maps generated through adaptive average pooling are fused with the long-range dependency features produced by the LSKR module. This fusion strengthens the multi-scale feature aggregation capability of the feature maps. Through LSKR’s long-range dependency modeling, effective information interaction between features of different scales is ensured, optimizing the module’s ability to handle complex UAV imagery with diverse backgrounds, multi-scale targets, and high-resolution details.

On an NVIDIA Jetson TX2, ESPPM has an inference latency of 4.3 ms compared with 3.9 ms for SPPF, an increase of only about 10%, while the multi-scale feature gain improves mAP50 by 5.1%.

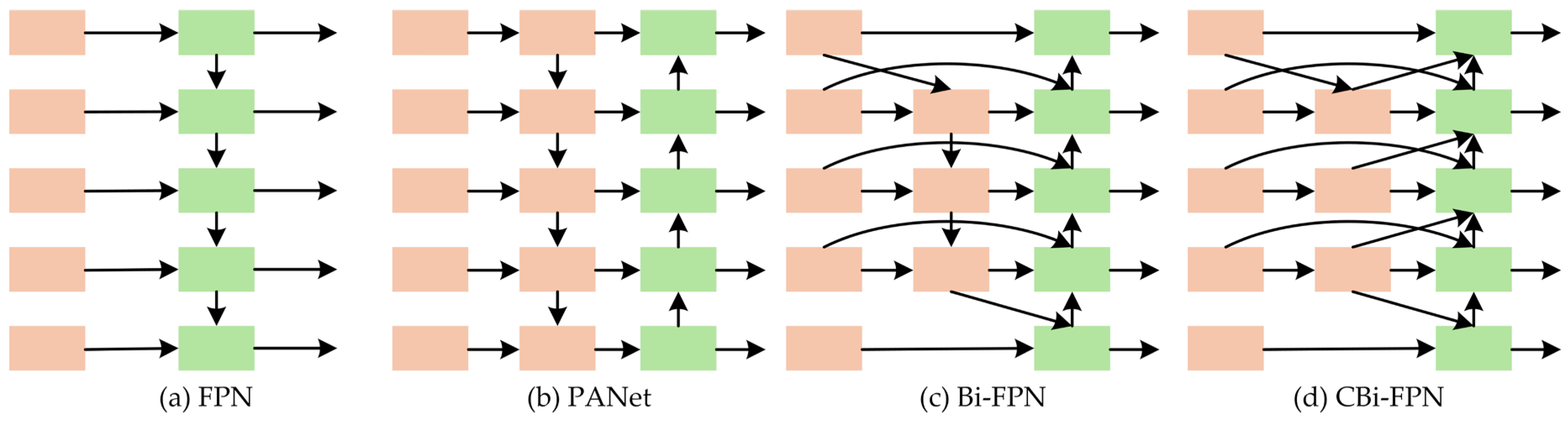

3.2.4. Cross-Layer Weighted Bidirectional Feature Pyramid Network (CBiFPN)

Figure 9 compares the fusion structure of CBiFPN with three other feature fusion structures. In real-world scenarios, UAV imagery often suffers from cluttered backgrounds and interference from other objects. To address these challenges, this study replaces the PANet in YOLOv8n with a Cross-layer Weighted Bidirectional Feature Pyramid Network (CBiFPN).

Figure 9a shows the Feature Pyramid Network (FPN) [22], designed to extract multi-scale features. FPN introduces lateral connections and a top-down pathway into existing convolutional neural networks to construct a pyramid-like feature hierarchy. First, convolutional operations are applied to feature maps at different hierarchical levels. Next, features are laterally transferred into a top-down fusion pathway. Finally, upsampling merges high-level semantic features with low-level detailed features, enriching multi-scale representations.

Figure 9b depicts the Path Aggregation Network (PANet), which enhances traditional FPN with a more complex fusion mechanism combining top-down and bottom-up pathways. Bidirectional feature propagation and fusion allow prediction layers to integrate features from both high and low levels, shortening information paths and improving detection performance.

Figure 9c presents the Bidirectional Feature Pyramid Network (BiFPN) [10]. BiFPN optimizes PANet by selectively retaining effective blocks for multi-scale weighted fusion and cross-scale operations, enabling more efficient feature fusion and propagation. This reduces computational and memory costs, improves network efficiency, enhances inter-layer feature transfer, and better preserves fine-grained details and semantic information across hierarchical features.

However, as network depth increases, these feature fusion structures lose part of the detailed features across network layers, so small-target characteristics in complex scenes are not recognized accurately enough and detection precision suffers. To address this issue, this study introduces a Cross-layer Weighted Bidirectional Feature Pyramid Network (CBiFPN) to replace PANet. Building upon BiFPN, CBiFPN adds a cross-layer data flow connection (Figure 9d), which reduces the loss of fine-grained feature information for small targets and enhances the network’s expressive capability.
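BiFPN combines the inputs of each fusion node with learnable non-negative weights; EfficientDet's "fast normalized fusion" computes $O = \sum_i w_i I_i / (\epsilon + \sum_j w_j)$ with $w_i \ge 0$ enforced by a ReLU and $\epsilon = 10^{-4}$. Assuming CBiFPN keeps this rule (the text does not give its exact fusion formula), a cross-layer connection such as P3 → P5 simply adds one more weighted input to the receiving node. In the sketch, inputs are flattened lists already resized to the same resolution, and all feature values and weights are hypothetical.

```python
def fast_normalized_fusion(inputs, weights, eps=1e-4):
    """O = sum_i w_i * I_i / (eps + sum_j w_j), with each w_i clamped at 0 (ReLU) as in BiFPN."""
    w = [max(0.0, wi) for wi in weights]
    denom = eps + sum(w)
    size = len(inputs[0])
    return [sum(wi * feat[n] for wi, feat in zip(w, inputs)) / denom for n in range(size)]


if __name__ == "__main__":
    p5_in = [1.0, 2.0]     # lateral input at the P5 level (hypothetical values)
    p4_path = [3.0, 4.0]   # resampled input from the neighbouring pyramid level
    p3_cross = [5.0, 6.0]  # extra cross-layer input, e.g. P3 -> P5 after downsampling
    print(fast_normalized_fusion([p5_in, p4_path], [1.0, 1.0]))
    print(fast_normalized_fusion([p5_in, p4_path, p3_cross], [1.0, 1.0, 0.5]))
```

Normalizing by the weight sum keeps the fused output on the same scale as its inputs, so adding a cross-layer input does not inflate feature magnitudes; a weight driven to zero by training effectively prunes that connection.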

Compared with BiFPN, CBiFPN offers several advantages. First, its cross-layer connection mechanism fuses image features from different network levels, increasing feature diversity and richness and yielding more thorough and balanced feature integration. Second, CBiFPN has a stronger information flow that effectively propagates and fuses low-level and high-level features. This helps with target size variation, occlusion, blurred boundaries, and interference from other objects, improving the detection of small targets in complex scenarios. Third, CBiFPN keeps computational complexity and memory consumption low, preserving the operational efficiency of the detection model without sacrificing performance. Together, these properties improve the model’s ability to handle cluttered UAV imagery with high precision and real-time responsiveness.

The structural details of CBiFPN in Figure 9d highlight its bidirectional pathways and cross-layer interactions, emphasizing its adaptability to resource-constrained edge devices such as UAVs. CBiFPN fuses shallow details and deep semantics through cross-layer connections (such as P3 → P5) to alleviate the feature loss of occluded targets. Experiments show that recall increases by 7.3% in occluded scenarios.

CBiFPN limits redundancy by keeping its cross-layer connections sparse, fusing only key layers. Compared with BiFPN, it adds only 0.2 M parameters while increasing mAP50 by 3.5%.

To stabilize training, cosine annealing learning rate scheduling (initial lr = 0.01) and gradient clipping (threshold = 1.0) were adopted. The loss terms were weighted (classification : regression = 1 : 2) to balance optimization for small objects, and no gradient explosion or overfitting was observed.
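The training settings above can be sketched in plain Python. Several details are assumptions not stated in the text: the schedule decays to a minimum rate of 0 over a fixed number of steps, "gradient clipping (threshold = 1.0)" is read as clipping the global L2 norm, and the 1 : 2 weighting is applied as a simple weighted sum of the classification and regression losses. In PyTorch these roles are typically played by `torch.optim.lr_scheduler.CosineAnnealingLR` and `torch.nn.utils.clip_grad_norm_`.

```python
import math


def cosine_lr(step: int, total_steps: int, lr0: float = 0.01, lr_min: float = 0.0) -> float:
    """Cosine annealing from lr0 (initial lr = 0.01) down to lr_min (assumed 0)."""
    return lr_min + 0.5 * (lr0 - lr_min) * (1.0 + math.cos(math.pi * step / total_steps))


def clip_by_global_norm(grads, max_norm: float = 1.0):
    """Rescale the gradient vector so that its L2 norm is at most max_norm (threshold = 1.0)."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    return [g * (max_norm / norm) for g in grads]


def weighted_loss(cls_loss: float, reg_loss: float, w_cls: float = 1.0, w_reg: float = 2.0) -> float:
    """Classification : regression weighting of 1 : 2."""
    return w_cls * cls_loss + w_reg * reg_loss


if __name__ == "__main__":
    print([round(cosine_lr(s, 100), 5) for s in (0, 50, 100)])  # [0.01, 0.005, 0.0]
    print(clip_by_global_norm([3.0, 4.0]))                      # approximately [0.6, 0.8]
    print(weighted_loss(1.0, 0.5))                              # 2.0
```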