Mechatronic Anticollision System for Electric Wheelchairs Based on a Time-of-Flight Sensor

Abstract

1. Introduction

2. Materials and Methods

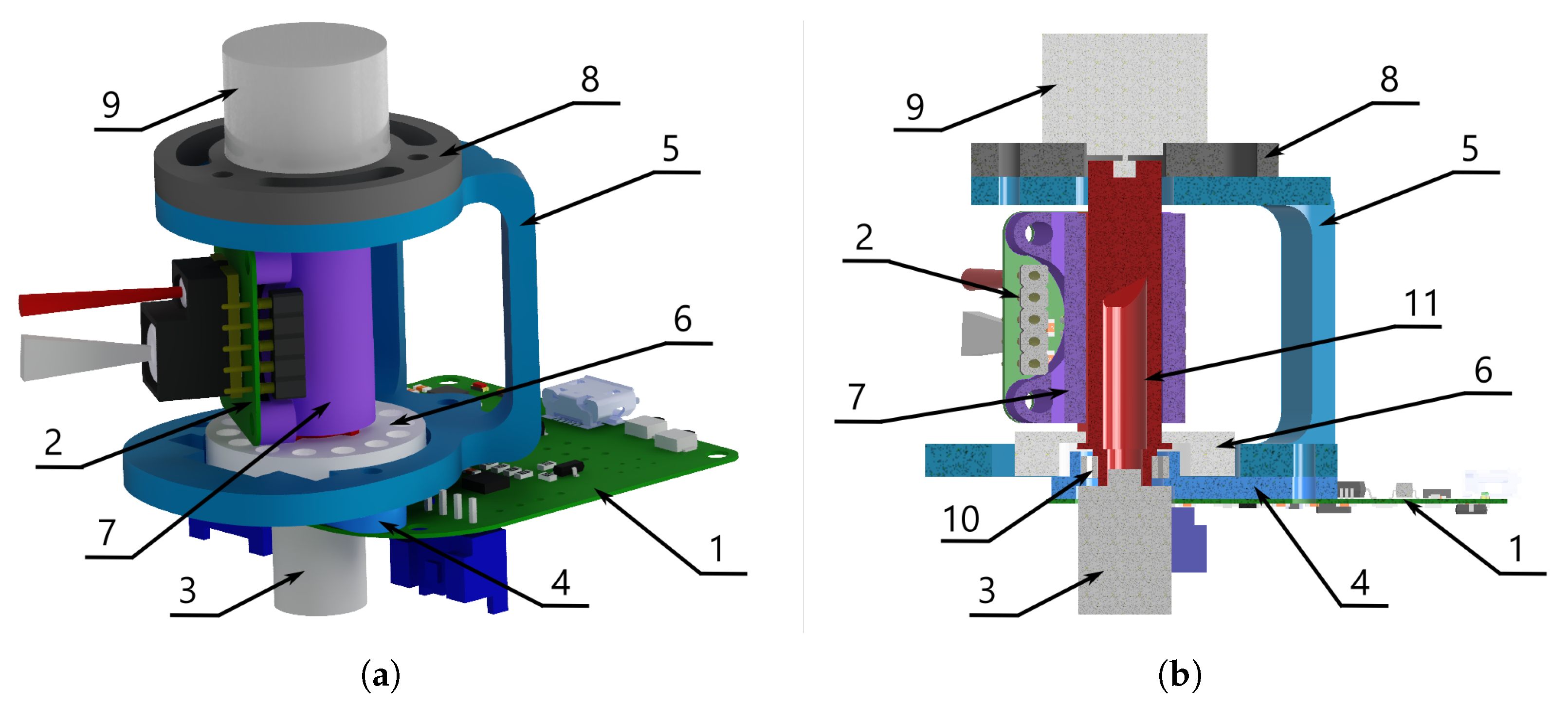

2.1. Description of the Sensor

2.2. Design and Manufacturing

2.3. Control System

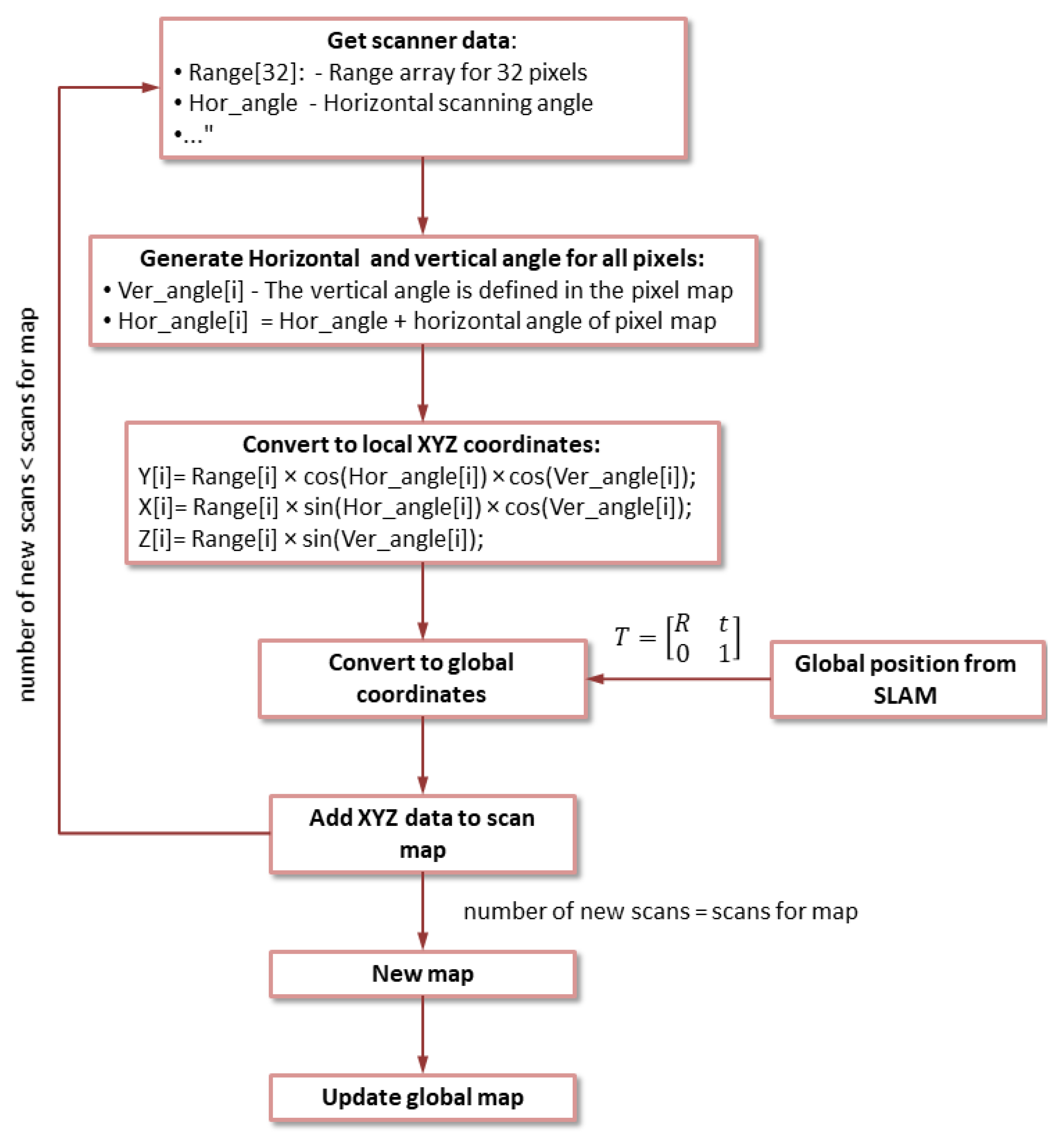

2.4. Data Acquisition

3. Results

3.1. System Evaluation

3.1.1. Evaluation of System Accuracy

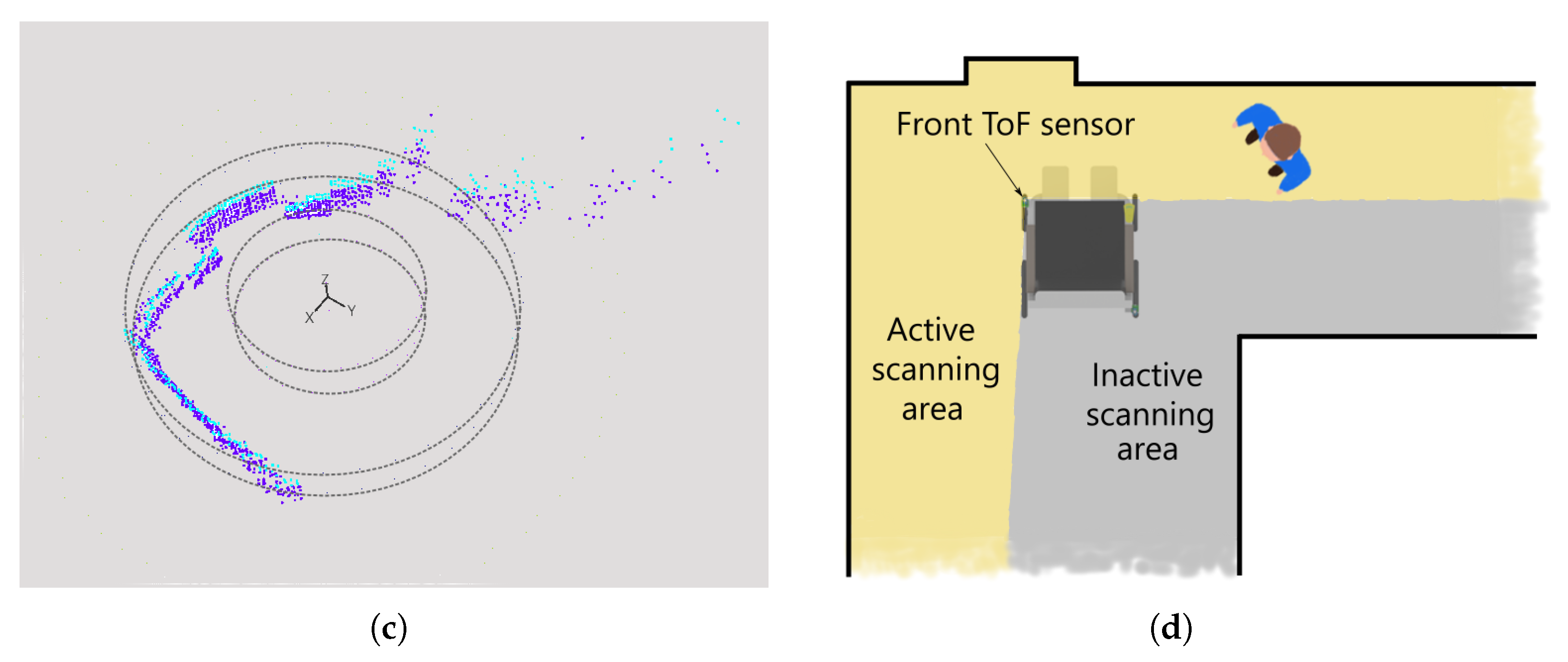

3.1.2. Evaluation of Mapping Procedure

4. Discussion

4.1. Analysis of the Results

4.2. Comparison to Previous Model

4.3. Comparison with Existing Systems

4.4. Limitations

- Limited vertical field of view: The current sensor setup offers a vertical field of view of only 12.4°, which may result in missing low-lying obstacles (for example, curbs) or overhead hazards.

- Susceptibility to environmental conditions: Real-world factors such as dynamic lighting, reflective or transparent surfaces, and multiple reflections have not yet been fully evaluated. These factors may significantly affect distance measurement reliability.

- Reduced accuracy on low-reflectivity surfaces: Preliminary tests show decreased measurement accuracy for dark objects beyond 3 m, which limits the detection of black clothing or furniture.

- Motion-induced distortions and latency: The current system has not yet been quantitatively tested for distortions during motion or delays in response to fast-moving obstacles, both of which are critical for real-time navigation.

- Variable performance due to Dynamic Configuration Adaptation (DCA) mode: The initial static sensor configuration proved inadequate in challenging scenarios—such as dark or reflective surfaces or objects at extreme distances—where measurements suffered from pixel saturation or weak return signals. To address this, the system employs DCA. This mechanism dynamically adjusts sensor parameters (e.g., integration depth, gain, and output power) to optimize measurement quality under difficult conditions. While DCA enhances robustness, it introduces variability in scanning time, potentially affecting real-time responsiveness.

- Encoder inaccuracies: The current system relies on encoder-based position determination using a stepper motor, assuming accurate and repeatable sensor positioning (see Section 2.3). However, at higher rotational speeds, the stepper motor is prone to step loss, which introduces angular inaccuracies during scanning. This issue becomes more pronounced during partial scans—used to track nearby or moving obstacles—where full rotations are skipped and the step counter is not reset. Over time, accumulated step loss without periodic recalibration degrades angular precision, negatively affecting obstacle localization and the overall reliability of real-time navigation.

- Lack of user-level integration: The current system evaluation is limited to a technical validation of the system’s core components, including 3D mapping, measurement accuracy, and hardware design. No user interaction studies or wheelchair integration tests have been conducted.
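
The DCA behaviour described above can be pictured as a feedback rule that reads the return amplitude of one frame and chooses the settings for the next. The following is a minimal sketch under stated assumptions, not the sensor's actual DCA algorithm. It adjusts only a hypothetical integration time and gain step, and its thresholds, limits, and adjustment order are invented for illustration. It also shows where the scanning-time variability comes from: each weak return doubles the integration time of the following frame.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorConfig:
    integration_us: float  # hypothetical integration time per frame [us]
    gain: int              # hypothetical analog gain step (0 = lowest)


# All limits and thresholds below are illustrative assumptions, not sensor values.
MIN_INTEGRATION_US = 100.0
MAX_INTEGRATION_US = 5000.0
MAX_GAIN = 3
AMPLITUDE_HIGH = 400.0  # above this the return is treated as close to saturation
AMPLITUDE_LOW = 50.0    # below this the return is treated as too weak


def adapt(config: SensorConfig, amplitude: float) -> SensorConfig:
    """Choose the configuration for the next frame from the last frame's amplitude."""
    if amplitude > AMPLITUDE_HIGH:
        # Too strong: lower the gain first, then shorten the integration time.
        if config.gain > 0:
            return SensorConfig(config.integration_us, config.gain - 1)
        return SensorConfig(max(MIN_INTEGRATION_US, config.integration_us / 2), 0)
    if amplitude < AMPLITUDE_LOW:
        # Too weak: lengthen the integration time first, then raise the gain.
        if config.integration_us < MAX_INTEGRATION_US:
            return SensorConfig(min(MAX_INTEGRATION_US, config.integration_us * 2), config.gain)
        return SensorConfig(config.integration_us, min(MAX_GAIN, config.gain + 1))
    return config  # amplitude within range: keep the current settings


if __name__ == "__main__":
    cfg = SensorConfig(integration_us=1000.0, gain=1)
    total_us = 0.0
    # A dark target (weak returns) followed by a bright one (strong returns).
    for amp in [20.0, 30.0, 45.0, 120.0, 600.0, 650.0]:
        total_us += cfg.integration_us  # time spent on this frame
        cfg = adapt(cfg, amp)
        print(f"amplitude={amp:6.1f} -> next {cfg}")
    print(f"total integration time: {total_us:.0f} us")
```

Adjusting gain before integration time on strong returns, and the reverse on weak returns, is one possible ordering chosen here to keep frames short when the signal is good. A real implementation could weigh output power and noise differently.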

4.5. Future Work

- Sensor augmentation: To expand the vertical field of view of the system (currently 12.4°) and improve the detection of obstacles such as low curbs and overhead objects, additional ToF sensors will be integrated in the next development phase. The USB-based architecture of the system enables seamless sensor expansion without major hardware modifications, supporting broader coverage in diverse environments, such as homes, hospitals, shopping centers, and outdoor pathways. These enhancements will undergo real-world testing to validate their effectiveness for assistive mobility applications.

- Motion-related performance evaluation: Comprehensive tests will be conducted to quantify motion-induced distortions, system latency, and accuracy in dynamic scenarios with moving obstacles. These evaluations will provide essential metrics to ensure robust real-time operation in real-world environments. Further details on the planned test scenarios will be reported in subsequent studies.

- Improved detection of dark surfaces: The system will be enhanced with additional sensors and optimized signal processing algorithms to increase its robustness against low-reflectivity surfaces. Dedicated experiments will be conducted under varied lighting conditions.

- Dynamic Configuration Adaptation (DCA) validation: Real-world performance of DCA will be evaluated in varied environments such as crowded indoor spaces and outdoor settings, focusing on the trade-off between reliability and scanning time.

- Encoder system redesign: The encoder will be redesigned to improve position tracking accuracy. A detailed analysis will be conducted to assess angular errors and their impact on overall scanning precision.

- Integration with collision avoidance systems: The device will be integrated with an advanced collision avoidance system, such as the one proposed by Pieniążek and Szaj [37], which we intend to develop further into an autonomous solution. This integration will enable predictive obstacle avoidance using motion control algorithms, enhancing both safety and user experience.

- Usability and interface development: Future versions will prioritize user-centered design. This includes intuitive control interfaces, automatic obstacle response (slowing/stopping), and potential autonomous navigation functionalities. The system will be optimized for compact integration on wheelchairs, ensuring it is lightweight and non-intrusive.

- Long-term validation and benchmarking: Following this preliminary study, extended testing will be conducted in both controlled and real-world environments under varied conditions (e.g., lighting scenarios) to evaluate long-term performance stability. In addition, a comparative evaluation with alternative sensing solutions will be performed to benchmark the accuracy, latency, and robustness of the system.
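
The step-loss problem listed under encoder inaccuracies, and the recalibration that the encoder redesign targets, can be illustrated with a small simulation. This is a minimal sketch, assuming a hypothetical 200 steps per revolution, a simple fault model in which every 50th commanded pulse is missed, and a hypothetical reference mark that resets the step counter once per true revolution. None of these values are taken from the system described here.

```python
class StepCounter:
    """Open-loop angle estimate built from commanded stepper pulses."""

    def __init__(self, steps_per_rev: int) -> None:
        self.steps_per_rev = steps_per_rev
        self.commanded = 0  # pulses commanded since the last reset

    def step(self) -> None:
        self.commanded += 1

    def home(self) -> None:
        # Hypothetical reference mark: the true shaft angle is known to be zero here.
        self.commanded = 0


def worst_error_deg(total_pulses: int, steps_per_rev: int,
                    lose_every: int, home_each_rev: bool) -> float:
    """Largest difference between counted and true angle during a run.

    Fault model (assumption): the motor misses every `lose_every`-th pulse.
    """
    counter = StepCounter(steps_per_rev)
    true_since_home = 0
    worst_steps = 0
    for pulse in range(1, total_pulses + 1):
        counter.step()
        if pulse % lose_every != 0:
            true_since_home += 1  # this pulse actually moved the shaft
        worst_steps = max(worst_steps, counter.commanded - true_since_home)
        if home_each_rev and true_since_home == steps_per_rev:
            counter.home()  # the shaft passes the reference mark
            true_since_home = 0
    return worst_steps * 360.0 / steps_per_rev


if __name__ == "__main__":
    # 2000 pulses, 200 steps/rev, one missed pulse in 50 (all hypothetical values).
    print("no reset (like repeated partial scans):", worst_error_deg(2000, 200, 50, False), "deg")
    print("reset once per revolution:", worst_error_deg(2000, 200, 50, True), "deg")
```

Without a reset, the angular error grows with every missed pulse. A once-per-revolution reset bounds it to the pulses lost within a single revolution, which is why skipping full rotations during partial scans removes that bound.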

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DCA | Dynamic Configuration Adaptation |
| FOV | Field of View |
| IR | Infrared |
| LiDAR | Light Detection and Ranging |
| MCU | Microcontroller |
| PCB | Printed Circuit Board |
| SLAM | Simultaneous Localization and Mapping |
| SPI | Serial Peripheral Interface |
| ToF | Time of Flight |
| WHO | World Health Organization |

Appendix A

| Pixel Number | White Mean [m] | White SD [m] | Brown Mean [m] | Brown SD [m] | Black Mean [m] | Black SD [m] |
|---|---|---|---|---|---|---|
| 0 | 0.541 | 0.004 | 0.538 | 0.003 | 0.571 | 0.012 |
| 1 | 0.534 | 0.004 | 0.530 | 0.003 | 0.554 | 0.012 |
| 2 | 0.502 | 0.005 | 0.526 | 0.003 | 0.552 | 0.011 |
| 3 | 0.479 | 0.003 | 0.518 | 0.003 | 0.543 | 0.009 |
| 4 | 0.536 | 0.004 | 0.536 | 0.004 | 0.558 | 0.015 |
| 5 | 0.531 | 0.004 | 0.522 | 0.003 | 0.553 | 0.012 |
| 6 | 0.507 | 0.008 | 0.517 | 0.006 | 0.560 | 0.021 |
| 7 | 0.486 | 0.004 | 0.521 | 0.004 | 0.533 | 0.014 |
| 8 | 0.535 | 0.004 | 0.528 | 0.003 | 0.553 | 0.010 |
| 9 | 0.525 | 0.005 | 0.511 | 0.005 | 0.551 | 0.024 |
| 10 | 0.515 | 0.009 | 0.519 | 0.011 | 0.572 | 0.035 |
| 11 | 0.487 | 0.004 | 0.526 | 0.007 | 0.551 | 0.016 |
| 12 | 0.527 | 0.003 | 0.522 | 0.002 | 0.550 | 0.008 |
| 13 | 0.519 | 0.006 | 0.514 | 0.005 | 0.546 | 0.016 |
| 14 | 0.521 | 0.009 | 0.528 | 0.012 | 0.588 | 0.039 |
| 15 | 0.486 | 0.004 | 0.519 | 0.006 | 0.560 | 0.018 |
| 16 | 0.528 | 0.004 | 0.521 | 0.003 | 0.547 | 0.008 |
| 17 | 0.522 | 0.005 | 0.508 | 0.004 | 0.548 | 0.014 |
| 18 | 0.513 | 0.009 | 0.508 | 0.010 | 0.577 | 0.029 |
| 19 | 0.482 | 0.005 | 0.526 | 0.012 | 0.570 | 0.027 |
| 20 | 0.527 | 0.003 | 0.518 | 0.003 | 0.547 | 0.008 |
| 21 | 0.519 | 0.005 | 0.502 | 0.004 | 0.545 | 0.016 |
| 22 | 0.506 | 0.006 | 0.509 | 0.004 | 0.545 | 0.016 |
| 23 | 0.481 | 0.005 | 0.513 | 0.006 | 0.553 | 0.017 |
| 24 | 0.527 | 0.004 | 0.516 | 0.002 | 0.550 | 0.009 |
| 25 | 0.523 | 0.004 | 0.509 | 0.003 | 0.584 | 0.010 |
| 26 | 0.512 | 0.004 | 0.514 | 0.003 | 0.561 | 0.014 |
| 27 | 0.487 | 0.004 | 0.513 | 0.003 | 0.550 | 0.011 |
| 28 | 0.523 | 0.005 | 0.512 | 0.003 | 0.679 | 0.009 |
| 29 | 0.528 | 0.004 | 0.515 | 0.003 | 0.563 | 0.013 |
| 30 | 0.508 | 0.007 | 0.511 | 0.004 | 0.607 | 0.010 |
| 31 | 0.484 | 0.004 | 0.512 | 0.003 | 0.551 | 0.010 |
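
The per-pixel table above can be condensed into two numbers per surface: the spread of the pixel means (a pixel-to-pixel offset) and a pooled SD (frame-to-frame noise). This is a minimal sketch of one such summary. It assumes that every pixel was sampled the same number of times, so that the pooled SD is simply the root-mean-square of the per-pixel SDs. The summary function and its output keys are illustrative, not part of the original analysis.

```python
import math
from statistics import fmean

# Appendix A values for pixels 0..31 [m], transcribed row by row.
WHITE_MEAN = [0.541, 0.534, 0.502, 0.479, 0.536, 0.531, 0.507, 0.486,
              0.535, 0.525, 0.515, 0.487, 0.527, 0.519, 0.521, 0.486,
              0.528, 0.522, 0.513, 0.482, 0.527, 0.519, 0.506, 0.481,
              0.527, 0.523, 0.512, 0.487, 0.523, 0.528, 0.508, 0.484]
WHITE_SD = [0.004, 0.004, 0.005, 0.003, 0.004, 0.004, 0.008, 0.004,
            0.004, 0.005, 0.009, 0.004, 0.003, 0.006, 0.009, 0.004,
            0.004, 0.005, 0.009, 0.005, 0.003, 0.005, 0.006, 0.005,
            0.004, 0.004, 0.004, 0.004, 0.005, 0.004, 0.007, 0.004]
BROWN_MEAN = [0.538, 0.530, 0.526, 0.518, 0.536, 0.522, 0.517, 0.521,
              0.528, 0.511, 0.519, 0.526, 0.522, 0.514, 0.528, 0.519,
              0.521, 0.508, 0.508, 0.526, 0.518, 0.502, 0.509, 0.513,
              0.516, 0.509, 0.514, 0.513, 0.512, 0.515, 0.511, 0.512]
BROWN_SD = [0.003, 0.003, 0.003, 0.003, 0.004, 0.003, 0.006, 0.004,
            0.003, 0.005, 0.011, 0.007, 0.002, 0.005, 0.012, 0.006,
            0.003, 0.004, 0.010, 0.012, 0.003, 0.004, 0.004, 0.006,
            0.002, 0.003, 0.003, 0.003, 0.003, 0.003, 0.004, 0.003]
BLACK_MEAN = [0.571, 0.554, 0.552, 0.543, 0.558, 0.553, 0.560, 0.533,
              0.553, 0.551, 0.572, 0.551, 0.550, 0.546, 0.588, 0.560,
              0.547, 0.548, 0.577, 0.570, 0.547, 0.545, 0.545, 0.553,
              0.550, 0.584, 0.561, 0.550, 0.679, 0.563, 0.607, 0.551]
BLACK_SD = [0.012, 0.012, 0.011, 0.009, 0.015, 0.012, 0.021, 0.014,
            0.010, 0.024, 0.035, 0.016, 0.008, 0.016, 0.039, 0.018,
            0.008, 0.014, 0.029, 0.027, 0.008, 0.016, 0.016, 0.017,
            0.009, 0.010, 0.014, 0.011, 0.009, 0.013, 0.010, 0.010]


def summarize(means, sds):
    """Spread of the pixel means and pooled within-pixel SD for one surface."""
    return {
        "mean_of_means": fmean(means),
        "spread": max(means) - min(means),  # pixel-to-pixel offset range
        "min_pixel": means.index(min(means)),
        "max_pixel": means.index(max(means)),
        # Root-mean-square of per-pixel SDs; valid if every pixel had the same sample count.
        "pooled_sd": math.sqrt(fmean(sd * sd for sd in sds)),
    }


SURFACES = {
    "White": (WHITE_MEAN, WHITE_SD),
    "Brown": (BROWN_MEAN, BROWN_SD),
    "Black": (BLACK_MEAN, BLACK_SD),
}

if __name__ == "__main__":
    for name, (means, sds) in SURFACES.items():
        s = summarize(means, sds)
        print(f"{name:<6} mean {s['mean_of_means']:.3f} m, spread {s['spread']:.3f} m "
              f"(min at pixel {s['min_pixel']}, max at pixel {s['max_pixel']}), "
              f"pooled SD {s['pooled_sd']:.4f} m")
```

On the black surface, the spread is dominated by pixel 28 (0.679 m), while the pooled SD reflects the generally larger per-pixel scatter on that surface.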

Appendix B

References

- World Health Organization. Global Report on Health Equity for Persons with Disabilities; License: CC BY-NC-SA 3.0 IGO; World Health Organization: Geneva, Switzerland, 2022.

- Labbé, D.; Mortenson, W.B.; Rushton, P.W.; Demers, L.; Miller, W.C. Mobility and participation among ageing powered wheelchair users: Using a lifecourse approach. Ageing Soc. 2018, 40, 626–642.

- Sahoo, S.K.; Choudhury, B.B. A review on smart robotic wheelchairs with advancing mobility and independence for individuals with disabilities. J. Decis. Anal. Intell. Comput. 2023, 3, 221–242.

- Erturk, E.; Kim, S.; Lee, D. Driving Assistance System with Obstacle Avoidance for Electric Wheelchairs. Sensors 2024, 24, 4644.

- Orozco-Magdaleno, E.C.; Cafolla, D.; Castillo-Castañeda, E.; Carbone, G. A hybrid legged-wheeled obstacle avoidance strategy for service operations. SN Appl. Sci. 2020, 2, 329.

- Habib, M.K. Real Time Mapping and Dynamic Navigation for Mobile Robots. Int. J. Adv. Robot. Syst. 2007, 4, 35.

- Rojas, M.; Ponce, P.; Molina, A. A fuzzy logic navigation controller implemented in hardware for an electric wheelchair. Int. J. Adv. Robot. Syst. 2018, 15.

- Okonkwo, C.; Awolusi, I. Environmental sensing in autonomous construction robots: Applicable technologies and systems. Autom. Constr. 2025, 172, 106075.

- Dahmani, M.; Chowdhury, M.E.H.; Khandakar, A.; Rahman, T.; Al-Jayyousi, K.; Hefny, A.; Kiranyaz, S. An Intelligent and Low-Cost Eye-Tracking System for Motorized Wheelchair Control. Sensors 2020, 20, 3936.

- Mulyanto, A.; Borman, R.I.; Prasetyawana, P.; Sumarudin, A. Implementation 2d lidar and camera for detection object and distance based on ros. JOIV Int. J. Inform. Vis. 2020, 4, 231–236.

- Chandran, N.K.; Sultan, M.T.H.; Łukaszewicz, A.; Shahar, F.S.; Holovatyy, A.; Giernacki, W. Review on Type of Sensors and Detection Method of Anti-Collision System of Unmanned Aerial Vehicle. Sensors 2023, 23, 6810.

- Sui, J.; Yang, L.; Zhang, X.; Zhang, X. Laser Measurement Key Technologies and Application in Robot Autonomous Navigation. Int. J. Pattern Recognit. Artif. Intell. 2011, 25, 1127–1146.

- Adams, M.; Wijesoma, W.; Shacklock, A. Autonomous navigation: Achievements in complex environments. IEEE Instrum. Meas. Mag. 2007, 10, 15–21.

- Wu, Z.; Meng, Z.; Xu, Y.; Zhao, W. A Vision-Based Approach for Autonomous Motion in Cluttered Environments. Appl. Sci. 2022, 12, 4420.

- Lopac, N.; Jurdana, I.; Brnelić, A.; Krljan, T. Application of Laser Systems for Detection and Ranging in the Modern Road Transportation and Maritime Sector. Sensors 2022, 22, 5946.

- Szaj, W.; Fudali, P.; Wojnarowska, W.; Miechowicz, S. Mechatronic Anti-Collision System for Electric Wheelchairs Based on 2D LiDAR Laser Scan. Sensors 2021, 21, 8461.

- Delmas, S.; Morbidi, F.; Caron, G.; Albrand, J.; Jeanne-Rose, M.; Devigne, L.; Babel, M. SpheriCol: A Driving Assistance System for Power Wheelchairs Based on Spherical Vision and Range Measurements. In Proceedings of the 2021 IEEE/SICE International Symposium on System Integration (SII), Iwaki, Japan, 11–14 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 505–510.

- Patar, M.; Ramlee, N.; Mahmud, J.; Lee, H.; Hanafusa, A. Cost-Effective Vision based Obstacle Avoidance System integrated Multi Array Ultrasonic sensor for Smart Wheelchair. Int. J. Recent Technol. Eng. 2019, 8, 6888–6893.

- Rifath Ahamed, E.; Muthukrishnan, R.; Karthik, S.; Vijayakumar, G.; Salman Hari, A.; Vinoth Kumar, M. Vision Controlled Motorised Wheelchair. Int. J. Res. Appl. Sci. Eng. Technol. 2023, 11, 7–17.

- Luo, H.; Cao, X.; Dong, Y.; Li, Y. Simulation and experimental study on the stability and comfortability of the wheelchair human system under uneven pavement. Front. Bioeng. Biotechnol. 2023, 11, 1279675.

- Derasari, P.M.; Sasikumar, P. Motorized Wheelchair with Bluetooth Control and Automatic Obstacle Avoidance. Wirel. Pers. Commun. 2021, 123, 2261–2282.

- Njah, M. Safety Wheelchair Navigation System. J. Microcontroll. Eng. Appl. 2020, 7, 6–11.

- Safy, M.; Metwally, M.; Thabet, N.E.; Ahmed Ibrahim, N.; Abo El ELa, G.; Bayoumy, A.; Ashraf, M. Low-cost Smart wheelchair to support paraplegic patients. Int. J. Ind. Sustain. Dev. 2022, 3, 1–9.

- Pacini, F.; Dini, P.; Fanucci, L. Design of an Assisted Driving System for Obstacle Avoidance Based on Reinforcement Learning Applied to Electrified Wheelchairs. Electronics 2024, 13, 1507.

- Labbé, M.; Michaud, F. RTAB-Map as an open-source lidar and visual simultaneous localization and mapping library for large-scale and long-term online operation. J. Field Robot. 2018, 36, 416–446.

- Horaud, R.; Hansard, M.; Evangelidis, G.; Ménier, C. An overview of depth cameras and range scanners based on time-of-flight technologies. Mach. Vis. Appl. 2016, 27, 1005–1020.

- Hansard, M.; Lee, S.; Choi, O.; Horaud, R. Characterization of Time-of-Flight Data. In Time-of-Flight Cameras; Springer: London, UK, 2012; pp. 1–28.

- Romero-Godoy, D.; Sánchez-Rodríguez, D.; Alonso-González, I.; Delgado-Rajó, F. A low cost collision avoidance system based on a ToF camera for SLAM approaches. Rev. Tecnol. Marcha 2022, 35, 137–144.

- Naveenkumar, G.; Suriyaprakash, M.V.; Prem Anand, T.P. Autonomous Drone Using Time-of-Flight Sensor for Collision Avoidance. In Intelligent Communication Technologies and Virtual Mobile Networks; Springer Nature: Singapore, 2023; pp. 57–73.

- Arditti, S.; Habert, F.; Saracbasi, O.O.; Walker, G.; Carlson, T. Tackling the Duality of Obstacles and Targets in Shared Control Systems: A Smart Wheelchair Table-Docking Example. In Proceedings of the 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Honolulu, HI, USA, 1–4 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 4393–4398.

- Mihailidis, A.; Elinas, P.; Boger, J.; Hoey, J. An Intelligent Powered Wheelchair to Enable Mobility of Cognitively Impaired Older Adults: An Anticollision System. IEEE Trans. Neural Syst. Rehabil. Eng. 2007, 15, 136–143.

- YDLIDAR. YDLIDAR G4 360° Laser Scanner. 2024. Available online: https://www.ydlidar.com/products/view/27.html (accessed on 24 February 2025).

- Hackaday. XLidar Is a Merry-Go-Round of Time-of-Flight Sensors. 2018. Available online: https://hackaday.com/2018/06/29/xlidar-is-a-merry-go-round-of-time-of-flight-sensors/ (accessed on 24 February 2025).

- Broadcom Inc. Time-of-Flight Sensor Module for Distance and Motion Measurement; Datasheet for AFBR-S50MV85I Sensor; Broadcom Inc.: San Jose, CA, USA, 2022.

- Szaj, W.; Pieniazek, J. Vehicle localization using laser scanner. In Proceedings of the 2020 IEEE 7th International Workshop on Metrology for AeroSpace (MetroAeroSpace), Pisa, Italy, 22–24 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 588–593.

- Ikeda, T.; Araie, T.; Kakimoto, A.; Takahashi, K. Development of Compact and Lightweight Stand-up Powered Wheelchair—Fall Risk Analysis When at Rest and Decelerating. Int. J. Model. Optim. 2019, 9, 329–333.

- Pieniazek, J.; Szaj, W. Augmented wheelchair control for collision avoidance. Mechatronics 2023, 96, 103082.

| Sensing Technology | Strengths | Weaknesses | Source |
|---|---|---|---|
| Ultrasonic sensor | Reduced influence of the reflecting surface; unaffected by dust and other optical obstructions; suitable for environments with variable lighting conditions | Low resolution; decreased accuracy at long ranges; affected by temperature changes; blind to objects that are extremely close | [6,7,8,9] |
| LiDAR | High precision; comprehensive 3D point cloud generation; long-range coverage; real-time data generation; suitable for dark environments; unaffected by temperature changes; unaffected by acoustic interference | High cost; data analysis requires high computing power; not energy efficient; direct laser exposure may damage the eye; sensitive to environmental factors such as reflections and dust | [5,10,11,12,13,14,15,16] |
| Cameras | Cost-effectiveness; real-time visual feedback | Limited effectiveness in low-light conditions; difficulty in accurately detecting transparent or reflective surfaces; vulnerability to occlusions; dependence on computational resources for image processing | [17,18,19] |
| Vision sensors | Integrated image processing; real-time operation; compact design; high detection precision | High cost; limited field of view; potential issues with transparent or reflective surfaces; lighting dependency; possible processing delays | [8,19] |

| Parameter | Value |
|---|---|
| Single supply voltage | 5 V |
| Typical current consumption | 33 mA |
| Integrated laser light source | 850 nm |
| Typical optical peak output power | 40 mW |
| Typical optical average output power | <0.6 mW |
| Field of view per pixel | 1.55° × 1.55° |
| Pixel size | 0.15 mm |
| Pixel shape | Hexagonal |
| Number of pixels | 32 |
| Field of view, typical | 12.4° × 5.4° |
| Transmitter beam | 13.0° × 6.0° |
| Typical distance range | Up to 5 m |
| Measurement rate | Up to 1 kHz (32 pixels) |
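
The range and timing figures in the sensor table are tied together by the basic pulsed time-of-flight relation d = c·Δt/2, where Δt is the round-trip time of the light. This is a minimal sketch of that textbook relation only; the table does not state how the sensor measures the delay internally. Reading "1 kHz (32 pixels)" as 1000 frames of 32 pixels per second is an assumption.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def round_trip_time_s(distance_m: float) -> float:
    """Time for light to reach a target and return: t = 2 d / c."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


def distance_m(round_trip_s: float) -> float:
    """Inverse relation: d = c t / 2."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# Values from the sensor table; the frame interpretation of the rate is an assumption.
PIXELS = 32
FRAME_RATE_HZ = 1000
SUPPLY_V = 5.0
CURRENT_A = 0.033

if __name__ == "__main__":
    print(f"round trip at 5 m: {round_trip_time_s(5.0) * 1e9:.2f} ns")
    print(f"1 ns of timing corresponds to {distance_m(1e-9) * 100:.1f} cm of range")
    print(f"distance samples per second: {PIXELS * FRAME_RATE_HZ}")
    print(f"electrical power: {SUPPLY_V * CURRENT_A * 1000:.0f} mW")
```

The round trip at the 5 m maximum range lasts only about 33 ns, and each nanosecond of timing error corresponds to roughly 15 cm of range. This illustrates why ToF sensors rely on dedicated timing hardware rather than general-purpose clocks.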

| Distance d [m] | Horizontal Coverage, 5.4° FOV [m] | Vertical Coverage, 12.4° FOV [m] | Scanning Area [m²] |
|---|---|---|---|
| 1 | 0.09 | 0.22 | 0.02 |
| 2 | 0.19 | 0.43 | 0.08 |
| 3 | 0.28 | 0.65 | 0.18 |
| 4 | 0.38 | 0.87 | 0.33 |
| 5 | 0.47 | 1.09 | 0.51 |
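
The coverage values follow from simple geometry: a field of view θ covers a linear extent of 2·d·tan(θ/2) at distance d. This is a minimal sketch that recomputes the table, assuming the 12.4° axis is vertical and the 5.4° axis is horizontal, and approximating the scanning area as a rectangle (width × height) that ignores the hexagonal pixel layout.

```python
import math

# Assumed mapping: the 12.4° axis is vertical and the 5.4° axis horizontal.
HORIZONTAL_FOV_DEG = 5.4
VERTICAL_FOV_DEG = 12.4


def coverage_m(distance_m: float, fov_deg: float) -> float:
    """Linear extent covered by a field of view at a given distance."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def scanning_area_m2(distance_m: float) -> float:
    """Rectangular approximation of the footprint (hexagonal pixels ignored)."""
    return coverage_m(distance_m, HORIZONTAL_FOV_DEG) * coverage_m(distance_m, VERTICAL_FOV_DEG)


if __name__ == "__main__":
    for d in range(1, 6):
        print(f"{d} m: {coverage_m(d, HORIZONTAL_FOV_DEG):.2f} m x "
              f"{coverage_m(d, VERTICAL_FOV_DEG):.2f} m = {scanning_area_m2(d):.2f} m^2")
```

Printed to two decimals, these values agree with the table. Because both extents grow linearly with distance, the area grows quadratically, roughly 25 times larger at 5 m than at 1 m.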

| Surface | Mean Value [m] | SD [m] | Max Deviation [m] | Amplitude [−] | Time [μs] |
|---|---|---|---|---|---|
| White | 0.486 | 0.004 | 0.011 | 255.6 | 4592 |
| | 0.960 | 0.006 | 0.023 | 235.8 | 4614 |
| | 1.410 | 0.009 | 0.023 | 191.1 | 4614 |
| | 1.935 | 0.011 | 0.035 | 180.1 | 2920 |
| | 2.445 | 0.014 | 0.041 | 267.2 | 1828 |
| | 2.980 | 0.020 | 0.055 | 190.8 | 1280 |
| | 3.440 | 0.020 | 0.063 | 178.8 | 1280 |
| | 3.949 | 0.029 | 0.069 | 197.6 | 1280 |
| Light brown | 0.521 | 0.003 | 0.008 | 423.5 | 4616 |
| | 1.418 | 0.015 | 0.038 | 120.0 | 1544 |
| | 1.945 | 0.013 | 0.041 | 217.5 | 1280 |
| | 2.411 | 0.019 | 0.047 | 247.4 | 1280 |
| | 3.009 | 0.026 | 0.079 | 197.4 | 1281 |
| | 3.362 | 0.044 | 0.128 | 108.5 | 1285 |
| | 3.892 | 0.053 | 0.143 | 78.8 | 1280 |
| Black | 0.560 | 0.018 | 0.034 | 137.8 | 1236 |
| | 0.931 | 0.066 | 0.174 | 39.6 | 1235 |
| | 1.578 | 0.122 | 0.580 | 29.1 | 1235 |
| | 1.900 | 0.140 | 0.367 | 16.8 | 1281 |
| | 2.441 | 0.173 | 0.476 | 14.8 | 1281 |
| | 3.100 | - | - | - | - |
| | 4.040 | - | - | - | - |
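
Because the table reports the SD alongside the mean range, one compact way to read it is the SD relative to the measured range. This is a minimal sketch of that reading. The 2% acceptance threshold and the labels are hypothetical, and since the table gives no separate reference distances, the sketch assesses repeatability only, not accuracy against ground truth.

```python
from typing import List, Optional, Tuple

# (surface, mean [m], SD [m]) transcribed from the table; None = no valid measurement.
ROWS: List[Tuple[str, float, Optional[float]]] = [
    ("White", 0.486, 0.004), ("White", 0.960, 0.006), ("White", 1.410, 0.009),
    ("White", 1.935, 0.011), ("White", 2.445, 0.014), ("White", 2.980, 0.020),
    ("White", 3.440, 0.020), ("White", 3.949, 0.029),
    ("Light brown", 0.521, 0.003), ("Light brown", 1.418, 0.015),
    ("Light brown", 1.945, 0.013), ("Light brown", 2.411, 0.019),
    ("Light brown", 3.009, 0.026), ("Light brown", 3.362, 0.044),
    ("Light brown", 3.892, 0.053),
    ("Black", 0.560, 0.018), ("Black", 0.931, 0.066), ("Black", 1.578, 0.122),
    ("Black", 1.900, 0.140), ("Black", 2.441, 0.173),
    ("Black", 3.100, None), ("Black", 4.040, None),
]

RELATIVE_SD_LIMIT = 0.02  # hypothetical acceptance threshold: SD at most 2 % of range


def classify(rows):
    """Label each row 'ok', 'noisy', or 'no return' by its relative SD."""
    labels = []
    for surface, mean_m, sd_m in rows:
        if sd_m is None:
            labels.append((surface, mean_m, None, "no return"))
        else:
            rel = sd_m / mean_m
            labels.append((surface, mean_m, rel, "ok" if rel <= RELATIVE_SD_LIMIT else "noisy"))
    return labels


if __name__ == "__main__":
    for surface, mean_m, rel, label in classify(ROWS):
        rel_txt = "  n/a" if rel is None else f"{rel * 100:4.1f} %"
        print(f"{surface:<12} {mean_m:5.3f} m  {rel_txt}  {label}")
```

Under this threshold, every white and light-brown row stays below 2%, whereas every black row with a valid return exceeds it. This matches the reduced performance on low-reflectivity surfaces noted in the limitations.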

| Feature | Scanner with RPLIDAR A1 | Scanner with ToF Sensor |
|---|---|---|
| Scanner size [mm] | 125 × 80 × 140 | 40 × 50 × 58 |
| Weight | 253 g (scanner) + 180 g (motor) + 90 g (balancing) | 14 g (motor); 56 g total |
| Approximate power consumption | 300 mA (scanner) + 600 mA (motor), 4.5 W; maximum power consumption up to 14 W | 140 mA (0.7 W) |
| 360° scanning time | 100–200 ms per rotation (one scanning plane); 800–1600 ms for eight planes | 214 ms and 358 ms (for eight planes) |
| Measurement range | 6 m (depending on the scanner used) | 5 m |
| Number of scanners on the platform | 3 | 2 |
| Control system | myRIO | STM32F401RCT6 |
| Power supply method | Requires an additional power source | USB-powered; no additional power source required |
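
The power and scan-time rows can be cross-checked with P = V·I and simple ratios. This is a minimal sketch, assuming that both current figures are drawn at 5 V. That assumption is consistent with USB power and reproduces both the 0.7 W and the 4.5 W given in the table. The speed-up range conservatively pairs the fastest RPLIDAR case with the slowest ToF case, and vice versa.

```python
USB_VOLTAGE_V = 5.0  # assumption: both current figures are drawn at 5 V


def power_w(current_a: float, voltage_v: float = USB_VOLTAGE_V) -> float:
    """Electrical power P = V * I."""
    return current_a * voltage_v


# Figures from the comparison table.
tof_power = power_w(0.140)              # ToF scanner, 140 mA
rplidar_power = power_w(0.300 + 0.600)  # RPLIDAR scanner + motor, 300 mA + 600 mA

PLANES = 8
rplidar_scan_ms = (PLANES * 100, PLANES * 200)  # 100-200 ms per plane
tof_scan_ms = (214, 358)                         # reported for eight planes

# Conservative and optimistic speed-up of an eight-plane scan.
speedup_min = rplidar_scan_ms[0] / tof_scan_ms[1]
speedup_max = rplidar_scan_ms[1] / tof_scan_ms[0]

if __name__ == "__main__":
    print(f"power: ToF {tof_power:.2f} W vs RPLIDAR {rplidar_power:.2f} W "
          f"({rplidar_power / tof_power:.1f}x)")
    print(f"eight-plane scan: {rplidar_scan_ms} ms vs {tof_scan_ms} ms, "
          f"speed-up {speedup_min:.1f}x to {speedup_max:.1f}x")
```

Under these assumptions, the ToF scanner draws about one sixth of the typical RPLIDAR power and completes an eight-plane scan roughly 2 to 7 times faster.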

| Feature | Developed Solution | YDLIDAR T-mini Plus | xLIDAR (DIY) |
|---|---|---|---|
| Detection range | Up to 4 m, optimal for wheelchairs | Up to 12 m, more than needed | Up to 1 m, insufficient for wheelchairs |
| Vertical FOV | High vertical FOV of 12.4°; detects obstacles at various heights, which is crucial for wheelchair users | Very small vertical FOV of 1.5°; scans in a horizontal plane and does not detect objects below or above the scanning plane | Very small vertical FOV; sensor designed for 1D measurement |
| Communication interface | Powered and controlled via USB | Standard UART communication interface | I2C interface plus additional signal connections |
| Power usage | 140 mA | 450 mA | 160 mA (estimated) |
| Cost | Designed with cost-efficiency in mind, making it an attractive solution for wheelchair users | Cost varies with the model and supplier; may be higher than DIY solutions but offers professional support and warranty | Budget-friendly DIY solution; however, additional costs may arise from specialized tools or components and from the time spent on assembly and calibration |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Szaj, W.; Wanic, M.; Wojnarowska, W.; Miechowicz, S. Mechatronic Anticollision System for Electric Wheelchairs Based on a Time-of-Flight Sensor. Electronics 2025, 14, 2307. https://doi.org/10.3390/electronics14112307

Szaj W, Wanic M, Wojnarowska W, Miechowicz S. Mechatronic Anticollision System for Electric Wheelchairs Based on a Time-of-Flight Sensor. Electronics. 2025; 14(11):2307. https://doi.org/10.3390/electronics14112307

Chicago/Turabian Style: Szaj, Wiesław, Michał Wanic, Wiktoria Wojnarowska, and Sławomir Miechowicz. 2025. "Mechatronic Anticollision System for Electric Wheelchairs Based on a Time-of-Flight Sensor" Electronics 14, no. 11: 2307. https://doi.org/10.3390/electronics14112307

APA Style: Szaj, W., Wanic, M., Wojnarowska, W., & Miechowicz, S. (2025). Mechatronic Anticollision System for Electric Wheelchairs Based on a Time-of-Flight Sensor. Electronics, 14(11), 2307. https://doi.org/10.3390/electronics14112307