Abstract

Extensive research has been carried out on reinforcement learning methods. The core idea of reinforcement learning is to learn methods by means of trial and error, and it has been successfully applied to robotics, autonomous driving, gaming, healthcare, resource management, and other fields. However, when building reinforcement learning solutions at the edge, not only are there the challenges of data-hungry and insufficient computational resources but also there is the difficulty of a single reinforcement learning method to meet the requirements of the model in terms of efficiency, generalization, robustness, and so on. These solutions rely on expert knowledge for the design of edge-side integrated reinforcement learning methods, and they lack high-level system architecture design to support their wider generalization and application. Therefore, in this paper, instead of surveying reinforcement learning systems, we survey the most commonly used options for each part of the architecture from the point of view of integrated application. We present the characteristics of traditional reinforcement learning in several aspects and design a corresponding integration framework based on them. In this process, we show a complete primer on the design of reinforcement learning architectures while also demonstrating the flexibility of the various parts of the architecture to be adapted to the characteristics of different edge tasks. Overall, reinforcement learning has become an important tool in intelligent decision making, but it still faces many challenges in the practical application in edge computing. The aim of this paper is to provide researchers and practitioners with a new, integrated perspective to better understand and apply reinforcement learning in edge decision-making tasks.

1. Introduction

Reinforcement learning [1,2,3] is a learning method inspired by behaviorist psychology that attempts to maintain a balance between exploration and exploitation, focusing on online learning. The point of difference with other machine learning methods is that in reinforcement learning, no data need to be given in advance; the learning information is obtained by receiving rewards or feedback from the environment for the actions, which enables the updating of the model parameters. As a class of framework methods, it has a certain degree of versatility; it can be widely integrated in such areas as cybernetics [4], game theory [5], information theory [6], operations research [7], simulation optimization [8], multiagent systems [9], collective intelligence [10], statistics [11], and other fields of results; and it is very suitable for complex intelligent decision-making problems. Compared to centralized intelligent decision making, the approach of syncing the computation and decision-making processes in reinforcement learning at the edge has additional advantages. On the one hand, intelligent decision making at the edge can be closer to the data source and end users, which reduces the delay of data transmission, lowers the network cost of information transmission, and improves the guarantee of privacy and data security. On the other hand, because the edge device can generate decisions independently, it avoids the risk of system collapse caused by the failure of the central server or other nodes; improves the reliability and robustness of the system; and is able to partially take offline decision-making tasks. In recent years, more and more researchers have moved their attention to the problem of intelligent decision making at the edge, and it has been successful in many fields, such as the Internet of Things [12], intelligent transportation [13], and industry [14].

However, in the current practice of intelligent decision making at the edge based on reinforcement learning, it is usually just a simple choice to use the existing reinforcement learning methods according to their respective decision characteristics; for example, some algorithms are suitable for solving continuous space or continuous action problems [15,16], some other algorithms have a very good training effect for few-shot learning problems [17,18], and so on. However, intelligent decision making at the edge often requires consideration of several aspects of characterization, limited by its own configuration conditions and task types. These unique characteristics as well as the diversity and complexity of the requirements lead to a higher degree of difficulty in solving [19] and limit the generalizability of solutions across these problems. Compared with intelligent decision problems, such as the game of gaming in data centers, it is difficult to meet the needs of the actual application at the edge by using a single type of reinforcement learning method for strategy training in intelligent decision problems, which reveals the problems of nonconvergence of the training and the inefficiency and low accuracy of the training model [20,21]. In some cases, the demand for certain specific types of decision-making tasks can be satisfied, but the shortcomings of other elements still restrict its application in actual decision making at the edge. For example, can the limited storage capacity and computing power at the edge support the collection and utilization of training data required for reinforcement learning [22]? In terms of efficiency, the limited computational resources of edge devices may lead to a decrease in training efficiency, and reinforcement learning algorithms at the edge are more sensitive to real-time requirements and need to make decisions in real or near-real time. Therefore, algorithms must operate efficiently under limited resources to ensure responsiveness and real-time performance [23]. In terms of generalization, in edge environments, due to the limited data volume, algorithms may struggle to generalize sufficiently and may exhibit poorer performance in new environments and tasks [24]. Additionally, factors such as data security and privacy may also need to be considered when running reinforcement learning algorithms at the edge [25].

The solution to the above problems cannot simply rely on the optimization and upgrading of individual intelligence. Therefore, this paper tries to find other paths that can solve these challenges. Considering that since a single reinforcement learning method at the edge always has shortcomings in one way or another, is it possible to better cope with these problems if multiple types of reinforcement learning methods are integrated together? As a matter of fact, the integration of multiple methods in the same domain has been explored in other fields of AI, and some classical integration frameworks have been formed, such as the integration of random forests into multiple decision trees [26], bootstrapping, bagging, boosting, and other machine learning combination algorithms [27]. However, in the field of reinforcement learning, there is no systematic theory to support this. Most research on intelligent decisions based on reinforcement learning still focuses on a single technique or family of techniques. Although these techniques have a common form of knowledge representation, they can only be applied to solve individual isolated problems, and it is difficult to meet the realistic requirements and challenges of adaptive adaptation generalization for complex scenarios at the edge. In addition, the integration of these methods in artificial intelligence heavily relies on analyzing expert knowledge of the target task. As a result, these methods often lack sufficient generalization and flexibility, making them inapplicable to other tasks. Therefore, this paper aims to provide a general integration design framework and solution for edge intelligence decision making based on reinforcement learning.

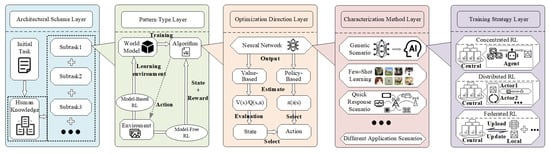

To this end, this paper first analyzes the current problems and challenges in the direct application of reinforcement learning to intelligent decision in edge computing. Subsequently, based on the definition and analysis of the design ideas of the integration framework and the collation of the focuses of current researchers in related studies, it is observed that current reinforcement learning-based strategy training methods primarily concentrate on five key aspects: architectural methods, pattern types, optimization directions, characterization methods, and training strategies. Meanwhile, by analyzing the inherent nested relationships among these methods, the decision-making characteristics of different reinforcement learning approaches are summarized. Finally, by integrating the technical characteristics and decision-making features of reinforcement learning, as well as the general requirements for intelligent decision, a corresponding integration framework for reinforcement learning is designed. The key issues to be solved in each link of the integration framework are analyzed to better meet the requirements of key capabilities. We aim to provide a clear and practical guide through the format of a survey, assisting beginners and those new to engineering design in better understanding and utilizing reinforcement learning methods. Our goal is to facilitate the integration and development of reinforcement learning techniques in the field of engineering, fostering a deeper connection between engineering practices and reinforcement learning technology.

It is worth noting that this paper is not intended to comprehensively analyze all reinforcement learning methods but rather to organize the vast research outcomes and system architectures of reinforcement learning from an engineering perspective, understanding the choices of technical routes in different modules and their impacts. This aims to assist relevant researchers and practitioners in realizing the trade-offs across various aspects and carefully selecting the most practical options. In this paper, we design a general integrated framework consisting of five main modules and examine the most representative algorithms in each module. This framework is expected to provide more direct assistance for the design of solutions in edge intelligent environments, thereby guiding researchers in developing reinforcement learning solutions tailored to specific research problems. The contributions of this paper are summarized as follows:

- This paper presents a generic integrated framework for designing edge reinforcement learning methods. It decomposes the solution design for intelligent decision-making problems into five steps, enhancing the robustness of the framework in different edge environments by integrating different reinforcement learning methods in each layer. Additionally, it can provide a general guidance framework for researchers in related fields through process-oriented solution design.

- This paper surveys the existing research studies related to the five layers of the integration framework, respectively, including architectural schemes, pattern types, optimization directions, characterization methods, and training strategies. The current mainstream research directions and representative achievements of each layer are summarized, and the emphases of different mainstream methods are analyzed. This provides a fundamental technical solution for various layers of integration frameworks. At the same time, it also provides a theoretical foundation for designing and optimizing edge intelligence decision making.

- This paper demonstrates the working principle of the proposed framework through a design exercise as a case study. In the design process, this paper provides a detailed introduction to the workflow of the integration framework, including how to properly map the decision problem requirements to reinforcement learning components and how to select suitable methods for different components. Furthermore, we further illustrate the practicality and flexibility of the integration framework by using AlphaGo as a real-world example.

- The architecture proposed in this paper integrates existing reinforcement learning systems and frameworks at a higher level, going beyond the limitations of existing surveys that are restricted to a particular area of research in reinforcement learning. Instead, it adopts an integrated perspective, focusing on the deployment and design of reinforcement learning methods in real-world applications. This paper aims to create a more accessible tool for researchers and practitioners to reference and apply. It also provides them with a new perspective and approach for engaging in related research and work.

After analyzing the basic requirements of reinforcement learning framework design from an integrated perspective and giving a high-level framework design idea in Section 2, we introduce the five key parts of the framework design species, architectural scheme, pattern type, optimization direction, characterization method, and training strategy in Section 3, Section 4, Section 5, Section 6 and Section 7, respectively, to provide a systematic overview of the reinforcement learning system. In order to demonstrate the applicability of the integrated framework, we selected representative research achievements in various typical application scenarios of reinforcement learning in Section 8, and we describe their solutions using the integrated framework. Additionally, this section provides an example of generating a reinforcement learning solution to showcase the flexibility and workflow of our framework. Subsequently, in Section 9, several noteworthy issues are discussed. Finally, in Section 10, we summarize the work presented in this paper. The terminology and symbols used in this paper are summarized in Table 1.

Table 1.

Explanation of formula terms.

2. Framework Design from Integrated Perspective

Most of the mature edge intelligent decision-making solutions focus on designing, optimizing, and adapting to a single problem (e.g., automated car driving [28] and some board games [29]); rely heavily on expert knowledge; and are still limited to a single technology or family of technologies. Despite the common knowledge representation of these technologies, the solution-building process still lacks systematic design guidance and can only analyze each isolated problem separately, making it difficult to provide generalized solutions for edge intelligent decision making. It is increasingly recognized that the effective implementation of intelligent decision making requires both the fusion of multiple forms of experience, rules, and knowledge data and the ability to combine multiple technological means of learning, planning, and reasoning. These combined capabilities are necessary for interactive systems (cyber or physical) that need to operate in uncertain environments and communicate with the subjects who need to make decisions [30]. Therefore, this section designs a framework for reinforcement learning in edge computing through an integrated perspective by characterizing reinforcement learning methods. The design ideas and characterization will be explored in detail in the following sections.

2.1. Related Work

Reinforcement learning, as a branch highly regarded in the field of artificial intelligence, has attracted considerable attention and investment from researchers in recent years. Surveys dedicated to reinforcement learning have shown a thriving trend, encompassing a comprehensive range of topics from fundamental theories to practical applications. In terms of fundamental theories, researchers have conducted extensive studies based on various types of reinforcement learning methods. To address the challenges of difficulty in data acquisition and insufficient training efficiency, Narvekar et al. designed a curriculum learning framework for reinforcement learning [31]. Gronauer et al. focused on investigating training schemes for multiple agents in reinforcement learning, considering behavioral patterns in cooperative, competitive, and mixed scenarios [32]. Pateria et al. discussed the autonomous task decomposition in reinforcement learning, outlining challenges in hierarchical methods, policy generation, and other aspects from a hierarchical reinforcement learning perspective [33]. Samasmi et al. discussed potential research directions in deep reinforcement learning from a distributed perspective [34]. Ramirez et al. started from the principle of reinforcement learning and discussed methods and challenges in utilizing expert knowledge to enhance the performance of model-free reinforcement learning [35]. In contrast, Moerland et al. provided a comprehensive overview and introduction to model-based reinforcement learning methods [36]. These studies focused on how to adjust reinforcement learning methods to generate more powerful models. In practical applications, Moerland et al. focused on the emotional aspects of reinforcement learning in robotics, investigating the emotional theories and backgrounds of reinforcement learning agents [37]. Chen et al. approached the topic from the perspective of daily life, exploring the application of reinforcement learning methods in recommendation systems and discussing the key issues and challenges currently faced [38]. Meanwhile, Luong et al. emphasized addressing communication issues, surveying the current state of research in dynamic network access, task offloading, and related areas [39]. Haydari et al. summarized the work of reinforcement learning methods in the field of traffic control [40]. Elallid et al. investigated the current state of deep reinforcement learning in autonomous driving technology [41]. Similar types of work have also emerged in healthcare [42], gaming [43], the Internet of Things (IoT) [44], and security [45]. These studies focused on conducting a systematic investigation of the application of reinforcement learning methods in different domains. However, these surveys concentrated on the microlevel of the reinforcement learning field, specifically discussing the strengths and weaknesses of different reinforcement learning methods within specific domains or task scenarios.

At a macrolevel, some researchers have made achievements, such as Stapelberg et al., who discussed existing benchmark tasks in reinforcement learning to provide an overview for beginners or researchers with different task requirements [46]. Aslanides et al. attempted to organize existing results of general reinforcement learning methods [47]. Arulkumaran et al. reviewed deep reinforcement learning methods based on value and policy and highlighted the advantages of deep neural networks in the reinforcement learning process [48]. Sigaud et al. conducted an extensive survey on policy search problems under continuous actions, analyzing the main factors limiting sample efficiency in reinforcement learning [49]. Recht et al. introduced the representation, terminology, and typical experimental cases in reinforcement learning, attempting to make contributions to generality [16]. The aforementioned works either focus on specific types of problems or provide simple introductions from a macroperspective, lacking a systematic analysis of the inherent connections between different key techniques. Such a situation can only provide beginners or relevant researchers with an introductory perspective, but it still lacks a comprehensive understanding of the relationships and integration methods among different technical systems, and it is not able to provide systematic and effective guidance for rapidly constructing reinforcement learning solutions in real-world tasks. For a newly emerging reinforcement learning task, researchers still need to explore and generate a solution from numerous methods, lacking a structured framework to provide appropriate solution approaches. Therefore, this paper aims to provide researchers with more in-depth and comprehensive references and guidance to help them better understand the complexity of the reinforcement learning field and conduct further research.

2.2. Framework Design Ideas

For researchers, although there are currently methods to develop the capabilities of reinforcement learning in individual tasks, there is still a lack of understanding and experience in how to construct intelligent systems that integrate these functionalities, relying on expert knowledge for discussions on research problems [50,51]. A key open question is what the best overall organizational method for multiagent systems is: homogeneous nonmodular systems [52]; fixed, static modular systems [53]; dynamically reconfigurable modular systems over time [54]; or other types of systems. In addition to the organizational methods, there are challenges in the transformation and coexistence of alternative knowledge representation approaches, such as symbolic, statistical, neural, and distributed representations [55], because the different representation techniques vary in their expressiveness, computational efficiency, and interpretability. Therefore, in practical applications, it is necessary to select the appropriate knowledge representation method according to the specific problem and scenario. Furthermore, the optimal way to design the workflow for integrated systems is still subject to discussion, i.e., how AI systems manage the sequencing of tasks and parallel processes between components. Both top-down, goal-driven and bottom-up, data-driven control strategies may be suitable for different problem scenarios. Although there are expected interdependencies among the components, rich information exchange and coordination are still necessary between them.

To address these issues, we specify the basic requirements for framework design under the integration perspective presented in this section. The purpose of this paper on framework design under the integration perspective is to provide a formal language to specify the organization of an integrated system, similar to the early work performed when building a computer system. The core of the research in this section is to provide a framework for analyzing, comparing, classifying, and synthesizing integration methods that support abstraction and formalization and that can provide a generic foundation for subsequent formal and empirical analyses.

In terms of the design philosophy of a framework, an integrated framework should minimize complexity while meeting the corresponding design requirements. This requires considering how to correctly map the requirements to the components of reinforcement learning during the design process, as well as selecting an architecture that can accommodate these components. Modularizing the method modules within the integrated framework is a feasible solution [56]. By assembling various algorithms as modules into the framework, the efficiency and simplicity of the integrated reinforcement learning framework can be greatly improved. Furthermore, there exist integration relationships among some reinforcement learning methods [57], where certain algorithms can serve as submodules within other algorithms [58], providing a solid foundation for implementing the integrated framework. In summary, an integrated framework for reinforcement learning involves organically integrating multiple modules (different types of intelligent methods) into a versatile composite intelligent system. Different integration modules can be used for different task requirements. In the context of this paper’s application domain, the framework design must fully consider the key capability requirements for achieving intelligent decision making. This section aims to provide specific methods for optimizing the crucial steps of an innovative integration framework in order to better adapt to the demands of intelligent decision making.

2.3. Integrated Characteristic Analysis

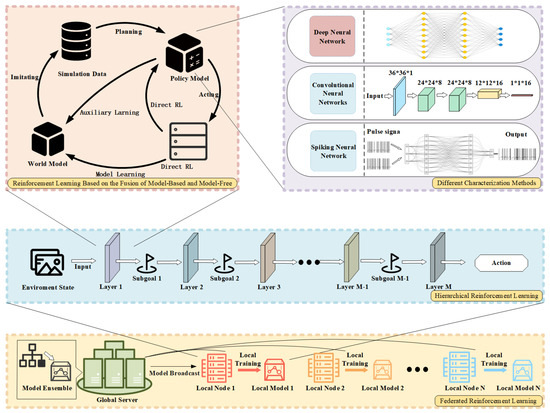

To design a more reasonable integration method framework around reinforcement learning, it is important to understand the characteristics of reinforcement learning and interpret the underlying logic and integration relationships among them. In order to make the various parts of the framework self-consistent and integrated, our research has extensively reviewed the relevant literature and categorized these studies based on their different research focuses on reinforcement learning methods. From the application perspective of reinforcement learning, as Yang et al. [59] first divided the research scope into single-agent scenarios and multiagent scenarios in the exploration of reinforcement learning strategies; the integration framework also needs to set the research scope of the problem reasonably. After clarifying the research scope, the next issue to be addressed is data acquisition, which is the foundation of all machine learning methods. The most significant difference between reinforcement learning and other machine learning methods is that its training data need to be continuously generated through agent–environment interactions, as described in [35,36]. Then, these data are used to upgrade the current policy model. Ref. [60] briefly introduces the work in this field and tries to integrate several main methods. Additionally, choosing suitable representation methods is an important part of transforming these theories into implementations. Ref. [61] analyzes the characteristics of different types of artificial neural networks, while [62] investigates research on spiking neural networks that are more inclusive of time series. Finally, after determining the plans for all the above aspects, the integration framework needs to select an appropriate training strategy for reinforcement learning according to task requirements. This approach is similar to [63,64], which investigate different research areas in training strategies in the field of machine learning. Specifically, this paper categorizes the research from five aspects: architecture design, model types, optimization direction, representation methods, and training strategies:

- Architectural scheme: The architectural scheme aims to achieve the division of labor and collaboration between different methods and agents by decomposing and dividing complex tasks to accomplish a complex overall goal. One direction is to deconstruct the problem based on the scale of the task, e.g., a complex total problem is divided into several subproblems, and the optimal solution of each subproblem is combined to make the optimal solution of the total problem (realizing the idea of divide and conquer, which can significantly reduce the complexity of the problem) [33]. Another direction is to deconstruct the task based on the agents, e.g., to use multiple agents to cooperate to complete a task at the same time (multirobotic arms to assemble a car task, etc.). Common reinforcement learning methods that have been researched around architectural schemes include meta-reinforcement learning, hierarchical reinforcement learning, multi-intelligent reinforcement learning, etc. [32].

- Pattern type: Pattern type is used to describe the policy generation pattern adopted by reinforcement learning for a task environment. Different policy generation patterns have different complexities and learning efficiencies. The pattern type needs to be selected based on the complexity of the task and the cost of trial and error. The difference lies in the ability to construct a world model that resembles the real world based on the environment and state space for the task simulation beforehand. Common reinforcement learning methods that center on pattern types include model-based reinforcement learning algorithms, model-free reinforcement learning algorithms, and reinforcement learning algorithms that integrate model and model-free reinforcement learning algorithms.

- Optimization direction: The optimization direction starts from different concepts, condenses the formal features, and designs a more efficient strategy gradient updating method to accomplish the task. Policy gradient updating methods from different optimization directions have distinct characteristics, strengths, and weaknesses. Additionally, there are often multiple options for policy gradient updating methods within the same optimization direction, depending on the focus. Common optimization directions for reinforcement learning include value-based methods, policy-based methods, and fusion-based methods.

- Characterization method: The characterization method involves selecting encoders, policy mechanisms, neural networks, and hardware architectures with distinct features to develop targeted reinforcement learning algorithms tailored to specific application scenarios. Common reinforcement learning characterization methods include brain-inspired pulse neural networks, Bayesian analysis, deep neural networks, and so on.

- Training strategy: The training strategy is based on the perspective of the actual task, considering the characteristics of the edge and choosing a more reasonable and efficient training strategy to complete the training. Different training strategies have different applicable scenarios and characteristics and can cope with different types of challenges; for example, federated reinforcement learning can better protect the privacy of training data. Common reinforcement learning training strategies include centralized reinforcement learning, distributed reinforcement learning, and federated reinforcement learning.

After categorizing reinforcement learning in these five aspects, it is not difficult to find that there is a significant integration relationship among these five research directions (see Figure 1 for details). Although no study has yet synthesized reinforcement learning from an integration perspective and proposed a unified integration system, past research on different directions is not entirely disparate. The integration relationship between reinforcement learning provides the basis for the design of our integration framework in this paper.

Figure 1.

Schematic diagram of integrated framework components.

The design of the integration framework should start with an architectural scheme to choose a suitable architecture to deconstruct and divide the complex task. Reinforcement learning methods centered on architecture are a direct match for this and can be used directly in the outermost architecture of the integration framework.

Second, the tasks of deconstruction can be viewed as a number of independent modules. For different modules, we can choose the pattern type to solve the problem according to the differences in the task environment. For example, for some environments, we need to build a world model first and then, based on the model, let the agent interact with it; or for environments that do not need to build a world model, we can let the agent learn directly in the environment through the model-free reinforcement learning method.

Then, after determining the pattern type, we choose the appropriate optimization direction for different algorithms to make the algorithms more robust and able to perform, such as optimizing the value function in the algorithm or optimizing the policy.

Then, at the bottom of the integration framework, facing different application scenarios, we can adopt different ways to deal with the input and output objects, i.e., selecting the characterization method to complete the input–output transformation.

Finally, after determining the characterization method, we choose the appropriate training strategy to fit the task at hand more closely and to improve the training efficiency while reducing the expenditure. For example, federated reinforcement learning is chosen for tasks with high privacy requirements.

It is worth noting that the integrated framework designed in this article is precisely based on the organic integration of these five different research directions in reinforcement learning, where the components are nested and complement each other. The process of selecting components in the integrated framework is itself an analysis of practical application problems. We hope that through this process, researchers or practitioners can quickly understand the mainstream research methods and characteristics of each component, reducing the reliance on expert knowledge. Additionally, the framework includes task decomposition at a macrolevel to strategy updates at a microlevel, taking into account the limitations of software algorithms and hardware devices. It is able to cover various requirements in real-world applications.

3. Architecture-Scheme Layer

Reinforcement learning methods that have been studied in an architectural scheme include meta-reinforcement learning [65], hierarchical reinforcement learning [33], and multiagent reinforcement learning [66]. In the design of the reinforcement learning integration framework, in order to ensure the normal operation, the architectural scheme layer should be designed around these three methods. This classification stems from a generalization after sorting out the different types of problems targeted by current reinforcement learning models. The family of techniques represented by meta-learning focuses on the generalization aspect of reinforcement learning, enabling trained agents to adapt to new environments or tasks. The system represented by hierarchical reinforcement learning, on the other hand, aims to address the complexity of reinforcement learning problems, attempting to make reinforcement learning algorithms effective in more complex scenarios. Multiagent reinforcement learning, as the name suggests, tackles the collaboration or competition among multiple agents in a shared environment, including adversarial games and cooperative scenarios. When designing an integrated framework for reinforcement learning methods, it is crucial to select appropriate architectural design principles as the foundation for subsequent work to ensure the smooth operation of the integrated framework.

3.1. Meta-Reinforcement Learning

Another term for meta-learning, learning to learn is the idea of “learning how to learn”, which, unlike general reinforcement learning, emphasizes the ability to learn to learn, knowing that learning a new task relies on past knowledge as well as experience [67]. Unlike traditional reinforcement learning methods, which generally train and learn for specific tasks, meta-learning aims to learn many tasks and then use these experiences to learn new tasks quickly in the future [68].

Meta-reinforcement learning is a research area that applies meta-learning to reinforcement learning [69]. The central concept is to leverage the acquired prior knowledge from learning a large number of reinforcement learning tasks, with the hope that AI can learn faster and more effectively when faced with new reinforcement learning tasks and adapt to the new environment accordingly [70].

For instance, if a person has an understanding of the world, they can quickly learn to recognize objects in a picture without having to start from scratch the way a neural network does, or if they have already learned to ride a bicycle, they can quickly pick up riding an electric bicycle (analogical learning—learning new things through similarities and experiences). In contrast, current AI systems excel at mastering specific skills but struggle when asked to perform simple but slightly different tasks [71]. Meta-reinforcement learning addresses this issue by designing models that can acquire diverse knowledge and skills based on past experience with only a small number of training samples (or even zero samples for initial training) [72]. The fine-tuning process can be represented as

where represents the fine-tuned model parameters. When the initial model is applied to a new task , the model parameters are updated through gradient descent ∇. denotes the step size of the model update.

The core of meta-learning is that AI owns core values so as to realize fast learning, i.e., so that AIs (agents) can form a core value network after learning various tasks and can utilize the existing core value network to accelerate its learning speed when facing new tasks in the future. Meta-reinforcement learning belongs to a branch of meta-learning, a technique for learning inductive bias. It consists roughly of two phases: one is the training phase (metatraining), which learns knowledge through the past Markov process (MDP); the second is the adaptation phase (meta-adaptation), which involves how to quickly change the network to adapt to a new task (task). Meta-reinforcement learning mainly tries to solve the problems of deep reinforcement learning (DRL) [73], such as the DRL algorithm’s low sample utilization; final performance of the DRL model being oftentimes unsatisfactory; the overfitting to the environment; and the existence of model instability.

The advantage of meta-reinforcement learning is that it can quickly learn a new task by building a core guidance network (and training several similar tasks at the same time) so that the agent can learn to face a new task by keeping the guidance network unchanged and building a new action network. The disadvantage of meta-reinforcement learning, however, is that the algorithm does not allow the intelligence to learn how to specify action decisions on its own, i.e., meta-reinforcement learning is unable to accomplish the type of tasks that require the intelligence to make decisions.

3.2. Hierarchical Reinforcement Learning

Hierarchical reinforcement learning is a popular research area in the field of reinforcement learning that addresses the challenge of sparse rewards [74]. The main focus of this area is to design hierarchical structures that can effectively capture complex and abstracted decision processes in an agent. The direct application of traditional reinforcement learning methods to real-world problems with sparse rewards can result in the nonconvergence or divergence of the algorithm due to the increasing complexity of the action space and state space. This issue arises from the need for the agent to learn from limited and sparse feedback. Just as when humans encounter a complex problem, they often decompose it into a number of easy-to-solve subproblems, and then once divided into subproblems, they work step by step to solve the subproblems, the idea of hierarchical reinforcement learning is derived from this. Simply put, hierarchical reinforcement learning methods use a hierarchical architecture to solve sparse problems (dividing the complete task into several subtasks to reduce task complexity) by increasing intrinsic motivation (e.g., intrinsic reward) [75,76].

Currently, hierarchical reinforcement learning can be broadly categorized into two approaches. One is a goal-conditioned algorithm [77,78]; its main step is to use a certain number of subgoals to train the agent toward these subgoals, and after the final training, the agent can complete the set total goal. The difficulty in this way is how to select suitable subgoals to assist the algorithm in reaching the final goal. The other type is based on multilevel control, which abstracts different levels of control, with the upper level controlling the lower level (generally divided into two levels; the upper level is called the metacontroller and the lower level is called the controller) [79,80]. These abstraction layers may be called different terms in different articles, such as the common option, skill, macroaction, etc. The metacontroller at the upper level gives a higher-level option and then passes the option to the lower-level controller layer, which takes an action based on the received option, and so on until the termination condition is reached.

The specific algorithms for goal-based hierarchical reinforcement learning include HIRO (hierarchical reinforcement learning with off-policy correction) [81], HER (hindsight experience replay) [82], etc. The specific algorithms for hierarchical reinforcement learning based on multilevel control include option critic [83], A2OC (asynchronous advantage option critic) [84], etc.

Hierarchical reinforcement learning can solve the problem of poor performance in scenarios with sparse rewards encountered by ordinary reinforcement learning (through the idea of hierarchical strategies at the upper and lower levels), and the idea of layering can also significantly reduce the “dimensional disaster” problem during training. However, hierarchical reinforcement learning is currently in the research stage, and hierarchical abstraction still requires a human to set goals to achieve good results, which means it has not yet reached the level of “intelligence”.

3.3. Multiagent Reinforcement Learning

Multiagent reinforcement learning refers to a type of algorithm where multiple agents interact with the environment and other agents simultaneously in the same environment [85]. It will construct a multiagent system (MAS) composed of multiple interactive agents in the same environment [86]. This system is commonly used to solve problems that are difficult to solve for independent agents and single-layer systems. The agents can be implemented by functions, methods, processes, algorithms, etc. Multiagent reinforcement learning has been widely applied in fields such as robot cooperation, human–machine chess, autonomous driving, distributed control resource management, collaborative decision support systems, autonomous combat systems, and data mining [87,88,89].

In multiagent reinforcement learning, each intelligence makes sequential decisions through a trial-and-error process of interacting with the environment, similar to the single-agent case. A major difference is that the agents need to interact with each other, so that the state of the environment and the reward function actually depend on the joint actions of all the agents. There is an intuitive notion: for one of the agents, the state actions, etc., of the other agents are also modeled as the environment is, and then the algorithms in the single-agent domain are trained and learn separately. Then the training of each agent is summarized into the system for analysis and learning.

A part of the algorithms of multiagent reinforcement learning is the solving of the cooperative/adversarial game problem, which can be categorized into four types according to the different requirements: (1) Complete competition between agents, i.e., one party’s gain is the other party’s loss, such as the predator and the prey or the two sides of the chess game. The general complete competition relationship is a zero-sum game or a negative-sum game (the two sides obtain the combined reward of 0 or less than 0). (2) Complete cooperation between agents, mainly for industrial robots or robotic arms to cooperate with each other to manufacture products or complete tasks. (3) Mixed relationship between agents (i.e., semicooperative and semicompetitive), such as in soccer robots, where the agents are on the same team and against the other team, which shows the two kinds of relationships; these are, respectively, cooperating with the intelligences for the cooperative and competitive relationships [32]. (4) Self-interest, where each agent only wants to maximize its own reward, regardless of others, such as in the automatic trading system of stocks and futures [90].

Compared with the shortcomings of single-agent reinforcement learning in some aspects, multiagent reinforcement learning can solve the problems of the algorithm being difficult to converge or the learning time being too long. At the same time, it can also complete cooperative or antagonistic game tasks that cannot be solved by single-agent reinforcement learning.

However, there are a number of problems with directly introducing a multi-intelligent system into the more successful algorithms of single-intelligent reinforcement learning, most notably the fact that the strategy of each intelligence changes as training progresses and is nonstationary from the point of view of any of the independent intelligences, which contradicts the static nature of the MDP. Similarly in the case of reinforcement learning, compared to a single intelligence, multi-intelligence reinforcement learning will have the following limitations: (1) Environmental uncertainty: while one intelligence is making a decision, all other intelligences are taking action while the state of the environment changes, and the joint action of all the intelligences and the associated instability result in the state of the environment and the state-space dimension rising. (2) Limitations in obtaining information: a single agent may only have local observation information, cannot obtain global information, and cannot know the observation information, action, and reward information of other agents. (3) Individual goal consistency: the goal consistency of each subject may not overlap. It may be the global optimal reward or the local optimal reward. In addition, high-dimensional state space and action space are usually involved in large-scale multiagent systems, which puts higher demands on the model representation ability and computational power in real scenarios. This may have certain scalability difficulties.

4. Pattern-Type Layer

Reinforcement learning can be divided into model-free [91] and model-based [92] categories in terms of the type of model, the main differences being whether the current state is known or not, whether the action is shifted to the next state or not, and what the distribution of rewards is. When distribution is provided directly to the reinforcement learning method, these are called model-based algorithms (i.e., whether or not they rely on a learned model for exploration and exploitation, the algorithm first interacts with the real environment to fit a model and then predicts what it will see afterward and plans the path of action ahead of time based on it), and and the opposite are are called model-free algorithms. The classification criteria in this category are based on the classic article [1].

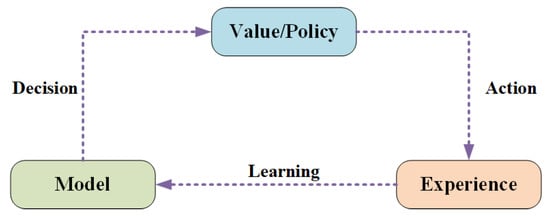

4.1. Model-Based Reinforcement Learning

Model-based reinforcement learning (MBRL) is a method of learning a model (environment model) by first obtaining data from the environment and then optimizing the policy based on the learned model [93]. The model is a simulation of the real world. In MBRL, one of the most central steps is the construction of an environment model, and the complete learning steps are summarized in Figure 2. First, a model is built from the experience of interacting with the real world. Second, the model is learned and updated with value functions and strategies, drawing on the methods used in MDP, TD, and other previous approaches. Subsequently, the learned value functions and strategies are used to interact with the real world, i.e., a course of action is planned in advance, and then the agent follows the course of action and explores the real environment to gain more experience (updating the value functions and strategies through the error values returned to update and make the environment model more accurate).

Figure 2.

Flow of the model-based reinforcement learning algorithm.

MBRL can be broadly categorized into two implementations. The first is the learn-the-model approach and the second is the given-the-model approach. The difference is that the given-the-model approach learns and trains based on an existing model, whereas the learn-the-model approach allows an agent to construct a world model of the environment by first exploring and developing it. Then the agent interacts with the constructed environment model (learning and training) and then iteratively updates the environment model to make the agent more sufficiently explore the real environment more accurately. A specific algorithm for the given-the-model approach is AlphaZero [94], and specific algorithms for learn the model are MBMF [95], MBVE [95], I2As [96], and so on.

The model-based algorithm has its own significant advantages: (1) based on supervised learning, it can effectively learn environmental models; (2) it is able to effectively learn using environmental models; (3) it reduces the impact of inaccurate value functions; (4) researchers can directly use the uncertainty of the environmental model to infer some previously unseen samples [97].

The model-based algorithm requires learning the environment function first and then constructing the value function, resulting in a higher time cost than the model-free algorithm. The biggest drawback of model-based learning is that it is difficult for agents to obtain a real model of the environment (i.e., there may be a significant error in the environment model compared to the real world).

Because there are two approximation errors in the algorithm, this can lead to the accumulation of errors and can affect the final performance. For example, there are errors from the differences between the real environment and the learned model, which may result in the expected performance of the intelligent agent in the real environment being far inferior to the excellent results in the model.

4.2. Model-Free Reinforcement Learning

Model-free reinforcement learning is the opposite of model-based; it completely does not rely on environmental models and can directly interact with the environment to gradually learn and explore. It is often difficult to obtain the state transitions, types of environmental states, and reward functions in real-life scenarios; therefore, if model-based reinforcement learning is still used, the error resulting from the difference between the learned model and the real environment will often be large, leading to an increasing accumulation of errors when the agent learns in the model and resulting in the model achieving far from the expected effect. Model-free algorithms are easier to implement and adjust because they interact with and learn directly from the environment [98].

In model-free learning, the agent cannot obtain complete information about the environment because it does not have a mode. It needs to interact with the environment to collect information about the trajectory data to update the policy and value function so that it can obtain more rewards in the future. Compared with model-based reinforcement learning, model-free algorithms are generally easier to implement and adjust because they take the approach of sampling the environment and then fitting and can update the policy and value function more quickly. Algorithmically specific, unlike Monte Carlo methods, the temporal-difference learning method guesses and continually updates the results of the guessed episodes after learning its own bootstrapping and incomplete episodes.

Model-free algorithms can be broadly classified into three categories: the unification of value iteration and strategy iteration, Monte Carlo methods, and temporal-difference learning, as follows: (1) Strategy iteration is actually used first to evaluate each strategy to obtain the value function, and then the greedy strategy is used to obtain the promotion of the strategy. The value iteration does not evaluate any but simply iterates over the value function to obtain the optimal value function and the corresponding optimal policy [99]. (2) The Monte Carlo method assumes that the value function of each state takes a value equal to the average of the returns of multiple episodes that must be executed in the termination state [100]. The value function of each state is the expectation of the payoff, and under the assumption of Monte Carlo reinforcement learning, the value function takes a value simplified from the expectation to the mean value. (3) Like Monte Carlo learning, temporal-difference learning from episode learning is the direct active experimentation with the environment to obtain the corresponding “experience” [101].

The advantage of model-free algorithms is that they are much easier to implement than model-based algorithms because they do not need to model the environment (the modeling process is prone to errors in modeling the real-world environment, which can affect the accuracy of the model); therefore, they are better than the model-based algorithms in terms of the generalizability of the problem. However, model-free algorithms also have shortcomings: the sampling efficiency of such algorithms is very low, a large number of samples is needed to learn the algorithm (high time cost), etc.

4.3. Reinforcement Learning Based on the Fusion of Model-Based and Model-Free Algorithms

Both model-based reinforcement learning methods and model-free reinforcement learning methods have their own characteristics; the advantage of model-based methods is that their generalization ability is relatively strong, while the sampling efficiency is high. The advantages of model-free methods are that they are universal, the algorithms are relatively simple, and they do not need to construct models, so they are suitable for solving problems that are difficult to model, and at the same time, they can guarantee that the optimal solution is obtained. However, both of them have their own limitations: for example, the sampling efficiency of model-free methods is low and their generalization ability is weak; model-based methods are not universal, they cannot model some problems, and their algorithmic errors are sometimes large. When encountering actual complex problems, modeled or model-less methods alone may not be able to completely solve the problem.

Therefore, the new idea is to combine model-based and model-free ideas to form an integrated architecture, that is, to fuse reinforcement learning based on model-based and model-free approaches, utilizing the advantages of both to solve complex problems. When an environment model is constructed, the agent can have two sources from which to obtain experience: one is actual experience (real experience) [35] and the other is simulated experience (simulated experience) [36]. It is expressed by the formula

where represents the state of the environment at the next moment, while real experience refers to the trajectory obtained by the agent interacting with the actual environment, and the state transition distribution and reward are obtained from feedback in the real environment. Simulated experiences rely on the environment model to generate trajectories based on state transition probabilities and reward functions.

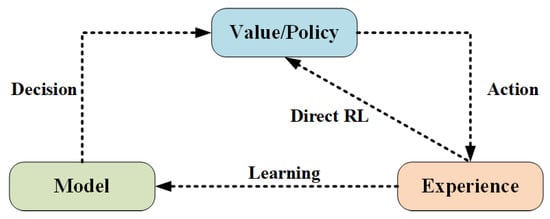

The Dyna architecture was the first to combine real experiences that are not model-based with simulated experiences obtained from model-based sampling [102]. the algorithm learns from real experiences to obtain a model and then uses the real and simulated experiences jointly to learn while updating the value and policy functions. The flowchart of the Dyna architecture is shown in detail in Figure 3.

Figure 3.

The framework of Dyna.

The Dyna algorithmic framework is not a specific reinforcement learning algorithm but rather a series of a class of algorithmic frameworks, which differs from the flowchart of the modeled approach by the addition of a “direct RL” arrow. The Dyna algorithmic framework is used in combination with different model-free RLs to obtain specific fusion algorithms. If Q-learning based on value functions is used, then the Dyna-Q algorithm can be obtained [103]. In the Dyna framework, in each iteration, the environment is first interacted with and the value function and/or the policy function is updated. This is followed by n predictions of the model, again updating the value function, and the policy function. This allows for the experience of interacting with the environment and the predictions of the model to be utilized simultaneously.

The fusion algorithm integrates the advantages of both model-based and model-free algorithms, such as strong generalization ability, high sampling efficiency, and the ability to maintain a relatively fast training speed. This also makes it a popular type of algorithm in reinforcement learning, expanding a series of studies such as the Dyna-2 algorithm framework.

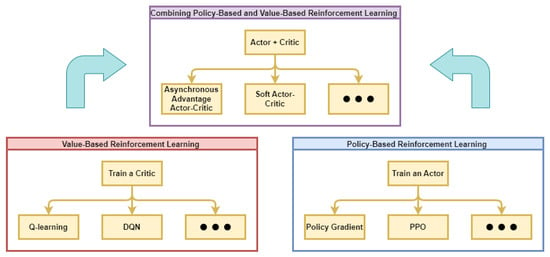

5. Optimization-Direction Layer

From the optimization direction, reinforcement learning can be classified into three main categories: algorithms based on value optimization [104], algorithms based on policy optimization [105], and algorithms combining value optimization and policy optimization [106]. Typical value-optimization algorithms are Q-learning and SARSA, and typical policy-optimization algorithms are TRPO (trust region policy optimization) and PPO (proximal policy optimization). The current research trend of algorithms combining value optimization and policy optimization is mainly based on the actor–critic (AC) method for improved optimization (the actor models the policy and the critic models the value function). These classifications stem from the classic textbooks in the field of reinforcement learning [107]. They represent two distinct approaches to thinking about and solving problems in reinforcement learning.

5.1. Optimization of Value-Based Reinforcement Learning

Specific examples of value-optimization-based algorithms are SARSA [108] and Q-learning [109]; these were introduced in the previous subsections. This type of reinforcement learning is based on the dynamic planning of the Q-table and introduces the notion of the Q-value, which represents the expectation of the value of the total reward and relies on the expectation of the currently required action to be evaluated. The core of the algorithm is to select a more optimal strategy for exploring and utilizing the environment in order to collect a more comprehensive model.

Value-based reinforcement learning (represented by Q-learning) actively separates the exploration and exploitation parts, taking randomized actions to sample the entire state space, making the exploration sufficient for the overall problem [109]. The idea of DP is then used to update the sampled state using the maximum value, and for complex problems, neural networks are often used for value function approximation.

The value-based optimization method has a high sampling rate, and the algorithm is easy to implement. However, the algorithm still has some limitations [110], including the following: (1) The maximum value function used for updating the value function has the challenge that it is difficult to find the same function for the corresponding operation in the continuous action, so it cannot solve these problems. (2) Due to the use of a completely random exploration strategy, it makes the computation of the value function very unstable. These problems have led researchers to constantly search for methods with better scene adaptation, such as the reinforcement learning methods based on policy optimization cited below.

5.2. Optimization of Policy-Based Reinforcement Learning

Due to the above reasons, reinforcement learning based on value optimization cannot be adapted to all scenarios, so the methods based on policy optimization (PO) are born [111]. Policy optimization reinforcement learning methods are usually divided by the determinism of the policy, where the deterministic policy approach will only choose a fixed action at each step and then enter the next state; furthermore, the policy of the stochastic policy approach is a probability distribution of an action (one out of several actions), and the agent will choose one according to the current state with a certain policy and then enter the next state.

Generally, for strategy optimization, the strategy gradient algorithm is used, which is implemented in two steps: (1) The gradient is derived based on the output of the strategy network. (2) The strategy network is updated using statistics on the total strategy distribution using the data generated by the interaction of the agents with the environment. Specific algorithms based on policy optimization include PPO [112], TRPO [113], etc. In particular, the PPO algorithm proposed by OpenAI has a very high degree of dissemination and is a commonly used method in industry today.

Since the policy-optimization approach uses a policy network for the reward expectation estimation of actions, no additional cache is needed to record redundant Q-values, and it can adapt to the stochastic nature of the environment. The disadvantage of policy optimization is that it may be undertrained when training stochastic policies.

5.3. Optimization of Combining Policy-Based and Value-Based Reinforcement Learning

Because both value optimization and policy optimization have their own drawbacks and limitations, using one of them alone may not always achieve good results, so some current research combines the two to form the actor–critic (AC) framework as shown in Figure 4. Most of the current research is based on the AC framework for improvement, and the experimental results are usually better than value optimization or strategy optimization alone. For example, the SAC (soft actor–critic) algorithm [114] is an improved algorithm based on the AC framework, which applies to continuous state space and continuous action space and is an off-policy algorithm developed for maximum entropy RL. SAC applies stochastic policy, which has some advantages over deterministic policy. Deterministic policy algorithms end the learning process after finding an optimal path, whereas maximum entropy stochastic policies need to explore all possible optimal paths.

Figure 4.

Value-based and policy-based reinforcement learning.

The learning objective of ordinary DRL is to learn a strategy to maximize the return, i.e., to maximize the cumulative reward [48], which is expressed by the formula

Furthermore, the concept of maximum entropy is introduced in SAC (not only is the reward value required to be maximized but also the entropy is required); the larger the entropy, the larger the uncertainty of the system, that is, the probability of the action is made to be spread out as much as possible instead of being concentrated on one action. The core idea of maximum entropy is to explore more actions and trajectories, while the DDPG algorithm is trained to obtain a deterministic policy, i.e., a policy that considers only one optimal action for an agent [114]. The optimal policy formula for SAC is

where stands for return, stands for entropy, and is a temperature parameter used to control whether the optimization objective focuses more on reward or entropy, which has the advantage of encouraging exploration while also learning near-optimal actions. The purpose of combining the reward and maximum entropy is to explore more regions, so that the original strategy does not stick to the fully explored local optimum and give up the wider exploration space. At the same time, maximizing the return ensures that the overall exploration of the agent remains at a high level of exploration.

Overall, SAC has the following advantages: (1) The maximum entropy term provides SAC with a broader exploration tendency, which makes the exploration space of SAC more diversified and brings better generalizability and adaptability. (2) The off-policy strategy is used to improve the sample efficiency (the full off policy in DDPG is difficult to apply to high-dimensional tasks), while SAC combines the off policy and the stochastic actor. (3) The training speed is faster. The maximum entropy makes the exploration more uniform and ensures the stability of the training and the effectiveness of the exploration.

6. Characterization-Method Layer

In reinforcement learning, characterization methods refer to how information such as states, actions, and value functions is represented, enabling agents to learn effectively and make decisions. The representation of these methods in reinforcement learning encompasses various approaches, including those based on spiking neural networks, Gaussian processes, and deep neural networks. It is important to note that it does not encompass all types of representation methods; other types, such as neural decision trees, are also included. Furthermore, the three approaches mentioned in this paper are the three most widely used different options in many current studies after extensive surveys. Therefore, we present these three methods as fundamental choices in this layer.

6.1. Spiking Neural Network

Compared with traditional artificial neural networks, a spiking neural network [115,116] has a working mechanism closer to that of the neural networks of the human brain and is therefore more suitable for revealing the nature of intelligence. Not only can spiking neural networks be used to model the brain neural system, they can also be applied to solve problems in the field of AI. The spiking neural network has a more solid biological foundation, such as its nonlinear accumulation of membrane potential, pulse discharge after reaching the threshold, and cooling during the nondeserved period after the discharge, etc. These characteristics, while providing a more complex information processing capability to the spiking neural network, also bring challenges and difficulties for its training and optimization.

The traditional learning method based on loss back-propagation has been shown to optimize artificial neural networks [117]; however, it requires the entire network and neuron nodes to be differentiable everywhere. Therefore, the use of traditional loss back-propagation methods is not suitable for the optimization of spiking neural networks, and its principles are at odds with the learning laws of the biological brain. There is currently no general training method available for spiking neural networks. The state of the spiking neural networks is recognized as the third generation of neural networks after the second generation of artificial neural networks (ANNs) based on the existing MLP (multilayer perceptron) [118].

Although traditional neural networks have achieved many good and excellent results in various tasks, their principles and computational processes are still far from the real human brain information process. The main differences can be summarized as follows: (1) Traditional neural network algorithms still use high-precision floating-point operations for arithmetic, which the human brain does not use. In the human sensing system and brain, information is transmitted, received, and processed in the form of action voltages, or electric spikes. (2) The training process of an ANN relies heavily on the back-propagation algorithm (gradient descent); however, in the real human brain’s learning process, scientists have not observed the human brain using gradient descent for learning. (3) ANNs usually require a large labeled dataset to drive the fitting of the network. This is quite different from the usual way of learning because the perception and learning process in many cases is unsupervised. Moreover, the human brain usually does not need such a large amount of repeated data to learn the same thing, and only a small amount of data is needed for training [115,119].

To summarize, to make neural networks closer to the human brain, the SNN was born, inspired by the way the biological brain processes information—in spikes. SNNs are not traditional neural network structures like CNNs and RNNs; SNN is a collective term for new neural network algorithms that are closer to the human brain and have better performance than CNNs and RNNs.

6.2. Gaussian Process

In recent years, Gaussian process regression [120,121] has become a widely used regression method. More precisely, the GP is a distribution of functions, which is a joint Gaussian distribution for any finite set of function values. It can be solved with an analytical solution and expressed in probabilistic form. The obtained mean and covariance are used for regression and uncertainty estimation, respectively. The advantage of GP regression is that overfitting can be avoided while still finding functions complex enough to describe any observed phenomenon, even in noisy or unstructured data.

In probability theory and statistics, a Gaussian process is a stochastic process in which observations appear in a continuous domain (e.g., time or space). In a Gaussian process, each point in a continuous input space is associated with a normally distributed random variable. Moreover, each finite set of these random variables has a multivariate normal distribution; in other words, any finite linear combination of them is normally distributed. The distribution of a Gaussian process is the joint distribution of all those (infinitely many) random variables, and because of that, it is the distribution of a function over a continuous domain (e.g., time or space). A Gaussian process is considered a machine learning algorithm that is learned in an inert manner using a measure of homogeneity between points as a kernel function in order to predict the value of an unknown point from the input training data.

Gaussian processes are often used in statistical modeling, and models using Gaussian processes can yield properties of Gaussian processes. For example, if a stochastic process is modeled as a Gaussian process, we can show that the distribution of various derivatives can be derived, such as the mean of the stochastic process over a range of counts or the error of the mean prediction using a small range of sample counts and sample values.

6.3. Deep Neural Network

Deep learning is a research direction in artificial intelligence, and the deep neural network [122,123] is a framework for deep learning; it is a type of neural network with at least one hidden layer. Similar to shallow neural networks, deep neural networks are able to provide modeling for complex nonlinear systems. However, the extra layers provide a higher level of abstraction for the model, thus increasing its capabilities. The properties of DNNs dictate that they are groundbreaking for speech recognition and image recognition and thus are often deployed in applications ranging from self-driving cars to cancer detection to complex games. In these areas, DNNs are able to outperform human accuracy.

The benefit of deep learning is that it replaces manual feature acquisition with unsupervised or semisupervised feature learning and efficient algorithms for hierarchical feature extraction, which improves the efficiency of feature extraction as well as reduces the time of acquisition. The goal of deep neural networks is to seek better representations and create better models to learn these representations from large-scale unlabeled data. Representations come from neuroscience and are loosely created to resemble information processing and understanding of communication patterns in the nervous system, such as neural coding, which attempts to define relationships between the responses of pulling neurons and between the electrical activity of neurons in the brain.

Based on deep neural networks, several new deep learning frameworks have been developed, such as convolutional neural networks [124], deep belief networks [125], and recursive neural networks [126], which have been applied to computer vision, speech recognition, natural language processing, audio recognition, and bioinformatics with excellent results.

7. Training-Strategy Layer

Reinforcement learning is categorized in terms of training strategies including different approaches such as centralized reinforcement learning, distributed reinforcement learning, and federated reinforcement learning. The intelligent decision-making problem at the edge needs to focus on selecting appropriate training strategies. When designing a program for an intelligent decision problem, the design of the content in this layer of the framework should be centered on these three methods according to the actual task state. This classification is based on [64]. With the advancement of computing devices, the training of reinforcement learning has gradually shifted from traditional centralized computation to distributed computation. Additionally, due to the increased consideration for data privacy and other factors this year, federated learning, as a new training method, has also received widespread attention.

7.1. Concentrated Reinforcement Learning

Concentrated reinforcement learning is one of the most traditional methods and has been skillfully used in both single-agent reinforcement learning and multiagent reinforcement learning scenarios. Single-agent reinforcement learning refers to the learning of an agent using standard reinforcement learning. It optimizes the decision of the agent by learning the value function or the policy function and is able to deal with complex situations such as continuous action space, high-dimensional state space, and so on. According to the characteristics and requirements of the problem, choosing a suitable centralized reinforcement learning method can improve the learning effect and decision quality of the agent. Common algorithms include Q-learning, DQNs (deep Q-networks) [127], policy gradient methods [128], proximal policy optimization, etc. Q-learning is a basic centralized reinforcement learning method to make optimal decisions by learning a value function. A DQN uses deep neural networks to approximate the Q-value function. It has made significant breakthroughs in complex environments such as images and videos. Policy gradient methods are a class of centralized reinforcement learning methods that directly optimize the policy function. These methods compute the gradient of the policy function by sampling trajectories and update the policy function according to the direction of the gradient. The representative algorithm PPO uses two policy networks, an old policy network for collecting experience and a new policy network for computing gradient updates. The update magnitude is adjusted by comparing the difference between the two strategies to achieve a more stable training process.

In the application of multiagent reinforcement learning, all agents in this training mode share the same global observation state, and decisions are made in a global manner. This means that there can be effective collaboration and communication between agents, but it also increases the complexity of training and decision making. Centralized reinforcement learning is suitable for tasks that require global information sharing and collaboration, such as multirobot systems or team gaming problems. For example, the neural network communication algorithm [129] is based on a deep neural network to model information exchange between agents in a multirobot system. Each agent decides its own actions through the observed state of the environment and partial information from other agents. This approach can be undertaken by connecting the observations of the agents to a common neural network that enables them to share and transfer information. A multiagent deep deterministic policy gradient [130] makes the actor network utilize the policies of the other agents as inputs to select an action, and at the same time, it receives its own observations and historical actions as inputs. The critic network estimates the joint value function of all agents. QMIX is a value function decomposition method for centralized reinforcement learning [131]. It estimates the local value function of each agent by using a hybrid network and synthesizes the global value function through a hybrid operation. The QMIX algorithm guarantees the monotonicity of the global value function, which promotes cooperative behavior.

However, in centralized reinforcement learning, it is often necessary to maintain a global state that contains all observation information. The global state can be a complete representation of the environment information or a combination of multiple agent observations and partial environment information. More importantly, the central controller is the core component of centralized reinforcement learning, which is responsible for processing the global state and making decisions. The central controller can be a neural network model that accepts the global state as input and outputs the actions of each agent; while this model is capable of generating better performing decision models for certain scenarios, the process still often requires a significant amount of time. Centralized reinforcement learning represents an inefficient way for the agent to interact with the environment, makes it difficult to generate a sufficient number of historical trajectories to update the policy model, and has a high demand on computational power that is difficult to meet at the edge. In addition, the use of old models is often not well adapted to new environments.

7.2. Distributed Reinforcement Learning

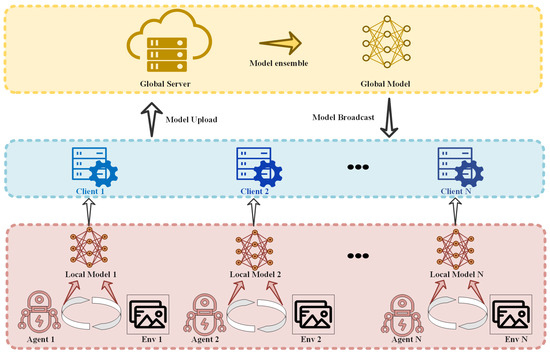

The common feature in various machine learning methods is that they all employ complex, large-scale learning models that rely on a large amount of training data. However, the training of these models is far from being satisfied by the edge, in terms of both computation and storage requirements. Therefore, distributed parallel computing has become a mainstream way to solve the large-scale network training problem. Distributed reinforcement learning can perform task allocation and collaborative decision making among multiple agents, thus improving performance and efficiency in large-scale, complex environments. Compared with the traditional centralized reinforcement learning, distributed reinforcement learning can learn and make decisions simultaneously on multiple computer clusters, which can effectively improve the efficiency of sample collection and the iteration rate of the model [132]. Meanwhile, since distributed reinforcement learning can gain experience from multiple agents at the same time, it can better cope with noise and uncertainty and improve the overall robustness. In addition, because of the way it utilizes information exchange and collaboration among multiple agents, the whole system has a higher learning ability and intelligence level. Therefore, distributed reinforcement learning can be easily extended to situations with more agents and more complex environments with better adaptability and flexibility.