1. Introduction

With vast numbers of videos uploaded to social media every day, effective and efficient video understanding algorithms are becoming increasingly important. Temporal action localization (TAL) plays a crucial role in video interpretation, particularly for applications such as video surveillance, content analysis and summarization, and human–computer interaction. In long, untrimmed videos, TAL must not only detect the temporal boundaries of action instances but also identify their action categories.

TAL closely resembles object detection: TAL aims to generate accurate temporal proposals that segment action instances, while object detection aims to produce precise spatial proposals that delineate object instances. Therefore, many TAL methods extend object detection frameworks, including anchor-based [1,2,3,4,5,6], actionness-guided [7,8,9,10,11], and anchor-free (boundary-based) methods [12,13], the last of which are inspired by the flexibility and efficiency of anchor-free object detectors such as CornerNet [14], FCOS [15], and BorderDet [16]. Presently, anchor-based TAL methods are generally classified into two types: two-stage frameworks [2,3,4], with higher accuracy, and one-stage frameworks [1,5,6], with higher efficiency. The two-stage framework comprises proposal generation and classification. The former corresponds to the task of temporal action proposal (TAP) generation, and the latter is more similar to action recognition.

Anchor-free methods are known for their accuracy in defining boundaries and their adaptability to varying durations, and they produce a single proposal at each temporal point throughout the video sequence. This proposal is represented by a pair of values indicating the distance from the current location to the start and end times. Among these, the Anchor-Free Saliency-based Detector (AFSD) has made notable strides, pioneering the use of frame-level features and saliency cues to enhance boundary detection. Although the above methods have achieved good results, they remain some distance from the goal of accurately localizing actions, the most difficult aspect of which is the ambiguity of action boundaries. Because actions are continuous, an action does not start suddenly at a certain moment but “gradually”. If an “action value” is used to distinguish the beginning (or end) of an action, then this value accumulates until it reaches a threshold (i.e., the moment of the action) before the action begins (or after it ends). Therefore, the start point of an action (and, likewise, its end point) must exhibit two critical characteristics: (1) the features of the boundary area vary relatively little; (2) there is an objective threshold that distinguishes the boundaries of actions.

To this end, we introduce the boundary awareness network (BAN) as a refinement of the existing anchor-free framework for temporal action localization (TAL), focusing on more accurate detection of action boundaries within videos. This refinement is implemented through an architecture comprising a feature encoding network, a coarse pyramidal detection module, and a fine-grained detection mechanism powered by the Gaussian boundary module (GBM). Compared to the anchor-free method AFSD, the BAN introduces new approaches to boundary detection that aim to address the ambiguity of action boundaries more effectively. While the AFSD laid the groundwork for anchor-free TAL by simplifying the detection process and reducing dependency on hyperparameters, the BAN builds upon this by enhancing the precision of boundary detection through the GBM and a novel learning strategy, Boundary Differentiated Learning (BDL).

Feature encoding network. Utilizing the I3D [17], renowned for its effectiveness in capturing rich, dynamic features from video data, this network serves as the cornerstone of our approach. By extracting robust spatial–temporal features, it lays a solid foundation for the precise detection and localization of actions within videos.

Coarse pyramidal detection module. To address the spatial–temporal dimensions of video data comprehensively, we employ a feature pyramid network equipped with various temporal convolutions. This module is pivotal in generating initial action proposals by amalgamating information across multiple layers, thereby facilitating a multi-scale understanding of potential action instances.

Fine-grained detection. Rough estimates of the start and end boundary distances are first derived through projection. The predicted temporal regions are then used to generate fine-grained features with the Gaussian boundary module, which utilizes Gaussian boundary pooling to precisely locate the action boundary. Since the action is continuous, its boundary is fuzzy, and accurate action boundary detection requires discriminative boundary features. The core difficulty in obtaining such boundary features is how to correlate the moment-level boundary features with the relevant surrounding features. A useful but non-differentiable method is to extract the moment-level boundary feature from the frame feature with the maximum activation value. While this boundary feature contains the most discriminative features, much useful information is lost; for example, the features around the salient features also contain boundary information.

Gaussian Boundary Module. To tackle the challenges in capturing high-quality boundary features for action prediction, we introduce the Gaussian boundary module (GBM). This module features a novel boundary pooling method named Gaussian boundary pooling (GBP), employing a Gaussian kernel function to aggregate features around the action boundary effectively. The kernel, centered on the boundary time stamp from coarse predictions, ensures that features closer to the boundary have a higher influence, optimizing the quality of boundary features extracted. It is important to note the distinction between our Gaussian kernels and those used in GTAN, which aims to represent action proposals of varying durations, whereas our focus is on extracting moment range boundary features for precise action localization.

Boundary Differentiated Learning. To further enhance our model’s ability to discern complex actions from background clutter in videos, we propose a novel strategy named Boundary Differentiated Learning (BDL). This strategy innovatively transforms the classification task of distinguishing action boundaries into a regression task focused on identifying the ’actionability’ of a scene. By re-scaling the ground truth labels to guide the model, BDL allows for a more nuanced understanding of action presence, facilitating the discrimination between action and non-action segments. Our extensive experiments on datasets such as THUMOS14 and ActivityNet-1.3 demonstrate the effectiveness of the BDL strategy, significantly improving our model’s performance and establishing new state-of-the-art results.

Our key contributions can be outlined as follows:

1. In this study, our enhanced anchor-free framework for temporal action localization modestly increases computational demands relative to the AFSD method, yet it offers a more precise detection of action boundaries. This methodical refinement bolsters the framework’s precision without significantly impacting computational efficiency, thereby advancing the state of the art in temporal action localization.

2. For a more accurate delineation of action boundaries, we have developed a unique boundary feature extraction method using the Gaussian boundary module. This is utilized in conjunction with coarse pyramidal detection to yield more detailed predictions. Additionally, we have devised a Boundary Differentiated Learning (BDL) approach to further refine the accuracy of boundary information.

3. In our thorough assessments on the THUMOS14 and ActivityNet v1.3 datasets, the boundary awareness network (BAN) has shown advancements in mean Average Precision (mAP) when compared with the existing state-of-the-art methods, underscoring the enhanced performance of our proposed approach.

2. Related Work

Anchor-based. Current anchor-based approaches can be categorized into two frameworks: one-stage and two-stage. The two-stage framework includes TURN-TAP [2], R-C3D [3], TAL-Net [4], etc. TURN-TAP [2] cuts the untrimmed video into multiple non-overlapping clips, uses the pyramid method to construct unit features, and independently regresses the temporal boundary on each unit. R-C3D [3], taking inspiration from Faster R-CNN [18], proposes an end-to-end network that performs temporal action proposal generation and action classification in two stages. TAL-Net [4] proposes multi-scale anchors to adapt to the diverse durations of action instances. The main drawback of the two-stage framework is that the boundaries of action instances are already fixed by the time of the classification step. To address this issue, one-stage frameworks have been proposed in recent years, including SSAD [1], GTAN [5], and PBR-Net [6]. SSAD [1] uses 1D temporal convolution to generate multiple temporal action anchors and captures actions with different durations from three hierarchical levels. GTAN [5] represents actions with different durations through a mixture of Gaussian kernels. PBR-Net [6] designs three cascaded regression modules to refine the boundary information gradually and integrates fine-grained frame-level features with the anchor-based method.

The above anchor-based methods rely heavily on predefined anchors, which lack flexibility and are sensitive to anchor parameters. To address these problems, A2Net [19] combines anchor-based and anchor-free methods to accurately detect temporal action proposals. However, it still depends on predefined anchors to some extent. Our method differs in that it does not require predefined anchors and is thus more efficient and flexible.

Actionness-guided. Anchor-based TAL methods can be regarded as top–down algorithms, whereas actionness-guided TAL methods are bottom–up. They estimate the probability of potential actions using frame-level actionness scores, then combine all start and end moments into proposals, and, finally, perform category prediction for each proposal. Compared with anchor-based methods, actionness-guided methods detect proposals in a more flexible manner. SSN [7] models the different stages (start, course, and end) of the action and evaluates the completeness of proposals. CDC [11] predicts the action category frame by frame, which makes the action boundaries more accurate. BSN [8] locates action boundaries via the actionness score and the starting and ending probabilities. BMN [9], an extension of BSN, combines the temporal boundary probability sequence with a boundary-matching mechanism to obtain the final proposals and uses a threshold to screen proposals. However, the boundary-matching strategy of BMN uses only low-level boundary information and ignores the overall information of the action, so it performs poorly on complex actions and fuzzy backgrounds. To overcome this limitation, DBG [10] assesses comprehensive boundary confidence maps across all proposals and introduces action-sensitive features for a thorough evaluation of action completeness.

In contrast to these approaches, anchor-free methods like SALAD [13] and the AFSD [12] demonstrate efficiency and flexibility by eschewing predefined anchors. The AFSD, in particular, has pioneered an entirely anchor-free framework for TAL, emphasizing moment-level feature identification for action boundaries. Despite its innovations, the AFSD encounters challenges in capturing comprehensive boundary features, often losing valuable information surrounding salient features.

Building on the AFSD’s foundation, we introduce the boundary awareness network (BAN) equipped with the Gaussian boundary module (GBM) to enhance action boundary localization precision. Unlike the AFSD, the BAN leverages Gaussian boundary pooling (GBP) within the GBM to aggregate features around action boundaries more effectively, ensuring that high-quality boundary features are captured without losing peripheral information. This targeted approach allows the BAN to address the limitations observed in both anchor-based and actionness-guided methods, marking a significant step forward in the domain of anchor-free temporal action localization.

3. Method

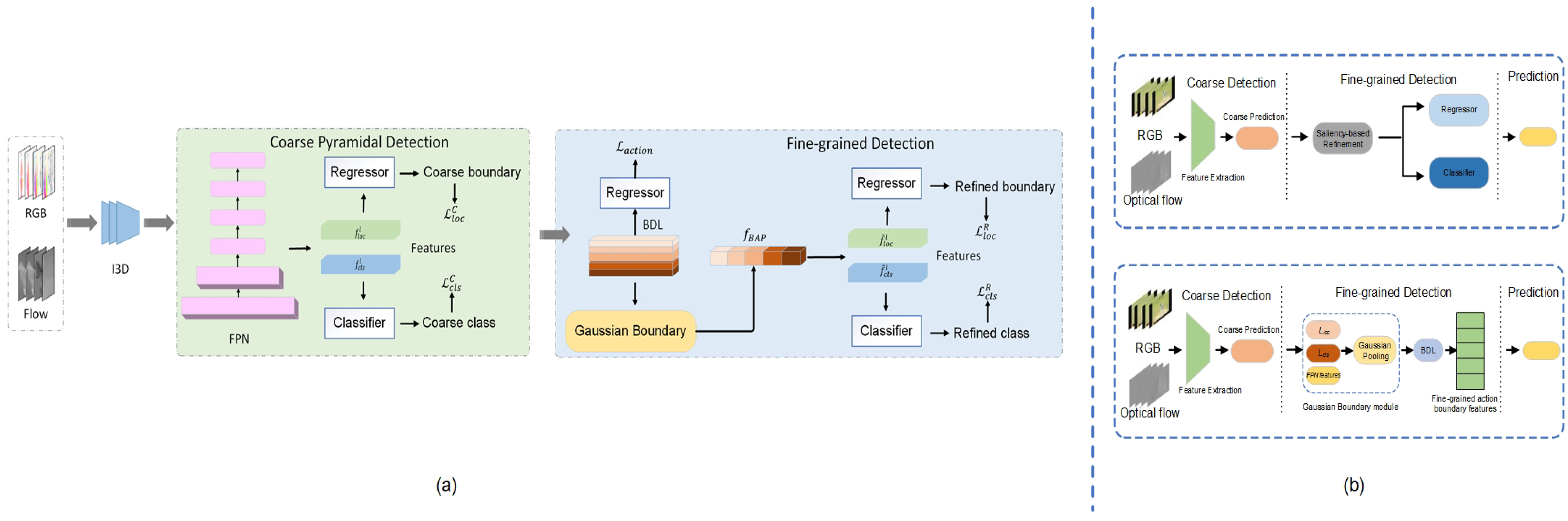

In this section, the detailed structure of our newly developed boundary awareness network (BAN) is discussed. The comprehensive architecture tailored for temporal action localization is depicted in Figure 1.

3.1. Problem Definition

In the context of an unedited video V, comprising T frames, we segment it into n sections, each containing a fixed frame count. These segments are represented as $X = \{x_n\}_{n=1}^{T}$, wherein $x_n$ symbolizes the n-th frame within X. The temporal labeling set of X is constituted by a collection of temporal action instances, defined as $\Psi = \{\varphi_i = (t_{s,i}, t_{e,i})\}_{i=1}^{N}$, with $N$ denoting the total number of actual action instances, $t_{s,i}$ being the start time of the action instance $\varphi_i$, and $t_{e,i}$ representing its end time. Given these annotations for video V, our objective with the model is to accurately predict proposals, complete with class scores, ensuring both high recall and precision.

3.2. Boundary Awareness Network

The architecture of the proposed boundary awareness network is illustrated in Figure 1. The detection process of the BAN consists of three steps: feature encoding, coarse pyramidal detection, and fine-grained detection.

Feature Encoding. In our approach, we adopt the I3D [17] architecture as the backbone, leveraging both RGB and optical flow frames as inputs. This choice is based on I3D’s proven efficacy in both action recognition and temporal action localization tasks. Specifically, for a given input video V, we initially derive clip-level features $F \in \mathbb{R}^{T \times C \times H \times W}$ from consecutive clips. Here, T, C, H, and W represent the time step, channel, height, and width, respectively. We then compress the features along the last three dimensions into a one-dimensional feature sequence, encapsulating both the appearance and motion aspects of the video. In our study, the outputs from the final two layers are utilized as the predictive features.

Coarse Pyramidal Detection. To integrate the spatial dimension and aggregate the temporal dimension in multiple layers, we use a feature pyramid network with several temporal convolutions. Following recent methods [12], after feature encoding, we obtain a feature map $F$ from raw video clips V, and then use $F$ to generate FPN-level features $F^{l}$ with a series of convolutional layers, where l denotes a layer of the FPN level. The objective of the feature pyramid network (FPN) layer is to generate a representation of features across multiple scales, ensuring that each level of the feature maps possesses robust semantic details pertinent to temporal action localization. Then, we generate coarse prediction features by projecting these features into $F^{l}_{cls}$ and $F^{l}_{loc}$, which contain action classification information and action position information, respectively. At each temporal location i, we obtain the coarse relative distances to the start and end boundaries according to the following formula:

$$(\hat{d}^{s}_{i}, \hat{d}^{e}_{i}) = \mathcal{G}(F^{l}_{loc})_{i}$$

where $\mathcal{G}$ is one layer of temporal convolution shared among all FPN layers. Finally, we calculate the absolute boundary position as follows:

$$\hat{t}^{s}_{i} = i - \hat{d}^{s}_{i}, \qquad \hat{t}^{e}_{i} = i + \hat{d}^{e}_{i}$$

Subsequently, at each specific location, the predicted temporal regions are used to generate fine-grained features with Gaussian boundary pooling.
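As an illustration of the coarse decoding step, the following minimal Python sketch converts per-location relative distances into absolute start and end positions. The function name `decode_boundaries` and the `stride` parameter (the temporal stride of an FPN level) are assumptions introduced here for illustration; the sketch only reflects the idea that each location predicts its distance to the start and to the end of an action.

```python
def decode_boundaries(start_dists, end_dists, stride=1):
    """Turn per-location relative distances into absolute (start, end) pairs.

    start_dists[i] and end_dists[i] are the predicted distances from
    location i to the action start and end. `stride` is a hypothetical
    scaling factor for coarser pyramid levels (assumed, not from the paper).
    """
    segments = []
    for i, (d_start, d_end) in enumerate(zip(start_dists, end_dists)):
        center = i * stride
        start = center - d_start * stride  # move backwards to the start
        end = center + d_end * stride      # move forwards to the end
        segments.append((start, end))
    return segments


if __name__ == "__main__":
    print(decode_boundaries([1.0, 2.0], [3.0, 1.5]))
```

Each location yields exactly one proposal, which is what distinguishes this decoding from anchor-based schemes that enumerate several predefined segment lengths per location.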

Fine-grained Detection. To amend the drawbacks of coarse pyramidal detection, we construct fine-grained features via the Gaussian boundary module, as shown in Figure 2. Concatenating these features, we obtain a boundary-awareness feature as follows:

$$F_{ba} = \mathrm{Concat}(f, f^{s}_{frame}, f^{e}_{frame}, f^{s}, f^{e})$$

where $f$, $f^{s}_{frame}$, $f^{e}_{frame}$, $f^{s}$, and $f^{e}$ denote a frame-level feature, the start and end frame-level features, and the start and end features, respectively, as detailed in Section 3.3. Additionally, we implemented Boundary Differentiated Learning (BDL) as a strategy to differentiate between action and non-action segments, effectively increasing the distinction between the characteristic features of action sequences and the background, as detailed in Section 3.4.

3.3. Gaussian Boundary Module

The accurate localization of action boundaries within videos is a paramount challenge in temporal action localization, primarily due to the inherent ambiguity of action boundaries. The Gaussian boundary module (GBM) is designed to tackle this challenge by aggregating a comprehensive range of features to extract highly discriminative boundary features for accurate action prediction. These features encompass frame features along with start and end features, which are crucial for delineating the precise extents of actions.

The cornerstone of the GBM is the innovative use of Gaussian boundary pooling, a technique that significantly differs from traditional convolutional methods. This choice is motivated by our dual detection network structure, which incorporates both coarse-grained and fine-grained detection mechanisms. In this context, the coarse-grained detection outcomes serve not only as preliminary action proposals but also as critical guides for the subsequent fine-grained detection phase. This synergy is essential for refining the detection process and achieving high precision in boundary localization.

Gaussian boundary pooling operates by applying a Gaussian kernel centered on the predicted action boundaries from the coarse detection phase. The kernel’s function is to weight the features according to their spatial–temporal proximity to the center, with closer features receiving higher weights. This method ensures that the boundary features are not just moment-level snapshots, but rather encompass a segment range, capturing the nuanced transitions that characterize the start and end of actions. The Gaussian kernel’s unique property of emphasizing features near the predicted boundary while smoothly decreasing the influence of distant features allows the GBM to extract boundary features that are both highly localized and contextually enriched.

This approach is particularly effective in capturing discriminative features that are vital for distinguishing between closely spaced actions and for accurately identifying action boundaries amidst complex background activities. To extract such features, we propose a novel differentiable pooling method called Gaussian boundary pooling, which is defined as follows:

$$F_{b} = g(t, \mu_{k}) \cdot F$$

where · is matrix multiplication. The function $g$ is specified as follows:

$$g(t, \mu_{k}) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{(t - \mu_{k})^{2}}{2\sigma^{2}}\right)$$

where $t$ is an automatically generated continuous sequence, and $\mu_{k}$ consists of the k-th start and end time stamps, which are calculated by the coarse pyramidal detection stage. We empirically set the standard deviation $\sigma$ to 0.5.
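To make the pooling operation concrete, the following is a minimal pure-Python sketch of Gaussian-weighted pooling around a coarse boundary time stamp. It is not the authors' implementation: the function names, the normalization of the weights so that they sum to one, and the clamping of the center to the valid frame range are all assumptions made for this illustration. Only the Gaussian kernel centered on the coarse boundary and the default standard deviation of 0.5 come from the text.

```python
import math


def gaussian_weights(num_frames, center, sigma=0.5):
    """Gaussian weights over frame indices 0..num_frames-1.

    The weights are normalized to sum to 1 (an assumption of this sketch),
    and `center` is clamped into the valid range to avoid all-zero weights.
    """
    center = min(max(center, 0.0), float(num_frames - 1))
    raw = [math.exp(-((t - center) ** 2) / (2.0 * sigma ** 2))
           for t in range(num_frames)]
    total = sum(raw)
    return [w / total for w in raw]


def gaussian_boundary_pool(features, center, sigma=0.5):
    """Pool a T x C feature sequence into one C-dimensional boundary feature.

    `features` is a list of T frame vectors (each a list of C floats);
    frames closer to `center` contribute more to the pooled feature.
    """
    weights = gaussian_weights(len(features), center, sigma)
    channels = len(features[0])
    return [sum(w * frame[c] for w, frame in zip(weights, features))
            for c in range(channels)]


if __name__ == "__main__":
    feats = [[0.0], [1.0], [2.0]]
    print(gaussian_boundary_pool(feats, center=1.0))
```

Because the pooled feature is a weighted sum, the operation stays differentiable with respect to the frame features, unlike selecting the single frame with the maximum activation.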

It is important to note that, although GTAN [5] has a similar structure, the two are fundamentally different. In terms of design, the Gaussian kernel module employed in our work is derived from the coarse results and serves to extract the moment-range boundary feature. In contrast, GTAN [5] employs Gaussian kernels to characterize action proposals with varying durations.

3.4. Boundary Differentiated Learning

In the realm of action detection within continuous video streams, the precision in delineating action boundaries is a nuanced challenge due to the inherently fuzzy nature of these boundaries. Traditional approaches often rely on discrete feature representation for action-versus-background discrimination, inadvertently leading the model to emphasize features that might not be critical for distinguishing subtle variations in action boundaries. This issue stems from the binary classification framework, which inherently lacks the granularity to accommodate the continuous variation observed in the temporal dynamics of actions.

To address this challenge and enhance the model’s sensitivity to the continuum of action boundary features, we introduced Boundary Differentiated Learning (BDL). At its core, BDL re-envisions the task of action boundary detection by transitioning from a binary classification paradigm to a regression framework. This shift allows for a more nuanced representation of boundary features, reflecting the continuous nature of actions and their transitions.

The BDL strategy employs a novel approach to label assignment, where action boundaries are treated as a spectrum rather than discrete points. Specifically, we reformulated the label assignment process by introducing a continuous label space for the boundary regions. This is achieved through a re-scaling function applied to the ground truth labels, effectively transforming the binary classification task into a regression problem. The re-scaling function is designed to gradually adjust the label values based on their proximity to the action boundaries, thereby creating a gradient of importance that mirrors the fuzzy nature of these boundaries in real-world scenarios. The formula for re-measured boundary labels is as follows:

where set

contains the predefined boundary area, and

is a re-measure function:

where

is the length of the action, and x represents the position of the label. After such a re-measurement process, the boundary between action and background is no longer clear-cut but slowly changing. During model training, such a regression task enables the model to extract more robust boundary features. Following this, we proceed to compute the cross entropy:

where CE denotes the cross entropy loss function. In addition, to further improve the model accuracy and to facilitate the comparison with the baseline, we also used the Boundary Consistency Learning [12] strategy.
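The idea of replacing hard 0/1 labels with gradually changing labels near the boundaries can be sketched as follows. This is only an illustration: the linear ramp and the `ratio` parameter (the half-width of the boundary area as a fraction of the action length) are assumptions chosen for simplicity and are not the re-measure function used by BDL.

```python
def soft_boundary_labels(num_frames, start, end, ratio=0.1):
    """Build per-frame soft labels for one action spanning [start, end].

    Frames well inside the action get 1.0, background frames get 0.0, and
    frames in the boundary area get a value that changes linearly.
    The ramp shape and `ratio` are hypothetical choices for this sketch.
    """
    half = ratio * (end - start)  # half-width of the boundary area
    labels = []
    for x in range(num_frames):
        if half <= 0:
            # Degenerate case: fall back to hard labels.
            labels.append(1.0 if start <= x <= end else 0.0)
            continue
        rise = (x - (start - half)) / (2.0 * half)  # ramp up around start
        fall = ((end + half) - x) / (2.0 * half)    # ramp down around end
        labels.append(max(0.0, min(1.0, rise, fall)))
    return labels


if __name__ == "__main__":
    print(soft_boundary_labels(20, start=5, end=15))
```

With labels of this kind, the target at a boundary frame is an intermediate value rather than a hard class, which is what turns the action-versus-background decision into a regression-style problem.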

The incorporation of BDL into our model framework significantly bolsters its capability to discern and precisely localize action boundaries, even in challenging scenarios characterized by ambiguous or subtle transitions. By acknowledging and effectively leveraging the continuous nature of action boundaries, BDL adds substantial value to our model, enhancing its overall performance in temporal action localization tasks. This refined learning strategy, coupled with our model’s architecture, marks a significant advancement in the field, pushing the boundaries of what is achievable in action detection within continuous video streams.

3.5. Training and Inference

Label Assignment. Following the AFSD [12], we evaluate the temporal Intersection over Union (tIoU) between each initial boundary prediction and its respective ground truth. A specific location i is considered positive if its tIoU score exceeds 0.5. Let

and

represent the count of positive samples for the initial and refined predictions, respectively. For the initial prediction, we label each location i as a positive sample corresponding to ground truth j if the condition

is met. Moreover, within the bounding region, we allocate position i to the recalibrated ground truth

.
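The tIoU test used for label assignment can be sketched in a few lines of Python. The helper names `temporal_iou` and `assign_positives` are introduced here for illustration, and matching each prediction to its best-overlapping ground truth is an assumption of the sketch; only the 0.5 threshold is taken from the text.

```python
def temporal_iou(pred, gt):
    """tIoU between two segments given as (start, end) pairs."""
    inter = max(0.0, min(pred[1], gt[1]) - max(pred[0], gt[0]))
    union = (pred[1] - pred[0]) + (gt[1] - gt[0]) - inter
    return inter / union if union > 0 else 0.0


def assign_positives(predictions, ground_truths, threshold=0.5):
    """Return, for each prediction, the index of its matched ground truth.

    A prediction is positive if its best tIoU exceeds `threshold`;
    otherwise it is marked with -1 (negative).
    """
    assignments = []
    for pred in predictions:
        best_j, best_iou = -1, 0.0
        for j, gt in enumerate(ground_truths):
            iou = temporal_iou(pred, gt)
            if iou > best_iou:
                best_j, best_iou = j, iou
        assignments.append(best_j if best_iou > threshold else -1)
    return assignments


if __name__ == "__main__":
    preds = [(0, 10), (20, 30)]
    gts = [(1, 10), (50, 60)]
    print(assign_positives(preds, gts))
```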

Training Objective. The BAN was trained using a composite multitask loss function, which encompasses the losses for coarse detection, fine-grained detection, action classification, and an L2 regularization term:

where

is the hyperparameters.

is set to 0.001.

Loss of coarse detection. In coarse detection, we directly process the FPN-level features with convolution to obtain the class of the action and the boundary time stamp. The optimization of the coarse detection can be achieved using the subsequent objective function:

where

is set to 1, and

is set to 10 and 1 on THUMOS14 and ActivityNet-1.3.

is a softmax focal loss. We represent tIoU loss as

.

Loss of fine-grained detection. In fine-grained detection, the loss function can be optimized through the application of the following specific function:

where

and

settings are the same as in coarse detection.

is set to 10 and 1 on THUMOS14 and ActivityNet-1.3, separately. The composition of

is consistent with that described in the AFSD [12]. We represent L1 loss as

.

Inference. In the prediction phase, the BAN is utilized as outlined in the subsequent methodology:

where

denotes the action start time, which comes from the result of coarse-grained prediction.

denotes the fine-grained action start time. The end time is expressed in the same way as the start time. Subsequently, we compile all the predictions and apply Soft-NMS [20] to eliminate overlapping proposals.
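For readers unfamiliar with Soft-NMS on temporal segments, the following minimal sketch shows the linear-decay variant: instead of discarding a proposal that overlaps a higher-scoring one, its score is reduced in proportion to the overlap. The choice of the linear variant, the `min_score` cut-off, and the function names are assumptions of this sketch rather than details confirmed by the paper.

```python
def temporal_iou(a, b):
    """tIoU between two segments; only the first two entries are used."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms(proposals, iou_threshold=0.5, min_score=0.001):
    """Linear Soft-NMS over (start, end, score) proposals.

    Repeatedly keep the highest-scoring proposal and decay the scores of
    the remaining proposals that overlap it by at least `iou_threshold`.
    Proposals whose score drops below `min_score` are removed.
    """
    remaining = [list(p) for p in proposals]
    kept = []
    while remaining:
        best = max(range(len(remaining)), key=lambda k: remaining[k][2])
        top = remaining.pop(best)
        kept.append(tuple(top))
        survivors = []
        for p in remaining:
            iou = temporal_iou(top, p)
            if iou >= iou_threshold:
                p[2] *= (1.0 - iou)  # linear score decay
            if p[2] >= min_score:
                survivors.append(p)
        remaining = survivors
    return kept


if __name__ == "__main__":
    props = [(0, 10, 0.9), (1, 10, 0.8), (20, 30, 0.7)]
    for start, end, score in soft_nms(props):
        print(start, end, round(score, 3))
```

In the example, the second proposal overlaps the first almost entirely, so its score is strongly reduced and it is ranked below the non-overlapping third proposal.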

4. Experiments

4.1. Datasets

THUMOS14 [21] and ActivityNet-1.3 [22] were adopted to demonstrate the performance of our BAN against numerous SOTA methods.

THUMOS14 [21]. The dataset includes 1010 videos in the validation set and 1574 in the testing set, spanning 20 distinct categories. For temporal action localization specifically, it features 200 untrimmed videos in the validation subset and 212 in the testing subset, each marked with temporal annotations. Our model was trained using the validation subset and assessed on the test subset.

ActivityNet-1.3 [22]. We use version 1.3 of the dataset, which comprises 10,024 training videos, 4926 validation videos, and 5044 testing videos covering 200 distinct activity types. The majority of these videos feature instances of single-class activities occupying a substantial portion of their duration. In comparison to THUMOS14, it is a more extensive dataset, both in the diversity of activities and the total volume of video content. The training, validation, and testing sets follow a 2:1:1 ratio.

4.2. Implementation Details

In our work with the THUMOS14 dataset [21], video encoding was performed at a rate of 10 frames per second (fps) and a spatial resolution of 96 × 96. In line with Lin’s 2021 methodology, consecutive video clips were produced using sliding windows. Each clip was set to a temporal length of 256 frames, with an overlap of 30 frames in the training phase and 128 frames during testing. For the ActivityNet-1.3 dataset [22], we adjusted the frame sampling rates so that each video contained a total of 768 frames. As a result, each video was represented by a single clip of 768 frames. The frame resolution was 96 × 96, the same as for THUMOS14. In training, data augmentation strategies such as random cropping and horizontal flipping were utilized. For feature extraction, we used the I3D [17] as a backbone to implement our framework, initialized with parameters pre-trained on the Kinetics [23] dataset. Our experiments used a modified version of the AFSD model’s code [12], tailored to support our boundary detection and learning components while maintaining a comparative baseline with the original model.
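The sliding-window clip generation described above can be sketched as follows. The clip length of 256 frames and the overlaps of 30 (training) and 128 (testing) frames come from the text; the function name and the handling of the last, possibly shorter, window are assumptions of this sketch.

```python
def sliding_windows(num_frames, clip_len=256, overlap=30):
    """Return (start, end) frame ranges covering a video of `num_frames`.

    Consecutive windows share `overlap` frames. The final window is
    truncated at the end of the video; padding it to `clip_len` would be
    an alternative (this choice is an assumption, not from the paper).
    """
    stride = clip_len - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than clip_len")
    windows = []
    start = 0
    while True:
        end = start + clip_len
        windows.append((start, min(end, num_frames)))
        if end >= num_frames:
            break
        start += stride
    return windows


if __name__ == "__main__":
    print(sliding_windows(600, overlap=30))   # training setting
    print(sliding_windows(600, overlap=128))  # testing setting
```

The larger overlap at test time means that every action falls well inside at least one window, at the cost of processing more clips per video.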

Our approach was implemented in Python 3.8 using PyTorch on a GPU server with an NVIDIA GeForce 1080Ti GPU (NVIDIA Corporation, Santa Clara, CA, USA). The number of training epochs was set to 16 for both THUMOS14 and ActivityNet-1.3. We utilized the Adam [24] optimizer with a learning rate set at

and a weight decay of

. Across various experiments, we consistently used a batch size of 1. During the training phase, the loss weight

was adjusted to 10 for THUMOS14 and 1 for ActivityNet1.3, while

was fixed at 10. In the testing phase, we averaged the results from both RGB and optical flow frames to determine the final locations and class scores. The temporal Intersection over Union (tIoU) threshold for Soft-NMS was set at 0.5 for THUMOS14 and 0.85 for ActivityNet1.3.

4.3. Metrics

For the task of temporal action detection, the mean Average Precision (mAP) served as the primary evaluation metric. In the case of ActivityNet-1.3, we utilized mAP at IoU thresholds of 0.5, 0.75, and 0.95, along with an average mAP calculated over a range of IoU thresholds from 0.5 to 0.95 in increments of 0.05. For THUMOS14, the mAP was assessed at IoU thresholds of 0.3, 0.4, 0.5, 0.6, and 0.7.
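The following sketch illustrates how an average precision can be computed at one tIoU threshold and then averaged over the thresholds 0.5 to 0.95 in steps of 0.05. It is a simplified, single-class, non-interpolated variant written for illustration; the official evaluation toolkits of the two benchmarks differ in details such as interpolation and the averaging over classes, and all function names here are hypothetical.

```python
def temporal_iou(a, b):
    """tIoU between two segments; only the first two entries are used."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds, gts, threshold):
    """Non-interpolated AP for one class at one tIoU threshold.

    preds: list of (start, end, score); gts: list of (start, end).
    Each ground truth can be matched by at most one prediction.
    """
    if not gts:
        return 0.0
    ranked = sorted(preds, key=lambda p: -p[2])
    matched = [False] * len(gts)
    hits = 0
    precision_sum = 0.0
    for rank, pred in enumerate(ranked, start=1):
        best_j, best_iou = -1, 0.0
        for j, gt in enumerate(gts):
            iou = temporal_iou(pred, gt)
            if iou > best_iou:
                best_j, best_iou = j, iou
        if best_j >= 0 and best_iou >= threshold and not matched[best_j]:
            matched[best_j] = True
            hits += 1
            precision_sum += hits / rank  # precision at this true positive
    return precision_sum / len(gts)


def average_over_thresholds(preds, gts, lo=0.5, hi=0.95, step=0.05):
    """Average the AP over tIoU thresholds lo, lo+step, ..., hi."""
    count = int(round((hi - lo) / step)) + 1
    thresholds = [round(lo + k * step, 2) for k in range(count)]
    aps = [average_precision(preds, gts, t) for t in thresholds]
    return sum(aps) / len(aps)


if __name__ == "__main__":
    preds = [(0, 10, 0.9), (50, 60, 0.8), (20, 30, 0.7)]
    gts = [(0, 10), (20, 30)]
    print(average_precision(preds, gts, 0.5))
    print(average_over_thresholds(preds, gts))
```

Higher thresholds only count a prediction as correct when its boundaries are tight, which is why results at tIoU 0.7 or 0.75 are the most sensitive to boundary quality.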

4.4. Main Results

Our model was benchmarked against the leading methods in the field, as shown in Table 1 and Table 2, across two demanding datasets, with a detailed report on the specifics employed by each approach, including BSN [8], GTAN [5], BMN [9], and the AFSD [12]. In the evaluation of our boundary awareness network (BAN) for temporal action localization, we conducted an extensive comparison with existing state-of-the-art methods, with a particular focus on the AFSD method, recognized for its leading performance in the domain.

Our BAN method introduced a novel Gaussian boundary module (GBM) and Boundary Differentiated Learning (BDL) strategy, specifically designed to enhance the detection of action boundaries in video sequences. This approach demonstrated superior performance on the challenging benchmark dataset THUMOS14 and showed promising results on ActivityNet-1.3, indicating its effectiveness across different contexts.

On the THUMOS14 dataset, the BAN achieved a mean Average Precision (mAP) of 56.4% at an IoU threshold of 0.5, outperforming the AFSD method, which recorded an mAP of 55.5%. This improvement is even more pronounced at higher IoU thresholds. For instance, at an IoU threshold of 0.7, the BAN achieved an mAP of 32.7%, which is a significant increase from the AFSD’s 31.1%. Such results highlight the BAN’s enhanced ability to accurately identify action boundaries, even in more stringent evaluation settings.

For ActivityNet-1.3, as detailed in Table 2, the BAN demonstrates a competitive performance with an mAP of 52.5% at an IoU threshold of 0.5 and shows significant improvement in more challenging conditions, with an mAP of 35.5% at an IoU threshold of 0.75 and an average mAP of 34.6%. These metrics not only affirm the model’s robustness across varied datasets but also underscore its capacity to excel in identifying precise action boundaries amidst diverse and complex video contents.

The advantages of the BAN are attributed to its GBM, which effectively aggregates features around action boundaries, providing a richer and more discriminative representation of boundary features. Moreover, the BAN’s Boundary Differentiated Learning (BDL) strategy facilitates the model in distinguishing between action and non-action segments more effectively, enhancing the overall precision of action localization.

In conclusion, the comparative analysis underscores the BAN’s advancements over existing methods, including the AFSD. By focusing on the intricate detection of action boundaries and employing a refined learning strategy, the BAN sets a new benchmark for temporal action localization, promising significant improvements for real-world applications.

4.5. Ablation Study

We conducted multiple ablation tests on THUMOS14 to demonstrate the impact of key components of the BAN. In this section, we present experiments with the goal of identifying the main elements that contribute to high-quality temporal action detection.

The Effect of the Gaussian Boundary Module. To ascertain the specific contribution of the Gaussian boundary module (GBM) to temporal action localization (TAL), we devised a comprehensive series of ablation experiments. These experiments were designed to evaluate two primary aspects: (1) the impact of varying the range of boundary features on detection accuracy, and (2) the efficacy of different Gaussian boundary pooling methods within the GBM.

The results, detailed in Table 3, illustrate a clear trend: as the boundary feature range narrows (indicated by a decrease in the variance parameter of the Gaussian function), the localization accuracy improves significantly, peaking at the best-performing setting listed in Table 3. Lower values of this parameter indicate a more focused aggregation of features around the action boundaries. The optimal setting underscores the necessity for boundary features to be scope-specific, enabling the precise delineation of action start and end points, as opposed to being broadly defined or moment-specific.
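The role of the variance parameter can be illustrated with a minimal sketch of Gaussian-weighted pooling around a boundary. This is an assumption-laden illustration, not the GBM implementation: the function name `gaussian_boundary_pool`, the normalized weighted average, and the toy one-dimensional features are all hypothetical choices made for the example.

```python
import math


def gaussian_boundary_pool(features, boundary, variance):
    """Gaussian-weighted average of per-frame features around `boundary`.

    features: list of T feature vectors (each a list of floats)
    boundary: boundary position in frame units (may be fractional)
    variance: Gaussian variance; smaller values focus on the boundary
    """
    if variance <= 0:
        raise ValueError("variance must be positive")
    # One weight per frame, decaying with squared distance to the boundary.
    weights = [math.exp(-((t - boundary) ** 2) / (2.0 * variance))
               for t in range(len(features))]
    total = sum(weights)
    dim = len(features[0])
    # Normalized weighted sum, computed channel by channel.
    return [sum(w * f[c] for w, f in zip(weights, features)) / total
            for c in range(dim)]


# Toy input: a single salient frame at t = 5 in an 11-frame sequence.
features = [[1.0] if t == 5 else [0.0] for t in range(11)]
narrow = gaussian_boundary_pool(features, boundary=5, variance=0.25)
wide = gaussian_boundary_pool(features, boundary=5, variance=4.0)
print(narrow[0] > wide[0])  # the narrow window retains more of the boundary frame
```

With a small variance the pooled feature is dominated by frames next to the boundary, while a large variance dilutes it with distant frames, which matches the trend described above.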

Impact of Boundary Differentiated Learning (BDL). Further, to quantify the influence of Boundary Differentiated Learning (BDL) on the model’s performance, we compared the BAN’s effectiveness with and without the implementation of BDL. This comparison revealed that BDL significantly enhances the model’s ability to distinguish between action and non-action segments, thereby improving the overall accuracy of action localization. The integration of BDL into the BAN led to a marked increase in mean Average Precision (mAP) across various IoU thresholds, particularly highlighting its role in refining the model’s predictive capabilities in complex video sequences where action boundaries may be less distinct.

Combined Influence of the GBM and BDL. In addition to evaluating the individual contributions of the GBM and BDL, our study also examined their combined effect on the model’s performance. The full incorporation of both the GBM and BDL into the BAN resulted in the highest observed improvements in localization accuracy, demonstrating the synergistic impact of these components. This combined configuration significantly outperformed other variations, including models with either component omitted, thereby validating the integral role of both the GBM and BDL in enhancing the efficacy of the BAN for TAL.

The findings from these ablation studies are encapsulated in Table 4, which presents a detailed breakdown of performance metrics under various configurations of the BAN model. Through this rigorous analysis, we have established the critical contributions of both the GBM and BDL to the overall performance of the BAN, affirming their value in our proposed framework for temporal action localization.

4.6. Comparison of Inference Speed

In this section, we compare the detection speeds of our model with two other leading methods. The inference speed on the THUMOS14 dataset was assessed in terms of frames per second (fps) across various models, as outlined in Table 5. RGB frames were employed as inputs for all networks to ensure a balanced comparison. Our findings indicate that our model operates significantly faster than most existing methods, with only a marginal difference compared to the benchmark model AFSD. Specifically, our model ran at 3197 fps on a single GTX 1080 Ti (NVIDIA Corporation, Santa Clara, CA, USA) GPU for temporal action localization, rising to 4291 fps on the more advanced RTX 2080 Ti (NVIDIA Corporation, Santa Clara, CA, USA) GPU.
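For readers who wish to reproduce a throughput figure of this kind, a minimal timing sketch is given below. It is an assumption, not the benchmarking script behind Table 5: `measure_fps`, `run_clip`, and the clip sizes are hypothetical names and values, and the stand-in workload is plain Python rather than a network. On a GPU, asynchronous kernel launches would additionally need to be synchronized before each clock reading.

```python
import time


def measure_fps(run_clip, frames_per_clip, num_clips, warmup=2):
    """Estimate throughput (frames per second) of a per-clip callable.

    run_clip: zero-argument callable that processes one clip
    frames_per_clip: number of frames each call consumes
    num_clips: number of timed calls
    warmup: untimed calls that absorb one-off start-up costs
    """
    for _ in range(warmup):
        run_clip()
    start = time.perf_counter()
    for _ in range(num_clips):
        run_clip()
    elapsed = time.perf_counter() - start
    if elapsed <= 0:
        raise RuntimeError("workload too short to time reliably")
    return frames_per_clip * num_clips / elapsed


# Stand-in workload (hypothetical): replace with a real forward pass.
def dummy_forward():
    return sum(i * i for i in range(10_000))


fps = measure_fps(dummy_forward, frames_per_clip=256, num_clips=20)
print(f"{fps:.0f} fps")
```

Reporting total frames over total wall-clock time, after a short warm-up, avoids counting one-off initialization in the measured speed.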

While our model demonstrated a high inference speed, making it suitable for near-real-time applications, we acknowledge the ongoing need to enhance its efficiency for real-time processing in live video streams. Future optimizations may include algorithmic improvements, model simplification, and leveraging edge computing technologies to further reduce latency and computational requirements. These efforts aim to expand the applicability of our model to real-time scenarios, ensuring it can deliver a high performance in a wider range of practical applications.

4.7. Visualization Results

In this section, we compare the visualization results of our method and the state-of-the-art method AFSD [12] on the THUMOS14 test set; the results are shown in Figure 3. They indicate that our proposed method is better able to find action boundaries.

5. Conclusions

In this study, we introduced a novel action localization framework named BAN, which achieves state-of-the-art performance on standard benchmarks. Our method generates precise boundary proposals by aggregating features around the boundary and refines these predictions to make more accurate boundary determinations. We proposed the Gaussian boundary module (GBM) to obtain accurate boundary features, coupled with a novel Boundary Differentiated Learning (BDL) strategy to discern discriminative action information effectively. Extensive experiments conducted on well-known benchmarks, including ActivityNet-1.3 and THUMOS14, underscore the BAN’s advanced capabilities in comparison with existing state-of-the-art methods, including the AFSD. This contribution significantly enriches the community’s toolbox for video understanding, offering a powerful means to tackle the nuanced challenges of action localization with unprecedented precision. Moreover, by setting new benchmarks, the BAN paves the way for future research to build upon our findings, fostering innovation and further exploration in the realm of video analysis.

Acknowledging the limitations of our approach is crucial for setting realistic expectations and guiding future work. While the BAN represents a significant advancement in temporal action localization, its performance is contingent upon the quality of feature representations, and it may encounter challenges in cluttered scenes where actions are less distinct. These limitations highlight the importance of ongoing research efforts to enhance the robustness of the BAN, particularly in complex scenarios. Future directions include exploring advanced techniques for feature extraction and representation, aiming to improve the model’s adaptability and effectiveness across a broader range of video content. Moreover, addressing these challenges will involve developing methods that can more effectively navigate the complexities of video scenes, thereby ensuring the BAN’s relevance and applicability as video analysis challenges evolve.

To further improve performance in complex scenes, we plan to explore the potential of integrating context-aware models, such as scene segmentation or object recognition. These models could provide richer contextual cues for action boundary detection, thereby improving action localization accuracy in visually complex scenes. By introducing this level of context understanding, we anticipate that future iterations of our framework will better handle the subtle distinctions between actions and backgrounds, offering deeper insights for video analysis. Additionally, we aim to incorporate more advanced deep learning techniques, such as attention mechanisms or transformers, to more accurately capture the temporal dynamics of videos, further enhancing our model’s ability to discern and localize actions within complex video sequences. To address the dependence on quality feature representations, we also intend to explore unsupervised or semi-supervised learning methods in future work. These approaches could enhance the model’s ability to learn from unlabeled data, potentially reducing the reliance on extensively annotated datasets and improving the model’s generalization capabilities.

In light of the advancements in temporal action localization (TAL) demonstrated by our model, it is crucial to acknowledge the ethical implications associated with its application. The potential for enhancing understanding and interaction with video content comes with a responsibility to consider privacy concerns and guard against misuse. As TAL technologies become increasingly capable, their application in surveillance, media analysis, and beyond must be approached with a commitment to ethical standards and privacy protection. We advocate for ongoing dialogue and the establishment of robust guidelines to ensure that the benefits of TAL are realized in a manner that respects individual rights and societal values.