Dynamic Client Clustering, Bandwidth Allocation, and Workload Optimization for Semi-Synchronous Federated Learning

Abstract

1. Introduction

- We propose semi-synchronous FL to resolve the drawbacks of synchronous and asynchronous FL.

- We propose dynamic workload optimization in semi-synchronous FL and demonstrate via extensive simulations that it outperforms uniform local training.

- To resolve the challenges in semi-synchronous FL, we formulate an optimization problem and design the Dynamic client clustering, bandwidth allocation, and workload optimization for semi-synchronous Federated learning (DecantFed) algorithm to solve it.

2. Related Work

3. System Models and Problem Formulation

3.1. Computing Latency

3.2. Uploading Latency

3.3. Waiting Time

3.4. Problem Formulation

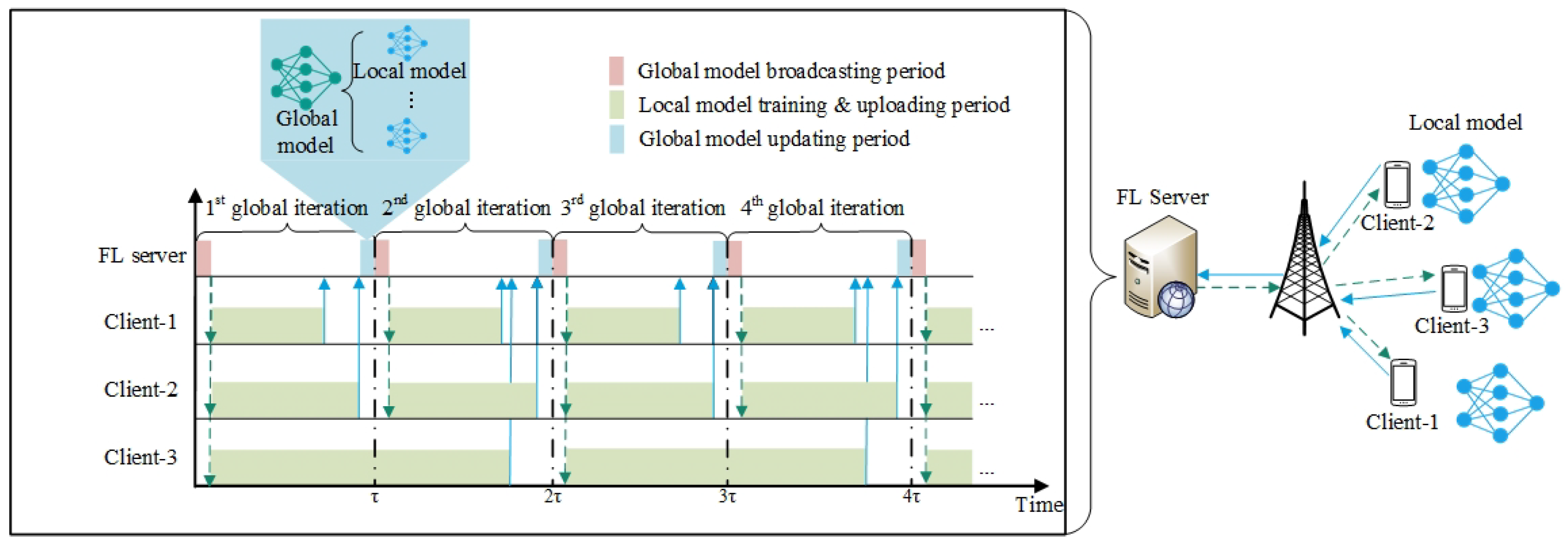

4. The DecantFed Algorithm

4.1. Client Clustering and Bandwidth Allocation

- Each client’s uploading time is determined by its tier. In addition, the bandwidth of a tier is determined by the number of clients in that tier.

- In the j-th iteration, we intend to assign as many clients as possible to tier j while satisfying Constraint (5). Consider the set of clients that have not yet been clustered into any tier. We sort these clients in descending order of their computing latency and initially assume that all of them can be assigned to tier j. We then iteratively check the feasibility of the clients in tier j, starting from the first client in the sorted order, i.e., whether each client can actually be assigned to tier j while meeting Constraint (5). If a client currently in tier j cannot meet Constraint (5), it is removed from tier j. Note that removing a client reduces the bandwidth allocated to tier j in Step 13, so clients that were previously feasible for tier j may no longer meet Constraint (5). As a result, we have to go back and check the feasibility of all the clients in tier j again, starting from the first client, in Step 14.

- Once the client clustering in tier j is finished, we start to assign clients to the next tier by following the same procedure in Steps 5–19. The client clustering ends when all clients have been assigned to a tier.
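The clustering procedure described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' LEAD implementation: the function name `cluster_clients`, the callback `is_feasible` (a stand-in for Constraint (5), whose exact form is not reproduced here), the `max_tiers` guard, and the rule that a tier's bandwidth equals a per-client bandwidth multiplied by the number of clients in the tier are all hypothetical choices made for the example.

```python
from typing import Callable, Dict, List


def cluster_clients(
    compute_latency: Dict[str, float],
    per_client_bandwidth: float,
    is_feasible: Callable[[str, int, float], bool],
    max_tiers: int = 100,
) -> List[List[str]]:
    """Greedily cluster clients into tiers 1, 2, ... (illustrative sketch).

    is_feasible(client, tier, tier_bandwidth) is a hypothetical stand-in
    for Constraint (5). max_tiers is a safety guard added for this sketch.
    """
    unassigned = set(compute_latency)
    tiers: List[List[str]] = []
    tier = 1
    while unassigned and tier <= max_tiers:
        # Sort the unclustered clients in descending order of computing
        # latency (client name breaks ties so the result is deterministic)
        # and tentatively place all of them in the current tier.
        candidates = sorted(unassigned, key=lambda c: (-compute_latency[c], c))
        idx = 0
        while idx < len(candidates):
            # Assumption: tier bandwidth grows with the number of clients.
            tier_bandwidth = per_client_bandwidth * len(candidates)
            if is_feasible(candidates[idx], tier, tier_bandwidth):
                idx += 1
            else:
                # Removing a client shrinks the tier bandwidth, so every
                # remaining client must be re-checked from the first one.
                del candidates[idx]
                idx = 0
        tiers.append(candidates)  # may be empty if no client fits this tier
        unassigned -= set(candidates)
        tier += 1
    return tiers
```

Restarting the scan after each removal mirrors the "go back and check again" step in the text; it costs quadratic time in the number of candidates per tier in the worst case, which is acceptable for a sketch but may matter for large client populations.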

Algorithm 1: LEAD algorithm

4.2. Dynamic Workload Optimization

4.3. Summary of DecantFed

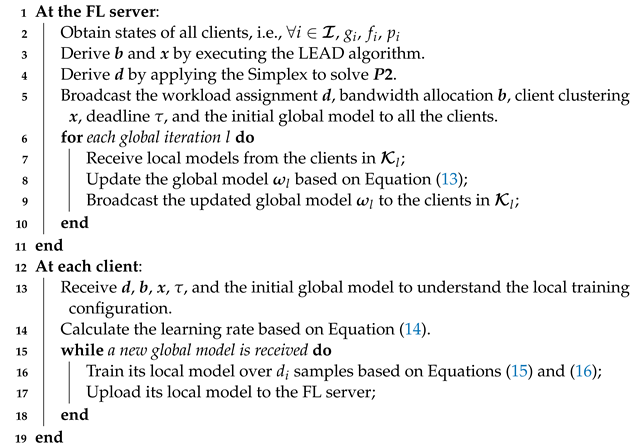

Algorithm 2: DecantFed algorithm

- Dynamic learning rate. Similar to asynchronous FL, the model staleness problem may also exist in DecantFed, although it is mitigated. Staleness arises in DecantFed because clients in high tiers may train their local models based on outdated global models. To further mitigate the problem, we adopt the method in [31] to set different learning rates for the clients in different tiers (Equation (14)). The learning rate of the clients in tier j is determined by the learning rate of the clients in tier 1 and a hyperparameter that adjusts how the learning rate changes among tiers; both are given before the FL training starts. Basically, Equation (14) indicates that clients in a higher tier have a larger learning rate and thus update their local models more aggressively, so that they can catch up with the model update speed of the clients in the lower tiers. Equation (14) also ensures that the learning rate is less than 0.1 to prevent the clients in higher tiers from overshooting the optimal model that minimizes the loss function. Once the learning rate is determined, client i updates its local model by gradient descent: in each local iteration, it moves the local model against the gradient of the loss function, scaled by client i’s learning rate, where the loss is evaluated on the input-output pairs of client i’s data samples given the current model parameters. A typical loss function that has been widely applied in image classification is cross-entropy loss.

- Clipping the loss function values. Owing to the non-IID data and dynamic workload optimization, the data samples in a client could be highly uneven. For example, a client may have one thousand images labeled as dogs but only two images labeled as cats. If this client has high computing capability, the FL server may assign it a high workload, i.e., training a machine-learning model to classify dogs and cats on all the images for many epochs, and the local model may then overfit the client’s local dataset. After being trained on numerous dog images, the local model may diverge if a cat image is fed into it and generates an excessive loss value. For example, if the loss function is defined as the cross-entropy loss, then the loss for this cat image is $-\log(p)$, where $p$ is the probability of labeling the image as a cat by the local model; as $p$ approaches 0, the loss approaches infinity. Infinite loss values lead to large backpropagation gradients, which can subsequently turn both weights and biases into ‘NaN’. Although regularization methods can reduce the variance of model updates, especially in IID scenarios, they cannot resolve the infinite loss issue that normally arises when the data distribution is highly non-IID. In such cases, loss clipping serves as an effective and computationally efficient way to constrain model updates: the loss value is clipped into a reasonable range, i.e., capped at a hyperparameter that defines the maximum loss function value.
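The two mechanisms above can be illustrated with a short Python sketch. It is a hedged example rather than the paper's exact formulation: the geometric growth rule in `tier_learning_rate` is an assumed form that merely satisfies the stated properties (larger rates in higher tiers, bounded by 0.1) and is not claimed to be Equation (14), and the names `tier_learning_rate`, `clipped_cross_entropy`, `sgd_step`, `growth`, and `max_loss` are hypothetical.

```python
import math
from typing import List


def tier_learning_rate(base_rate: float, growth: float, tier: int,
                       cap: float = 0.1) -> float:
    """Learning rate for a tier (assumed form, not the paper's Equation (14)).

    base_rate is the tier-1 learning rate; growth > 1 makes higher tiers
    more aggressive; cap keeps the rate from exceeding 0.1.
    """
    return min(base_rate * growth ** (tier - 1), cap)


def clipped_cross_entropy(prob_true_label: float, max_loss: float) -> float:
    """Cross-entropy -log(p) for one sample, capped at max_loss."""
    if prob_true_label <= 0.0:
        # -log(0) is infinite, so return the cap directly.
        return max_loss
    return min(-math.log(prob_true_label), max_loss)


def sgd_step(weights: List[float], grads: List[float], lr: float) -> List[float]:
    """One plain gradient-descent update: w <- w - lr * grad."""
    return [w - lr * g for w, g in zip(weights, grads)]
```

For instance, with a tier-1 rate of 0.01 and `growth = 2.0`, tier 3 would use 0.04 and tier 10 would be held at the 0.1 cap. Note that when the cap is applied through a `min` in an automatic-differentiation framework, a clipped sample contributes zero gradient, which is one way clipping keeps a rare-class sample from destabilizing the update.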

5. Simulations

5.1. Simulation Setup

5.1.1. Non-IID Dataset

5.1.2. Global Model Design

5.1.3. Comparison Methods

5.2. Simulation Results

5.2.1. Performance Comparison Among Different FL Algorithms

5.2.2. Performance of DecantFed by Varying

5.2.3. Performance of DecantFed by Optimizing the Workload Among Clients

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Ansari, N.; Sun, X. Mobile Edge Computing Empowers Internet of Things. IEICE Trans. Commun. 2018, E101.B, 604–619.

2. Sun, X.; Ansari, N. EdgeIoT: Mobile Edge Computing for the Internet of Things. IEEE Commun. Mag. 2016, 54, 22–29.

3. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-Efficient Learning of Deep Networks from Decentralized Data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; Volume 54, pp. 1273–1282.

4. Kalloori, S.; Srivastava, A. Towards cross-silo federated learning for corporate organizations. Knowl.-Based Syst. 2024, 289, 111501.

5. Amini, M.R.; Feofanov, V.; Pauletto, L.; Hadjadj, L.; Devijver, E.; Maximov, Y. Self-training: A survey. arXiv 2022, arXiv:2202.12040.

6. Zhang, X.; Huang, X.; Li, J. Joint Self-Training and Rebalanced Consistency Learning for Semi-Supervised Change Detection. IEEE Trans. Geosci. Remote. Sens. 2023, 61, 5406613.

7. Guo, J.; Liu, Z.; Chen, C.L.P. An Incremental-Self-Training-Guided Semi-Supervised Broad Learning System. IEEE Trans. Neural Netw. Learn. Syst. 2024; early access.

8. Sohn, K.; Berthelot, D.; Carlini, N.; Zhang, Z.; Zhang, H.; Raffel, C.A.; Cubuk, E.D.; Kurakin, A.; Li, C.L. Fixmatch: Simplifying semi-supervised learning with consistency and confidence. Adv. Neural Inf. Process. Syst. 2020, 33, 596–608.

9. Fu, X.; Shi, S.; Wang, Y.; Lin, Y.; Gui, G.; Dobre, O.A.; Mao, S. Semi-Supervised Specific Emitter Identification via Dual Consistency Regularization. IEEE Internet Things J. 2023, 10, 19257–19269.

10. Wu, Z.; He, T.; Xia, X.; Yu, J.; Shen, X.; Liu, T. Conditional Consistency Regularization for Semi-Supervised Multi-Label Image Classification. IEEE Trans. Multimed. 2024, 26, 4206–4216.

11. Vu, T.T.; Ngo, D.T.; Ngo, H.Q.; Dao, M.N.; Tran, N.H.; Middleton, R.H. Straggler Effect Mitigation for Federated Learning in Cell-Free Massive MIMO. In Proceedings of the ICC 2021—IEEE International Conference on Communications, Montreal, QC, Canada, 14–23 June 2021; pp. 1–6.

12. Albelaihi, R.; Sun, X.; Craft, W.D.; Yu, L.; Wang, C. Adaptive Participant Selection in Heterogeneous Federated Learning. In Proceedings of the 2021 IEEE Global Communications Conference (GLOBECOM), Madrid, Spain, 7–11 December 2021; pp. 1–6.

13. Wang, J.; Joshi, G. Cooperative SGD: A unified framework for the design and analysis of communication-efficient SGD algorithms. arXiv 2018, arXiv:1808.07576.

14. Nishio, T.; Yonetani, R. Client Selection for Federated Learning with Heterogeneous Resources in Mobile Edge. In Proceedings of the ICC 2019–2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–7.

15. Xu, C.; Qu, Y.; Xiang, Y.; Gao, L. Asynchronous federated learning on heterogeneous devices: A survey. arXiv 2021, arXiv:2109.04269.

16. Damaskinos, G.; Guerraoui, R.; Kermarrec, A.M.; Nitu, V.; Patra, R.; Taiani, F. FLeet: Online Federated Learning via Staleness Awareness and Performance Prediction. In Proceedings of the 21st International Middleware Conference, New York, NY, USA, 7–11 December 2020; pp. 163–177.

17. Rodio, A.; Neglia, G. FedStale: Leveraging stale client updates in federated learning. arXiv 2024, arXiv:2405.04171.

18. Gao, Z.; Duan, Y.; Yang, Y.; Rui, L.; Zhao, C. FedSeC: A Robust Differential Private Federated Learning Framework in Heterogeneous Networks. In Proceedings of the 2022 IEEE Wireless Communications and Networking Conference (WCNC), Austin, TX, USA, 10–13 April 2022; pp. 1868–1873.

19. Albaseer, A.; Abdallah, M.; Al-Fuqaha, A.; Erbad, A. Data-Driven Participant Selection and Bandwidth Allocation for Heterogeneous Federated Edge Learning. IEEE Trans. Syst. Man, Cybern. Syst. 2023, 53, 5848–5860.

20. Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated Optimization in Heterogeneous Networks. In Proceedings of the Machine Learning and Systems, Austin, TX, USA, 2–4 March 2020; Volume 2, pp. 429–450.

21. Lee, J.; Ko, H.; Pack, S. Adaptive Deadline Determination for Mobile Device Selection in Federated Learning. IEEE Trans. Veh. Technol. 2022, 71, 3367–3371.

22. Bonawitz, K.; Eichner, H.; Grieskamp, W.; Huba, D.; Ingerman, A.; Ivanov, V.; Kiddon, C.; Konečný, J.; Mazzocchi, S.; McMahan, H.B.; et al. Towards Federated Learning at Scale: System Design. arXiv 2019, arXiv:1902.01046.

23. Albelaihi, R.; Yu, L.; Craft, W.D.; Sun, X.; Wang, C.; Gazda, R. Green Federated Learning via Energy-Aware Client Selection. In Proceedings of the GLOBECOM 2022–2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022; pp. 13–18.

24. Yu, L.; Albelaihi, R.; Sun, X.; Ansari, N.; Devetsikiotis, M. Jointly Optimizing Client Selection and Resource Management in Wireless Federated Learning for Internet of Things. IEEE Internet Things J. 2021, 9, 4385–4395.

25. Xu, J.; Wang, H. Client Selection and Bandwidth Allocation in Wireless Federated Learning Networks: A Long-Term Perspective. IEEE Trans. Wirel. Commun. 2021, 20, 1188–1200.

26. Nguyen, J.; Malik, K.; Zhan, H.; Yousefpour, A.; Rabbat, M.; Malek, M.; Huba, D. Federated Learning with Buffered Asynchronous Aggregation. In Proceedings of the 25th International Conference on Artificial Intelligence and Statistics, Virtual, 28–30 March 2022; Volume 151, pp. 3581–3607.

27. Wang, Z.; Zhang, Z.; Tian, Y.; Yang, Q.; Shan, H.; Wang, W.; Quek, T.Q.S. Asynchronous Federated Learning over Wireless Communication Networks. IEEE Trans. Wirel. Commun. 2022, 21, 6961–6978.

28. Jiang, J.; Cui, B.; Zhang, C.; Yu, L. Heterogeneity-Aware Distributed Parameter Servers. In Proceedings of the 2017 ACM International Conference on Management of Data, New York, NY, USA, 14–19 May 2017; SIGMOD ’17. pp. 463–478.

29. Zhang, W.; Gupta, S.; Lian, X.; Liu, J. Staleness-Aware Async-SGD for Distributed Deep Learning. In Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence, New York, NY, USA, 9–15 July 2016; AAAI Press: Washington, DC, USA, 2016. IJCAI’16. pp. 2350–2356.

30. Xie, C.; Koyejo, S.; Gupta, I. Asynchronous federated optimization. arXiv 2019, arXiv:1903.03934.

31. Chen, Y.; Ning, Y.; Slawski, M.; Rangwala, H. Asynchronous Online Federated Learning for Edge Devices with Non-IID Data. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 15–24.

32. Briggs, C.; Fan, Z.; Andras, P. Federated learning with hierarchical clustering of local updates to improve training on non-IID data. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–9.

33. Kim, Y.; Hakim, E.A.; Haraldson, J.; Eriksson, H.; da Silva, J.M.B.; Fischione, C. Dynamic Clustering in Federated Learning. In Proceedings of the ICC 2021—IEEE International Conference on Communications, Montreal, QC, Canada, 14–23 June 2021; pp. 1–6.

34. Yang, Z.; Chen, M.; Saad, W.; Hong, C.S.; Shikh-Bahaei, M.; Poor, H.V.; Cui, S. Delay Minimization for Federated Learning over Wireless Communication Networks. arXiv 2020, arXiv:2007.03462.

35. Xu, D. Latency Minimization for TDMA-Based Wireless Federated Learning Networks. IEEE Trans. Veh. Technol. 2024, 73, 13974–13979.

36. Hu, Y.; Huang, H.; Yu, N. Device Scheduling for Energy-Efficient Federated Learning over Wireless Network Based on TDMA Mode. In Proceedings of the 2020 International Conference on Wireless Communications and Signal Processing (WCSP), Nanjing, China, 21–23 October 2020; pp. 286–291.

37. Tran, N.H.; Bao, W.; Zomaya, A.; Nguyen, M.N.H.; Hong, C.S. Federated Learning over Wireless Networks: Optimization Model Design and Analysis. In Proceedings of the IEEE INFOCOM 2019—IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 1387–1395.

38. Dantzig, G.B.; Thapa, M.N. Linear Programming; Springer: Berlin/Heidelberg, Germany, 2010.

39. ETSI. Radio Frequency (RF) Requirements for LTE Pico Node B (3GPP TR 36.931 Version 9.0.0 Release 9); Technical Report ETSI TR 136 931 V9.0.0; European Telecommunications Standards Institute: Sophia Antipolis, France, 2011.

40. Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images. 2009. Available online: https://bibbase.org/network/publication/krizhevsky-hinton-learningmultiplelayersoffeaturesfromtinyimages-2009 (accessed on 20 November 2024).

41. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

42. Yu, L.; Sun, X.; Albelaihi, R.; Yi, C. Latency-Aware Semi-Synchronous Client Selection and Model Aggregation for Wireless Federated Learning. Future Internet 2023, 15, 352.

| Parameter | Value |
|---|---|
| Background noise | dBm |
| Bandwidth (b) | 1 MHz |
| Client transmission power (p) | 0.1 watt |
| Size of the local model (s) | 100 kbit |
| Client i CPU frequency | Hz |
| Number of CPU cycles required for training one sample on client i | |
| Number of local samples | Dirichlet distribution |
| Number of local epochs | Various, dynamic local training |
| Local batch size | 10 |

| Methods | Workload | Synchronization | Deadline | Clients |
|---|---|---|---|---|
| DecantFed | dynamic | semi-syn | | all |
| FedProx [20] | dynamic | syn | | few |
| FedAvg [3] | fixed | syn | ∞ | all |

| Deadline (s) | 2.5 | 5 | 10 | 20 | 40 | 80 |
|---|---|---|---|---|---|---|
| Test accuracy (%) | 69.07 | 72.59 | 73.48 | 73.45 | 73.03 | 72.61 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, L.; Sun, X.; Albelaihi, R.; Park, C.; Shao, S. Dynamic Client Clustering, Bandwidth Allocation, and Workload Optimization for Semi-Synchronous Federated Learning. Electronics 2024, 13, 4585. https://doi.org/10.3390/electronics13234585
