An Efficient Framework for Finding Similar Datasets Based on Ontology

Abstract

1. Introduction

- Develop a Concept-Based Dataset Recommendation Algorithm: We aim to design an algorithm that efficiently generates a Concept Matrix and a Dataset Matrix, enabling accurate and fast comparison between the concept vector of a user query and those of the registered datasets. The objective is to rank datasets by relevance to the user’s search query, improving both precision and recall of the search results.

- Reduce Storage Requirements through Matrix Compression: A critical goal is to reduce the large storage footprint of dataset matrices by applying effective matrix compression techniques. The aim is a significant reduction in space usage with minimal degradation in search performance.

- Improve Search Efficiency Using Indexing and Caching Mechanisms: To minimize the time required to process search queries, the proposed system employs indexing and caching strategies. A Bloom filter-based cache is intended to accelerate query responses by avoiding redundant searches and exploiting fast memory access. The objective is to cut both computation time and storage space for frequent queries.

- Incorporate Domain-Specific Ontologies for Semantic Search: To enhance the semantic understanding of user queries, the proposed approach will integrate domain-specific ontologies. This will allow the system to interpret the underlying meaning of the search terms more effectively, leading to improved retrieval of relevant datasets that match the user’s intent.

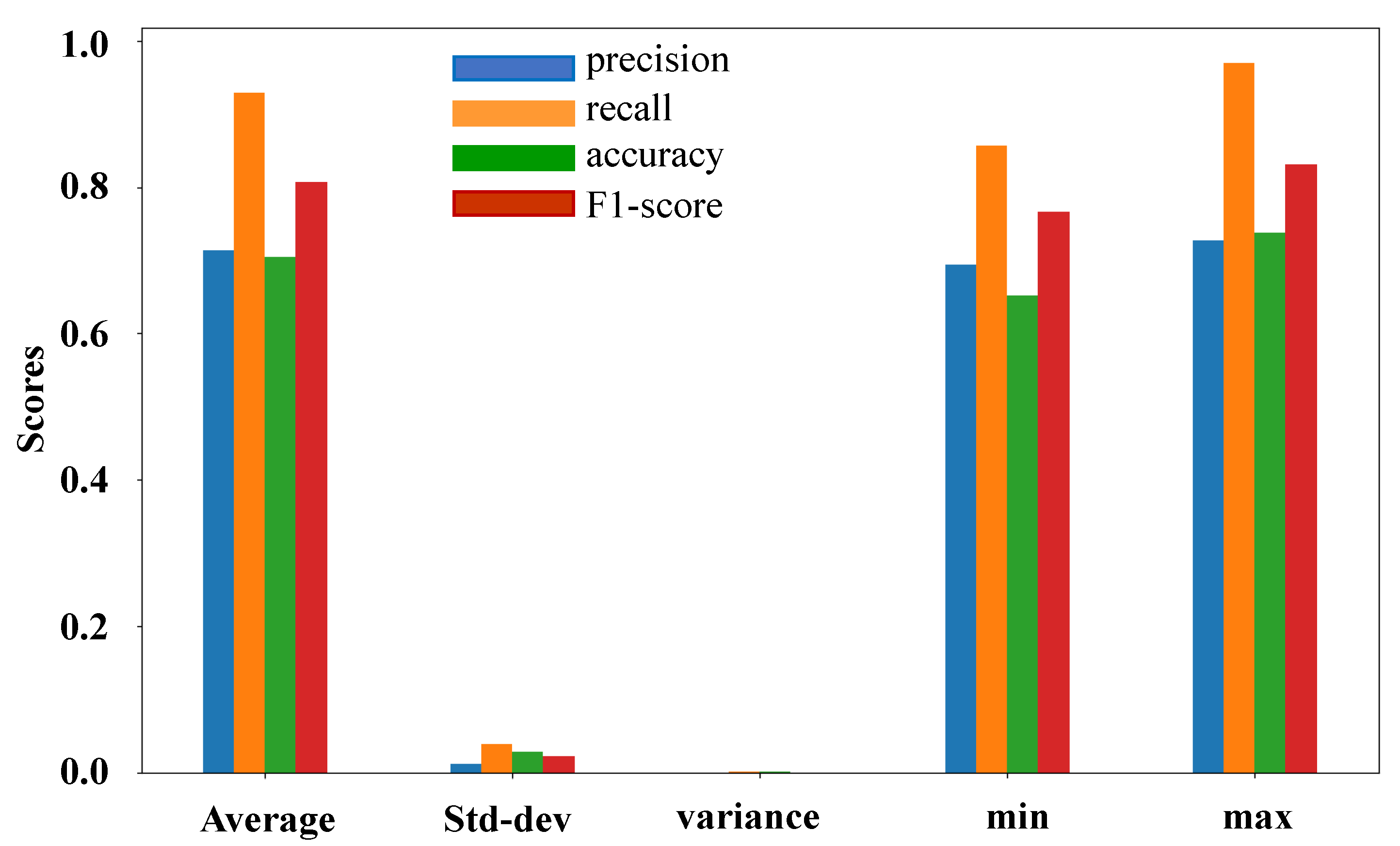

- Compare and Benchmark Performance against Existing Solutions: The final objective is to comprehensively evaluate the performance of the proposed system against existing methods such as OTD and DOS. The comparison will focus on metrics like precision, recall, F1-score, accuracy, and runtime efficiency, with the goal of demonstrating significant improvements in both storage optimization and search efficiency.
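The first objective above, ranking registered datasets against the concept vector of a query, can be illustrated with a minimal sketch. It assumes cosine similarity as the comparison measure and a tiny hand-made Dataset Matrix; the function names, dataset keys, and vector values are all hypothetical and are not taken from the paper.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length concept vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # an all-zero vector matches nothing
    return dot / (norm_u * norm_v)

def rank_datasets(query_vec, dataset_matrix):
    """Return (dataset, score) pairs sorted from most to least similar."""
    scored = [(name, cosine(query_vec, row)) for name, row in dataset_matrix.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical Dataset Matrix: one row of concept weights per dataset.
dataset_matrix = {
    "road-transport-in-europe": [0.9, 0.1, 0.0],
    "bus-breakdown-and-delays": [0.6, 0.0, 0.4],
    "truck-output": [0.2, 0.8, 0.0],
}
query_vec = [1.0, 0.0, 0.0]  # hypothetical query concept vector

ranking = rank_datasets(query_vec, dataset_matrix)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

Any other vector similarity could be substituted for `cosine` without changing the ranking step.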

2. Related Works

2.1. Data Relationship Prediction Techniques

2.2. Recommendation Systems

2.3. Open Data Classification Systems

3. Preliminaries

3.1. Domain Category Graph (DCG)

3.2. Metadata

4. Materials and Methods

4.1. Process for Generating Concept Matrix

Algorithm 1: Path length calculation algorithm to generate CM

4.2. Process for Generating Dataset Matrix

Algorithm 2: DM algorithm

Materialized Dataset Matrix

4.3. Compression

Algorithm 3: Compression algorithm

4.4. Indexing

4.5. Prefetch Documents

4.6. Caching

4.7. Semantic Search

5. Performance Evaluation

5.1. Datasets and Experimental Setups

5.2. Experimental Details

5.3. Comparative Analysis

- Future Proposals

  - Enhanced Dataset Documentation Standards: Building upon the findings regarding the values in dataset documentation from the computer vision study, future work could focus on developing standardized guidelines for documenting datasets across various domains. These guidelines could emphasize the importance of context, positionality, and ethical considerations in data collection, aligning with the call for integrating “silenced values”. This would not only enhance transparency but also improve the quality of datasets used in machine learning models.

  - Ontology-Driven DBMS Selection: The development of an OWL 2 ontology for DBMSs opens opportunities for automated decision-making tools that leverage this ontology to recommend the most suitable database systems for specific use cases. By integrating our results with existing knowledge from DBMS literature, we can develop a user-friendly interface that allows practitioners to input their requirements and receive tailored DBMS suggestions, thus optimizing database management tasks in various applications.

  - Local Embeddings for Custom Data Integration: Our research on local embeddings could be further explored in conjunction with the insights from deep learning applications in data integration. Future work could develop a hybrid framework that combines our graph-based representations with pre-trained embeddings to enhance integration tasks across diverse datasets. This approach would allow organizations to efficiently merge enterprise data while preserving the unique vocabulary and context of their datasets.

- Practical Applications

  - Improving Computer Vision Applications: The insights gained regarding dataset documentation in computer vision could lead to improved practices in creating datasets for applications such as facial recognition, object detection, and autonomous driving. By prioritizing contextuality and care in dataset creation, developers can create more robust models that perform well across varied real-world scenarios, thereby increasing trust and reliability in AI systems.

  - Semantic Web Integration: The ontology developed for DBMSs can serve as a foundation for integrating semantic web technologies into database management practices. Organizations could utilize this ontology to create a semantic layer on top of their existing databases, enhancing data interoperability and allowing for richer queries and analytics that span multiple data sources.

  - Advanced Data Integration Frameworks: The proposed algorithms for local embeddings can be applied to various data integration tasks beyond schema matching and entity resolution. For instance, they can facilitate the integration of heterogeneous data sources in healthcare, finance, and logistics by ensuring that the contextual relationships within data are maintained. This would support better decision-making and operational efficiencies across industries.

- Connecting to Previous Literature: Integrating the findings from the literature discussed above shows how our proposals align with and build upon existing research. For instance, the emphasis on dataset documentation in the computer vision paper resonates with our call for improved standards, while the ontology’s design in the DBMS study complements our vision of enhancing database selection processes. Furthermore, leveraging local embeddings in conjunction with existing deep learning techniques ties back to current trends in machine learning and data integration, showing a cohesive evolution of ideas in the field.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Query_Id | Field | Details |
|---|---|---|
| 1 | URL | :transport-road-transport-in-europe |
| | Title | Transport Road Transport in Europe |
| | Description | Road transport statistics for European countries. This dataset was prepared by Google based on data downloaded from Eurostat. |
| 2 | URL | :transport-exports-by-mode-of-transport-1966 |
| | Title | Transport Exports by Mode of Transport, 1966 |
| | Description | License Rights under which the catalog can be reused are outlined in the Open Government License - Canada Available download formats from providers jpg, pdf Description Contained within the 4th Edition (1974) of the Atlas of Canada is a graph and two maps. |
| 3 | URL | :transport-bus-breakdown-and-delays |
| | Title | Transport Bus Breakdown and Delays |
| | Description | The Bus Breakdown and Delay system collects information from school bus vendors operating out in the field in real time. |
| 4 | URL | :transport-motor-vehicle-output-truck-output |
| | Title | Transport Motor vehicle output: Truck output |
| | Description | Graph and download economic data for motor vehicle output: Truck output (A716RC1A027NBEA) from 1967 to 2018 about output, trucks, vehicles, GDP, and USA. |
| 5 | URL | :trans-national-public-transport-data-repository-nptdr |
| | Title | National Public Transport Data Repository (NPTDR) |
| | Description | The NPTDR database contains a snapshot of every public transport journey in Great Britain for a selected week in October each year. |

| Task | Average Time (s) | Standard Deviation (s) |
|---|---|---|
| Index search | 0.70 | 0.20 |
| Decompression of rows | 0.25 | 0.13 |
| DM row calculation | 0.50 | 0.30 |
| Total time without cache | 1.40 | 0.63 |
| Total time with cache hit | ∼0.02 | |
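The gap between the cached and uncached totals in the table above is what a Bloom filter-based cache is meant to exploit. The following is a minimal sketch of that idea, not the authors' implementation: the class names, the SHA-256-based hashing, the filter size, and the in-memory dictionary standing in for the cache store are all assumptions made for illustration.

```python
import hashlib

class BloomFilter:
    """Fixed-size Bloom filter: no false negatives, occasional false positives."""

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, key):
        # Derive num_hashes bit positions by salting the key with an index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key):
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key):
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(key))

class QueryCache:
    """Consults the Bloom filter first, so unseen queries skip the cache lookup."""

    def __init__(self, search_fn):
        self.search_fn = search_fn   # the slow path: index search, decompression, DM rows
        self.filter = BloomFilter()
        self.store = {}              # stand-in for the real cache store
        self.slow_calls = 0

    def search(self, query):
        # A negative filter answer is definitive, so the store is only probed
        # when the query may have been seen before.
        if self.filter.might_contain(query) and query in self.store:
            return self.store[query]
        self.slow_calls += 1
        result = self.search_fn(query)
        self.filter.add(query)
        self.store[query] = result
        return result

cache = QueryCache(lambda q: f"results for {q}")  # hypothetical slow search
cache.search("transport")
cache.search("transport")  # served from the cache
print(cache.slow_calls)
```

With an in-process dictionary the filter adds little; the design pays off when the cache store is slower to probe (for example on disk or over the network), since the filter then rejects never-seen queries from memory alone.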

| Technical Term | Abbreviation | Description |
|---|---|---|
| Ontology | N/A | A formal representation of a set of concepts within a domain and the relationships between them. |
| Least Common Subsumer | LCS | The lowest node in a taxonomy that is a hypernym of two concepts. |
| Wu and Palmer Similarity | N/A | A method to compute the relatedness of two concepts by considering the depth of the synsets and their least common subsumer. |
| Concept Matrix | CM | A matrix where each element represents the structural similarity between two concepts in an ontology. |
| Taxonomy | N/A | A hierarchical structure of categories or concepts. |
| Semantic Web | N/A | A framework that allows data to be shared and reused across application, enterprise, and community boundaries. |
| Resource Description Framework | RDF | A framework for representing information about resources on the web. |
| Simple Knowledge Organization System | SKOS | A common data model for sharing and linking knowledge organization systems via the web. |
| Hypernym | N/A | A word with a broad meaning that more specific words fall under; for example, “vehicle” is a hypernym of “car”. |
| Structural Similarity | N/A | A measure of how similar two concepts are based on the structure of the ontology. |
| Information Content | N/A | The amount of information a concept contains, often used to calculate similarities in ontologies. |
| Synset | N/A | A set of one or more synonyms that are interchangeable in some context. |
| Path Length | N/A | The shortest distance between two concepts in an ontology, often measured in the number of edges. |
| Hierarchical Structure | N/A | A system of elements ranked one above another, typically seen in ontologies. |
| Similarity Score | N/A | A numerical value representing the similarity between two concepts. |
| Graph-based Representation | N/A | A way to model data where entities are nodes, and relationships are edges in a graph. |
| Ontology Matching | N/A | The process of finding correspondences between semantically related entities in different ontologies. |
| Least Number of Edges | N/A | The minimum number of edges between two nodes (concepts) in a graph or ontology. |
| Knowledge Base | KB | A database that stores facts and rules about a domain, used for reasoning and inference. |
| Natural Language Processing | NLP | A field of AI that focuses on the interaction between computers and humans using natural language. |
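Several of the terms defined above (least common subsumer, path length, Wu and Palmer similarity, Concept Matrix) fit together in a few lines of code. The sketch below uses a made-up five-concept taxonomy and assumes the common convention that the root has depth 1; the taxonomy, the function names, and the choice of Wu and Palmer similarity as the matrix entry are illustrative assumptions rather than the paper's Algorithm 1.

```python
# Hypothetical taxonomy given as child -> parent links (the root has no parent).
PARENT = {
    "transport": None,
    "road": "transport",
    "rail": "transport",
    "bus": "road",
    "truck": "road",
}

def ancestors(concept):
    """Path from the concept up to the root, starting with the concept itself."""
    path = []
    while concept is not None:
        path.append(concept)
        concept = PARENT[concept]
    return path

def depth(concept):
    """Number of nodes on the path to the root (assumed convention: root = 1)."""
    return len(ancestors(concept))

def lcs(a, b):
    """Least common subsumer: the deepest node that is an ancestor of both."""
    seen = set(ancestors(a))
    for node in ancestors(b):  # walks upward, so the first match is the lowest
        if node in seen:
            return node
    raise ValueError("concepts share no common subsumer")

def path_length(a, b):
    """Number of edges on the shortest path between a and b through their LCS."""
    common = depth(lcs(a, b))
    return (depth(a) - common) + (depth(b) - common)

def wu_palmer(a, b):
    """Wu and Palmer similarity: 2 * depth(LCS) / (depth(a) + depth(b))."""
    return 2 * depth(lcs(a, b)) / (depth(a) + depth(b))

# Concept Matrix: pairwise structural similarity over all concepts.
concepts = sorted(PARENT)
concept_matrix = [[wu_palmer(a, b) for b in concepts] for a in concepts]

print(lcs("bus", "truck"), path_length("bus", "truck"), round(wu_palmer("bus", "truck"), 3))
```

In this toy taxonomy, sibling concepts such as "bus" and "truck" score higher than concepts that only meet at the root, which is the behaviour a structural similarity measure is expected to show.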

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sultana, T.; Qudus, U.; Umair, M.; Hossain, M.D. An Efficient Framework for Finding Similar Datasets Based on Ontology. Electronics 2024, 13, 4417. https://doi.org/10.3390/electronics13224417
