Rethinking the Non-Maximum Suppression Step in 3D Object Detection from a Bird’s-Eye View

Abstract

1. Introduction

- This paper proposes a BEV circular search method (B-Grouping) that uses variable distance thresholds to find all relevant prediction boxes that need to be processed.

- This paper introduces a BEV IoU computation method (B-IoU) based on relative position and absolute spatial information. B-IoU can compute IoU values between non-overlapping boxes without requiring scaling factors.

- This paper proposes BEV-NMS, a strategy built on B-Grouping and B-IoU for post-processing prediction boxes in the BEV. Experiments on the nuScenes dataset show that applying BEV-NMS improves multiple detection metrics across several detection algorithms.
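The B-Grouping idea of a circular search with variable distance thresholds can be pictured with a short sketch. This is not the authors' implementation: it assumes each prediction is a BEV box `(x, y, length, width, score)`, and it assumes the "variable distance threshold" is a per-box search radius that switches between a hypothetical `small_radius` and `large_radius` depending on the box footprint (the `size_split` cut-off is likewise invented for illustration). Boxes are visited in descending score order, and each unassigned box collects every unassigned box whose center falls inside its search circle.

```python
import math
from typing import List, Tuple

# Hypothetical BEV prediction: (x_center, y_center, length, width, score).
Box = Tuple[float, float, float, float, float]


def search_radius(box: Box, small_radius: float, large_radius: float,
                  size_split: float = 4.0) -> float:
    """Pick a per-box search radius (illustrative rule, not from the paper).

    Boxes whose longer BEV side exceeds `size_split` use `large_radius`;
    all other boxes use `small_radius`.
    """
    _, _, length, width, _ = box
    return large_radius if max(length, width) > size_split else small_radius


def circular_groups(boxes: List[Box], small_radius: float,
                    large_radius: float) -> List[List[int]]:
    """Greedy circular grouping of BEV boxes by center distance.

    Boxes are visited in descending score order. Each box that is not yet
    assigned seeds a group containing itself and every other unassigned box
    whose center lies within the seed's search radius.
    """
    order = sorted(range(len(boxes)), key=lambda i: boxes[i][4], reverse=True)
    assigned = [False] * len(boxes)
    groups: List[List[int]] = []
    for i in order:
        if assigned[i]:
            continue
        radius = search_radius(boxes[i], small_radius, large_radius)
        group = []
        for j in order:
            if assigned[j]:
                continue
            dist = math.hypot(boxes[j][0] - boxes[i][0], boxes[j][1] - boxes[i][1])
            if dist <= radius:  # the seed itself always qualifies (dist == 0)
                group.append(j)
                assigned[j] = True
        groups.append(group)
    return groups
```

With `small_radius=0.5` and `large_radius=2.0`, two nearby car-sized boxes fall into one group, two nearby pedestrian-sized boxes into another, and an isolated box forms its own group. Letting the radius depend on the box keeps large objects from splitting into several groups while preventing small, closely spaced objects from being merged.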

2. Related Work

2.1. Vision-Based 3D Object Detection

2.2. Various NMS Strategies

3. Method

3.1. BEV-NMS Overview

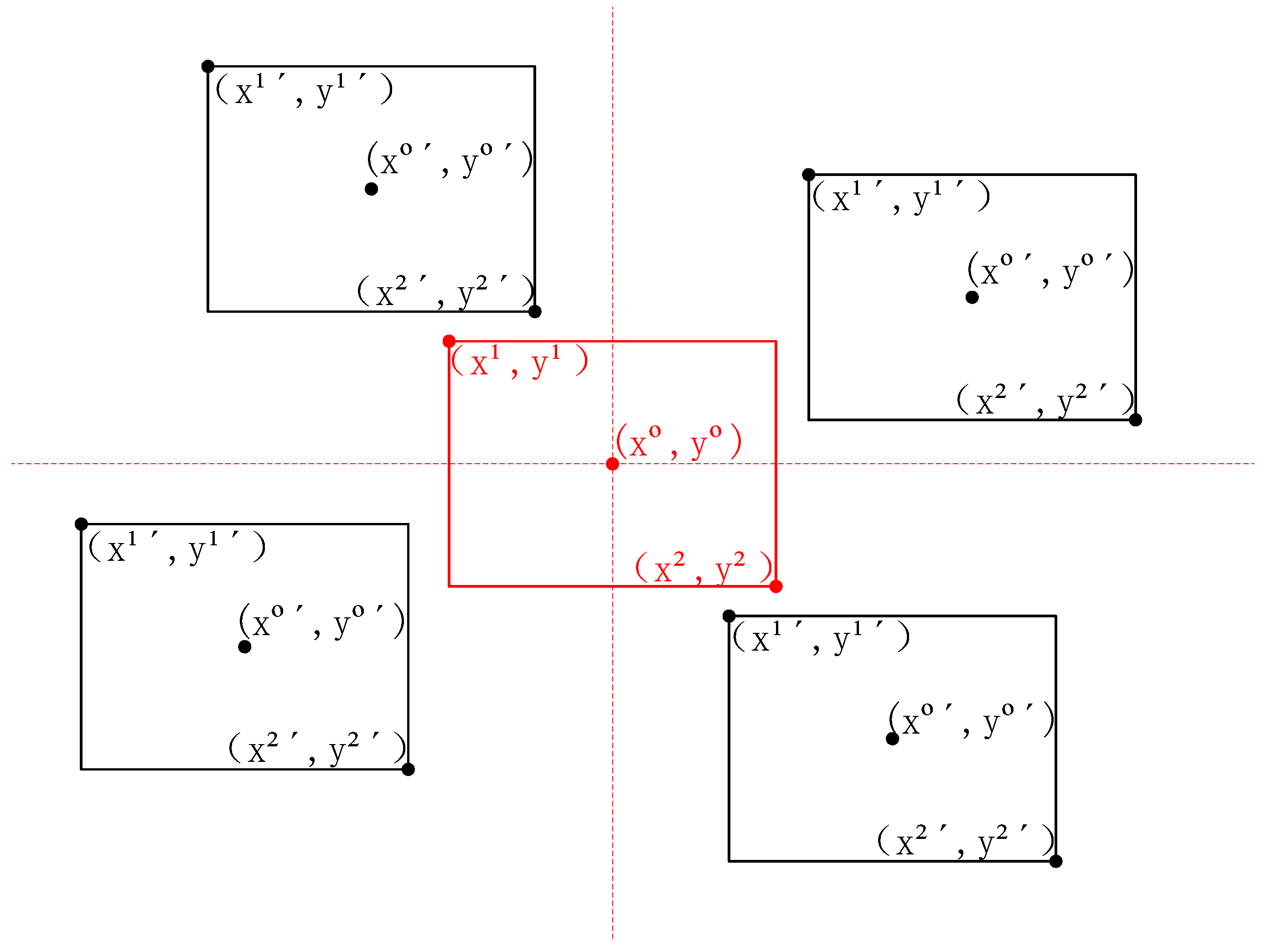

3.2. BEV IoU Computation Method (B-IoU)

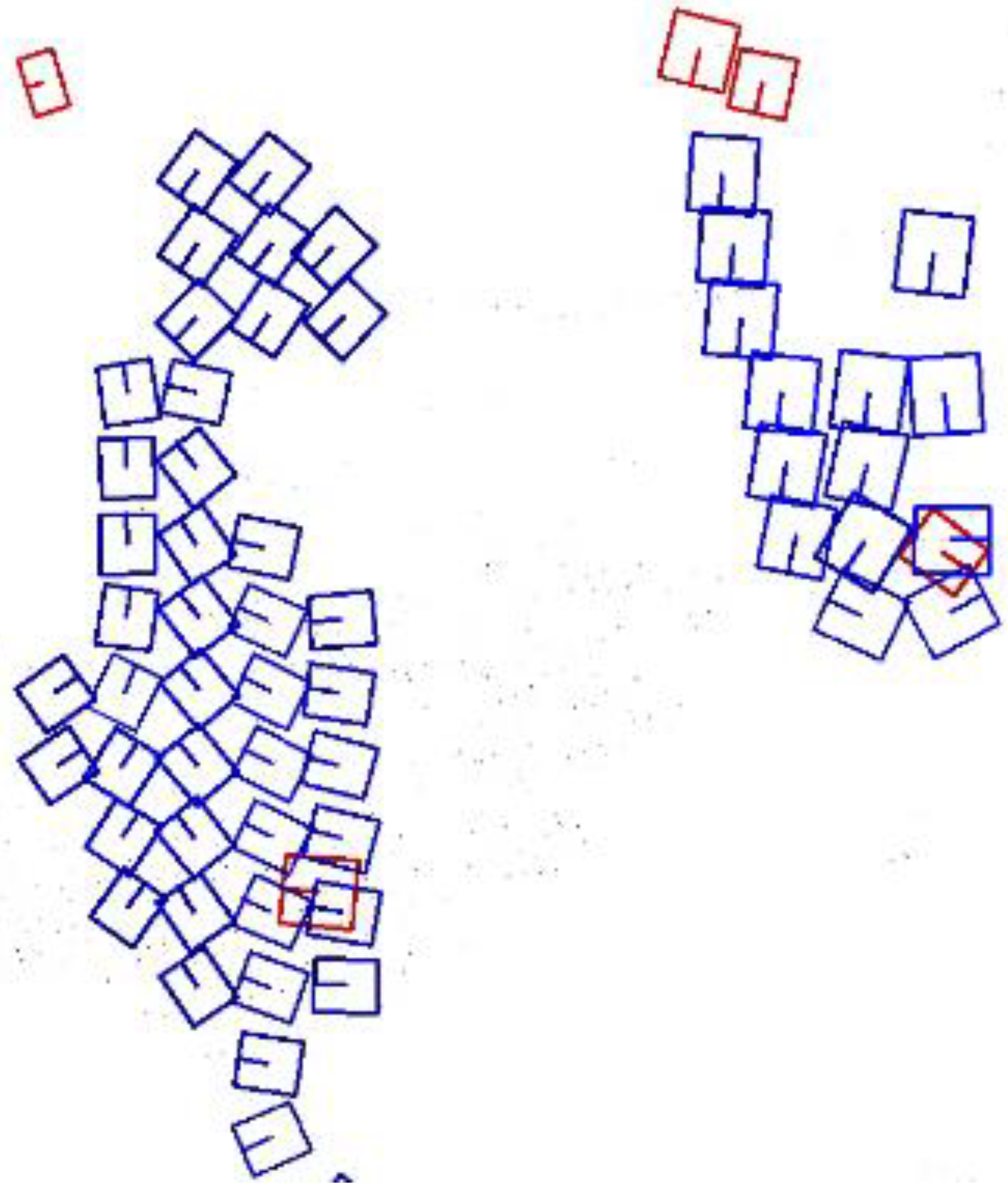
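B-IoU is meant to produce informative values for non-overlapping boxes, where plain IoU is always zero. The B-IoU formula itself is not reproduced in this section, so the sketch below substitutes a different, well-known measure: Distance-IoU (DIoU, Zheng et al., listed in the references), which subtracts the normalized squared center distance from IoU. It assumes axis-aligned BEV boxes `(x, y, length, width)` and ignores yaw. It only illustrates why a distance-aware term can separate boxes that plain IoU cannot; it is not B-IoU.

```python
from typing import Tuple

# Axis-aligned BEV box: (x_center, y_center, length_along_x, width_along_y).
# Assumption for illustration only: yaw is ignored.
BevBox = Tuple[float, float, float, float]


def _edges(box: BevBox) -> Tuple[float, float, float, float]:
    """Return (x_min, x_max, y_min, y_max) of an axis-aligned BEV box."""
    x, y, length, width = box
    return x - length / 2, x + length / 2, y - width / 2, y + width / 2


def bev_iou_and_diou(a: BevBox, b: BevBox) -> Tuple[float, float]:
    """Return (IoU, DIoU) for two axis-aligned BEV boxes.

    DIoU = IoU - rho^2 / c^2, where rho is the distance between the box
    centers and c is the diagonal of the smallest box enclosing both.
    """
    ax1, ax2, ay1, ay2 = _edges(a)
    bx1, bx2, by1, by2 = _edges(b)
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    iou = inter / union
    # Squared diagonal of the smallest axis-aligned box enclosing both boxes.
    c2 = (max(ax2, bx2) - min(ax1, bx1)) ** 2 + (max(ay2, by2) - min(ay1, by1)) ** 2
    # Squared distance between the two box centers.
    rho2 = (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2
    return iou, iou - rho2 / c2
```

For two 2-by-2 boxes whose centers are 3 and 5 units apart along x, plain IoU is 0 in both cases, while DIoU is -9/29 (about -0.31) and -25/53 (about -0.47), so the farther pair is ranked as less related.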

4. BEV Circular Search Method (B-Grouping)

5. BEV-NMS Strategy

Algorithm 1: Improved NMS with Rough Distance Filtering.
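Only the caption of Algorithm 1 survives here. As a hedged illustration of the general idea its title names, the sketch below runs standard greedy NMS but skips the IoU computation for any pair whose BEV center distance exceeds a rough threshold. This is an assumption about what "rough distance filtering" means, not a transcription of Algorithm 1; the box format `(x, y, length, width)`, the axis-aligned IoU, and the parameters `iou_thresh` and `dist_thresh` are illustrative choices.

```python
import math
from typing import List, Sequence, Tuple

# Illustrative axis-aligned BEV box: (x_center, y_center, length, width).
BevBox = Tuple[float, float, float, float]


def bev_iou(a: BevBox, b: BevBox) -> float:
    """Axis-aligned IoU in the BEV plane (yaw ignored for simplicity)."""
    inter_w = max(0.0, min(a[0] + a[2] / 2, b[0] + b[2] / 2)
                  - max(a[0] - a[2] / 2, b[0] - b[2] / 2))
    inter_h = max(0.0, min(a[1] + a[3] / 2, b[1] + b[3] / 2)
                  - max(a[1] - a[3] / 2, b[1] - b[3] / 2))
    inter = inter_w * inter_h
    return inter / (a[2] * a[3] + b[2] * b[3] - inter)


def distance_filtered_nms(boxes: Sequence[BevBox], scores: Sequence[float],
                          iou_thresh: float = 0.5,
                          dist_thresh: float = 2.0) -> List[int]:
    """Greedy NMS that skips IoU checks for pairs with far-apart centers.

    Returns the indices of kept boxes in descending score order.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    suppressed = [False] * len(boxes)
    keep: List[int] = []
    for pos, i in enumerate(order):
        if suppressed[i]:
            continue
        keep.append(i)
        for j in order[pos + 1:]:
            if suppressed[j]:
                continue
            # Rough distance filter: centers this far apart are not compared.
            if math.hypot(boxes[i][0] - boxes[j][0],
                          boxes[i][1] - boxes[j][1]) > dist_thresh:
                continue
            if bev_iou(boxes[i], boxes[j]) > iou_thresh:
                suppressed[j] = True
    return keep
```

The filter leaves the result unchanged as long as `dist_thresh` is at least half the sum of the BEV diagonals of any two boxes being compared, because boxes whose centers are farther apart than that cannot overlap. Smaller thresholds trade a few missed suppressions of weakly overlapping boxes for fewer IoU evaluations.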

6. Experiments

6.1. Dataset

6.2. Evaluation Metrics

6.3. Implementation Details

6.4. Main Results

6.5. Ablation Study

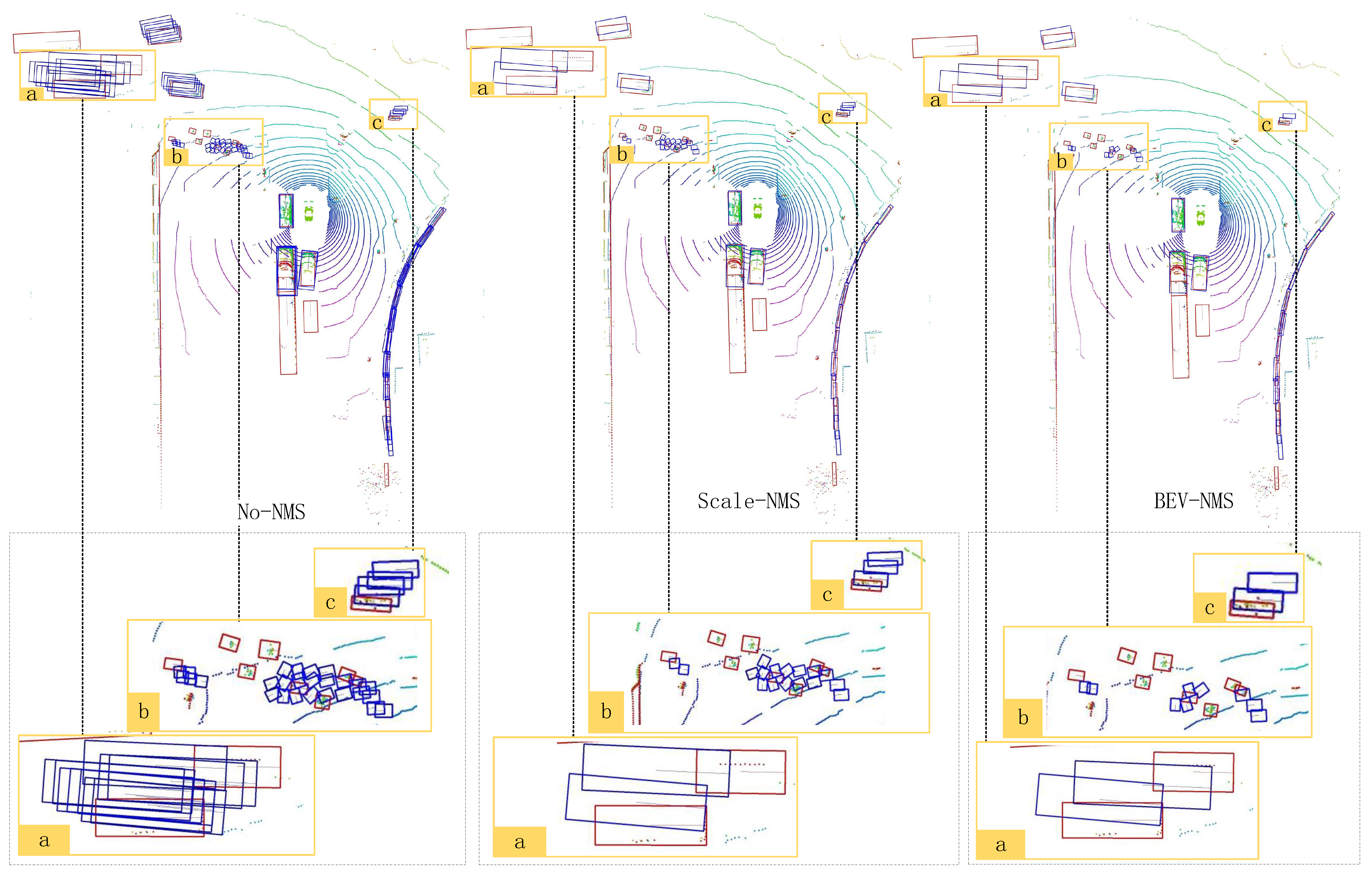

6.6. Qualitative Results and Analysis

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Chen, Z.; Li, Z.; Zhang, S.; Fang, L.; Jiang, Q.; Zhao, F.; Zhou, B.; Zhao, H. AutoAlign: Pixel-instance feature aggregation for multi-modal 3D object detection. arXiv 2022, arXiv:2201.06493.

- Wang, Y.; Mao, Q.; Zhu, H.; Deng, J.; Zhang, Y.; Ji, J.; Li, H.; Zhang, Y. Multi-modal 3D object detection in autonomous driving: A survey. Int. J. Comput. Vis. 2023, 131, 2122–2152.

- Antonello, M.; Carraro, M.; Pierobon, M.; Menegatti, E. Fast and robust detection of fallen people from a mobile robot. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 4159–4166.

- Wohlgenannt, I.; Simons, A.; Stieglitz, S. Virtual reality. Bus. Inf. Syst. Eng. 2020, 62, 455–461.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. PV-RCNN: Point-voxel feature set abstraction for 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10529–10538.

- Deng, J.; Shi, S.; Li, P.; Zhou, W.; Zhang, Y.; Li, H. Voxel R-CNN: Towards high performance voxel-based 3D object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 1201–1209.

- Shi, S.; Wang, X.; Li, H. PointRCNN: 3D object proposal generation and detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 770–779.

- He, C.; Li, R.; Li, S.; Zhang, L. Voxel set transformer: A set-to-set approach to 3D object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8417–8427.

- Huang, J.; Huang, G.; Zhu, Z.; Ye, Y.; Du, D. BEVDet: High-performance multi-camera 3D object detection in bird-eye-view. arXiv 2021, arXiv:2112.11790.

- Wang, T.; Zhu, X.; Pang, J.; Lin, D. FCOS3D: Fully convolutional one-stage monocular 3D object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 913–922.

- Wang, Y.; Guizilini, V.C.; Zhang, T.; Wang, Y.; Zhao, H.; Solomon, J. DETR3D: 3D object detection from multi-view images via 3D-to-2D queries. In Proceedings of the Conference on Robot Learning, PMLR, Auckland, New Zealand, 14–18 December 2022; pp. 180–191.

- Huang, J.; Huang, G. BEVDet4D: Exploit temporal cues in multi-camera 3D object detection. arXiv 2022, arXiv:2203.17054.

- Li, Z.; Wang, W.; Li, H.; Xie, E.; Sima, C.; Lu, T.; Qiao, Y.; Dai, J. BEVFormer: Learning bird’s-eye-view representation from multi-camera images via spatiotemporal transformers. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 1–18.

- Liu, Y.; Wang, T.; Zhang, X.; Sun, J. PETR: Position embedding transformation for multi-view 3D object detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 531–548.

- Park, D.; Ambrus, R.; Guizilini, V.; Li, J.; Gaidon, A. Is pseudo-lidar needed for monocular 3D object detection? In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 3142–3152.

- Wang, T.; Pang, J.; Lin, D. Monocular 3D object detection with depth from motion. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 386–403.

- Philion, J.; Fidler, S. Lift, splat, shoot: Encoding images from arbitrary camera rigs by implicitly unprojecting to 3D. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part XIV; Springer: Cham, Switzerland, 2020; pp. 194–210.

- Li, Y.; Huang, B.; Chen, Z.; Cui, Y.; Liang, F.; Shen, M.; Liu, F.; Xie, E.; Sheng, L.; Ouyang, W.; et al. Fast-BEV: A fast and strong bird’s-eye view perception baseline. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 1–14.

- Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS: Improving object detection with one line of code. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5561–5569.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000.

- Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing geometric factors in model learning and inference for object detection and instance segmentation. IEEE Trans. Cybern. 2021, 52, 8574–8586.

- He, Y.; Zhu, C.; Wang, J.; Savvides, M.; Zhang, X. Bounding box regression with uncertainty for accurate object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2888–2897.

- Liu, S.; Huang, D.; Wang, Y. Adaptive NMS: Refining pedestrian detection in a crowd. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6459–6468.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022.

- Yin, T.; Zhou, X.; Krahenbuhl, P. Center-based 3D object detection and tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11784–11793.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11621–11631.

- Yu, H.; Luo, Y.; Shu, M.; et al. DAIR-V2X: A large-scale dataset for vehicle-infrastructure cooperative 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 21361–21370.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

| NMS Type | mAP | NDS | mATE | mASE | mAOE | mAVE | mAAE |
|---|---|---|---|---|---|---|---|
| None | 0.237 | 0.352 | 0.760 | 0.281 | 0.602 | 0.781 | 0.226 |
| Scale-NMS | 0.308 | 0.394 | 0.715 | 0.280 | 0.597 | 0.778 | 0.230 |
| BEV-NMS | 0.336 | 0.433 | 0.608 | 0.285 | 0.531 | 0.732 | 0.195 |
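The tables report mAP, NDS, and the five nuScenes true-positive (TP) error metrics (mATE, mASE, mAOE, mAVE, mAAE). The nuScenes benchmark defines NDS as NDS = (5 * mAP + sum over the five TP errors of (1 - min(1, error))) / 10, so lower TP errors raise NDS and errors above 1 are clipped. The helper below (its name is arbitrary) applies that definition to rounded table entries, so small rounding differences from a reported NDS are to be expected.

```python
from typing import Sequence


def nuscenes_nds(mean_ap: float, tp_errors: Sequence[float]) -> float:
    """nuScenes Detection Score from mAP and the five mean TP errors.

    NDS = (5 * mAP + sum(1 - min(1, err) for each TP error)) / 10,
    where the TP errors are mATE, mASE, mAOE, mAVE, and mAAE.
    """
    if len(tp_errors) != 5:
        raise ValueError("expected five TP errors (mATE, mASE, mAOE, mAVE, mAAE)")
    # Each TP error contributes 1 - err, clipped so errors above 1 add nothing.
    tp_score = sum(1.0 - min(1.0, err) for err in tp_errors)
    return (5.0 * mean_ap + tp_score) / 10.0
```

For the BEV-NMS row of the table above (mAP 0.336; TP errors 0.608, 0.285, 0.531, 0.732, 0.195), this gives 0.4329, which rounds to the reported 0.433.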

| NMS Type | mAP | NDS | mATE | mASE | mAOE | mAVE | mAAE |
|---|---|---|---|---|---|---|---|
| None | 0.403 | 0.492 | 0.636 | 0.337 | 0.357 | 0.427 | 0.339 |
| Scale-NMS | 0.421 | 0.545 | 0.579 | 0.258 | 0.329 | 0.301 | 0.191 |
| BEV-NMS | 0.462 | 0.569 | 0.513 | 0.260 | 0.332 | 0.312 | 0.189 |

| Model | mAP | NDS | mATE | mASE | mAOE | mAVE | mAAE | FPS |
|---|---|---|---|---|---|---|---|---|
| FCOS3D | 0.343 | 0.415 | 0.725 | 0.263 | 0.422 | 1.292 | 0.153 | 1.7 |
| DD3D | 0.418 | 0.477 | 0.572 | 0.249 | 0.368 | 1.041 | 0.124 | - |
| DETR3D | 0.412 | 0.479 | 0.641 | 0.255 | 0.394 | 0.845 | 0.133 | 2.0 |
| BEVFormer | 0.445 | 0.535 | 0.631 | 0.257 | 0.405 | 0.435 | 0.143 | 1.7 |
| BEVDet | 0.397 | 0.477 | 0.595 | 0.257 | 0.355 | 0.818 | 0.188 | 1.9 |
| BEVDet4D | 0.421 | 0.545 | 0.579 | 0.258 | 0.329 | 0.301 | 0.191 | 1.9 |
| PETR | 0.421 | 0.524 | 0.681 | 0.267 | 0.357 | 0.377 | 0.186 | 3.4 |
| BEVDepth | 0.412 | 0.535 | 0.565 | 0.266 | 0.358 | 0.331 | 0.190 | - |
| FAST-BEV | 0.413 | 0.535 | 0.584 | 0.279 | 0.311 | 0.329 | 0.206 | 19.7 |
| Ours | 0.462 | 0.569 | 0.513 | 0.260 | 0.332 | 0.312 | 0.189 | 1.1 |

| Method | Car Easy | Car Mid | Car Hard | Ped. Easy | Ped. Mid | Ped. Hard | Cyc. Easy | Cyc. Mid | Cyc. Hard |
|---|---|---|---|---|---|---|---|---|---|
| BEVDet | 58.23 | 48.05 | 47.94 | 13.27 | 12.89 | 12.71 | 19.37 | 18.89 | 18.61 |
| BEVDet4D | 59.63 | 51.97 | 49.06 | 15.28 | 14.54 | 14.32 | 20.59 | 20.57 | 20.36 |
| BEVFormer | 61.37 | 50.73 | 50.73 | 16.89 | 15.82 | 15.95 | 22.16 | 22.13 | 22.06 |
| BEVDepth | 75.50 | 63.58 | 63.67 | 34.95 | 33.42 | 33.27 | 55.67 | 55.47 | 55.34 |
| Ours | 76.71 | 64.79 | 64.60 | 40.18 | 38.29 | 38.19 | 59.43 | 59.17 | 59.03 |

IoU thresholds: 0.5 for Car; 0.25 for Ped. (pedestrian) and Cyc. (cyclist).

| Large Size | Small Size | mAP | NDS | mATE | mASE | mAOE | mAVE | mAAE |
|---|---|---|---|---|---|---|---|---|
| 0.2 | 2.4 | 0.437 | 0.555 | 0.526 | 0.261 | 0.340 | 0.315 | 0.189 |
| 0.5 | 2.4 | 0.462 | 0.569 | 0.513 | 0.260 | 0.332 | 0.312 | 0.189 |
| 1.0 | 2.4 | 0.461 | 0.568 | 0.518 | 0.261 | 0.336 | 0.315 | 0.190 |
| 1.5 | 2.4 | 0.459 | 0.569 | 0.509 | 0.260 | 0.330 | 0.311 | 0.190 |
| 0.5 | 0.5 | 0.449 | 0.563 | 0.521 | 0.261 | 0.336 | 0.313 | 0.189 |
| 0.5 | 1.0 | 0.459 | 0.568 | 0.518 | 0.261 | 0.336 | 0.313 | 0.189 |
| 0.5 | 1.5 | 0.460 | 0.568 | 0.518 | 0.261 | 0.337 | 0.314 | 0.190 |
| 0.5 | 2.4 | 0.462 | 0.569 | 0.513 | 0.260 | 0.332 | 0.312 | 0.189 |
| 0.5 | 3.0 | 0.461 | 0.568 | 0.518 | 0.261 | 0.336 | 0.315 | 0.190 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, B.; Song, S.; Ai, L. Rethinking the Non-Maximum Suppression Step in 3D Object Detection from a Bird’s-Eye View. Electronics 2024, 13, 4034. https://doi.org/10.3390/electronics13204034