4.1. Datasets

COCO Datasets. The Common Objects in Context (COCO) dataset, developed by the Microsoft team, is a vital asset in computer vision research. It contains over 330,000 images that are richly annotated across 80 object categories. By providing a diverse, large-scale, and challenging benchmark, the COCO dataset has facilitated extensive research activities [

44]. Consequently, the method we proposed has been rigorously evaluated on the COCO dataset to compare its performance with leading algorithms in the field.

Table 1 summarizes the experimental setup and dataset partitioning, illustrating our method within the COCO dataset.

VOC Datasets. The PASCAL Visual Object Classes (VOC) dataset serves as a crucial benchmark in the field of computer vision, supporting tasks such as object detection. It encompasses annotations for 20 different object categories, including class labels and bounding boxes. The PASCAL VOC challenge series played a pivotal role in advancing vision-based technologies [

45]. Additionally, we evaluate the improved YOLOv5 on the VOC dataset and compare it with other popular methods.

Table 2 provides a detailed description of partitioning for the VOC dataset.

KITTI Datasets. The KITTI dataset is a crucial benchmark in the field of autonomous driving research. It encompasses a variety of real-world driving scenarios including urban, rural, and highway environments. It provides comprehensive annotations for tasks such as 3D object detection, tracking, and depth estimation [

46]. We use the KITTI dataset to validate the accuracy of our proposed method in distance estimation, and to demonstrate its applicability and effectiveness in realistic driving conditions.

4.4. Comparison Experiment

We have developed an improved YOLOv5 method, specifically optimized for detecting small objects in road scenes under various lighting and weather conditions. To evaluate the performance of our method in terms of feature representation and real-time processing capabilities, we conducted a series of experiments on the COCO and VOC datasets. Our improved methods, denoted according to scale as Ours-s, Ours-m, and Ours-l, were compared with several state-of-the-art object detection methods.

We used Precision, mAP, and Recall as the primary performance metrics. Additionally, we considered complexity by evaluating the number of parameters (Params) and floating-point operations per second (FLOPS). We performed a comparative analysis of different methods on the COCO and VOC datasets, ensuring the robustness of the experimental design and the validity of the data analysis. The results are presented in the accompanying

Table 5.

Experimental results on the COCO dataset show that our optimized Ours-m model achieved 71.6% Precision, 61.5% mAP, and 57.9% Recall. These results are the best among all methods using similar computational resources. On the VOC dataset, the model also excelled, registering 77.5% mAP@.5 and 73.4% Recall. Notably, despite having fewer parameters than the equivalent scale YOLOv5 model, Ours-s, Ours-m and Ours-l surpassed other methods in Precision and mAP. It demonstrates our success in improving performance while optimizing computational efficiency.

Compared to SSD and Faster R-CNN, Ours-m demonstrated significant advantages on both the COCO and VOC datasets. It was particularly effective in Recall for small object detection. For instance, on the COCO dataset, the Recall of Ours-l was 21.3% higher than that of SSD and 14.1% higher than Faster R-CNN, highlighting the effectiveness of the RedeCa module in enhancing recall capabilities.

Although Mask-RCNN is designed for instance segmentation, the Ours-l model excelled in object detection tasks. On the VOC dataset, it achieved an mAP@.5 of 80.0%, compared to Mask-RCNN’s 57.2%. This emphasizes that our improved model delivers outstanding detection performance, even in domains specialized by Mask-RCNN.

We also controlled for model complexity while striving for higher performance. Compared to the original YOLOv5, the Ours series demonstrated superior small object detection in complex environments with fewer parameters. On the COCO dataset, Ours-l improved mAP and Recall by 0.4% each over YOLOv5l. Our methods surpass existing methods in key performance metrics while maintaining computational efficiency, laying a solid foundation for practical applications.

We compared our method with the latest object detection methods from YOLOv6 to YOLOv10 [

47,

48,

49,

50,

51]. Our method matches YOLOv8 and YOLOv9 in terms of accuracy, and surpasses YOLOv6, v8, v9, and v10 in model lightweighting and computational load. The data presented in

Table 5 showed that while our model achieves accuracy comparable to the latest YOLO versions, it still has benefits in model lightweighting and computational load. These advantages contribute to the deployment and operational efficiency of our method in practical applications.

The RedeCa module enhances our method’s ability of representing features, especially in accurately handling edge and detail information, which is crucial for small object detection. It also increases the adaptability to varying lighting and weather conditions, thereby boosting robustness in complex environments.

In road traffic scenarios, real-time performance is crucial. We evaluated our method against popular methods in terms of FPS and parameter count, as detailed in

Table 5. Our method’s parameter count and computational complexity are markedly lower than those of Faster R-CNN and Mask-RCNN. Compared to the baseline YOLOv5, our method achieves comparable performance while reducing both parameter count and computational complexity.

The experimental results show that by adding the RedeCa module to YOLOv5, we improve small object detection accuracy markedly while maintaining high recall rates. These findings support strongly further exploration of deep learning methods for small object detection in complex environments, confirming the effectiveness and potential of our enhancements.

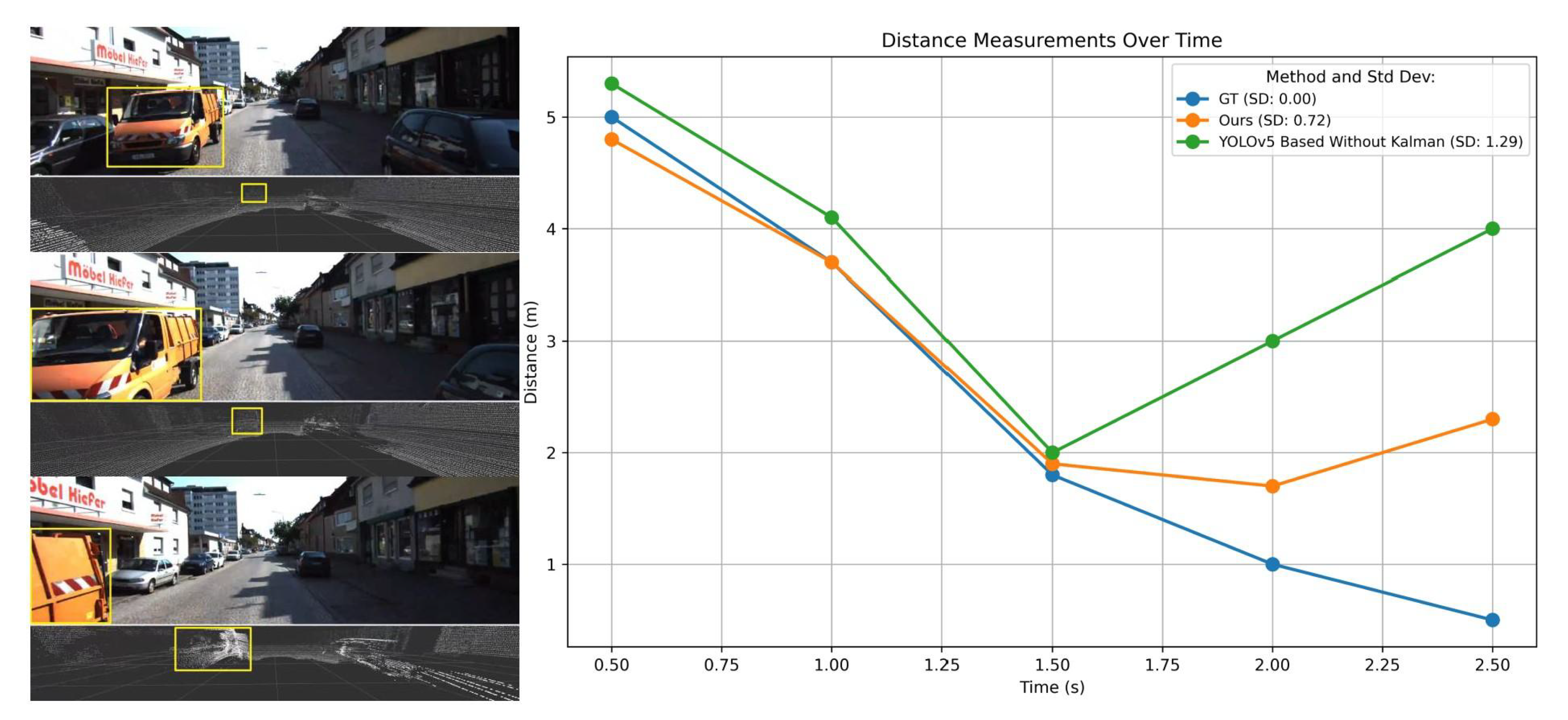

To validate the superior accuracy and robustness of our method, we conducted a comprehensive quantitative and qualitative comparative analysis. We compared our method with popular monocular distance measurement methods in road traffic scenarios. The results are shown in

Figure 7.

As shown in

Figure 7, our monocular distance measurement method effectively reduces potential errors caused by sudden changes in the detection bounding box size. This leads to reduced dispersion in distance measurements, ensuring they remain more consistent with actual distances over time.

We also conducted a qualitative analysis to compare our method with mainstream methods. Unlike methods that generate depth maps for the entire image to obtain object distances, our method detects objects and measures their distances simultaneously, improving efficiency. The distance measurement results are shown in

Figure 8.

4.5. Ablation Experiment

We adopted the ablation experiment method to evaluate the impact of different modules on the detection performance of YOLOv5m model on VOC dataset. The experimental results are shown in

Table 6. We investigate the effect of depthwise separable convolution (DW) on model performance. In addition, we examine the impact of effective channel attention (ECA). We also explore the direct combination of DW and ECA. Finally, we assess the performance of the RedeCa module.

As shown in

Table 6, the baseline YOLOv5m model achieved a Precision of 82.5%, mAP of 74.3%, and IOU of 67.2% on the VOC dataset. It had 21.0 million parameters and 48.5 GFLOPS.

After adding Depthwise Separable Convolutions (DW) to the baseline model, Precision decreased to 70.5%, mAP dropped to 62.9%, and IOU significantly declined to 38.4%. However, the number of parameters and FLOPS were reduced to 9.1 million and 18.3 GFLOPS, respectively. This indicates that while the DW module effectively reduces model complexity, it negatively impacts detection performance.

Incorporating the Efficient Channel Attention (ECA) module resulted in a slight improvement in Precision to 82.7%, an increase in mAP to 76.3%, and a significant rise in IOU to 74.1%. The number of parameters and FLOPS slightly increased, demonstrating that the ECA module enhances detection performance without significantly increasing the computational burden.

Using Depthwise Separable Convolutions (DW) and Efficient Channel Attention (ECA) directly, the model saw slight improvements in Mean Average Precision (mAP) and Intersection over Union (IoU). But the increase was minimal, and there was no significant reduction in the network’s parameters and computational load. However, by effectively integrating DW and ECA into the RedeCa module, the model’s accuracy slightly decreased to 81.9%, but the mAP increased to 77.5%, and the IoU rose to 73.4%, while the parameters and FLOPS were reduced to 17.2 M and 34.6 G, respectively. This indicates that the use of the RedeCa module can balance detection performance and model complexity to some extent, providing an effective solution for real-time object detection applications.

To verify that the use of an anomaly jump filter helps smooth distance variations and eliminate potential errors caused by sudden changes in the detection bounding box size, we conducted a comparative analysis. We compared the algorithm architecture with the anomaly jump filter to the one without it.

As shown in

Figure 9, using a Kalman filter integrated with the time series effectively eliminates potential errors caused by sudden changes in detection bounding box size. This reduces the dispersion in distance measurements, making them more consistent with the actual distances over time.