Improved Feature Point Extraction Method of VSLAM in Low-Light Dynamic Environment

Abstract

1. Introduction

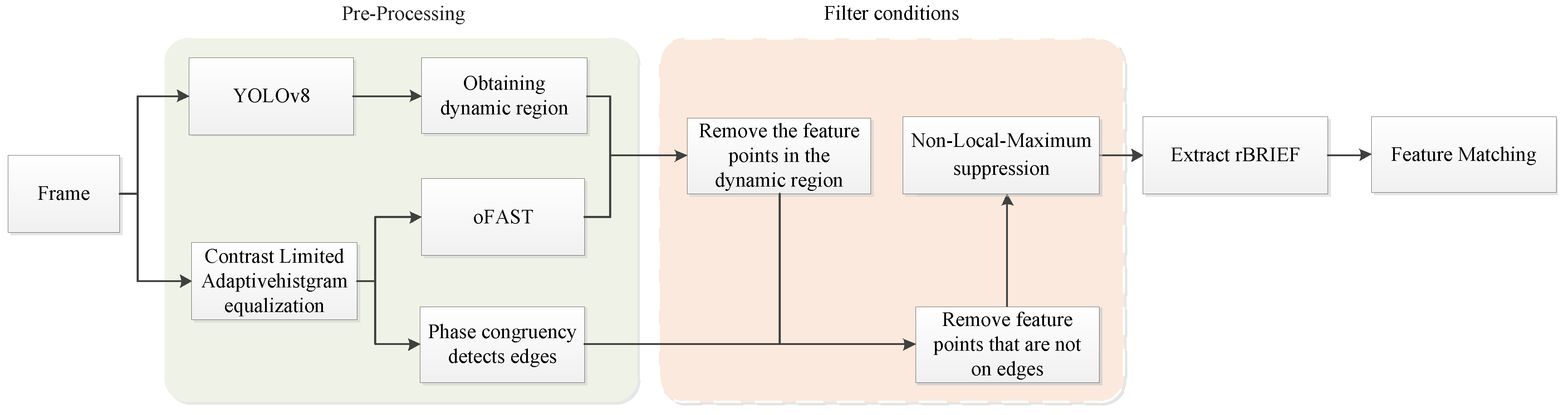

- (1) Contrast-limited adaptive histogram equalization (CLAHE) is adopted in the pre-processing stage to enhance the contrast of each frame, addressing the low-light problem.

- (2) Enhancing the brightness increases the number of extracted feature points. To eliminate redundant feature points, three screening conditions are proposed.

  - (a) Feature points in dynamic regions are removed to prevent interference from dynamic objects. The dynamic regions are detected using YOLOv8 during pre-processing.

  - (b) Feature points generally lie where intensity changes are pronounced, such as on edges. The second screening condition checks whether each feature point falls on an edge. Edges are detected using the phase congruency method during pre-processing.

  - (c) The third screening condition applies non-local-maximum suppression to remove redundant points and retain the best point in each local region.

2. Related Work

2.1. Traditional Method

2.2. Deep Learning Method

3. Methodology

3.1. Pre-Processing

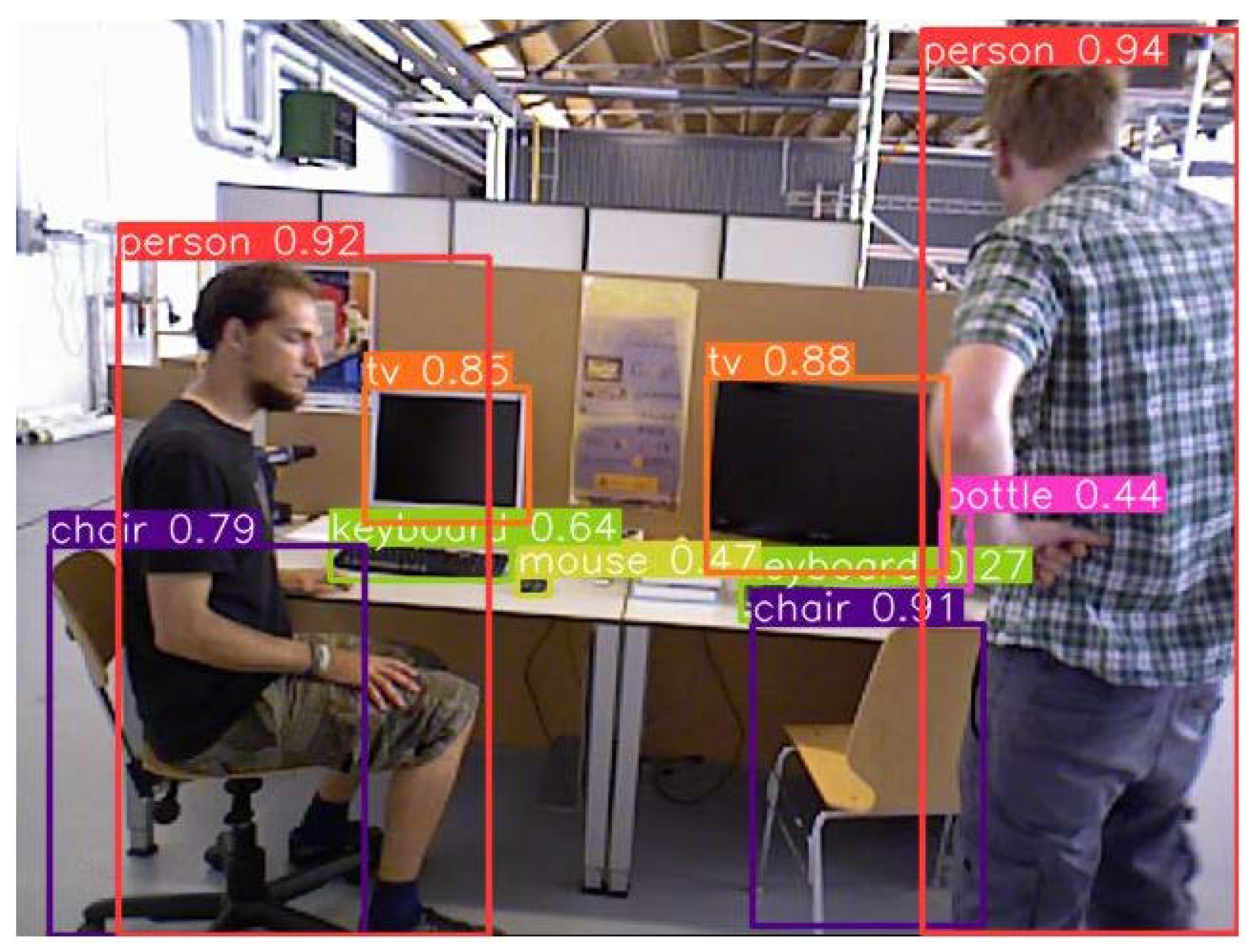

3.1.1. YOLOv8

3.1.2. Contrast-Limited Adaptive Histogram Equalization

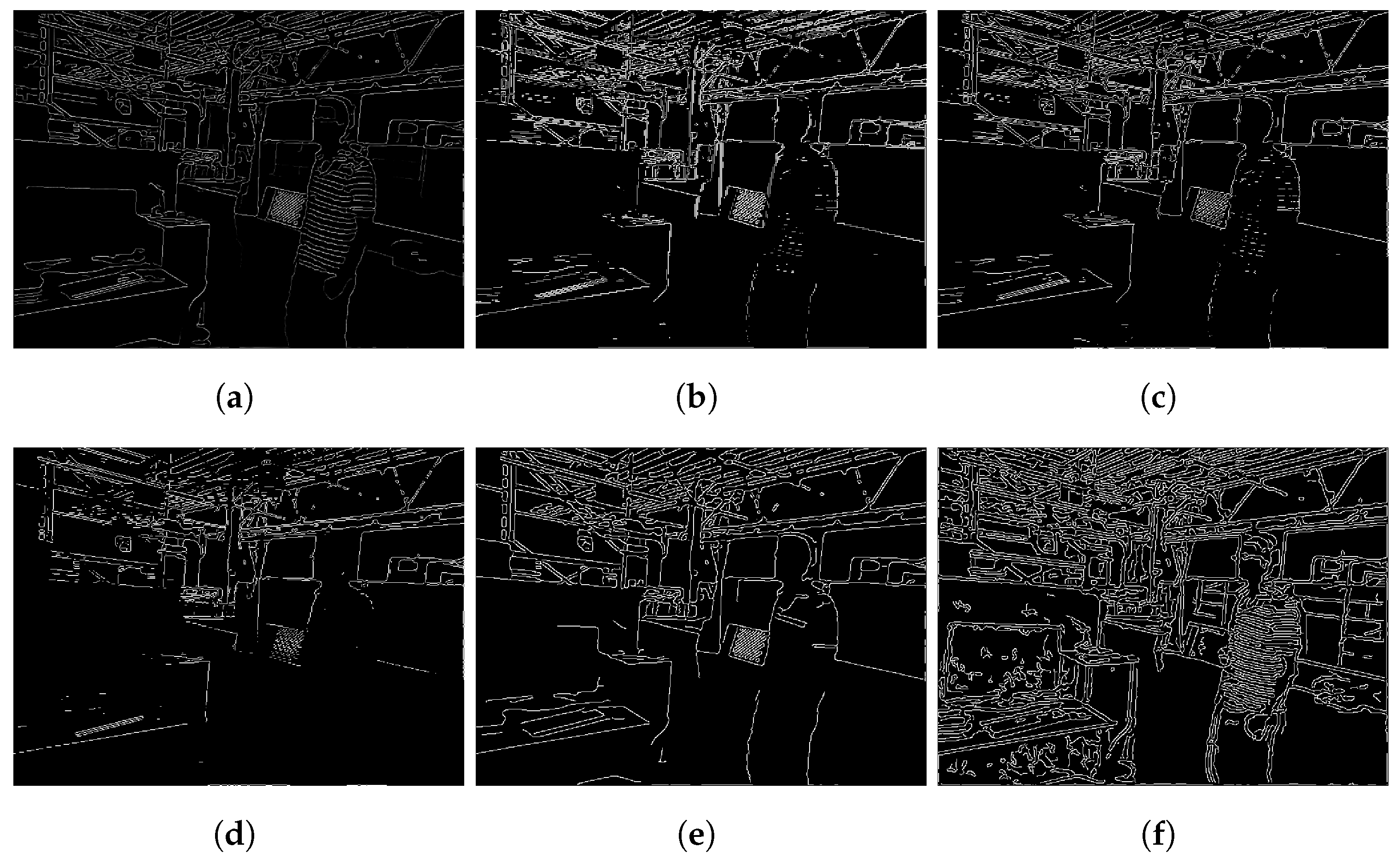
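As a rough illustration of the contrast-limiting idea behind CLAHE, the sketch below equalizes a single tile with a clipped histogram. It is a simplified, pure-Python stand-in and not the pipeline used in this work: full CLAHE additionally splits the frame into a grid of tiles and interpolates between neighbouring tile mappings, and in practice a library routine such as OpenCV's `cv2.createCLAHE` would normally be used. The function name `clip_limited_equalize`, the clip limits, and the toy tile are assumptions made for illustration.

```python
from typing import List


def clip_limited_equalize(tile: List[List[int]], clip_limit: float,
                          levels: int = 256) -> List[List[int]]:
    """Equalize one tile using a clipped histogram (per-tile step of CLAHE)."""
    # Histogram of gray levels in the tile.
    hist = [0.0] * levels
    for row in tile:
        for value in row:
            hist[value] += 1

    # Clip every bin at clip_limit and collect the excess counts.
    excess = 0.0
    for i in range(levels):
        if hist[i] > clip_limit:
            excess += hist[i] - clip_limit
            hist[i] = clip_limit

    # Spread the excess uniformly over all bins so the total is preserved.
    bonus = excess / levels
    hist = [count + bonus for count in hist]

    # The cumulative distribution of the clipped histogram is the mapping.
    total = sum(hist)
    lut = []
    running = 0.0
    for count in hist:
        running += count
        lut.append(round((levels - 1) * running / total))

    return [[lut[value] for value in row] for row in tile]


# Toy dark tile: gray levels 0..31 only (hypothetical data).
dark_tile = [[(r * 8 + c) % 32 for c in range(8)] for r in range(8)]
stretched = clip_limited_equalize(dark_tile, clip_limit=4)  # no bin is clipped
limited = clip_limited_equalize(dark_tile, clip_limit=1)    # every used bin is clipped
```

With the larger clip limit the tile is stretched over the full gray range, while the smaller limit yields a gentler stretch. This is the trade-off the clip limit controls: more contrast in dark regions against less amplification of noise.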

3.1.3. Phase Congruency Edge Detection

3.2. Feature Points Filtering

- (1)

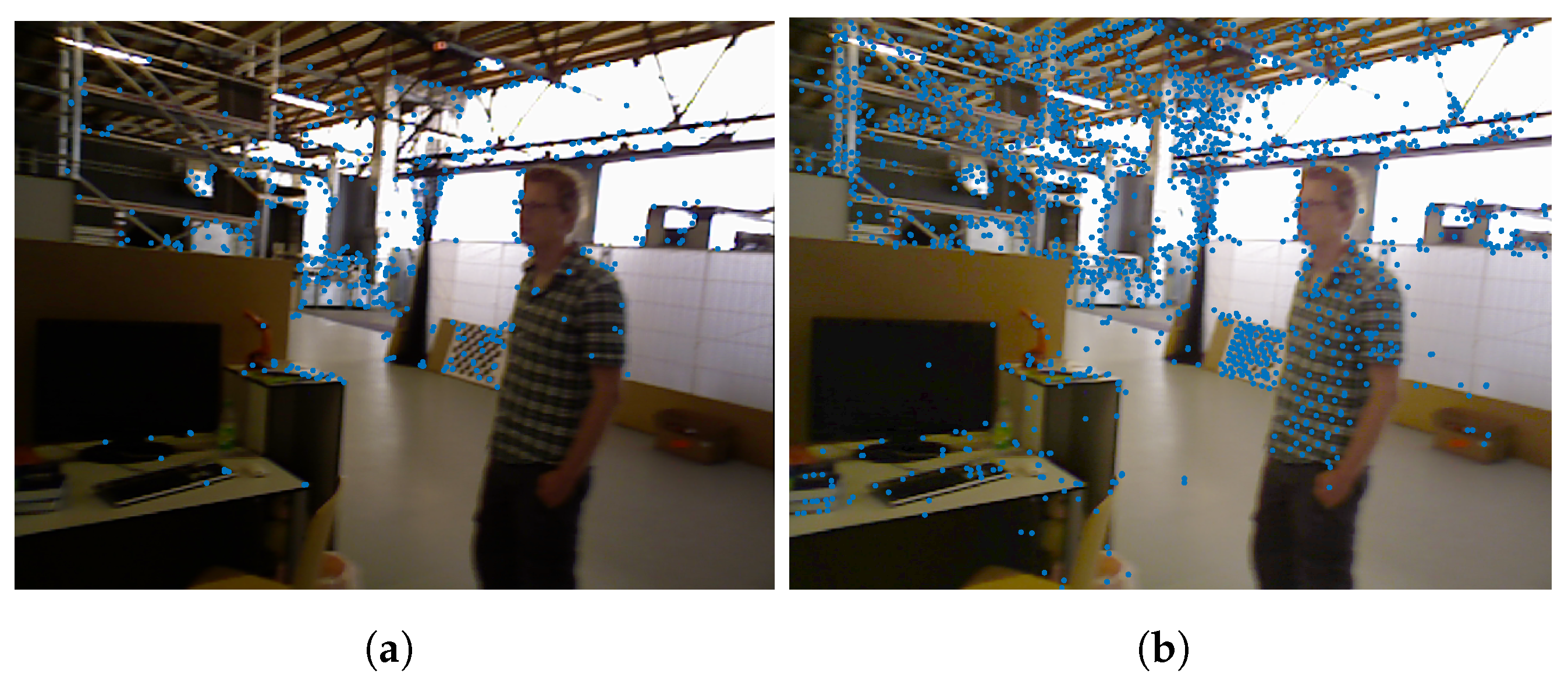

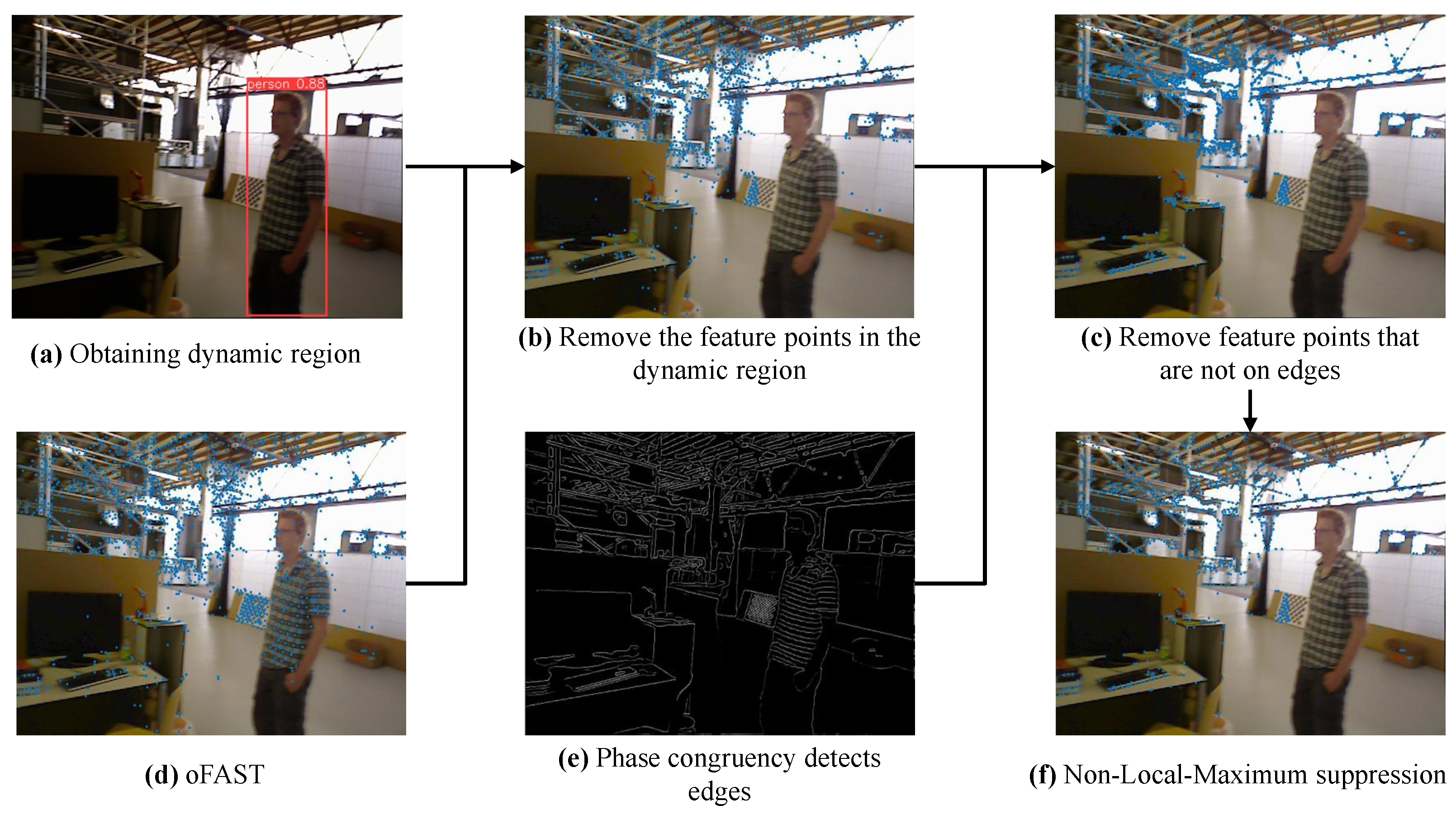

- Remove the feature points in the dynamic region.The feature points on dynamic objects can interfere with the localization of mobile robots. Therefore, the first screening involves removing the feature points in the dynamic region. The dynamic regions are detected using YOLOv8n during the pre-processing stage. After extracting the oFAST feature points, the feature points in the dynamic region are removed by combining them with the results of object detection (Figure 6a). The results are shown in Figure 6b.

- (2)

- Determine whether the FAST feature points fall on the edge.In general, the gray values of the pixel point p will be compared to 16 pixel points in the surrounding neighborhood. If the difference is large, this pixel point p is a FAST point. Therefore, the FAST corner points are generally in places where changes are obvious, such as on the edges. Therefore, one of the screening conditions is to determine whether the FAST points are on the edge of the phase coherence extraction. We combined the results of phase concurrency edge detection (Figure 6e) to retain only the feature points on the edge, and the results are shown in Figure 6c.

- (3)

- Non-Local-Maximum suppression.Non-local-maximum suppression is used as the third screening condition to obtain the best feature. During non-local-maximum suppression, we choose a detection box of size 3 to traverse the FAST feature points. Then, the FAST feature point with the largest response value in the detection box is reserved. This screening condition not only preserves the best point in the detection box but also ensures spare FAST feature points. The final results are shown in Figure 6f.
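The three screening conditions can be sketched as a chain of small filters. This is a minimal illustration under stated assumptions, not the authors' implementation: keypoints are represented as hypothetical `(x, y, response)` tuples, dynamic regions as inclusive bounding boxes, and the edge map as a 2D list of booleans. The detection box of size 3 is interpreted here as a 3 × 3 window centred on each keypoint, which is also an assumption.

```python
from typing import List, Sequence, Tuple

Keypoint = Tuple[int, int, float]  # (x, y, response); hypothetical layout
Box = Tuple[int, int, int, int]    # (x_min, y_min, x_max, y_max), inclusive


def remove_dynamic(kps: Sequence[Keypoint], boxes: Sequence[Box]) -> List[Keypoint]:
    """Screening 1: drop keypoints that lie inside any dynamic-object box."""
    return [
        (x, y, resp) for x, y, resp in kps
        if not any(x0 <= x <= x1 and y0 <= y <= y1 for x0, y0, x1, y1 in boxes)
    ]


def keep_on_edges(kps: Sequence[Keypoint],
                  edge_mask: Sequence[Sequence[bool]]) -> List[Keypoint]:
    """Screening 2: keep only keypoints whose pixel is marked as an edge."""
    return [(x, y, resp) for x, y, resp in kps if edge_mask[y][x]]


def suppress_non_maxima(kps: Sequence[Keypoint], window: int = 3) -> List[Keypoint]:
    """Screening 3: keep a keypoint only if it has the largest response
    among the keypoints inside the window centred on it."""
    radius = window // 2
    kept = []
    for i, (x, y, resp) in enumerate(kps):
        beaten = False
        for j, (u, v, other) in enumerate(kps):
            if i == j or abs(u - x) > radius or abs(v - y) > radius:
                continue
            # Ties are broken by index so exactly one of two equal points survives.
            if other > resp or (other == resp and j < i):
                beaten = True
                break
        if not beaten:
            kept.append((x, y, resp))
    return kept


def filter_keypoints(kps: Sequence[Keypoint], boxes: Sequence[Box],
                     edge_mask: Sequence[Sequence[bool]]) -> List[Keypoint]:
    """Apply the three screening conditions in order."""
    return suppress_non_maxima(keep_on_edges(remove_dynamic(kps, boxes), edge_mask))


# Toy data: a 6-row by 8-column frame whose only edge is the row y = 2.
edge_mask = [[y == 2 for _ in range(8)] for y in range(6)]
boxes = [(5, 0, 6, 5)]  # one hypothetical dynamic-object box
keypoints = [(1, 2, 10.0), (2, 2, 30.0), (3, 2, 20.0),
             (6, 2, 50.0), (4, 4, 40.0), (7, 2, 5.0)]
result = filter_keypoints(keypoints, boxes, edge_mask)
```

In this toy case the point at (6, 2) is discarded because it lies inside the dynamic box, (4, 4) because it is off the edge, and (1, 2) and (3, 2) because a neighbour has a stronger response. The points at (2, 2) and (7, 2) remain.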

4. Experiments

4.1. Experimental Datasets

4.2. Feature Point Performance

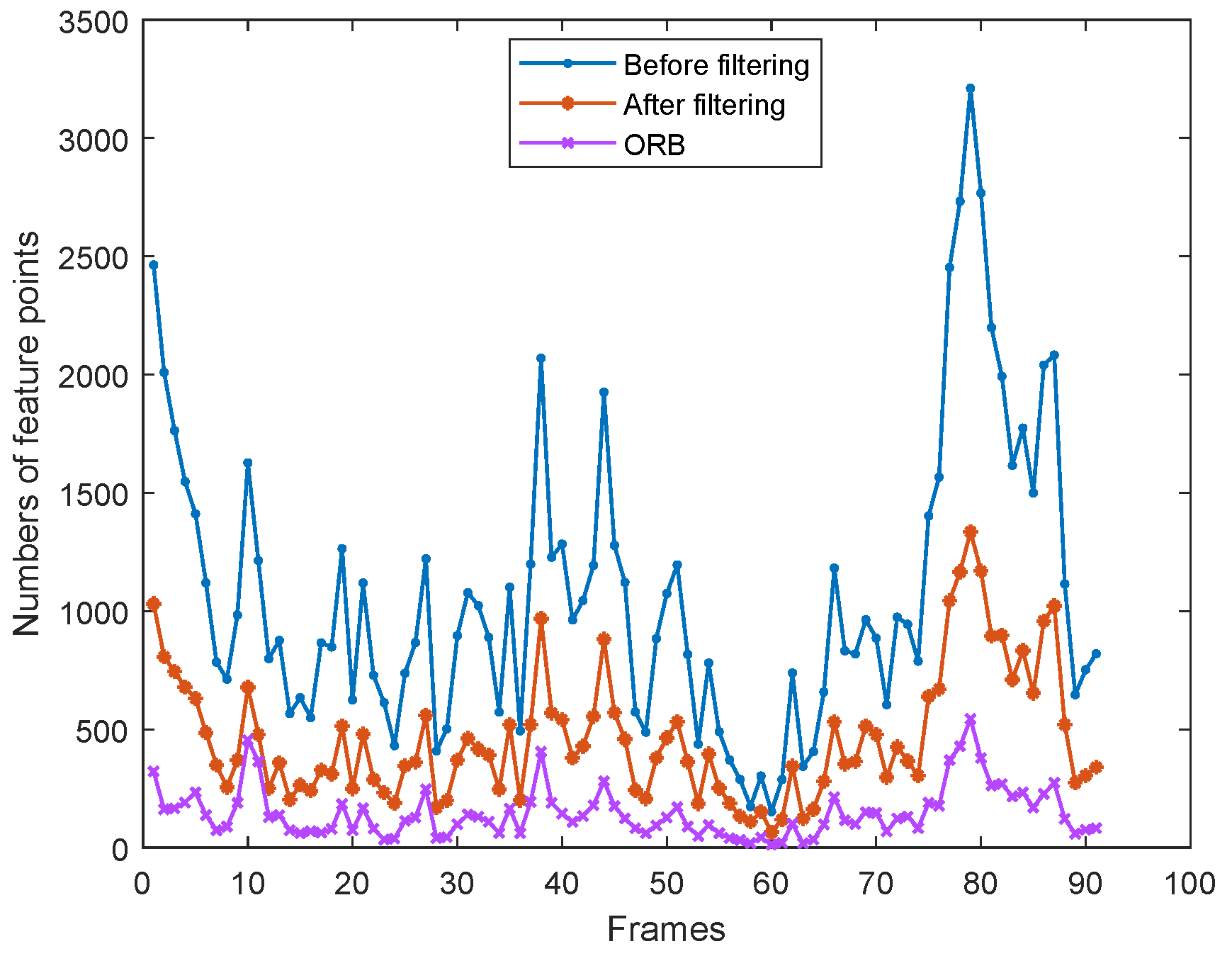

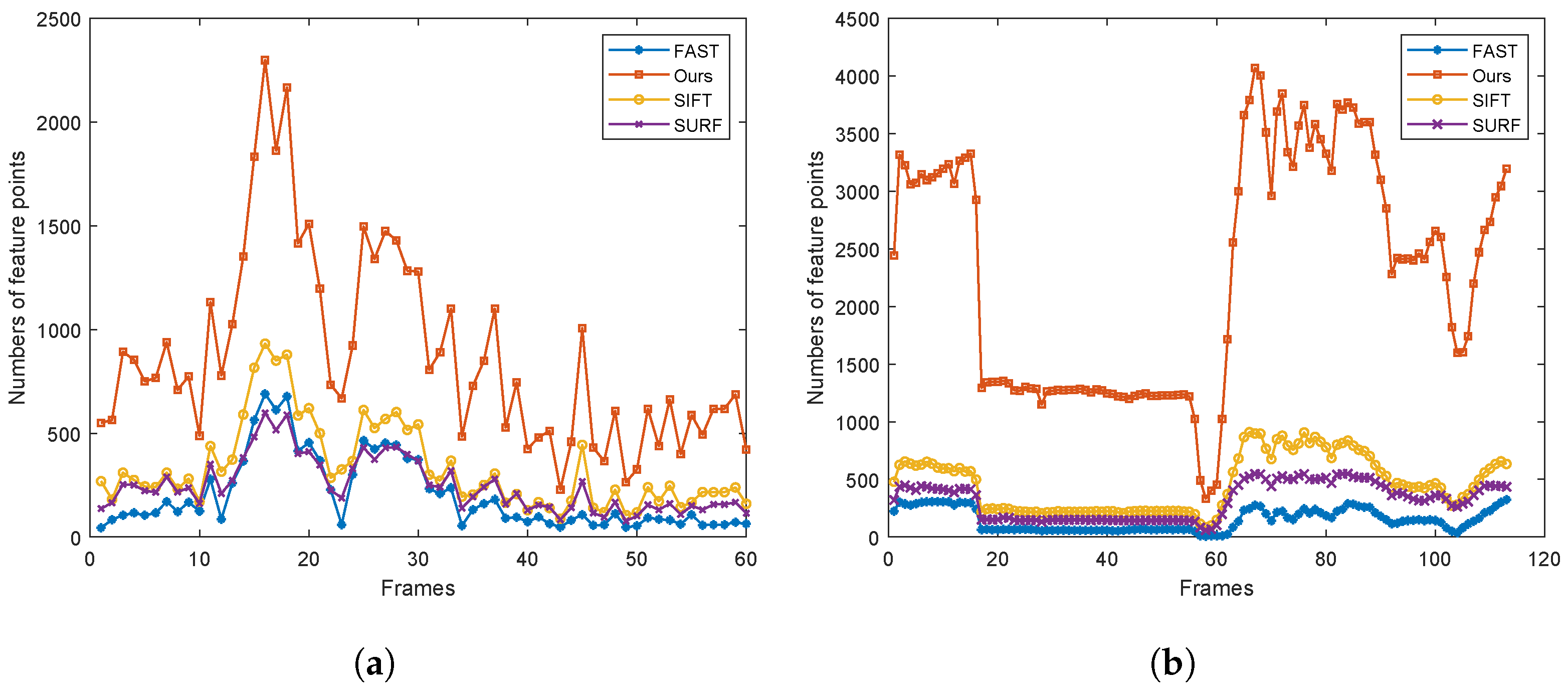

4.2.1. Feature Points Detection Performance

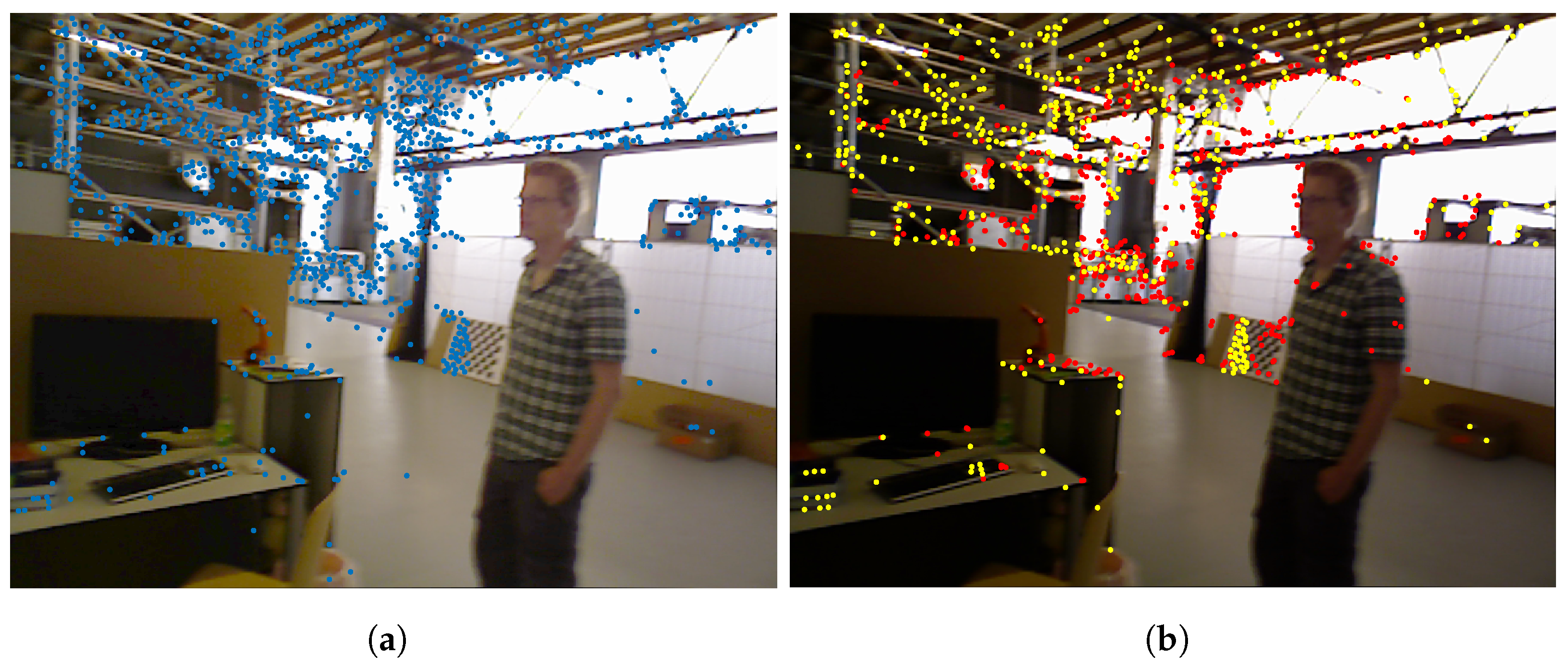

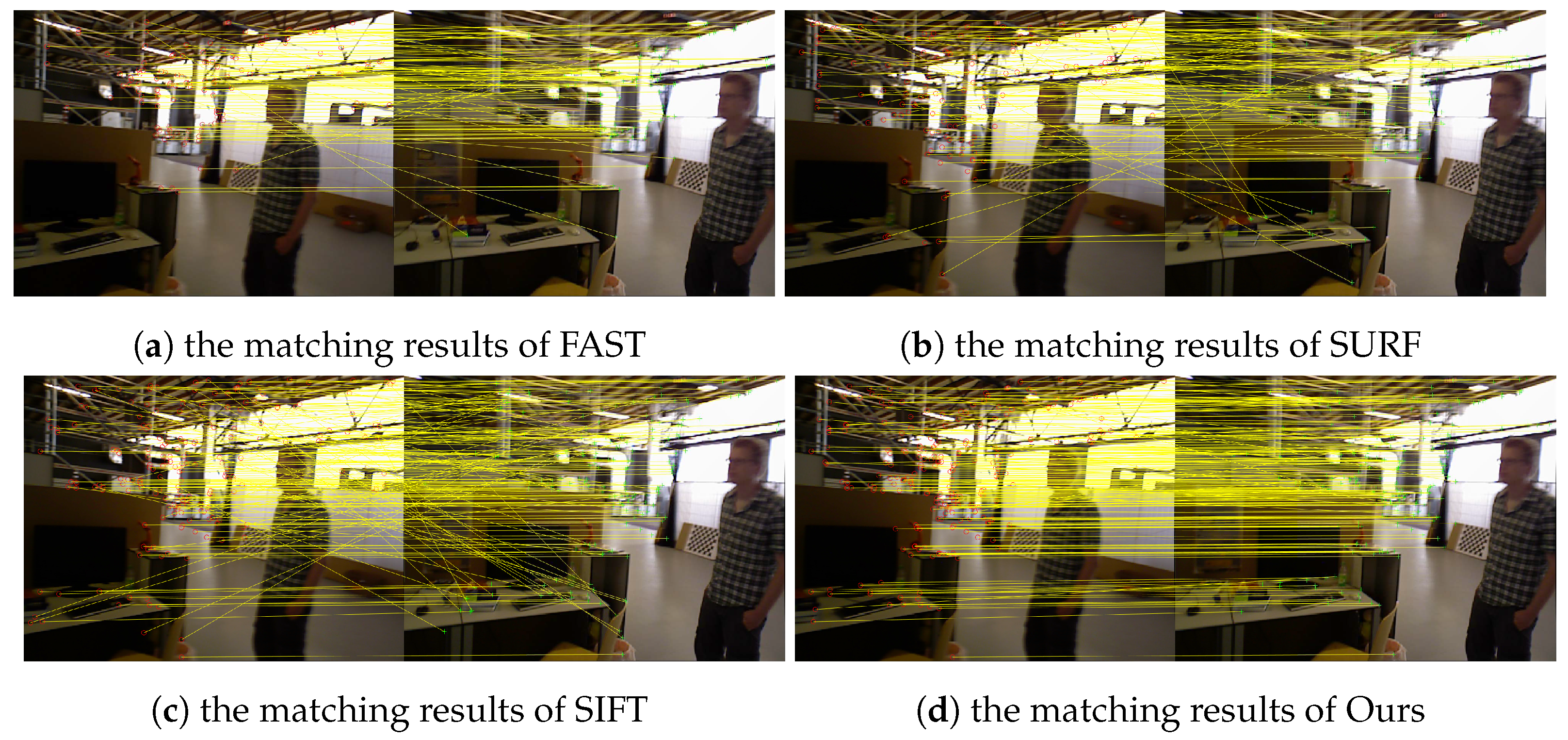

4.2.2. Feature Point Matching Performance

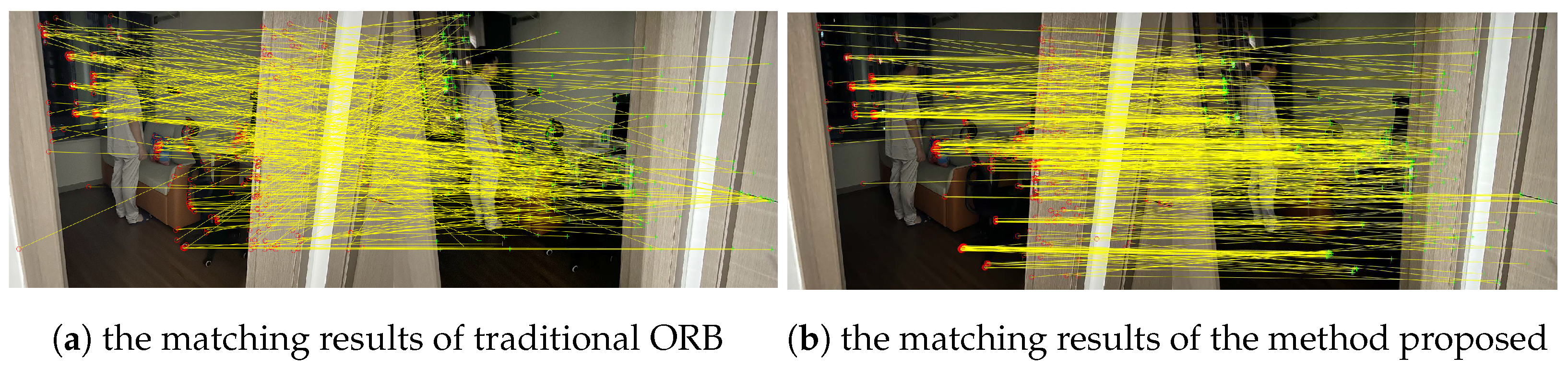

4.3. Scene Testing

4.4. VSLAM Performance

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Classification | Objects |
|---|---|
| Highly Dynamic | People |
| Medium Dynamic | Chairs, Books |
| Low Dynamic | Desks, TVs |

| Sequence (matching rate) | FAST | SURF | SIFT | Ours |
|---|---|---|---|---|
| fre3_w_rpy_60 | 0.765 | 0.791 | 0.814 | 0.853 |
| Office-7 | 0.586 | 0.597 | 0.691 | 0.779 |

| Sequence | DS-SLAM RMSE | DS-SLAM Mean | DS-SLAM Median | DS-SLAM S.D. | DynaSLAM RMSE | DynaSLAM Mean | DynaSLAM Median | DynaSLAM S.D. | Ours RMSE | Ours Mean | Ours Median | Ours S.D. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| fre3_w_rpy | 0.4442 | 0.3768 | 0.2835 | 0.2350 | 0.3541 | 0.2583 | 0.1967 | 0.1952 | 0.2348 | 0.1463 | 0.1015 | 0.0896 |
| Office-7 | 0.6017 | 0.6841 | 0.6429 | 0.6429 | 0.5841 | 0.5469 | 0.4786 | 0.4537 | 0.3215 | 0.4672 | 0.4107 | 0.3863 |
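The four columns reported per system (RMSE, Mean, Median, S.D.) are standard summary statistics of a per-frame error series. As a minimal sketch, assuming the per-frame errors are already available as a list of non-negative floats, they could be computed as follows. The function name `summarize_errors` and the sample values are hypothetical, and the use of the population standard deviation is an assumption, since the convention is not stated here.

```python
import math
import statistics
from typing import Dict, Sequence


def summarize_errors(errors: Sequence[float]) -> Dict[str, float]:
    """Return RMSE, mean, median and standard deviation of per-frame errors."""
    if not errors:
        raise ValueError("errors must be non-empty")
    return {
        "rmse": math.sqrt(sum(e * e for e in errors) / len(errors)),
        "mean": statistics.fmean(errors),
        "median": statistics.median(errors),
        "sd": statistics.pstdev(errors),  # population S.D. (assumed convention)
    }


# Hypothetical per-frame errors, for illustration only.
stats = summarize_errors([0.1, 0.2, 0.3, 0.4])
```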


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Zhang, Y.; Hu, L.; Ge, G.; Wang, W.; Tan, S. Improved Feature Point Extraction Method of VSLAM in Low-Light Dynamic Environment. Electronics 2024, 13, 2936. https://doi.org/10.3390/electronics13152936
