1. Introduction

The exploitation of software vulnerabilities by malicious actors is a real risk to everyone who uses software. Vulnerabilities in corporate systems have regularly led to the exfiltration of intellectual property and financial losses. Similar faults in consumer-oriented applications expose private information, such as location, banking details, and login credentials [1]. Given the risks of releasing vulnerable software and the human propensity to make mistakes, relying on developers to write secure code is insufficient. As such, Software Vulnerability Detection (SVD) is a critical component of secure software development. SVD requires a trade-off between cost and accuracy [2]. Peer code reviews consume valuable developer time, and an inexperienced reviewer may be less expensive but fail to catch vulnerabilities. Static code analysis is fast and relatively inexpensive but suffers from a high false positive rate, requiring manual review to triage the results [3]. Techniques such as fuzzing and dynamic analysis have a low false positive rate but are computationally expensive and require expertise to set up effectively.

The application of machine learning to vulnerability detection has increased as machine learning has demonstrated near- or super-human performance on computer vision and natural language processing tasks [4]. Unfortunately, these applications have met with limited success. Machine Learning–Assisted Software Vulnerability Detection (MLAVD) models are computationally expensive, requiring high-performance GPUs to train and serve. They also face accuracy issues and frequently fail to generalize to unseen data. Despite these challenges, MLAVD has focused on models with hundreds of millions of parameters: the large language models (LLMs) CodeBERT [5] and CoTexT [6] each use over 125 million. With the rise of publicly available generative AI models, MLAVD models are growing to billions and even trillions of parameters [7]. This is not merely a theoretical concern; it poses practical challenges. For example, Zhou et al. [8] limited their exploration because of the cost of querying LLMs. The limited real-world deployment of these models indicates that they are not yet cost-effective [9].

Interestingly, Chen et al. [10] found that code-specific pre-training tasks affected a model’s ability more than its size did. Thus, the trend of improving performance by expanding models seems to have floundered. Larger models, such as LLMs, are not the exclusive path to better results. Rather, models and methods need to be tailored to the vulnerability detection task. Many MLAVD models use architectures and techniques imported from computer vision and natural language processing. CodeBERT is based on the BERT architecture, designed for language understanding, and CoTexT builds on T5, which was originally used for text generation. Some attempts have also been made to convert code into images before training [11]. Yet neither images nor natural language is a strong analog for modern code. A common approach is to convert the code into a graph representation [12,13,14,15]. However, these techniques rely on fragile code parsing and struggle to learn core vulnerability detection activities [16]. Srikant et al. [17] found that the multiple-demand system in the human brain decodes execution-related properties of code, while the language system primarily decodes syntax-related properties. The multiple-demand system is associated with fluid intelligence [18]. The authors suggest that human behavior resembles the activity of computer programs, which combine operations to achieve a goal. Perhaps, then, the vision- and language-inspired architectures previously applied are ill-suited to capturing the semantics of vulnerabilities. If so, a model better aligned with the human brain’s processes may be both more efficient and more effective.

In this paper, we seek an efficient and effective MLAVD model—such that it could feasibly run on an economy developer machine to scan and evaluate code during development. Rather than “just-in-time” vulnerability detection on commit, we might refer to this work as in-line vulnerability detection [

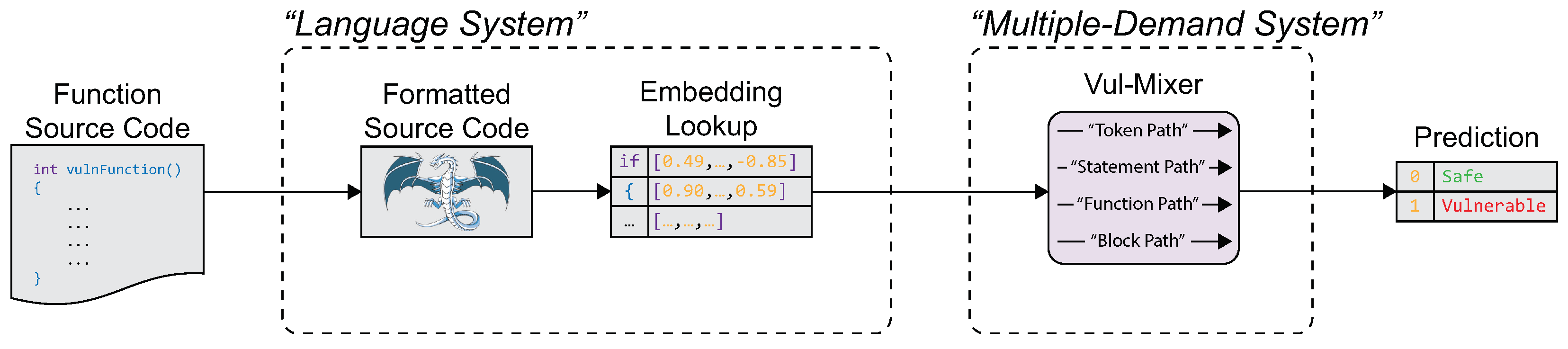

19]. More specifically, in-line vulnerability detection could not only prevent vulnerabilities from being added to software but also prevent them from being written in the first place. Also, we build the detection architecture around code instead of modifying the code format to fit the architecture. Accordingly, we present a new machine learning model,

Vul-Mixer, designed specifically for MLAVD. We draw inspiration from current research on how the brain understands code and combine that understanding with the task-specific details of vulnerability detection. To this end, we design a series of paths through a Mixer-style architecture that is selected to promote divergent learning of vulnerability-relevant code features. Further, we design these paths to maximize the resource efficiency (e.g., parameters, training/inference time) of the model.

When a model performs well on its training dataset but does not generalize beyond it, the model has overfit the data [20]. Because overfitting and generalizability are key problems in MLAVD, we make them a core component of our metrics and results. To provide a realistic and unbiased estimate of Vul-Mixer’s performance, rather than of its ability to fit a particular dataset, we perform extensive experiments on and across multiple SVD datasets. This is common practice in other machine learning fields [5,21]. Our experiments demonstrate that Vul-Mixer outperforms all resource-efficient baselines by 6 to

. It is both efficient and effective, outperforming multiple MLAVD baselines. Vul-Mixer maintains

of CodeBERT’s generalization performance while being only

of its size. It is the most cost-effective model across multiple cost-effectiveness ratios. When evaluated on real-world vulnerabilities, Vul-Mixer outperforms all baselines with an

score that is 6 to

higher. We make the following contributions:

We perform the first known study of resource-efficient MLAVD, showing that resource-efficient models can be competitive with MLAVD baseline models.

We design Vul-Mixer, a resource-efficient architecture, inspired by the multiple-demand system and research on how humans process code.

We produce an efficient and effective model, demonstrating the vastly superior resource-effectiveness and maintained generalizability of Vul-Mixer compared to strong and up-to-date MLAVD baselines.

5. Experimental Results and Analysis

In this section, we evaluate Vul-Mixer for vulnerability detection by answering the following research questions.

RQ1: How efficient is Vul-Mixer compared to baselines?

RQ2: How effective is Vul-Mixer compared to resource-efficient baselines?

RQ3: How effective is Vul-Mixer compared to MLAVD baselines?

RQ4: How cost-effective is Vul-Mixer compared to baselines?

RQ5: Is Vul-Mixer useful in real-world settings?

5.1. RQ1: How Efficient Is Vul-Mixer Compared to the Baselines?

Table 4 shows the number of parameters associated with each baseline and Vul-Mixer. It also shows the time required to run training or inference on one million samples randomly drawn from the Wild C dataset [34]. As initialized, all resource-efficient baselines meet the definition presented in Section 2.

Result 1: Vul-Mixer is 492–980x smaller than MLAVD baselines. Vul-Mixer has parameters, which is slightly more than the resource-efficient baselines with the fewest parameters Avg Pool and Shift, both with . Shift and Avg Pool do not have any trainable parameters in their token mixers. Thus, Vul-Mixer has only 22,000. While MLP-Mixer is a single order of magnitude smaller than the MLAVD baselines, Vul-Mixer and the other resource-efficient baselines are two orders of magnitude smaller. When run with a batch size of 1, to estimate the minimum requirements, Vul-Mixer requires only 173 MB of memory—small enough to run on any modern system.
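The statement that Shift and Avg Pool have no trainable parameters in their token mixers can be made concrete. The sketch below is a minimal pure-Python illustration, not the implementation used by any baseline: it assumes a sequence of token vectors (a list of float lists), implements a shift mixer and an average-pooling mixer with no weights, and counts the weights that a two-layer, MLP-Mixer-style token-mixing MLP would add for a hypothetical sequence length and hidden width. The per-channel shift pattern, function names, and sizes are illustrative assumptions.

```python
from typing import List

Sequence = List[List[float]]  # seq_len rows x channels columns


def shift_mix(x: Sequence, shift: int = 1) -> Sequence:
    """Parameter-free mixer: move each channel along the sequence axis.

    Assumed pattern: channel c is offset by (c % 3 - 1) * shift positions,
    i.e. left, unchanged, or right, with zero padding at the boundaries.
    """
    seq_len, channels = len(x), len(x[0])
    out = [[0.0] * channels for _ in range(seq_len)]
    for c in range(channels):
        offset = (c % 3 - 1) * shift
        for t in range(seq_len):
            src = t - offset
            if 0 <= src < seq_len:
                out[t][c] = x[src][c]
    return out


def avg_pool_mix(x: Sequence, window: int = 3) -> Sequence:
    """Parameter-free mixer: average each token with its neighbours."""
    seq_len, channels = len(x), len(x[0])
    half = window // 2
    out = []
    for t in range(seq_len):
        lo, hi = max(0, t - half), min(seq_len, t + half + 1)
        out.append([sum(x[s][c] for s in range(lo, hi)) / (hi - lo)
                    for c in range(channels)])
    return out


def mlp_token_mixer_params(seq_len: int, hidden: int) -> int:
    """Weights plus biases of a token-mixing MLP: seq_len -> hidden -> seq_len."""
    return (seq_len * hidden + hidden) + (hidden * seq_len + seq_len)
```

Because the two parameter-free mixers contain no weights, a Mixer block built on them spends its parameters only in the channel-mixing layers, whereas an MLP token mixer adds weights that grow with sequence length (262,912 for a hypothetical length of 512 and hidden width of 256).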

Result 2: Vul-Mixer is 3–60x faster than MLAVD baselines. We time each model on functions sampled from the Wild C dataset. Note that the times shown are not the total training time required for the models. In practice, training time depends on the size of the dataset, the number of epochs, the hardware, and other factors. Based on our timings, Vul-Mixer takes 29 s longer to train and 1 s longer to infer than the other resource-efficient models. This represents an increase in training time and a increase in inference time. Despite this increase, Vul-Mixer is two times faster to train and three times faster to infer than the fastest MLAVD baseline, ReGVD. CodeBERT and LineVul are two orders of magnitude slower than Vul-Mixer, while CoTexT is three orders of magnitude slower to train and nearly three orders of magnitude slower to infer.
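The timings above measure how long a model takes to process a fixed random sample, not a full training run. A minimal sketch of such a harness follows; the `predict` callable, the batch size, and the function names are hypothetical stand-ins rather than the paper's code. It uses `time.perf_counter`, Python's monotonic high-resolution clock, and includes the percentage-increase arithmetic used when comparing two timings.

```python
import random
import time
from typing import Callable, List, Sequence


def sample_functions(pool: Sequence[str], n: int, seed: int = 0) -> List[str]:
    """Draw n functions uniformly at random, without replacement, from a pool."""
    rng = random.Random(seed)
    return rng.sample(list(pool), n)


def time_inference(predict: Callable[[List[str]], List[float]],
                   samples: List[str], batch_size: int = 32) -> float:
    """Return wall-clock seconds needed to run `predict` over all samples in batches."""
    start = time.perf_counter()
    for i in range(0, len(samples), batch_size):
        predict(samples[i:i + batch_size])
    return time.perf_counter() - start


def relative_increase(new: float, baseline: float) -> float:
    """Percentage increase of `new` over `baseline` (e.g., seconds)."""
    return (new - baseline) / baseline * 100.0
```

Fixing the seed keeps the sample identical across models, so differences in elapsed time reflect the models rather than the inputs.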

5.2. RQ2: How Effective Is Vul-Mixer Compared to Resource-Efficient Baselines?

We first compare Vul-Mixer to the strong resource-efficient baselines trained and tested on the MLAVD task. Each model is trained and tested on three datasets: CodeXGLUE, D2A, and Draper VDISC. The models are tested on all three datasets regardless of the dataset on which they were trained. The corresponding

and

metrics are displayed in Table 5. The

and

are lower when trained on CodeXGLUE and D2A because the models have low generalization precision and Draper VDISC is an imbalanced dataset.
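Training on each of three datasets and testing on all three yields a 3×3 grid of scores per model. The sketch below shows one plausible way to compute and aggregate such a grid. It assumes, as a labeled simplification, that each cell is an average-precision score, that the overall figure is the mean of all nine cells, and that the generalization figure is the mean of the six off-diagonal cells (where the training and test datasets differ); the exact definitions behind the metrics in Table 5 may differ, and the `score` callable is hypothetical.

```python
from itertools import product
from statistics import mean
from typing import Callable, Dict, List, Tuple

DATASETS = ["CodeXGLUE", "D2A", "Draper VDISC"]


def average_precision(labels: List[int], scores: List[float]) -> float:
    """Mean of precision@k taken at each true positive, ranking by descending score.

    Ties keep their original order because Python's sort is stable.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    hits, precisions = 0, []
    for k, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precisions.append(hits / k)
    return mean(precisions) if precisions else 0.0


def evaluation_grid(score: Callable[[str, str], float]) -> Dict[Tuple[str, str], float]:
    """Evaluate score(train_dataset, test_dataset) for every dataset pair."""
    return {(tr, te): score(tr, te) for tr, te in product(DATASETS, DATASETS)}


def summarize(grid: Dict[Tuple[str, str], float]) -> Tuple[float, float]:
    """Return (mean over all cells, mean over cross-dataset cells only)."""
    overall = mean(grid.values())
    cross = mean(v for (tr, te), v in grid.items() if tr != te)
    return overall, cross
```

Separating the off-diagonal mean makes overfitting visible: a model that scores well only when the training and test datasets match will have a high diagonal but a low cross-dataset mean.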

Result 3: Vul-Mixer has a MAP that is 6–13% higher than resource-efficient baselines. Vul-Mixer consistently outperforms the resource-efficient baselines. This is most notable on CodeXGLUE where Vul-Mixer achieves a of – higher than the second-ranked TCA. When calculated over all training datasets, Vul-Mixer achieves a of which is higher than the next model.

Result 4: Vul-Mixer generalizes 7–14% better than resource-efficient baselines. Vul-Mixer also generalizes better than the resource-efficient baselines. It has the highest with a score of , which is higher than the next-highest . Note that the second-ranked models for and are not the same.

5.3. RQ3: How Effective Is Vul-Mixer Compared to MLAVD Baselines?

We next compare Vul-Mixer to baseline models from the existing MLAVD literature using the same process as RQ2. The results are shown in Table 6. The MLAVD baseline results vary across datasets. For instance, CodeBERT is the top overall baseline but ranks third for both

and

when trained on CodeXGLUE.

Result 5: Vul-Mixer has a MAP 97–120% that of MLAVD baselines. Vul-Mixer ranks third by amongst the MLAVD baselines, outperforming CoTexT and ReGVD. Because CodeBERT and LineVul share a common architecture, Vul-Mixer is effectively the second-ranked architecture overall. It retains of CodeBERT’s performance with a of .

Result 6: Vul-Mixer generalizes 98–121% as well as MLAVD baselines. Vul-Mixer effectively closes the gap between resource-efficient and MLAVD baselines with a that is of CodeBERT. There is only a absolute difference and a relative difference between their scores. Vul-Mixer also generalizes considerably better than both CoTexT and ReGVD.
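The percentages in Results 5 and 6 express one model's score as a share of another's, and the comparison with CodeBERT reports both an absolute and a relative difference. A minimal sketch of that arithmetic, with illustrative helper names and hypothetical scores rather than values from Table 6:

```python
from typing import Tuple


def retained(score: float, reference: float) -> float:
    """Score as a percentage of a reference model's score (e.g., '97% of CodeBERT')."""
    return score / reference * 100.0


def gaps(score: float, reference: float) -> Tuple[float, float]:
    """Absolute gap in score units and relative gap as a percentage of the reference."""
    absolute = reference - score
    return absolute, absolute / reference * 100.0
```

For instance, a hypothetical model scoring 0.45 against a reference scoring 0.50 retains about 90% of the reference's performance, an absolute gap of 0.05 and a relative gap of about 10%. Values above 100% simply mean the model outscores the reference.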

A visualization of the

and

scores with the number of parameters

is shown in Figure 3. Vul-Mixer is aligned with CodeBERT and LineVul for the top

and

scores and is separated from all other baselines. However, the size of the plotted points shows a clear separation in model size. In summary, Vul-Mixer is a resource-efficient model that is as effective as state-of-the-art MLAVD baselines.

5.4. RQ4: How Cost-Effective Is Vul-Mixer Compared to Baselines?

We now quantify the trade-off between efficiency and efficacy using cost-effectiveness and incremental cost-effectiveness ratios (ICER) [44]. The results are shown in Table 7.
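In cost-effectiveness analysis, the cost-effectiveness ratio (CER) divides an option's cost by its effectiveness, and the ICER divides the difference in cost between two options by their difference in effectiveness. The sketch below assumes cost is a resource measure such as parameter count or seconds and effectiveness is a score such as MAP; the specific measures behind each ratio in Table 7 may differ, and the `Model` type and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    cost: float           # assumed resource measure, e.g. parameters or seconds
    effectiveness: float  # assumed score, e.g. MAP


def cer(m: Model) -> float:
    """Cost per unit of effectiveness (lower is better)."""
    return m.cost / m.effectiveness


def icer(new: Model, ref: Model) -> float:
    """Incremental cost per unit of incremental effectiveness of `new` over `ref`."""
    d_cost = new.cost - ref.cost
    d_eff = new.effectiveness - ref.effectiveness
    if d_eff == 0:
        raise ValueError("ICER is undefined when effectiveness is equal")
    return d_cost / d_eff


def dominates(new: Model, ref: Model) -> bool:
    """True if `new` is no more costly, no less effective, and strictly better in one."""
    return (new.cost <= ref.cost and new.effectiveness >= ref.effectiveness
            and (new.cost < ref.cost or new.effectiveness > ref.effectiveness))
```

When one option is both cheaper and more effective, the ICER is negative and is conventionally reported as dominance rather than interpreted as a ratio.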

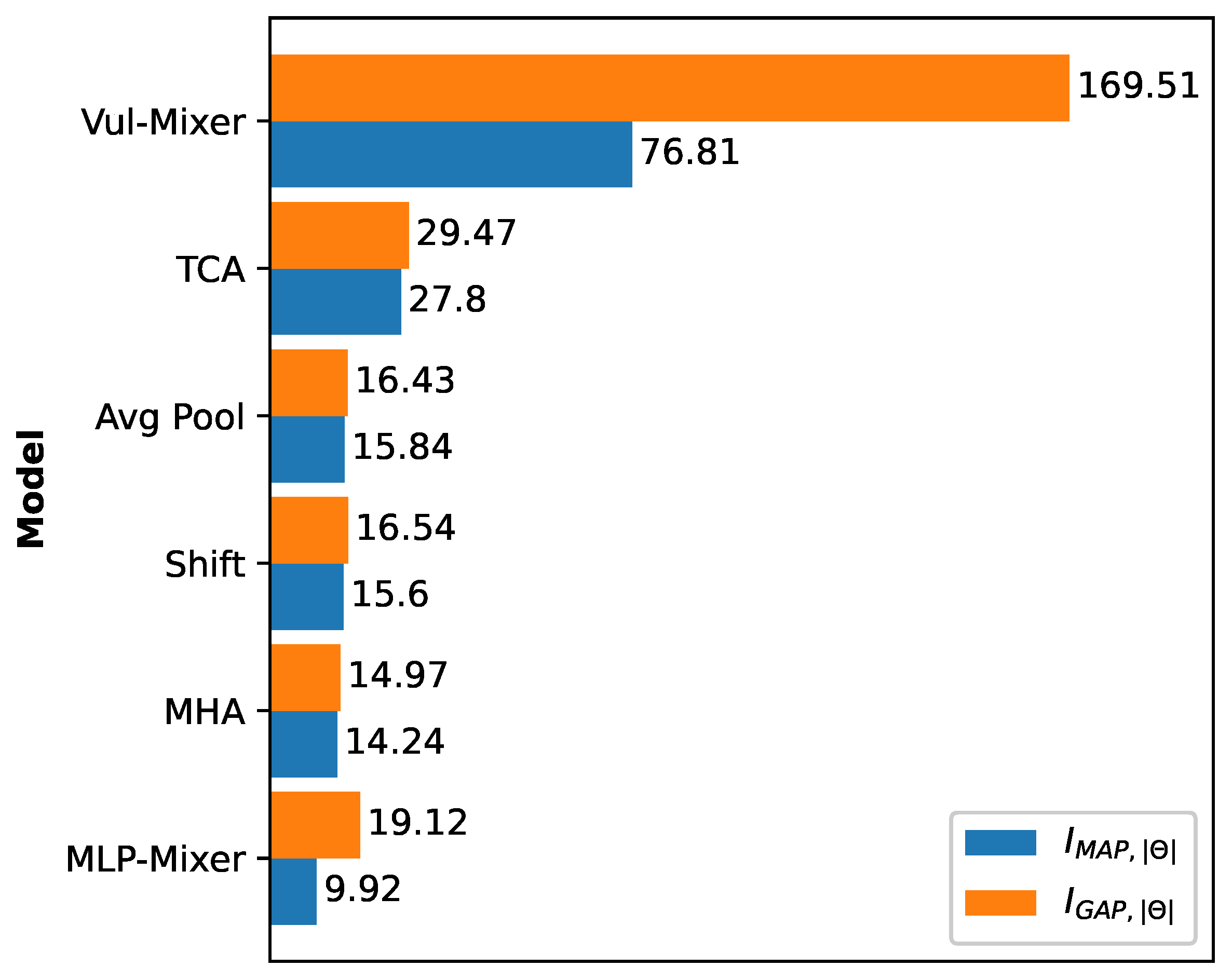

Result 7: Vul-Mixer is the most cost-effective MLAVD model. Across all cost-effectiveness ratios, Vul-Mixer obtains the top score. In other words, Vul-Mixer uses parameters and computational time more efficiently than all MLAVD and resource-efficient baselines. This suggests that the architecture is well designed to promote the learning of vulnerability-relevant features. While TCA is the second most efficient architecture, it has far lower

and

scores than Vul-Mixer. The comparative results are further visualized in Figure 4.

5.5. RQ5: Is Vul-Mixer Useful in Real-World Settings?

Finally, we evaluate whether Vul-Mixer is useful in a real-world setting. Rather than demonstrating Vul-Mixer’s ability to find a handful of vulnerabilities within a project, we quantify its performance in terms of

, precision, and recall on a collection of previously unseen vulnerabilities from the Big-Vul and DiverseVul datasets. Both are collected from high-quality sources that provide accurately labeled true positives. We use three pieces of data from Big-Vul: (1) the vulnerable functions before being fixed, (2) those functions after being fixed, and (3) the safe functions. Including functions both before and after fixing intentionally increases the difficulty of the dataset. The prediction results for Vul-Mixer and the MLAVD baselines on this formulation of Big-Vul are shown in Table 8.
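The Big-Vul formulation described above can be sketched as a labeled evaluation set scored with precision, recall, and F1. The text does not state the labels explicitly, so the sketch assumes that pre-fix functions are labeled vulnerable (1) while post-fix and safe functions are labeled non-vulnerable (0); the function names, the 0.5 decision threshold, and the `predict` callable are hypothetical.

```python
from typing import Callable, Dict, List, Tuple


def build_eval_set(before_fix: List[str], after_fix: List[str],
                   safe: List[str]) -> List[Tuple[str, int]]:
    """Label pre-fix functions 1; post-fix and safe functions 0 (assumed labeling)."""
    return ([(f, 1) for f in before_fix]
            + [(f, 0) for f in after_fix]
            + [(f, 0) for f in safe])


def precision_recall_f1(labels: List[int], preds: List[int]) -> Dict[str, float]:
    """Binary precision, recall, and F1, returning 0.0 where a ratio is undefined."""
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def evaluate(predict: Callable[[str], float], data: List[Tuple[str, int]],
             threshold: float = 0.5) -> Dict[str, float]:
    """Threshold the model's scores and compute the three metrics."""
    labels = [y for _, y in data]
    preds = [1 if predict(f) >= threshold else 0 for f, _ in data]
    return precision_recall_f1(labels, preds)
```

Under this labeling, a model that flags both the pre-fix and post-fix versions of the same function earns one true positive and one false positive, which is why including fixed functions makes the benchmark harder.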

Result 8: Vul-Mixer is state-of-the-art for generalizing to Big-Vul. Vul-Mixer obtains the highest score of which is a improvement over LineVul’s . This includes a increase in precision ( to ) and a increase in recall ( to ). Precision and recall can be too abstract to convey practical performance. To translate them into more interpretable numbers, assume a project contains 100 vulnerabilities. Vul-Mixer would have 62 true positives and 1588 false positives. LineVul would have 47 true positives and 1654 false positives. CodeBERT, which has the highest precision at , would have 26 true positives and 1526 false positives.

Result 9: Vul-Mixer is state-of-the-art for generalizing to DiverseVul. Although a direct comparison with Chen et al. [10] is not possible due to a lack of details, these metrics are in line with their results on the generalization of baselines trained on a combination of datasets slightly smaller than Draper VDISC. Vul-Mixer performs similarly on DiverseVul and Big-Vul, and it posts the top score, demonstrating the robustness of these results.

We suspect that the lower overall metrics are a continued symptom of biased datasets. However, the low cost of Vul-Mixer makes it particularly useful for prioritizing functions for more accurate but expensive vulnerability detection methods. Due to its high recall, it is well suited to the applications discussed in Section 2.2.

5.6. Error Analysis

To understand the errors that Vul-Mixer makes, we first perform a Kolmogorov–Smirnov test between the distribution of predictions generated from safe and vulnerable samples in the D2A validation set. With the null hypothesis that the vulnerable distribution is less than or equal to the safe distribution, we may reject the null with a p-value of . This demonstrates that Vul-Mixer separates safe and vulnerable code sufficiently to detect a distributional shift.
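The one-sided test above corresponds to `scipy.stats.ks_2samp(vulnerable, safe, alternative='less')`, whose alternative hypothesis is that the empirical CDF of the first sample lies below that of the second at some point, i.e., that vulnerable samples tend to receive larger prediction scores. To make the statistic explicit without a SciPy dependency, the sketch below computes the one-sided statistic directly and approximates the p-value with Smirnov's asymptotic formula, exp(−2·nm/(n+m)·D²). This approximation is only reasonable for large samples such as a full validation set; SciPy may use exact methods for small samples, so the two can differ there. Function names are illustrative.

```python
import math
from bisect import bisect_right
from typing import Sequence, Tuple


def ecdf(sorted_sample: Sequence[float], x: float) -> float:
    """Fraction of the (already sorted) sample that is <= x."""
    return bisect_right(sorted_sample, x) / len(sorted_sample)


def ks_one_sided(vulnerable: Sequence[float], safe: Sequence[float]) -> Tuple[float, float]:
    """One-sided two-sample Kolmogorov-Smirnov test.

    H0: vulnerable scores are stochastically <= safe scores.
    H1: vulnerable scores tend to be larger (their ECDF dips below the safe ECDF).
    Returns (D, asymptotic p-value).
    """
    v, s = sorted(vulnerable), sorted(safe)
    # The largest gap between two step functions occurs at a pooled sample point.
    d = max(0.0, max(ecdf(s, x) - ecdf(v, x) for x in v + s))
    n, m = len(v), len(s)
    p = math.exp(-2.0 * (n * m / (n + m)) * d * d)
    return d, min(1.0, p)
```

With real data, `vulnerable` and `safe` would hold the model's prediction scores for the vulnerable and safe samples of the validation set, respectively; swapping the arguments tests the opposite direction.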

Because the datasets contain limited information about the structure of the vulnerabilities in the code, it is difficult to determine with certainty which errors Vul-Mixer makes most or least frequently. Rather than cherry-picking favorable examples, we present the two worst predictions, one safe and one vulnerable, based on the discrepancy between the predicted and target values.
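Selecting the worst prediction for each class amounts to ranking samples by the absolute difference between predicted score and target label within that class. A minimal sketch, assuming scores in [0, 1] and binary targets; the `Prediction` record layout and function name are hypothetical:

```python
from typing import Dict, List, NamedTuple


class Prediction(NamedTuple):
    sample_id: str
    score: float  # model output in [0, 1]
    target: int   # 1 = vulnerable, 0 = safe


def worst_per_class(preds: List[Prediction]) -> Dict[int, Prediction]:
    """Return, for each target class, the sample with the largest |score - target|."""
    worst: Dict[int, Prediction] = {}
    for p in preds:
        err = abs(p.score - p.target)
        current = worst.get(p.target)
        if current is None or err > abs(current.score - current.target):
            worst[p.target] = p
    return worst
```

For a safe sample the error is simply its score, and for a vulnerable sample it is one minus its score, so the selection picks the most confidently wrong prediction in each class.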

Figure 5 depicts a function with a prediction score of

, whose ground truth label is nonetheless safe. It comes from the FFmpeg library and makes heavy use of buffers and bitwise operations. Although safe, it is certainly a complex function.

Similarly, Figure 6 illustrates a function with a prediction score of

that is actually vulnerable. This function relates to OpenSSL’s verification of an X.509 digital certificate; it allocates memory with OPENSSL_malloc but never releases it. In the context of this function, this counts as a memory leak. It is worth noting that this pattern is commonly used in the OpenSSL library, and similar functions remain in its current code base (https://github.com/openssl/openssl/blob/master/crypto/x509/x509_vpm.c#L82, accessed on 23 June 2024). Given that D2A labels data through differential analysis, it is debatable whether this function should truly be labeled as vulnerable.

6. Discussion: Threats to Validity

The primary threat to validity is whether vulnerability detection datasets are sufficiently diverse and unbiased for a model to learn the vulnerability detection task. For instance, Grahn et al. [34] found that MLAVD datasets tend toward code that uses registers and the extern keyword. Juliet, a common MLAVD dataset that was not used in this work, includes significant data augmentation applied before the train/test split. In addition, Zheng et al. [45] identified data issues such as duplicated data, incomplete information, and inaccurate labels. Biases present in a dataset may lead models to learn these misleading patterns rather than the underlying task. To mitigate these issues, we train and test each model across multiple datasets, ensuring a more comprehensive evaluation and reducing the impact of any single dataset’s biases. Previous research indicates that dataset biases are inconsistent and that a model’s ability to generalize to unseen datasets is a better indicator of its overall performance [16].

Furthermore, we perform a set of tests directly on known CVE vulnerabilities. We believe that the aggregate metrics provide a more accurate and unbiased representation of how each model performs on the task, independent of the dataset, programming language, or vulnerability type. Therefore, when trained on a sufficiently large and diverse dataset, we expect each model’s relative ranking to remain consistent.

Additionally, a lack of understanding of how the brain processes code (either on our part or in current research) could mean that this architecture is not well aligned with how the brain functions. While we argue that better-aligned models would perform better, the motivation of this paper was to draw inspiration from the brain’s functionality, not necessarily to emulate it. The results presented are not affected by how well-aligned the model is with brain processes.