Abstract

Generalized zero-shot learning (GZSL) aims to recognize both seen and unseen classes while training only on seen-class samples and auxiliary semantic descriptions. Recent state-of-the-art methods either infer unseen classes from semantic information or use generative models conditioned on semantic information to synthesize unseen-class features. Both approaches rely on correctly aligning visual and semantic features. However, they often overlook the inconsistency between the original visual features and the semantic attributes. In addition, cross-modal dataset bias means the visual features a model extracts or synthesizes may fail to match some semantic features, which can prevent it from aligning the two modalities properly. To address this issue, this paper proposes a GZSL framework that enhances visual–semantic consistency with a self-distillation and disentanglement network (SDDN). SDDN uses self-distillation and disentanglement to obtain semantically consistent, refined visual features and non-redundant semantic features. First, SDDN uses self-distillation to refine the visual features that the model extracts and synthesizes. Next, a disentanglement network disentangles and aligns the visual and semantic features to enhance their consistency. Finally, the consistent visual–semantic features are fused to jointly train a GZSL classifier. Extensive experiments show that the proposed method achieves competitive results on four challenging benchmark datasets (AWA2, CUB, FLO, and SUN).

1. Introduction

Deep learning models typically require large amounts of labeled training data, which incurs significant human and resource costs. Zero-Shot Learning (ZSL) mitigates this constraint by learning a mapping from an auxiliary (e.g., semantic) space to the visual space, which makes it possible to classify and recognize unseen classes [1]. However, the conventional ZSL setting is idealized. It assumes that the test set contains only samples from unseen classes, which does not reflect real-world scenarios. Generalized Zero-Shot Learning (GZSL) poses a more rigorous task in which the test set can contain samples from both seen and unseen classes, and this better matches practical needs.

Current research on GZSL centers on two main strategies. First, generative methods built on Generative Adversarial Networks (GANs) [2,3,4,5,6,7] learn a mapping from semantic attributes to visual features and then synthesize visual samples of unseen classes from their semantic information. Second, embedding-based methods [8,9,10,11,12,13,14] embed visual samples into a shared feature space that reflects the semantic similarity between classes. The model can then classify using the structure of the embedding space with few or no samples. Both strategies align visual and semantic features, through generation or embedding, to tackle the challenges of ZSL.

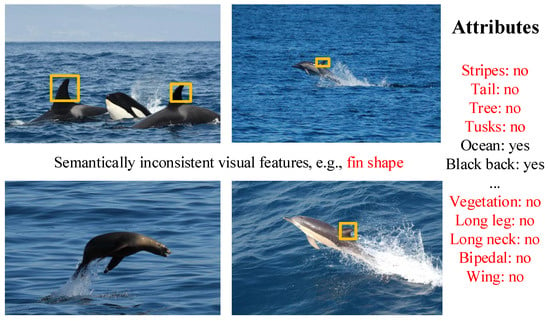

However, both strategies share a weakness: they often overlook potential inconsistencies between the visual and semantic features being aligned. As illustrated in Figure 1, certain visual features, such as “fish fin”, are discriminative for distinguishing image samples in the visual modality. These features are not covered by the manually annotated semantic attributes, so we term them semantically inconsistent visual features. Conversely, the semantic modality may contain redundant attributes that are inconsistent with the visual features. For instance, the classes “dolphin”, “seal”, and “killer whales” in Figure 1 share several redundant attributes unrelated to their visual features, such as “stripe:no”, “tree:no”, “vegetation:no”, “long legs:no”, and “long neck:no”. Most GZSL methods ignore these inconsistent features and forcibly align them. This can bias the visual–semantic alignment and undermine the recognition of unseen classes. Moreover, current GZSL approaches frequently use models pre-trained on ImageNet to extract visual features and train generative models to synthesize visual features of unseen classes. Because of cross-modal dataset bias [3], the extracted and synthesized visual features may not be sufficiently refined and may drift away from the features needed for ZSL tasks. This worsens the visual–semantic inconsistency.

Figure 1.

Illustration of visual features inconsistent with annotated attributes (highlighted in yellow boxes) and redundant annotated attributes inconsistent with visual features (highlighted in red text).

We argue that these issues can be alleviated in two ways. The first is to extract and synthesize refined visual features that are more semantically consistent. The second is to separate semantically consistent visual features and visually consistent, non-redundant semantic features from the raw visual–semantic features. Hence, this paper proposes a GZSL framework that enhances visual–semantic consistency using a self-distillation and disentanglement network. Specifically, we first devise a self-distillation module that applies self-distillation to both the feature extraction model and the generative model of generative GZSL. Both models then learn refined intermediate features and soft-label knowledge from an auxiliary self-teacher network, which encourages them to extract and synthesize refined visual features. In addition, we devise a disentanglement network for the visual and semantic modalities. In the visual modality, for example, the visual disentanglement encoder projects visual features into a semantically consistent latent representation and a semantically irrelevant latent representation. The visual and semantic features are cross-reconstructed to keep the semantically consistent representation aligned with the semantic features. A semantic relation matching method also computes a compatibility score between this representation and the semantic information to guide its learning. Furthermore, a latent representation independence method enforces independence between the two latent representations. Finally, the disentanglement network yields consistent visual–semantic features, which are fused to jointly train a GZSL classifier.

In summary, the contributions of this paper are as follows:

- We observe that most GZSL models do not handle visual–semantic inconsistent features and align them directly, which may lead to alignment bias. We propose to enhance visual–semantic consistency by refining visual features and disentangling the original visual–semantic features.

- We design a self-distillation embedding module that generates soft labels through an auxiliary self-teacher network. It uses soft-label distillation and feature map distillation to refine the original visual features of seen classes and the unseen-class visual features synthesized by the generator, thereby enhancing the semantic consistency of the visual features.

- We propose a disentanglement network that encodes visual and semantic features into latent representations. Through semantic relation matching and latent representation independence, it separates visual–semantic consistent features from the original features and so enhances visual–semantic consistency.

- Extensive experiments on four GZSL benchmark datasets show that our model separates refined, consistent visual–semantic features from the original features. This alleviates the alignment bias caused by visual–semantic inconsistency and improves GZSL performance.

2. Related Work

2.1. Generative-Based Generalized Zero-Shot Learning

In recent years, numerous studies have used generative models to improve GZSL. Generative GZSL commonly uses GANs or VAEs to synthesize visual features for unseen classes. These features are then combined with the original visual features of seen classes to train classifiers. For example, Narayan et al. [15] combined VAEs and GANs to improve the quality of synthesized unseen-class features, adding a feedback module that regulates the generator’s output and reduces ambiguity between classes. Zhang et al. [16] combined generative and embedding-based models by projecting real and synthesized samples into an embedding space for classification. The result is a hybrid ZSL framework that addresses data imbalance. Li et al. [17] integrated a Transformer with a VAE and a GAN. Their method exploits the rich data representation of the VAE and the diversity of GAN-generated data to mitigate dataset diversity bias, and uses the Transformer to enhance semantic consistency. DGCNet [18] introduced a Dual Uncertainty Guided Cycle-Consistent Network. It models the relationship between visual and semantic features through a cycle-consistent embedding framework and dual uncertainty-aware modules, addressing alignment shift and improving the model’s discriminability and adaptability. However, these methods often ignore the semantically inconsistent visual features and redundant semantic attributes present in the original visual–semantic features, which may hinder correct visual–semantic alignment. In contrast, our method first disentangles the visual–semantic consistent features and only then aligns them, which improves the model’s accuracy.

2.2. Knowledge Distillation

Knowledge distillation [19] is a model compression technique. It reduces the size and computational cost of a model by transferring knowledge from a complex neural network (the teacher network) to a smaller one (the student network). Knowledge distillation was originally formulated as training the student network to imitate the softened output distribution of the teacher network [20]. Subsequent research introduced intermediate-layer distillation, in which the student network learns from the convolutional layers of the teacher network with feature-map-level locality [21,22,23,24], or from its penultimate layer [25,26,27,28,29]. However, these methods require pre-training a complex teacher network, which consumes substantial time and resources. Some recent studies have proposed self-knowledge distillation [30,31], which improves the training of the student network by leveraging its own knowledge without an additional teacher network. For instance, Zhang et al. [32] divided the network into several parts and compressed the knowledge of the deep layers into the shallow layers. DLB [33] distills with instant soft targets generated during the previous training iteration, improving performance without altering the model structure. FRSKD [34] introduces an auxiliary self-teacher network that transfers refined knowledge to the student’s classifier network. It performs self-knowledge distillation using both soft labels and feature map distillation. This paper adopts the idea of FRSKD to construct a self-distillation embedding module that refines the original seen visual features and the unseen visual features synthesized by the generator.

3. Materials and Methods

3.1. Problem Definition

In GZSL, the dataset comprises visual features $X$, semantic attributes $C$, and labels $Y$, each of which can be divided into seen classes $S$ and unseen classes $U$. Specifically, the visual feature set is $X = X^S \cup X^U$ and the corresponding label set is $Y = Y^S \cup Y^U$, where $Y^S \cap Y^U = \emptyset$. The semantic attributes are $C = C^S \cup C^U$. The $i$th seen and unseen visual features are denoted $x_i^s \in X^S$ and $x_i^u \in X^U$. Their labels are $y_i^s \in Y^S$ and $y_i^u \in Y^U$, and their semantic features are $c_i^s \in C^S$ and $c_i^u \in C^U$. The training set $D_{tr} = \{(x_i^s, y_i^s, c_i^s)\}$ contains only seen-class samples, while the test set contains samples from both seen and unseen classes. The objective of GZSL is to learn a classifier $f_{GZSL}: X \rightarrow Y^S \cup Y^U$.
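The sketch below shows one common way a GZSL benchmark is organized. Training uses only seen-class samples. The test pool mixes held-out seen-class samples with all unseen-class samples. The helper `make_gzsl_split`, its parameters, and the toy data are hypothetical illustrations, not part of SDDN.

```python
import random

def make_gzsl_split(samples, unseen_classes, seen_test_fraction=0.2, seed=0):
    """Hypothetical GZSL split of (feature, label) pairs.

    Training keeps seen-class samples only; the test pool holds out part of
    the seen-class samples and adds every unseen-class sample.
    """
    unseen = set(unseen_classes)
    seen_samples = [s for s in samples if s[1] not in unseen]
    unseen_samples = [s for s in samples if s[1] in unseen]

    rng = random.Random(seed)
    rng.shuffle(seen_samples)
    n_seen_test = int(len(seen_samples) * seen_test_fraction)
    seen_test, train_set = seen_samples[:n_seen_test], seen_samples[n_seen_test:]
    return train_set, seen_test + unseen_samples

# Toy data: 50 one-dimensional "features" spread over 5 classes; classes 3 and 4 are unseen.
samples = [([0.1 * i], i % 5) for i in range(50)]
train_set, test_set = make_gzsl_split(samples, unseen_classes=[3, 4])
```

No unseen-class label ever reaches `train_set`, while `test_set` covers both label groups. This mirrors the constraint that $D_{tr}$ contains only seen classes.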

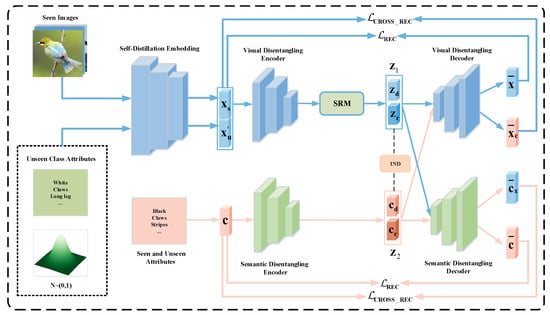

3.2. Overall Framework

The SDDN architecture consists of two key modules, as depicted in Figure 2. The first, the self-distillation embedding module, uses feature fusion and an auxiliary self-teacher network to transfer refined visual features to the student network. It then applies soft-label distillation and feature map distillation so that the generative model also produces refined features, thereby enhancing visual–semantic consistency. The second, the disentanglement network, uses the semantic relation matching (SRM) method and the latent representation independence (IND) method to guide the visual–semantic disentangled autoencoder. The autoencoder separates semantically consistent visual features and non-redundant semantic features, further strengthening visual–semantic consistency.

Figure 2.

The framework of our SDDN model.

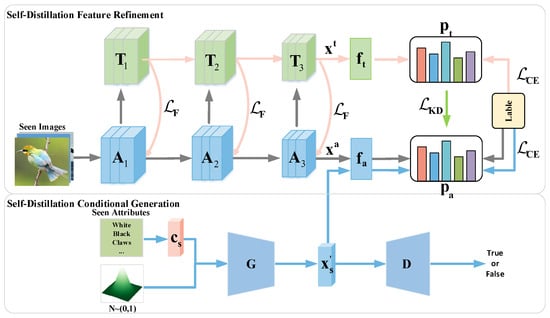

3.3. Self-Distillation Embedding Module

We designed the self-distillation embedding (SDE) module, shown in Figure 3, to refine two kinds of visual features and so improve their semantic consistency: the seen visual features extracted by the pre-trained ResNet101 [35] and the unseen visual features synthesized by the generator. The SDE module comprises a self-distillation feature refinement (SDFR) module and a self-distillation conditional generation (SDCG) module. The SDFR module combines top-down and bottom-up feature fusion [36] to guide the auxiliary self-teacher network in generating refined intermediate feature maps and soft labels. Feature map distillation and soft-label distillation then refine the visual features extracted by the student network. The SDCG module consists of a generator and a discriminator. It trains the generator adversarially to synthesize visual features and uses feature map distillation and soft-label distillation to refine the generated features.

Figure 3.

Architecture of the self-distillation embedding module. The figure shows the blocks of the student network and of the auxiliary self-teacher network, the generator G, and the discriminator D. It also shows the classifier layers of the auxiliary self-teacher network and of the student network, and the soft labels output by each of these two networks.

3.3.1. Auxiliary Self-Teacher Network

To refine the visual features extracted by the pre-trained ResNet101 and pass them to the generator of the SDCG module, we devised an auxiliary self-teacher network based on the architecture proposed by Ji et al. [34]. This network, shown in green in Figure 3, also builds on the pre-trained ResNet101. We use top-down and bottom-up feature fusion [36] to guide it in generating refined intermediate feature maps. The layer preceding its classification layer outputs the final extracted visual features, and the classification layer outputs soft labels. Deep neural networks learn representations at multiple levels, so the outputs of both the intermediate layers and the output layer can contribute to training the student network. We use ResNet101 (shown in blue in Figure 3) as the student network A. We enrich the visual features it extracts with the soft labels output by the self-teacher network and with the refined feature maps of the self-teacher's intermediate layers. The soft labels of the auxiliary self-teacher network are generated as follows:

Here, T is the temperature parameter [20], typically set to 1; higher values of T produce softer class probability distributions. The softmax is applied to the output of the classifier of the auxiliary self-teacher network. The student network learns from these soft labels through the KL divergence, expressed as
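The soft-label distillation step can be sketched in plain Python, with lists standing in for tensors so the sketch runs without PyTorch. This is a minimal sketch under stated assumptions, not the authors' code. It uses the conventional KL direction, with the self-teacher distribution as the target. It also omits the T² scaling used by Hinton et al., because the text does not mention it. The function names are hypothetical.

```python
import math

def softmax(logits, T=1.0):
    """Temperature-scaled softmax; larger T gives a softer distribution."""
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as lists."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def soft_label_distillation_loss(teacher_logits, student_logits, T=1.0):
    """KL from the self-teacher's soft labels (target) to the student's soft labels."""
    p_teacher = softmax(teacher_logits, T)
    p_student = softmax(student_logits, T)
    return kl_divergence(p_teacher, p_student)
```

The loss is zero when the student reproduces the self-teacher's logits and grows as the two softened distributions diverge. Raising T flattens both distributions, so more of the teacher's information about non-target classes is transferred.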

Each block of the student network outputs an intermediate feature map. The student network learns the refined intermediate features produced by the corresponding blocks of the auxiliary self-teacher network through feature distillation. This is implemented by a feature distillation loss defined as follows:

where the channel pooling function is applied to the feature maps before they are compared. In addition, class predictions are made on the refined visual features, and the cross-entropy loss between the predictions and the true labels is minimized to keep the refined features discriminative. Finally, the overall loss for refining visual features with the auxiliary self-teacher network is defined as
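A minimal sketch of the feature map distillation term follows. In the spirit of FRSKD, it assumes that channel pooling averages a feature map over its channels and then L2-normalizes the result. It also assumes that the loss sums the L2 distances between pooled self-teacher and student maps over blocks. The text does not give SDDN's exact pooling and weighting. Feature maps are plain lists of channels, each flattened over its spatial positions, and all names are hypothetical.

```python
import math

def channel_pool(feature_map):
    """Average a C x (H*W) feature map over channels, then L2-normalize.

    Assumption: channel-wise mean followed by L2 normalization (FRSKD-style).
    """
    num_channels = len(feature_map)
    pooled = [sum(ch[k] for ch in feature_map) / num_channels
              for k in range(len(feature_map[0]))]
    norm = math.sqrt(sum(v * v for v in pooled)) or 1.0  # avoid division by zero
    return [v / norm for v in pooled]

def feature_distillation_loss(teacher_maps, student_maps):
    """Sum over blocks of the L2 distance between pooled teacher and student maps."""
    loss = 0.0
    for t_map, s_map in zip(teacher_maps, student_maps):
        t, s = channel_pool(t_map), channel_pool(s_map)
        loss += math.sqrt(sum((a - b) ** 2 for a, b in zip(t, s)))
    return loss

teacher_map = [[1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0]]  # 2 channels x 4 positions
student_map = [[4.0, 3.0, 2.0, 1.0]]                        # 1 channel x 4 positions
```

Pooling over channels lets maps with different channel counts be compared as long as their spatial sizes match.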

3.3.2. Self-Distillation Conditional Generation Module

To synthesize refined visual features for unseen classes, which have no training samples in ZSL, we devised a self-distillation conditional generation module. It uses a conditional generator G and a discriminator D to form a generative adversarial network. During training, Gaussian noise and the semantic descriptors of seen classes are fed to G to synthesize fake seen-class visual features. The synthesized features are then fed into the trained student network classifier to obtain their soft labels. To contrast them with these fake features, we call the seen visual features obtained by the student network in Figure 3 the real seen visual features. We minimize the loss between the soft labels of the synthesized features and those of the real features, so that the synthesized features stay consistent with the real visual features. The loss function is defined as follows:

Simultaneously, to keep the synthesized visual features discriminative, we compute the cross-entropy loss between their predictions and the real labels. In addition, the discriminator D learns to distinguish real seen-class samples from synthesized seen-class samples. The adversarial loss is optimized so that the features synthesized by the generator approach the real visual features.

Here, the penalty coefficient controls the weight of the penalty term. The loss function for the SDCG module is as follows:

Finally, the overall loss of the SDE module is
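The mention of a penalty coefficient suggests a WGAN-style critic with a gradient penalty (WGAN-GP). Assuming that form, the critic loss is E[D(fake)] − E[D(real)] plus λ times the mean of (‖∇D(x̂)‖ − 1)², evaluated at random interpolates x̂ between real and fake features. The sketch below implements that loss for an unconditional critic. It estimates the gradient with central finite differences so it runs without PyTorch. In practice D would also receive the semantic descriptor and the gradient would come from autograd. The default λ = 10 is the common WGAN-GP choice and is an assumption here, as are all names.

```python
import math
import random

def numerical_grad(f, x, eps=1e-5):
    """Central finite-difference gradient of a scalar function f at point x."""
    grad = []
    for i in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[i] += eps
        x_minus[i] -= eps
        grad.append((f(x_plus) - f(x_minus)) / (2 * eps))
    return grad

def wgan_gp_critic_loss(critic, real, fake, lam=10.0, rng=None):
    """Critic loss: mean D(fake) - mean D(real) + lam * mean (||grad D(x_hat)|| - 1)^2."""
    rng = rng or random.Random(0)
    n = len(real)
    wasserstein = sum(critic(x) for x in fake) / n - sum(critic(x) for x in real) / n
    penalty = 0.0
    for x_real, x_fake in zip(real, fake):
        alpha = rng.random()  # interpolation weight drawn from U(0, 1)
        x_hat = [alpha * r + (1 - alpha) * f for r, f in zip(x_real, x_fake)]
        grad = numerical_grad(critic, x_hat)
        penalty += (math.sqrt(sum(g * g for g in grad)) - 1.0) ** 2
    return wasserstein + lam * penalty / n

# A linear critic with unit-norm weights has gradient norm 1, so the penalty vanishes.
loss_unit = wgan_gp_critic_loss(lambda v: 0.6 * v[0] + 0.8 * v[1], [[1.0, 0.0]], [[0.0, 0.0]])
# Doubling the weight norm adds a penalty of lam * (2 - 1)^2 = 10.
loss_steep = wgan_gp_critic_loss(lambda v: 2.0 * v[0], [[1.0, 0.0]], [[0.0, 0.0]])
```

The generator would then minimize −E[D(fake)] together with the cross-entropy and soft-label consistency terms described above. The text does not state how SDDN weights these terms.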

3.4. Disentanglement Network

To further strengthen visual–semantic consistency, we propose a disentanglement network. It uses the semantic relation matching method and the latent representation independence method to guide the visual–semantic disentangled autoencoder. The autoencoder separates semantically consistent visual features and non-redundant semantic features from the original data, and the network aligns these features through cross-reconstruction to further strengthen their consistency.

3.4.1. Visual–Semantic Disentangled Autoencoder

The visual–semantic disentangled autoencoder (VSDA) comprises two parallel variational autoencoders that process the visual and semantic modalities separately. Each variational autoencoder consists of a disentangled encoder, which maps the feature space to the latent space, and a decoder, which maps the latent space back to the feature space. The main role of the VSDA is to learn effective latent representations of the visual and semantic features and to split each of them along the column dimension into two parts. Specifically, the visual disentanglement encoder encodes the visual features into a visual latent representation, and the semantic disentanglement encoder encodes the semantic features c into a semantic latent representation. Taking the visual latent representation as an example, its rows correspond to the n samples in a batch and its columns to the m latent dimensions. Given a split index l between 0 and m, columns 0 to l form the first part and the remaining columns l to m form the second part. The semantic latent representation is split in the same way. This procedure can be expressed as
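The column-wise split can be sketched as follows. The sketch assumes the usual VAE parameterization: the encoder outputs a mean and a log-variance per latent dimension, and a latent vector is sampled with the reparameterization trick before being split. The text does not say whether SDDN splits the sampled vector or the mean. The sizes n = 4, m = 6, and l = 4 are arbitrary, and all names are hypothetical.

```python
import math
import random

def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), elementwise."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

def split_latent(z_batch, l):
    """Split an n x m latent batch column-wise into its first l and last m - l columns."""
    m = len(z_batch[0])
    if not 0 < l < m:
        raise ValueError("split index l must satisfy 0 < l < m")
    first_part = [row[:l] for row in z_batch]    # intended to become semantically consistent
    second_part = [row[l:] for row in z_batch]   # intended to become semantically irrelevant
    return first_part, second_part

rng = random.Random(0)
mu_batch = [[0.0] * 6 for _ in range(4)]        # n = 4 samples, m = 6 latent dims
logvar_batch = [[0.0] * 6 for _ in range(4)]
z = [reparameterize(mu, lv, rng) for mu, lv in zip(mu_batch, logvar_batch)]
z_first, z_second = split_latent(z, l=4)
```

The split itself carries no meaning. The losses introduced afterward are what push each part toward its intended role.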

Note that in the visual modality, at this stage, the two parts are merely a partition of the visual latent representation along its columns. The semantic relation matching method (Section 3.4.2) and the latent representation independence method (Section 3.4.3) are then needed to make the first part semantically consistent and, at the same time, the second part semantically irrelevant.

Minimizing the KL divergence between the latent variable distribution and a predefined prior distribution allows the VSDA to learn effective latent representations. We therefore minimize this KL divergence for both the visual and the semantic latent representations produced by the VSDA, which can be formulated as
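Assume a diagonal Gaussian posterior and a standard normal prior N(0, I), the usual VAE choice; the text only says "a predefined prior". Under that assumption the KL term has the closed form sketched below. The function name is hypothetical.

```python
import math

def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over latent dimensions.

    Closed form: -0.5 * sum(1 + logvar - mu^2 - exp(logvar)).
    """
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))
```

The term is zero only when the posterior already equals the prior (zero mean, unit variance). It grows as the mean moves away from zero or the variance departs from one.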

To limit the information lost in encoding, the disentangled decoders reconstruct features from the latent representations, and the loss between the reconstructed and real features is minimized. Specifically, the visual disentangled decoder reconstructs visual features from the visual latent representation, and the semantic disentangled decoder reconstructs semantic features from the semantic latent representation. We then compute the loss between the reconstructed and real visual features x and the loss between the reconstructed and real semantic features c. Their sum is the total reconstruction loss, which can be expressed as

Simultaneously, we design a cross-modal reconstruction so that the model learns the association between visual and semantic features and the gap between the modalities shrinks. Specifically, the semantic disentangled decoder reconstructs semantic features from the visual latent representation, and the visual disentangled decoder reconstructs visual features from the semantic latent representation. We then minimize the loss between these cross-reconstructed features and the original semantic and visual features c and x, which can be formulated as follows:

Here, the mean squared error (MSE) is used to compute the reconstruction loss between the original features and the reconstructed features. Finally, the overall loss of the VSDA is
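The within-modality and cross-modal reconstruction terms can be sketched with MSE as below. The decoders are hypothetical callables. For simplicity, the full latent vectors are fed to the decoders; the text does not state which latent parts SDDN uses for cross-reconstruction. How these terms are weighted against the KL term is also left open.

```python
def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def vsda_reconstruction_losses(x, c, z_v, z_s, dec_v, dec_s):
    """Return (within-modality loss, cross-modal loss) for one sample.

    dec_v maps a latent vector to visual space and dec_s maps it to semantic space.
    """
    rec = mse(dec_v(z_v), x) + mse(dec_s(z_s), c)    # x -> z_v -> x and c -> z_s -> c
    cross = mse(dec_s(z_v), c) + mse(dec_v(z_s), x)  # z_v -> c and z_s -> x
    return rec, cross

def toy_visual_decoder(z):
    """Hypothetical decoder: visual space is twice the latent vector."""
    return [2.0 * v for v in z]

def toy_semantic_decoder(z):
    """Hypothetical decoder: semantic space equals the latent vector."""
    return list(z)

# A semantic latent stuck at zero fails both its own and the cross-modal reconstruction.
rec, cross = vsda_reconstruction_losses([2.0, 4.0], [1.0, 2.0], [1.0, 2.0], [0.0, 0.0],
                                        toy_visual_decoder, toy_semantic_decoder)
```

The cross-modal term is what ties the two latent spaces together. Each latent must decode into its own modality and also into the other one.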

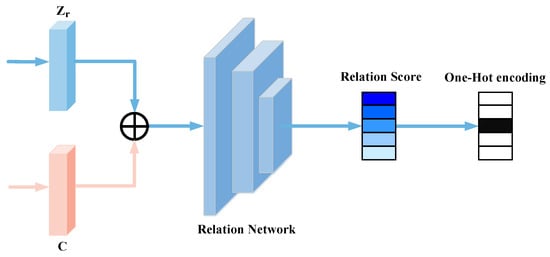

3.4.2. Semantic Relation Matching

We designed the semantic relation matching (SRM) method to guide the visual disentangled encoder to place semantically consistent information in the first part of the visual latent representation. It introduces a relation network (RN) [37] to assess how well visual and semantic features match, as illustrated in Figure 4. Instead of relying on a fixed distance metric, the RN uses a neural network to learn a relation score between two inputs, which measures their degree of matching. We therefore use the RN to constrain the first part of the visual latent representation to match the semantic features c. This encourages the visual disentangled encoder to encode the semantically consistent visual features of the original features into the first part and the semantically inconsistent visual features into the second part. In the SRM method, we first concatenate the first part with each unique semantic feature c in the batch and compute their compatibility score CS. When the two share the same class label, the match succeeds and CS is set to 1; otherwise the match fails and CS is set to 0. This process can be formulated as

Figure 4.

Architecture of the semantic relation matching model.

Here, t indexes the semantically consistent representations and b indexes the unique semantic features in the training batch, and CS compares their class labels. Using CS as defined in Equation (13), an RN with a sigmoid activation learns a compatibility score between 0 and 1 for each (representation, semantic feature) pair. The following loss function is then minimized:

Here, B denotes the training batch size and n the number of unique semantic features in the batch. For each training batch, the loss is the mean squared error between the relation score of every pair and its ground-truth CS. Minimizing it encourages the first part of the visual latent representation to be semantically consistent.
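A sketch of the SRM targets and loss follows. It assumes that the loss averages the squared errors over all B × n pairs and that the relation network takes the concatenated pair as input. `toy_relation`, a fixed linear layer followed by a sigmoid, is a hypothetical stand-in for the trained RN.

```python
import math

def compatibility_targets(batch_labels, class_labels):
    """Ground-truth CS: 1.0 when sample t and semantic feature b share a class, else 0.0."""
    return [[1.0 if y_t == y_b else 0.0 for y_b in class_labels] for y_t in batch_labels]

def srm_loss(z_batch, batch_labels, class_semantics, class_labels, relation):
    """Mean squared error between relation scores and CS over all B x n pairs."""
    targets = compatibility_targets(batch_labels, class_labels)
    total = 0.0
    for t, z in enumerate(z_batch):
        for b, c in enumerate(class_semantics):
            score = relation(z + c)  # relation network applied to the concatenated pair
            total += (score - targets[t][b]) ** 2
    return total / (len(z_batch) * len(class_semantics))

def toy_relation(pair, weights=None):
    """Hypothetical RN stand-in: a fixed linear layer followed by a sigmoid."""
    weights = weights if weights is not None else [0.0] * len(pair)
    s = sum(w * v for w, v in zip(weights, pair))
    return 1.0 / (1.0 + math.exp(-s))

# B = 3 latent vectors (labels 0, 1, 0) scored against n = 2 unique class semantics.
z_batch = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
class_semantics = [[1.0, 0.0], [0.0, 1.0]]
loss = srm_loss(z_batch, [0, 1, 0], class_semantics, [0, 1], toy_relation)
```

With all-zero weights the toy RN outputs 0.5 for every pair, so every squared error is 0.25. Training the RN and the encoder would drive matching pairs toward 1 and the rest toward 0.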

3.4.3. Independence between Latent Representations

In Section 3.4.2, the SRM method guided the visual disentangled encoder to make the first part of the visual latent representation semantically consistent and the second part semantically irrelevant. We devised the latent representation independence (IND) method with two goals. The first is to decouple these two parts further. The second is to encourage the semantic disentangled encoder to encode visually consistent and visually irrelevant semantic features into the first and second parts of the semantic latent representation, respectively. From a probabilistic perspective, the two parts of the visual latent representation can be regarded as drawn from different conditional distributions. The same holds for the two parts of the semantic latent representation:

where each part has its own conditional distribution within its modality. The independence between the two visual parts, the independence between the two semantic parts, and their overall independence can therefore be expressed as

which involves the joint conditional probability of the two visual parts; the semantic modality is treated analogously. Taking the visual modality as an example, the case in which the two parts are dependent is distinguished from the case in which they are independent, and each case is denoted separately. The visual independence term can therefore be represented as

We introduce a discriminator to approximate the probability that the two parts are dependent; the independence term can thus be approximated by the following formula:

When training the discriminator, we randomly shuffle the two parts across the samples of each training batch and concatenate them, which yields pairs whose parts are independent. The loss of the discriminator on the visual and semantic modalities is then given by
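The pair construction and discriminator loss can be sketched as follows. The sketch assumes that the discriminator outputs the probability that a concatenated pair is dependent and is trained with binary cross-entropy. It further assumes that the independence term is estimated with the density-ratio trick, E[log D/(1 − D)] over dependent pairs, which approximates the KL divergence between the joint distribution and the product of marginals. SDDN's exact formula is not reproduced here, and all names are hypothetical.

```python
import math
import random

def make_dependent_and_independent_pairs(z_first, z_second, rng):
    """Concatenate latent parts into dependent (same-sample) and shuffled (independent) pairs."""
    dependent = [a + b for a, b in zip(z_first, z_second)]
    # Shuffling both parts across the batch breaks the per-sample pairing
    # while leaving each part's marginal distribution unchanged.
    perm_first = list(range(len(z_first)))
    perm_second = list(range(len(z_second)))
    rng.shuffle(perm_first)
    rng.shuffle(perm_second)
    independent = [z_first[i] + z_second[j] for i, j in zip(perm_first, perm_second)]
    return dependent, independent

def discriminator_bce(d_dependent, d_independent, eps=1e-12):
    """BCE loss: D should output 1 on dependent pairs and 0 on shuffled pairs."""
    loss_dep = -sum(math.log(p + eps) for p in d_dependent) / len(d_dependent)
    loss_ind = -sum(math.log(1.0 - p + eps) for p in d_independent) / len(d_independent)
    return loss_dep + loss_ind

def dependence_estimate(d_dependent, eps=1e-12):
    """Density-ratio estimate of KL(joint || product of marginals): mean log(D / (1 - D))."""
    return sum(math.log((p + eps) / (1.0 - p + eps)) for p in d_dependent) / len(d_dependent)

rng = random.Random(0)
dependent, independent = make_dependent_and_independent_pairs(
    [[1.0], [2.0], [3.0]], [[10.0], [20.0], [30.0]], rng)
```

If the discriminator cannot tell the two kinds of pairs apart, it outputs 0.5 everywhere and the dependence estimate drops to zero. This is the state the encoders are pushed toward.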

In summary, the total loss of our SDDN framework is formulated as

3.5. Classification

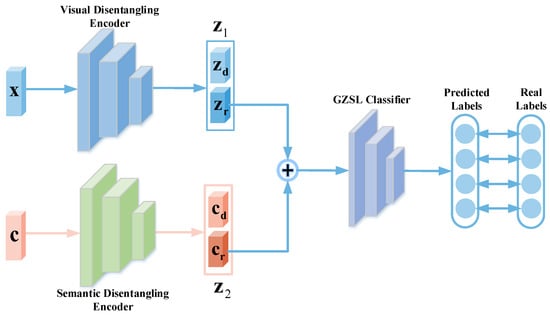

To train the classifier, the seen visual features refined by the auxiliary self-teacher network and the unseen visual features synthesized by the SDCG module are combined into a single feature set x. The trained visual disentangled encoder maps x to a semantically consistent and a semantically irrelevant latent representation. Similarly, the semantic attribute set c of both seen and unseen classes is fed into the trained semantic disentangled encoder, which produces a visually consistent and a visually irrelevant latent representation. The semantically consistent visual representation and the visually consistent semantic representation are then fused and fed into a softmax-based GZSL classifier for category prediction. The classifier is trained by minimizing the loss between the real and predicted labels. The whole process is shown in Figure 5.
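The final classifier can be sketched as a linear softmax layer over fused features. The sketch assumes that fusion is plain concatenation of one semantically consistent visual latent vector and one visually consistent semantic latent vector. The text does not specify the fusion operator, and all names are hypothetical.

```python
import math

def fuse(z_visual, z_semantic):
    """Assumed fusion operator: concatenate the two consistent latent vectors."""
    return z_visual + z_semantic

def linear_logits(features, weights, bias):
    """Linear layer of a softmax classifier: one weight row and one bias per class."""
    return [sum(w * f for w, f in zip(row, features)) + b for row, b in zip(weights, bias)]

def softmax_cross_entropy(logits, label):
    """Cross-entropy of softmax(logits) against an integer label, via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]

features = fuse([1.0, 0.0], [0.0, 1.0])
num_classes = 3  # toy label space covering seen and unseen classes
logits = linear_logits(features, [[0.0] * 4 for _ in range(num_classes)], [0.0] * num_classes)
```

An untrained classifier with zero weights assigns equal probability to every class, so its loss on any label is log(3).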

Figure 5.

Scheme of classification.

4. Experiments

4.1. Datasets

We evaluated SDDN on four publicly available benchmark datasets: Caltech-UCSD Birds-200-2011 (CUB) [38], Animals with Attributes2 (AWA2) [39], SUN Attribute Dataset (SUN) [40], and Oxford Flowers (FLO) [41]. Their statistics are summarized in Table 1. CUB is a fine-grained dataset of 11,788 images from 200 bird species, with 150 seen and 50 unseen classes; each image is annotated with 312-dimensional attributes. FLO is another fine-grained dataset of flower images, with 8189 images from 102 classes (82 seen and 20 unseen); its semantic descriptions are 1024-dimensional. SUN is a fine-grained scene dataset with 14,340 images from 717 classes (645 seen and 72 unseen). Each scene is described by 102-dimensional attributes covering characteristics such as lighting conditions, weather conditions, and terrain. AWA2 is a coarse-grained dataset of 37,322 images from 50 animal classes (40 seen and 10 unseen), all of them mammals; each class is described by an 85-dimensional attribute vector.

Table 1.

Statistics of the AWA2, CUB, FLO and SUN datasets, including the visual feature dimension, semantic feature dimension, number of seen classes, number of unseen classes, and total number of instances.

4.2. Evaluation Protocol

During testing, accuracy is measured on the test sets of both seen classes (S) and unseen classes (U). U is the average per-class accuracy on test images of unseen classes and reflects the model’s ability to classify samples from classes not seen during training. S is the average per-class accuracy on test images of seen classes and reflects the model’s ability to classify samples from seen classes. H, the harmonic mean of S and U, is defined as $H = \frac{2 \times S \times U}{S + U}$ and serves as the main metric for GZSL classification performance.
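The metrics can be computed as sketched below. In GZSL, predictions for both seen and unseen test images are made over the joint label space of seen and unseen classes. S and U are per-class averages over the seen and unseen test images, respectively, and H combines them. The function names are hypothetical.

```python
from collections import defaultdict

def per_class_accuracy(labels, predictions):
    """Average of per-class accuracies, so every class counts equally."""
    correct, total = defaultdict(int), defaultdict(int)
    for y, p in zip(labels, predictions):
        total[y] += 1
        correct[y] += int(y == p)
    return sum(correct[c] / total[c] for c in total) / len(total)

def harmonic_mean(s, u):
    """GZSL harmonic mean H = 2 * S * U / (S + U); 0 if both are 0."""
    return 0.0 if s + u == 0 else 2.0 * s * u / (s + u)
```

Because H is a harmonic mean, a model that scores S = 80 but U = 20 gets H = 32, far below the arithmetic mean of 50. This is why H penalizes methods biased toward seen classes.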

4.3. Implementation Details

SDDN consists mainly of an SDE module and a disentanglement network. The SDE module consists of a student network, a self-teacher network, a generator, and a discriminator. The student network is a ResNet101 pre-trained on ImageNet that extracts 2048-dimensional visual features. The self-teacher network is composed of the student network itself together with the feature fusion method. The generator is a multi-layer perceptron with a hidden layer of dimension 2048, and the discriminator is a fully connected layer with an activation function. The disentanglement network consists of an encoder, a decoder, a discriminator, and a semantic relation matching model. Both the encoder and the decoder are multi-layer perceptrons with a single hidden layer of 2048 units. The semantic relation matching model consists of two fully connected layers activated by the Smooth Maximum Unit (SMU) [42] and the Sigmoid function, respectively. The discriminator is a fully connected layer with SMU activation.

The hardware environment for SDDN is an Intel i7-10700K CPU and an RTX A5000 32GB GPU; the software environment is Ubuntu 20.04 LTS, CUDA 11.4.0, and cuDNN 8.2.4. SDDN is implemented in PyTorch 1.10.1, and the Adam optimizer is used to optimize the parameters of each module. The learning rate of the Adam optimizer is set to , and . The batch size is 64. The loss weights of the semantic relation matching method, of the latent representation independence method, and of the visual–semantic discriminator are set within the range 0.1–25.

4.4. Comparison with the State of the Art

To validate the effectiveness of SDDN, we computed the seen-class accuracy S, the unseen-class accuracy U, and their harmonic mean H on the four datasets. We compared them with 15 state-of-the-art models; the results are shown in Table 2. The 15 models are divided into generative and non-generative methods. Generative methods typically use GANs or VAEs to synthesize unseen-class data that augment the training set and improve generalization to unseen classes. Non-generative methods do not synthesize data. Instead, they generalize to unseen classes through techniques such as feature embedding and alignment of the existing data. Our method is a generative method.

Table 2.

Performance comparison in accuracy (%) on four datasets. U and S denote the GZSL accuracies on unseen and seen classes, respectively, and H denotes their harmonic mean. Methods above and below the horizontal line are non-generative and generative approaches, respectively. Results in bold indicate the highest performance.

As the comparison results in Table 2 show, SDDN first achieves the highest U, S, and H on the FLO dataset, surpassing all compared models. Specifically, it outperforms the second-best model by 2.3% in H, by a notable 4% in U, and by 0.3% in S. On the CUB dataset, SDDN achieves the highest H, leading the second-best model by 1.1%, and obtains the second-best U and S, leading the third-best models by 6% and 1.9%, respectively. On the SUN dataset, it attains the highest S and H, leading the second-best model by 1.4% in S and by 2% in H.

Overall, SDDN achieves the best H on the three fine-grained datasets (FLO, CUB, and SUN), the best U on FLO, and the best S on both FLO and SUN. This suggests that on fine-grained datasets with richer attribute information, the self-distillation and disentanglement techniques can more effectively capture that information, separating visual–semantic consistent features and aligning them.

5. Model Analysis

5.1. Ablation Study

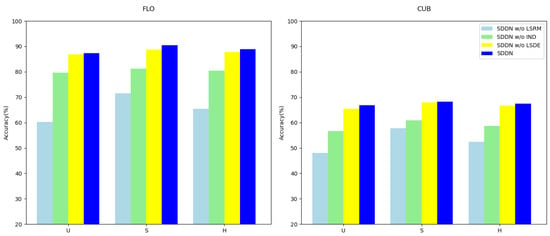

In our ablation study, we isolate the key components of SDDN and assess their impact on GZSL. We remove the semantic relation matching loss (LSRM) to evaluate the contribution of the visual–semantic matching module to extracting semantically consistent visual features. Omitting the independence score (IND) lets us evaluate its contribution to further separating visual–semantic consistent features. In addition, we exclude the loss of the self-distillation embedding module (LSDE) and instead use the pre-trained ResNet101 and a regular generator without self-distillation. This variant assesses the refinement effect of SDE on the extracted and synthesized visual features while also validating the effectiveness of the disentanglement network on its own. The ablation experiments were conducted on the FLO and CUB datasets, and the results are presented in Table 3 and Figure 6.
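
To make the ablation variants concrete, the sketch below treats the training objective as a weighted sum of named loss terms and drops one term per variant. The term names (`base`, `sde`, `srm`, `ind`, `disc`), the weighted-sum form, and the weights are hypothetical placeholders, since the exact objective is defined elsewhere in the paper. The "w/o LSDE" variant additionally swaps in the pre-trained ResNet101 and a regular generator, which a loss switch alone does not capture.

```python
from typing import Dict, Iterable

# Hypothetical ablation variants: each lists the loss terms it removes.
# Note: "w/o LSDE" also changes the backbone/generator, not modeled here.
ABLATIONS: Dict[str, tuple] = {
    "SDDN (full)": (),
    "w/o LSRM": ("srm",),
    "w/o IND": ("ind",),
    "w/o LSDE": ("sde",),
}


def combined_objective(losses: Dict[str, float], weights: Dict[str, float],
                       disabled: Iterable[str] = ()) -> float:
    """Weighted sum of loss terms; unlisted weights default to 1.0, disabled terms are skipped."""
    off = set(disabled)
    return sum(weights.get(name, 1.0) * value
               for name, value in losses.items() if name not in off)


def ablation_objectives(losses: Dict[str, float],
                        weights: Dict[str, float]) -> Dict[str, float]:
    """Evaluate the (hypothetical) objective once per ablation variant."""
    return {variant: combined_objective(losses, weights, off)
            for variant, off in ABLATIONS.items()}


# Illustrative per-batch loss values and weights (placeholders, not SDDN values).
losses = {"base": 1.0, "sde": 0.5, "srm": 2.0, "ind": 0.1, "disc": 0.4}
weights = {"srm": 2.0, "ind": 0.5, "disc": 1.0}
objectives = ablation_objectives(losses, weights)
```

Keeping every term behind a name makes each ablation a one-line change, so all variants share the same training code apart from the disabled term.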

Table 3.

Ablation study of different component combinations on FLO and CUB datasets. Results are reported in %, with the best results highlighted in bold.

Figure 6.

Ablation study of different component combinations on the FLO and CUB datasets.

The results underscore the importance of the semantic relation matching loss (LSRM), the independence score (IND), and the self-distillation embedding module (LSDE) for the performance of SDDN. First, LSRM is crucial for visual–semantic feature alignment: removing it causes a marked drop in seen-class accuracy (S), unseen-class accuracy (U), and the harmonic mean (H). Second, IND is essential for further separating visual–semantic consistent features from the original features, as removing it lowers U, S, and H. Third, LSDE helps refine both the original visual features of seen classes and the synthesized features of unseen classes, as the model without LSDE scores lower in U, S, and H than the complete SDDN. Furthermore, compared with the results in Table 2, the model without SDE still achieves the highest H on FLO and CUB, indicating that the disentanglement network is effective on its own. Finally, the complete SDDN model performs best across all metrics, confirming its effectiveness for GZSL.

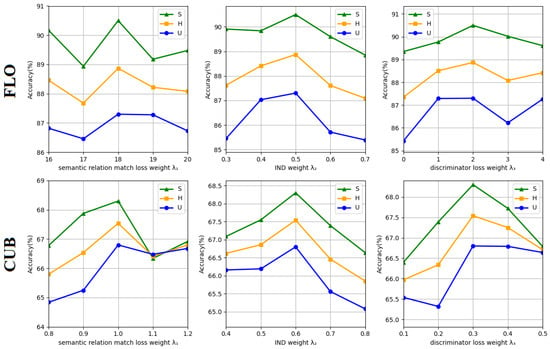

5.2. Hyper-Parameter Analysis

In this study, the optimization objective of SDDN is governed by three critical hyperparameters: the coefficient of the semantic relationship matching loss (), the coefficient of the independence of latent representations (), and the coefficient of the discriminator loss (). To elucidate the influence of each hyperparameter on model performance, we conducted a sensitivity analysis by varying their values. Specifically, was varied within the range 0.3–20.0, while and were varied within the range 0.1–3.0. Figure 7 shows that the values of , , and have a significant impact on the experimental outcomes. Notably, when is set to 18, is set to 0.5, and is set to 2, the model achieves its highest accuracy on the FLO dataset, whereas when is set to 1, is set to 0.6, and is set to 0.3, the model achieves its highest accuracy on the CUB dataset. These observations indicate that model accuracy is highly sensitive to these loss weights and that their optimal values differ across datasets. Future experiments should therefore examine how small fluctuations in these three hyperparameters affect accuracy; such a systematic analysis would deepen our understanding of model behavior and offer guidance for tuning the model on diverse datasets.
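
A sensitivity analysis of this kind can be organized as a one-at-a-time sweep: each coefficient is moved across its range while the other two are held at base values, and the best setting per coefficient is recorded. The sketch assumes this one-at-a-time design, uses the placeholder names `coef_1` (the coefficient varied over 0.3–20.0), `coef_2`, and `coef_3` because the coefficient symbols are not reproduced in this text, and takes a hypothetical `evaluate` callback that would train SDDN for one setting and return a score such as H.

```python
from typing import Callable, Dict, List, Tuple


def linspace(lo: float, hi: float, n: int) -> List[float]:
    """n evenly spaced values from lo to hi inclusive (n >= 2)."""
    if n < 2:
        raise ValueError("n must be at least 2")
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]


def one_at_a_time(evaluate: Callable[[Dict[str, float]], float],
                  base: Dict[str, float],
                  grids: Dict[str, List[float]]) -> Dict[str, Tuple[float, float]]:
    """For each coefficient, sweep its grid with the others fixed at `base`.

    Returns {name: (best_value, best_score)}; `evaluate` is a hypothetical callback
    that trains/evaluates the model for one setting and returns a score to maximize.
    """
    best = {}
    for name, values in grids.items():
        scored = [(v, evaluate({**base, name: v})) for v in values]
        best[name] = max(scored, key=lambda pair: pair[1])
    return best


# Ranges quoted in the text; the grid resolution (9 points) is an arbitrary choice.
GRIDS = {
    "coef_1": linspace(0.3, 20.0, 9),
    "coef_2": linspace(0.1, 3.0, 9),
    "coef_3": linspace(0.1, 3.0, 9),
}
```

A one-at-a-time sweep needs far fewer training runs than a full grid, at the cost of missing interactions between coefficients; a finer grid around the best values would address the small fluctuations mentioned above.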

Figure 7.

Hyperparameter analysis: the impact of values for weights , , and on model performance is examined.

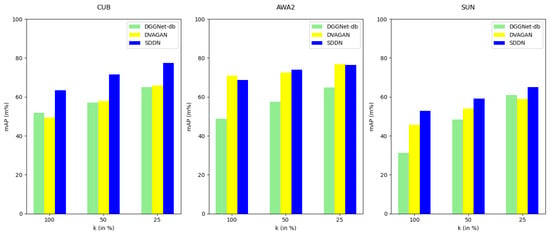

5.3. Zero-Shot Retrieval Performance

To assess the practical applicability of the SDDN framework, we conducted zero-shot retrieval experiments comparing SDDN with two other state-of-the-art generative GZSL frameworks, DGGNet-db and DVAGAN, following the zero-shot retrieval protocol of SDGZSL [2]. Given the semantic features of the unseen classes, the generation modules of SDDN, DGGNet-db, and DVAGAN each synthesize a certain number of visual features for these classes, and the average of the synthesized features of each class is used as its retrieval feature. The cosine similarity between each retrieval feature and the real visual features is then computed, and the real features are ranked in descending order of similarity. Retrieval performance is evaluated with mean Average Precision (mAP) on three datasets: CUB, AWA2, and SUN. Figure 8 compares the zero-shot retrieval performance of SDDN, DGGNet-db, and DVAGAN. The values 100, 50, and 25 on the horizontal axis denote the proportion of unseen-class images in the test set (100%, 50%, and 25%, respectively), and the vertical axis shows the retrieval mAP. SDDN achieves substantially higher retrieval performance than DGGNet-db and DVAGAN on CUB and SUN. On AWA2, it performs best when the proportion of unseen-class images is 50% and remains close to the best when the proportion is 100%. These retrieval results on three datasets further support the effectiveness of the model.
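
The retrieval procedure described above can be sketched in plain Python: average the synthesized features of each unseen class into a query, rank the real test features by cosine similarity to that query, and average the per-class average precision. This is a minimal sketch under stated assumptions: AP here is computed over the full ranking and normalized by the number of relevant images, the 100/50/25 settings of Figure 8 are not modeled, and the function names are illustrative rather than taken from SDGZSL [2].

```python
import math
from typing import Dict, Hashable, List, Sequence

Vector = Sequence[float]


def mean_vector(vectors: Sequence[Vector]) -> List[float]:
    """Element-wise mean of equally sized vectors (the class retrieval feature)."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def average_precision(ranked_labels: Sequence[Hashable], target: Hashable) -> float:
    """AP of one query: mean precision at the rank of each relevant item."""
    hits, precisions = 0, []
    for rank, label in enumerate(ranked_labels, start=1):
        if label == target:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def zero_shot_retrieval_map(synthetic: Dict[Hashable, Sequence[Vector]],
                            real_feats: Sequence[Vector],
                            real_labels: Sequence[Hashable]) -> float:
    """mAP over unseen classes, one query (mean synthetic feature) per class."""
    aps = []
    for cls, feats in synthetic.items():
        query = mean_vector(feats)
        # Rank real features by descending cosine similarity to the query.
        order = sorted(range(len(real_feats)),
                       key=lambda i: cosine(query, real_feats[i]), reverse=True)
        aps.append(average_precision([real_labels[i] for i in order], cls))
    return sum(aps) / len(aps) if aps else 0.0
```

For example, with two well-separated classes whose synthetic features point in the same directions as their real features, every query ranks its own class first and `zero_shot_retrieval_map` returns 1.0.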

Figure 8.

Comparison of zero-shot retrieval performance.

6. Conclusions

In this paper, we propose a generalized zero-shot learning framework that uses a self-distillation and disentanglement network (SDDN) to enhance visual–semantic feature consistency. First, to improve the semantic consistency of visual features, we develop a self-distillation embedding framework that integrates self-distillation with a conditional generator to promote the synthesis of refined visual features. Second, to further promote visual–semantic feature consistency, we design a disentanglement network that uses a semantic relation matching network and a latent representation independence method to separate visual–semantic consistent features from inconsistent ones. In addition, we devise a cross-reconstruction method that aligns visual and semantic features in a common visual–semantic space, further enhancing their consistency. Extensive experiments on four widely used GZSL benchmark datasets, comparing SDDN with current state-of-the-art methods, demonstrate the effectiveness of the proposed SDDN framework. In future work, we intend to further optimize the model and apply it to medical diagnostics to assist in identifying new disease patterns.

Author Contributions

Responsible for proposing research ideas, modeling frameworks, content planning, guidance, and full-text revisions: X.L. Responsible for literature research, research methodology, experimental design, thesis writing, and full-text revision: C.W. (Chen Wang). Responsible for lab instruction, guidelines, and full-text revisions: G.Y. and C.W. (Chunhua Wang). Responsible for providing guidance, revising, and reviewing the full text: Y.L., J.L. and Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Science and Technology Major Project (2020AAA0109703), the Special Fund Project for Basic Scientific Research of Zhongyuan University of Technology (K2021TD05), the National Natural Science Foundation of China (62076167, U23B2029), the Key Scientific Research Project of Higher Education Institutions in Henan Province (24A520058, 24A520060), the Postgraduate Education Reform and Quality Improvement Project of Henan Province (YJS2024AL053), the Key Scientific Research Project of Higher Education Institutions in Henan Province (23A520022), and the Research and Innovation Project of Graduate Students in Zhongyuan University of Technology (YKY2023ZK43).

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

1. Wang, Z.; Hao, Y.; Mu, T.; Li, O.; Wang, S.; He, X. Bi-directional distribution alignment for transductive zero-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 19893–19902.

2. Chen, Z.; Luo, Y.; Qiu, R.; Wang, S.; Huang, Z.; Li, J.; Zhang, Z. Semantics disentangling for generalized zero-shot learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

3. Chen, S.; Wang, W.; Xia, B.; Peng, Q.; You, X.; Zheng, F.; Shao, L. FREE: Feature refinement for generalized zero-shot learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

4. Li, X.; Xu, Z.; Wei, K.-J.; Deng, C. Generalized zero-shot learning via disentangled representation. Proc. AAAI Conf. Artif. Intell. 2021, 35, 1966–1974.

5. Chen, Z.; Li, J.; Luo, Y.; Huang, Z.; Yang, Y. CANZSL: Cycle-consistent adversarial networks for zero-shot learning from natural language. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 7–11 January 2019.

6. Kim, J.; Shim, K.; Shim, B. Semantic feature extraction for generalized zero-shot learning. Proc. AAAI Conf. Artif. Intell. 2022, 36, 1166–1173.

7. Feng, L.; Zhao, C. Transfer increment for generalized zero-shot learning. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 2506–2520.

8. Sun, K.; Zhao, X.; Huang, H.; Yan, Y.; Zhang, H. Boosting generalized zero-shot learning with category-specific filters. J. Intell. Fuzzy Syst. 2023, 45, 563–576.

9. Min, S.; Yao, H.; Xie, H.; Wang, C.; Zha, Z.-J.; Zhang, Y. Domain-aware visual bias eliminating for generalized zero-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

10. Xie, G.-S.; Liu, L.; Zhu, F.; Zhao, F.; Zhang, Z.; Yao, Y.; Qin, J.; Shao, L. Region graph embedding network for zero-shot learning. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part IV 16; Springer: Berlin/Heidelberg, Germany, 2020; pp. 562–580.

11. Liu, Y.; Zhou, L.; Bai, X.; Huang, Y.; Gu, L.; Zhou, J.; Harada, T. Goal-oriented gaze estimation for zero-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

12. Wang, C.; Min, S.; Chen, X.; Sun, X.; Li, H. Dual progressive prototype network for generalized zero-shot learning. Adv. Neural Inf. Process. Syst. 2021, 34, 2936–2948.

13. Wang, C.; Chen, X.; Min, S.; Sun, X.; Li, H. Task-independent knowledge makes for transferable representations for generalized zero-shot learning. Proc. AAAI Conf. Artif. Intell. 2021, 35, 2710–2718.

14. Kwon, G.; AlRegib, G. A gating model for bias calibration in generalized zero-shot learning. arXiv 2022, arXiv:2203.04195.

15. Narayan, S.; Gupta, A.; Khan, F.S.; Snoek, C.G.M.; Shao, L. Latent embedding feedback and discriminative features for zero-shot classification. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part XXII 16; Springer: Berlin/Heidelberg, Germany, 2020.

16. Zhang, M.; Wang, X.; Shi, Y.; Ren, S.; Wang, W. Zero-shot learning with joint generative adversarial networks. Electronics 2023, 12, 2308.

17. Li, N.; Chen, J.; Fu, N.; Xiao, W.; Ye, T.; Gao, C.; Zhang, P. Leveraging dual variational autoencoders and generative adversarial networks for enhanced multimodal interaction in zero-shot learning. Electronics 2024, 13, 539.

18. Zhang, Y.; Tian, Y.; Zhang, S.; Huang, Y. Dual-uncertainty guided cycle-consistent network for zero-shot learning. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 6872–6886.

19. Han, Z.; Fu, Z.; Chen, S.; Yang, J. Contrastive embedding for generalized zero-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

20. Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

21. Zagoruyko, S.; Komodakis, N. Paying more attention to attention: Improving the performance of convolutional neural networks via attention transfer. arXiv 2016, arXiv:1612.03928.

22. Kim, J.; Park, S.; Kwak, N. Paraphrasing complex network: Network compression via factor transfer. arXiv 2020, arXiv:1802.04977v3.

23. Yim, J.; Joo, D.; Bae, J.; Kim, J. A gift from knowledge distillation: Fast optimization, network minimization and transfer learning. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7130–7138.

24. Koratana, A.; Kang, D.; Bailis, P.; Zaharia, M. LIT: Learned intermediate representation training for model compression. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 3509–3518.

25. Tung, F.; Mori, G. Similarity-preserving knowledge distillation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

26. Peng, B.; Jin, X.; Liu, J.; Zhou, S.; Wu, Y.; Liu, Y.; Li, D.; Zhang, Z. Correlation congruence for knowledge distillation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

27. Tian, Y.; Krishnan, D.; Isola, P. Contrastive representation distillation. arXiv 2019, arXiv:1910.10699.

28. Liu, Y.; Cao, J.; Li, B.; Yuan, C.; Hu, W.; Li, Y.; Duan, Y. Knowledge distillation via instance relationship graph. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

29. Park, W.; Kim, D.; Lu, Y.; Cho, M. Relational knowledge distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

30. Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging properties in self-supervised vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

31. Xu, T.-B.; Liu, C.-L. Data-distortion guided self-distillation for deep neural networks. Proc. AAAI Conf. Artif. Intell. 2019, 33, 5565–5572.

32. Zhang, L.; Song, J.; Gao, A.; Chen, J.; Bao, C.; Ma, K. Be your own teacher: Improve the performance of convolutional neural networks via self distillation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3713–3722.

33. Shen, Y.; Xu, L.; Yang, Y.; Li, Y.; Guo, Y. Self-distillation from the last mini-batch for consistency regularization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11943–11952.

34. Ji, M.; Shin, S.; Hwang, S.; Park, G.; Moon, I.-C. Refine myself by teaching myself: Feature refinement via self-knowledge distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

35. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

36. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

37. Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.S.; Hospedales, T.M. Learning to compare: Relation network for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

38. Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S. The Caltech-UCSD Birds-200-2011 Dataset; California Institute of Technology: Pasadena, CA, USA, 2011.

39. Xian, Y.; Lampert, C.H.; Schiele, B.; Akata, Z. Zero-shot learning—A comprehensive evaluation of the good, the bad and the ugly. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 2251–2265.

40. Patterson, G.; Hays, J. SUN attribute database: Discovering, annotating, and recognizing scene attributes. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2751–2758.

41. Nilsback, M.-E.; Zisserman, A. Automated flower classification over a large number of classes. In Proceedings of the 2008 6th Indian Conference on Computer Vision, Graphics & Image Processing, Bhubaneswar, India, 16–19 December 2008; pp. 722–729.

42. Biswas, K.; Kumar, S.; Banerjee, S.; Pandey, A.K. Smooth maximum unit: Smooth activation function for deep networks using smoothing maximum technique. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 794–803.

43. Jiang, H.; Wang, R.; Shan, S.; Chen, X. Transferable contrastive network for generalized zero-shot learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

44. Feng, Y.; Huang, X.; Yang, P.; Yu, J.; Sang, J. Non-generative generalized zero-shot learning via task-correlated disentanglement and controllable samples synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9346–9355.

45. Xian, Y.; Sharma, S.; Schiele, B.; Akata, Z. f-VAEGAN-D2: A feature generating framework for any-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

46. Li, J.; Jin, M.; Lu, K.; Ding, Z.; Zhu, L.; Huang, Z. Leveraging the invariant side of generative zero-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

47. Kong, X.; Gao, Z.; Li, X.; Hong, M.; Liu, J.; Wang, C.; Xie, Y.; Qu, Y. En-Compactness: Self-distillation embedding & contrastive generation for generalized zero-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9306–9315.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).