A Spatiotemporal Graph Neural Network with Graph Adaptive and Attention Mechanisms for Traffic Flow Prediction

Abstract

1. Introduction

2. Methods

2.1. Initial Graph Structure

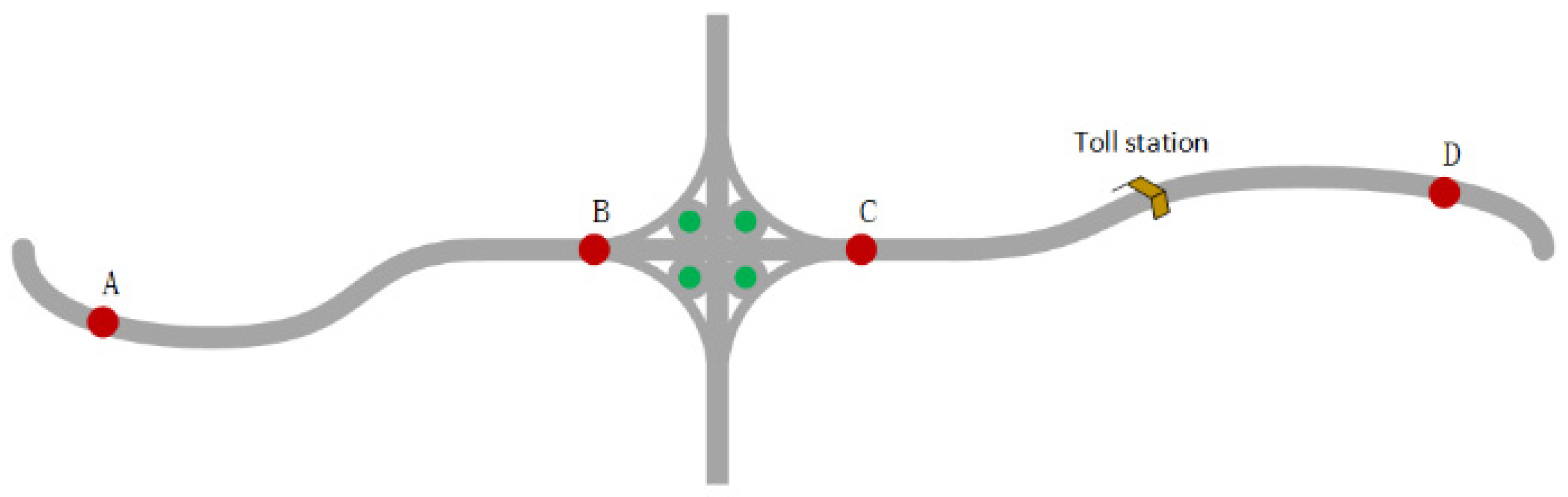

2.1.1. Node Connectivity Graph Structure

2.1.2. Distance Graph Structure

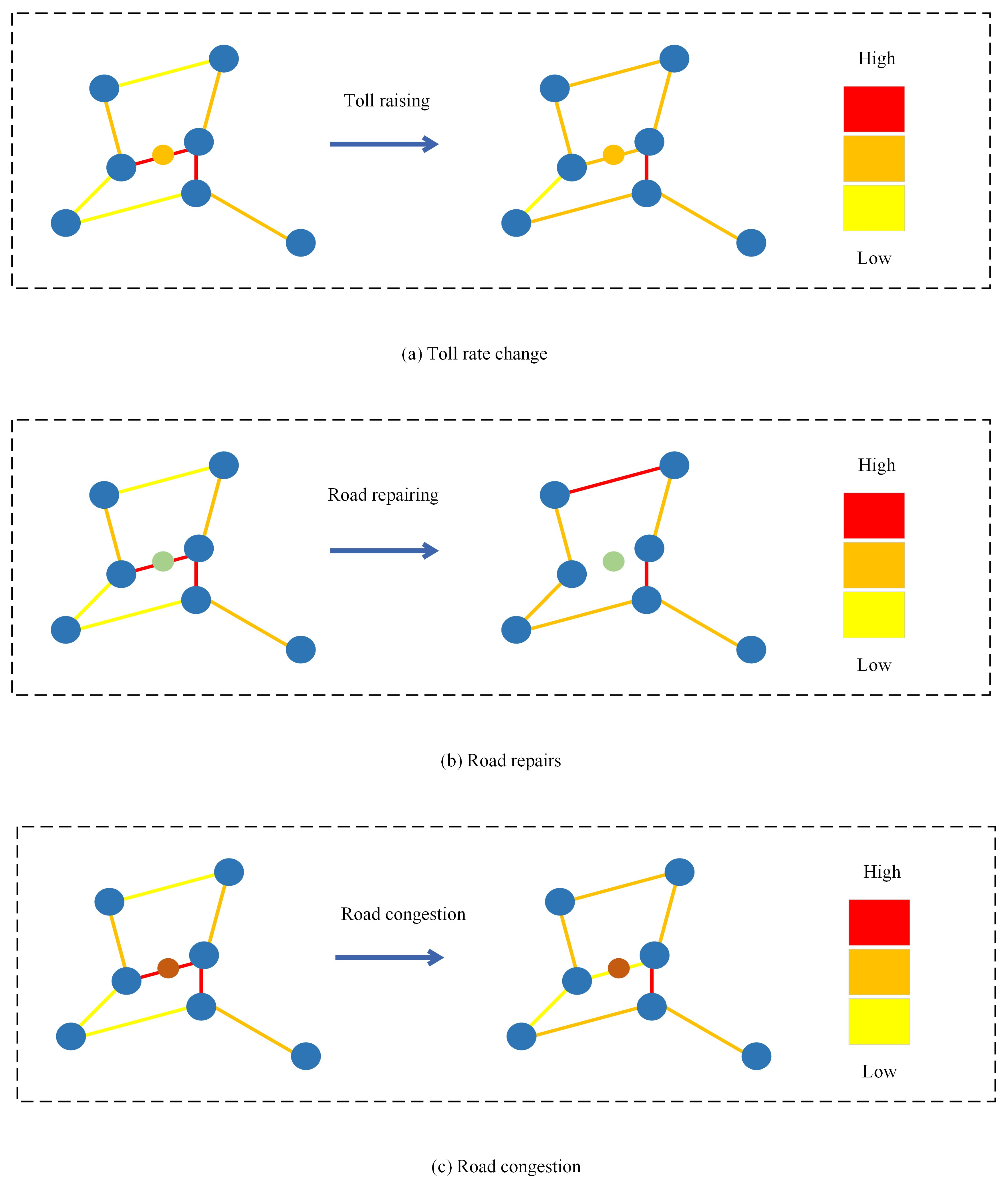

2.1.3. Toll Graph Structure

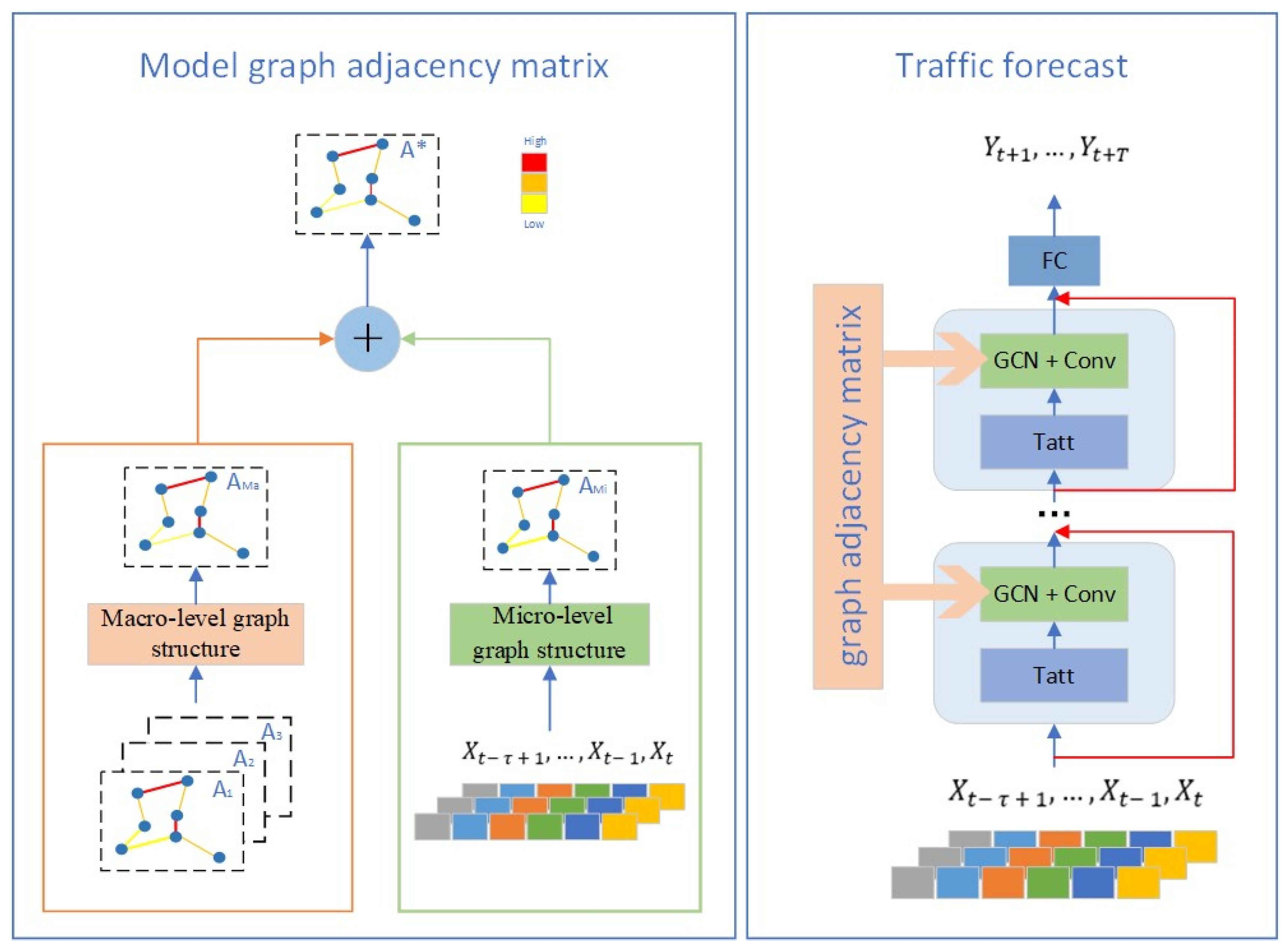

2.2. AASTGNet

2.2.1. Model the Optimal Graph Structure

Macroscopic Graph Structure

Microscopic Graph Structure

2.2.2. Graph and Temporal Dimension Convolution

3. Results and Discussion

3.1. Data Set

3.2. Baseline

3.3. Evaluation Metrics

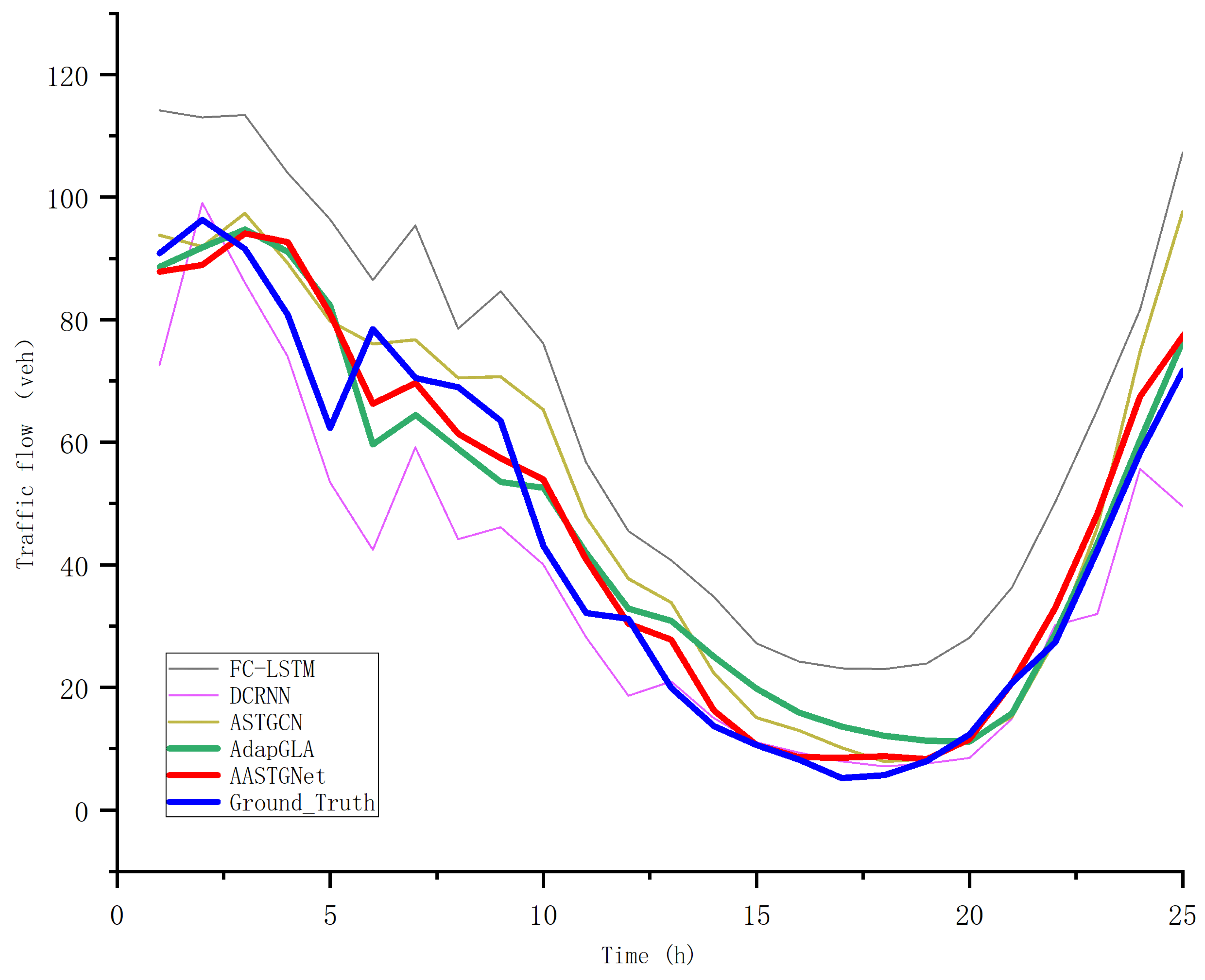
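The results table reports three error metrics: MAE, RMSE, and MAPE. As a minimal sketch, assuming the conventional definitions of these metrics (the authors' exact formulation may differ in details such as how zero observations are handled), they can be computed as follows. The function names and the choice to skip zero-valued targets in MAPE are illustrative assumptions.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: average of |observed - predicted|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: square root of the average squared error."""
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))


def mape(y_true, y_pred):
    """Mean absolute percentage error, returned as a fraction.

    Assumption: observations equal to zero are skipped to avoid division
    by zero. Multiply the result by 100 to express it in percent.
    """
    pairs = [(t, p) for t, p in zip(y_true, y_pred) if t != 0]
    return sum(abs((t - p) / t) for t, p in pairs) / len(pairs)


# Toy values for illustration only; these are not data from the paper.
observed = [100.0, 200.0, 400.0]
predicted = [110.0, 190.0, 400.0]

print(mae(observed, predicted), rmse(observed, predicted), mape(observed, predicted))
```

MAE and RMSE are in the units of the predicted quantity, while MAPE is scale-free, so it matters whether a reported MAPE is a fraction or a percentage when comparing models.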

3.4. Experimental Results and Analysis

4. Conclusions

- (1) Optimal graph structure: AASTGNet combines macroscopic and microscopic graph structures and dynamically refines the graph adjacency matrix, capturing the spatial relationships among traffic detectors;

- (2) Micro-level graph adjacency matrix: The micro-level graph structure uses node information to characterize current traffic conditions. This addition helps the model respond to sudden fluctuations in road traffic flow and improves predictive accuracy;

- (3) Integration of price information: AASTGNet integrates toll data with traffic information, making it applicable to scenarios influenced by toll stations. The model captures the dynamic relationship between price changes and traffic flow distribution, which contributes to its predictive accuracy.
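Points (1) and (2) describe combining a macroscopic graph with a microscopic graph derived from current node information. The sketch below illustrates one way such a combination could look. It is not the AASTGNet formulation, which is not reproduced in this text: the similarity measure (a row-wise softmax over feature inner products), the mixing weight `alpha`, and all function names are assumptions made purely for illustration.

```python
import numpy as np


def row_softmax(scores):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    shifted = scores - scores.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def microscopic_adjacency(node_features):
    """Build a data-dependent adjacency from current node features.

    node_features has shape (num_nodes, num_features). Assumption: pairwise
    similarity is the inner product of feature vectors, normalized per row.
    """
    scores = node_features @ node_features.T
    return row_softmax(scores)


def combine_graphs(macro_adj, micro_adj, alpha=0.5):
    """Convex combination of a static (macro) and a dynamic (micro) graph."""
    return alpha * macro_adj + (1.0 - alpha) * micro_adj


# Toy example: 4 detectors on a line, with a row-normalized connectivity graph.
macro = np.array(
    [[0, 1, 0, 0],
     [1, 0, 1, 0],
     [0, 1, 0, 1],
     [0, 0, 1, 0]],
    dtype=float,
)
macro = macro / macro.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
features = rng.normal(size=(4, 3))  # stand-in for flow, occupancy, price

micro = microscopic_adjacency(features)
combined = combine_graphs(macro, micro, alpha=0.7)
print(combined.round(3))
```

Because both inputs are row-normalized and the weights sum to one, each row of the combined matrix also sums to one, so it can be used directly as a propagation matrix in a graph convolution.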

- (1) Integrating dynamic toll pricing models to improve the model’s adaptability to evolving toll schemes, and exploring potential patterns of toll status changes by extrapolating future toll price adjustments from the current tolling situation;

- (2) Developing indicators of sudden road traffic congestion based on variations in speed and traffic volume, designing control measures for such congestion using fundamental principles of traffic engineering, and validating those measures through simulation or on-site verification;

- (3) Investigating factors other than toll price changes that cause sudden changes in road traffic conditions, to improve the model’s accuracy in predicting unforeseen situations;

- (4) Extending the input side of the model, which currently relies on detection data from node sensors to predict future road traffic flow, so that it can accommodate a more diverse range of data.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Model 1 | Model 2 | Mean difference | Adjusted p-value | Lower bound | Upper bound | Reject |
|---|---|---|---|---|---|---|
| ASTGCN | AdapGLA | 1.0215 | 0 | 0.9168 | 1.1262 | TRUE |
| ASTGCN | AASTGNet | 1.0444 | 0 | 0.9398 | 1.1491 | TRUE |
| ASTGCN | DCRNN | −3.1472 | 0 | −3.2517 | −3.0427 | TRUE |
| ASTGCN | FC-LSTM | 0.9515 | 0 | 0.8469 | 1.0562 | TRUE |
| AdapGLA | DCRNN | −4.1687 | 0 | −4.2732 | −4.0642 | TRUE |
| AASTGNet | DCRNN | −4.1916 | 0 | −4.2961 | −4.0871 | TRUE |
| DCRNN | FC-LSTM | 4.0987 | 0 | 3.9942 | 4.2032 | TRUE |
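The table's columns follow the usual layout of a pairwise multiple-comparison summary: a mean difference, an adjusted p-value, confidence bounds, and a reject decision. As a small consistency check under that reading, the sketch below verifies that each mean difference sits at the midpoint of its interval and that the null hypothesis is rejected exactly when the interval excludes zero. The row values are copied from the table; the helper names and the rounding tolerance are assumptions.

```python
# (model_1, model_2, mean_difference, lower_bound, upper_bound, reject)
ROWS = [
    ("ASTGCN", "AdapGLA", 1.0215, 0.9168, 1.1262, True),
    ("ASTGCN", "AASTGNet", 1.0444, 0.9398, 1.1491, True),
    ("ASTGCN", "DCRNN", -3.1472, -3.2517, -3.0427, True),
    ("ASTGCN", "FC-LSTM", 0.9515, 0.8469, 1.0562, True),
    ("AdapGLA", "DCRNN", -4.1687, -4.2732, -4.0642, True),
    ("AASTGNet", "DCRNN", -4.1916, -4.2961, -4.0871, True),
    ("DCRNN", "FC-LSTM", 4.0987, 3.9942, 4.2032, True),
]


def interval_excludes_zero(lower, upper):
    """A difference is significant when its confidence interval omits zero."""
    return lower > 0 or upper < 0


def midpoint_matches(mean_diff, lower, upper, tol=1e-3):
    """The point estimate should be the interval midpoint, up to rounding."""
    return abs((lower + upper) / 2.0 - mean_diff) <= tol


checks = [
    (m1, m2, interval_excludes_zero(lo, hi) == reject, midpoint_matches(diff, lo, hi))
    for m1, m2, diff, lo, hi, reject in ROWS
]

for m1, m2, decision_ok, midpoint_ok in checks:
    print(f"{m1} vs {m2}: decision consistent={decision_ok}, midpoint consistent={midpoint_ok}")
```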


| Toll Point | Time Period (Monday through Friday, Southbound) | Account Toll Rate | Non-Account Toll Rate |
|---|---|---|---|
| Irvine Ranch | 12:00 a.m.–6:59 a.m. | USD 2.69 | USD 3.32 |
| Irvine Ranch | 7:00 a.m.–7:29 a.m. | USD 3.16 | USD 3.32 |
| Irvine Ranch | 7:30 a.m.–8:29 a.m. | USD 3.32 | USD 3.32 |
| Irvine Ranch | 8:30 a.m.–8:59 a.m. | USD 3.16 | USD 3.32 |
| Irvine Ranch | 9:00 a.m.–11:59 p.m. | USD 2.69 | USD 3.32 |
| Chapman/Santiago Cyn Rd | 12:00 a.m.–11:59 p.m. | No Toll | No Toll |
| Irvine Blvd - West NB On | 12:00 a.m.–11:59 p.m. | USD 2.69 | USD 2.69 |
| Irvine Blvd - West NB Off | 12:00 a.m.–11:59 p.m. | USD 2.12 | USD 2.12 |
| Irvine Blvd - West SB On | 12:00 a.m.–11:59 p.m. | USD 2.12 | USD 2.12 |
| Irvine Blvd - West SB Off | 12:00 a.m.–11:59 p.m. | No Toll | No Toll |
| Portola Pkwy - West NB On | 12:00 a.m.–11:59 p.m. | USD 2.69 | USD 2.69 |
| Portola Pkwy - West NB Off | 12:00 a.m.–11:59 p.m. | No Toll | No Toll |
| Portola Pkwy - West SB On | 12:00 a.m.–11:59 p.m. | USD 2.69 | USD 2.69 |
| Portola Pkwy - West SB Off | 12:00 a.m.–11:59 p.m. | USD 2.69 | USD 2.69 |
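The Irvine Ranch schedule at the top of the table shows an account toll rate that varies by time of day, while the non-account rate stays flat. A minimal sketch of turning such a schedule into a price value for a given time of day follows. The rates and time boundaries are copied from the table; the function name and the minutes-since-midnight encoding are assumptions, and nothing here is taken from the authors' preprocessing.

```python
from datetime import time

# Irvine Ranch, weekday account rates from the table above.
# Each entry: (first minute of day, last minute of day, rate in USD), inclusive.
IRVINE_RANCH_ACCOUNT_SCHEDULE = [
    (0, 6 * 60 + 59, 2.69),
    (7 * 60, 7 * 60 + 29, 3.16),
    (7 * 60 + 30, 8 * 60 + 29, 3.32),
    (8 * 60 + 30, 8 * 60 + 59, 3.16),
    (9 * 60, 23 * 60 + 59, 2.69),
]

# The non-account rate is the same in every period of the table.
IRVINE_RANCH_NON_ACCOUNT_RATE = 3.32


def irvine_ranch_account_toll(t: time) -> float:
    """Return the account toll rate in USD that applies at time of day t."""
    minute_of_day = t.hour * 60 + t.minute
    for start, end, rate in IRVINE_RANCH_ACCOUNT_SCHEDULE:
        if start <= minute_of_day <= end:
            return rate
    raise ValueError(f"no schedule entry covers {t}")


for probe in (time(6, 59), time(7, 0), time(7, 45), time(8, 59), time(12, 0)):
    print(probe.strftime("%H:%M"), irvine_ranch_account_toll(probe))
```

Evaluating such a lookup at every sampling time yields a price series aligned with the flow and occupancy measurements, which is one plausible way to supply price as a node feature.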

| Dataset | Nodes | Time Windows | Node Data |
|---|---|---|---|
| PEMS (Orange County) | 211 | 17,856 | Flow, Occupancy, Price |
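With 211 nodes, 17,856 time windows, and three values per node, the dataset can be viewed as an array of shape (17856, 211, 3). The sketch below shows a common sliding-window way of cutting such an array into input and target samples for forecasting. The history length, the prediction horizon, the feature order (flow first), and the function names are all assumptions for illustration; the split actually used in the paper is not stated in this text.

```python
import numpy as np

# Dimensions taken from the dataset table.
NUM_STEPS, NUM_NODES, NUM_FEATURES = 17_856, 211, 3


def num_samples(total_steps, history, horizon):
    """Number of (input, target) pairs a sliding window can produce."""
    return total_steps - history - horizon + 1


def make_windows(series, history, horizon):
    """Cut a (steps, nodes, features) array into forecasting samples.

    Returns inputs of shape (samples, history, nodes, features) and targets
    of shape (samples, horizon, nodes). Assumption: feature index 0 is flow,
    and flow is the quantity being predicted.
    """
    count = num_samples(series.shape[0], history, horizon)
    inputs = np.stack([series[i:i + history] for i in range(count)])
    targets = np.stack(
        [series[i + history:i + history + horizon, :, 0] for i in range(count)]
    )
    return inputs, targets


# A short all-zero stand-in keeps the example light; it is not real data.
toy_series = np.zeros((50, NUM_NODES, NUM_FEATURES), dtype=np.float32)
x, y = make_windows(toy_series, history=12, horizon=12)

print(x.shape, y.shape)
print(num_samples(NUM_STEPS, history=12, horizon=12))
```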

| Model | MAE | RMSE | MAPE (%) |
|---|---|---|---|
| HA | 73 | 167.19 | 44.74 |
| VAR | 24.17 | 61.03 | 15.40 |
| FC-LSTM | 12.73 | 25.45 | 0.36 |
| DCRNN | 10.54 | 17.62 | 0.26 |
| ASTGCN | 10.18 | 17.05 | 0.27 |
| AdapGLA | 8.9728 | 15.1618 | 0.2552 |
| AASTGNet | 8.6204 | 14.0779 | 0.2402 |
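To make the comparison in the table concrete, the relative reduction in each error metric for AASTGNet against AdapGLA, the baseline with the lowest errors in the table, can be computed directly from the reported values. The sketch below performs only that arithmetic; the dictionary layout and function name are illustrative assumptions.

```python
# Values copied from the results table.
REPORTED = {
    "AdapGLA": {"MAE": 8.9728, "RMSE": 15.1618, "MAPE": 0.2552},
    "AASTGNet": {"MAE": 8.6204, "RMSE": 14.0779, "MAPE": 0.2402},
}


def relative_reduction(baseline, proposed):
    """Percentage by which the proposed value is lower than the baseline."""
    return (baseline - proposed) / baseline * 100.0


reductions = {
    metric: relative_reduction(REPORTED["AdapGLA"][metric], REPORTED["AASTGNet"][metric])
    for metric in ("MAE", "RMSE", "MAPE")
}

for metric, percent in reductions.items():
    print(f"{metric}: {percent:.2f}% lower than AdapGLA")
```

On these figures the reduction is roughly 4% in MAE, 7% in RMSE, and 6% in MAPE.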

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huo, Y.; Zhang, H.; Tian, Y.; Wang, Z.; Wu, J.; Yao, X. A Spatiotemporal Graph Neural Network with Graph Adaptive and Attention Mechanisms for Traffic Flow Prediction. Electronics 2024, 13, 212. https://doi.org/10.3390/electronics13010212
