Abstract

As systems and network applications grow in scale, the requirements on anomaly detection are rising. System logs record system states and significant operational events at critical points, so using them for anomaly detection can support system maintenance and avoid unnecessary losses. System logs have clear temporal characteristics, and the execution sequence of log entries exhibits dependency relationships. These dependencies sometimes span long sequences. To handle such longer sequence logs in anomaly detection, this paper proposes ETCNLog, a system log anomaly detection method based on efficient channel attention and a temporal convolutional network. ETCNLog models the system log as a natural language sequence. To handle longer sequence logs more effectively, it uses both the semantic and the temporal information of logs. It can automatically learn the importance of different log sequences and detect hidden dependencies within sequences to improve the accuracy of anomaly detection. We run extensive experiments on the real-world public log dataset BGL. The results show that ETCNLog reaches a Precision of 98.15% and an F1-score of 98.21%, both better than those of the compared anomaly detection methods.

1. Introduction

In a production environment, the system log is frequently the only data that records detailed system runtime information. It is a valuable resource for system status monitoring and fault diagnosis []. As systems and applications become more complex, system log anomaly detection becomes crucial. However, conventional log anomaly detection methods are largely ineffective. Operators rely on their domain knowledge to find anomalies by matching regular expressions or searching for keywords (such as “failure” or “kill”), so this strategy depends heavily on domain expertise []. Even when potential anomalies can be anticipated, it demands a large expenditure of time and money. This strategy therefore does not scale to all large systems and is impractical for real-world applications.

To some extent, the printing order of log entries reflects the order in which system applications are executed. When abnormal behavior occurs during system operation, irregularities in the execution path are often present as well. Analyzing such sequential data with dependencies is also common in natural language processing (NLP). Natural language is structured according to a specific linguistic context and grammatical rules. A sentence is deemed ill-formed when the context changes or the word order deviates from accepted grammar rules. We may therefore regard a system log as a special kind of natural language, which makes NLP techniques applicable to system log anomaly detection. A log anomaly detection model is trained on the generated log sequence vectors. The trained model is then used to detect anomalies in newly input logs: if a new log does not follow the rules the model has learned, it is considered an anomaly.

With the continuous development of deep learning [], increasing numbers of researchers have used neural networks for log anomaly detection. Du et al. [] proposed DeepLog, which models a system log as a natural language sequence using a long short-term memory network (LSTM). It learns a model from normal executions to find anomalies in the log data. If a detected pattern matches one of the learned patterns, it is regarded as normal; otherwise, it is regarded as abnormal. To create S-LSTM, Vinayakumar et al. [] added recurrent LSTM layers to the hidden layer of an existing LSTM network. The model can quickly learn temporal behavior from sparse representations. Operational log samples of normal and abnormal events occurring within 1 min intervals are modeled as time series to detect and classify normal and abnormal events. Zhang et al. [] proposed LogRobust. This approach first extracts the semantic information of log events and represents it as semantic vectors. It then detects anomalies with an attention-based Bi-LSTM model. The model can automatically determine the significance of different log events and extract contextual information from the log sequences.

In addition, system logs have distinctive temporal characteristics, and their sequences sometimes have long dependencies. For instance, some novel attacks do not cause damage immediately after they are launched. Instead, the damage occurs only after certain conditions are met or several regular operations are carried out, which appears in the log sequences as a long-term dependency. A temporal convolutional network (TCN) [] can model both long- and short-term dependencies in time series data. Its convolutions are easy to parallelize, so network training and validation complete more quickly. While modeling distant relations, a TCN also mitigates the exploding and vanishing gradient problems, particularly for long sequences. It also uses less memory during training. Because of its strong time series analysis capability, the TCN is used extensively across many fields. Yang et al. [] applied a TCN to log anomaly detection, replacing the fully connected layer with adaptive global average pooling. This improved detection performance and reduced overfitting. Wang et al. [] proposed LightLog, a lightweight log anomaly detection approach. It addresses the lack of sufficient computing power on edge devices for accurate anomaly detection. LightLog considerably reduces the number of parameters and computations of a standard TCN while improving detection performance.

In this paper, we propose ETCNLog, a novel method for handling longer sequence logs. First, a cleaning step is added to the Drain parser []. This improves the parsing of semi-structured logs and provides a solid foundation for subsequent anomaly detection []. ETCNLog's anomaly detection model is built on efficient channel attention (ECA) and a TCN, and consists primarily of dilated convolutions, residual blocks and an efficient channel attention module []. An important advantage of a TCN is its large receptive field, which allows it to model the long-term dependencies of logs. Combined with ECA, the model can automatically learn the importance of different log event sequences and detect hidden dependencies within them. This improves time series feature learning and yields high accuracy for longer sequence log anomaly detection. The major contributions are summarized as follows:

- By adding a cleaning step to Drain, we address the insufficient accuracy of the existing log templates, which benefits subsequent log anomaly detection;

- We propose an anomaly detection model based on ECA and a TCN to mine long-term dependencies in log sequences. The residual block of the TCN is enhanced with multi-kernel pointwise convolution, and the ECA module is appended after the residual block, so the model handles longer sequence logs better. Furthermore, the model automatically learns the significance of different log event sequences and discovers hidden dependencies within them to improve the accuracy of anomaly detection;

- We run extensive experiments on the BGL dataset. The experimental results demonstrate that ETCNLog improves the accuracy of system log anomaly detection.

The rest of this paper is structured as follows. Section 2 presents related work. Section 3 describes the proposed log anomaly detection method ETCNLog, including the overall detection framework, log processing, and the anomaly detection model. Section 4 reports experiments that assess the performance of the proposed model, and Section 5 summarizes the paper.

2. Related Work

Log parsing, feature extraction, and anomaly detection are the three crucial phases of log anomaly detection. For each phase, we review the related work [].

2.1. Log Parsing

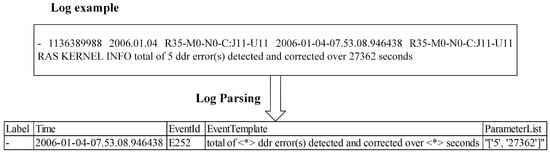

Log parsing extracts structured data from semi-structured log data. Log parsing aims to separate the constant and variable parts of the raw log to generate a uniformly formatted log template, as shown in Figure 1.

Figure 1.

Overview of log parsing.

There are two primary categories of log parsing techniques: clustering-based and heuristic-based. Clustering-based techniques are a form of unsupervised learning. The LogSig method proposed by Tang et al. [] categorizes log messages into a set of event types by searching for the most representative message signatures. LogSig can handle various types of log data and can incorporate human domain knowledge to achieve high performance. Ning et al. [] proposed the Heterogeneous Log Analyzer (HLAer), a heterogeneous log analysis system. HLAer first categorizes and indexes heterogeneous logs using hierarchical clustering. It then tokenizes the logs of each type, a common procedure in natural language processing, and builds a distance function between logs.

Heuristic-based log parsing approaches use the format information of the logs, or the words they contain, to design a heuristic algorithm suited to the logs and extract log templates. Spell [] uses the longest common subsequence to search log groups online. By accelerating the search with subsequence matching and prefix trees, it was the first to solve the problem of extracting log templates online. Drain [] parses raw log messages in a streaming fashion. A fixed-depth parse tree guides the search for log groups, and specially designed parsing rules are encoded compactly in the parse tree nodes. In this paper, Drain is selected to parse semi-structured log data into structured log events and generate log templates. The log templates are then updated by cleaning the duplicate data in them.
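To make the parse-tree idea concrete, here is a heavily simplified, Drain-style parser. It is a minimal sketch under stated assumptions, not Drain's reference implementation. It keeps only two tree levels (message length, then first token) and a positional token-similarity test. The digit-masking rule, the threshold `sim_th = 0.5`, and all function names are illustrative assumptions.

```python
import re


def preprocess(message):
    """Tokenize on whitespace and mask tokens containing digits (a common preprocessing rule)."""
    return ["<*>" if re.search(r"\d", tok) else tok for tok in message.split()]


def similarity(template, tokens):
    """Fraction of positions where the template and the new message agree."""
    same = sum(1 for a, b in zip(template, tokens) if a == b)
    return same / len(tokens)


def merge(template, tokens):
    """Positions where the template and the message differ become wildcards."""
    return [a if a == b else "<*>" for a, b in zip(template, tokens)]


def parse(messages, sim_th=0.5):
    """Simplified Drain-style parsing of non-empty log messages.

    Tree levels: message length -> first token -> list of log group ids.
    Returns the final templates and the group id assigned to each message.
    """
    tree = {}
    templates = []  # group id -> current template tokens
    group_of = []   # message index -> group id
    for msg in messages:
        tokens = preprocess(msg)
        leaf = tree.setdefault(len(tokens), {}).setdefault(tokens[0], [])
        best, best_sim = None, -1.0
        for gid in leaf:
            s = similarity(templates[gid], tokens)
            if s > best_sim:
                best, best_sim = gid, s
        if best is not None and best_sim >= sim_th:
            templates[best] = merge(templates[best], tokens)
        else:  # no sufficiently similar group: start a new one
            best = len(templates)
            templates.append(tokens)
            leaf.append(best)
        group_of.append(best)
    return [" ".join(t) for t in templates], group_of


logs = [
    "total of 5 ddr error(s) detected and corrected over 27362 seconds",
    "total of 12 ddr error(s) detected and corrected over 3600 seconds",
    "instruction cache parity error corrected",
]
templates, groups = parse(logs)
print(templates)
print(groups)
```

Messages are routed by length and first token before any similarity comparison is made. This mirrors how Drain's fixed-depth tree limits the number of groups each new message must be compared against.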

2.2. Feature Extraction

Anomaly detection depends on the feature representation of log templates, so useful features must be extracted from them. NLP techniques such as bag of words [], term frequency–inverse document frequency (TF-IDF) [], and Word2Vec [] have attracted researchers' interest for vectorizing log templates.

Several mapping techniques have been developed to transform raw logs into meaningful representations. He et al. [] counted the occurrences of each log event, based on bag of words, to form an event count vector. Meng et al. [] proposed LogClass, which improves the classical TF-IDF weighting method. It constructs template features by replacing the inverse document frequency with the inverse location frequency. Li et al. [] proposed SwissLog to deal with changing and unknown log formats. It uses a BERT encoder to encode log templates and extract contextual information from log statements.
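The bag-of-words event count vector used by He et al. can be sketched in a few lines. It counts how often each event ID occurs in a log sequence. The event IDs and the window below are hypothetical.

```python
from collections import Counter


def event_count_vector(sequence, event_ids):
    """Bag of words over log events: occurrences of each event ID in the sequence."""
    counts = Counter(sequence)
    return [counts.get(e, 0) for e in event_ids]


event_ids = ["E1", "E2", "E3", "E4"]       # hypothetical event vocabulary
window = ["E1", "E2", "E2", "E4", "E2"]    # hypothetical log sequence
print(event_count_vector(window, event_ids))  # [1, 3, 0, 1]
```

This representation discards event order. That is one reason the sequence models discussed below are used for logs with long dependencies.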

Bertero et al. [] treated logs as regular text. A word-embedding technique based on the Word2Vec algorithm is first applied to map the words in the log messages to a high-dimensional vector space. The word coordinates are then transformed into log event vectors, which are in turn transformed into log sequence vectors. Meng et al. proposed LogAnomaly [], which extracts semantic vectors with Word2Vec to retain more high-level semantic information. Their subsequent research proposed an application-specific log word-embedding technique [] that can extract precise semantic information and handle new words that are not predefined. In actual application scenarios, the volume of log data surges and its format keeps changing. In this study, we use Word2Vec and the post-processing algorithm (PPA) [] to build a low-dimensional semantic vector space that provides a more compact and effective feature representation of log templates.

2.3. Anomaly Detection

The anomaly detection problem can be defined as a binary classification problem that determines whether the input logs are normal or abnormal. An anomaly detection model is trained on the vectors output by feature extraction. It is then used to detect anomalies in new input data. Both machine learning and deep learning methods can be used for log anomaly detection.

Deep learning methods generally perform better than conventional machine learning methods for log anomaly detection. Chen et al. [] used LSTM to extract sequential patterns from logs. To reduce the impact of noise in abnormal log sequences, they proposed a novel transfer learning method that shares a fully connected network between the source and target systems. Refs. [,] analyze various LSTM models for anomaly detection, such as bidirectional LSTM and stacked LSTM. A TCN has been reported to be more accurate than LSTM in prediction tasks. Chen et al. [] designed a TCN-based probabilistic forecasting framework for multiple correlated time series. The framework can estimate probability densities in both parametric and nonparametric settings. Stacked residual blocks based on dilated convolution are used to better capture the temporal dependency of the series. He et al. [] trained a TCN on normal series and then applied it to forecast trends over a range of time steps. The prediction errors are fitted with a Gaussian distribution to decide whether a sequence is abnormal.
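The Gaussian error model described for He et al. can be sketched as follows. Fit a normal distribution to prediction errors collected on normal data, then flag errors that are improbable under it. The two-sided test, the significance level `alpha = 0.01`, and the sample errors are illustrative assumptions; the original work's exact decision rule may differ.

```python
from statistics import NormalDist, mean, stdev


def fit_error_model(normal_errors):
    """Fit a Gaussian to prediction errors observed on normal series."""
    return NormalDist(mean(normal_errors), stdev(normal_errors))


def is_anomalous(error, model, alpha=0.01):
    """Flag an error whose two-sided tail probability under the Gaussian is below alpha."""
    z = abs(error - model.mean) / model.stdev
    p = 2 * (1 - NormalDist().cdf(z))
    return p < alpha


# Hypothetical prediction errors of a forecaster on normal data.
normal_errors = [0.10, 0.12, 0.09, 0.11, 0.10, 0.08, 0.12]
model = fit_error_model(normal_errors)
print(is_anomalous(0.105, model), is_anomalous(0.5, model))  # False True
```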

As pointed out in [], although many methods work well for the intelligent detection of log data, with the increase in log data quantity and complexity in actual scenarios, anomaly detection from real log sequences becomes difficult. In this research, we build a system log anomaly detection model based on ECA and a TCN. The model can handle longer sequence logs more effectively while automatically learning the significance of various log event sequences, spotting hidden dependencies within the sequences and improving the accuracy of anomaly detection.

3. Methodology

In this section, we describe the proposed ETCNLog method in detail. We first present the ETCNLog framework. We then describe the log processing step that converts raw logs into log sequence vectors. Finally, we describe how ECA and a TCN are used to learn log sequence relationships and perform log anomaly detection.

3.1. ETCNLog Framework

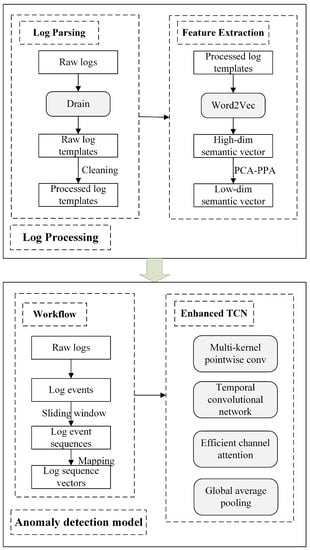

Our ETCNLog framework aims to use the semantic and sequential relationships of logs to handle longer sequence logs better. Additionally, it improves the accuracy of log anomaly detection by automatically learning the significance of various log event sequences and spotting hidden dependencies within the sequences. As shown in Figure 2, the ETCNLog framework mainly consists of log processing and an anomaly detection model.

Figure 2.

ETCNLog framework.

3.2. Log Processing

Because developers write free-text log messages in the source code, raw logs are usually semi-structured, so log parsing is the first step of log processing. A raw log consists of constant and variable parts. Consider the BGL log shown in Figure 1: “- 1136389988 2006.01.04 R35-M0-N0-C:J11-U11 2006-01-04-07.53.08.946438 R35-M0-N0-C:J11-U11 RAS KERNEL INFO total of 5 ddr error(s) detected and corrected over 27362 seconds”. Its message can be considered to consist of the constant part “total of <*> ddr error(s) detected and corrected over <*> seconds” and the variables “5” and “27362”. The constant part “total of <*> ddr error(s) detected and corrected over <*> seconds” can then serve as the template for all similar log messages.
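The separation of constants and variables in the BGL example above can be illustrated with a short sketch. It assumes the nine-header-field BGL line layout used in the Loghub log collection, where the label “-” marks a non-alert (normal) message. It also assumes that purely numeric tokens are the variables. Both are simplifying assumptions for this one message, not a general parser.

```python
import re

# Assumed BGL header layout (as in the Loghub distribution); the rest of the line is Content.
BGL_FIELDS = ["Label", "Timestamp", "Date", "Node", "Time",
              "NodeRepeat", "Type", "Component", "Level"]


def split_bgl_line(line):
    """Split a raw BGL line into its header fields and the free-text Content."""
    parts = line.split(maxsplit=len(BGL_FIELDS))
    record = dict(zip(BGL_FIELDS, parts))
    record["Content"] = parts[len(BGL_FIELDS)] if len(parts) > len(BGL_FIELDS) else ""
    return record


def to_template(content):
    """Mask purely numeric tokens as <*> and return (template, variables)."""
    tokens = content.split()
    variables = [t for t in tokens if re.fullmatch(r"\d+", t)]
    template = " ".join("<*>" if re.fullmatch(r"\d+", t) else t for t in tokens)
    return template, variables


line = ("- 1136389988 2006.01.04 R35-M0-N0-C:J11-U11 2006-01-04-07.53.08.946438 "
        "R35-M0-N0-C:J11-U11 RAS KERNEL INFO total of 5 ddr error(s) "
        "detected and corrected over 27362 seconds")
record = split_bgl_line(line)
template, variables = to_template(record["Content"])
print(template)   # total of <*> ddr error(s) detected and corrected over <*> seconds
print(variables)  # ['5', '27362']
```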

In short, log parsing can be seen as reversing log printing and is a common processing step for semi-structured logs. Parsed log templates provide the foundation for log anomaly detection. Drain is currently one of the better log parsing tools, but it does not always parse the raw logs correctly. As a result, if Drain's templates are used directly for anomaly detection, it is hard to obtain the best results.

We use the BGL dataset as our study object because it allows the reliability of ETCNLog to be reflected more accurately. Parsing the BGL log data with Drain produces 374 different log templates, as shown in Table 1, and some of these templates are duplicates. For instance, the two templates in {E67, E68} are similar, and E67 may contain the other. Likewise, the two templates in {E69, E70} are similar, and E69 may contain the other. Building log event sequences directly from these templates would significantly degrade the effectiveness of log anomaly detection. In this research, we therefore improve log parsing by adding a step that cleans duplicate templates.

Table 1.

Log templates obtained by parsing BGL logs through the Drain.

To improve the accuracy of log parsing, we perform a cleaning operation. First, we select a few representative log entries matching each log template and check whether the templates are duplicates. Duplicate templates are then merged into the one that occurs most frequently. Table 2 shows the result of cleaning the log templates in Table 1.
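The merging rule can be sketched as follows. In the paper, the duplicate groups are identified by inspecting representative log entries, so here they are passed in as input. The event stream and counts below are hypothetical; only the group shapes mirror {E67, E68} and {E69, E70} from Table 1.

```python
from collections import Counter


def merge_duplicate_templates(event_ids, duplicate_groups):
    """Replace each template in a duplicate group by the group's most frequent template.

    event_ids: parsed event ID of every log, in order.
    duplicate_groups: tuples of template IDs judged to be duplicates.
    """
    counts = Counter(event_ids)
    canonical = {}
    for group in duplicate_groups:
        keep = max(group, key=lambda e: counts[e])  # first ID in the tuple wins on ties
        for e in group:
            canonical[e] = keep
    return [canonical.get(e, e) for e in event_ids]


# Hypothetical event stream.
events = ["E67", "E68", "E67", "E69", "E70", "E70", "E1"]
cleaned = merge_duplicate_templates(events, [("E67", "E68"), ("E69", "E70")])
print(cleaned)  # ['E67', 'E67', 'E67', 'E70', 'E70', 'E70', 'E1']
```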

Table 2.

Cleaned BGL log templates.

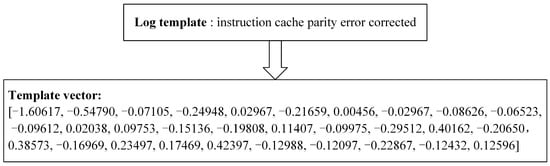

The second step of log processing is feature extraction. We use Word2Vec [] to convert log templates into semantic vectors. The mapping preserves the semantic similarity of templates and projects each template into a 300-dimensional vector. We further reduce these semantic vectors with PPA [] to obtain more compact and effective feature representations. PPA is a post-processing technique that, combined with principal component analysis (PCA), constructs efficient reduced word embeddings. Additional features are embedded from the null space of PCA, while the resulting feature representations remain concise. The algorithm has two steps. The first step reduces the dimensionality of the semantic vectors with PCA. The second step applies PPA to the PCA-reduced vectors, removing the mean vector and the dominating directions from the PCA space. The hyperparameter d, set to 7 by default following the implementation in [], determines the number of removed directions.
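For reference, the PPA step applied after PCA can be written as below, in its commonly used form. The notation is ours and is an assumption: $v(w)$ is the PCA-reduced vector of template $w$, $V$ is the set of templates, and $d$ is the number of removed directions (7 by default). The centered vectors have the mean removed. The projections onto the top $d$ principal directions are then subtracted.

$$
\mu = \frac{1}{|V|} \sum_{w \in V} v(w), \qquad \tilde{v}(w) = v(w) - \mu
$$

$$
u_1, \dots, u_d = \text{the top-}d \text{ principal components of } \{\tilde{v}(w)\}_{w \in V}
$$

$$
v'(w) = \tilde{v}(w) - \sum_{i=1}^{d} \left( u_i^{\top} \tilde{v}(w) \right) u_i
$$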

Figure 3 illustrates the log template vectorization procedure. Using Word2Vec and PCA-PPA, each log template is turned into a compact and effective 30-dimensional vector. Making full use of the semantic information in the logs yields a better log vector representation.

Figure 3.

Example of log template vectorization.

3.3. Anomaly Detection Model

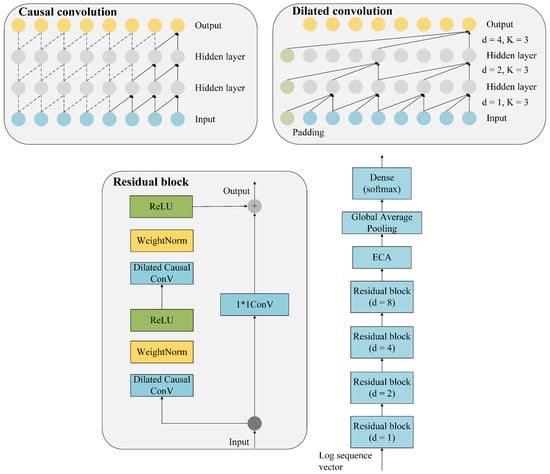

Logs are a unique type of text data, and their semantic and temporal information merits deeper mining and research. We therefore use a sliding window to split the log events into log event sequences. These sequences are converted into log sequence vectors using the semantic vectors obtained from feature extraction. The log sequence vectors are then fed to the anomaly detection model. We design a model based on ECA and a TCN for log anomaly detection. Figure 4 shows the architecture of the anomaly detection model.

Figure 4.

The model architecture has four essential components: causal convolution, dilated convolution, enhanced residual block, and efficient channel attention module. The residual block consists of two layers of convolution.

A TCN is a special kind of convolutional neural network. Because of the limited convolutional kernel size, traditional convolutional neural networks are generally considered ill-suited to modeling temporal problems and unable to capture long-range dependencies well. In a TCN, the number of layers, the convolutional kernel size and the dilation rate determine the size of the receptive field. A TCN can therefore be flexibly customized to the requirements of different tasks.

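Causal convolution is required for sequence problems: the output $y_t$ must be predicted from $x_1, x_2, \dots, x_t$ only. Define a filter $F = (f_1, f_2, \dots, f_K)$ and a sequence $X = (x_1, x_2, \dots, x_T)$. The causal convolution at $x_t$ is:

$$
(F * X)(x_t) = \sum_{k=1}^{K} f_k \, x_{t-K+k}
$$

Assume that the last two nodes of the input layer are $x_{t-1}$ and $x_t$, respectively, and that the last node of the first hidden layer is $y_t$. With the filter $F = (f_1, f_2)$, $y_t$ can be calculated using the following formula:

$$
y_t = f_1 x_{t-1} + f_2 x_t
$$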

The kernel size limits how far back in time a causal convolution can look. Dilated convolution captures longer dependencies by inserting gaps between the taps of a regular convolution, which expands the receptive field. It introduces an additional hyperparameter, the dilation rate, which is the spacing between adjacent kernel taps. When the dilation rate grows exponentially with depth, the effective window also grows exponentially with the number of layers. This lets the network achieve a wide receptive field with fewer layers. The hyperparameters of dilated convolution are the dilation rate $d$ and the kernel size $K$. With the filter $F = (f_1, f_2, \dots, f_K)$ and the sequence $X = (x_1, x_2, \dots, x_T)$, the dilated convolution with dilation rate $d$ at $x_t$ is:

$$
(F *_d X)(x_t) = \sum_{k=1}^{K} f_k \, x_{t-(K-k)d}
$$

Consider $d = 2$ and $K = 3$. Assume that $x_{t-4}, x_{t-3}, x_{t-2}, x_{t-1}, x_t$ are the last five nodes of the first hidden layer and that the last node of the second hidden layer is $y_t$. With the filter $F = (f_1, f_2, f_3)$, the formula gives:

$$
y_t = f_1 x_{t-4} + f_2 x_{t-2} + f_3 x_t
$$
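The causal and dilated convolution formulas can be checked numerically with a plain-Python sketch of $(F *_d X)(x_t) = \sum_{k=1}^{K} f_k x_{t-(K-k)d}$. Positions before the start of the sequence are treated as zeros (left padding); this padding choice is our assumption. It keeps the output the same length as the input and strictly causal.

```python
def dilated_causal_conv(x, f, d=1):
    """Compute y_t = sum_{k=1}^{K} f_k * x_{t-(K-k)d} for every t.

    Indices before the start of the sequence contribute zero (left padding),
    so y_t never depends on inputs after x_t. With d = 1 this is the plain
    causal convolution.
    """
    K = len(f)
    y = []
    for t in range(len(x)):
        acc = 0.0
        for k in range(1, K + 1):
            idx = t - (K - k) * d
            if idx >= 0:
                acc += f[k - 1] * x[idx]
        y.append(acc)
    return y


x = [1, 2, 3, 4, 5]
print(dilated_causal_conv(x, [1, 1]))          # [1.0, 3.0, 5.0, 7.0, 9.0]  (causal, K = 2)
print(dilated_causal_conv(x, [1, 1, 1], d=2))  # [1.0, 2.0, 4.0, 6.0, 9.0]  (K = 3, d = 2)
```

The last output of the second call sums $x_{t-4}$, $x_{t-2}$ and $x_t$ (1 + 3 + 5), matching the $d = 2$, $K = 3$ example.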

The receptive field of a single dilated convolution layer is $(K-1)d + 1$; for example, it is 5 when $K = 3$ and $d = 2$. Increasing either $K$ or $d$ therefore enlarges the receptive field. In practice, $d$ is usually doubled at each successive layer, so it grows exponentially with network depth.
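Stacking layers adds $(K-1)d$ to the receptive field for every convolution, which gives a quick way to estimate how far back the network can see. The sketch below assumes a kernel size of $K = 3$, which is not given in the text. It uses the dilation rates 1, 2, 4 and 8 from this section and optionally counts two convolutions per residual block.

```python
def receptive_field(kernel_size, dilations, convs_per_block=1):
    """Receptive field of stacked dilated causal convolutions.

    Each convolution with kernel size K and dilation d widens the field by (K - 1) * d.
    """
    return 1 + convs_per_block * sum((kernel_size - 1) * d for d in dilations)


print(receptive_field(3, [2]))                              # 5, a single layer: (K - 1)d + 1
print(receptive_field(3, [1, 2, 4, 8]))                     # 31
print(receptive_field(3, [1, 2, 4, 8], convs_per_block=2))  # 61
```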

Residual connections have proven effective for training deep networks, since they enable cross-layer information transfer inside the network. In this architecture, causal convolution ensures that only input data up to and including the current timestamp t are used to predict the output at timestamp t. Dilated convolution enriches the features extracted from different receptive regions. In our work, we use four dilation rates, d = 1, 2, 4, and 8. When d = 1, dilated convolution degenerates into ordinary convolution.

Without dimensionality reduction, the ECA module [] aggregates convolutional features using global average pooling (GAP) []. It then applies a one-dimensional convolution across neighboring channels, whose kernel size $K$ is determined adaptively. A sigmoid function turns the result into channel attention weights. In ECA, high-dimensional (low-dimensional) channels interact over a longer (shorter) range, that is, they use a larger (smaller) one-dimensional kernel. ECA therefore assumes a nonlinear mapping $\phi$ between the channel dimension $C$ and the kernel size $K$. Because the channel dimension is usually a power of 2, the mapping is chosen as an exponential function:

$$
C = \phi(K) = 2^{(\gamma K - b)}
$$

The size of the convolutional kernel is then:

$$
K = \psi(C) = \left| \frac{\log_2 C}{\gamma} + \frac{b}{\gamma} \right|_{odd}
$$

where $|t|_{odd}$ denotes the odd number nearest to $t$, and $\gamma$ and $b$ are set to 2 and 1, respectively. Here $K$ is the kernel size of the ECA convolution, not that of the TCN.
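The ECA steps can be sketched in plain Python: GAP over time, adaptive kernel size, a 1D convolution across channels, a sigmoid, and channel rescaling. This is a minimal illustration, not the authors' Keras layer. The uniform kernel weights stand in for learned ones and are an assumption. The odd-number rounding (truncate, then add 1 if even) follows the widely used reference ECA implementation.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """K = |log2(C)/gamma + b/gamma|_odd: truncate, then bump even values to the next odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def eca(feature_maps, weights=None):
    """Efficient channel attention over C channels of a 1D feature map.

    feature_maps: list of C channels, each a list of T values.
    weights: shared 1D kernel across channels (hypothetical; uniform if omitted).
    Returns the rescaled feature maps and the per-channel attention weights.
    """
    C = len(feature_maps)
    k = eca_kernel_size(C)
    if weights is None:
        weights = [1.0 / k] * k
    # 1) Global average pooling over time: one descriptor per channel.
    z = [sum(ch) / len(ch) for ch in feature_maps]
    # 2) 1D convolution across neighbouring channels, zero padding, same length.
    pad = k // 2
    conv = []
    for c in range(C):
        s = 0.0
        for j in range(k):
            idx = c + j - pad
            if 0 <= idx < C:
                s += weights[j] * z[idx]
        conv.append(s)
    # 3) Sigmoid gives one attention weight per channel; 4) rescale each channel.
    att = [1.0 / (1.0 + math.exp(-v)) for v in conv]
    return [[a * v for v in ch] for a, ch in zip(att, feature_maps)], att


print([eca_kernel_size(c) for c in (16, 64, 128, 256)])  # [3, 3, 5, 5]
out, att = eca([[1.0, 1.0], [0.0, 0.0], [-1.0, -1.0], [2.0, 2.0]])
```

Because the kernel only spans $K$ neighbouring channels, ECA adds just $K$ parameters per module. This is why it can be appended after a residual block at little cost.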

We propose two improvements to the anomaly detection model:

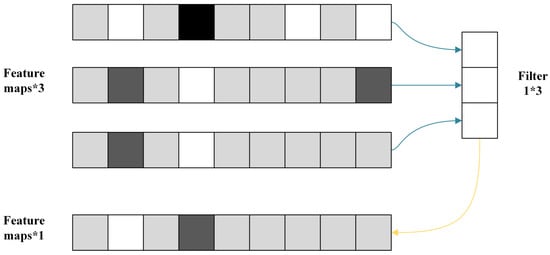

- The residual block is enhanced by adding multiple convolutional kernels to enrich the low-level feature representation. In addition, the pointwise convolution used in MobileNet [] is employed in the TCN architecture to reduce the number of model parameters. Pointwise convolution creates a new feature map as a weighted fusion of the feature maps from the preceding layer. Figure 5 shows three one-dimensional feature maps being fused into a new feature map by a 1 × 3 kernel;

Figure 5. Pointwise convolution.

- The ECA module is appended after the residual block. It is a lightweight attention module with strong generalizability that is easy to integrate into different network architectures. ECA can detect hidden dependencies within the sequences and automatically learn the significance of various log sequences.
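The weighted fusion performed by pointwise convolution can be sketched as follows. Each output value at time t is a weighted sum of the input feature maps at that same t, as in the three-map, 1 × 3 kernel example of Figure 5. The weights below are hypothetical. A layer producing several output maps would simply apply one such weight vector per output map.

```python
def pointwise_conv(feature_maps, weights, bias=0.0):
    """1x1 convolution: out[t] = bias + sum_c weights[c] * feature_maps[c][t]."""
    T = len(feature_maps[0])
    return [bias + sum(w * fm[t] for w, fm in zip(weights, feature_maps)) for t in range(T)]


maps = [[1, 2, 3], [0, 1, 0], [2, 2, 2]]      # three 1D feature maps
print(pointwise_conv(maps, [0.5, 1.0, -1.0]))  # [-1.5, 0.0, -0.5]
```

A 1 × 1 kernel needs only one weight per input map (plus a bias), so mixing channels this way is much cheaper in parameters than a wider convolution.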

The output feature map of the final convolutional layer is then averaged with GAP instead of being passed to a fully connected layer. This keeps the number of model parameters small, which helps prevent overfitting and increases the reliability of the model.

4. Experiment Section

ETCNLog is implemented with Keras [] on a TensorFlow [] backend. In this section, we evaluate ETCNLog by comparing it with several current log anomaly detection methods. Section 4.1 describes the experiment setting, including the evaluation dataset, metrics, and experiment configuration. Section 4.2 uses the control variable method to tune the model hyperparameters. Section 4.3 compares ETCNLog with current methods, including DeepLog [], LightLog [], and LogAnomaly [], to test the accuracy of the model.

4.1. Experiment Setting

The HDFS and BGL datasets are the two log datasets most commonly used in research. This paper studies anomaly detection for longer sequence logs. When divided by block ID, the HDFS dataset yields many short sequences, whereas the BGL dataset has no such division. We therefore chose the BGL dataset as our study subject. BGL consists of logs collected from the IBM Blue Gene/L supercomputer at Lawrence Livermore National Laboratory (LLNL). Table 3 shows the basic information of the BGL dataset. The BGL dataset contains 4,747,963 log messages, of which 348,460 are abnormal.

Table 3.

Overview of the BGL dataset.

We evaluated the model using Precision, Recall, and F1-score []. Precision is the ratio of correctly predicted abnormal logs to all logs predicted as abnormal:

$$
\text{Precision} = \frac{TP}{TP + FP}
$$

Recall is the ratio of correctly predicted abnormal logs to all actual abnormal logs:

$$
\text{Recall} = \frac{TP}{TP + FN}
$$

The F1-score is the harmonic mean of Precision and Recall, so it is a comprehensive evaluation index that reflects changes in both:

$$
F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}
$$

In the above equations, TP (true positives) is the number of abnormal logs correctly predicted as abnormal. TN (true negatives) is the number of normal logs correctly predicted as normal. FP (false positives) is the number of normal logs incorrectly predicted as abnormal. FN (false negatives) is the number of abnormal logs incorrectly predicted as normal. Because it balances Precision and Recall, the F1-score assesses model performance more completely than either metric alone.
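The three metrics can be computed directly from the confusion counts. As a check, the sketch below uses the initial-experiment counts reported in Section 4.2 (TP = 1323, FP = 505, FN = 83). It reproduces the Precision, Recall and F1-score values reported there.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, Recall and F1-score from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


p, r, f1 = precision_recall_f1(tp=1323, fp=505, fn=83)
print(round(p, 4), round(r, 4), round(f1, 4))  # 0.7237 0.941 0.8182
```

TN does not enter any of the three metrics. This is why they remain informative on BGL, where normal sequences heavily outnumber abnormal ones.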

Our experiments were performed on a Windows machine with an Intel(R) Core(TM) i5-4200H CPU @ 2.80 GHz and 8 GB of RAM. ETCNLog was implemented with TensorFlow 1.8.0 and Keras 2.1.6. Since training is a binary classification task, the loss was binary cross-entropy. The Adam optimizer was used to train ETCNLog with an initial learning rate of 0.0005. In addition, we used Softmax as the activation function of the output layer and set the number of training epochs to 100.

4.2. Hyperparameter Analysis

The basic hyperparameters are initially set up as given in Table 4 below. We can see the five core hyperparameters: size, sequence_length, step, batch_size, and np_epoch. Hyperparameter size indicates the dimension of the template vector, which directly affects the running time of the experiment. Generally speaking, as the template vector’s dimension increases, the computing time required increases.

Table 4.

Initial hyperparameter configuration table of the model.

We divide the BGL log events with a sliding window to produce log event sequences; the unit of the sliding window is one log event. The hyperparameter sequence_length is the size of the sliding window. The hyperparameter step is the stride of the sliding window, which determines how many log event sequences are obtained. A log event sequence is labeled abnormal if it contains any abnormal log. The remaining two hyperparameters in the table, batch_size and np_epoch, are training hyperparameters of the TCN network. batch_size is the amount of data in each batch when the data are processed in batches, and np_epoch is the number of training epochs.
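The windowing and labeling rule can be sketched as follows. The event stream, labels and window sizes are hypothetical toy values, not the settings of Table 4. Dropping a trailing partial window is our assumption; the text does not say how the end of the stream is handled.

```python
def sliding_windows(events, labels, sequence_length, step):
    """Split an event-ID stream into fixed-length windows.

    events: log event IDs in time order; labels: 1 for an abnormal log, 0 otherwise.
    A window is labeled abnormal (1) if it contains at least one abnormal log.
    A trailing partial window shorter than sequence_length is dropped.
    """
    sequences, seq_labels = [], []
    for start in range(0, len(events) - sequence_length + 1, step):
        end = start + sequence_length
        sequences.append(events[start:end])
        seq_labels.append(int(any(labels[start:end])))
    return sequences, seq_labels


events = ["E1", "E2", "E3", "E4", "E5", "E6", "E7", "E8"]
labels = [0, 0, 0, 0, 0, 1, 0, 0]
seqs, y = sliding_windows(events, labels, sequence_length=4, step=2)
print(y)  # [0, 1, 1]
```

A smaller step yields more, overlapping sequences, so a single abnormal log can mark several windows as abnormal. Each event ID in a window is then replaced by its template vector to form the model input.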

With the initial hyperparameters in Table 4, the model produces 51,695 normal and 7220 abnormal log event sequences. To simulate real-world settings where log data keep changing, we randomly selected 80% of the log event sequences as training data and used the remaining 20% as test data. We therefore trained the model on 47,132 log event sequences and tested it on 11,783. The initial experiment yielded TP = 1323, FP = 505, TN = 9872, and FN = 83, from which we calculated Precision = 0.7237, Recall = 0.9410, and F1-score = 0.8182. The initial configuration thus already achieves high Recall, but its Precision leaves room for improvement. We used the control variable method to tune the hyperparameters size and step. First, size was adjusted while the other hyperparameters were kept unchanged, ranging from 10 to 50 in increments of 10. The experimental results are shown in Table 5.

Table 5.

Effect of size on TP, FP, TN, and FN.

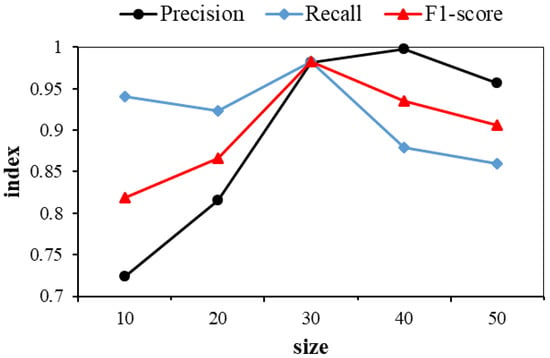

We calculated the Precision, Recall and F1-score metrics for different size values in turn. To better observe the influence of the hyperparameter size, we plotted the variation of the Precision, Recall and F1-score values with the size, as shown in Figure 6 below.

Figure 6.

Effect of size on Precision, Recall and F1-score.

Figure 6 shows the effect of the template vector dimension on model performance. Precision and F1-score reach their highest values at size = 30, while Recall peaks at size = 40. Balancing detection performance against computational cost, we set size to 30. The initial value of the hyperparameter step is 80; we varied it from 40 to 120 in increments of 20. Changing step also changes the number of test log event sequences, as shown in Table 6.

Table 6.

Effect of step on the number of log event sequences.

The experimental results are shown in Table 7.

Table 7.

Effect of step on TP, FP, TN, and FN.

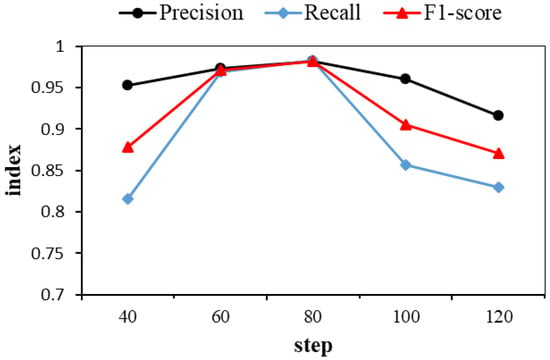

Precision, Recall and F1-score were calculated sequentially for different values of step. To visualize the effect of the step on model performance, we plotted Figure 7 to show the changes.

Figure 7.

Effect of step on Precision, Recall, and F1-score.

Figure 7 represents the effect of the step of sliding window on model performance. The figure shows that the peak of Precision, Recall, and F1-score occurs at step = 80. Consequently, the value of step should be set to 80. In conclusion, Table 8 below shows the final values for hyperparameters after the hyperparameter analysis.

Table 8.

Final hyperparameter configuration table of the model.

4.3. Performance Comparison

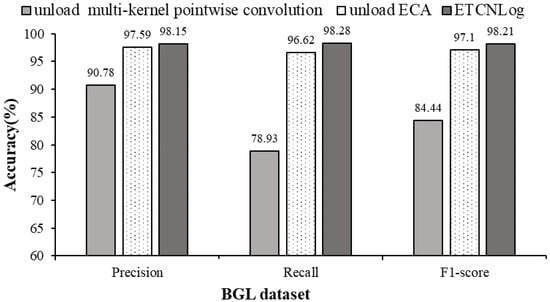

We set up two groups of ablation experiments to analyze the effect of the proposed improvements. In these two groups, the multi-kernel pointwise convolution and the ECA module, respectively, were removed from the model. All other steps were kept the same as in the original experiments. The results on the BGL dataset are shown in Figure 8 below.

Figure 8.

Comparison of model analysis.

Compared with the model without multi-kernel pointwise convolution, ETCNLog improves the F1-score by 13.77%. This indicates, first, that using multiple convolutional kernels in the residual block enriches the feature representation of the lower layers and, second, that pointwise convolution, by computing a weighted fusion of the previous layer's feature maps, produces effective feature maps. Compared with the model without ECA, ETCNLog improves the F1-score by 1.11%, indicating that the ECA module automatically learns the importance of different log sequences, captures hidden dependencies within them, and improves anomaly detection accuracy. Overall, both proposed innovations contribute to the performance of the full model.
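To make the two ablated components concrete, the NumPy sketch below builds one residual block in which several dilated causal convolutions with different kernel sizes run in parallel, a pointwise (1×1) convolution fuses their concatenated feature maps, and an ECA step reweights the channels. The ECA part follows the published ECA-Net recipe (global average pooling, a 1D convolution across channels with an adaptively chosen odd kernel size, then a sigmoid). The branch kernel sizes (2, 3, 5), the ReLU activations, placing ECA before the residual addition, the shapes T = 80 and C = 30 (echoing step and size above), and all function names are assumptions for illustration, not the authors' ETCNLog implementation.

```python
import math
import numpy as np


def causal_conv1d(x: np.ndarray, w: np.ndarray, dilation: int = 1) -> np.ndarray:
    """Dilated causal convolution.

    x: (T, C_in) sequence; w: (k, C_in, C_out) kernel. The input is left-padded
    with zeros so that output step t only depends on inputs at steps <= t.
    """
    k, T = w.shape[0], x.shape[0]
    pad = (k - 1) * dilation
    xp = np.concatenate([np.zeros((pad, x.shape[1])), x], axis=0)
    y = np.zeros((T, w.shape[2]))
    for j in range(k):
        start = pad - j * dilation  # tap j looks back j * dilation steps
        y += xp[start:start + T] @ w[j]
    return y


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """ECA-Net rule: truncate (log2(C) + b) / gamma, then bump it to an odd value."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(x: np.ndarray, conv_w: np.ndarray) -> np.ndarray:
    """Efficient channel attention over a (T, C) feature map.

    conv_w: (k,) weights of one 1D convolution shared across all channels.
    """
    s = x.mean(axis=0)                                   # global average pooling over time -> (C,)
    k = conv_w.shape[0]
    sp = np.pad(s, (k // 2, k // 2))                     # zero 'same' padding along the channel axis
    z = np.array([sp[c:c + k] @ conv_w for c in range(s.shape[0])])  # local cross-channel interaction
    attn = 1.0 / (1.0 + np.exp(-z))                      # sigmoid -> per-channel weights in (0, 1)
    return x * attn                                      # rescale every time step's channels


def multi_kernel_block(x, kernels, w_pw, eca_w, dilation):
    """Hypothetical residual block: parallel multi-kernel causal convolutions,
    pointwise fusion, ECA, then a residual connection (requires C_out == C_in)."""
    branches = [np.maximum(causal_conv1d(x, w, dilation), 0.0) for w in kernels]
    fused = np.concatenate(branches, axis=1) @ w_pw     # pointwise (1x1) conv = per-step matmul
    return np.maximum(eca(fused, eca_w) + x, 0.0)


rng = np.random.default_rng(0)
T, C, F = 80, 30, 16  # window length, channels, filters per branch (all assumed)
x = rng.standard_normal((T, C))
kernels = [0.1 * rng.standard_normal((k, C, F)) for k in (2, 3, 5)]
w_pw = 0.1 * rng.standard_normal((len(kernels) * F, C))
eca_w = rng.standard_normal(eca_kernel_size(C))
y = multi_kernel_block(x, kernels, w_pw, eca_w, dilation=2)
print(y.shape)  # (80, 30)
```

Because ECA pools over the whole window, its channel weights depend on every time step in the sequence, which suits classifying a complete log event sequence; only the convolutions themselves are strictly causal. Removing `eca` or collapsing `kernels` to a single branch gives rough analogues of the two ablated variants in Figure 8.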

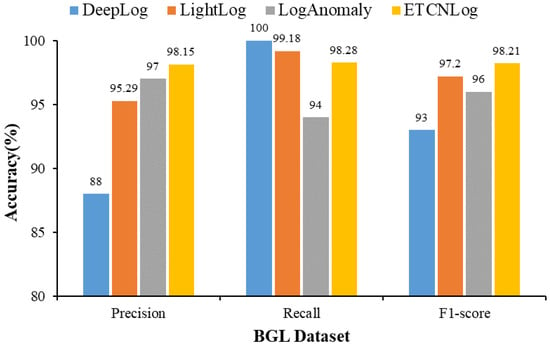

Three log anomaly detection methods, DeepLog, LightLog, and LogAnomaly, were selected as baselines for evaluating the performance of ETCNLog. Figure 9 compares the results of the different models.

Figure 9.

Comparison of different methods.

ETCNLog achieves the highest Precision and F1-score on the BGL dataset, at 98.15% and 98.21%, respectively. DeepLog achieves a slightly higher Recall than ETCNLog, but its Precision reaches only 88%, so its overall performance falls well behind that of ETCNLog. These results show that ETCNLog performs well for anomaly detection on the BGL dataset.

5. Conclusions

In this paper, we propose ETCNLog, a system log anomaly detection method that improves log parsing by adding a cleaning step, which yields more precise log templates. We use Word2Vec and the PCA-PPA algorithm for feature extraction, exploiting semantic information to produce more compact and effective feature representations of log event sequences. Finally, we construct an anomaly detection model based on efficient channel attention and a temporal convolutional network. This model not only handles anomaly detection for longer log sequences but also detects hidden dependencies within sequences and automatically learns the importance of different log event sequences, improving detection accuracy. The experimental results show that ETCNLog reaches an F1-score of 98.21%, outperforming the compared anomaly detection methods.
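The PCA-PPA step mentioned above can be sketched after the post-processing dimensionality reduction of Raunak et al. (listed in the references): apply the post-processing algorithm (PPA), which centers the vectors and removes their top d principal directions, reduce the dimensionality with PCA, then apply PPA again. The setting d = 7 is an assumption, the target dimension 30 merely echoes the value of size selected in Figure 6 (whether size denotes this reduced dimension is also an assumption), and the random input stands in for Word2Vec vectors. ETCNLog's exact variant may differ.

```python
import numpy as np


def ppa(X: np.ndarray, d: int = 7) -> np.ndarray:
    """Post-processing algorithm: center the rows, then remove their
    projections onto the top-d principal directions."""
    Xc = X - X.mean(axis=0)
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)  # rows of vt: principal directions
    top = vt[:d]                                        # (d, dim)
    return Xc - (Xc @ top.T) @ top


def pca_reduce(X: np.ndarray, n_components: int) -> np.ndarray:
    """Project centered rows onto the leading n_components principal directions."""
    Xc = X - X.mean(axis=0)
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ vt[:n_components].T


def ppa_pca_ppa(X: np.ndarray, n_components: int = 30, d: int = 7) -> np.ndarray:
    """PPA -> PCA -> PPA pipeline for compact embedding vectors."""
    return ppa(pca_reduce(ppa(X, d), n_components), d)


rng = np.random.default_rng(42)
vectors = rng.standard_normal((500, 100))  # stand-in for 100-dim Word2Vec vectors
compact = ppa_pca_ppa(vectors)
print(compact.shape)  # (500, 30)
```

The first PPA strips dominant common directions that carry little discriminative information, PCA then keeps the most informative remaining directions, and the second PPA removes the dominant directions that reappear in the reduced space.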

As part of our ongoing efforts to improve the model, future work will use BERT and other attention mechanisms for feature extraction from system logs to mine more comprehensive log feature representations.

Author Contributions

Conceptualization, Y.C. and N.L.; methodology, Y.C.; software, Y.C.; validation, Y.C., J.L. and Q.C.; formal analysis, Y.C.; investigation, J.L.; resources, Y.C.; data curation, Q.C.; writing (original draft preparation), Y.C.; writing (review and editing), Y.C.; visualization, J.L. and Q.C.; supervision, N.L.; project administration, N.L.; funding acquisition, N.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded in part by the National Social Science Fund of China under Grant 20&ZD293, and as part of the Innovation Environment Construction Special 355 Project of Xinjiang Uygur Autonomous Region under Grant PT1811.

Data Availability Statement

A publicly available dataset was analyzed in this study. The data can be found at https://github.com/logpai/loghub.

Acknowledgments

The authors would like to thank the anonymous reviewers for their contributions to this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- He, P.; Zhu, J.; He, S.; Li, J.; Lyu, M.R. An evaluation study on log parsing and its use in log mining. In Proceedings of the 2016 46th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN), Toulouse, France, 28 June–1 July 2016; pp. 654–661.

- Yuan, D.; Mai, H.; Xiong, W.; Tan, L.; Zhou, Y.; Pasupathy, S. SherLog: Error diagnosis by connecting clues from run-time logs. In Proceedings of the Fifteenth International Conference on Architectural Support for Programming Languages and Operating Systems, Pittsburgh, PA, USA, 13–17 March 2010; pp. 143–154.

- Phyo, P.P.; Byun, Y.C. Hybrid Ensemble Deep Learning-Based Approach for Time Series Energy Prediction. Symmetry 2021, 13, 1942.

- Du, M.; Li, F.; Zheng, G.; Srikumar, V. DeepLog: Anomaly detection and diagnosis from system logs through deep learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 1285–1298.

- Vinayakumar, R.; Soman, K.; Poornachandran, P. Long short-term memory based operation log anomaly detection. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Manipal, India, 13–16 September 2017; pp. 236–242.

- Zhang, X.; Xu, Y.; Lin, Q.; Qiao, B.; Zhang, H.; Dang, Y.; Xie, C.; Yang, X.; Cheng, Q.; Li, Z.; et al. Robust log-based anomaly detection on unstable log data. In Proceedings of the 2019 27th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Tallinn, Estonia, 26–30 August 2019; pp. 807–817.

- Bai, S.; Kolter, J.Z.; Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv 2018, arXiv:1803.01271.

- Yang, R.; Qu, D.; Zhu, S.; Qian, Y.; Tang, Y. Anomaly detection for log sequence based on improved temporal convolutional network. Comput. Eng. 2020, 46, 50–57.

- Wang, Z.; Tian, J.; Fang, H.; Chen, L.; Qin, J. LightLog: A lightweight temporal convolutional network for log anomaly detection on the edge. Comput. Netw. 2022, 203, 108616.

- He, P.; Zhu, J.; Zheng, Z.; Lyu, M.R. Drain: An online log parsing approach with fixed depth tree. In Proceedings of the 2017 IEEE International Conference on Web Services (ICWS), Honolulu, HI, USA, 25–30 June 2017; pp. 33–40.

- Chen, Y.; Luktarhan, N.; Lv, D. LogLS: Research on System Log Anomaly Detection Method Based on Dual LSTM. Symmetry 2022, 14, 454.

- Zhang, J.; Chang, Y.; Zou, J.; Fan, S. AME-TCN: Attention mechanism enhanced temporal convolutional network for fault diagnosis in industrial processes. In Proceedings of the 2021 Global Reliability and Prognostics and Health Management (PHM-Nanjing), Nanjing, China, 15–17 October 2021; pp. 1–6.

- Wang, J.; Tang, Y.; He, S.; Zhao, C.; Sharma, P.K.; Alfarraj, O.; Tolba, A. LogEvent2vec: LogEvent-to-vector based anomaly detection for large-scale logs in internet of things. Sensors 2020, 20, 2451.

- Tang, L.; Li, T.; Perng, C.S. LogSig: Generating system events from raw textual logs. In Proceedings of the 20th ACM International Conference on Information and Knowledge Management, Glasgow, UK, 24–28 October 2011; pp. 785–794.

- Ning, X.; Jiang, G.; Chen, H.; Yoshihira, K. HLAer: A system for heterogeneous log analysis. In Proceedings of the SDM Workshop on Heterogeneous Learning, Philadelphia, PA, USA, 24–26 April 2014; p. 1.

- Du, M.; Li, F. Spell: Streaming parsing of system event logs. In Proceedings of the 2016 IEEE 16th International Conference on Data Mining (ICDM), Barcelona, Spain, 12–15 December 2016; pp. 859–864.

- Zhang, Y.; Jin, R.; Zhou, Z.H. Understanding bag-of-words model: A statistical framework. Int. J. Mach. Learn. Cybern. 2010, 1, 43–52.

- Qaiser, S.; Ali, R. Text mining: Use of TF-IDF to examine the relevance of words to documents. Int. J. Comput. Appl. 2018, 181, 25–29.

- Ling, W.; Dyer, C.; Black, A.W.; Trancoso, I. Two/too simple adaptations of word2vec for syntax problems. In Proceedings of the 2015 conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Denver, CO, USA, 31 May–5 June 2015; pp. 1299–1304.

- He, S.; Zhu, J.; He, P.; Lyu, M.R. Experience report: System log analysis for anomaly detection. In Proceedings of the 2016 IEEE 27th International Symposium on Software Reliability Engineering (ISSRE), Ottawa, ON, Canada, 23–27 October 2016; pp. 207–218.

- Meng, W.; Liu, Y.; Zhang, S.; Pei, D.; Dong, H.; Song, L.; Luo, X. Device-agnostic log anomaly classification with partial labels. In Proceedings of the 2018 IEEE/ACM 26th International Symposium on Quality of Service (IWQoS), Banff, AB, Canada, 4–6 June 2018; pp. 1–6.

- Li, X.; Chen, P.; Jing, L.; He, Z.; Yu, G. SwissLog: Robust and unified deep learning based log anomaly detection for diverse faults. In Proceedings of the 2020 IEEE 31st International Symposium on Software Reliability Engineering (ISSRE), Coimbra, Portugal, 12–15 October 2020; pp. 92–103.

- Bertero, C.; Roy, M.; Sauvanaud, C.; Trédan, G. Experience report: Log mining using natural language processing and application to anomaly detection. In Proceedings of the 2017 IEEE 28th International Symposium on Software Reliability Engineering (ISSRE), Toulouse, France, 23–26 October 2017; pp. 351–360.

- Meng, W.; Liu, Y.; Zhu, Y.; Zhang, S.; Pei, D.; Liu, Y.; Chen, Y.; Zhang, R.; Tao, S.; Sun, P.; et al. LogAnomaly: Unsupervised detection of sequential and quantitative anomalies in unstructured logs. IJCAI 2019, 19, 4739–4745.

- Meng, W.; Liu, Y.; Huang, Y.; Zhang, S.; Zaiter, F.; Chen, B.; Pei, D. A semantic-aware representation framework for online log analysis. In Proceedings of the 2020 29th International Conference on Computer Communications and Networks (ICCCN), Honolulu, HI, USA, 3–6 August 2020; pp. 1–7.

- Raunak, V.; Gupta, V.; Metze, F. Effective dimensionality reduction for word embeddings. In Proceedings of the 4th Workshop on Representation Learning for NLP (RepL4NLP-2019), Florence, Italy, 2 August 2019; pp. 235–243.

- Chen, R.; Zhang, S.; Li, D.; Zhang, Y.; Guo, F.; Meng, W.; Pei, D.; Zhang, Y.; Chen, X.; Liu, Y. LogTransfer: Cross-system log anomaly detection for software systems with transfer learning. In Proceedings of the 2020 IEEE 31st International Symposium on Software Reliability Engineering (ISSRE), Coimbra, Portugal, 12–15 October 2020; pp. 37–47.

- Tuor, A.; Baerwolf, R.; Knowles, N.; Hutchinson, B.; Nichols, N.; Jasper, R. Recurrent neural network language models for open vocabulary event-level cyber anomaly detection. arXiv 2017, arXiv:1712.00557.

- Chen, Y.; Kang, Y.; Chen, Y.; Wang, Z. Probabilistic forecasting with temporal convolutional neural network. Neurocomputing 2020, 399, 491–501.

- He, Y.; Zhao, J. Temporal convolutional networks for anomaly detection in time series. J. Phys. Conf. Ser. 2019, 1213, 42050.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2020; pp. 11534–11542.

- Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2018; pp. 4510–4520.

- Ketkar, N. Introduction to Keras. In Deep Learning with Python: A Hands-On Introduction; Apress: Berkeley, CA, USA, 2017; pp. 97–111.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation, Savannah, GA, USA, 2–4 November 2016; Volume 16, pp. 265–283.

- Wang, R.; Li, J. Bayes test of precision, recall, and F1 measure for comparison of two natural language processing models. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 4135–4145.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).