1. Introduction

In autonomous driving, the environmental perception system relies heavily on object detection to ensure safety. Since LiDAR captures spatial information independently of lighting conditions, point cloud data have become essential for detecting vehicles, pedestrians, and other targets. However, 3D object detection remains highly challenging because of the complex geometric structures and non-uniform point densities found in real-world environments [1,2,3,4,5,6,7,8,9].

Current 3D object detectors for point clouds fall into three streams: point-based, voxel-based, and point-voxel-based approaches. Point-based detectors operate directly on raw point clouds [10,11,12,13] and learn point-wise features. They discard many points during downsampling, which improves computing speed, yet they still suffer from high computational cost and limited detection performance.

Semantic segmentation plays a vital role in object detection and directly affects the final performance [13,14]. Semantics-guided point sampling uses a binary segmentation module, a two-layer MLP attached as a side output of feature extraction, to estimate point-wise scores that help identify foreground points during downsampling [13,14]. However, such a simple two-layer MLP can only filter out some background points; although this is better than Farthest Point Sampling (FPS), it still cannot accurately distinguish foreground from background points. Moreover, the resulting degradation in real-time performance limits its use in real-world autonomous driving systems.

Auxiliary tasks have been shown to benefit primary tasks in various applications, including autonomous driving. We define candidate points as the points that have undergone the downsampling and shift operations and are used for the final prediction. Taking advantage of auxiliary tasks, we propose a Segmentation-Guided Auxiliary Network (SGAN) composed of two auxiliary tasks: point-level segmentation supervision and center estimation. We aggregate intermediate features onto the candidate points rather than the raw points and perform local segmentation on them. SGAN thereby guides the downsampling module to obtain more accurate candidate points and to become aware of object structure.

Attention models have transformed artificial intelligence research. A number of previous works [15,16,17,18,19] use attention for point cloud analysis, but attention is computationally expensive, which limits its use in large scenes. To overcome this limitation, we propose a cost-effective alternative module, Point Cloud External Attention (PCEA), which computes global interactions among the points obtained by the sampling module.

In this study, we propose the Segmentation-Guided Single-Stage Detector (SGSSD), which contains two modules: SGAN and PCEA. To make the network aware of object structure, SGAN employs semantic segmentation and center estimation as prediction tasks. Because these are auxiliary tasks, SGAN can be detached at inference time. In addition, we apply a carefully designed external attention module, PCEA, at very low computational cost to improve point cloud feature learning.

The key contributions are listed as follows:

- We develop an accurate 3D object detection framework named SGSSD (Segmentation-Guided Single-Stage Detector). To reduce computation and maintain efficiency, we incorporate an external attention mechanism, Point Cloud External Attention (PCEA), which effectively extracts long-range dependencies between points, leading to more efficient and accurate object detection.

- We add the Segmentation-Guided Auxiliary Network (SGAN) to learn structural information, improving localization performance without any additional inference cost.

- Experiments on the KITTI and Waymo Open datasets demonstrate the efficiency and detection accuracy of SGSSD.

2. Related Works

2.1. Point-Based Detectors

The point-based 3D object detection method extracts point-level features from the input point cloud using a basic module such as PointNet [20], PointNet++ [21], or one of their variants. PointRCNN [22] generates proposals from foreground points and then aggregates the point features within each proposal for box refinement. VoteNet [23] employs deep Hough voting to determine instance centroids. To improve efficiency, downsampling is widely used in point-based detectors. 3DSSD [10] uses a fusion sampling strategy to obtain more representative points. Point-GNN [11] builds a graph on the original point cloud and aggregates features between nodes to generate predictions. Lidar-RCNN [12] proposes a solution to the scale ambiguity problem. IA-SSD [13] introduces an instance-aware downsampling strategy to efficiently select foreground points. Point-based detection methods have the advantage of preserving the structural information of the original point cloud; however, their main limitations are limited efficiency and insufficient learning capacity.

2.2. Voxel-Based Detectors

To process unstructured point clouds, voxel-based detectors first convert them into regular grids of voxels or pillars, which are then passed through a convolutional neural network (CNN) to detect objects in the environment. VoxelNet [24] first uses dense 3D convolution to learn the geometric patterns present in the point cloud. SECOND [25] introduces submanifold sparse convolution [26,27] into the backbone. PointPillars [28] further simplifies voxels into pillars for greater efficiency. CIA-SSD [29] uses a confidence rectification module to extract features for post-processing. Voxel-RCNN [30] introduces Voxel RoI pooling to leverage the 3D structure of proposals and improve the accuracy of box refinement. CenterPoint [31,32,33] is an anchor-free 3D detector with a simple structure. Focals Conv [34] applies submanifold convolution at important positions selected by predicted scores. SST [35] uses an all-transformer backbone to address the challenges of small object detection. In general, voxel-based methods are highly efficient, but the quantization introduced by voxelization may degrade performance.

2.3. Point-Voxel-Based Detectors

Point-voxel-based methods combine point and voxel representations to learn more robust features and to overcome poor memory locality and quantization loss [36,37,38,39,40,41]. PV-RCNN [36] and PV-RCNN++ [37] aggregate keypoint features obtained by FPS onto RoI grid points via RoI grid pooling. Pyramid-RCNN [38] extends RoI grid pooling with multiple sets of grids at varying scales and an adaptive ball query radius, at a high computational cost. PDV [40] uses the weighted average of the points inside each voxel as the voxel coordinates and takes a kernel density estimate as an additional feature. However, current point-voxel-based methods tend to have long inference times because of their sampling modules.

2.4. Auxiliary Task for Point Cloud Detectors

Auxiliary tasks can incorporate prior knowledge into the model and thereby improve performance. SA-SSD [42] converts backbone features into point-level representations, aggregates all intermediate features onto the input point cloud, and performs semantic segmentation on the raw point cloud. CG-SSD [43] uses the visibility of object corners to help the BEV features learn object information. HDNet [44] estimates ground height and road information from HD maps to achieve better detection results. Inspired by PointNet++ [21], PointCNN [45], and PointConv [46], which use feature propagation (FP) layers to propagate features from the sampled points back to all original input points, SSA3D [47] treats FP layers as an auxiliary task to aid 3D object detection. While these methods perform full-resolution semantic segmentation, we perform only local segmentation on the candidate points. By aggregating intermediate features onto the candidate points rather than onto the original point cloud, we obtain more accurate object structure information.

3. Method

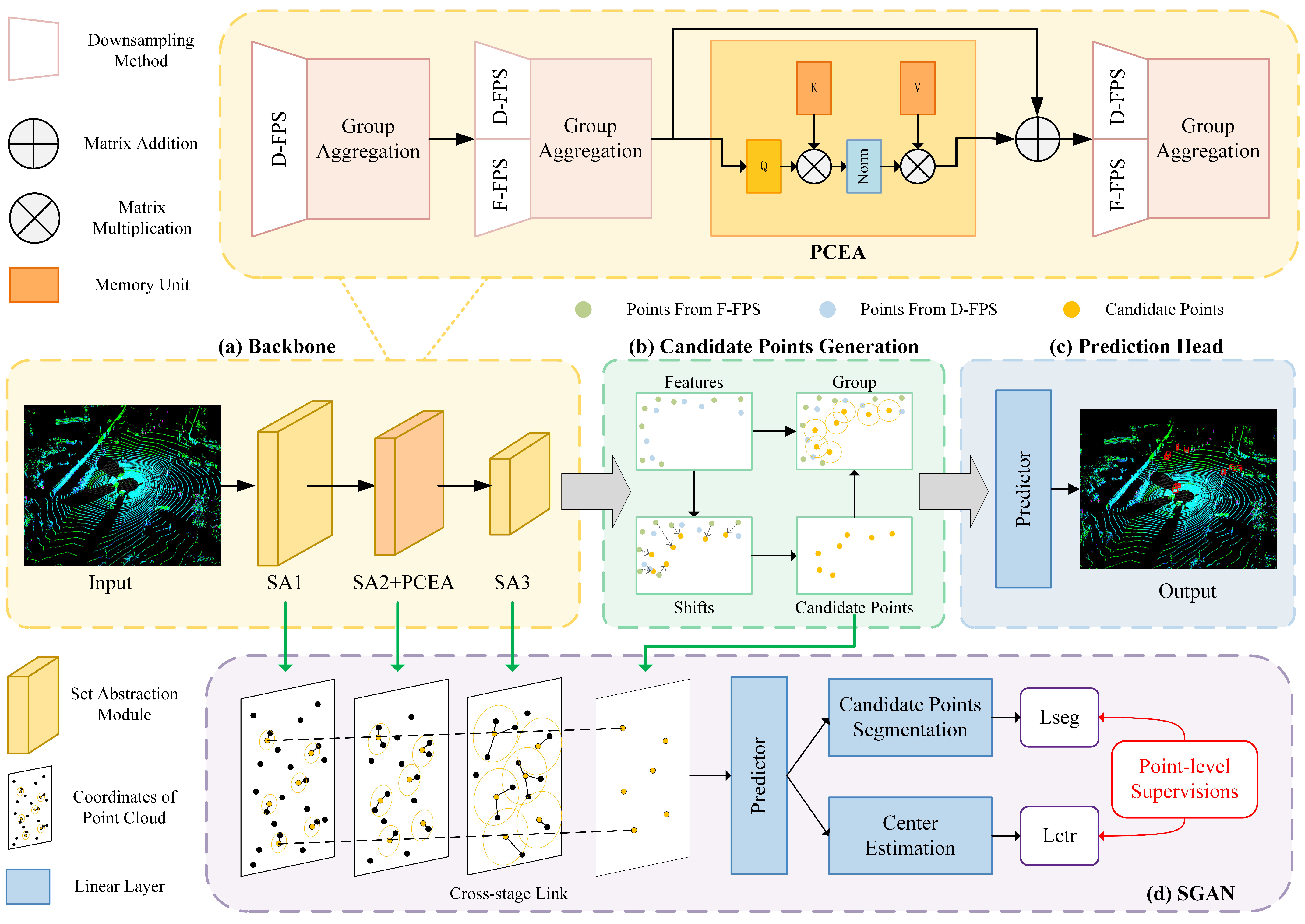

The overall architecture of SGSSD is shown in Figure 1. SGSSD follows the popular encoder-only architecture of 3DSSD [10]. The three Set Abstraction (SA) encoding modules [20] are denoted SA1, SA2, and SA3. The backbone extracts point-wise features from the raw point cloud, which are then fed to the candidate point generation layer and to the prediction head, consisting of an anchor-free regression head and the 3D center-ness assignment strategy. We add a detachable auxiliary network, SGAN, which enriches the hidden features through two auxiliary tasks.

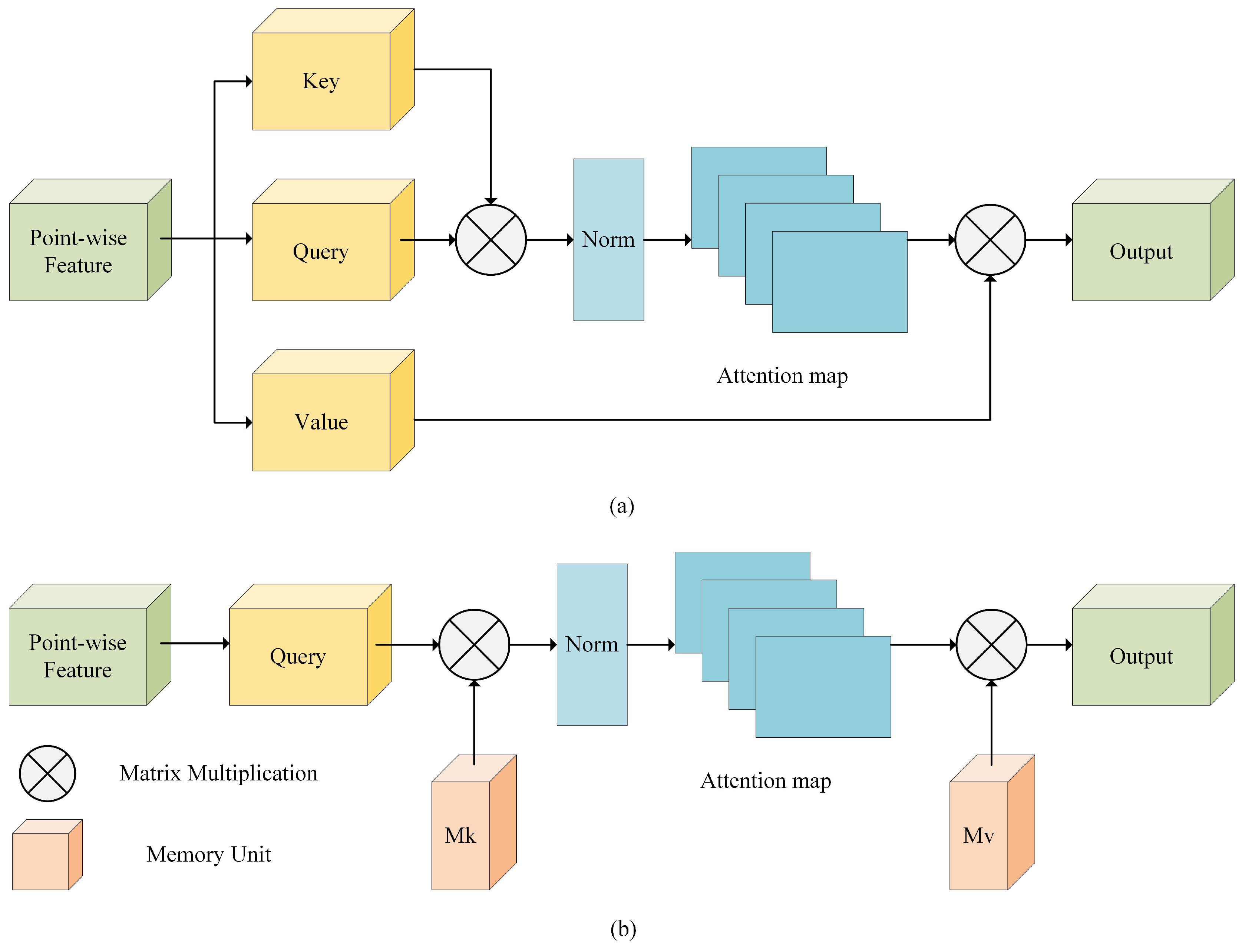

3.1. Point Cloud External Attention Module

The Farthest Point Sampling (FPS) algorithm is widely used [10,11,12,13] and encourages the sampled points to be uniformly distributed over the whole scene. However, such downsampling methods consider only the properties of each point itself, not those of its surrounding neighbors. When local spatial information is insufficient, this sampling step leads to inferior features and strongly affects the final result; sampled points that carry information about both themselves and their neighbors yield better results. Attention mechanisms are widely used to enhance the semantic information of key features [19,48]. However, self-attention has a high computational complexity of $O(dN^2)$ and only considers relations within a single data sample, whereas external attention is independent of the input and can explore potential relationships across different samples. The difference between self-attention and external attention is shown in Figure 2.
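A minimal sketch of the greedy FPS procedure discussed above makes the limitation concrete: each new sample is chosen purely from 3D distances to the samples already selected, without looking at neighboring features. The function name `farthest_point_sampling`, the fixed starting index, and the assumption of distinct input points are illustrative choices; practical detectors run batched GPU kernels rather than this O(Nk) Python loop.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def farthest_point_sampling(points: Sequence[Point], k: int, start: int = 0) -> List[int]:
    """Greedy FPS: repeatedly pick the point farthest from the already-selected set.

    Hypothetical helper; assumes the input points are distinct.
    """
    n = len(points)
    if k <= 0 or n == 0:
        return []
    k = min(k, n)
    selected = [start]
    # min_dist[i] = squared distance from point i to its nearest selected point
    min_dist = [math.inf] * n
    for _ in range(k - 1):
        last = points[selected[-1]]
        for i, p in enumerate(points):
            d = sum((a - b) ** 2 for a, b in zip(p, last))
            if d < min_dist[i]:
                min_dist[i] = d
        # only positions matter: the next sample is the point farthest from the selected set
        nxt = max(range(n), key=lambda i: min_dist[i])
        selected.append(nxt)
    return selected
```

Because the selection uses coordinates only, a sparse background point far from everything else is just as likely to be kept as a point on an object, which is the gap that the attention module is meant to address.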

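External Attention. Based on this idea, we apply the PCEA (Point Cloud External Attention) module after the two FPS-based sampling modules, SA1 and SA2, to capture the relationships between objects. Given an input feature map $F \in \mathbb{R}^{N \times d}$, the attention is calculated as:

$$A = \mathrm{Norm}\left(F M_k^{\top}\right), \qquad F_{out} = A M_v$$

The memory $M$ is independent of the individual input sample and acts as a memory unit shared across the whole dataset to calculate the attention for each point; it is a learnable parameter updated during training. Two memories, $M_k \in \mathbb{R}^{S \times d}$ and $M_v \in \mathbb{R}^{S \times d}$, are used as the key and value, consistent with self-attention. $A \in \mathbb{R}^{N \times S}$ is the attention map, and $\alpha_{i,j}$ denotes its entry in row $i$ and column $j$. Because the attention map is sensitive to the scale of the input, we normalize its columns and rows separately with the Norm module (double normalization):

$$\tilde{\alpha}_{i,j} = \left(F M_k^{\top}\right)_{i,j}, \qquad \hat{\alpha}_{i,j} = \frac{\exp(\tilde{\alpha}_{i,j})}{\sum_{l} \exp(\tilde{\alpha}_{l,j})}, \qquad \alpha_{i,j} = \frac{\hat{\alpha}_{i,j}}{\sum_{l} \hat{\alpha}_{i,l}}$$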
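A minimal pure-Python sketch of single-head external attention with double normalization, assuming the formulation of Guo et al. [19]: $A = \mathrm{Norm}(F M_k^{\top})$ and $F_{out} = A M_v$, with a softmax over the $N$ points for each memory slot followed by an L1 normalization over the $S$ slots. Plain nested lists keep it dependency-free. In a real network $M_k$ and $M_v$ would be learnable weights (for example two bias-free linear layers), which is an assumption here, and all helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """(n x m) @ (m x p) -> (n x p)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def double_norm(logits: Matrix) -> Matrix:
    """Softmax over the N points (each column), then L1-normalize each row over the S slots."""
    n, s = len(logits), len(logits[0])
    out = [[0.0] * s for _ in range(n)]
    for j in range(s):
        col_max = max(logits[i][j] for i in range(n))  # subtract max for numerical stability
        exps = [math.exp(logits[i][j] - col_max) for i in range(n)]
        total = sum(exps)
        for i in range(n):
            out[i][j] = exps[i] / total
    for i in range(n):
        row_sum = sum(out[i])
        out[i] = [v / row_sum for v in out[i]]
    return out


def external_attention(f: Matrix, m_k: Matrix, m_v: Matrix) -> Matrix:
    """F: N x d point features; M_k, M_v: S x d memories. Returns the N x d output."""
    attn = double_norm(matmul(f, transpose(m_k)))  # A: N x S
    return matmul(attn, m_v)                        # F_out = A M_v: N x d
```

After the row normalization each output row is a convex combination of the rows of $M_v$, so the output stays on the same scale as the value memory regardless of how many points are in the scene.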

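Multi-head Attention. The feature map $F$ after the linear layer is split along the channel dimension into $H$ parts $F_1, \dots, F_H$, and $h_1, \dots, h_H$ are the corresponding heads. The output is written as:

$$h_i = \mathrm{ExternalAttention}(F_i, M_k, M_v), \qquad F_{out} = \mathrm{Concat}(h_1, \dots, h_H)$$

where $F_{out}$ is the output of the external attention. We also use a residual path: $F_{out}$ is passed through a linear layer $L$, batch normalization $B$, and a ReLU layer $R$, and the result is added to the input feature $F_{in}$. The final output $F'$ is:

$$F' = F_{in} + R\left(B\left(L(F_{out})\right)\right)$$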
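The head splitting and the residual path can be sketched as follows, under stated assumptions: heads are obtained by evenly splitting the channel dimension, head outputs are concatenated without a separate output projection, and batch normalization is shown in inference mode with running statistics and no learnable scale or shift. All function names are hypothetical, and `head_fn` stands in for any single-head attention function.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]


def split_heads(f: Matrix, num_heads: int) -> List[Matrix]:
    """Split an N x d feature map along channels into H maps of size N x (d / H)."""
    d = len(f[0])
    if d % num_heads != 0:
        raise ValueError("channel dimension must be divisible by num_heads")
    w = d // num_heads
    return [[row[h * w:(h + 1) * w] for row in f] for h in range(num_heads)]


def concat_heads(heads: List[Matrix]) -> Matrix:
    """Concatenate per-head outputs back along the channel dimension."""
    return [[v for head in heads for v in head[i]] for i in range(len(heads[0]))]


def multi_head(f: Matrix, num_heads: int, head_fn: Callable[[Matrix], Matrix]) -> Matrix:
    """Apply head_fn to each channel group and concatenate the results."""
    return concat_heads([head_fn(part) for part in split_heads(f, num_heads)])


def residual_output(f_in: Matrix, f_out: Matrix, weight: Matrix, bias: List[float],
                    bn_mean: List[float], bn_var: List[float], eps: float = 1e-5) -> Matrix:
    """F' = F_in + R(B(L(F_out))): linear layer, inference-mode BN (no affine), ReLU."""
    result = []
    for x_in, x in zip(f_in, f_out):
        lin = [sum(w * v for w, v in zip(w_row, x)) + b for w_row, b in zip(weight, bias)]
        bn = [(v - m) / math.sqrt(var + eps) for v, m, var in zip(lin, bn_mean, bn_var)]
        result.append([a + max(0.0, v) for a, v in zip(x_in, bn)])
    return result
```

The residual path requires the linear layer to map back to the input width, so `weight` must be square in this sketch.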

Complexity. The computational complexity of external attention is $O(dSN)$, where $N$ is the number of input points and $d$ and $S$ are hyper-parameters of the memory block. The cost therefore grows only linearly with the number of input points, which is much cheaper than the $O(dN^2)$ complexity of self-attention. In this study, we set a small memory size $S$, which works well in experiments. This structure is effective in driving scenes, where vehicles, pedestrians, and other objects are clearly associated with the background.
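As a rough worked example, the two matrix products that dominate each method can be counted directly; linear projections and normalization are ignored, so the ratio of the two counts reduces to $N/S$. The sizes below ($N = 4096$, $d = 128$, $S = 64$) are hypothetical and are not the settings used in this paper.

```python
def self_attention_macs(n: int, d: int) -> int:
    """Multiply-accumulates for Q K^T (N*N*d) plus A V (N*N*d)."""
    return 2 * n * n * d


def external_attention_macs(n: int, d: int, s: int) -> int:
    """Multiply-accumulates for F M_k^T (N*S*d) plus A M_v (N*S*d)."""
    return 2 * n * s * d


if __name__ == "__main__":
    n, d, s = 4096, 128, 64  # hypothetical sizes, not the paper's settings
    sa = self_attention_macs(n, d)
    ea = external_attention_macs(n, d, s)
    print(f"self-attention: {sa:,} MACs, external attention: {ea:,} MACs, ratio {sa // ea}x")
```

With these sizes, self-attention needs 4,294,967,296 multiply-accumulates against 67,108,864 for external attention, a 64x reduction; doubling $N$ doubles the external-attention cost but quadruples the self-attention cost.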

3.2. Segmentation-Guided Auxiliary Module for Training

We propose a detachable Segmentation-Guided Auxiliary Network (SGAN) with point-wise supervision that guides the candidate points to capture fine-grained information. Because the auxiliary network can be detached, it introduces no extra computational cost at inference. The auxiliary network, shown in Figure 3, consists of three steps: interpolated feature generation, the candidate point segmentation task, and the center estimation task.

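Interpolated Features Generation. First, we denote $\{(p_i, f_i)\}_{i=1}^{N}$ as the candidate points, where $p_i$ represents the coordinates and $f_i$ is the feature vector. Following [21], we interpolate features with an inverse-distance-weighted average over a neighboring region, using the three nearest neighbors of each candidate point. We denote $\{(p_j^k, f_j^k)\}$ as the points output by the downsampling module SA$k$ ($k = 1, 2, 3$), and $\hat{f}_i^k$ as the interpolated feature at stage $k$. With $\mathcal{N}_k(p_i)$ denoting the three nearest neighbors of $p_i$ within the ball region of stage $k$, the feature vector is:

$$\hat{f}_i^k = \frac{\sum_{j \in \mathcal{N}_k(p_i)} w_{ij}^k f_j^k}{\sum_{j \in \mathcal{N}_k(p_i)} w_{ij}^k}, \qquad w_{ij}^k = \frac{1}{\lVert p_i - p_j^k \rVert_2^2}$$

This aggregation concatenates features from different levels to generate the multi-scale feature $\hat{f}_i$ of each candidate point:

$$\hat{f}_i = \left[\hat{f}_i^1, \hat{f}_i^2, \hat{f}_i^3\right]$$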
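A minimal pure-Python sketch of three-nearest-neighbor inverse-distance-weighted interpolation in the style of PointNet++ [21], followed by concatenation across stages. Assumptions: the weight is the inverse squared distance (the PointNet++ default), neighbors outside the stage's ball radius are dropped, a candidate point with no valid neighbor receives a zero feature, and all names are hypothetical.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def three_nn_interpolate(query: Vec, src_xyz: Sequence[Vec], src_feat: Sequence[Vec],
                         k: int = 3, radius: float = math.inf, eps: float = 1e-8) -> List[float]:
    """Inverse-distance-weighted feature at `query` from its k nearest source points."""
    d2 = [sum((q - s) ** 2 for q, s in zip(query, p)) for p in src_xyz]
    # k nearest neighbours, restricted to the ball of the given radius
    nearest = sorted(range(len(src_xyz)), key=lambda j: d2[j])[:k]
    nearest = [j for j in nearest if d2[j] <= radius ** 2]
    dim = len(src_feat[0])
    if not nearest:
        return [0.0] * dim  # assumption: no neighbour -> zero feature
    weights = [1.0 / (d2[j] + eps) for j in nearest]  # w_ij = 1 / ||p_i - p_j||^2
    total = sum(weights)
    return [sum(w * src_feat[j][c] for w, j in zip(weights, nearest)) / total
            for c in range(dim)]


def multi_scale_feature(query: Vec, stages) -> List[float]:
    """Concatenate interpolated features from each (xyz, feat, radius) stage, e.g. SA1-SA3."""
    out: List[float] = []
    for xyz, feat, r in stages:
        out.extend(three_nn_interpolate(query, xyz, feat, radius=r))
    return out
```

The small `eps` keeps the weight finite when a candidate point coincides with a source point; in that case the interpolated feature is dominated by that source point, as expected.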

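The Candidate Points Segmentation Task. We propose a segmentation task to improve the accuracy of object detection. By guiding the backbone to learn object boundaries, we aim to identify objects in complex environments. A segmentation branch with a sigmoid function predicts, from the multi-scale feature $\hat{f}_i$ of each candidate point, its foreground probability $s_i$:

$$s_i = \sigma\left(g_{seg}(\hat{f}_i)\right)$$

where $g_{seg}$ denotes the segmentation branch and $\sigma$ is the sigmoid function. We optimize the foreground segmentation task with a focal loss [49]:

$$\mathcal{L}_{seg} = -\frac{1}{N_{pos}} \sum_{i} \alpha_t \left(1 - s_t\right)^{\gamma} \log\left(s_t\right)$$

where

$$s_t = \begin{cases} s_i, & \text{if } p_i \text{ is a foreground point} \\ 1 - s_i, & \text{otherwise} \end{cases}$$

$\alpha_t$ and $\gamma$ are the balancing and focusing parameters of the focal loss, and $N_{pos}$ is the number of foreground points.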
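A minimal sketch of a binary focal loss normalized by the number of foreground points. The defaults α = 0.25 and γ = 2.0 come from the original focal loss paper [49] and are placeholders, not the values used by SGSSD; setting α_t to α for foreground and 1 − α for background is the standard convention and is assumed here. The function name is hypothetical.

```python
import math
from typing import Sequence


def sigmoid_focal_loss(probs: Sequence[float], labels: Sequence[int],
                       alpha: float = 0.25, gamma: float = 2.0,
                       eps: float = 1e-12) -> float:
    """Binary focal loss summed over points and divided by the foreground count.

    probs: predicted foreground probabilities s_i (already passed through a sigmoid).
    labels: 1 for foreground points, 0 for background points.
    """
    total = 0.0
    for s, y in zip(probs, labels):
        p_t = s if y == 1 else 1.0 - s                # probability of the true class
        alpha_t = alpha if y == 1 else 1.0 - alpha    # class-balancing weight
        # (1 - p_t)^gamma down-weights easy, well-classified points
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))
    num_pos = max(sum(1 for y in labels if y == 1), 1)  # avoid division by zero
    return total / num_pos
```

With γ = 0 the expression reduces to an α-weighted binary cross-entropy, which is a convenient sanity check.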

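The Center Estimation Task. We employ another auxiliary task that learns the relative position of each candidate point with respect to its object center. Specifically, $\Delta\hat{c}_i$ is the predicted output and $\Delta c_i$ is the offset between candidate point $p_i$ and the center of its corresponding object. We optimize this task using the following smooth-$L_1$ loss:

$$\mathcal{L}_{ctr} = \frac{1}{N_{pos}} \sum_{i} \mathbb{1}\left[p_i \in \mathcal{F}\right] \, \mathrm{SmoothL1}\left(\Delta\hat{c}_i - \Delta c_i\right)$$

where $\mathcal{F}$ is the set of foreground points, $N_{pos}$ is the number of foreground points, and $\mathbb{1}[\cdot]$ is an indicator function, so the loss is computed only on foreground points.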
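A minimal sketch of the foreground-masked smooth-L1 center loss. Assumptions: smooth-L1 with β = 1 is applied per coordinate and summed over x, y, z, the sum is divided by the number of foreground points, and the function names are hypothetical.

```python
from typing import Sequence


def smooth_l1(x: float, beta: float = 1.0) -> float:
    """Smooth-L1: quadratic for |x| < beta, linear beyond it."""
    ax = abs(x)
    return 0.5 * ax * ax / beta if ax < beta else ax - 0.5 * beta


def center_loss(pred_offsets: Sequence[Sequence[float]],
                gt_offsets: Sequence[Sequence[float]],
                is_foreground: Sequence[bool]) -> float:
    """Average smooth-L1 offset error over foreground candidate points only."""
    total = 0.0
    for pred, gt, fg in zip(pred_offsets, gt_offsets, is_foreground):
        if fg:  # indicator function: background points contribute nothing
            total += sum(smooth_l1(p - g) for p, g in zip(pred, gt))
    num_pos = max(sum(1 for fg in is_foreground if fg), 1)
    return total / num_pos
```

For example, a foreground point off by 0.5 m along x contributes 0.125, one off by 3 m contributes 2.5, and a background point with a large error contributes nothing, giving (0.125 + 2.5) / 2 = 1.3125.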

By integrating the candidate point segmentation and center estimation tasks into the detection framework, SGAN learns structure-aware features. This helps the backbone capture the spatial relationships between points and improves localization accuracy. Together, the two tasks help identify the shape and position of objects in a scene, which is important in real-world applications where objects may have complex geometries and appear in challenging environments.

3.3. Loss Function

The total loss function consists of the auxiliary segmentation loss $\mathcal{L}_{seg}$, the center estimation loss $\mathcal{L}_{ctr}$, the classification loss $\mathcal{L}_{cls}$, and the regression loss $\mathcal{L}_{reg}$. The overall loss $\mathcal{L}$ is defined as:

$$\mathcal{L} = \mathcal{L}_{cls} + \mathcal{L}_{reg} + \lambda \left(\mathcal{L}_{seg} + \mathcal{L}_{ctr}\right)$$

To optimize both the detection task and the auxiliary tasks, we use a combination of the focal loss, smooth-L1 loss, and cross-entropy loss. These losses are minimized with the Adam optimizer [50]. The hyper-parameter $\lambda$ balances the impact of the auxiliary tasks on the overall optimization; in Section 5.2, we conduct experiments to determine the value of $\lambda$ that yields the best results. Jointly optimizing all of these tasks yields a detector that performs robustly across a variety of scenarios.

4. Experiments

We evaluate our proposed method on two datasets: the KITTI Dataset [51] and the Waymo Open Dataset [52].

4.1. Dataset

The KITTI Dataset [51] consists of 7481 training samples and 7518 testing samples. Following the OpenPCDet [53] setting, the training samples are divided into a train split of 3712 samples and a validation split of 3769 samples. For submission to the KITTI official benchmark server, we instead randomly select 80% of the training samples for the train split and use the remaining 20% for the validation split.

The dataset defines three difficulty levels (easy, moderate, and hard) based on object size, occlusion, and truncation. Average precision (AP) is the main evaluation metric. Evaluations on the test and validation sets use 40 recall positions, denoted as AP$_{R40}$.
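To illustrate the metric, a minimal sketch of AP over 40 recall positions: at each recall level $r \in \{1/40, 2/40, \dots, 1\}$, take the interpolated precision (the maximum precision at any recall of at least $r$) and average the 40 values. The input format (parallel lists of recall and precision values along a precision-recall curve) and the function name are assumptions; the official KITTI devkit also performs score thresholding and IoU-based matching, which is not reproduced here.

```python
from typing import Sequence


def average_precision_r40(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """AP over the 40 recall positions {1/40, ..., 1} using interpolated precision."""
    total = 0.0
    for k in range(1, 41):
        r = k / 40.0
        # interpolated precision: best precision achieved at any recall >= r
        candidates = [p for rec, p in zip(recalls, precisions) if rec >= r]
        total += max(candidates) if candidates else 0.0
    return total / 40.0
```

For a curve that reaches precision 1.0 up to recall 0.5 and precision 0.5 up to recall 1.0, half of the recall positions score 1.0 and half score 0.5, giving AP$_{R40}$ = 0.75. Unlike the older 11-point variant, the recall position 0 is excluded.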

The Waymo Open Dataset [52] is one of the largest autonomous driving datasets, with 798 training sequences (around 158,361 samples) and 202 validation sequences (around 40,077 samples).

The Waymo Open Dataset uses standard 3D mean Average Precision (mAP) and mAP weighted by heading accuracy (mAPH) as its evaluation metrics. Both metrics use an IoU threshold of 0.7 for vehicles and 0.5 for other categories. Ground-truth objects are divided into two difficulty levels: LEVEL 1 comprises objects with at least five inside points, while LEVEL 2 includes 3D labels with at least one inside point.
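Under the usual definition of mAPH (assumed here, since the text does not spell it out), each true positive is weighted by its heading accuracy $1 - \min(|\Delta\theta|, 2\pi - |\Delta\theta|)/\pi$ instead of counting as 1. A minimal sketch of that weight and of the IoU thresholds quoted above follows; the function names and the category string "vehicle" are hypothetical.

```python
import math


def heading_accuracy(pred_yaw: float, gt_yaw: float) -> float:
    """Heading weight in [0, 1]: 1 for identical yaw, 0 for a 180-degree error."""
    diff = abs(pred_yaw - gt_yaw) % (2.0 * math.pi)
    diff = min(diff, 2.0 * math.pi - diff)  # smallest angle between the two headings
    return 1.0 - diff / math.pi


def iou_threshold(category: str) -> float:
    """IoU required for a true positive: 0.7 for vehicles, 0.5 for other categories."""
    return 0.7 if category == "vehicle" else 0.5
```

The wrap-around step matters: yaws of 0.1 rad and 2π − 0.1 rad differ by only 0.2 rad, so such a prediction keeps almost its full weight, whereas a box with the correct extent but a reversed heading contributes nothing to mAPH.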

4.2. Experiment Details

Implementation Parameters. For the KITTI dataset, the detection range is set to (−70 m, 70 m) for the X and Y axes and (−3 m, 1 m) for the Z axis, and the number of input points is 16,384. The radii of the three stages are set to 0.05 m, 0.1 m, and 0.2 m. For the Waymo Open Dataset, the detection range is set to (−75.2 m, 75.2 m) for both the X and Y axes and (−2 m, 4 m) for the Z axis, and the point cloud is downsampled from 65,536 to 16,384 points. For a fair comparison, we increase the number of input points from 16,384 to 65,536 and the number of sampled points at each level fourfold, while keeping the rest of the settings unchanged.
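A minimal sketch of the input preprocessing implied by these settings: crop points to the detection range, then bring the cloud to a fixed point count. The ranges mirror the values listed above; treating the bounds as inclusive, and randomly subsampling or padding by repetition to reach the fixed count, are assumptions (a common choice, not stated in the text), and all names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
# (x_min, y_min, z_min, x_max, y_max, z_max) in metres, as listed in the text
KITTI_RANGE = (-70.0, -70.0, -3.0, 70.0, 70.0, 1.0)
WAYMO_RANGE = (-75.2, -75.2, -2.0, 75.2, 75.2, 4.0)


def crop_to_range(points: Sequence[Point], pc_range) -> List[Point]:
    """Keep points inside the detection range (bounds treated as inclusive here)."""
    x0, y0, z0, x1, y1, z1 = pc_range
    return [p for p in points
            if x0 <= p[0] <= x1 and y0 <= p[1] <= y1 and z0 <= p[2] <= z1]


def sample_fixed_count(points: Sequence[Point], n: int, rng: random.Random) -> List[Point]:
    """Return exactly n points: subsample without replacement, or pad by repeating points."""
    pts = list(points)
    if not pts:
        return []
    if len(pts) >= n:
        return rng.sample(pts, n)
    return pts + [rng.choice(pts) for _ in range(n - len(pts))]
```

A typical call would be `sample_fixed_count(crop_to_range(raw, KITTI_RANGE), 16384, random.Random(0))` for KITTI, with `WAYMO_RANGE` and 65,536 points for Waymo.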

In addition, we widen the backbone and double the parameters of the aggregation layers for better feature extraction; we call this modification Wider Aggregation Layers (WAL). Widening layers is a computationally efficient way to add capacity because GPUs are much more efficient at parallel computation on large tensors, and the added capacity leads to more accurate results.

Data Augmentation. The dataset is augmented by random flipping, rotation, and scaling of the point cloud, together with GT-AUG [25]. The rotation angle follows a uniform distribution between

and

, and the whole point cloud scales with a random scaling factor between 0.95 and 1.05.
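A minimal sketch of the global augmentations described above, applied consistently to points and ground-truth boxes. The rotation bounds are not given in the text, so `max_angle` is a required argument rather than a default; flipping across the x-axis with probability 0.5 and the box layout `[x, y, z, dx, dy, dz, yaw]` are assumptions. GT-AUG (pasting ground-truth objects from a database) is not covered, and the function name is hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple


def augment(points: Sequence[Sequence[float]], boxes: Sequence[Sequence[float]],
            rng: random.Random, max_angle: float,
            scale_range: Tuple[float, float] = (0.95, 1.05)):
    """Random flip, z-axis rotation and global scaling of points and boxes.

    points: [x, y, z] lists; boxes: [x, y, z, dx, dy, dz, yaw] lists.
    """
    pts: List[List[float]] = [list(p) for p in points]
    bxs: List[List[float]] = [list(b) for b in boxes]
    # 1) flip across the x-axis (mirror y) with probability 0.5
    if rng.random() < 0.5:
        for p in pts:
            p[1] = -p[1]
        for b in bxs:
            b[1], b[6] = -b[1], -b[6]
    # 2) rotate around the z-axis by theta ~ U(-max_angle, max_angle)
    theta = rng.uniform(-max_angle, max_angle)
    c, s = math.cos(theta), math.sin(theta)
    for p in pts + bxs:  # the first two entries of both are x, y
        x, y = p[0], p[1]
        p[0], p[1] = c * x - s * y, s * x + c * y
    for b in bxs:
        b[6] += theta
    # 3) scale the whole scene by k ~ U(0.95, 1.05)
    k = rng.uniform(*scale_range)
    for p in pts:
        p[0], p[1], p[2] = p[0] * k, p[1] * k, p[2] * k
    for b in bxs:
        for i in range(6):  # box centre and size
            b[i] *= k
    return pts, bxs
```

Applying each transform to points and boxes together keeps every label aligned with its points: a point at a box center remains at that box center after augmentation.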

Training Details. Experiments are conducted on a system running Ubuntu 20.04 equipped with 8 NVIDIA RTX 3090 24 GB GPUs and dual Intel Xeon Gold 5128 CPUs. Training and testing are performed with Python 3.8 and PyTorch 1.8.1. All models are trained for 80 epochs with a batch size of 32 on the KITTI Dataset and for 30 epochs with a batch size of 8 on the Waymo Open Dataset. Adam [50] is used as the optimizer with a learning rate of 0.003 and a weight decay of 0.01. To eliminate redundant boxes, an NMS threshold of 0.1 is used for both the Waymo and KITTI datasets in post-processing.
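A minimal sketch of greedy NMS with the 0.1 IoU threshold mentioned above. As a simplification, boxes are axis-aligned bird's-eye-view rectangles; detectors for KITTI and Waymo normally use rotated IoU, which is not implemented here. Function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # axis-aligned BEV box: x_min, y_min, x_max, y_max


def iou_bev(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned rectangles."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_thresh: float = 0.1) -> List[int]:
    """Keep the highest-scoring boxes, dropping any box overlapping a kept one above iou_thresh."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou_bev(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return keep
```

A threshold as low as 0.1 suppresses almost any overlapping duplicate, which suits bird's-eye-view driving scenes where distinct objects rarely overlap.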

5. Results and Discussion

5.1. Benchmarking Result

Evaluation on KITTI Dataset. We evaluate SGSSD on the KITTI test split and compare it with other state-of-the-art methods using AP with 40 recall positions (AP$_{R40}$). The results are presented in Table 1.

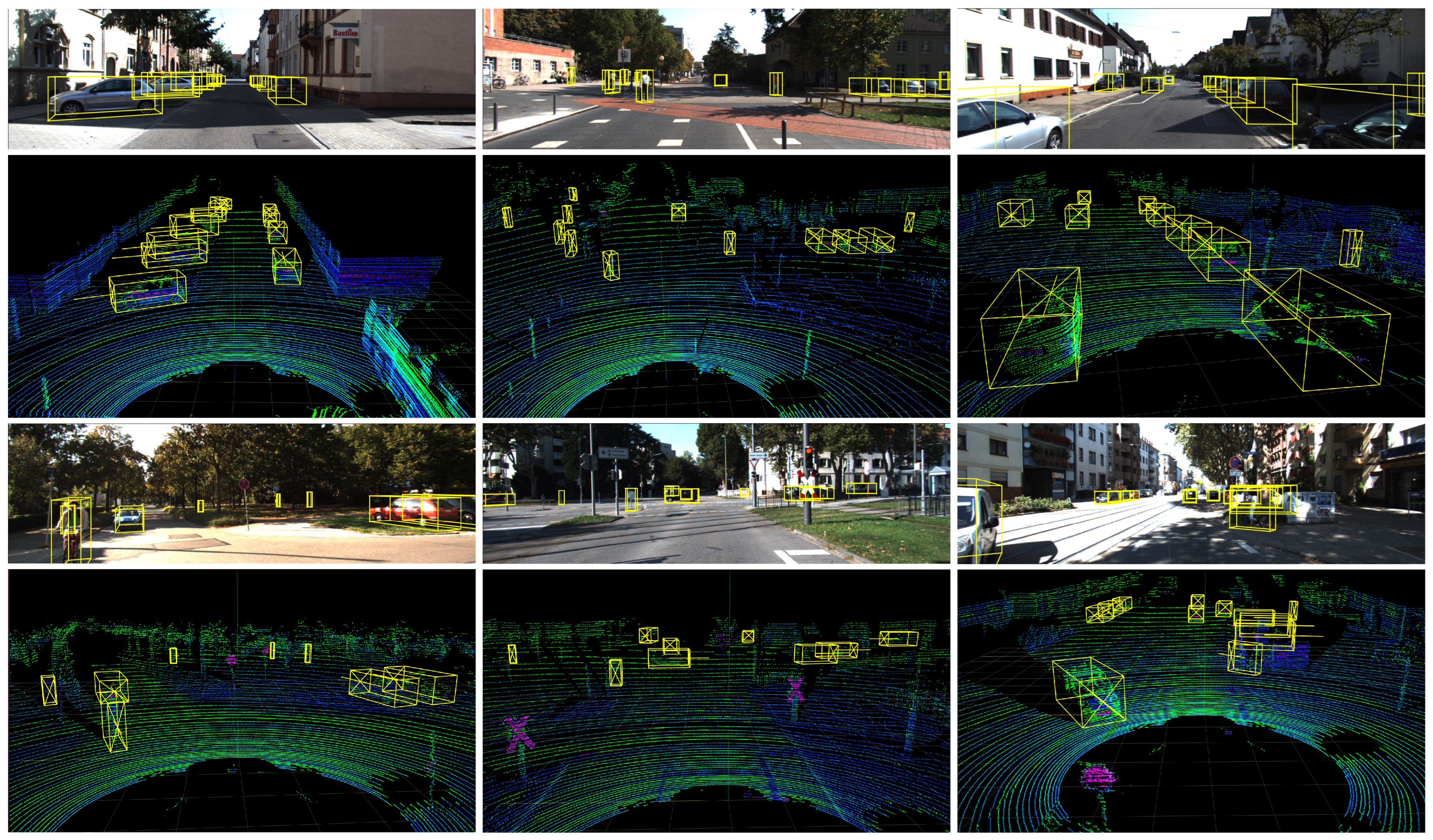

Figure 4 shows some prediction results; our method generates accurate 3D bounding boxes in different scenes. Since 3DSSD [10] only provides a pre-trained model for car, we report the results of 3DSSD from its paper [10], the best reproduced results, and the results of the OpenPCDet [53] implementation. The proposed method surpasses other point-based detectors on cyclist and pedestrian and shows competitive performance on car, outperforming 3DSSD [10] by 1.32%, 2.13%, and 2.21% mAP on the easy, moderate, and hard levels, respectively. We attribute the improvement mainly to the segmentation module in the auxiliary network, which accurately preserves foreground points and enables the detection of small objects. Thanks to its single-stage architecture and efficient attention mechanism, SGSSD runs at 25 FPS, faster than most existing methods.

For a more complete evaluation, we also evaluate our method on the validation set in Table 2. For car, pedestrian, and cyclist detection, the AP reaches 84.42%, 62.40%, and 73.02% on the moderate level, respectively, a competitive performance across all classes. The results show that SGSSD outperforms 3DSSD in every aspect. SGSSD is efficient and simple: it is a single-stage model that detects multiple object classes with a single network.

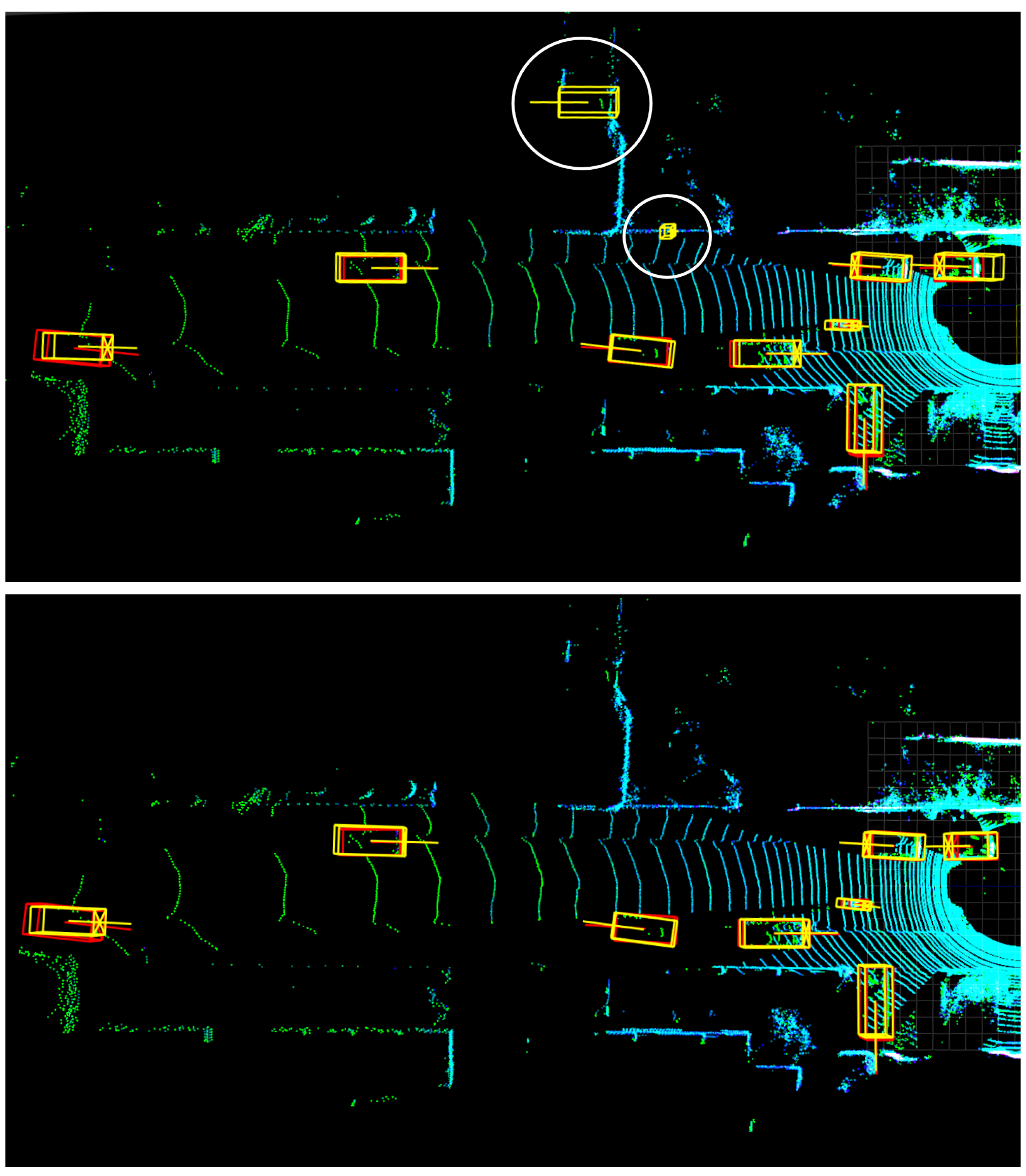

To demonstrate the effectiveness of SGSSD, we provide qualitative examples in Figure 5. The images show that 3DSSD produces many false detections of small objects. Because 3DSSD does not consider the relationships between points during downsampling, it may regard some sparse points as pedestrians or cars, whereas our method takes the relationships between samples into account and detects these objects accurately.

Evaluation on Waymo Open Dataset. The detection performance of SGSSD on the Waymo dataset is further evaluated in Table 3. SGSSD outperforms other strong baselines on cyclists, indicating that the proposed auxiliary network effectively enhances the perception of small objects. Using intermediate features in SGAN to generate multi-scale semantic information allows SGSSD to enrich its hidden features through the auxiliary tasks. However, its detection performance is slightly lower than that of two-stage methods, because we do not use a second stage that refines detections with the full-resolution point cloud.

5.2. Ablation Study

In the following ablation studies, we analyze our method in detail. We use 3DSSD as the baseline and sequentially add the Wider Aggregation Layers module (WAL), the Point Cloud External Attention module (PCEA), and the Segmentation-Guided Auxiliary Network (SGAN) to it. All experiments are conducted on the KITTI validation set, and the results are shown in Table 4.

Effect of Sampling Point Numbers. To improve the accuracy of our model, we scale the number of input and sampling points by a factor of $M$, where $M$ is 1/2, 2, or 4. As shown in Table 5, increasing the number of input points also increases the inference time, and both increasing and decreasing the number of input points can reduce accuracy. Our method therefore performs best with the default setting, $M = 1$.

Effect of WAL Module. A wider layer further improves performance by 1.51%, 2.24%, and 2.25% on the three moderate subsets, validating the effectiveness of a wider network for feature extraction. Since GPUs are much more efficient at parallel computation on large tensors, the added computation cost is small.

Effect of PCEA Module. Adding the external attention module improves accuracy by 0.44%, 0.06%, and 2.01%, respectively. The attention mechanism weakens the effect of outlier points and makes the module more robust.

Table 6 shows the results of adding PCEA at different stages. Since PCEA enriches the output features, it is reasonable to use it in the middle stages, where it affects subsequent feature learning. Applying PCEA after SA2 gives a better balance between precision and speed. Adding it too early makes each point aggregate too much information from its neighbors, so boundary points lose the ability to distinguish foreground from background, which lowers the precision on small objects such as pedestrians. Empirically, applying PCEA after SA2 is the best choice, and we use it as the default setting in our experiments.

Effect of SGAN Module. Leveraging the segmentation auxiliary task improves performance by around 3.60% and 0.50% on the pedestrian and cyclist moderate subsets, and by 0.05%, 3.34%, and 1.27% on the three hard subsets, respectively. The improvement is concentrated on the hard subsets, consistent with our expectation that when points are relatively sparse, learning the object structure is essential for candidate points to determine object scale and shape.

Weight Selection of SGAN Tasks. The weight $\lambda$ presented in Section 3.3 determines the impact of the auxiliary tasks on the main task, making it an important hyperparameter of our method. To determine its optimal value, we perform experiments with varying values. The results in Table 7 show that performance improves when the weight lies within a certain range: a weight that is too small contributes little to the main task, while a weight that is too large can degrade performance by distorting the feature representation. Based on these experiments, we have chosen to use

in all subsequent experiments.

5.3. Runtime Analysis

The runtime analysis is performed with a single NVIDIA RTX 3090 24 GB GPU and dual Intel Xeon Gold 5128 CPUs, and all experiments are conducted on the KITTI validation set with

. We evaluate the runtime of each step during inference in Table 4. The inference time of the baseline 3DSSD is 31.9 ms, and the auxiliary network introduces no extra computational cost. The WAL and PCEA modules add 12 ms and 1.3 ms, respectively, and combining the two modules increases the cost by 15.5 ms. We also compare the inference time of other methods in Table 8. Because much of the code is not open-source, we partially reproduce some commonly used methods on the same platform. Our method achieves a better balance between precision and speed than the other point-based methods. Because the baseline 3DSSD is no longer the most competitive model, there is still considerable room to accelerate our method further.

6. Future Work

To confirm the effectiveness of the proposed method, a quantitative evaluation should be carried out on 3D range data collected in a real road environment by a high-definition LiDAR. We have already built our own LiDAR dataset, collected by a Pandar128E3X LiDAR mounted on the front of the vehicle, parallel to the ground, at a height of 2 m. It covers with an angular resolution of , has a scanning range of 0.3 to 200 m with a systematic error of cm, and spins at 10 Hz. The lasers are connected to the central control system through Ethernet. At its current rotation speed (10 Hz), the LiDAR produces more than 1 million points per revolution. The dataset consists of 8 stationary scenarios in which people queue for a nucleic acid test, with 103 frames in total. It contains 1873 people with various clothing and belongings, such as briefcases and backpacks. The measurement range is also up to 100 m.

In future work, we will replace the 3DSSD backbone with a more efficient network and study a learning-based sampling strategy to replace FPS sampling and reduce inference time. We will then transfer the model to our own dataset.

7. Conclusions

In this study, we propose a Segmentation-Guided Single-Stage Detector (SGSSD) that uses the PCEA and SGAN modules to enhance object detection performance. The PCEA module extracts structural information with less memory and computation than previous attention-based methods. The SGAN module yields more accurate detections from the candidate points without significant computational overhead. Experimental results on the KITTI and Waymo detection benchmarks show that our approach achieves improved performance while maintaining high efficiency. Our future work will focus on further improving the efficiency of point-based methods.

Author Contributions

Conceptualization, X.W.; methodology, X.W. and D.Z.; software, X.W.; validation, X.W. and D.Z.; formal analysis, X.W.; investigation, X.W. and H.N.; resources, H.N.; data curation, D.Z.; writing—original draft preparation, X.W.; writing—review and editing, X.W., D.Z. and H.N.; visualization, D.Z.; supervision, X.L.; project administration, X.L.; funding acquisition, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China, grant number 61827803.

Data Availability Statement

Conflicts of Interest

The authors declare no conflict of interest.

References

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The kitti vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361. [Google Scholar]

- Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep learning for 3d point clouds: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 4338–4364. [Google Scholar] [CrossRef]

- Zhang, D.; Wang, X.; Zheng, Z.; Liu, X. Unsupervised Domain Adaptive 3-D Detection with Data Adaption From LiDAR Point Cloud. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5705814. [Google Scholar] [CrossRef]

- Qian, H.; Wu, P.; Sun, B.; Su, S. AGS-SSD: Attention-Guided Sampling for 3D Single-Stage Detector. Electronics 2022, 11, 2268. [Google Scholar] [CrossRef]

- Navarro, P.J.; Fernandez, C.; Borraz, R.; Alonso, D. A machine learning approach to pedestrian detection for autonomous vehicles using high-definition 3D range data. Sensors 2016, 17, 18. [Google Scholar] [CrossRef] [PubMed]

- Yin, L.; Tian, W.; Wang, L.; Wang, Z.; Yu, Z. SPV-SSD: An Anchor-Free 3D Single-Stage Detector with Supervised-PointRendering and Visibility Representation. Remote Sens. 2022, 15, 161. [Google Scholar] [CrossRef]

- Zhu, Y.; Xu, R.; An, H.; Tao, C.; Lu, K. Anti-Noise 3D Object Detection of Multimodal Feature Attention Fusion Based on PV-RCNN. Sensors 2023, 23, 233. [Google Scholar] [CrossRef]

- Shuang, F.; Huang, H.; Li, Y.; Qu, R.; Li, P. AFE-RCNN: Adaptive feature enhancement RCNN for 3D object detection. Remote Sens. 2022, 14, 1176. [Google Scholar] [CrossRef]

- Zhai, Z.; Wang, Q.; Pan, Z.; Gao, Z.; Hu, W. Muti-Frame Point Cloud Feature Fusion Based on Attention Mechanisms for 3D Object Detection. Sensors 2022, 22, 7473.

- Yang, Z.; Sun, Y.; Liu, S.; Jia, J. 3DSSD: Point-based 3D single stage object detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11040–11048.

- Shi, W.; Rajkumar, R. Point-GNN: Graph neural network for 3D object detection in a point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1711–1719.

- Li, Z.; Wang, F.; Wang, N. LiDAR R-CNN: An efficient and universal 3D object detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 7546–7555.

- Zhang, Y.; Hu, Q.; Xu, G.; Ma, Y.; Wan, J.; Guo, Y. Not all points are equal: Learning highly efficient point-based detectors for 3D LiDAR point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 18953–18962.

- Chen, C.; Chen, Z.; Zhang, J.; Tao, D. SASA: Semantics-augmented set abstraction for point-based 3D object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022; Volume 1.

- Xie, S.; Liu, S.; Chen, Z.; Tu, Z. Attentional ShapeContextNet for point cloud recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4606–4615.

- Liu, X.; Han, Z.; Liu, Y.S.; Zwicker, M. Point2Sequence: Learning the shape representation of 3D point clouds with an attention-based sequence to sequence network. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8778–8785.

- Yang, J.; Zhang, Q.; Ni, B.; Li, L.; Liu, J.; Zhou, M.; Tian, Q. Modeling point clouds with self-attention and Gumbel subset sampling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3323–3332.

- Li, G.; Muller, M.; Thabet, A.; Ghanem, B. DeepGCNs: Can GCNs go as deep as CNNs? In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9267–9276.

- Guo, M.H.; Liu, Z.N.; Mu, T.J.; Hu, S.M. Beyond self-attention: External attention using two linear layers for visual tasks. arXiv 2021, arXiv:2105.02358.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. arXiv 2017, arXiv:1706.02413.

- Shi, S.; Wang, X.; Li, H. PointRCNN: 3D Object Proposal Generation and Detection From Point Cloud. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Ding, Z.; Han, X.; Niethammer, M. VoteNet: A deep learning label fusion method for multi-atlas segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Shenzhen, China, 13–17 October 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 202–210.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-end learning for point cloud based 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

- Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely embedded convolutional detection. Sensors 2018, 18, 3337.

- Graham, B.; van der Maaten, L. Submanifold sparse convolutional networks. arXiv 2017, arXiv:1706.01307.

- Liu, B.; Wang, M.; Foroosh, H.; Tappen, M.; Pensky, M. Sparse convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 806–814.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

- Zheng, W.; Tang, W.; Chen, S.; Jiang, L.; Fu, C.W. CIA-SSD: Confident IoU-aware single-stage object detector from point cloud. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 3555–3562.

- Wang, H.; Chen, Z.; Cai, Y.; Chen, L.; Li, Y.; Sotelo, M.A.; Li, Z. Voxel-RCNN-Complex: An effective 3-D point cloud object detector for complex traffic conditions. IEEE Trans. Instrum. Meas. 2022, 71, 2507112.

- Yin, T.; Zhou, X.; Krahenbuhl, P. Center-based 3D object detection and tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 11784–11793.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6569–6578.

- Law, H.; Deng, J. CornerNet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

- Chen, Y.; Li, Y.; Zhang, X.; Sun, J.; Jia, J. Focal Sparse Convolutional Networks for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5428–5437.

- Fan, L.; Pang, Z.; Zhang, T.; Wang, Y.X.; Zhao, H.; Wang, F.; Wang, N.; Zhang, Z. Embracing single stride 3D object detector with sparse transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8458–8468.

- Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. PV-RCNN: Point-voxel feature set abstraction for 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10529–10538.

- Shi, S.; Jiang, L.; Deng, J.; Wang, Z.; Guo, C.; Shi, J.; Wang, X.; Li, H. PV-RCNN++: Point-voxel feature set abstraction with local vector representation for 3D object detection. Int. J. Comput. Vis. 2023, 131, 531–551.

- Mao, J.; Niu, M.; Bai, H.; Liang, X.; Xu, H.; Xu, C. Pyramid R-CNN: Towards better performance and adaptability for 3D object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 2723–2732.

- Noh, J.; Lee, S.; Ham, B. HVPR: Hybrid voxel-point representation for single-stage 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 14605–14614.

- Hu, J.S.; Kuai, T.; Waslander, S.L. Point density-aware voxels for LiDAR 3D object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8469–8478.

- Zhang, D.; Wang, X.; Zheng, Z.; Liu, X.; Fang, G. ARFA: Adaptive Reception Field Aggregation for 3D Detection from LiDAR Point Cloud. IEEE Sens. J. 2022; Early Access.

- He, C.; Zeng, H.; Huang, J.; Hua, X.S.; Zhang, L. Structure aware single-stage 3D object detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11873–11882.

- Ma, R.; Chen, C.; Yang, B.; Li, D.; Wang, H.; Cong, Y.; Hu, Z. CG-SSD: Corner guided single stage 3D object detection from LiDAR point cloud. ISPRS J. Photogramm. Remote Sens. 2022, 191, 33–48.

- Yang, B.; Liang, M.; Urtasun, R. HDNet: Exploiting HD maps for 3D object detection. In Proceedings of the Conference on Robot Learning, Zürich, Switzerland, 29–31 October 2018; pp. 146–155.

- Li, Y.; Bu, R.; Sun, M.; Wu, W.; Di, X.; Chen, B. PointCNN: Convolution on X-transformed points. In Proceedings of the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018.

- Wu, W.; Qi, Z.; Fuxin, L. PointConv: Deep convolutional networks on 3D point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9621–9630.

- Huang, S.; Cai, G.; Wang, Z.; Xia, Q.; Wang, R. SSA3D: Semantic Segmentation Assisted One-Stage Three-Dimensional Vehicle Object Detection. IEEE Trans. Intell. Transp. Syst. 2021, 23, 14764–14778.

- Guo, M.H.; Cai, J.X.; Liu, Z.N.; Mu, T.J.; Martin, R.R.; Hu, S.M. PCT: Point cloud transformer. Comput. Vis. Media 2021, 7, 187–199.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B. Scalability in Perception for Autonomous Driving: Waymo Open Dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Team, O.D. OpenPCDet: An Open-Source Toolbox for 3D Object Detection from Point Clouds. Available online: https://github.com/open-mmlab/OpenPCDet (accessed on 9 April 2020).

- Shi, S.; Wang, Z.; Shi, J.; Wang, X.; Li, H. From Points to Parts: 3D Object Detection From Point Cloud With Part-Aware and Part-Aggregation Network. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 2647–2664.

- Qian, R.; Lai, X.; Li, X. BADet: Boundary-Aware 3D Object Detection from Point Clouds. Pattern Recognit. 2022, 125, 108524.

- Zhao, L.; Wang, M.; Yue, Y. Sem-Aug: Improving camera-LiDAR feature fusion with semantic augmentation for 3D vehicle detection. IEEE Robot. Autom. Lett. 2022, 7, 9358–9365.

- Yang, Z.; Sun, Y.; Liu, S.; Shen, X.; Jia, J. STD: Sparse-to-dense 3D object detector for point cloud. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1951–1960.

- Jiang, T.; Song, N.; Liu, H.; Yin, R.; Gong, Y.; Yao, J. VIC-Net: Voxelization information compensation network for point cloud 3D object detection. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 13408–13414.

- Li, J.; Luo, S.; Zhu, Z.; Dai, H.; Krylov, A.S.; Ding, Y.; Shao, L. 3D IoU-Net: IoU guided 3D object detector for point clouds. arXiv 2020, arXiv:2004.04962.

Figure 1.

Illustration of the SGSSD framework. (a) Backbone network. It takes the raw point cloud as input and generates global features through several SA layers; PCEA is added after SA2. D-FPS and F-FPS denote FPS in Euclidean space and feature space, respectively. (b) Candidate point generation layer. The points after the shift operation are used for prediction; we call them candidate points. (c) Prediction head. (d) Auxiliary module SGAN, which can be detached after training.
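
Figure 1 distinguishes D-FPS from F-FPS. The sketch below is plain greedy farthest point sampling: run on xyz coordinates it behaves as D-FPS, and run on per-point feature vectors it behaves as F-FPS in the sense of the caption. This is an illustrative assumption rather than the backbone's exact code; published F-FPS variants may combine feature and spatial distances, and the function name `farthest_point_sampling` and the fixed starting index are hypothetical choices.

```python
import math
from typing import List, Sequence


def farthest_point_sampling(points: Sequence[Sequence[float]], k: int, start: int = 0) -> List[int]:
    """Return the indices of k points chosen by greedy farthest point sampling.

    Pass xyz coordinates for D-FPS or per-point feature vectors for F-FPS;
    in both cases plain Euclidean distance between rows is used (an assumption).
    """
    n = len(points)
    if not 0 < k <= n:
        raise ValueError("k must satisfy 0 < k <= len(points)")
    selected = [start]
    # min_dist[i]: distance from point i to its closest already-selected point
    min_dist = [math.dist(p, points[start]) for p in points]
    while len(selected) < k:
        # Take the point farthest from everything selected so far
        nxt = max(range(n), key=lambda i: min_dist[i])
        selected.append(nxt)
        # Refresh nearest-selected distances with the new sample
        for i in range(n):
            d = math.dist(points[i], points[nxt])
            if d < min_dist[i]:
                min_dist[i] = d
    return selected


xyz = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
print(farthest_point_sampling(xyz, 3))  # D-FPS on coordinates -> [0, 2, 3]
```

The same function gives F-FPS simply by passing feature rows instead of coordinates; each pass costs O(n) distance evaluations, so sampling k points is O(nk).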


Figure 2.

Illustration of the point cloud external attention (PCEA) module. (a) Self-attention. (b) External attention. Unlike self-attention, external attention uses a memory unit M that serves as a memory of the whole training dataset and is independent of the input.
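
To make the contrast in Figure 2 concrete, here is a minimal sketch of external attention as described by Guo et al. [19], assuming their double-normalization scheme (a softmax over the points for each memory slot, then an l1 normalization over the slots for each point). The matrices `mem_k` and `mem_v` stand in for the memory unit M; they do not depend on the input. The helper names are ours, and the PCEA module in SGSSD may add projections, residual connections, or other details not shown here.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of an (n x m) and an (m x p) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def external_attention(feats: Matrix, mem_k: Matrix, mem_v: Matrix) -> Matrix:
    """External attention over N point features (N x d).

    mem_k and mem_v are (S x d) memories shared by all inputs; in a trained
    network they would be learnable parameters (here they are just arguments).
    """
    # Affinity between every point and every memory slot: F @ M_k^T -> N x S
    logits = matmul(feats, [list(col) for col in zip(*mem_k)])
    n, s = len(logits), len(logits[0])
    attn = [[0.0] * s for _ in range(n)]
    # Double normalization, step 1: softmax over the N points for each slot
    for j in range(s):
        col_max = max(logits[i][j] for i in range(n))
        exps = [math.exp(logits[i][j] - col_max) for i in range(n)]
        total = sum(exps)
        for i in range(n):
            attn[i][j] = exps[i] / total
    # Step 2: l1-normalize over the S slots for each point
    for i in range(n):
        row_sum = sum(attn[i])
        attn[i] = [a / row_sum for a in attn[i]]
    # Read out from the value memory: A @ M_v -> N x d
    return matmul(attn, mem_v)


points = [[0.5, -1.0], [2.0, 0.3], [-0.7, 1.1]]                 # N = 3, d = 2
memory_k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5]]    # S = 4 slots
memory_v = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8]]
print(external_attention(points, memory_k, memory_v))           # 3 x 2 output
```

Because the memories have a fixed size S, the cost grows linearly with the number of points N, whereas self-attention builds an N x N affinity matrix.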


Figure 3.

Illustration of the auxiliary network. The features of the candidate points from different levels are concatenated.
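
The caption does not state how the features of each level are brought onto the candidate points. As one possible reading, the sketch below looks up, for every candidate point, the feature of its nearest point in each level and concatenates the results level by level. The nearest-neighbour lookup and the names `gather_nearest` and `concat_multilevel` are our assumptions; SGAN may instead use ball-query grouping or interpolation.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Level = Tuple[Sequence[Point], Sequence[Sequence[float]]]  # (xyz, features) of one level


def gather_nearest(candidates: Sequence[Point], level_xyz: Sequence[Point],
                   level_feats: Sequence[Sequence[float]]) -> List[List[float]]:
    """For each candidate, copy the feature of its nearest point in one level."""
    out = []
    for c in candidates:
        j = min(range(len(level_xyz)), key=lambda i: math.dist(c, level_xyz[i]))
        out.append(list(level_feats[j]))
    return out


def concat_multilevel(candidates: Sequence[Point], levels: Sequence[Level]) -> List[List[float]]:
    """Concatenate per-level features onto each candidate point, in level order."""
    fused: List[List[float]] = [[] for _ in candidates]
    for xyz, feats in levels:
        for row, f in zip(fused, gather_nearest(candidates, xyz, feats)):
            row.extend(f)
    return fused


cands = [(0.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
level1 = ([(0.0, 0.0, 0.0), (4.0, 0.0, 0.0)], [[1.0], [2.0]])
level2 = ([(6.0, 0.0, 0.0), (1.0, 0.0, 0.0)], [[10.0, 11.0], [20.0, 21.0]])
print(concat_multilevel(cands, [level1, level2]))  # [[1.0, 20.0, 21.0], [2.0, 10.0, 11.0]]
```

The fused vector length is the sum of the per-level feature widths, so a downstream segmentation or center-estimation head sees every level at once.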


Figure 4.

Qualitative results on the KITTI test set. The predicted bounding boxes are shown in yellow. The predictions are projected onto the images for better visualization.


Figure 5.

Comparison between 3DSSD and SGSSD on the KITTI validation set. The first and second rows show the detection results of 3DSSD and SGSSD, respectively. Red boxes represent the ground truth, yellow boxes indicate the detection results, and white circles mark false positives.


Table 1.

Quantitative detection performance achieved by different methods on the KITTI test set. The results of our SGSSD are shown in bold, and the best results are underlined.


| Category | Method | Reference | Type | Car Easy (IoU = 0.7) | Car Mod. (IoU = 0.7) | Car Hard (IoU = 0.7) | Ped. Easy (IoU = 0.5) | Ped. Mod. (IoU = 0.5) | Ped. Hard (IoU = 0.5) | Cyc. Easy (IoU = 0.5) | Cyc. Mod. (IoU = 0.5) | Cyc. Hard (IoU = 0.5) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Voxel-based | SECOND [25] | Sensors 2018 | 1-stage | 84.65 | 75.96 | 68.71 | 45.31 | 35.52 | 33.14 | 75.83 | 60.82 | 53.67 |
| | PointPillars [28] | CVPR 2019 | 1-stage | 82.58 | 74.31 | 68.99 | 51.45 | 41.92 | 38.89 | 77.1 | 58.65 | 51.92 |
| | Part-A2 [54] | TPAMI 2020 | 2-stage | 87.81 | 78.49 | 73.51 | 53.1 | 43.35 | 40.06 | 79.17 | 63.52 | 56.93 |
| | SA-SSD [42] | CVPR 2020 | 1-stage | 88.75 | 79.79 | 74.16 | - | - | - | - | - | - |
| | CIA-SSD [29] | AAAI 2021 | 1-stage | 89.59 | 80.28 | 72.87 | - | - | - | - | - | - |
| | Voxel R-CNN [30] | AAAI 2021 | 2-stage | 90.90 | 81.62 | 77.06 | - | - | - | - | - | - |
| | PDV [40] | CVPR 2022 | 2-stage | 90.43 | 81.86 | 77.36 | - | - | - | 83.04 | 67.81 | 60.46 |
| | BADet [55] | PR 2022 | 2-stage | 89.28 | 81.61 | 76.58 | - | - | - | - | - | - |
| | Sem-Aug [56] | RA-L 2022 | 1-stage | 89.41 | 80.77 | 75.9 | - | - | - | - | - | - |
| PV-based | STD [57] | ICCV 2019 | 2-stage | 87.95 | 79.71 | 75.09 | 53.29 | 42.47 | 38.35 | 78.69 | 61.59 | 55.30 |
| | PV-RCNN [36] | CVPR 2020 | 2-stage | 90.25 | 81.43 | 76.82 | 52.17 | 43.29 | 40.29 | 78.60 | 63.71 | 57.65 |
| | VIC-Net [58] | ICRA 2021 | 1-stage | 88.25 | 80.61 | 75.83 | 43.82 | 37.18 | 35.35 | 78.29 | 63.65 | 57.27 |
| | HVPR [39] | CVPR 2021 | 1-stage | 86.38 | 77.92 | 73.04 | 53.47 | 43.96 | 40.64 | - | - | - |
| Point-based | PointRCNN [22] | CVPR 2019 | 2-stage | 86.96 | 75.64 | 70.70 | 47.98 | 39.37 | 36.01 | 74.96 | 58.82 | 52.53 |
| | 3D IoU-Net [59] | arXiv 2020 | 2-stage | 87.96 | 79.03 | 72.78 | - | - | - | - | - | - |
| | 3DSSD [10] | CVPR 2020 | 1-stage | 88.36 | 79.57 | 74.55 | - | - | - | - | - | - |
| | 3DSSD † | CVPR 2020 | 1-stage | 87.73 | 78.58 | 72.01 | 35.03 | 27.76 | 26.08 | 66.69 | 59.00 | 55.62 |
| | 3DSSD ‡ | CVPR 2020 | 1-stage | 87.91 | 79.55 | 74.71 | 3.63 | 3.18 | 2.57 | 27.08 | 21.38 | 19.68 |
| | SASA [14] | AAAI 2022 | 1-stage | 88.76 | 82.16 | 77.16 | - | - | - | - | - | - |
| | IA-SSD [13] | CVPR 2022 | 1-stage | 88.34 | 80.13 | 75.04 | 46.51 | 39.03 | 35.6 | 78.35 | 61.94 | 55.7 |
| | **Ours** | - | 1-stage | **89.68** | **81.70** | **76.76** | **46.01** | **39.35** | **36.81** | **79.66** | **64.11** | **58.03** |

Table 2.

Quantitative comparison of different approaches on the validation split of the KITTI dataset. The mean average precision is measured with 11 recall positions. The results of our SGSSD are shown in bold, and the best results are underlined.


| Method | Reference | Type | Car Mod. (IoU = 0.7) | Ped. Mod. (IoU = 0.5) | Cyc. Mod. (IoU = 0.5) |
|---|---|---|---|---|---|
| SECOND [25] | Sensors 2018 | 1-stage | 76.48 | - | - |
| PointPillars [28] | CVPR 2019 | 1-stage | 77.98 | - | - |
| PointRCNN [22] | CVPR 2019 | 2-stage | 78.63 | - | - |
| Part-A2 [54] | TPAMI 2020 | 2-stage | 79.47 | 63.84 | 73.07 |
| PV-RCNN [36] | CVPR 2020 | 2-stage | 83.90 | - | - |
| 3DSSD [10] | CVPR 2020 | 1-stage | 79.45 | - | - |
| SA-SSD [42] | CVPR 2020 | 1-stage | 79.91 | - | - |
| SASA [14] | AAAI 2022 | 1-stage | 85.76 | 58.02 | 72.61 |
| CIA-SSD [29] | AAAI 2021 | 1-stage | 79.81 | - | - |
| IA-SSD [13] | CVPR 2022 | 1-stage | 79.57 | 58.91 | 71.24 |
| **Ours** | - | 1-stage | **84.42** | **62.40** | **73.02** |
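
Tables 2, 4, 6, and 8 report AP at either 11 or 40 recall positions. A simplified sketch of KITTI-style interpolated AP follows: at each recall threshold, take the best precision reached at any recall at least that large, then average over the thresholds. We assume R11 samples recall at 0, 0.1, ..., 1 and R40 at 1/40, 2/40, ..., 1 (recall 0 excluded); the official evaluation also derives the precision-recall curve from score thresholds and box matching, which this sketch takes as given. The function names are hypothetical.

```python
from typing import List, Sequence


def recall_thresholds(n_positions: int) -> List[float]:
    """Recall levels at which precision is sampled (assumed KITTI convention)."""
    if n_positions == 11:
        return [i / 10 for i in range(11)]        # 0.0, 0.1, ..., 1.0
    if n_positions == 40:
        return [i / 40 for i in range(1, 41)]     # 1/40, 2/40, ..., 1.0
    raise ValueError("expected 11 or 40 recall positions")


def interpolated_ap(recalls: Sequence[float], precisions: Sequence[float], n_positions: int) -> float:
    """Mean over thresholds of the best precision achieved at recall >= threshold."""
    total = 0.0
    for r in recall_thresholds(n_positions):
        reachable = [p for rec, p in zip(recalls, precisions) if rec >= r]
        total += max(reachable, default=0.0)   # 0 if this recall is never reached
    return total / n_positions


# Toy precision-recall curve: precision 1.0 at recall 0.5, precision 0.5 at recall 1.0
print(interpolated_ap([0.5, 1.0], [1.0, 0.5], 11))  # ~0.7727
print(interpolated_ap([0.5, 1.0], [1.0, 0.5], 40))  # 0.75
```

In this toy case the R11 value is higher partly because the recall-0 position always contributes the maximum precision, which is one reason R11 and R40 numbers should not be compared directly.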

Table 3.

Quantitative detection performance achieved on the Waymo validation set. The results of our SGSSD are shown in bold, and the best results are underlined.


| Type | Method | Veh. (LEVEL 1) mAP | Veh. (LEVEL 1) mAPH | Veh. (LEVEL 2) mAP | Veh. (LEVEL 2) mAPH | Ped. (LEVEL 1) mAP | Ped. (LEVEL 1) mAPH | Ped. (LEVEL 2) mAP | Ped. (LEVEL 2) mAPH | Cyc. (LEVEL 1) mAP | Cyc. (LEVEL 1) mAPH | Cyc. (LEVEL 2) mAP | Cyc. (LEVEL 2) mAPH |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2-stage | Part-A2 [54] | 71.82 | 71.29 | 64.33 | 63.82 | 63.15 | 54.96 | 54.24 | 47.11 | 65.23 | 63.92 | 62.61 | 61.35 |
| | PV-RCNN [36] | 74.06 | 73.38 | 64.99 | 64.38 | 62.66 | 52.68 | 53.80 | 45.14 | 63.32 | 61.71 | 60.72 | 59.18 |
| | Voxel-RCNN [30] | 75.59 | - | 66.59 | - | - | - | - | - | - | - | - | - |
| | Pyramid-PV [38] | 76.30 | 75.68 | 67.23 | 66.68 | - | - | - | - | - | - | - | - |
| | PDV [40] | 76.85 | 76.33 | 69.30 | 68.81 | 74.19 | 65.96 | 65.85 | 58.28 | 68.71 | 67.55 | 66.49 | 65.36 |
| 1-stage | PointPillars [28] | 60.67 | 59.79 | 52.78 | 52.01 | 43.49 | 23.51 | 37.32 | 20.17 | 35.94 | 28.34 | 34.6 | 27.29 |
| | SECOND [25] | 68.03 | 67.44 | 59.57 | 59.04 | 61.14 | 50.33 | 53.00 | 43.56 | 54.66 | 53.31 | 52.67 | 51.37 |
| | IA-SSD [13] | 70.53 | 69.67 | 61.55 | 60.80 | 69.38 | 58.47 | 60.30 | 50.73 | 67.67 | 65.30 | 64.98 | 62.71 |
| | **Ours** | **71.19** | **70.42** | **62.51** | **61.83** | **69.59** | **59.52** | **61.02** | **52.07** | **67.24** | **65.09** | **64.70** | **62.63** |
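
Table 3 reports both mAP and mAPH. As we understand the Waymo Open Dataset metric, APH weights each true positive by its heading accuracy, taken here as 1 minus the wrapped absolute yaw error divided by π, so a perfect heading counts fully and a heading flipped by π counts as zero. The minimal sketch below shows only that weighting; IoU-based matching and integration over the precision-recall curve are omitted, the helper names are hypothetical, and this is not the official evaluation code.

```python
import math
from typing import Sequence


def heading_accuracy(pred_yaw: float, gt_yaw: float) -> float:
    """1.0 for a perfect heading, 0.0 for a heading flipped by pi (radians)."""
    diff = abs(pred_yaw - gt_yaw) % (2 * math.pi)
    # Take the shorter way around the circle before normalizing by pi
    return 1.0 - min(diff, 2 * math.pi - diff) / math.pi


def heading_weighted_precision(tp_heading_acc: Sequence[float], num_fp: int) -> float:
    """Precision in which each true positive counts by its heading accuracy."""
    n_det = len(tp_heading_acc) + num_fp
    return sum(tp_heading_acc) / n_det if n_det else 0.0


accs = [heading_accuracy(0.0, 0.0), heading_accuracy(math.pi / 2, 0.0)]
print(accs)                                  # [1.0, 0.5]
print(heading_weighted_precision(accs, 2))   # 0.375
```

Because heading errors only shrink the weights, mAPH can never exceed mAP for the same detections, which matches every row of Table 3.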

Table 4.

Ablation studies on the proposed modules: SGAN, PCEA, and WAL. The mean average precision is measured with 40 recall positions. The results of our SGSSD are shown in bold, and the best results are underlined.


| Baseline | WAL | PCEA | SGAN | Car Easy (IoU = 0.7) | Car Mod. (IoU = 0.7) | Car Hard (IoU = 0.7) | Ped. Easy (IoU = 0.5) | Ped. Mod. (IoU = 0.5) | Ped. Hard (IoU = 0.5) | Cyc. Easy (IoU = 0.5) | Cyc. Mod. (IoU = 0.5) | Cyc. Hard (IoU = 0.5) | Speed (ms) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ✓ | | | | 91.28 | 83.28 | 81.82 | 61.32 | 56.14 | 51.42 | 85.61 | 68.56 | 64.73 | 31.9 |
| ✓ | | | ✓ | 91.73 | 84.84 | 82.37 | 61.12 | 57.65 | 53.00 | 90.56 | 72.70 | 68.02 | 31.9 |
| ✓ | | ✓ | | 91.52 | 84.39 | 82.12 | 61.97 | 58.12 | 53.22 | 88.90 | 70.69 | 66.48 | 33.2 |
| ✓ | ✓ | | | 92.01 | 84.79 | 82.36 | 63.63 | 58.38 | 53.26 | 90.32 | 70.81 | 66.45 | 44.0 |
| ✓ | ✓ | ✓ | | 91.94 | 85.23 | 82.70 | 62.81 | 58.42 | 53.07 | 91.21 | 72.80 | 67.77 | 47.4 |
| ✓ | ✓ | ✓ | ✓ | **91.81** | **85.23** | **82.75** | **67.40** | **62.02** | **56.41** | **92.36** | **73.30** | **69.04** | **47.5** |

Table 5.

Performance with different numbers of subsampled points. M denotes the multiple of the number of subsampled points relative to the baseline. The best results are underlined.


| M | Number of subsampled points | Car Mod. (IoU = 0.7) | Ped. Mod. (IoU = 0.5) | Cyc. Mod. (IoU = 0.5) | Speed (ms) |
|---|---|---|---|---|---|
| 1/2 | [] | 79.63 | 56.21 | 65.27 | 29.8 |
| 1 | [] | 85.23 | 62.02 | 73.30 | 47.5 |
| 2 | [] | 85.48 | 59.85 | 70.56 | 80.7 |
| 4 | [] | 83.32 | 60.15 | 69.88 | 190.8 |

Table 6.

Performance of PCEA after different stages. PCEA1 and PCEA2 denote adding the PCEA module after SA1 and SA2, respectively. The AP value is calculated with 40 recall positions on the moderate subsets. The best results are underlined.


| Setting | Car Mod. (IoU = 0.7) | Ped. Mod. (IoU = 0.5) | Cyc. Mod. (IoU = 0.5) | Speed (ms) |
|---|---|---|---|---|
| PCEA1 | 85.29 | 57.12 | 74.41 | 89.3 |
| PCEA2 | 85.23 | 58.42 | 72.80 | 47.4 |

Table 7.

Selection of the weight for the auxiliary tasks, evaluated on Car (moderate). The header row gives the weight of the auxiliary tasks relative to the main task. The best results are underlined.


| Weight | 0.2 | 0.5 | 1 | 2 | 4 |
|---|---|---|---|---|---|
| Car Mod. AP | 85.09 | 85.22 | 85.23 | 85.07 | 84.95 |
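
Table 7 varies the weight of the auxiliary tasks relative to the main task. Assuming that this weight is a single scalar (written here as $\lambda$, a symbol of our choosing) multiplying the sum of the two SGAN losses for point-level supervision and center estimation, with $\mathcal{L}_{\text{seg}}$ and $\mathcal{L}_{\text{ctr}}$ as hypothetical names, the training objective would read:

$$
\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{det}} + \lambda \left( \mathcal{L}_{\text{seg}} + \mathcal{L}_{\text{ctr}} \right), \qquad \lambda \in \{0.2,\ 0.5,\ 1,\ 2,\ 4\}
$$

Under this reading, $\lambda = 1$ gives the best Car moderate AP (85.23), and the spread between the best and worst settings in Table 7 is only $85.23 - 84.95 = 0.28$ AP, so the detector is not very sensitive to this weight.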

Table 8.

Runtime and performance of different methods on the KITTI validation set. The mean average precision is measured with 40 recall positions. All results are reproduced with the OpenPCDet [53] implementation. The results of our SGSSD are shown in bold, and the best results are underlined.


| Category | Method | Car | Ped. | Cyc. | Speed (ms) |
|---|---|---|---|---|---|
| Point-based | 3DSSD [10] | 83.28 | 56.14 | 68.56 | 31.9 |
| | SASA [14] | 84.96 | 60.91 | 74.58 | 32.1 |
| | IA-SSD [13] | 79.21 | 55.90 | 71.37 | 23.6 |
| Others | SECOND [25] | 78.35 | 46.78 | 65.14 | 37.8 |
| | PointRCNN [22] | 80.31 | 57.89 | 72.03 | 100.0 |
| | PointPillars [28] | 78.11 | 47.78 | 62.61 | 31.0 |
| | PV-RCNN [36] | 84.37 | 54.51 | 70.39 | 142.0 |
| | Voxel R-CNN [30] | 84.70 | - | - | 74.0 |
| | **Ours** | **85.23** | **62.02** | **73.30** | **47.4** |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).