Facial Pose and Expression Transfer Based on Classification Features

Abstract

1. Introduction

2. Related Work

2.1. Facial Pose Transfer

2.2. Facial Pose and Expression Transfer

3. Proposed Method

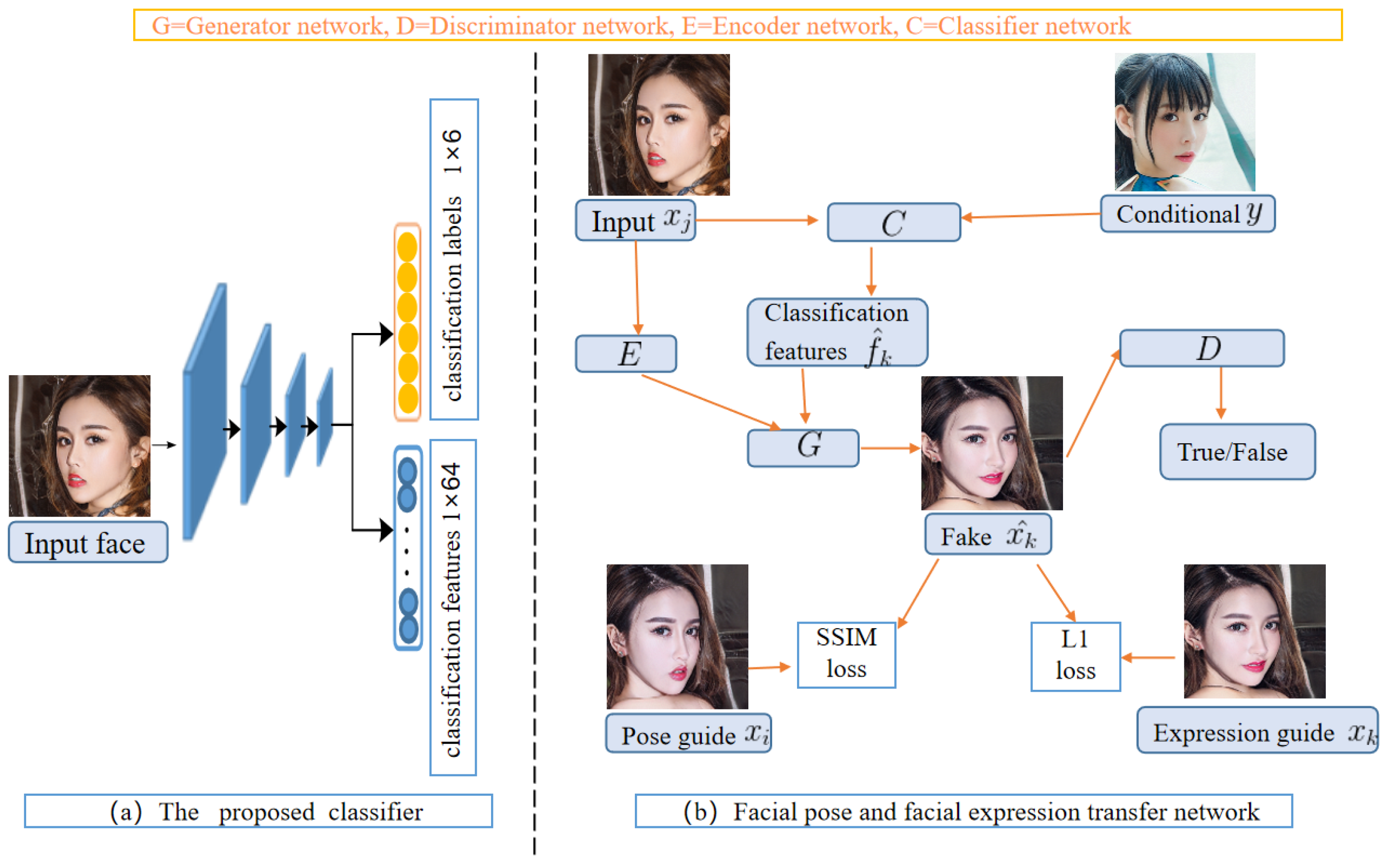

3.1. Proposed Formula for Classifier

3.2. Proposed Formula for Generator

3.3. Proposed Network Architecture

3.4. Overall Objective

3.5. Our Algorithm

Algorithm 1. Facial pose and happy expression transfer algorithm.

4. Experiments

4.1. Datasets and Preprocessing

4.2. Baselines

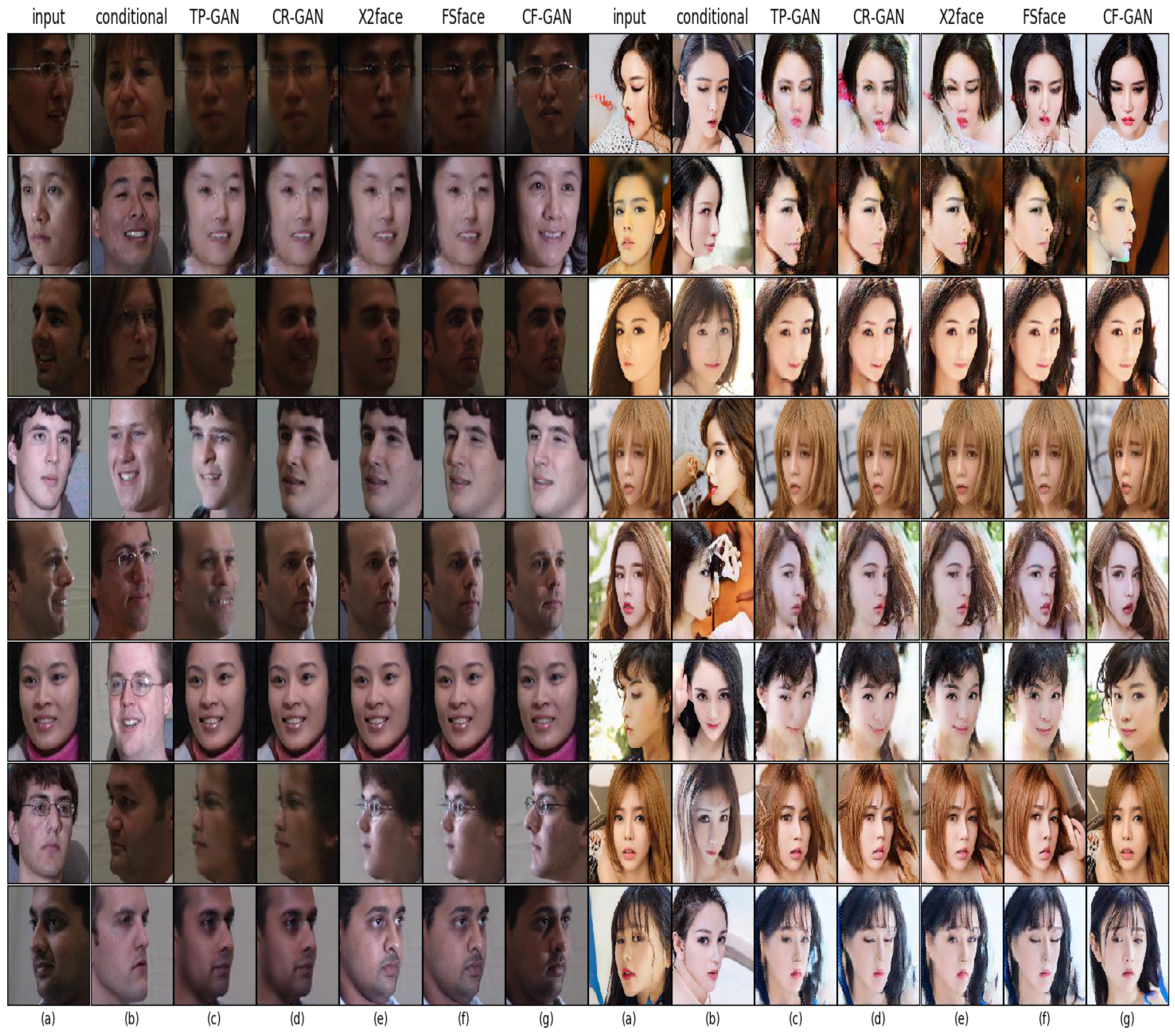

- TP-GAN uses two pathways to capture global and local features for pose transfer. Because the TP-GAN model cannot generate faces conditioned on the condition face y, we obtained classification labels at test time to make the comparison fair.

- CR-GAN introduces a two-path architecture to learn complete representations for all poses. For the CR-GAN model, we also obtained classification labels at test time.

- X2face introduces a self-supervised network architecture that allows the pose and expression of a given face to be controlled by another face.

- Few-ShotFace (FSface for short) introduces a meta-learning architecture that involves an embedding network and a generator network. For a fair comparison, we compared against the FSface model using only a single source face.

4.3. Evaluation Index

- AMT. For these tasks, we ran “real vs. fake” perceptual studies on Amazon Mechanical Turk (AMT) to assess the realism of our outputs. We followed the perceptual study protocol of Isola et al. [31] and gathered data from 50 participants per algorithm tested. Participants were shown a sequence of face pairs, each containing one real face and one fake face (generated by our algorithm or a baseline), and were asked to click on the face they considered real.

- Classification (Cf for short). We trained Xception Version 3 [36]-based binary classifiers for each face dataset. The baseline is the classification accuracy on real faces. Higher classification accuracy means that the transferred faces may be easier to distinguish.

- Consistency (Cs for short). We compared domain consistency between real faces and transferred faces by calculating the average distance in feature space. Cosine similarity was used to evaluate the perceptual distance in the feature space of the VGG-16 network [37] that was pre-trained on faceNet [38]. We calculated the average difference over the five convolution layers preceding the pool layers. A larger average difference yields a smaller cosine similarity, meaning that the two faces are less similar. In the test stage, we randomly sampled a real face and a transferred face of the same person to form data pairs and computed the average cosine similarity over the pairs.

- Convergence time (TIME for short). For these tasks, we recorded the time each model required to reach its final state of convergence under the same dataset and GPU conditions. The recorded results reflect how time-consuming the training of each model is. TIME is reported in hours.
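The consistency (Cs) index described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the authors' implementation: the per-layer features are assumed to be already extracted and flattened into lists of floats, and the function names `cosine_similarity`, `pair_consistency`, and `consistency_score` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]
LayerFeatures = Sequence[Vector]  # one flattened feature vector per layer


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two flattened feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def pair_consistency(real: LayerFeatures, fake: LayerFeatures) -> float:
    """Mean cosine similarity over the layers of one (real, transferred) pair."""
    if len(real) != len(fake) or len(real) == 0:
        raise ValueError("both faces need the same non-zero number of layers")
    sims = [cosine_similarity(r, f) for r, f in zip(real, fake)]
    return sum(sims) / len(sims)


def consistency_score(pairs: List[Tuple[LayerFeatures, LayerFeatures]]) -> float:
    """Mean pair consistency over all sampled pairs of the same person."""
    if not pairs:
        raise ValueError("at least one pair is required")
    return sum(pair_consistency(r, f) for r, f in pairs) / len(pairs)


# Toy example with two layers per face (the paper uses five VGG-16 layers).
identical = ([[1.0, 0.0], [0.0, 2.0]], [[2.0, 0.0], [0.0, 1.0]])
orthogonal = ([[1.0, 0.0], [1.0, 0.0]], [[0.0, 1.0], [0.0, 1.0]])
print(consistency_score([identical, orthogonal]))
```

In the toy example, the first pair has parallel features in every layer and the second has orthogonal features, so the averaged score falls halfway between the two extremes. With real data, each inner list would hold the flattened activations of one of the five convolution layers.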

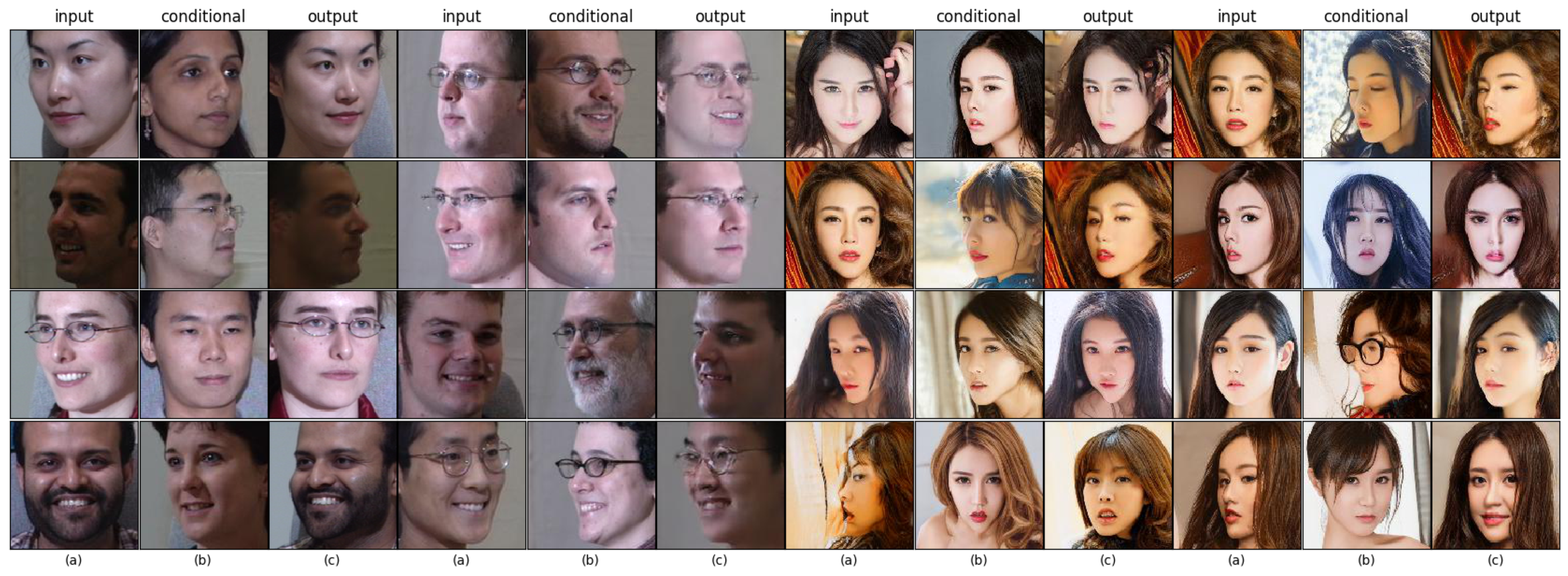

4.4. Experimental Results

4.5. Base Model Comparison

4.6. Quantitative Evaluations

4.7. Limitation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Tian, Y.; Peng, X.; Zhao, L.; Zhang, S.; Metaxas, D.N. CR-GAN: Learning complete representations for multi-view generation. In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; AAAI Press: Palo Alto, CA, USA, 2018; pp. 942–948.

- Chang, H.; Lu, J.; Yu, F.; Finkelstein, A. Pairedcyclegan: Asymmetric style transfer for applying and removing makeup. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 40–48.

- Chen, Y.C.; Lin, H.; Shu, M.; Li, R.; Tao, X.; Shen, X.; Ye, Y.; Jia, J. Facelet-bank for fast portrait manipulation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3541–3549.

- Zhang, Y.; Zhang, S.; He, Y.; Li, C.; Loy, C.C.; Liu, Z. One-shot Face Reenactment. In Proceedings of the British Machine Vision Conference (BMVC), Cardiff, UK, 9–12 September 2019.

- Zakharov, E.; Shysheya, A.; Burkov, E.; Lempitsky, V. Few-shot adversarial learning of realistic neural talking head models. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9459–9468.

- Zhuang, N.; Yan, Y.; Chen, S.; Wang, H.; Shen, C. Multi-label learning based deep transfer neural network for facial attribute classification. Pattern Recognit. 2018, 80, 225–240.

- Sankaran, N.; Mohan, D.D.; Lakshminarayana, N.N.; Setlur, S.; Govindaraju, V. Domain adaptive representation learning for facial action unit recognition. Pattern Recognit. 2020, 102, 107–127.

- Bozorgtabar, B.; Mahapatra, D.; Thiran, J.P. ExprADA: Adversarial domain adaptation for facial expression analysis. Pattern Recognit. 2020, 100, 107–111.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, USA, 8–13 December 2014; pp. 2672–2680.

- Choi, Y.; Choi, M.; Kim, M.; Ha, J.W.; Kim, S.; Choo, J. Stargan: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8789–8797.

- Dumoulin, V.; Belghazi, I.; Poole, B.; Mastropietro, O.; Lamb, A.; Arjovsky, M.; Courville, A. Adversarially learned inference. In Proceedings of the ICLR, Toulon, France, 24–26 April 2017.

- Tran, L.; Yin, X.; Liu, X. Disentangled representation learning gan for pose-invariant face recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1415–1424.

- Zhao, B.; Wu, X.; Cheng, Z.Q.; Liu, H.; Jie, Z.; Feng, J. Multi-view image generation from a single-view. In Proceedings of the 2018 ACM Multimedia Conference on Multimedia Conference, Seoul, Republic of Korea, 22–26 October 2018; ACM: New York, NY, USA, 2018; pp. 383–391.

- Hannane, R.; Elboushaki, A.; Afdel, K. A Divide-and-Conquer Strategy for Facial Landmark Detection using Dual-task CNN Architecture. Pattern Recognit. 2020, 107, 107504.

- Huang, R.; Zhang, S.; Li, T.; He, R. Beyond face rotation: Global and local perception gan for photorealistic and identity preserving frontal view synthesis. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2439–2448.

- Ramamoorthi, R. Analytic PCA construction for theoretical analysis of lighting variability in images of a Lambertian object. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 1322–1333.

- Lee, K.C.; Ho, J.; Kriegman, D.J. Acquiring linear subspaces for face recognition under variable lighting. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 684–698.

- Kent, M.G.; Schiavon, S.; Jakubiec, J.A. A dimensionality reduction method to select the most representative daylight illuminance distributions. J. Build. Perform. Simul. 2020, 13, 122–135.

- Savelonas, M.A.; Veinidis, C.N.; Bartsokas, T.K. Computer Vision and Pattern Recognition for the Analysis of 2D/3D Remote Sensing Data in Geoscience: A Survey. Remote Sens. 2022, 14, 6017.

- Wang, T.C.; Liu, M.Y.; Zhu, J.Y.; Tao, A.; Kautz, J.; Catanzaro, B. High-resolution image synthesis and semantic manipulation with conditional gans. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8798–8807.

- Hosoi, T. Head Pose and Expression Transfer Using Facial Status Score. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; IEEE: New York, NY, USA, 2017; pp. 573–580.

- Pumarola, A.; Agudo, A.; Martinez, A.M.; Sanfeliu, A.; Moreno-Noguer, F. Ganimation: Anatomically-aware facial animation from a single image. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 818–833.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Wu, W.; Zhang, Y.; Li, C.; Qian, C.; Change Loy, C. Reenactgan: Learning to reenact faces via boundary transfer. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 603–619.

- Yang, H.; Huang, D.; Wang, Y.; Jain, A.K. Learning face age progression: A pyramid architecture of gans. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 31–39.

- Wiles, O.; Sophia Koepke, A.; Zisserman, A. X2face: A network for controlling face generation using images, audio, and pose codes. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 670–686.

- Liu, M.Y.; Huang, X.; Mallya, A.; Karras, T.; Aila, T.; Lehtinen, J.; Kautz, J. Few-shot unsupervised image-to-image translation. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10551–10560.

- Yang, S.; Jiang, L.; Liu, Z.; Loy, C.C. Unsupervised image-to-image translation with generative prior. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 18332–18341.

- Xu, Y.; Xie, S.; Wu, W.; Zhang, K.; Gong, M.; Batmanghelich, K. Maximum Spatial Perturbation Consistency for Unpaired Image-to-Image Translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 18311–18320.

- Pugliese, R.; Regondi, S.; Marini, R. Machine learning-based approach: Global trends, research directions, and regulatory standpoints. Data Sci. Manag. 2021, 4, 19–29.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 5967–5976.

- Cao, Z.; Niu, S.; Zhang, J.; Wang, X. Generative adversarial networks model for visible watermark removal. IET Image Process. 2019, 13, 1783–1789.

- Gross, R.; Matthews, I.; Cohn, J.; Kanade, T.; Baker, S. Multi-pie. Image Vis. Comput. 2010, 28, 807–813.

- Karras, T.; Laine, S.; Aila, T. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4401–4410.

- Valdenegro-Toro, M.; Arriaga, O.; Plöger, P. Real-time Convolutional Neural Networks for emotion and gender classification. In Proceedings of the ESANN, Bruges, Belgium, 27–29 April 2019.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 60, 1097–1105.

| Tasks | Baselines | AMT | Cf | Cs | TIME (h) |
|---|---|---|---|---|---|
| A | TP-GAN | 24 ± 1.0% | 0.71 | 0.69 | 36 |
| | CR-GAN | 26 ± 1.0% | 0.79 | 0.75 | 36 |
| | X2face | 24 ± 1.0% | 0.71 | 0.69 | 48 |
| | FSface | 26 ± 1.0% | 0.80 | 0.76 | 48 |
| B | CF-GAN | 39 ± 1.0% | 0.92 | 0.80 | 22 |
| | TP-GAN | 23 ± 1.0% | 0.71 | 0.67 | 48 |
| | CR-GAN | 26 ± 1.0% | 0.76 | 0.70 | 48 |
| | X2face | 23 ± 1.0% | 0.71 | 0.67 | 61 |
| | FSface | 26 ± 1.0% | 0.78 | 0.71 | 61 |
| | CF-GAN | 28 ± 1.0% | 0.82 | 0.77 | 28 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cao, Z.; Shi, L.; Wang, W.; Niu, S. Facial Pose and Expression Transfer Based on Classification Features. Electronics 2023, 12, 1756. https://doi.org/10.3390/electronics12081756
