Abstract

Traditional trajectory-planning methods are unable to achieve time optimization, resulting in slow response times to unexpected situations. To address this issue and improve the smoothness of joint trajectories and the movement time of quadruped robots, we propose a trajectory-planning method based on time optimization. This approach improves the whale optimization algorithm with simulated annealing (IWOA-SA) together with adaptive weights to prevent the whale optimization algorithm (WOA) from falling into local optima and to balance its exploration and exploitation abilities. We also use Markov chains of stochastic process theory to analyze the global convergence of the proposed algorithm. The results show that our optimization algorithm has stronger optimization ability and stability when compared to six representative algorithms using six different test function suites in multiple dimensions. Additionally, the proposed optimization algorithm consistently constrains the angular velocity of each joint within the range of kinematic constraints and reduces joint running time by approximately 6.25%, which indicates the effectiveness of this algorithm.

1. Introduction

Compared with wheeled robots, legged robots are more suitable for rough terrain and complex environments [1]. Quadruped robots can freely select their contact points with the environment [2]. Therefore, they can be used for rescue in the wild, for carrying payloads on construction sites, and for climbing stairs [3]. Moreover, the operating environment of a legged robot is mostly uneven, and a quadruped robot can adapt to most non-flat terrain; it is also highly flexible and does not roll over easily [4,5]. Hence, to make quadruped robots move flexibly, it is necessary to plan the trajectory of the robot leg.

Trajectory-planning methods based on Bézier curves are mainly used in the path planning of autonomous vehicles [6] or space robots [7]. However, the control points of a Bézier curve do not lie on the trajectory, which makes the method unintuitive. Trajectories generated by point-to-point trajectory-planning methods [8,9] are relatively simple and cannot be used in complicated situations. Therefore, this paper uses a mixed polynomial interpolation algorithm to generate the joint trajectory. QIANG H et al. [10] proposed a gait synthesis method in which polynomial interpolation was used to fit the trajectory of the foot. To make the robot move in the shortest possible time under given kinematic constraints, we adopt an intelligent optimization algorithm to optimize the time variable. Widely used intelligent optimization algorithms are listed in Table 1.

Table 1.

Some related intelligent optimization algorithms.

Nowadays, the WOA has been widely used in many fields, such as epidemiology [24] and navigation [25]. The advantages of the WOA are few parameters, simple calculation, and easy execution; and its disadvantages are low precision, slow convergence speed, and ease of falling into a local optimum [26,27]. For better optimization results, WANG T et al. [28] combined the differential evolution algorithm (DE) with the WOA to improve the initialization step of the WOA by simulating the variation and selection operations in the DE. This produces a more representative population. Mohammad H N et al. [18] combined a moth–flame optimization algorithm with the WOA to improve the problems which the WOA has. ELHOSSEINI M A et al. [29] improved the A parameter and C parameter in the WOA, which balances the global search and local search capabilities, but they still could not address the problem of the WOA being prone to falling into a local optimum.

The simulated annealing (SA) algorithm has the remarkable feature of a probabilistic jump, which is inspired by the physical process of annealing solids. It can gradually anneal based on the Metropolis criterion and converge on the global optimal solution with a certain probability [30]. The key idea behind this approach is using a local search strategy to dynamically improve the global best point determined in each WOA cycle.

In this paper, we generate the optimal trajectory of a foot by using mixed polynomial interpolation. The objective is to achieve smooth operation under motion constraints while moving in optimal time by applying the IWOA-SA algorithm. The main contributions of this paper are as follows:

- First, the SA algorithm has significant characteristics of probability jumps, gradually anneals according to the Metropolis criterion, and converges to the global optimum with a certain probability. The SA algorithm is combined with the WOA to prevent the latter from easily falling into local optima. We also use the Markov chain to prove the global convergence of the proposed algorithm.

- Second, an adaptive inertia weight with exponential change is introduced. In the early stage of the algorithm, a larger weight is used and convergence is slow, which preserves the search range and improves the exploration ability of the proposed algorithm. As the number of iterations increases, the weight decreases and convergence becomes faster as the algorithm approaches the optimal solution, which improves its convergence speed. With the adaptive inertia weight, the exploration and exploitation abilities of the proposed algorithm are balanced.

- Finally, the constrained optimization problem is transformed into an unconstrained optimization problem by a penalty function, and a speed limit is imposed for the joint angular velocity of the robot.

2. Trajectory Planning of the Quadruped Robot

2.1. Kinematic Modeling Analysis

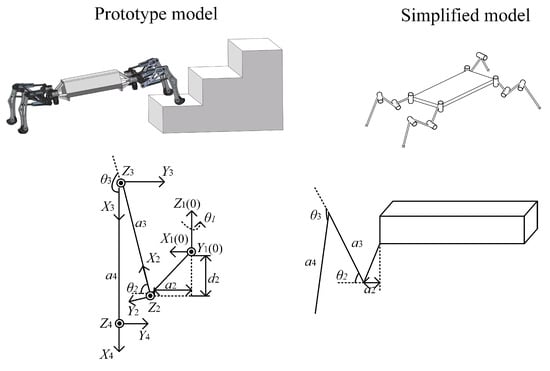

Before trajectory planning, a kinematic analysis of the target is needed. First, the single-leg structure of the prototype must be simplified. Then, the D-H parameter table is obtained according to the D-H coordinate system of the robot [31]. A kinematic analysis is carried out to prepare for the next step of trajectory planning. The D-H coordinate system is shown in Figure 1.

Figure 1.

D-H coordinate system of the single-leg structure of the prototype and the simplified model.

Figure 1 shows the D-H coordinate system of the single-leg structure, where = 98.5 mm, = 350 mm, = 420 mm, and = 152 mm; and the circle represents the vector facing outward from the vertical paper.

The D-H parameter table of the leg is shown in Table 2:

Table 2.

D-H parameter table.

All the symbols and abbreviations can be seen in the Abbreviations section. By using the D-H parameters, the kinematic solution of the robot can be found.

Robotic inverse kinematics involves the calculation of joint-angle solutions with a known terminal pose [32]. The inverse solution results are:

where .

2.2. A Penalty Function That Optimizes the Limit of the Angular Velocity

The goal of trajectory planning for the robot is to generate a suitable joint motion rule without violating the additional constraints to complete the task. In this paper, a 3-3-5 mixed polynomial algorithm (3-3-5 algorithm) is used to fit robot trajectory [33].

To realize the constraint on the angular velocity of each joint of the robot in the algorithm and reduce the impact on the motor, a penalty function is introduced to limit the maximum angular velocity of each joint. Additionally, in the case of the penalty function introduced in this paper, the joint motion law can be represented as a fitness function that satisfies the inequality constraint and the equation constraint. ZHENG K M et al. [34] adopted a penalty function to take maximum torque as a constraint condition and ensured that the trajectory of each joint of the manipulator was within a safe range by introducing a penalty function.

To ensure that the running speed of each joint of the robot does not exceed the speed limit, we set the maximum angular velocity Vmax for each joint. Since the robot hardware supports a maximum motor speed of 25°/s, the speed limit was set to 20°/s (approximately 0.349 rad/s) to ensure safety. By introducing the penalty function, the maximum speed of each joint is restricted to the speed limit. The penalty function formula is as follows:

where , and is a constraint, which can be rewritten as and is equal to . The updated fitness function formula is obtained as follows:

where is the updated time-dependent fitness function; ; and M is the penalty factor, which must be a large nonnegative integer. Using a particularly large penalty factor M is consistent with the result obtained by taking a relatively small penalty factor [35]. The penalty function algorithm is shown in Algorithm 1:

| Algorithm 1 Penalty function. |

| Input: , M, , , , Output:

|
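The idea of a penalty-augmented fitness can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the quadratic form of the penalty, the function name `penalized_fitness`, its arguments, and the default value of the penalty factor are hypothetical and are not taken from the paper's formula or from Algorithm 1.

```python
import math

# 20 deg/s speed limit quoted in the text, converted to rad/s (about 0.349).
V_MAX = math.radians(20.0)


def penalized_fitness(segment_times, peak_speeds, penalty_factor=1e6):
    """Total motion time plus a penalty for joints that exceed V_MAX.

    segment_times: durations of the trajectory segments in seconds.
    peak_speeds: peak angular velocity of each joint in rad/s.
    penalty_factor: the penalty factor M (this default is an assumption).
    """
    total_time = sum(segment_times)
    # A joint contributes to the penalty only when |v| - V_MAX is positive.
    violation = sum(max(0.0, abs(v) - V_MAX) ** 2 for v in peak_speeds)
    return total_time + penalty_factor * violation
```

With this shape, a candidate that respects the limit is scored by its running time alone, while any violation is dominated by the penalty term, so an unconstrained optimizer is steered back toward feasible time values.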

3. Brief Introduction to the Whale Optimization Algorithm

The whale optimization algorithm is a bionic intelligent optimization algorithm proposed by Mirjalili and Lewis [36] in 2016. The algorithm simulates the bubble-net feeding behavior of whales. When whales detect the location of the target, they produce spiral-shaped bubbles that surround the prey and move along the bubbles. The WOA process can be divided into three parts: encircling prey, bubble-net attacking, and random searching. Because the convergence speed of the WOA cannot be adjusted and the algorithm converges too fast, an adaptive weight is introduced into the WOA to balance its exploration and exploitation abilities.

3.1. Encircling Prey

In the whale optimization algorithm, the optimal position in the current population is assumed to be the prey; the other whales in the population move toward this optimal individual, and each position is updated according to the optimization rules. The mathematical expression is:

where t is the number of iterations, is the current optimal solution position of the population (target position), X is the whale position, and D is the distance between the target location and the whale position. The expressions for A and C are as follows:

where is a random number between [0, 1] and a is the convergence factor, which decreases linearly from 2 to 0 as the number of iterations increases. The advantage of defining a like this is to make individuals gradually converge to target, which meets the requirements of encircling prey.
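The encircling step can be sketched as follows, assuming the standard WOA formulation of Mirjalili and Lewis (A = 2ar − a, C = 2r, D = |C·X* − X|, X(t+1) = X* − A·D). The function names are illustrative, and drawing A and C once per whale rather than once per dimension is a simplification made here.

```python
import random


def convergence_factor(t, max_iter):
    """Convergence factor a, decreasing linearly from 2 to 0."""
    return 2.0 - 2.0 * t / max_iter


def encircle(x, x_best, a, rng=random):
    """One encircling-prey update of whale position x toward x_best."""
    A = 2.0 * a * rng.random() - a   # A lies in [-a, a]
    C = 2.0 * rng.random()           # C lies in [0, 2]
    new_x = []
    for xi, bi in zip(x, x_best):
        D = abs(C * bi - xi)         # distance term for this coordinate
        new_x.append(bi - A * D)
    return new_x
```

As a shrinks toward 0, A shrinks with it, so the updated position collapses onto the best position; with a = 0 the whale lands exactly on `x_best`.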

3.2. Bubble-Net Attacking Method

During the hunt, the whale approaches the prey in a spiral path and sends out bubbles to attack. This process is modeled mathematically by two mechanisms: the shrinking encircling mechanism and the spiral updating of position.

3.2.1. Shrinking Encircling Mechanism

The shrinking encircling mechanism reflects the local search aspect of the algorithm and is achieved by reducing the value of a. If A is in the interval [−1, 1], the updated position of the whale lies between its current position and the prey position, thereby achieving the encirclement of the prey.

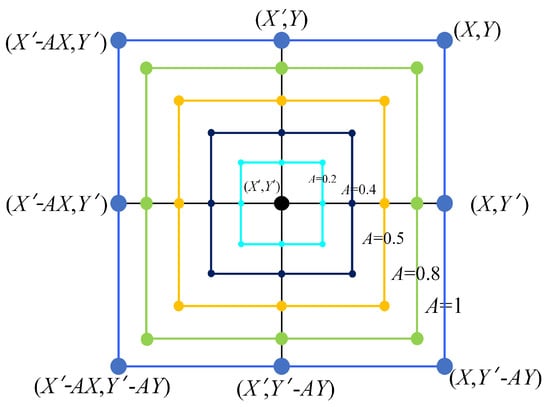

Figure 2 shows the possible positions from toward (,), which can be achieved by in a 2D space.

Figure 2.

Shrinking encircling mechanism ( is the current optimal solution).

3.2.2. Spiral Updating of Position

As shown in Figure 2, to simulate the spiral updating of position, it is necessary to first calculate the distance between each whale and the prey (,) and then simulate the way the whale moves by using a spiral mathematical model, which is modeled as follows:

where is the distance between the individual and the current optimal position; b is the logarithmic helix shape constant; l is a random number on [−1, 1]. As shown in Figure 3, the whales swim around their prey within a shrinking circle while simultaneously following a spiral path. To model this behavior, each whale is assumed to choose between the shrinking encircling mechanism and the spiral position update with a probability of 50% each. The mathematical model for the position updating of the whale optimization algorithm is shown in Equation (11).

Figure 3.

Process of spiral updating of position.
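A minimal sketch of the spiral update, assuming the standard WOA form X(t+1) = D′·e^(bl)·cos(2πl) + X*, where D′ = |X* − X|. The function name and the default b = 1 are assumptions for illustration.

```python
import math
import random


def spiral_update(x, x_best, b=1.0, rng=random):
    """Move whale x along a logarithmic spiral around x_best."""
    l = rng.uniform(-1.0, 1.0)  # random number on [-1, 1]
    factor = math.exp(b * l) * math.cos(2.0 * math.pi * l)
    # D' = |x_best - x| is the per-coordinate distance to the best position.
    return [abs(bi - xi) * factor + bi for xi, bi in zip(x, x_best)]
```

Because |cos| ≤ 1 and l ≤ 1, the new position never lies farther from the best position than e^b times the current distance.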

3.2.3. Search for Prey

In fact, whales search randomly based on each other’s location. Therefore, the process is represented by the random variable A. If A is beyond the range of [−1, 1], the whale is far away from the current optimal individual and updates its own position according to others’ positions. The mathematical model is as follows.

where is the position of a random individual in the current population.
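Putting the three behaviors together, one iteration of the basic WOA position update might look like the sketch below. It assumes the standard formulation (encircle when |A| < 1, follow a random whale otherwise, spiral with probability 0.5); the name `woa_step` and the per-whale sampling of A and C are illustrative choices.

```python
import math
import random


def woa_step(population, x_best, a, b=1.0, rng=random):
    """Apply one basic WOA position update to every whale."""
    updated = []
    for x in population:
        A = 2.0 * a * rng.random() - a
        C = 2.0 * rng.random()
        if rng.random() < 0.5:
            if abs(A) < 1.0:
                target = x_best                  # exploit: encircle the best whale
            else:
                target = rng.choice(population)  # explore: follow a random whale
            new_x = [ti - A * abs(C * ti - xi) for xi, ti in zip(x, target)]
        else:
            l = rng.uniform(-1.0, 1.0)
            k = math.exp(b * l) * math.cos(2.0 * math.pi * l)
            new_x = [abs(bi - xi) * k + bi for xi, bi in zip(x, x_best)]
        updated.append(new_x)
    return updated
```

Since |A| can exceed 1 only while a > 1, random search is confined to the first half of the run, which is why the basic algorithm shifts from exploration to exploitation on a fixed schedule.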

4. Hybrid Improved-Whale-Optimization–Simulated-Annealing Algorithm

The traditional polynomial-interpolation trajectory-planning algorithm requires conditions such as time, velocity, and acceleration to be set at the interpolation points before planning, so time optimization cannot be achieved [7]. To address this problem, we propose the IWOA-SA algorithm to optimize the trajectory generated by the 3-3-5 polynomial interpolation algorithm based on time optimization. Adaptive weights balance the exploration and exploitation capabilities of the algorithm, and the combination with the simulated annealing algorithm keeps it from easily falling into a local optimum.

The introduction of SA into the WOA is a combination of the solid annealing principle and bionics. The main idea is to put randomness into the process of the WOA iteration; at the same time, this kind of randomness has to converge in the final stage of iteration; otherwise, the whole algorithm will be in a divergent state. ELHOSSEINI M A [29] set an adaptive random parameter C, but its convergence ability is relatively small. In addition, whether this kind of algorithm can fall into a local optimum is not proven.

4.1. Improved Whale Optimization Algorithm

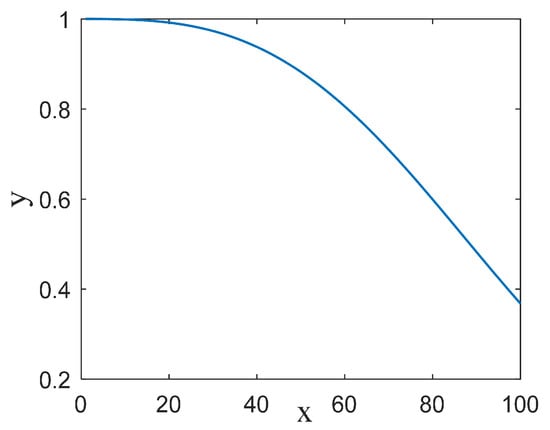

To balance the exploration and exploitation ability of the WOA, adaptive weights are introduced. The adaptive weight formula is shown in Equation (11).

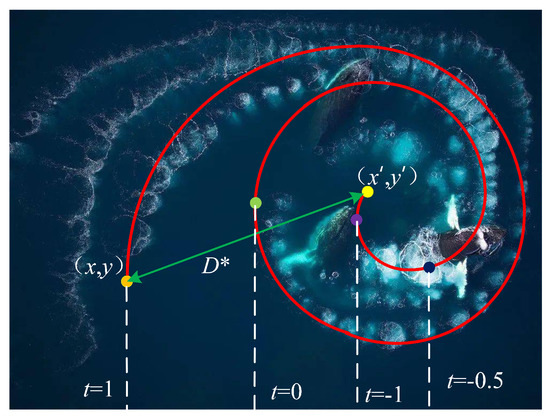

When the maximum number of iterations is 100, the adaptive weight curve is as shown in Figure 4:

Figure 4.

Function curve of .

As shown in Figure 4, in the initial stage of the iteration, the weight is larger and the slope is smaller, guaranteeing an appropriate search range; in the end stage, the weight is smaller and the slope is larger, which improves the optimization ability of the algorithm and accelerates the convergence speed. It also balances the exploration and exploitation ability of the algorithm. After adopting the adaptive weight, the mathematical model for the position update of the whale optimization algorithm is shown in Equation (15).
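The qualitative shape described above (a large weight that falls slowly at first and a small weight that falls quickly near the end) can be reproduced with a simple exponential schedule. The formula below is a hypothetical stand-in chosen only to match that description; it is not the paper's adaptive weight equation, and the bounds `w_max` and `w_min` are assumed values.

```python
import math


def adaptive_weight(t, max_iter, w_max=0.9, w_min=0.4):
    """Illustrative exponentially changing weight (assumed form).

    Starts at w_max with a shallow slope and ends at w_min with a
    steeper slope as t approaches max_iter.
    """
    growth = (math.exp(t / max_iter) - 1.0) / (math.e - 1.0)  # 0 at t=0, 1 at t=max_iter
    return w_max - (w_max - w_min) * growth
```

With `max_iter = 100`, the drop per iteration near the end of the run is more than twice the drop at the start, which matches the behavior attributed to the curve in Figure 4.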

4.2. Simulated Annealing Algorithm

The simulated annealing algorithm (SA) was first proposed in 1953 by N Metropolis. S Kirkpatrick introduced the idea of annealing into the field of combinatorial optimization in 1983 [37]. The Metropolis acceptance criterion was integrated into the SA algorithm. The Metropolis criterion is used to describe the equilibrium set of atoms at a particular temperature and is able to accept higher energies with a certain probability [38].

The idea of the SA algorithm is as follows: start cooling from a high initial temperature, gradually lower the temperature, and search at each temperature. In each round of searching, the algorithm accepts a solution worse than the current one with probability p, until temperature equilibrium is reached. The acceptance probabilities used in this article are:

where f is the fitness function.

is the position of the new particle spawned near ; if is less than , the new solution a is accepted (probability is 1), and update the velocity and position of the particle; if is greater than , it means that deviates further from the global optimal value. At this point, the algorithm does not immediately discard the new solution but determines it based on the acceptance probability p.

In the case of a high initial temperature, the probability of an inferior solution being accepted is larger, and with a decreasing temperature, the probability of accepting an inferior solution gradually decreases.
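The acceptance rule can be sketched with the classical Metropolis form p = exp(−Δf / T) for a minimization problem. This is the textbook criterion and is assumed here to correspond to the acceptance probability used in the paper; the function names are illustrative.

```python
import math
import random


def acceptance_probability(f_current, f_new, temperature):
    """Metropolis acceptance probability for a minimization problem."""
    if f_new < f_current:
        return 1.0  # a better solution is always accepted
    return math.exp(-(f_new - f_current) / temperature)


def accept(f_current, f_new, temperature, rng=random):
    """Decide whether to move to the new solution."""
    return rng.random() < acceptance_probability(f_current, f_new, temperature)
```

For the same deterioration in fitness, a high temperature gives a probability close to 1 and a low temperature gives a probability close to 0, which is the annealing behavior described above.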

4.3. Hybrid Improved-Whale-Optimization–Simulated-Annealing Algorithm

In this paper, the simulated annealing mechanism is introduced while retaining the advantages of the WOA. In each update iteration, the Metropolis criterion is used to accept better solutions and to accept worse solutions with a certain probability, which allows the WOA to jump out of a local optimum.

The global optimal position obtained in the SA algorithm is used to replace the global optimal position in the WOA, and the position-update equation after replacement is as shown in Equations (17)–(19):

If the initial temperature is high enough, the high-quality solution can be obtained, but the running time will be too long. If is too small, it will affect the quality of the solution; therefore, one should choose a reasonable and cooling coefficient . The value of the is between 0.4 and 0.99 [39].

Referring to the work done by P J van Laarhoven [40] and Yang Dan et al. [39], the initial temperature in this paper is shown in Equation (20):

where is the fitness value corresponding to the global optimal position obtained by the IWOA-SA algorithm population initialization, and takes a value of approximately one, which is the initial acceptance rate of the new solution. The main loop of the IWOA-SA algorithm is shown in Algorithm 2.

As shown in Algorithm 2, the combination of the SA algorithm with the improved WOA can give the randomness of the WOA, making it not just a simple hillclimber. As we can see from Step 7, we first compare the current optimal solution with ( is a random unit in SA algorithm). When the current best solution is better than the , we do not just accept the solution. Instead, we calculate the probability p and compare it with a random number between 0 and 1 and accept a new value near (can see from the Step 11 to 14), and we take the new value into the WOA. By this method, we successfully add the randomness into the proposed algorithm; as a result, the proposed algorithm can jump out of the local optimum.

| Algorithm 2 IWOA-SA main loop. |

| Input: , , //space dimensionality; iteration number; population number Output: // global best position vector

|
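The interplay between the two components can be illustrated with a compact sketch: an SA step perturbs the guiding position and applies the Metropolis criterion, and the WOA update then moves every whale relative to that position. This is not a reproduction of Algorithm 2. The adaptive weight is left out, and the toy objective `sphere`, the perturbation scale, the initial temperature, the cooling coefficient of 0.9, and all function names are assumptions made for illustration.

```python
import math
import random


def sphere(x):
    """Toy objective used only for this illustration."""
    return sum(v * v for v in x)


def woa_sa_sketch(f, dim, n_whales=20, max_iter=100, lo=-5.0, hi=5.0,
                  cooling=0.9, seed=0):
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_whales)]
    guide = min(pop, key=f)               # position that steers the whales
    best, best_f = list(guide), f(guide)  # best-so-far, never replaced by worse
    temp = max(best_f, 1e-9)              # assumed initial temperature
    for t in range(max_iter):
        a = 2.0 - 2.0 * t / max_iter
        # SA step: perturb the guide and apply the Metropolis criterion.
        sigma = 0.05 * (hi - lo) * a
        cand = [min(hi, max(lo, g + rng.gauss(0.0, sigma))) for g in guide]
        delta = f(cand) - f(guide)
        if delta < 0 or rng.random() < math.exp(-delta / temp):
            guide = cand
        # WOA step: move every whale relative to the (possibly replaced) guide.
        new_pop = []
        for x in pop:
            A = 2.0 * a * rng.random() - a
            C = 2.0 * rng.random()
            if rng.random() < 0.5:
                tgt = guide if abs(A) < 1.0 else rng.choice(pop)
                x = [ti - A * abs(C * ti - xi) for xi, ti in zip(x, tgt)]
            else:
                l = rng.uniform(-1.0, 1.0)
                k = math.exp(l) * math.cos(2.0 * math.pi * l)
                x = [abs(gi - xi) * k + gi for xi, gi in zip(x, guide)]
            new_pop.append([min(hi, max(lo, v)) for v in x])
        pop = new_pop
        leader = min(pop, key=f)
        if f(leader) < f(guide):
            guide = leader
        if f(guide) < best_f:
            best, best_f = list(guide), f(guide)
        temp *= cooling                   # geometric cooling schedule
    return best, best_f
```

Keeping `best` separate from `guide` is a design choice of this sketch: the guide may temporarily move to a worse point so that the swarm can leave a local optimum, while the reported result is always the best position seen so far.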

4.4. Convergence Proof of the IWOA-SA Algorithm

The probability-measure method can be used to prove the global convergence of IWOA-SA. According to the global convergence criterion and theorem [41], to prove that the algorithm can converge into the global optimum, the algorithm needs to meet the following two conditions:

Theorem 1.

where f is fitness function, W is the algorithm, and x is a point of the subset S of the solution space , which can minimize the value of the function or produce an acceptable infimum of the function’s value on S. is the solution searched in the iterative search of algorithm W.

and if , then

Theorem 2.

where is the probability measure of the result of the kth iteration of algorithm W on the set A. The significance of this assumption is, for subset A in S, after infinite iterations of the algorithm, it is impossible to miss the solution space S of any Borel subset A; that is, the probability that an algorithm which satisfies the condition does not search for an approximate global optimum for an infinite number of consecutive iterations is zero.

For any Borel subset A in S, we have:

Corollary 1 (Global Search [41]).

Suppose that f is a measurable function, S is a measurable subset of , and Theorem 1 and Theorem 2 are satisfied. is a sequence generated by the algorithm. Then:

where R is the global optimal set, and is the probability that the result of the kth generation of the algorithm falls in R.

Proof of Theorem 1.

According to description of IWOA-SA, define D as:

where represents the position of whale individual i after the tth update after the second interpolation operation and is the location of the current global optimal solution. The SA annealing process shows that at the end stage of the algorithm, the probability of accepting a worse solution is very small; as a result, the value of the fitness function is monotonic and does not increase. In addition, it gradually converges to the infimum of the solution space. □

Proof of Theorem 2.

Suppose that the individual i of the IWOA-SA algorithm in discrete space in time t has , , B is state space, is the state of individual i at time t. The sequence of state is a discrete random variable in discrete space. We can see from the population update formula (15) of IWOA-SA, the current individual state is only related to the state of the previous moment and has no connection with the number of present iterations; therefore, sequence is a homogeneous Markov chain.

Assuming that the individual i of IWOA-SA falls into local optimal state in time t, the one-step transfer probability of population sequence is:

where is the optimal solution after SA replacement. Due to the parameter A in this paper not being zero, the IWOA-SA algorithm does not easily fall into local optima.

Set B as a Borel subset in solution space S. If individual i of IWOA-SA cannot be involved in disaggregation B at iteration time t, there will be:

As IWOA-SA is an absorbing Markov process, as a result, , so Equation (25) can be transformed into:

It is known from the one-step transfer probability of IWOA-SA that it cannot fall into a local optimum; as a result, individual i will reach the globally optimal solution with a certain probability in the iteration process, which is:

Equation (26) can be changed into:

As a result:

As , when the number of iterations tends to infinity, there will be:

Therefore, , which is .

This completes the proof. □

As a result, Corollary 1 implies that IWOA-SA converges globally.

5. Experimental Verification

To verify the feasibility of the method, six unconstrained optimization problems were solved by simulation, and the trajectory generated by the 3-3-5 mixed polynomial interpolation equation (Appendix A) was optimized by the proposed optimization algorithm. The IWOA-SA algorithm and the WOA, PSO, and GWO algorithms used for comparative analysis were programmed in MATLAB R2017b. The global optimal solution obtained by optimization was used to evaluate the effectiveness and stability of IWOA-SA, and the average (MEAN) and standard deviation (STD) of the optimal solution were used as the evaluation statistics. The formulas are shown in Equations (31) and (32):

where is the optimal solution obtained in the ith operation, and N is the number of runs. The smaller the values of the mean and the standard deviation are, the more reliable and stable the solution provided by the algorithm [42].
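The two evaluation statistics can be computed as in the sketch below. Whether the paper divides by N or by N − 1 in the standard deviation is not recoverable from the text, so the population form (division by N) is an assumption here, as is the function name.

```python
import math


def mean_and_std(best_values):
    """Mean and standard deviation of the best fitness over N runs."""
    n = len(best_values)
    mean = sum(best_values) / n
    # Population form (divide by N); use N - 1 instead for the sample form.
    std = math.sqrt(sum((v - mean) ** 2 for v in best_values) / n)
    return mean, std
```

For example, the eight values 2, 4, 4, 4, 5, 5, 7, 9 have a mean of 5 and a population standard deviation of 2.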

5.1. Validate the Algorithm by Testing Functions

Six classical unconstrained optimization functions include the unimodal function (-), fixed-dimensional peak function (-), and variable-dimensional peak function (-). The details of the test function are shown in Table 3. There is only one optimal solution in the unimodal function to evaluate the convergence speed and exploitation ability of the algorithm. However, there is a global optimal solution in the multipeak function, which contains several local optimal solutions to evaluate the exploration ability of the algorithm. Each algorithm ran 10 times, the number of populations was set to 100, and the number of iterations was set to 600. The inertial weight of PSO was set to 0.8, and the learning factor was 1.5. The subpopulation of NHWOA was set to 4.

Table 3.

Details of the test functions.

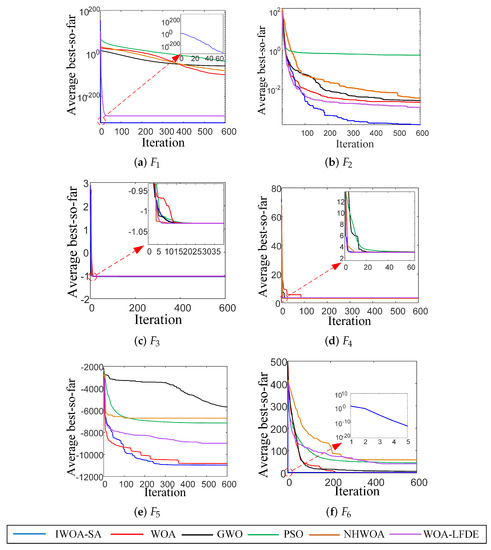

Table 4 shows that the IWOA-SA algorithm has advantages in global searching, can effectively avoid falling into a local optimum, and has better performance under the unimodal function (the mean value was the lowest and was the most stable one among six algorithms) and the variable-dimensional peak function (the mean value was lowest, about 33.6% lower than that of WOA-LFDE, and the stability was 38.2% higher than that of WOA; it was the most stable algorithm among six algorithms). The proposed algorithm has higher stability compared with WOA and GWO under fixed-dimensional peak functions. Our algorithm performed better in this experiment and demonstrated greater stability than the other algorithms. Therefore, the IWOA-SA effectively balances exploration and exploitation abilities.

Table 4.

Comparison of test-function results.

In Figure 5, the convergence curves of IWOA-SA, WOA, GWO, PSO, WOA-LFDE [43] and NHWOA [44] are compared. The “average” denotes the average of 10 runs. The WOA has advantages in local searching, but it easily falls into local optima. The GWO algorithm had good search performance for low-dimensional functions but poor search ability for high-dimensional functions. The optimization abilities of the PSO, WOA-LFDE, and NHWOA under the variable-dimensional peak function were poor, and they needed to be iterated more times to reach the optimal solution. Compared with the other five algorithms, the IWOA-SA algorithm converged quickly and could always obtain the best results among all six algorithms.

Figure 5.

Convergence curves of the IWOA-SA, WOA, GWO, and PSO algorithms. (a) F1, (b) F2, (c) F3, (d) F4, (e) F5, (f) F6.

5.2. IWOA-SA Solves the Problem of Time-Optimal Trajectory Planning

The trajectory planning of the quadruped robot mentioned above was carried out, and the interpolation points selected in the experiment are shown in Table 5. At the same time, considering the actual situation of the robot, the maximum angular velocity of the joint was set to 20°/s through a penalty function. For all algorithms, the number of populations was 100, the number of iterations was 200, and each algorithm ran 10 times.

Table 5.

Interpolation points selected in the experiment.

The comparison of the optimization results of IWOA-SA, WOA, GWO, PSO, WOA-LFDE, and NHWOA are shown in Table 6.

Table 6.

Comparison of experimental results of trajectory planning.

Table 6 shows that the average time taken to reach the optimal solution by IWOA-SA was the smallest (over 10 runs), which shows that the IWOA-SA algorithm has strong optimization ability and is more stable than the others.

To verify whether the time taken by IWOA-SA is significantly different from that of the other five algorithms, we used the Kruskal–Wallis test. The statistic can be tested using a chi-square distribution.

If there are tied values (knots) in the sample, that is, several observations that share the same rank, the correction factor C is:

Therefore, the expression for the sample statistic is:

For large samples, the larger n is, the more closely the statistic follows the chi-square distribution under the null hypothesis, so the statistic can be tested using the chi-square distribution. Hypotheses:

H0:

There was no significant difference in the running times obtained by the six algorithms;

H1:

There was a significant difference in the running times obtained by the six algorithms.

After bringing in the parameters of this paper for calculation, = 45.3268 and = 45.431.

According to the chi-square table, the critical value for the given degrees of freedom and a significance level of 0.05 was 12.833. Because both computed values exceed this critical value, the null hypothesis was rejected, and the six data groups were considered to be significantly different.
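The test statistic and its tie correction can be reproduced with a short routine. The sketch assumes the standard Kruskal–Wallis definitions: H = 12 / (N(N + 1)) · Σ Rᵢ² / nᵢ − 3(N + 1), C = 1 − Σ(t³ − t) / (N³ − N), and a corrected statistic H / C, where N is the total number of observations, Rᵢ and nᵢ are the rank sum and size of group i, and t is the size of each group of tied values. The function name and return layout are illustrative.

```python
from collections import Counter


def kruskal_wallis(groups):
    """Return (H, C, H_corrected) for a list of sample groups."""
    pooled = [v for g in groups for v in g]
    n_total = len(pooled)
    # Average rank for each distinct value (tied values share one rank).
    counts = Counter(pooled)
    rank_of, seen = {}, 0
    for value in sorted(counts):
        t = counts[value]
        rank_of[value] = seen + (t + 1) / 2.0
        seen += t
    rank_term = sum(sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups)
    h = 12.0 / (n_total * (n_total + 1)) * rank_term - 3.0 * (n_total + 1)
    c = 1.0 - sum(t ** 3 - t for t in counts.values()) / (n_total ** 3 - n_total)
    return h, c, h / c
```

The corrected value is then compared with the chi-square critical value for (number of groups − 1) degrees of freedom. Since C ≤ 1, the correction can only increase the statistic, which is consistent with the two values reported above.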

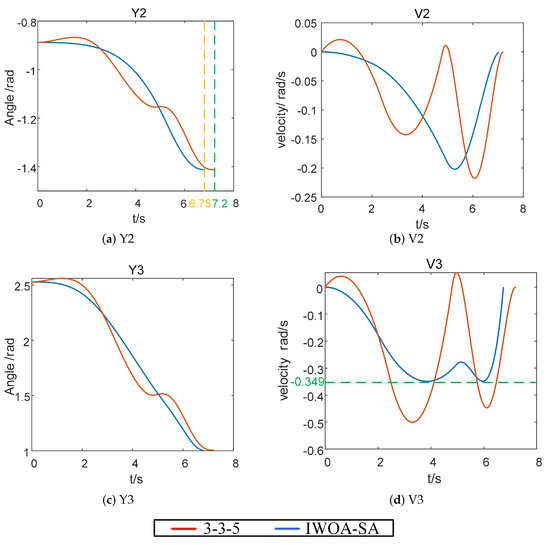

In Figure 6, the motion curves of each joint of the robot before and after optimization by the IWOA-SA algorithm are compared.

Figure 6.

Running curve of each joint after using the optimization algorithm. (a) Y2, (b) V2, (c) Y3, (d) V3.

Table 7 shows the joint running-time comparison before and after using the optimization algorithm.

Table 7.

Time comparison before and after using the optimization algorithm.

In Table 7 and Figure 6, we can see that: (1) The IWOA-SA algorithm ensures that the angular velocity of each joint is within the velocity limit ( rad/s). (2) The running time of each joint is reduced by about 6.25% compared with the 3-3-5 algorithm. As shown in Figure 6d, before using the optimization algorithm, the angular velocity of each joint of the robot easily exceeds the speed limit, and after using the optimization algorithm, the angular velocity of each joint is limited to the velocity range. Figure 7 shows the foot-end trajectory curve after using the IWOA-SA optimization algorithm.

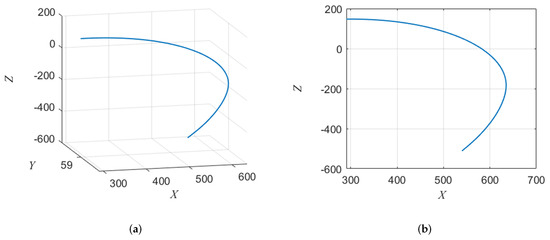

Figure 7.

Foot-end trajectory curve. (a) Foot-end trajectory curve, (b) Trajectory curve in two-dimensional plane.

Figure 7 illustrates that when simulating a downstairs process, a retraction can be observed at the end of the foot-end trajectory curve, which effectively reduces the landing impact upon touchdown.

6. Conclusions

In this paper, an IWOA-SA algorithm was proposed to achieve the optimal time movement of the quadruped robot. The proposed algorithm adopts the simulated annealing mechanism of the SA algorithm to “jump” out of the local optima of the WOA. Adaptive weights were also introduced to balance the exploration and exploitation capabilities of the algorithm. To solve the time-optimal trajectory planning problem under certain kinematic constraints, we introduced a penalty function to transform the constrained optimization problem into an unconstrained optimization problem. Using Markov chains of stochastic process theory, we proved that our algorithm converges to the global optimal value as the number of iterations approaches infinity. Simulation results demonstrated the effectiveness of our method and the correctness of our theoretical analysis. Compared to other mainstream algorithms, our IWOA-SA algorithm performs better, being on average 33.6% better than the WOA-LFDE and having 38.2% higher stability than the WOA.

Additionally, our algorithm always constrains the angular velocity of each joint within the range of kinematic constraints, reducing joint running time by 6.25%. Our method can be effectively applied to the robot trajectory planning field, and future research will focus on extending IWOA-SA to optimize other trajectory models and address the dynamical problems of quadruped robots, such as the shifting of the center of mass.

Author Contributions

Conceptualization, C.Z. and R.X.; methodology, R.X.; software, R.X.; validation, J.L.; formal analysis, J.H. and X.H.; writing—original draft preparation, R.X.; writing—review and editing, R.X.; project administration, C.Z.; funding acquisition, C.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (No. 62073264) and the Key Research and Development Project of Shaanxi Province (No. 2021ZDLGY01-01).

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare that they have no known competing financial interest or personal relationship that could have appeared to influence the work reported in this paper.

Abbreviations

The following abbreviations are used in this manuscript:

| distance along from to | |

| angle around the axis that rotates from to | |

| distance along from to | |

| angle around that rotates from to | |

| Statistic Quantity | |

| Sample Statistic | |

| C | correction factor |

| the Number of the i th Knot | |

| n | Number of Sample Groups |

| Number of Samples in Each Group | |

Appendix A

The theory of the 3-3-5 algorithm is shown in Equation (A1). represents the first trajectory of the 3-3-5 algorithm, and and represent the second and third trajectories, respectively.

At the same time, the following constraints are required for the angle, angular velocity, and angular acceleration at the interpolation points :

where, , , and are the time required for the first trajectories, second trajectories, and third trajectories, respectively.

The matrix expressions T and J of the 3-3-5 algorithm follow directly from Equations (A1) and (A2).

where the four angles correspond to the rotation angles of the ith joint at the starting point, the two interpolation points, and the end point, respectively.

The joint angles at the starting point and the end point are obtained from the inverse kinematics solution in Equations (1) through (3). The interpolation points are determined from the start and end points and from the requirements on the trajectory of the robot’s end.
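The appendix can be made concrete with a minimal Python sketch that computes the coefficients of a cubic-cubic-quintic trajectory for one joint. It is an illustration under stated assumptions rather than the paper's implementation: the joint starts and ends at rest with zero acceleration, each segment uses its own local time starting at 0, and the system is solved segment by segment instead of through the matrix form with T and J. The function names `solve_335`, `evaluate`, and `peak_velocity` are hypothetical.

```python
def solve_335(angles, durations):
    """Coefficients (lowest order first) of the cubic, cubic, and quintic segments.

    angles    -- joint angles at the start, two interpolation points, and end
    durations -- positive durations (t1, t2, t3) of the three segments
    Assumes zero velocity and acceleration at the start and end points.
    """
    q0, q1, q2, q3 = angles
    t1, t2, t3 = durations

    # Segment 1 (cubic): starts at rest at q0 and reaches q1 at local time t1.
    a = [q0, 0.0, 0.0, (q1 - q0) / t1**3]
    v1 = 3 * a[3] * t1**2      # velocity handed over to segment 2
    acc1 = 6 * a[3] * t1       # acceleration handed over to segment 2

    # Segment 2 (cubic): continuous in angle, velocity, acceleration; reaches q2 at t2.
    b = [q1, v1, acc1 / 2, 0.0]
    b[3] = (q2 - b[0] - b[1] * t2 - b[2] * t2**2) / t2**3
    v2 = b[1] + 2 * b[2] * t2 + 3 * b[3] * t2**2
    acc2 = 2 * b[2] + 6 * b[3] * t2

    # Segment 3 (quintic): continues from segment 2 and stops at q3
    # with zero velocity and zero acceleration.
    d = q3 - q2
    c = [
        q2,
        v2,
        acc2 / 2,
        (20 * d - 12 * v2 * t3 - 3 * acc2 * t3**2) / (2 * t3**3),
        (-30 * d + 16 * v2 * t3 + 3 * acc2 * t3**2) / (2 * t3**4),
        (12 * d - 6 * v2 * t3 - acc2 * t3**2) / (2 * t3**5),
    ]
    return a, b, c


def evaluate(coeffs, t, order=0):
    """Value of the polynomial, or of its derivative of the given order, at time t."""
    total = 0.0
    for k, ck in enumerate(coeffs):
        if k < order:
            continue
        factor = 1
        for j in range(order):
            factor *= k - j
        total += ck * factor * t ** (k - order)
    return total


def peak_velocity(segments, durations, samples=200):
    """Largest absolute angular velocity found on a uniform sampling grid."""
    peak = 0.0
    for coeffs, duration in zip(segments, durations):
        for n in range(samples + 1):
            t = duration * n / samples
            peak = max(peak, abs(evaluate(coeffs, t, order=1)))
    return peak


if __name__ == "__main__":
    angles = (0.0, 0.3, 0.6, 1.0)      # illustrative values in radians
    durations = (1.0, 0.8, 1.2)        # illustrative values in seconds
    segments = solve_335(angles, durations)
    print("peak angular velocity:", peak_velocity(segments, durations))
```

A time-optimization loop would shorten the durations and use a check such as `peak_velocity` to reject candidates that violate the joint velocity limit. Under these assumptions, scaling all three durations by a common factor scales the velocity profile by the inverse of that factor.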

References

- Li, X.; Zhou, H.; Feng, H.; Zhang, S.; Fu, Y. Design and Experiments of a Novel Hydraulic Wheel-Legged Robot (WLR). In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 3292–3297.

- Zeng, X.; Zhang, S.; Zhang, H.; Li, X.; Zhou, H.; Fu, Y. Leg Trajectory Planning for Quadruped Robots with High-Speed Trot Gait. Appl. Sci. 2019, 9, 1508.

- Hwangbo, J.; Lee, J.; Dosovitskiy, A.; Bellicoso, D.; Tsounis, V.; Koltun, V.; Hutter, M. Learning agile and dynamic motor skills for legged robots. Sci. Robot. 2019, 4, eaau5872.

- Kolter, J.Z.; Ng, A.Y. The Stanford LittleDog: A learning and rapid replanning approach to quadruped locomotion. Int. J. Robot. Res. 2011, 30, 150–174.

- Basile, F.; Caccavale, F.; Chiacchio, P.; Coppola, J.; Curatella, C. Task-oriented motion planning for multi-arm robotic systems. Robot. Comput.-Integr. Manuf. 2012, 28, 569–582.

- Chen, C.; He, Y.Q.; Bu, C.G.; Han, J.D. Feasible trajectory generation for autonomous vehicles based on quartic Bézier curve. Zidonghua Xuebao/Acta Autom. Sin. 2015, 41, 486–496.

- Wang, M.; Luo, J.; Walter, U. Trajectory planning of free-floating space robot using Particle Swarm Optimization (PSO). Acta Astronaut. 2015, 112, 77–88.

- Haddad, M.; Khalil, W.; Lehtihet, H.E. Trajectory Planning of Unicycle Mobile Robots with a Trapezoidal-Velocity Constraint. IEEE Trans. Robot. 2010, 26, 954–962.

- Dinçer, Ü.; Çevik, M. Improved trajectory planning of an industrial parallel mechanism by a composite polynomial consisting of Bézier curves and cubic polynomials. Mech. Mach. Theory 2019, 132, 248–263.

- Huang, Q.; Yokoi, K.; Kajita, S.; Kaneko, K.; Arai, H.; Koyachi, N.; Tanie, K. Planning walking patterns for a biped robot. IEEE Trans. Robot. Autom. 2001, 17, 280–289.

- Xiong, C.; Chen, D.; Lu, D.; Zeng, Z.; Lian, L. Path planning of multiple autonomous marine vehicles for adaptive sampling using Voronoi-based ant colony optimization. Robot. Auton. Syst. 2019, 115, 90–103.

- Srinivas, T.; Madhusudhan, A.K.K.; Manohar, L.; Stephen Pushpagiri, N.M.; Ramanathan, K.C.; Janardhanan, M.; Nielsen, I. Valkyrie—Design and Development of Gaits for Quadruped Robot Using Particle Swarm Optimization. Appl. Sci. 2021, 11, 7458.

- Seo, J.H.; Im, C.H.; Kwak, S.Y.; Lee, C.G.; Jung, H.K. An Improved Particle Swarm Optimization Algorithm Mimicking Territorial Dispute Between Groups for Multimodal Function Optimization Problems. IEEE Trans. Magn. 2008, 44, 1046–1049.

- Sharma, A.; Sharma, A.; Pandey, J.K.; Ram, M. Swarm Intelligence: Foundation, Principles, and Engineering Applications; CRC Press: Boca Raton, FL, USA, 2022.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Malyshev, D.; Cherkasov, V.; Rybak, L.; Diveev, A. Synthesis of Trajectory Planning Algorithms Using Evolutionary Optimization Algorithms. In Proceedings of the Advances in Optimization and Applications: 13th International Conference, OPTIMA 2022, Petrovac, Montenegro, 26–30 September 2022; Springer Nature: Cham, Switzerland, 2023; pp. 153–167.

- Husnain, G.; Anwar, S. An Intelligent Probabilistic Whale Optimization Algorithm (i-WOA) for Clustering in Vehicular Ad Hoc Networks. Int. J. Wirel. Inf. Netw. 2022, 29, 1–14.

- Nadimi-Shahraki, M.H.; Fatahi, A.; Zamani, H.; Mirjalili, S.; Oliva, D. Hybridizing of Whale and Moth-Flame Optimization Algorithms to Solve Diverse Scales of Optimal Power Flow Problem. Electronics 2022, 11, 831.

- Nadimi-Shahraki, M.H.; Fatahi, A.; Zamani, H.; Mirjalili, S.; Abualigah, L. An Improved Moth-Flame Optimization Algorithm with Adaptation Mechanism to Solve Numerical and Mechanical Engineering Problems. Entropy 2021, 23, 1637.

- Abualigah, L.; Al-Betar, M.A.; Mirjalili, S. Migration-Based Moth-Flame Optimization Algorithm. Processes 2021, 9, 2276.

- Zamani, H.; Nadimi-Shahraki, M.H.; Gandomi, A.H. Starling murmuration optimizer: A novel bio-inspired algorithm for global and engineering optimization. Comput. Methods Appl. Mech. Eng. 2022, 392, 114616.

- Sharma, A.; Sharma, A.; Chowdary, V.; Srivastava, A.; Joshi, P. Cuckoo Search Algorithm: A Review of Recent Variants and Engineering Applications. In Metaheuristic and Evolutionary Computation: Algorithms and Applications; Springer Nature: Singapore, 2021; pp. 177–194.

- Grenko, T.; Šegota, S.B.; Anđelić, N.; Lorencin, I.; Štifanić, D.; Štifanić, J.; Car, Z. On the Use of a Genetic Algorithm for Determining Ho–Cook Coefficients in Continuous Path Planning of Industrial Robotic Manipulators. Machines 2023, 11, 167.

- Nadimi-Shahraki, M.H.; Zamani, H.; Mirjalili, S. Enhanced whale optimization algorithm for medical feature selection: A COVID-19 case study. Comput. Biol. Med. 2022, 148, 105858.

- Zamani, H.; Nadimi-Shahraki, M.H.; Gandomi, A.H. QANA: Quantum-based avian navigation optimizer algorithm. Eng. Appl. Artif. Intell. 2021, 104, 104314.

- Zhang, H.; Wang, H.; Li, N.; Yu, Y.; Su, Z.; Liu, Y. Time-optimal memetic whale optimization algorithm for hypersonic vehicle reentry trajectory optimization with no-fly zones. Neural Comput. Appl. 2018, 32, 2735–2749.

- Lv, H.; Feng, Z.; Wang, X.; Zhou, W.; Chen, B. Structural damage identification based on hybrid whale annealing algorithm and sparse regularization. J. Vib. Shock 2021, 40, 85–91.

- Wang, T.; Xin, Z.J.; Miao, H.; Zhang, H.; Chen, Z.Y.; Du, Y. Optimal Trajectory Planning of Grinding Robot Based on Improved Whale Optimization Algorithm. Math. Probl. Eng. 2020, 2020, 1–8.

- El-Hosseini, M.A.; Haikal, A.Y.; Badawy, M.M.; Khashan, N. Biped robot stability based on an A-C parametric Whale Optimization Algorithm. J. Comput. Sci. 2019, 31, 17–32.

- Locatelli, M. Convergence properties of simulated annealing for continuous global optimization. J. Appl. Probab. 1996, 33, 1127–1140.

- Zhao, J.; Wang, H.; Liu, W.; Zhang, H. A learning-based multiscale modelling approach to real-time serial manipulator kinematics simulation. Neurocomputing 2020, 390, 280–293.

- Zheng, C.; Su, Y.; Müller, P.C. Simple online smooth trajectory generations for industrial systems. Mechatronics 2009, 19, 571–576.

- Xu, R.; Tian, J.; Zhai, X.; Li, J.; Zou, J. Research on Improved Hybrid Polynomial Interpolation Algorithm for Rail Inspection Robot. In Proceedings of the 2021 5th International Conference on Electronic Information Technology and Computer Engineering, Xiamen, China, 22–24 October 2021; Association for Computing Machinery: New York, NY, USA, 2022; pp. 1207–1213.

- Zheng, K.; Hu, Y.; Wu, B. Trajectory planning of multi-degree-of-freedom robot with coupling effect. J. Mech. Sci. Technol. 2019, 33, 413–421.

- Si, C.Y.; Lan, T.; Hu, J.J.; Wang, L.; Wu, Q.D. Penalty parameter of the penalty function method. Control. Decis. 2014, 29, 1707–1710.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Javidrad, F.; Nazari, M.H. A new hybrid particle swarm and simulated annealing stochastic optimization method. Appl. Soft Comput. 2017, 60, 634–654.

- Borkar, V.S. Equation of State Calculations by Fast Computing Machines. Resonance 2022, 27, 1263–1269.

- Yang, D.; Lu, T.; Guo, W.X.; Wang, X. MIT Image Reconstruction Method Based on Simulated Annealing Particle Swarm Algorithm. J. Northeast. Univ. 2021, 42, 531–537.

- Laarhoven, P.J.V.; Aarts, E.H.L. Simulated Annealing: Theory and Applications; Springer: Berlin/Heidelberg, Germany, 1987.

- Solis, F.J.; Wets, R.J.B. Minimization by Random Search Techniques. Math. Oper. Res. 1981, 6, 19–30.

- Zhao, W.; Wang, L.; Mirjalili, S.M. Artificial hummingbird algorithm: A new bio-inspired optimizer with its engineering applications. Comput. Methods Appl. Mech. Eng. 2022, 388, 114194.

- Liu, M.; Yao, X.; Li, Y. Hybrid whale optimization algorithm enhanced with Lévy flight and differential evolution for job shop scheduling problems. Appl. Soft Comput. 2020, 87, 105954.

- Lin, X.; Yu, X.; Li, W. A heuristic whale optimization algorithm with niching strategy for global multi-dimensional engineering optimization. Comput. Ind. Eng. 2022, 171, 108361.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).