Abstract

Many techniques exist for faking videos by altering a face so that it looks like another person. Such fake videos have caused a number of information security crises. Many deep learning-based detection methods have been developed against these forgery methods. However, these detection methods require a large amount of training data and thus cannot yield detectors quickly when new forgery methods emerge. In addition, traditional forgery detection refers to a classifier that labels an input image as real or fake. If the detector can also output a prediction of the fake area, i.e., a segmentation version of forgery detection, it will be a great help for forensic work. Thus, in this paper, we propose a GAN-based deep learning approach that detects forged regions using a smaller number of training samples. The generator part of the proposed architecture synthesizes a predicted segmentation that indicates the fakeness of each pixel. To solve the classification problem, a threshold on the percentage of fake pixels is used to decide whether the input image is fake. To detect fake videos, frames are extracted from the video and each frame is classified as real or fake. If the percentage of fake frames is higher than a given threshold, the video is classified as fake. The experimental results show that our method achieves better classification and segmentation performance than the methods reported in other papers.

1. Introduction

The creation of fake videos and images using digital manipulation has become a serious social problem. In recent years, with the vigorous development of deep learning, models with powerful learning capabilities such as CNN and GAN are becoming increasingly accessible. Due to the development of automatic and easy-to-obtain deep learning models and high-speed GPUs, it is easy to use deep learning methods to forge fake videos. For example, in the literature, a technique called Deepfakes [1] uses deep learning technology to replace (swap) the faces in videos with other faces. Because the face is an important feature of one’s personality, the ability to replace the identity of a person in a video with another person brings many unexpected applications and information security issues. This type of facial manipulation technique has caused serious social issues when combined with voice synthesis technology, which can confuse identity recognition [2]. For example, the researchers fabricated a video using President Obama’s voice, wherein he was shown delivering a speech that he had never actually spoken [3].

Several automatic methods for detecting facial manipulation in images and videos have been proposed [4,5,6,7,8,9,10,11]. However, the major problem of these methods is that the detectors are trained on fake images produced by a well-known manipulation method, and they are good at detecting only images produced by that same method. When such a detector is applied to images generated by a new forgery technique, its performance drops significantly. For example, the detection method proposed by Nguyen et al. [10], when trained and tested on the Face2Face dataset of FaceForensics++, achieves an accuracy of 92.77%. However, the accuracy drops greatly to 52.32% when the same trained model is used to detect the Deepfakes dataset of FaceForensics++; see Tables 3 and 5 in [10]. Since a forgery method can be improved to evade known detection methods, it is generally believed that it is difficult to design a forgery detection system that is good at detecting any new forgery method. Thus, in this paper, we aim to find a methodology that detects the fakeness of images produced by a new forgery method before a large number of images can be collected to build a large training set for retraining the model. That is, the proposed detection methodology uses a small training set to identify a newly emerging forgery method. A similar situation arose in the confrontation over secret communications during World War II. If the Germans changed the wiring of the Enigma encryption machine, Britain would need to re-collect a large amount of ciphertext encrypted with the new code, together with the corresponding plaintext, and British intelligence work would be greatly slowed down. Collecting a large amount of ciphertext encrypted by the new method and the corresponding plaintext is similar to training a forgery detector: it takes a long time to collect a large number of fake images produced by the new forgery method and the corresponding original images.
To be more precise, in this paper, we propose a GAN-based neural network for the classification and segmentation of manipulated facial images with few training images. To achieve this few-shot learning, we use a small set of fake training images to fine-tune a model that has been trained on images from different domains. Traditional machine learning approaches require large amounts of training data to train models effectively. In contrast, few-shot learning methods can learn to recognize fake faces using only a small amount of training data. The idea behind few-shot learning is to leverage prior knowledge learned from other related forgery detection tasks to aid in learning to detect a specific fake face method. This is performed by training the model on a diverse set of forgery detection tasks, which allows it to learn a generalizable representation that can be adapted to new fake face detection tasks with few new training samples.

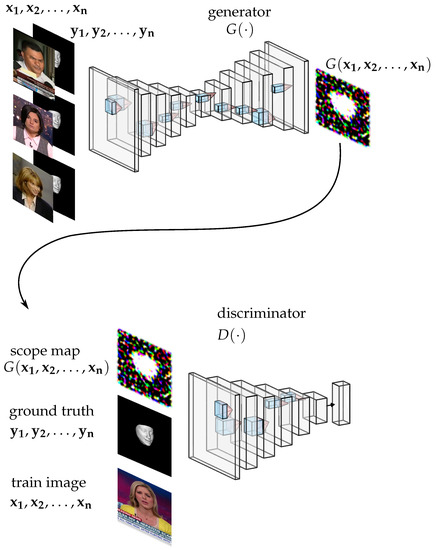

The proposed method uses a generator network to generate a score map of predicted probabilities of fakeness for each pixel of an input image. A discriminator network is then used in the training stage to determine the similarity between the score map and the ground truth, and the generator’s parameters are adjusted accordingly to produce a more realistic segmentation. In the inference stage, the generator produces a score map for the input image, which is used to identify manipulated pixels. See Figure 1 for the architecture of the proposed method.

Figure 1.

The architecture of the proposed method. The proposed method generates a score map using a generator network to predict fakeness probability for each pixel of the input image, and a discriminator network is used in the training stage to adjust the generator’s parameters to produce a realistic segmentation. The score map is used in the inference stage to determine manipulated pixels in the given image.

One potential application scenario involves the discovery of numerous images on social media platforms that are difficult to classify as real or fake. Due to limited human resources, it is impossible for authentication personnel to manually inspect each image to determine its authenticity. Using detectors trained on previously collected fake images proves futile when dealing with these new images. However, by obtaining, say, 100 new fake images and their original counterparts through certain sources, our proposed method can be used to train a detector that is capable of detecting the new method of forgery. This detector can then be used to identify possible forged areas, which can be carefully examined by authentication personnel.

The primary contributions of this paper can be summarized as follows.

1. The significance of this work lies in addressing the limitations of traditional forgery detection methods that require a large amount of training data and are slow to develop detectors for new forgery methods. Instead, the proposed method detects forged regions using fewer training samples.

2. Traditional forgery detection methods are limited in that they can only classify an image as either real or fake. In contrast, the proposed approach is designed to detect forged regions and can provide a segmentation version of forgery detection, which is beneficial for forensic work. The generator component of the proposed architecture synthesizes a predicted segmentation, indicating the fakeness of each pixel.

3. The proposed method shows that the GAN architecture outperforms other methods in both classification and segmentation, particularly against unseen forgery methods.

2. Related Work

Numerous methods for falsifying faces have been described in the literature. A facial re-enactment system called Face2Face was proposed by Thies et al. [12]; it constructs 3D models from source video streams, and the constructed 3D models can be used to render target videos with altered facial movements. A computer graphics-based approach called FaceSwap [13] first detects the facial landmarks of source faces and maps these landmarks to a 3D template model. The model is then projected onto the target image using the textures of the input image. Deepfakes [1] uses two autoencoders with a shared encoder to swap the faces in images. The method first crops the faces from the source images. Then, it creates fake images by applying the trained encoder and decoder of the source faces to the target faces.

The CNN-based forgery detection has been proposed in recent literature [6,7,9]. Rössler et al. [9] proposed a CNN-based detection method fitted to facial manipulations. They replace the final fully connected layer of XceptionNet with two outputs to transfer XceptionNet to solve the forgery detection as a binary classification problem. In addition to treating forgery detection as a classification problem, Nguyen et al. [10] also treated forgery detection as a segmentation problem, i.e., the detector indicates whether each pixel of a given image has been modified. They used autoencoders with a Y-shaped decoder to detect and segment manipulated facial images. Their method utilized information sharing among the classification and segmentation. Unlike Nguyen’s method, which uses the Y-shaped decoder, our proposed method uses a GAN-based model to produce the segmentation images and analyses the result of segmentation to classify whether the input is a fake image.

There are two approaches for detecting fake videos. One is to extract the frames of the video and decide, for each individual frame, whether it is fake. Then, if the percentage of fake frames is higher than a certain threshold, the video is classified as fake. The other approach is to detect correlations between frames in the video. For example, discontinuity of face poses between frames, chroma-key compositions, duplicated frames, copy–move manipulations, and dropped frames are studied to detect fake videos [14,15,16]. Our proposed method follows the first approach: it uses the percentage of fake frames to decide the fakeness of a video. That is, the proposed fake image detection method is used to decide the fakeness of individual frames and, from them, of videos.
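The frame-based decision rule described above can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `classify_video` and the default threshold of 0.5 are assumptions for the example, not values taken from this paper.

```python
def classify_video(frame_is_fake, frame_threshold=0.5):
    """Classify a video from per-frame decisions.

    frame_is_fake: list of booleans, one per extracted frame
                   (True means the frame was judged fake).
    frame_threshold: hypothetical fraction of fake frames above which
                     the whole video is called fake (assumed value).
    """
    if not frame_is_fake:
        raise ValueError("at least one frame is required")
    # Fraction of frames that the image detector flagged as fake.
    fake_fraction = sum(1 for flag in frame_is_fake if flag) / len(frame_is_fake)
    # The video is fake only if the fraction is strictly above the threshold.
    return fake_fraction > frame_threshold


if __name__ == "__main__":
    print(classify_video([True, True, False, True]))
```

The per-frame flags would come from the image-level detector; any frame sampling strategy (every frame, every n-th frame) is left open here.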

Another approach to dealing with images forged by unseen forgery methods is to enhance the generality of detection models. Chen et al. [17] used the generator part of a GAN to synthesize forged images dynamically. Their method focused on synthesizing forgery images from a large pool of configurations, so that the discriminator part, trained on these highly diverse forgery images, generalizes for detection. However, a new unseen forgery method may use a synthesis strategy that the generator lacks, which makes generalization difficult [18]. To overcome this difficulty, we focus on efficiently learning the features of unseen forgery methods from a few training images and exploiting these features to identify forged images.

Currently, to the best of our knowledge, the Y-shaped decoder proposed by Nguyen et al. [10] is the work closest to our approach. They also address the segmentation version of the forgery detection problem, but with a different architecture. The comparison in this paper shows that the proposed GAN-based method performs better than Nguyen’s Y-shaped CNN approach. We do not claim that a GAN-based architecture consistently outperforms a Y-shaped CNN in every scenario, but for the problem we address, the proposed GAN-based approach is superior. Furthermore, some recent studies [17,19] have attempted to create forgery detection models with a universal capability to detect forged images. However, these studies only judge whether the input image is forged, without indicating the specific forged regions, and they do not use only a few training samples to detect the forged areas of images produced by unseen forgery techniques.

3. The Proposed Scheme

A fake image is an image

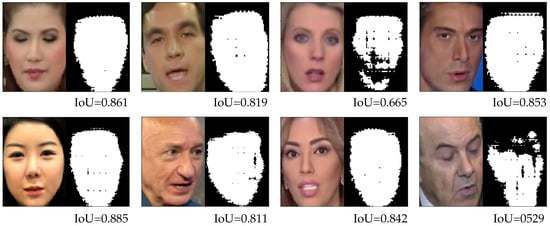

Intuitively, is modified from an original image , where and , w is the width and h is the height of the image, such that some pixels () of are kept the same as , but some pixels () are modified by . The coordinates of the modified pixels are collected in set . The fake image detection problem is that given an image , to decide if there exist a set of modified coordinates such that if , and if , . In this paper, we consider a special case of the problem that is the subset of the human face area. Rather than just deciding whether a given image is a fake image, we also segment the forged area from the given image i.e., to find from a given fake image. The segmentation result, also called a mask image, can offer more reasonable information in forensics. For example, if the predicted segmentation of a given image is a rectangle, it is highly possible that the alternation method is a specific method called Deepfakes. See Figure 2 which illustrate the relation between the shape of predicted segmentation and the image forgery method. Formally, the segmentation version of the fake face detection problem is that given , there exist a such that , to find the forged area .
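To make the definition concrete, the following sketch computes the set of modified coordinates from an original image and its forged counterpart, and converts that set into a binary mask image. It is a toy illustration under stated assumptions: images are plain nested lists of RGB tuples, and the helper names `modified_coordinates` and `coordinates_to_mask` are hypothetical.

```python
def modified_coordinates(original, fake):
    """Return the set of (row, col) positions where the two images differ.

    Both images are nested lists of pixels (e.g. RGB tuples) with the
    same height and width; this representation is an assumption made
    for the example.
    """
    if len(original) != len(fake) or any(
        len(row_o) != len(row_f) for row_o, row_f in zip(original, fake)
    ):
        raise ValueError("images must have the same size")
    return {
        (r, c)
        for r, (row_o, row_f) in enumerate(zip(original, fake))
        for c, (pix_o, pix_f) in enumerate(zip(row_o, row_f))
        if pix_o != pix_f
    }


def coordinates_to_mask(coords, height, width):
    """Build a binary mask: 1 at modified positions, 0 elsewhere."""
    return [[1 if (r, c) in coords else 0 for c in range(width)]
            for r in range(height)]
```

A real image corresponds to an empty coordinate set, so its mask is entirely zero (a black image).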

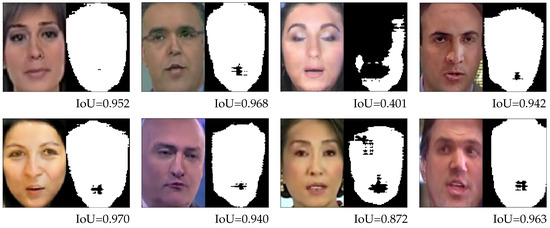

Figure 2.

Randomly selected images from the result of detecting the fake images by the model trained with the Deepfakes dataset. The left side of every pair of sub-images is the fake image and the right side of every pair of sub-images is the prediction result.

Given an image, the proposed method first uses a network, called a generator, to generate a predicted probability of fakeness for each pixel of the input image. In this paper, the collection of these predicted probabilities is called a score map, and it is used to generate the segmentation result in the inference stage. In the training stage, another network, called a discriminator, is used to measure the similarity between the score map and the ground truth. This similarity is then used to adjust the parameters of the generator so that it produces a more realistic segmentation. After training, in the inference stage, the trained generator receives the input image and produces the score map, which is used to decide which pixels of the given image are manipulated. Finally, a threshold on the percentage of fake pixels is applied to determine whether the input image is fake. Specifically, the fakeness of each pixel is decided first, and if the number of identified fake pixels exceeds a pre-defined threshold percentage of the total number of pixels in the image, the image is classified as fake.
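The inference-stage decision can be illustrated with a short sketch. It assumes the score map is a nested list of per-pixel fakeness probabilities in the range 0–1; the per-pixel cut-off of 0.5 and the fake-pixel fraction of 0.01 are placeholder values chosen for the example, not thresholds reported in this paper.

```python
def segment(score_map, pixel_threshold=0.5):
    """Turn a score map into a binary mask (1 = pixel judged fake).

    pixel_threshold is an assumed cut-off on the per-pixel probability.
    """
    return [[1 if score > pixel_threshold else 0 for score in row]
            for row in score_map]


def classify_image(score_map, pixel_threshold=0.5, fake_fraction_threshold=0.01):
    """Classify an image as fake when enough of its pixels are judged fake.

    fake_fraction_threshold is a hypothetical value for the pre-defined
    percentage of fake pixels mentioned in the text.
    """
    mask = segment(score_map, pixel_threshold)
    total_pixels = sum(len(row) for row in mask)
    if total_pixels == 0:
        raise ValueError("score map is empty")
    fake_pixels = sum(sum(row) for row in mask)
    return fake_pixels / total_pixels > fake_fraction_threshold
```

Deciding each pixel first and only then classifying the image means that the same score map yields both the segmentation and the classification result.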

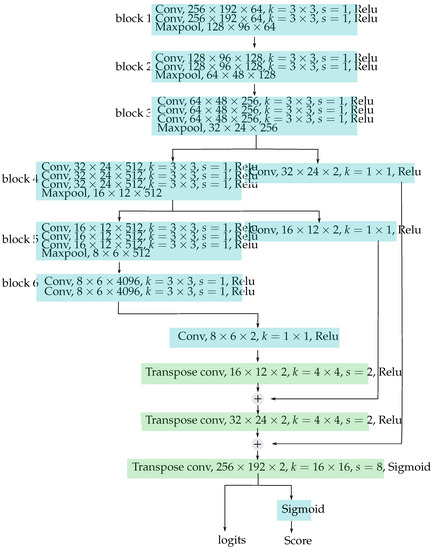

Now, we are ready to describe the architecture of the proposed method in detail. The input image $I$ is sent to the generator $G$, and the predicted score map $G(I)$, also called a mask image or predicted mask in this paper, is produced. In the training stage, gradient descent optimizes the model parameters so that the similarity between the predicted mask and the ground truth mask is maximized. The architecture of the generator is illustrated in Figure 3. The input images are first resized to a fixed size, and the value of each pixel is normalized to the range 0–1 for each of the R, G, and B channels by a standard image processing procedure. The normalized images are then fed to the generator $G$, which consists of a sequence of convolutional blocks and transposed convolutional blocks. See Figure 3 for the detailed structure of the generator. The first two layers of block 1 are convolutional layers with 64 kernels each and a stride of 1. Max-pooling layers are used to downsample the output of these convolutional layers, and the resulting tensors are passed to the following convolutional blocks. Blocks 1–6 have a similar structure but differ in input size and in the number of convolutional layers. The intuitive purpose of convolutional blocks 1–6 is to encode the features of the face area, and these encoded vectors are used to deduce the predicted mask area in the subsequent transposed convolutional layers. Because we need to extract both the features of the fake image and the locations of fake pixels, two bypass paths are added at blocks 3 and 4 to pass high-resolution location information to the transposed convolutional layers. The transposed convolutional layers that follow are used to construct the predicted fake mask. The first transposed convolutional layer has a stride of 2. The outputs of the first and second transposed convolutional layers are concatenated with the outputs of blocks 4 and 5, respectively. Then, the sigmoid activation function, also called the logistic function, is used to transform the output to the range 0–1 as the output of the generator $G$.

Figure 3.

The architecture of the generator. The network consists of a sequence of convolutional blocks followed by transposed convolutional layers.

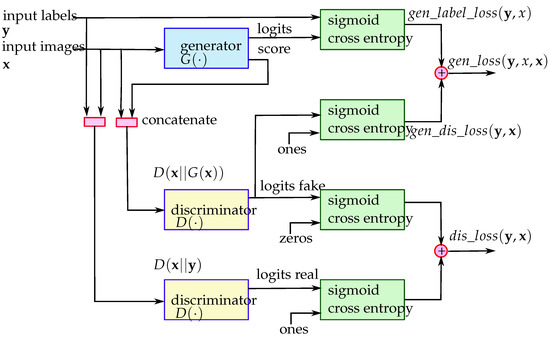

Figure 4.

The flow of data and loss functions between the generator and discriminator.

The discriminator accepts the score map output by the generator and estimates the similarity between the ground truth and the output of the generator. The input image label, i.e., the ground truth, is concatenated with the predicted image and sent to the discriminator to judge the performance of the generator. See the output of the upper discriminator in Figure 4 for this data path. The term logits, used in Figure 4 and the following paragraphs, refers to the vector of non-normalized predictions generated by the generator and the discriminator. The difference between the generated masks and the input labels is counted in the loss function of the generator. See the upper output of the generator in Figure 4 for this data path. In this paper, element-wise addition and subtraction are adopted for vector operations. When a scalar operation is performed on a vector, the scalar is applied to each element of the vector, which is a commonly used notation in the field of deep learning. The generator loss is composed of the generator label loss and the generator-discriminated loss. The generator label loss is the cross-entropy loss between the score maps and the input image labels. Let $x$ be the output logits of the generator and $y$ be the labels (masks). The generator label loss is defined as

$$L_{\mathrm{label}}(x, y) = -y \log \sigma(x) - (1 - y) \log\bigl(1 - \sigma(x)\bigr),$$

where the activation function $\sigma$ is defined as $\sigma(x) = 1 / (1 + e^{-x})$. Similarly, the generator-discriminated loss is the sigmoid cross-entropy loss between an array of ones and the output logits of the discriminator, denoted as logit fakes, for discriminating the predictions of the generator. Let $D(G(I) \oplus y)$ be the output logits of the discriminator when its input is the concatenation of the output score map $G(I)$ of the generator $G$ for input image $I$ and the input label $y$, where $\oplus$ denotes the concatenation operator. The generator-discriminated loss is defined as

$$L_{\mathrm{GD}} = -\log \sigma\bigl(D(G(I) \oplus y)\bigr).$$

Then the generator loss is defined as

$$L_{G} = \lambda_1 L_{\mathrm{GD}} + \lambda_2 L_{\mathrm{label}},$$

where $\lambda_1$ and $\lambda_2$ are used to adjust the weight of the generator-discriminated loss and the generator label loss, respectively.
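As a rough illustration of how the two generator loss terms combine, the sketch below computes a sigmoid cross-entropy on flat lists of logits in plain Python. Several points are assumptions made for the example and not details confirmed by this paper: the losses are averaged over elements, the weights `w_disc` and `w_label` default to 1.0, and the numerically stable rewriting of the cross-entropy is the standard one rather than the authors' implementation.

```python
import math


def sigmoid_cross_entropy(logit, label):
    """Stable form of -label*log(s) - (1-label)*log(1-s), s = sigmoid(logit)."""
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


def mean_sigmoid_cross_entropy(logits, labels):
    """Average the element-wise loss over all entries (assumed reduction)."""
    if not logits or len(logits) != len(labels):
        raise ValueError("logits and labels must be non-empty and equal length")
    return sum(sigmoid_cross_entropy(x, y) for x, y in zip(logits, labels)) / len(logits)


def generator_loss(gen_logits, mask_labels, disc_logits_fake,
                   w_disc=1.0, w_label=1.0):
    """Weighted sum of the generator-discriminated loss and the label loss.

    gen_logits:       generator output logits, flattened
    mask_labels:      ground truth mask values (0 or 1), flattened
    disc_logits_fake: discriminator logits for the generator's prediction
    w_disc, w_label:  hypothetical weights for the two terms
    """
    label_loss = mean_sigmoid_cross_entropy(gen_logits, mask_labels)
    # The generator wants the discriminator to output "real", i.e. all ones.
    ones = [1.0] * len(disc_logits_fake)
    disc_loss = mean_sigmoid_cross_entropy(disc_logits_fake, ones)
    return w_disc * disc_loss + w_label * label_loss
```

In a TensorFlow implementation the same quantities would normally be computed on tensors; the scalar version here is only meant to show the arithmetic.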

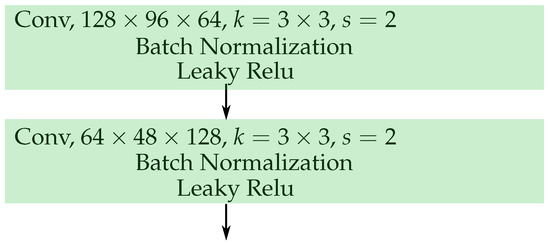

Figure 5.

The architecture of the discriminator.

The discriminator is composed of two convolutional layers; see Figure 5 for its structure. The discriminator loss is composed of the loss of discriminated real labels and the loss of discriminated predicted labels of the generator. That is,

$$L_{D} = L_{D}^{\mathrm{real}} + L_{D}^{\mathrm{pred}},$$

where $L_{D}^{\mathrm{real}}$ is the loss of discriminated real labels and $L_{D}^{\mathrm{pred}}$ is the loss of discriminated predicted labels.

4. Experiment and Comparison

4.1. Experimental Design and Data Collection

To evaluate the proposed method, the FaceForensics++ dataset [9] is used to demonstrate the ability of our fake face detection method. FaceForensics++ contains 1000 videos, which the authors of the dataset downloaded from YouTube. Figure 3 of [9] shows that there are slightly more female than male subjects in the videos. Every video is compressed to two different quality levels: C23 (constant rate quantization parameter equal to 23) and C0 (constant rate quantization parameter equal to 0). Each video is manipulated by three manipulation methods: Deepfakes [1], Face2Face [12], and FaceSwap [13]. In total, 1.5 million frames are extracted from the manipulated videos. To compare our experiments with the results reported in [9,10], we use the same partition of the training and testing sets as in the experiments in [9,10]. That is, videos 0–719 are used as the training set and videos 860–999 are used as the testing set. FaceForensics++ includes ground truth masks that indicate whether each pixel has been altered, and we use these masks to train our model. All experiments in this paper are conducted on a personal computer equipped with an Intel i7-6850K CPU running at 3.60 GHz and an Nvidia 2080 Ti GPU. The algorithms for training and inference are implemented in the Python programming language with the TensorFlow library. During model training and fine-tuning, the batch size is set to 10 and the learning rate to 0.0001. The optimizer used for training is Adam, and training terminates after 400 epochs. If training runs for more than 1000 epochs, overfitting appears. The web-based inference of the proposed method is available at https://ai.nptu.edu.tw/fsg (accessed on 1 February 2023).

Interested readers can utilize the proposed method through a web browser on a personal computer.

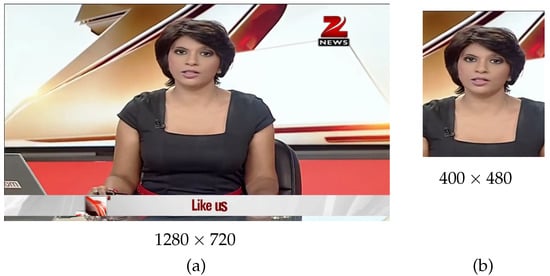

Since it is possible that the difference of the feature pattern contained in the background and face area can help the detection of a fake face, we evaluate the effect of different size background areas by setting two versions, fullsize and crop, for the training and testing sets. The size of images in the crop version is and the size of images in the fullsize version is . The crop version is formed by cropping the area centred on the face from each image in fullsize. The cropped areas are decided from the locations of the fake mask in the ground truth images provided by FaceForensics++. See Figure 6 for an illustration of the relation between the crop and fullsize images.

Figure 6.

A randomly selected image from the Face2Face part of FaceForensics++. (a) The fullsize image of size . (b) The crop image of size cropped from the full size image.

In this paper, the performance on the classification problem is evaluated by accuracy and the area under the receiver operating characteristic curve (AUC). Accuracy is widely used to measure the performance of diagnostic methods. For example, accuracy is used to evaluate the potential of deep learning methods in thyroid cancer diagnosis [20]. However, accuracy may not be an appropriate metric for certain types of datasets or tasks, especially when the classes are imbalanced, e.g., when the set of real images has significantly fewer samples than the set of fake images. In such cases, a model that always predicts ‘fake’ would achieve high accuracy. However, our test set is balanced, containing an equal number of real and fake images, so we can safely use accuracy. The performance on the segmentation problem is evaluated by the intersection over union (IoU) [21]. Two evaluation metrics, IoU and Dice, are commonly used to compare the ground truth and predicted shapes. For instance, in [22], the Dice coefficient was utilized to quantify predicted brain tumour segmentation. The IoU is defined as the area of the intersection of two objects divided by the area of their union. The Dice coefficient is defined as twice the area of the intersection of two objects divided by the sum of the areas of the two objects. From the formula for the Dice coefficient, it can be observed that a high value is obtained when there is a balance between the true positive rate (TPR) and the positive predictive value (PPV). Since the medical field emphasizes the balance between TPR and PPV, the Dice coefficient is often used to assess performance in medical segmentation studies. However, in our work, the ground truth images are generated during the forgery stage and clearly delineate the forgery area. Thus, we do not require the aforementioned properties of the Dice coefficient. Another reason for using IoU in forgery detection research is that its definition is more intuitive and easier to understand in the context of segmenting the forgery area.
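The link between the Dice coefficient, TPR, and PPV can be written out explicitly. The short derivation below uses the standard per-pixel counts of true positives (TP), false positives (FP), and false negatives (FN); it is a textbook identity included for illustration, not a result specific to this paper.

$$
\mathrm{PPV} = \frac{TP}{TP + FP}, \qquad
\mathrm{TPR} = \frac{TP}{TP + FN}, \qquad
\mathrm{Dice} = \frac{2\,TP}{2\,TP + FP + FN}
= \frac{2 \cdot \mathrm{PPV} \cdot \mathrm{TPR}}{\mathrm{PPV} + \mathrm{TPR}}.
$$

The Dice coefficient is therefore the harmonic mean of PPV and TPR, which is large only when both are large. With $\mathrm{IoU} = TP / (TP + FP + FN)$, the two metrics are related monotonically by

$$
\mathrm{Dice} = \frac{2\,\mathrm{IoU}}{1 + \mathrm{IoU}},
$$

so ranking methods by IoU or by Dice on a single image gives the same order.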

The accuracy is defined as

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

where TP denotes true positives, TN denotes true negatives, FN denotes false negatives, and FP denotes false positives. Here, TP, TN, FN, and FP are calculated from the fake image classification result. A receiver operating characteristic (ROC) curve plots the true positive rate against the false positive rate at various thresholds. The area under the receiver operating characteristic curve (AUC) is the area under the ROC curve.
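Both classification metrics can be computed with a few lines of Python. The AUC here is obtained through its well-known rank interpretation (the probability that a randomly chosen fake image receives a higher score than a randomly chosen real image, with ties counted as one half), which equals the area under the ROC curve. Using the per-image fake-pixel percentage as the score is an assumption made for this example.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of correctly classified images."""
    return (tp + tn) / (tp + tn + fp + fn)


def roc_auc(scores, labels):
    """Area under the ROC curve via pairwise comparison of scores.

    scores: one score per image, higher means "more likely fake"
    labels: 1 for fake images, 0 for real images
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    # Count positive/negative pairs ranked correctly; ties count one half.
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))
```

The pairwise form is quadratic in the number of images, which is fine for a sketch; a sorting-based computation would be preferable for large test sets.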

To evaluate the performance of solving the segmentation problem, the metric pixel-wise accuracy is used in some research [10]. However, when the background area is larger than the target object, the term TN in the accuracy result from Formula (1) dominates the metric value. Since annotations of the fake area focus on the fake face, the pixel-wise accuracy is not suitable for evaluating the performance of finding the fake area. Thus, in this paper, the segmentation of the fake area is evaluated by intersection over union (IoU) (also called the Jaccard similarity coefficient) [21]. The IoU is defined as

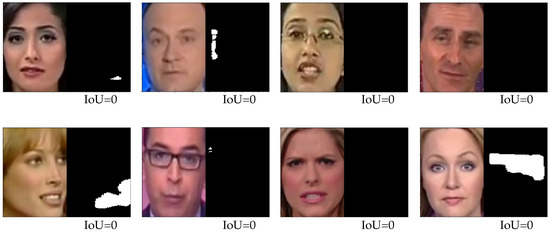

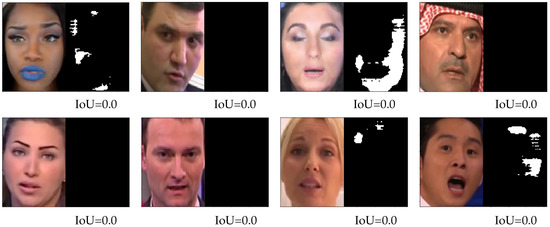

where TP, TN, FN, and FP are calculated in the per pixel classification result and the is a small value used to avoid division by zero. The problem of the IoU metric described by Equation (2) is that when we send a real image into the proposed detector, the detector outputs a black image which is exactly the same as the ground truth, but the IoU of this perfect output black image and ground truth is 0. This situation can easily happen when we are testing a real image. If the input is a real image and the prediction of the proposed method is a small group of pixels, the IoU metric of the result and ground truth is 0. In fact, based on the IoU from Formula (2), if the input is a real image, any segmentation result of the proposed method is measured as 0. Thus, the IoU metric is only used to measure the result of the detected fake image in this paper. See Figure 7 for an illustrating of the detection result performed on real images. The left side of every pair of two subi-mages is the real image and the right side of every pair of sub-images is the detection result. As the figure illustrates, the proposed method is good at detecting real images. However, the resulting image is a perfect black image or if it contains a little white area, the IoU of the prediction and the ground truth is 0.
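The behaviour of the IoU on real images can be checked with a small sketch. Masks are represented as nested lists of 0/1 values, and the small constant added to the denominator defaults to `1e-7`; both choices are assumptions for the example.

```python
def iou(pred_mask, true_mask, eps=1e-7):
    """Intersection over union of two binary masks.

    eps is a small assumed constant that avoids division by zero
    when both masks are empty.
    """
    tp = fp = fn = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for pred, true in zip(pred_row, true_row):
            if pred and true:
                tp += 1      # fake pixel correctly predicted as fake
            elif pred and not true:
                fp += 1      # untouched pixel predicted as fake
            elif true and not pred:
                fn += 1      # fake pixel missed by the prediction
    return tp / (tp + fp + fn + eps)


if __name__ == "__main__":
    black = [[0, 0], [0, 0]]
    # A real image: empty ground truth, and a perfect (all black) prediction.
    print(iou(black, black))
```

Because the numerator is the number of true positives, an empty ground truth mask always gives an IoU of 0, regardless of whether the prediction is perfect or contains a few spurious pixels.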

Figure 7.

Randomly selected images from detecting real images by the model trained with the Deepfakes dataset. The left side of every pair of sub-images is the real image and the right side of every pair of sub-images is the prediction result.

4.2. Data Analysis and Results

In contrast, the IoU metric is suitable for measuring images that contain the detection targets, which in our problem are fake faces. Figure 2 and Figure 7 show eight randomly selected images from the results of detecting the Deepfakes fake and real images with the model trained on the Deepfakes dataset. The left side of every pair of sub-images is the test image and the right side is the prediction result. It is evident that the IoU score matches the visual quality of the prediction results for fake images. Figure 8 and Figure 9 show similar results for the Face2Face dataset, and Figure 10 and Figure 11 show similar results for the FaceSwap dataset. Based on the comparison of Figure 2 and Figure 8, the IoU for crop images in the Face2Face dataset is, on average, lower than the IoU for images in the Deepfakes dataset.

Figure 8.

Randomly selected images from the result of detecting the fake images by the model trained with the Face2Face dataset. The left side of every pair of sub-images is a fake image and the right side of every pair of sub-images is the prediction result.

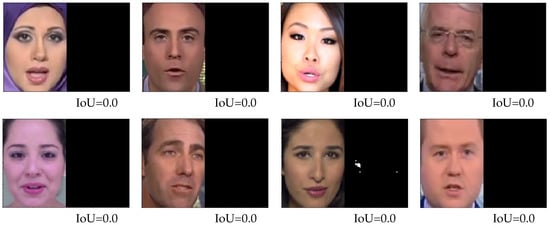

Figure 9.

Randomly selected images from the result of detecting the real images by the model trained with the Face2Face dataset. The left side of every pair of sub-images is the real image and the right side of every pair of sub-images is the prediction result.

Figure 10.

Randomly selected images from the result of detecting the fake images by the model trained with the FaceSwap dataset. The left side of every pair of sub-images is the fake image and the right side of every pair of sub-images is the prediction result.

Figure 11.

Randomly selected images from the result of detecting the real images by the model trained with the FaceSwap dataset. The left side of every pair of sub-images is the real image and the right side of every pair of sub-images is the prediction result.

We first experiment with images forged by the same forgery method in the training and testing sets. Table 1 shows the results of testing the crop C23 and C0 datasets altered by the three manipulation methods, each with the model trained on the matching method. That is, the model trained on the Deepfakes training set is tested on the Deepfakes testing set, the model trained on the Face2Face training set is tested on the Face2Face testing set, and the model trained on the FaceSwap training set is tested on the FaceSwap testing set. The same experiment performed on the fullsize dataset is shown in Table 2. Table 1 and Table 2 allow the average IoU on the Deepfakes, FaceSwap, and Face2Face datasets to be compared. The average IoU of detecting C23 and C0 quality images of crop FaceSwap is 0.883 and 0.892, respectively. The average IoU of detecting the C23 and C0 images of crop Face2Face is 0.720 and 0.750, respectively. The average IoU of detecting the C23 and C0 images of crop Deepfakes is 0.938 and 0.941, respectively. These results show that the fakeness of C23 images is more difficult to detect than that of C0 images. As expected, the more heavily compressed and hence blurrier the pictures, the harder it is to tell whether they are fakes.

Table 1.

Results for detecting crop images altered by three manipulation methods.

Table 2.

Results for detecting fullsize images altered by three manipulation methods.

To measure the effect of the background area, we compare the fullsize results with the crop results. The average IoU on the fullsize FaceSwap and Deepfakes datasets is lower than on their crop versions. The average IoU on C23 and C0 quality fullsize FaceSwap images is 0.748 and 0.755, respectively. For fullsize Face2Face images, it is 0.744 and 0.787, respectively. For fullsize Deepfakes images, it is 0.853 and 0.861, respectively. The fullsize dataset shows the same trend as the crop version: forgeries in C23 images are more difficult to detect than those in C0 images. Based on these observations, we believe that detecting forgeries in the fullsize datasets is harder than in the crop datasets. Based on Table 1, the fake images altered by the Face2Face method are harder to detect than those altered by Deepfakes and FaceSwap. The compression in C23 images also makes the fake images altered by Face2Face more difficult to detect than at the C0 level. The classification accuracy on C23 and C0 images altered by Face2Face is 0.835 and 0.873, respectively.
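The classification accuracies quoted here rest on the decision rule described in the abstract: an image is declared fake when the share of predicted fake pixels exceeds a threshold, and a video is declared fake when the share of fake frames exceeds a second threshold. The following is a minimal sketch of that rule. The threshold values 0.05 and 0.5, the strict "greater than" comparison, and all function names are hypothetical choices for illustration; the paper's actual settings are not stated in this section.

```python
def fake_pixel_ratio(mask):
    """Fraction of pixels marked fake in a binary mask (nested lists of 0/1)."""
    total = sum(len(row) for row in mask)
    fake = sum(1 for row in mask for value in row if value)
    return fake / total if total else 0.0


def is_fake_image(mask, pixel_threshold=0.05):
    # pixel_threshold is an assumed value, not the one used in the paper.
    return fake_pixel_ratio(mask) > pixel_threshold


def is_fake_video(frame_masks, pixel_threshold=0.05, frame_threshold=0.5):
    """Classify a video from the predicted masks of its extracted frames."""
    if not frame_masks:
        return False
    fake_frames = sum(1 for m in frame_masks if is_fake_image(m, pixel_threshold))
    # frame_threshold is likewise an assumed value.
    return fake_frames / len(frame_masks) > frame_threshold


clean = [[0, 0], [0, 0]]
forged = [[1, 1], [0, 0]]
video_a = [forged, forged, clean]  # 2 of 3 frames flagged
video_b = [forged, clean, clean]   # 1 of 3 frames flagged
```

Under this rule, a large background lowers the fake-pixel ratio of a fullsize image, which is one way a fixed pixel threshold could interact with the crop versus fullsize difference discussed above.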

Table 2 records the experiments performed on the fullsize dataset. Comparing Table 1 and Table 2, forgeries in the fullsize dataset are more difficult to detect than those in the crop dataset. The large background area does not help the proposed model make the right decision; instead, it confuses the decision. A possible explanation is that the large background area dilutes the statistical features of the fake area. Furthermore, comparing the IoU in Table 1 and Table 2, among the three manipulation methods, the fake area of images altered by Face2Face is harder to segment than that of images altered by the other two methods, whether the input is crop or fullsize.

To test the generalization ability of the proposed method, we test each of the three manipulation methods with models trained on different datasets. The number of images in the training and testing sets is fixed for all experiments. In Table 3, Table 4, Table 5, Table 6 and Table 7, we vary the size of the fine-tuning set to evaluate the performance of our method under different fine-tuning set sizes. We tested all combinations of training and testing/fine-tuning sets. In every experimental design, images used for testing or fine-tuning are not used for training, and images used for fine-tuning are not included in the testing set. For example, in the first row of Table 7, we test Deepfakes images with models trained on Face2Face and FaceSwap, respectively. In the second row of Table 7, we use the FaceSwap testing set to test the models trained on the Deepfakes and Face2Face training sets, respectively. In the third row of Table 7, we use the Face2Face testing set to evaluate the models trained on FaceSwap and Deepfakes, respectively. Based on Table 7, the performance of a detector drops dramatically when it meets an unseen manipulation method. To solve this problem, we use a small number of images produced by the new forgery method to adjust an old model that has already been trained on images produced by another forgery method. That is, a few images generated by an unseen method are used to fine-tune the trained model to suit the newly emerged manipulation method.
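The cross-method protocol described above can be sketched as follows. The sketch enumerates every (training method, testing method) pair in which the testing method is unseen during training, and splits a pool of image identifiers into disjoint training, fine-tuning, and testing subsets. The helper names and the simple slicing strategy are assumptions for illustration; the paper does not specify how its splits were implemented.

```python
from itertools import permutations

METHODS = ["Deepfakes", "Face2Face", "FaceSwap"]


def cross_method_pairs(methods):
    """All (train_method, test_method) pairs with a test method unseen in training."""
    return list(permutations(methods, 2))


def split_disjoint(image_ids, n_finetune, n_test):
    """Split identifiers into disjoint (train, finetune, test) lists by slicing."""
    if n_finetune + n_test > len(image_ids):
        raise ValueError("not enough images for the requested split")
    finetune = image_ids[:n_finetune]
    test = image_ids[n_finetune:n_finetune + n_test]
    train = image_ids[n_finetune + n_test:]
    return train, finetune, test


pairs = cross_method_pairs(METHODS)
train_ids, finetune_ids, test_ids = split_disjoint(list(range(10)), n_finetune=2, n_test=3)
```

With three manipulation methods there are six such pairs, which matches the two base models listed for each of the three testing sets in Table 7.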

Table 3.

Results for few-shot learning of detecting C23 crop images altered by the Deepfakes manipulation method with different base models. In this table, acc-pixel stands for pixel-wise accuracy.

Table 4.

Results for few-shot learning of detecting C23 crop images altered by the FaceSwap manipulation method with different base models. In this table, acc-pixel stands for pixel-wise accuracy.

Table 5.

Results for few-shot learning of detecting C23 crop images altered by the Face2Face manipulation method with different base models. In this table, acc-pixel stands for pixel-wise accuracy.

Table 6.

Improvement from few-shot learning for detecting C23 crop images altered by the Face2Face, FaceSwap, and Deepfakes manipulation methods with different base models.

Table 7.

Results for testing C23 crop images altered by the Deepfakes, FaceSwap, and Face2Face manipulation methods with different base models.

In Table 3, we show the classification accuracy, IoU, and pixel-wise accuracy for detecting C23 crop images altered by the Deepfakes manipulation method using the Face2Face-, FaceSwap-, and none-based models fine-tuned with small Deepfakes training sets of 700, 100, and 20 images. Here, the none-based model denotes a model trained only on the 700, 100, or 20 images, without a base model. To compare with the results of other papers, we also calculate the pixel-wise accuracy. In Table 3, Table 4 and Table 5, acc-pixel stands for pixel-wise accuracy.
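Pixel-wise accuracy can be sketched as the fraction of pixels whose predicted label matches the ground truth. The sketch below assumes binary masks stored as nested lists, and the function name is hypothetical.

```python
def pixel_accuracy(pred_mask, true_mask):
    """Fraction of pixels where the predicted label equals the true label."""
    correct = 0
    total = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if bool(p) == bool(t):
                correct += 1
            total += 1
    if total == 0:
        raise ValueError("masks must contain at least one pixel")
    return correct / total


# A 4x4 ground truth with two fake pixels, and a prediction that marks nothing as fake.
true_mask = [[0, 0, 0, 0],
             [0, 1, 1, 0],
             [0, 0, 0, 0],
             [0, 0, 0, 0]]
all_real = [[0] * 4 for _ in range(4)]
score = pixel_accuracy(all_real, true_mask)  # 14 of 16 pixels agree
```

In this toy case the prediction misses the entire fake region, so its IoU is 0, yet 14 of the 16 pixels still count as correct. This is why pixel-wise accuracy is reported alongside IoU for comparison with other papers rather than in place of it.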

In Table 4, we show the classification accuracy, IoU, and pixel-wise accuracy for detecting C23 crop images altered by the FaceSwap manipulation method using the Face2Face-, Deepfakes-, and none-based models fine-tuned with small FaceSwap training sets of 700, 100, and 20 images. In Table 5, we show the classification accuracy, IoU, and pixel-wise accuracy for detecting C23 crop images altered by the Face2Face manipulation method using the Face2Face-, FaceSwap-, and Deepfakes-based models fine-tuned with small Face2Face training sets of 700, 100, and 20 images.

The percentage of performance improvement brought by few-shot learning is summarized in Table 6. For example, the accuracy of the model trained on Face2Face when detecting images forged by the Deepfakes method is 0.522 (see Table 7). The accuracy of the same model fine-tuned with 700 Deepfakes samples becomes 0.881 (see Table 3). Therefore, the percentage increase is $(0.881 - 0.522)/0.522 \times 100\% \approx 68.8\%$. Based on Table 6, few-shot learning with only 20 samples can bring a 29% to 380% increase in the IoU metric. This indicates that our GAN architecture is well suited for few-shot learning.

Because the main contribution of the proposed method is reaching the training goal with a small number of samples, we are particularly interested in the improvement per fine-tuning sample. In Table 8, we present each combination of training and fine-tuning sets and calculate the improvement per sample. In other words, Table 8 contains the values from Table 6 divided by the number of fine-tuning samples. Table 8 shows that the improvement achieved per sample is higher when the fine-tuning set is small than when it is large. This result indicates that the proposed method is indeed effective for few-shot learning.
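The arithmetic behind Table 6 and Table 8 can be sketched as follows, using the accuracies 0.522 and 0.881 quoted in the text for the Face2Face-based model before and after fine-tuning with 700 Deepfakes samples. The sketch assumes that the percentage increase is the relative change with respect to the value before fine-tuning; the function names are hypothetical.

```python
def percentage_increase(before, after):
    """Relative improvement of a metric, in percent (assumed definition)."""
    return (after - before) / before * 100.0


def improvement_per_sample(before, after, n_samples):
    """Percentage increase divided by the number of fine-tuning samples N."""
    return percentage_increase(before, after) / n_samples


# Accuracy of the Face2Face-based model on Deepfakes images, from the text.
increase = percentage_increase(0.522, 0.881)            # roughly 68.8 percent
per_sample = improvement_per_sample(0.522, 0.881, 700)  # roughly 0.098 percent per sample
```

Since the per-sample value divides by N, a configuration that reaches a comparable increase with 20 samples scores far higher on this measure than one that needs 700.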

Table 8.

Improvement per sample from few-shot learning for detecting C23 crop images altered by the Face2Face, FaceSwap, and Deepfakes manipulation methods with different base models. In this table, N denotes the number of fine-tuning samples.

In Table 9, we compare the classification accuracy of our scheme with the methods proposed in Cozzolino et al. [7], Rahmouni et al. [8], Bayar et al. [6], MesoNet [9], and Full Image XceptionNet [9] for detecting raw, C23 crop, or high-quality images altered by the Face2Face, FaceSwap, and Deepfakes manipulation methods, using models trained on the same manipulation method as the testing set. That is, we use the Face2Face-trained model to detect the Face2Face testing set, the FaceSwap-trained model to detect the FaceSwap testing set, and so on. The proposed method achieves the best accuracy of 0.917, 0.873, and 0.929 on the Deepfakes, Face2Face, and FaceSwap testing sets, respectively.

Table 9.

Comparison of the results for detecting the Face2Face, FaceSwap, and Deepfakes manipulation methods with models trained on low-quality images. The values, other than those of the proposed method, are taken from Table 2 of reference [9].

In Table 10, we compare our scheme with the methods proposed by Cozzolino et al. [23] and Nguyen et al. [10] using classification accuracy, IoU, and pixel-wise accuracy for detecting C23 crop images altered by the FaceSwap manipulation method using Face2Face-based models fine-tuned with FaceSwap. In classification accuracy, Nguyen’s “New” method is best at 0.837, and our proposed method is second at 0.835, almost the same as the best. In pixel-wise accuracy, on the other hand, the proposed method is best at 0.930, and Nguyen’s “New” method is second at 0.926. The papers [10,23] did not use the IoU to measure segmentation performance; thus, in Table 10, we use “-” to denote that no metric value is available.

Table 10.

Comparison of the results to detect C23 crop images altered by FaceSwap manipulation methods using Face2Face-based models and fine-tuned with FaceSwap. In this table, acc-pixel stands for pixel-wise accuracy.

Our approach can provide a forgery region prediction for suspicious images to forensic examiners when new forgery methods have just emerged and only a few training samples are available. This can enhance the practicality of forensic work when dealing with new forgery techniques. A limitation of our approach is that it still requires collecting a small amount of training data. However, until a method emerges that can recognize all unknown forgery techniques without the need for further training, collecting a small number of training samples is an acceptable approach. Another limitation of our method is that the image needs to be first identified as a human face. If the forgery method used renders the facial detection system unable to detect the position of the face, the proposed method cannot be effective.

5. Conclusions

We have shown that the proposed GAN-based fine-tuning approach can detect fake faces with few-shot training. Against unseen forgery methods, the proposed method achieves substantial improvements in detection performance using only a small number of training samples. The proposed method performs well in both classification and segmentation. For the segmentation problem in particular, the forgery regions predicted by the proposed method are helpful for identifying forgery methods.

The approach we propose is capable of predicting regions of forgery in suspicious images, particularly in cases where new forgery methods have emerged and only a limited number of training samples are available. This can enhance the practicality of forensic work when dealing with new forgery techniques. However, a limitation of our approach is that it still requires a small amount of training data. Nonetheless, until a method is developed that can recognize all unknown forgery techniques without further training, collecting a limited number of training samples remains a reasonable approach. Our future work will focus on a meta-learning approach for few-shot training of face forgery detection.

Author Contributions

Conceptualization, Y.-K.L.; methodology, Y.-K.L.; software, Y.-K.L. and H.-L.S.; validation, Y.-K.L. and H.-L.S.; formal analysis, Y.-K.L.; resources, Y.-K.L.; writing—original draft preparation, Y.-K.L.; writing—review and editing, Y.-K.L. and H.-L.S.; visualization, Y.-K.L.; supervision, Y.-K.L.; project administration, Y.-K.L.; funding acquisition, Y.-K.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Technology under grant number MOST-109-2221-E-153-003, Taiwan.

Acknowledgments

This research was supported by the Ministry of Science and Technology under grant number MOST-109-2221-E-153-003, Taiwan. We thank the anonymous referees for making valuable suggestions and comments which greatly improved the contents as well as the presentation of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Deepfakes. 2018. Available online: https://github.com/deepfakes/faceswap (accessed on 1 January 2021).

2. Lee, D. Deepfakes Porn Has Serious Consequences. BBC News. 2018. Available online: https://www.bbc.com/news/technology-42912529 (accessed on 3 January 2021).

3. Suwajanakorn, S.; Seitz, S.M.; Kemelmacher-Shlizerman, I. Synthesizing Obama: Learning lip sync from audio. ACM Trans. Graph. (ToG) 2017, 36, 1–13.

4. Tanaka, M.; Shiota, S.; Kiya, H. A Detection Method of Operated Fake-Images Using Robust Hashing. J. Imaging 2021, 7, 134.

5. Afchar, D.; Nozick, V.; Yamagishi, J.; Echizen, I. Mesonet: A compact facial video forgery detection network. In Proceedings of the 2018 IEEE International Workshop on Information Forensics and Security (WIFS), Hong Kong, China, 11–13 December 2018; pp. 1–7.

6. Bayar, B.; Stamm, M.C. A deep learning approach to universal image manipulation detection using a new convolutional layer. In Proceedings of the 4th ACM Workshop on Information Hiding and Multimedia Security, Galicia, Spain, 20–22 June 2016; pp. 5–10.

7. Cozzolino, D.; Poggi, G.; Verdoliva, L. Recasting residual-based local descriptors as convolutional neural networks: An application to image forgery detection. In Proceedings of the 5th ACM Workshop on Information Hiding and Multimedia Security, Philadelphia, PA, USA, 20–22 June 2017; pp. 159–164.

8. Rahmouni, N.; Nozick, V.; Yamagishi, J.; Echizen, I. Distinguishing computer graphics from natural images using convolution neural networks. In Proceedings of the 2017 IEEE Workshop on Information Forensics and Security (WIFS), Rennes, France, 4–7 December 2017; pp. 1–6.

9. Rössler, A.; Cozzolino, D.; Verdoliva, L.; Riess, C.; Thies, J.; Nießner, M. FaceForensics++: Learning to Detect Manipulated Facial Images. In Proceedings of the International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 1–11.

10. Nguyen, H.H.; Fang, F.; Yamagishi, J.; Echizen, I. Multi-task learning for detecting and segmenting manipulated facial images and videos. arXiv 2019, arXiv:1906.06876.

11. Li, Y.; Yang, X.; Sun, P.; Qi, H.; Lyu, S. Celeb-df: A large-scale challenging dataset for deepfake forensics. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 19 June 2020; pp. 3207–3216.

12. Thies, J.; Zollhofer, M.; Stamminger, M.; Theobalt, C.; Nießner, M. Face2face: Real-time face capture and reenactment of rgb videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2387–2395.

13. Faceswap. 2018. Available online: https://github.com/Markkowalski/FaceSwap (accessed on 1 January 2021).

14. D’Amiano, L.; Cozzolino, D.; Poggi, G.; Verdoliva, L. A patchmatch-based dense-field algorithm for video copy–move detection and localization. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 669–682.

15. Bestagini, P.; Milani, S.; Tagliasacchi, M.; Tubaro, S. Local tampering detection in video sequences. In Proceedings of the 2013 IEEE 15th International Workshop on Multimedia Signal Processing (MMSP), Pula, Italy, 30 September–2 October 2013; pp. 488–493.

16. Gironi, A.; Fontani, M.; Bianchi, T.; Piva, A.; Barni, M. A video forensic technique for detecting frame deletion and insertion. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 6226–6230.

17. Chen, L.; Zhang, Y.; Song, Y.; Liu, L.; Wang, J. Self-supervised Learning of Adversarial Example: Towards Good Generalizations for Deepfake Detection. arXiv 2022, arXiv:2203.12208.

18. Khodabakhsh, A.; Ramachandra, R.; Raja, K.; Wasnik, P.; Busch, C. Fake face detection methods: Can they be generalized? In Proceedings of the 2018 International Conference of the Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 26–28 September 2018; pp. 1–6.

19. Shiohara, K.; Yamasaki, T. Detecting deepfakes with self-blended images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 18720–18729.

20. Anari, S.; Tataei Sarshar, N.; Mahjoori, N.; Dorosti, S.; Rezaie, A. Review of deep learning approaches for thyroid cancer diagnosis. Math. Probl. Eng. 2022, 2022, 5052435.

21. Rahman, M.A.; Wang, Y. Optimizing intersection-over-union in deep neural networks for image segmentation. In Proceedings of the International Symposium on Visual Computing. Springer, Las Vegas, NV, USA, 12–14 December 2016; pp. 234–244.

22. Ranjbarzadeh, R.; Bagherian Kasgari, A.; Jafarzadeh Ghoushchi, S.; Anari, S.; Naseri, M.; Bendechache, M. Brain tumor segmentation based on deep learning and an attention mechanism using MRI multi-modalities brain images. Sci. Rep. 2021, 11, 10930.

23. Cozzolino, D.; Thies, J.; Rössler, A.; Riess, C.; Nießner, M.; Verdoliva, L. Forensictransfer: Weakly-supervised domain adaptation for forgery detection. arXiv 2018, arXiv:1812.02510.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).