Task Assignment for UAV Swarm Saturation Attack: A Deep Reinforcement Learning Approach

Abstract

1. Introduction

- We construct a mathematical model of the task assignment problem for a UAV swarm saturation attack, and we recast this model as a Markov Decision Process (MDP) from the reinforcement learning perspective;

- We build a task assignment framework based on a deep neural network that generates solutions for adversarial scenarios. The policy network passes information through an attention mechanism, which keeps the algorithm effective and flexible across different problem scales;

- We propose a training algorithm based on the policy gradient method that lets the agent learn an effective task assignment policy from simulation data. We also design a critic baseline that reduces the variance of the gradient estimates and speeds up learning.
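The policy-gradient training with a critic baseline can be illustrated on a toy problem. The sketch below is a minimal, pure-Python REINFORCE update in which a learned scalar baseline plays the role of the critic. It assumes a single decision (choosing one of three targets) with a softmax policy over hypothetical logits and hypothetical normalized rewards; it is not the authors' attention network or their Algorithm 1, only the same gradient estimator in its simplest form.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_step(logits, baseline, reward_fn, rng, lr_actor=0.5, lr_critic=0.1):
    """One REINFORCE update with a learned scalar baseline (a minimal 'critic').

    reward_fn(action) -> float is a hypothetical environment returning the episode reward.
    """
    probs = softmax(logits)
    action = rng.choices(range(len(logits)), weights=probs)[0]
    reward = reward_fn(action)
    advantage = reward - baseline  # subtracting the baseline reduces gradient variance
    # For a softmax policy, d log pi(a) / d logit_i = 1[i == a] - pi(i).
    new_logits = [
        z + lr_actor * advantage * ((1.0 if i == action else 0.0) - p)
        for i, (z, p) in enumerate(zip(logits, probs))
    ]
    # Critic update: one gradient step on 0.5 * (reward - baseline) ** 2.
    new_baseline = baseline + lr_critic * advantage
    return new_logits, new_baseline, reward


def train(rewards, steps=2000, seed=0):
    """Run repeated one-step episodes and return the final policy and baseline."""
    rng = random.Random(seed)
    logits, baseline = [0.0] * len(rewards), 0.0
    for _ in range(steps):
        logits, baseline, _ = reinforce_step(logits, baseline, lambda a: rewards[a], rng)
    return softmax(logits), baseline
```

With hypothetical rewards `[0.5, 1.0, 0.5]`, `train` concentrates the policy on the middle target while the baseline tracks the average reward; because the baseline does not depend on the sampled action, it lowers the variance of the update without biasing its expectation.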

2. Problem Formulation

2.1. Scenario Description and Assumptions

- In general, mission planning for UAV swarm operations can be broken down into two phases: flying from the base to the mission area, and then executing specific tasks there. In this paper, the UAVs are assumed to have completed infiltration and reached the perimeter of the target area; the next step, carrying out the attack, is the focus of this paper;

- We assume that the UAVs fly in a plane at a given altitude and avoid collisions through altitude stratification. Furthermore, the flight paths of the UAVs are simplified to straight lines, and each UAV flies at a constant velocity;

- In the UAV swarm saturation attack model proposed in this paper, the vessels are treated as stationary targets, for two main reasons. First, fixed-wing UAVs fly much faster than the vessels, and a saturation attack is completed in a very short time, so the vessels' movement is negligible. Second, the UAVs strike the target vessels with anti-ship missiles, and a strike can be executed only if the target is within the missile's effective range; compared with this range, the slight movement of the vessels is small, so it does not affect the effectiveness of the attack.
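Under these assumptions (straight-line flight, constant velocity, stationary targets, and a strike that becomes possible once the target is inside the missile's effective range), the flight time a UAV needs before it can engage a target follows from simple geometry. The sketch below uses the velocity, number of attacks and effective range listed for Types I–III in the UAV type table; the function name, the UAV start point in the usage note, and the rule that the missile is launched as soon as the target enters range are illustrative assumptions, not the paper's exact time-cost definition.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class UAVType:
    velocity_kmh: float        # constant cruise speed (km/h)
    num_attacks: int           # number of missiles carried
    effective_range_km: float  # missile effective range (km)


# Values taken from the UAV type table (Types I-III).
UAV_TYPES = {
    "I": UAVType(400.0, 1, 15.0),
    "II": UAVType(500.0, 2, 20.0),
    "III": UAVType(600.0, 3, 25.0),
}


def time_to_strike(uav_pos, target_pos, uav):
    """Hours needed to bring a stationary target within missile range.

    Assumes a straight-line flight at constant speed and that the missile is
    launched as soon as the target enters the effective range (an assumption).
    """
    distance = math.dist(uav_pos, target_pos)
    gap = max(0.0, distance - uav.effective_range_km)  # zero if already in range
    return gap / uav.velocity_kmh
```

For a UAV at the hypothetical point (-20, 15) km and a target at (20, 15) km, a Type I vehicle needs (40 - 15)/400 = 0.0625 h to reach a launch position, whereas a Type III vehicle needs only (40 - 25)/600 = 0.025 h.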

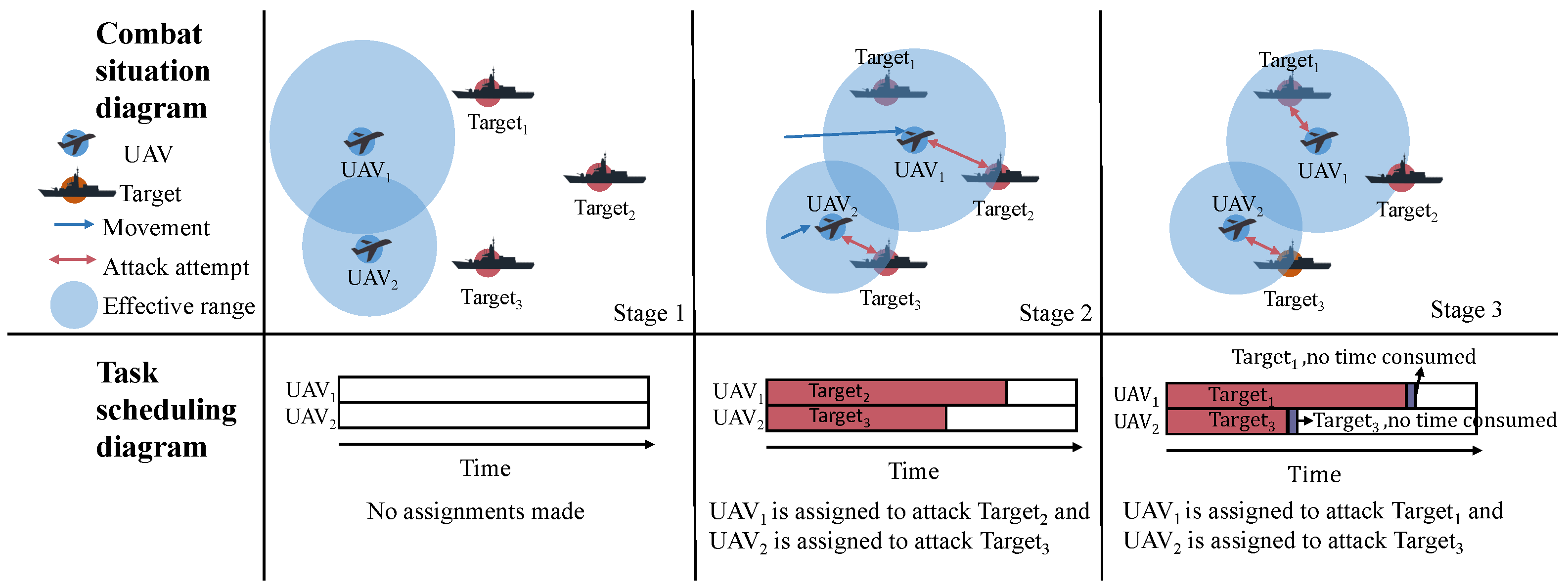

2.2. UAV Swarm Saturation Attack Model

2.2.1. Vehicles

2.2.2. Targets and Tasks

2.2.3. Combinatorial Optimization Problem

2.3. Markov Decision Process for Task Assignment

3. Proposed Method

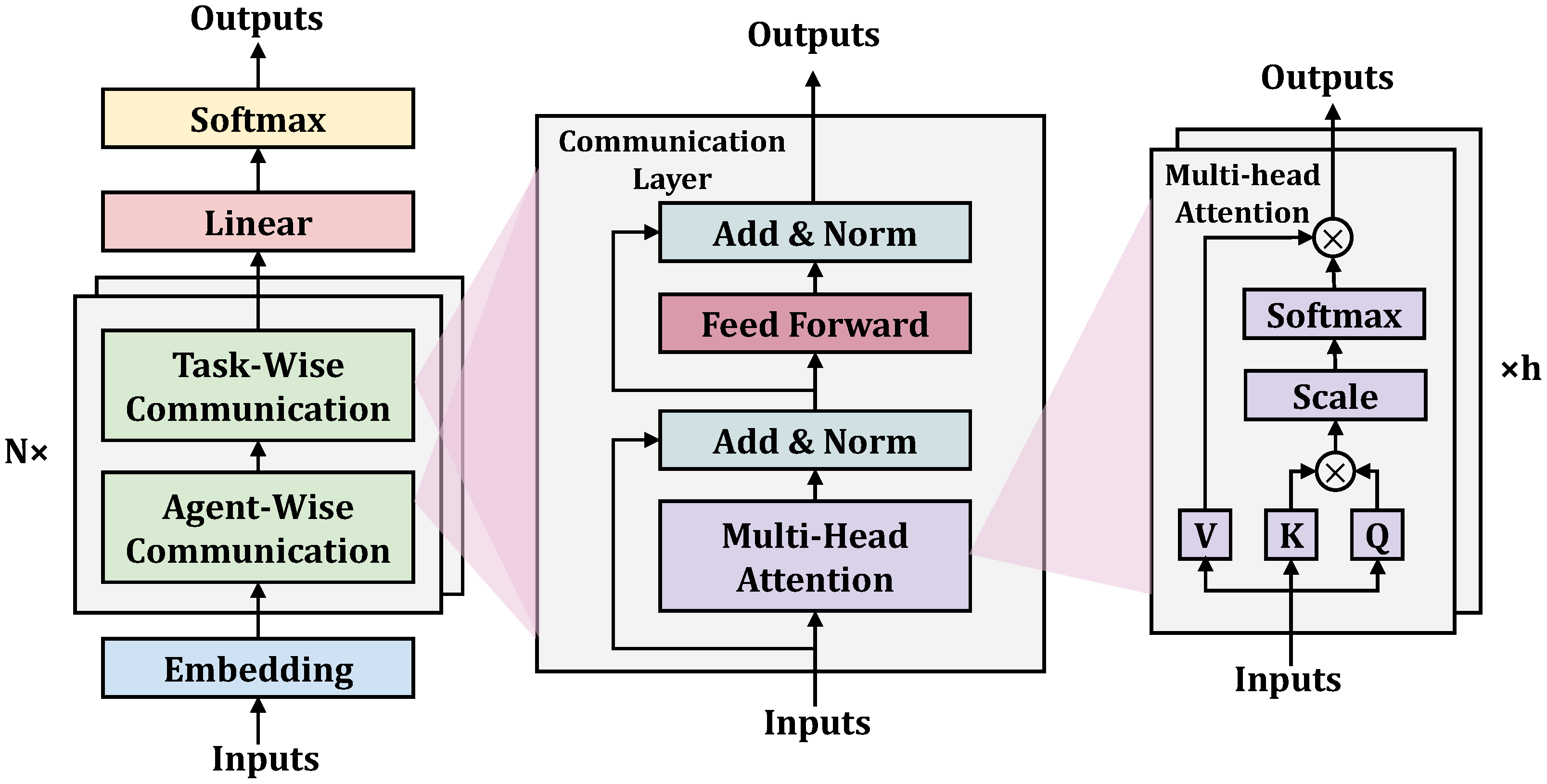

3.1. Network Architecture for Task Assignment

3.2. Optimization with Policy Gradients

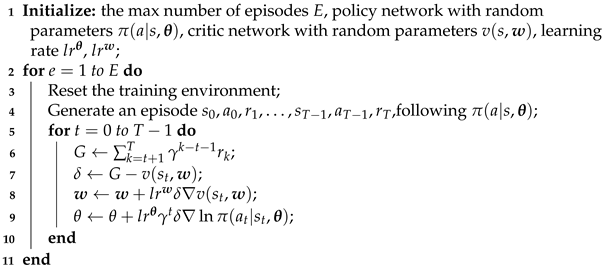

Algorithm 1: REINFORCE with critic baseline

3.3. Searching Strategy

4. Simulation and Analysis

4.1. Setting Up

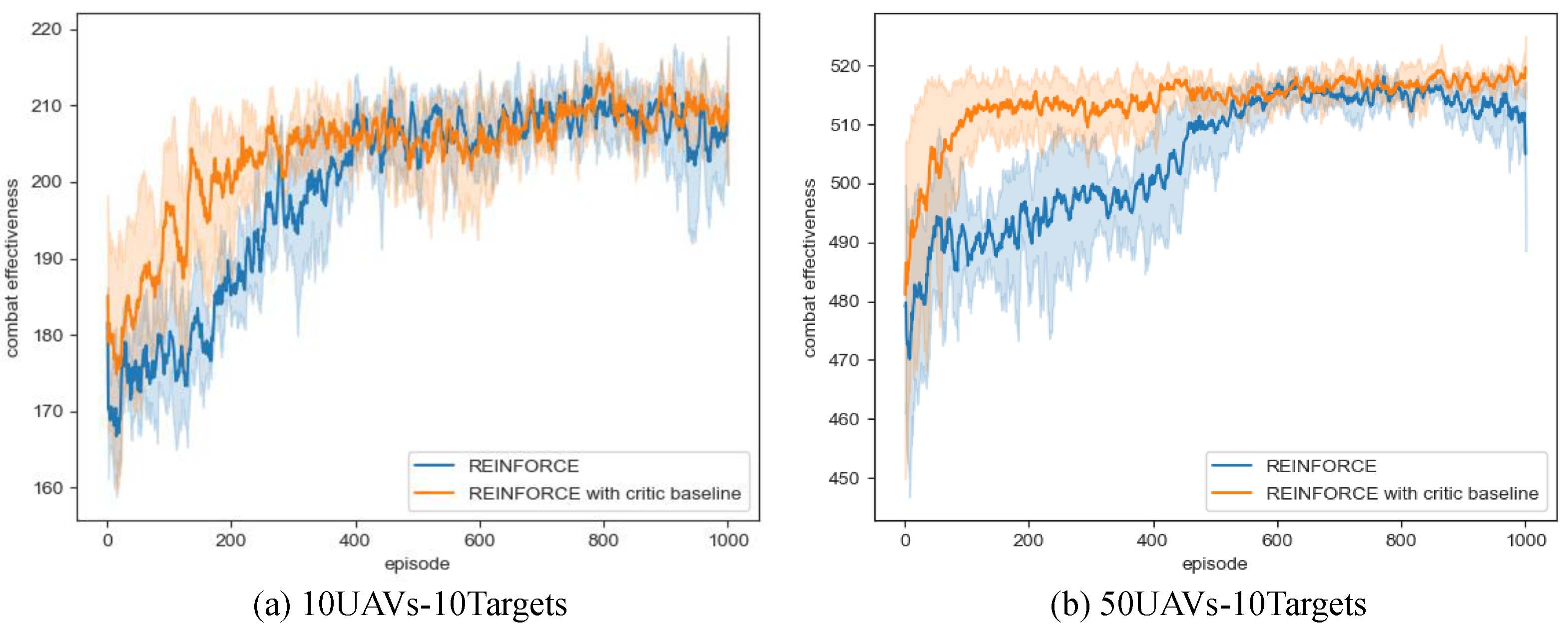

4.2. Convergence of Deep Reinforcement Learning

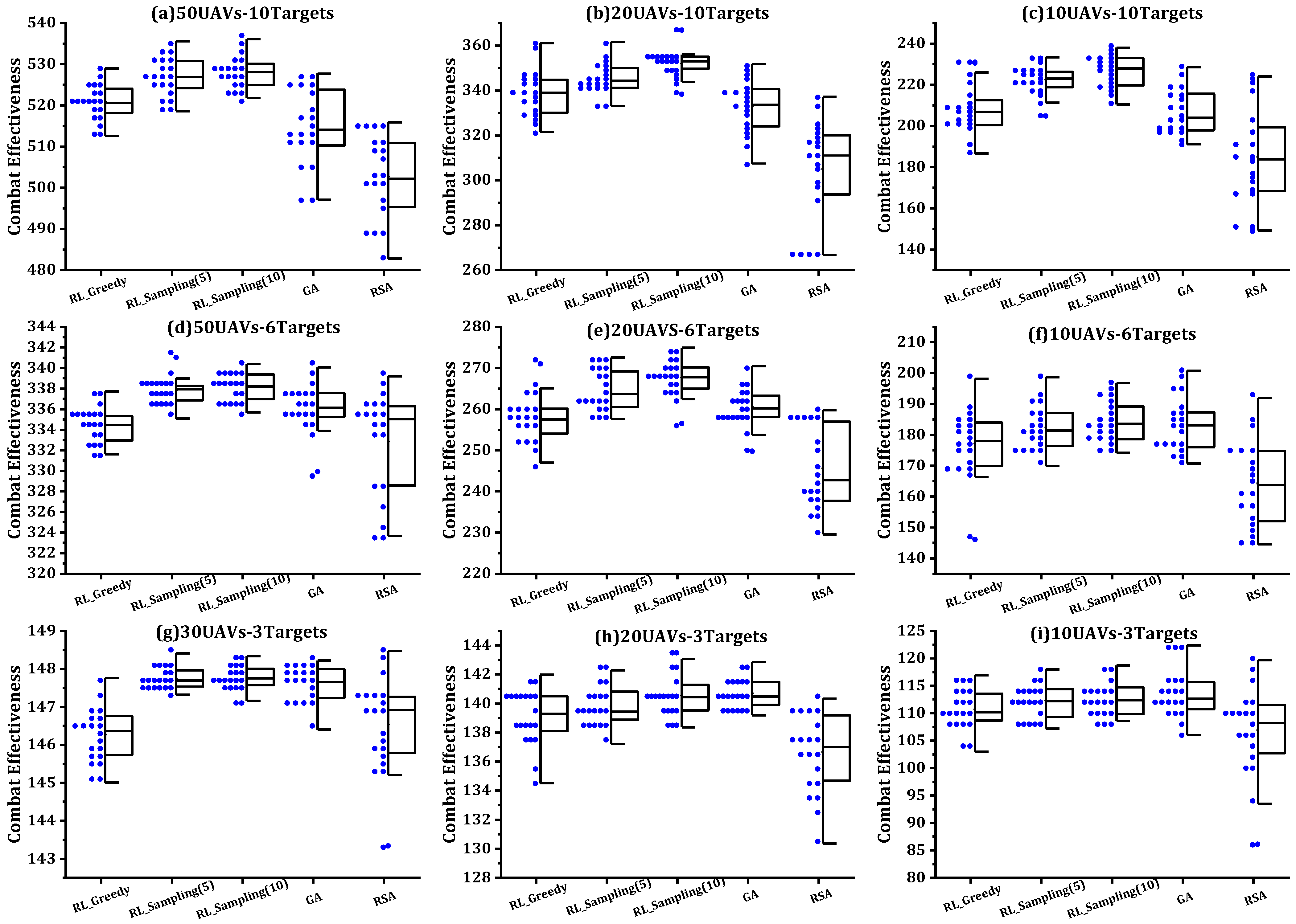

4.3. Performance Comparison on Solution Quality

4.4. Cost-Effectiveness Analysis on Different Number of UAVs

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Vehicle features | Target features | Vehicle–target features |
|---|---|---|
| Current position* | Position | Damage probability |
| Velocity | Expected remaining value* | Time cost* |
| Number of available attacks* | Infeasibility flag* | |
| Effective range | | |
| Available time* | | |
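Several features in the table above, such as the expected remaining value of a target and the number of available attacks of a UAV, must change as attacks are assigned. The sketch below shows one plausible state transition for a single assignment step. It assumes that attacks damage a target independently, so an attack with damage probability p on a target with expected remaining value V leaves V(1 - p); this convention is common in weapon-target assignment but is assumed here, not taken from the paper. The class, the functions and the `reachable` matrix are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AssignmentState:
    remaining_value: List[float]  # expected remaining value of each target
    attacks_left: List[int]       # available attacks of each UAV


def is_infeasible(state, uav, target, reachable):
    """Hypothetical mask: no attacks left, or target not reachable in time/range."""
    return state.attacks_left[uav] == 0 or not reachable[uav][target]


def apply_attack(state, uav, target, damage_prob):
    """Assign one attack and return the reward (expected value destroyed).

    Assumes attacks succeed independently, so V <- V * (1 - p).
    """
    if state.attacks_left[uav] <= 0:
        raise ValueError("UAV has no attacks left")
    before = state.remaining_value[target]
    after = before * (1.0 - damage_prob[uav][target])
    state.remaining_value[target] = after
    state.attacks_left[uav] -= 1
    return before - after
```

Under this assumption, a second attack on the same target yields a smaller reward than the first, which is why spreading attacks across targets can pay off in a saturation attack.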

| Formation | Attribute | Target 1 | Target 2 | Target 3 | Target 4 | Target 5 | Target 6 | Target 7 | Target 8 | Target 9 | Target 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 3 targets | Position (km) | (10, 15) | (20, 20) | (20, 10) | | | | | | | |
| | Value | 50 | 50 | 50 | | | | | | | |
| 6 targets | Position (km) | (0, 15) | (10, 25) | (10, 5) | (20, 15) | (30, 25) | (30, 5) | | | | |
| | Value | 50 | 50 | 50 | 100 | 50 | 50 | | | | |
| 10 targets | Position (km) | (0, 20) | (0, 10) | (10, 30) | (10, 0) | (15, 20) | (15, 10) | (20, 30) | (20, 0) | (30, 20) | (30, 10) |
| | Value | 50 | 50 | 50 | 50 | 100 | 100 | 50 | 50 | 50 | 50 |
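As a quick sanity figure for each scenario, the total target value of every formation can be read off the target table. The dictionary below simply copies the Value rows; its name is illustrative.

```python
# Target values per formation (keyed by number of targets), copied from the target table.
FORMATION_VALUES = {
    3: [50, 50, 50],
    6: [50, 50, 50, 100, 50, 50],
    10: [50, 50, 50, 50, 100, 100, 50, 50, 50, 50],
}

# Total value of all targets in each formation.
totals = {n: sum(values) for n, values in FORMATION_VALUES.items()}
print(totals)
```

The totals are 150, 350 and 600 for the 3-, 6- and 10-target formations, respectively.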

| UAV type | Velocity (km/h) | Number of attacks | Effective range (km) |
|---|---|---|---|
| Type I | 400 | 1 | 15 |
| Type II | 500 | 2 | 20 |
| Type III | 600 | 3 | 25 |

| Network architecture hyperparameter | Value | Training hyperparameter | Value |
|---|---|---|---|
| Hidden size | 128 | Training episodes | 1000 |
| Number of stacks | 2 | Learning rate | 1 × |
| Attention heads | 4 | Discount rate | 0.98 |
| Initializer | Random normal | Optimizer | Adam |
| Activation | ReLU | | |
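The discount rate of 0.98 in the hyperparameter table determines how rewards collected later in an assignment episode are weighted. The sketch below shows the conventional backward computation of discounted returns, G_t = r_t + γG_{t+1}; the per-step rewards in the usage note are hypothetical, and the paper's exact reward definition is not reproduced here.

```python
def discounted_returns(rewards, gamma=0.98):
    """Return G_t = r_t + gamma * G_{t+1} for every step of one episode.

    gamma defaults to the discount rate listed in the hyperparameter table.
    """
    returns = []
    g = 0.0
    for r in reversed(rewards):  # accumulate from the last step backwards
        g = r + gamma * g
        returns.append(g)
    returns.reverse()  # restore chronological order
    return returns
```

With a single reward of 1 at the last of three steps, the returns are 0.98² = 0.9604, 0.98 and 1.0 in chronological order, so a discount rate this close to 1 keeps late rewards almost fully weighted.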

| Scenario | Metric | RL_Greedy | RL_Sampling (5) | RL_Sampling (10) | GA | RSA |
|---|---|---|---|---|---|---|
| 50 UAVs–10 targets | Performance | 520.86 ± 4.26 | 526.85 ± 4.72 | 528.16 ± 3.97 | 514.92 ± 9.06 | 502.76 ± 10.20 |
| | Time | 1.09 s | 5.37 s | 10.75 s | 5.47 s | 4.60 ms |
| 20 UAVs–10 targets | Performance | 338.80 ± 10.47 | 345.22 ± 6.86 | 352.08 ± 5.80 | 332.87 ± 11.61 | 304.90 ± 22.17 |
| | Time | 0.25 s | 1.22 s | 2.42 s | 2.24 s | 2.00 ms |
| 10 UAVs–10 targets | Performance | 208.15 ± 11.74 | 222.23 ± 6.95 | 226.61 ± 7.79 | 206.88 ± 10.84 | 184.98 ± 23.61 |
| | Time | 0.11 s | 0.44 s | 0.88 s | 1.15 s | 1.00 ms |
| 50 UAVs–6 targets | Performance | 334.42 ± 1.64 | 337.81 ± 1.21 | 338.12 ± 1.34 | 336.25 ± 2.16 | 332.84 ± 5.03 |
| | Time | 0.76 s | 3.76 s | 7.50 s | 4.31 s | 3.50 ms |
| 20 UAVs–6 targets | Performance | 257.77 ± 5.58 | 264.86 ± 4.97 | 267.68 ± 4.43 | 260.52 ± 4.53 | 245.58 ± 9.91 |
| | Time | 0.18 s | 0.92 s | 1.86 s | 1.73 s | 1.40 ms |
| 10 UAVs–6 targets | Performance | 177.31 ± 10.84 | 182.58 ± 7.23 | 184.48 ± 6.74 | 183.37 ± 8.92 | 164.26 ± 14.00 |
| | Time | 0.08 s | 0.37 s | 0.74 s | 0.89 s | 1.00 ms |
| 30 UAVs–3 targets | Performance | 146.27 ± 0.73 | 147.75 ± 0.30 | 147.77 ± 0.33 | 147.62 ± 0.48 | 146.55 ± 1.21 |
| | Time | 0.27 s | 1.32 s | 2.63 s | 2.15 s | 1.55 ms |
| 20 UAVs–3 targets | Performance | 139.15 ± 1.89 | 139.82 ± 1.33 | 140.54 ± 1.38 | 140.71 ± 1.02 | 136.66 ± 2.72 |
| | Time | 0.17 s | 0.76 s | 1.55 s | 1.45 s | 1.10 ms |
| 10 UAVs–3 targets | Performance | 110.72 ± 3.68 | 112.17 ± 3.21 | 112.61 ± 3.03 | 113.54 ± 4.34 | 106.91 ± 7.93 |
| | Time | 0.08 s | 0.31 s | 0.64 s | 0.73 s | 1.00 ms |
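The comparison table can be summarized with a little arithmetic. The sketch below copies the mean performance of RL_Sampling (10) and GA and the runtimes of RL_Greedy and RL_Sampling (10) from the table, then computes the relative gain over GA and the runtime ratio between sampling and greedy decoding. Reading "RL_Sampling (10)" as drawing ten sampled solutions is an assumption, though it is consistent with its roughly tenfold runtime; the dictionary and function names are illustrative.

```python
# (RL_Sampling (10) mean, GA mean, RL_Greedy time in s, RL_Sampling (10) time in s),
# copied from the comparison table.
RESULTS = {
    "50 UAVs-10 targets": (528.16, 514.92, 1.09, 10.75),
    "20 UAVs-10 targets": (352.08, 332.87, 0.25, 2.42),
    "10 UAVs-10 targets": (226.61, 206.88, 0.11, 0.88),
    "50 UAVs-6 targets": (338.12, 336.25, 0.76, 7.50),
    "20 UAVs-6 targets": (267.68, 260.52, 0.18, 1.86),
    "10 UAVs-6 targets": (184.48, 183.37, 0.08, 0.74),
    "30 UAVs-3 targets": (147.77, 147.62, 0.27, 2.63),
    "20 UAVs-3 targets": (140.54, 140.71, 0.17, 1.55),
    "10 UAVs-3 targets": (112.61, 113.54, 0.08, 0.64),
}


def summarize(results):
    """Relative gain (%) of RL_Sampling (10) over GA and its runtime ratio to RL_Greedy."""
    rows = {}
    for name, (rl, ga, t_greedy, t_sample10) in results.items():
        rows[name] = (100.0 * (rl - ga) / ga, t_sample10 / t_greedy)
    return rows


for name, (gain_pct, slowdown) in summarize(RESULTS).items():
    print(f"{name}: {gain_pct:+.2f}% vs GA, {slowdown:.1f}x greedy runtime")
```

On these figures, RL_Sampling (10) leads GA by about 9.5% in the 10 UAVs–10 targets case, while GA is marginally ahead in the 20- and 10-UAV three-target cases.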

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qian, F.; Su, K.; Liang, X.; Zhang, K. Task Assignment for UAV Swarm Saturation Attack: A Deep Reinforcement Learning Approach. Electronics 2023, 12, 1292. https://doi.org/10.3390/electronics12061292
