CEEMD-MultiRocket: Integrating CEEMD with Improved MultiRocket for Time Series Classification

Abstract

1. Introduction

- (1)

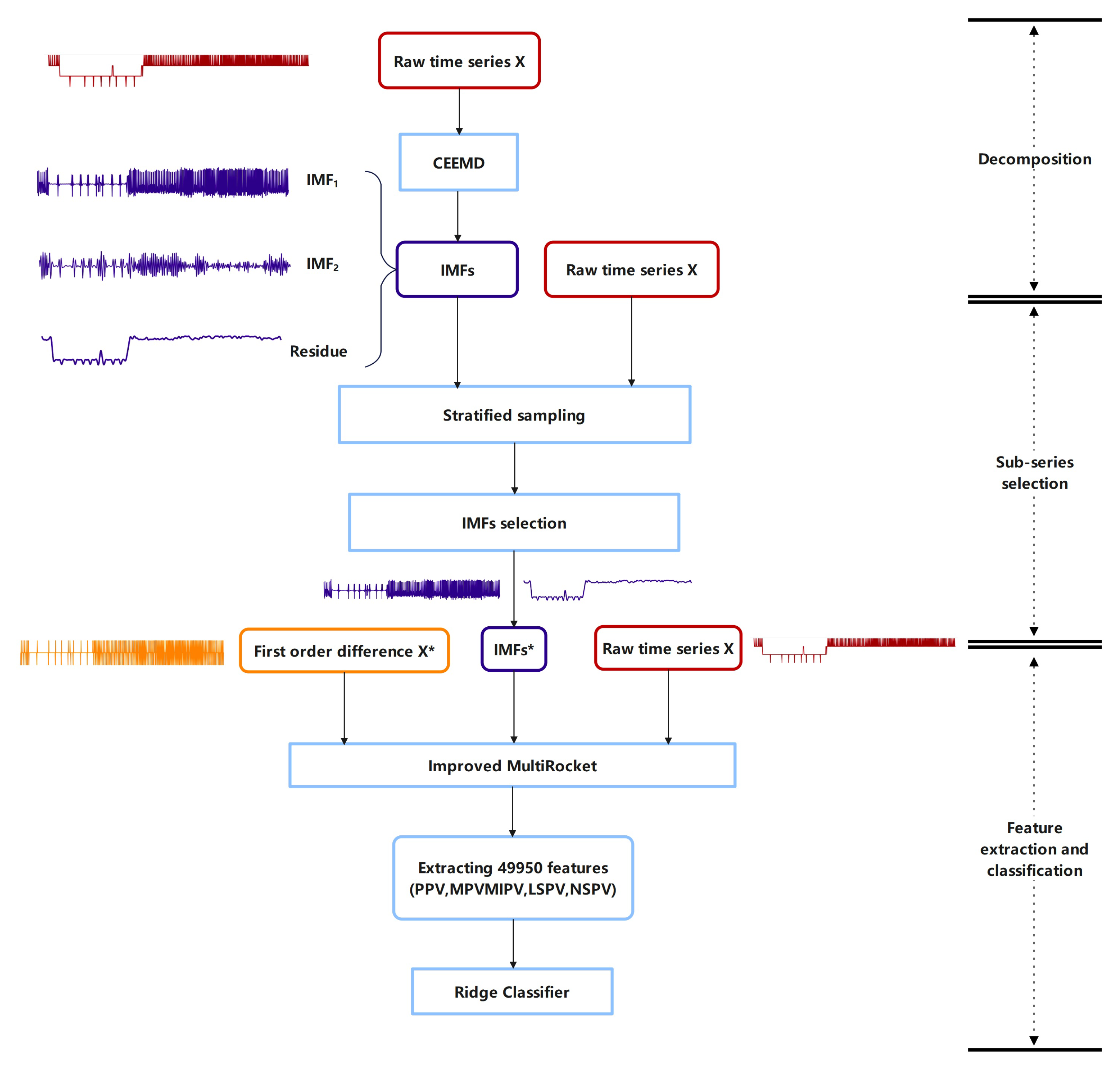

- A novel hybrid TSC model that integrates CEEMD and improved MultiRocket is proposed. Raw time series is decomposed into high-, medium- and low-frequency portions, and convolution kernel transform is utilized to derive features from the raw time series, the decomposed sub-series and the first-order difference of raw time series. This kind of transformation is able to obtain more detailed and discriminative information of time series from various aspects.

- (2)

- A sub-series selection method is proposed based on the whole known training data. This method selects the more crucial sub-series and prunes the redundant and less important ones, which helps to further enhance classification performance and also reduce computational complexity.

- (3)

- The length and number of convolution kernels are modified, and one additional pooling operator is applied to convolutional outputs in our improved MultiRocket. These improvements contribute to the enhancement of classification accuracy.

- (4)

- Extensive experiments demonstrate that the proposed classification algorithm is more accurate than most SOTA algorithms for TSC.

- (5)

- We further analyze some characteristics of the proposed CEEMD-MultiRocket for TSC, including the CEEMD parameter settings, the selection of decomposed sub-series, the design of convolution kernel and pooling operators.

2. Related Works

2.1. Complementary Ensemble Empirical Mode Decomposition

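- (1) Add a pair of equal-amplitude, opposite-phase white noises to the signal $x(t)$ to obtain the sequences
$$x_i^{+}(t) = x(t) + n_i(t), \qquad x_i^{-}(t) = x(t) - n_i(t),$$
where $n_i(t)$ is the white noise added in the $i$th stage, and $x_i^{+}(t)$ and $x_i^{-}(t)$ denote the sequences after adding noise in the $i$th stage.

- (2) Decompose the noise-added sequence $x_i^{+}(t)$ with EMD to obtain the IMF components $c_{ij}^{+}(t)$, $j = 1, \ldots, m$, and the residual trend $r_i^{+}(t)$.

- (3) In the same way, decompose $x_i^{-}(t)$, the sequence with the opposite-sign noise from step (1), to obtain the components $c_{ij}^{-}(t)$ and the residual $r_i^{-}(t)$.

- (4) Repeat steps (1)–(3) $n$ times, each with a new white noise realization, to obtain $n$ pairs of decompositions.

- (5) The final result is the average of the components obtained from all $2n$ decompositions with positive and negative noise, i.e.,
$$c_j(t) = \frac{1}{2n}\sum_{i=1}^{n}\left(c_{ij}^{+}(t) + c_{ij}^{-}(t)\right), \qquad r(t) = \frac{1}{2n}\sum_{i=1}^{n}\left(r_i^{+}(t) + r_i^{-}(t)\right).$$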
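The five steps above can be sketched in NumPy as follows. This is a simplified illustration, not the authors' implementation: the inner EMD builds envelopes by linear interpolation of local extrema (standard EMD uses cubic splines), runs a fixed number of sifting iterations instead of a stopping criterion, and extracts a fixed number of IMFs (two, matching the two IMFs plus residue used in Section 3.1). The noise level `noise_std` and the number of noise pairs `n_pairs` are hypothetical values. Because each EMD call here returns components that sum exactly to its input, the complementary noise pairs cancel and the averaged IMFs plus residue reconstruct the original signal.

```python
import numpy as np

def sift(h, n_sift=10):
    """Extract one IMF candidate by repeatedly subtracting the mean envelope.
    Envelopes use linear interpolation (a simplification of cubic-spline EMD)."""
    h = h.copy()
    t = np.arange(len(h))
    for _ in range(n_sift):
        d = np.diff(h)
        maxima = np.flatnonzero((d[:-1] > 0) & (d[1:] <= 0)) + 1
        minima = np.flatnonzero((d[:-1] < 0) & (d[1:] >= 0)) + 1
        if len(maxima) < 2 or len(minima) < 2:
            break  # too few extrema to build envelopes
        upper = np.interp(t, maxima, h[maxima])
        lower = np.interp(t, minima, h[minima])
        h = h - (upper + lower) / 2.0
    return h

def emd(x, num_imfs=2, n_sift=10):
    """Return `num_imfs` IMFs and a residue; IMFs + residue == x by construction."""
    residue = np.asarray(x, dtype=float).copy()
    imfs = []
    for _ in range(num_imfs):
        imf = sift(residue, n_sift)
        imfs.append(imf)
        residue = residue - imf
    return np.array(imfs), residue

def ceemd(x, num_imfs=2, n_pairs=50, noise_std=0.2, seed=0):
    """Average EMD results over n_pairs of complementary (+/-) white-noise copies."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    noise_scale = noise_std * np.std(x)  # hypothetical noise amplitude
    imf_sum = np.zeros((num_imfs, len(x)))
    res_sum = np.zeros(len(x))
    for _ in range(n_pairs):                              # step (4): repeat n times
        n_i = rng.normal(0.0, noise_scale, size=len(x))   # step (1): noise n_i(t)
        for noisy in (x + n_i, x - n_i):                  # steps (2) and (3)
            imfs, r = emd(noisy, num_imfs)
            imf_sum += imfs
            res_sum += r
    # step (5): average over all 2 * n_pairs decompositions
    return imf_sum / (2 * n_pairs), res_sum / (2 * n_pairs)

if __name__ == "__main__":
    t = np.linspace(0, 1, 500)
    x = np.sin(2 * np.pi * 40 * t) + 0.5 * np.sin(2 * np.pi * 4 * t) + t
    imfs, residue = ceemd(x)
    print(imfs.shape)  # (2, 500): two IMFs; `residue` is the third sub-series
```

In the usage example, the first IMF should mostly carry the 40 Hz component, the second the 4 Hz component and the residue the linear trend, although the simplified sifting makes this separation approximate.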

2.2. MultiRocket

3. The Proposed CEEMD-MultiRocket

3.1. CEEMD and Sub-Series Selection

- (1) Use CEEMD to decompose each raw time series into two IMFs and a residue. For convenience, we refer to all three as IMFs.

- (2) Use stratified sampling to split the original training set, and each of its three corresponding IMF sets, into a new training set and a new testing set at a 1:1 ratio, ensuring that both new sets contain every label.

- (3) Train improved MultiRocket on the new training set of raw time series and compute its classification accuracy $acc_0$ on the new testing set. Repeat this for each IMF to obtain the testing accuracies $acc_k$, $k = 1, 2, 3$.

- (4) Select the IMFs whose testing accuracies exceed $\theta \cdot acc_0$ as the inputs of improved MultiRocket. We refer to these as the selected IMFs throughout the paper; they may comprise 0–3 of the sub-series generated by CEEMD. The threshold $\theta$ is set to 0.9 by default.
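The four selection steps can be sketched as follows. Several parts are assumptions: a nearest-centroid classifier stands in for improved MultiRocket (any function with the same `(X_train, y_train, X_test, y_test) -> accuracy` signature could be substituted), the stratified 1:1 split is hand-rolled with NumPy, and the selection rule is written as $acc_k > \theta \cdot acc_0$ with $\theta = 0.9$. The function names `stratified_half_split`, `nearest_centroid_accuracy` and `select_imfs` are hypothetical.

```python
import numpy as np

def stratified_half_split(y, seed=0):
    """1:1 split that keeps every label on both sides (needs >= 2 samples per label)."""
    rng = np.random.default_rng(seed)
    train_idx, test_idx = [], []
    for label in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == label))
        half = max(1, len(idx) // 2)
        train_idx.extend(idx[:half])
        test_idx.extend(idx[half:])
    return np.array(train_idx), np.array(test_idx)

def nearest_centroid_accuracy(X_tr, y_tr, X_te, y_te):
    """Stand-in classifier (hypothetical): assign each test series to the closest class mean."""
    labels = np.unique(y_tr)
    centroids = np.stack([X_tr[y_tr == c].mean(axis=0) for c in labels])
    dists = ((X_te[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    pred = labels[np.argmin(dists, axis=1)]
    return float(np.mean(pred == y_te))

def select_imfs(X_raw, imf_list, y, theta=0.9, score=nearest_centroid_accuracy, seed=0):
    """Return indices of IMFs whose accuracy exceeds theta * accuracy of the raw series."""
    tr, te = stratified_half_split(y, seed)  # same split for raw series and every IMF
    acc_raw = score(X_raw[tr], y[tr], X_raw[te], y[te])
    selected = []
    for k, X_imf in enumerate(imf_list):
        acc_k = score(X_imf[tr], y[tr], X_imf[te], y[te])
        if acc_k > theta * acc_raw:
            selected.append(k)
    return selected, acc_raw
```

Reusing one split for the raw series and all IMFs keeps the accuracies comparable, which is what the relative threshold relies on.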

3.2. Improved MultiRocket

3.2.1. Convolution Kernels

- Kernel length and weight setting: To keep the computational complexity as low as possible, the number of convolution kernels should be as small as possible [37]. CEEMD-MultiRocket therefore employs 15 convolution kernels of length 6 instead of the 84 kernels of length 9 used in the original MultiRocket. The kernel weights are restricted to two values, $\alpha$ and $\beta$, so there are $2^6 = 64$ possible two-valued kernels of length 6. Improved MultiRocket uses the subset of kernels in which exactly two weights take the value $\beta$, which gives $\binom{6}{2} = 15$ fixed kernels and strikes a good balance between computational efficiency and classification accuracy. We set $\alpha = -1$ and $\beta = 2$, so every kernel sums to zero ($4 \times (-1) + 2 \times 2 = 0$). Scaling $\alpha$ and $\beta$ by the same factor, so that $\beta = -2\alpha$ still holds, has no effect on the results, because the biases and features are extracted from the convolutional output [37]. Since 15 kernels are less than a fifth of the 84 used by the original MultiRocket, this substantially reduces the computational load.

- Dilation: All kernels share the same fixed set of dilations. The number of dilations per kernel, $n$, depends on the total number of features $f$ (50 k by default). Dilations are taken from $\{\lfloor 2^{0} \rfloor, \ldots, \lfloor 2^{\max} \rfloor\}$, where the exponents are spaced uniformly between 0 and $\max = \log_2\left((l_{\text{input}} - 1)/(l_{\text{kernel}} - 1)\right)$, $l_{\text{kernel}}$ is the kernel length and $l_{\text{input}}$ is the length of the input time series.

- Bias: Bias values are determined from the convolutional outputs of each kernel/dilation combination. For a given combination, we randomly select a training example, compute its convolutional output, and take several quantiles of that output as biases, where the quantiles are drawn from a low-discrepancy sequence.

- Padding: Padding alternates across kernel/dilation combinations, so half of the combinations use padding and half do not.
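The kernel, dilation, bias and padding rules above can be combined into a compact NumPy sketch of a MiniRocket-style transform with the improved MultiRocket kernel setting (15 kernels of length 6, $\alpha = -1$, $\beta = 2$). It is illustrative only: the number of dilations (`n_dilations`) and of biases per combination (`n_biases`) are hypothetical values rather than those derived from the 50 k feature budget, the low-discrepancy sequence is assumed to be the golden-ratio sequence, only the PPV pooling operator is computed, and the first-order-difference input is omitted.

```python
import itertools
import numpy as np

KERNEL_LENGTH = 6        # improved MultiRocket kernel length
ALPHA, BETA = -1.0, 2.0  # the two weight values

def make_kernels(length=KERNEL_LENGTH, num_beta=2):
    """All kernels with exactly `num_beta` weights equal to BETA, the rest ALPHA."""
    kernels = []
    for positions in itertools.combinations(range(length), num_beta):
        w = np.full(length, ALPHA)
        w[list(positions)] = BETA
        kernels.append(w)
    return np.array(kernels)  # shape (15, 6): C(6, 2) = 15 kernels

def make_dilations(series_length, n_dilations=8, length=KERNEL_LENGTH):
    """Dilations floor(2**e), e evenly spaced in [0, log2((l_input-1)/(l_kernel-1))]."""
    max_exp = np.log2((series_length - 1) / (length - 1))
    return np.unique(np.floor(2.0 ** np.linspace(0.0, max_exp, n_dilations)).astype(int))

def dilated_conv(x, w, d, pad):
    """Dilated convolution; with zero padding the output keeps the input length."""
    span = (len(w) - 1) * d
    if pad:
        left = span // 2
        x = np.concatenate([np.zeros(left), x, np.zeros(span - left)])
    n_out = len(x) - span
    out = np.zeros(n_out)
    for j, wj in enumerate(w):
        out += wj * x[j * d : j * d + n_out]
    return out

def fit_biases(X, kernels, dilations, n_biases=4, seed=0):
    """Per kernel/dilation combination: biases are quantiles of the convolutional
    output of one randomly chosen training example."""
    rng = np.random.default_rng(seed)
    golden = (np.sqrt(5.0) + 1.0) / 2.0
    quantiles = (np.arange(1, n_biases + 1) * golden) % 1.0  # low-discrepancy sequence
    params = []
    for combo, (d, w) in enumerate(itertools.product(dilations, kernels)):
        pad = combo % 2 == 0  # alternate padding: half the combinations use it
        example = X[rng.integers(len(X))]
        biases = np.quantile(dilated_conv(example, w, d, pad), quantiles)
        params.append((w, d, pad, biases))
    return params

def transform_ppv(X, params):
    """PPV (proportion of positive values) for every kernel/dilation/bias triple."""
    rows = []
    for x in X:
        row = []
        for w, d, pad, biases in params:
            out = dilated_conv(x, w, d, pad)
            row.extend((out[:, None] > biases[None, :]).mean(axis=0))
        rows.append(row)
    return np.array(rows)

if __name__ == "__main__":
    X = np.random.default_rng(0).normal(size=(5, 64))
    params = fit_biases(X, make_kernels(), make_dilations(X.shape[1]))
    print(transform_ppv(X, params).shape)  # (5, 15 * n_dilations_kept * 4)
```

The feature count grows as kernels × dilations × biases × pooling operators, which is why reducing the kernel count from 84 to 15 frees budget for more dilations or biases at a fixed total of features.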

3.2.2. Pooling Operators
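The example convolutional outputs A, B and C in the pooling-operator table can be reproduced with a minimal NumPy sketch. PPV, MPV, MIPV and LSPV follow their MultiRocket definitions (proportion of positive values, mean of positive values, mean of indices of positive values, longest stretch of positive values). For NSPV, the sketch assumes it counts the positive stretches whose length equals LSPV; this reading is inferred because it reproduces all three NSPV values in the table (3, 1 and 2). The fallback values used when no output is positive are also assumptions. Indices are 0-based, which matches the tabulated MIPV of 5.5.

```python
import numpy as np

def positive_runs(z):
    """Lengths of maximal runs of consecutive positive values."""
    runs, current = [], 0
    for v in z:
        if v > 0:
            current += 1
        elif current:
            runs.append(current)
            current = 0
    if current:
        runs.append(current)
    return runs

def pooling_features(z):
    """Five pooling operators on one convolutional output (bias already subtracted)."""
    z = np.asarray(z, dtype=float)
    pos = z > 0
    idx = np.flatnonzero(pos)
    runs = positive_runs(z)
    lspv = max(runs) if runs else 0
    return {
        "PPV": float(pos.mean()),                         # proportion of positive values
        "MPV": float(z[pos].mean()) if idx.size else 0.0, # mean of positive values
        "MIPV": float(idx.mean()) if idx.size else -1.0,  # mean index of positive values
        "LSPV": lspv,                                     # longest positive stretch
        "NSPV": runs.count(lspv) if runs else 0,          # assumed: number of longest stretches
    }

if __name__ == "__main__":
    A = [1, 1, 0, 0, 0, 1, 1, 0, 0, 0, 1, 1]
    print(pooling_features(A))
    # {'PPV': 0.5, 'MPV': 1.0, 'MIPV': 5.5, 'LSPV': 2, 'NSPV': 3}
```

The table illustrates why an extra operator helps: A, B and C share identical PPV, MPV, MIPV and LSPV values, and only NSPV separates them.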

3.3. Feature Extraction
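As stated in contribution (1) of the Introduction, features are derived from the raw time series, the decomposed sub-series and the first-order difference of the raw time series. A minimal sketch of assembling these inputs, assuming a hypothetical `transform` callable that maps an `(n_series, length)` array to a feature matrix: `toy_transform` is a placeholder rather than the improved MultiRocket transform, and details such as whether biases are fitted separately for each input are not specified in this section.

```python
import numpy as np

def build_inputs(X_raw, imfs, selected):
    """Collect the series the kernel transform is applied to.

    X_raw:    (n_series, length) raw series
    imfs:     (3, n_series, length) array holding the two IMFs and the residue
    selected: indices of the IMFs kept by the sub-series selection step
    """
    inputs = [X_raw, np.diff(X_raw, axis=1)]  # raw series and first-order difference
    inputs += [imfs[k] for k in selected]     # selected sub-series
    return inputs

def extract_features(inputs, transform):
    """Apply the (hypothetical) transform to each input and concatenate the features."""
    return np.hstack([transform(X) for X in inputs])

def toy_transform(X):
    """Placeholder transform: per-series mean and standard deviation."""
    return np.column_stack([X.mean(axis=1), X.std(axis=1)])
```

With no IMF selected the inputs reduce to the raw series and its difference, which are the two representations the original MultiRocket already uses.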

4. Experimental Results

4.1. Datasets

4.2. Experimental Settings

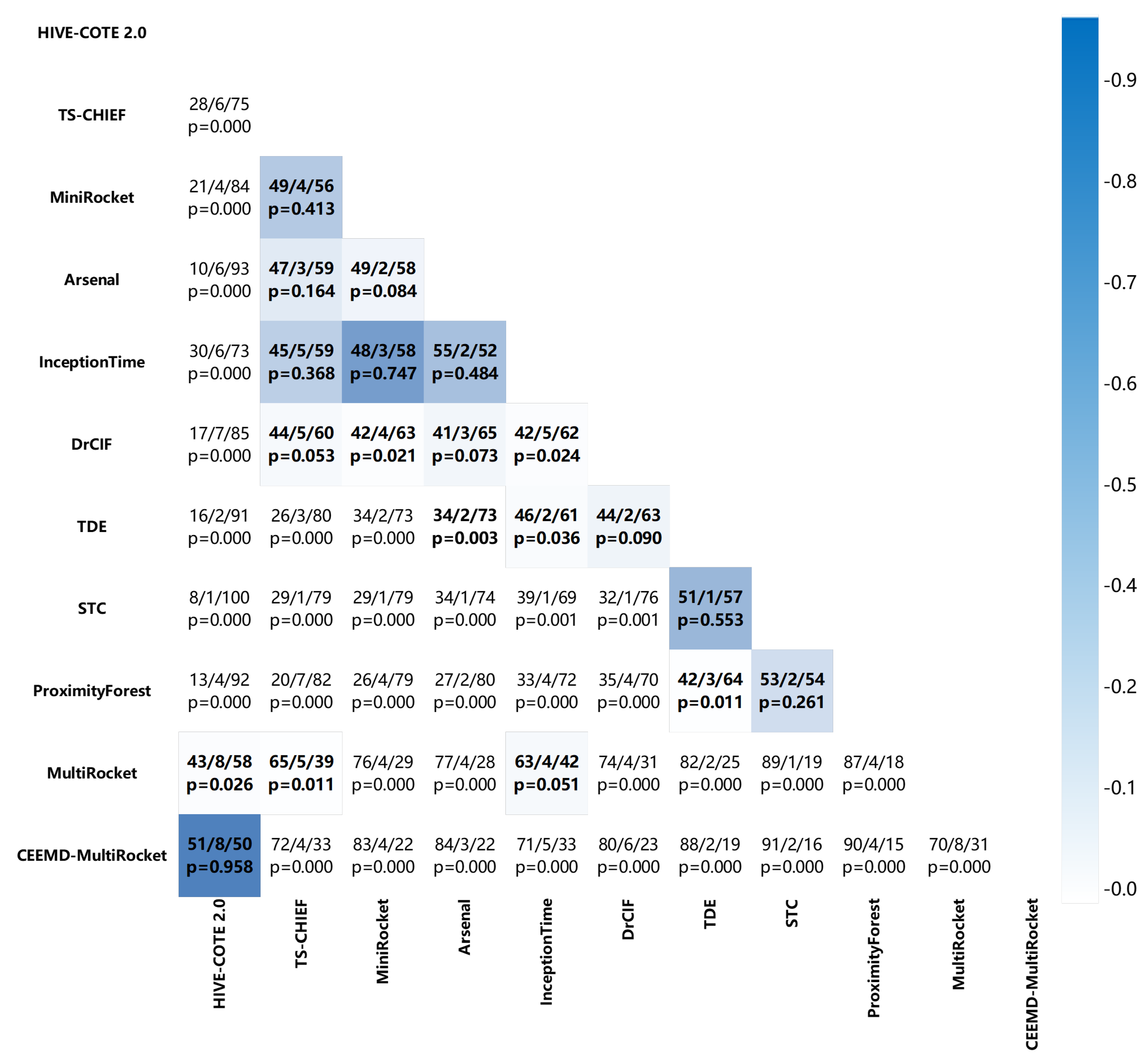

4.3. Results and Analysis

4.3.1. Classification Results

4.3.2. Runtime Analysis

5. Discussion

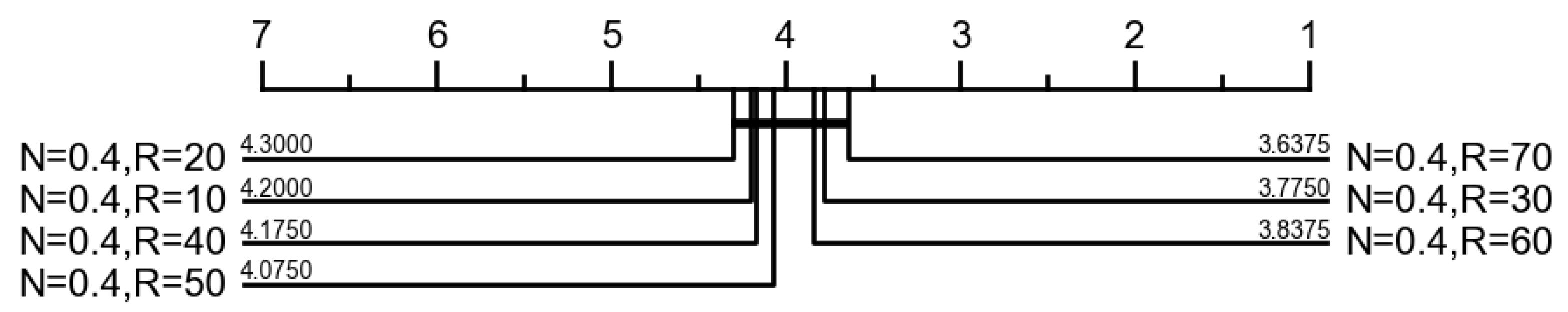

5.1. CEEMD Parameter Settings

5.2. Sub-Series Selection

5.3. Convolution Kernel Design

5.4. Pooling Operators

5.5. Summary

- (1) Decomposing raw time series into sub-series and extracting features from them yields more detailed and discriminative information from several perspectives, which contributes significantly to classification accuracy.

- (2) Selecting the more informative sub-series and pruning redundant and less important ones both improves classification performance and reduces computational complexity.

- (3) The optimized convolution kernel design yields a more efficient transform, which helps improve overall classification accuracy.

- (4) The additional pooling operator NSPV enhances the discriminative power of the derived features.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Rezaei, S.; Liu, X. Deep learning for encrypted traffic classification: An overview. IEEE Commun. Mag. 2019, 57, 76–81.

- Susto, G.A.; Cenedese, A.; Terzi, M. Time-series classification methods: Review and applications to power systems data. Big Data Appl. Power Syst. 2018, 179–220.

- Li, T.; Qian, Z.; Deng, W.; Zhang, D.; Lu, H.; Wang, S. Forecasting crude oil prices based on variational mode decomposition and random sparse Bayesian learning. Appl. Soft Comput. 2021, 113, 108032.

- Chao, L.; Zhipeng, J.; Yuanjie, Z. A novel reconstructed training-set SVM with roulette cooperative coevolution for financial time series classification. Expert Syst. Appl. 2019, 123, 283–298.

- Ebrahimi, Z.; Loni, M.; Daneshtalab, M.; Gharehbaghi, A. A review on deep learning methods for ECG arrhythmia classification. Expert Syst. Appl. X 2020, 7, 100033.

- Wu, J.; Zhou, T.; Li, T. Detecting epileptic seizures in EEG signals with complementary ensemble empirical mode decomposition and extreme gradient boosting. Entropy 2020, 22, 140.

- Craik, A.; He, Y.; Contreras-Vidal, J.L. Deep learning for electroencephalogram (EEG) classification tasks: A review. J. Neural Eng. 2019, 16, 031001.

- Liu, Y.; Wu, Y.F. Early detection of fake news on social media through propagation path classification with recurrent and convolutional networks. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Pantiskas, L.; Verstoep, K.; Hoogendoorn, M.; Bal, H. Taking ROCKET on an efficiency mission: Multivariate time series classification with LightWaves. arXiv 2022, arXiv:2204.01379.

- Nishikawa, Y.; Sannomiya, N.; Itakura, H. A method for suboptimal design of nonlinear feedback systems. Automatica 1971, 7, 703–712.

- Lucas, B.; Shifaz, A.; Pelletier, C.; O’Neill, L.; Zaidi, N.; Goethals, B.; Petitjean, F.; Webb, G.I. Proximity forest: An effective and scalable distance-based classifier for time series. Data Min. Knowl. Discov. 2019, 33, 607–635.

- Flynn, M.; Large, J.; Bagnall, T. The contract random interval spectral ensemble (c-RISE): The effect of contracting a classifier on accuracy. In Proceedings of the International Conference on Hybrid Artificial Intelligence Systems; Springer: Berlin/Heidelberg, Germany, 2019; pp. 381–392.

- Deng, H.; Runger, G.; Tuv, E.; Vladimir, M. A time series forest for classification and feature extraction. Inf. Sci. 2013, 239, 142–153.

- Middlehurst, M.; Large, J.; Bagnall, A. The canonical interval forest (CIF) classifier for time series classification. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; pp. 188–195.

- Lubba, C.H.; Sethi, S.S.; Knaute, P.; Schultz, S.R.; Fulcher, B.D.; Jones, N.S. catch22: CAnonical Time-series CHaracteristics: Selected through highly comparative time-series analysis. Data Min. Knowl. Discov. 2019, 33, 1821–1852.

- Schäfer, P. The BOSS is concerned with time series classification in the presence of noise. Data Min. Knowl. Discov. 2015, 29, 1505–1530.

- Middlehurst, M.; Large, J.; Cawley, G.; Bagnall, A. The temporal dictionary ensemble (TDE) classifier for time series classification. In Proceedings of the Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2020, Ghent, Belgium, 14–18 September 2020; Part I. Springer: Berlin/Heidelberg, Germany, 2021; pp. 660–676.

- Bagnall, A.; Lines, J.; Bostrom, A.; Large, J.; Keogh, E. The great time series classification bake off: A review and experimental evaluation of recent algorithmic advances. Data Min. Knowl. Discov. 2017, 31, 606–660.

- Lines, J.; Davis, L.M.; Hills, J.; Bagnall, A. A shapelet transform for time series classification. In Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Beijing, China, 12–16 August 2012; pp. 289–297.

- Bostrom, A.; Bagnall, A. Binary shapelet transform for multiclass time series classification. In Transactions on Large-Scale Data- and Knowledge-Centered Systems XXXII: Special Issue on Big Data Analytics and Knowledge Discovery; Springer: Berlin/Heidelberg, Germany, 2017; pp. 24–46.

- Bagnall, A.; Flynn, M.; Large, J.; Lines, J.; Middlehurst, M. On the usage and performance of the hierarchical vote collective of transformation-based ensembles version 1.0 (hive-cote v1.0). In Proceedings of the Advanced Analytics and Learning on Temporal Data: 5th ECML PKDD Workshop, AALTD 2020, Ghent, Belgium, 18 September 2020; Revised Selected Papers 6. Springer: Berlin/Heidelberg, Germany, 2020; pp. 3–18.

- Middlehurst, M.; Large, J.; Flynn, M.; Lines, J.; Bostrom, A.; Bagnall, A. HIVE-COTE 2.0: A new meta ensemble for time series classification. Mach. Learn. 2021, 110, 3211–3243.

- Ismail Fawaz, H.; Lucas, B.; Forestier, G.; Pelletier, C.; Schmidt, D.F.; Weber, J.; Webb, G.I.; Idoumghar, L.; Muller, P.A.; Petitjean, F. Inceptiontime: Finding alexnet for time series classification. Data Min. Knowl. Discov. 2020, 34, 1936–1962.

- Shifaz, A.; Pelletier, C.; Petitjean, F.; Webb, G.I. TS-CHIEF: A scalable and accurate forest algorithm for time series classification. Data Min. Knowl. Discov. 2020, 34, 742–775.

- Längkvist, M.; Karlsson, L.; Loutfi, A. A review of unsupervised feature learning and deep learning for time-series modeling. Pattern Recognit. Lett. 2014, 42, 11–24.

- Bengio, Y.; Yao, L.; Alain, G.; Vincent, P. Generalized denoising auto-encoders as generative models. Adv. Neural Inf. Process. Syst. 2013, 26.

- Hu, Q.; Zhang, R.; Zhou, Y. Transfer learning for short-term wind speed prediction with deep neural networks. Renew. Energy 2016, 85, 83–95.

- Gallicchio, C.; Micheli, A. Deep echo state network (deepesn): A brief survey. arXiv 2017, arXiv:1712.04323.

- Pascanu, R.; Mikolov, T.; Bengio, Y. Understanding the exploding gradient problem. arXiv 2012, arXiv:1211.5063.

- Ismail Fawaz, H.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.A. Deep learning for time series classification: A review. Data Min. Knowl. Discov. 2019, 33, 917–963.

- Hatami, N.; Gavet, Y.; Debayle, J. Classification of time-series images using deep convolutional neural networks. In Proceedings of the Tenth International Conference on Machine Vision (ICMV 2017), Vienna, Austria, 13–15 November 2017; SPIE: Bellingham, WA, USA, 2018; Volume 10696, pp. 242–249.

- Tripathy, R.; Acharya, U.R. Use of features from RR-time series and EEG signals for automated classification of sleep stages in deep neural network framework. Biocybern. Biomed. Eng. 2018, 38, 890–902.

- Wang, Z.; Oates, T. Imaging time-series to improve classification and imputation. In Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015.

- Nweke, H.F.; Teh, Y.W.; Al-Garadi, M.A.; Alo, U.R. Deep learning algorithms for human activity recognition using mobile and wearable sensor networks: State of the art and research challenges. Expert Syst. Appl. 2018, 105, 233–261.

- Tan, C.W.; Dempster, A.; Bergmeir, C.; Webb, G.I. MultiRocket: Multiple pooling operators and transformations for fast and effective time series classification. Data Min. Knowl. Discov. 2022, 36, 1623–1646.

- Dempster, A.; Petitjean, F.; Webb, G.I. ROCKET: Exceptionally fast and accurate time series classification using random convolutional kernels. Data Min. Knowl. Discov. 2020, 34, 1454–1495.

- Dempster, A.; Schmidt, D.F.; Webb, G.I. Minirocket: A very fast (almost) deterministic transform for time series classification. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Singapore, 14–18 August 2021; pp. 248–257.

- Torres, M.E.; Colominas, M.A.; Schlotthauer, G.; Flandrin, P. A complete ensemble empirical mode decomposition with adaptive noise. In Proceedings of the 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 22–27 May 2011; pp. 4144–4147.

- Zhou, Y.; Li, T.; Shi, J.; Qian, Z. A CEEMDAN and XGBOOST-based approach to forecast crude oil prices. Complexity 2019, 2019, 1–15.

- Li, T.; Qian, Z.; He, T. Short-term load forecasting with improved CEEMDAN and GWO-based multiple kernel ELM. Complexity 2020, 2020, 1–20.

- Wu, Z.; Huang, N.E. Ensemble empirical mode decomposition: A noise-assisted data analysis method. Adv. Adapt. Data Anal. 2009, 1, 1–41.

- Huang, N.E.; Shen, Z.; Long, S.R.; Wu, M.C.; Shih, H.H.; Zheng, Q.; Yen, N.C.; Tung, C.C.; Liu, H.H. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc. R. Soc. London. Ser. A Math. Phys. Eng. Sci. 1998, 454, 903–995.

- Li, S.; Zhou, W.; Yuan, Q.; Geng, S.; Cai, D. Feature extraction and recognition of ictal EEG using EMD and SVM. Comput. Biol. Med. 2013, 43, 807–816.

- Tang, B.; Dong, S.; Song, T. Method for eliminating mode mixing of empirical mode decomposition based on the revised blind source separation. Signal Process. 2012, 92, 248–258.

- Wu, J.; Chen, Y.; Zhou, T.; Li, T. An adaptive hybrid learning paradigm integrating CEEMD, ARIMA and SBL for crude oil price forecasting. Energies 2019, 12, 1239.

- Li, T.; Zhou, M. ECG classification using wavelet packet entropy and random forests. Entropy 2016, 18, 285.

- Dau, H.A.; Bagnall, A.; Kamgar, K.; Yeh, C.C.M.; Zhu, Y.; Gharghabi, S.; Ratanamahatana, C.A.; Keogh, E. The UCR time series archive. IEEE/CAA J. Autom. Sin. 2019, 6, 1293–1305.

- Chai, J.; Wang, Y.; Wang, S.; Wang, Y. A decomposition–integration model with dynamic fuzzy reconstruction for crude oil price prediction and the implications for sustainable development. J. Clean. Prod. 2019, 229, 775–786.

- Demšar, J. Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 2006, 7, 1–30.

| | Rocket | MiniRocket | MultiRocket |
|---|---|---|---|
| kernel length | 7, 9, 11 | 9 | 9 |
| weights | | −1, 2 | −1, 2 |
| bias | | from convolutional output | from convolutional output |
| dilation | random | fixed ($\lfloor 2^{0} \rfloor, \cdots, \lfloor 2^{\max} \rfloor$) | fixed ($\lfloor 2^{0} \rfloor, \cdots, \lfloor 2^{\max} \rfloor$) |
| padding | random | fixed | fixed |
| pooling operators | PPV, MAX | PPV | PPV, MPV, MIPV, LSPV |
| num. features | 20 K | 10 K | 50 K |

| | MultiRocket | Improved MultiRocket |
|---|---|---|
| kernel length | 9 | 6 |
| num. kernels | 84 | 15 |
| weights | −1, 2 | −1, 2 |
| bias | from convolutional output | from convolutional output |
| dilation | fixed ($\lfloor 2^{0} \rfloor, \cdots, \lfloor 2^{\max} \rfloor$) | fixed ($\lfloor 2^{0} \rfloor, \cdots, \lfloor 2^{\max} \rfloor$) |
| padding | fixed | fixed |
| pooling operators | PPV, MPV, MIPV, LSPV | PPV, MPV, MIPV, LSPV, NSPV |
| num. features | 50 K | 50 K |

| Convolutional Outputs | PPV | MPV | MIPV | LSPV | NSPV |
|---|---|---|---|---|---|
| A = [1, 1, 0, 0, 0, 1, 1, 0, 0, 0, 1, 1] | 0.5 | 1 | 5.5 | 2 | 3 |
| B = [1, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 1] | 0.5 | 1 | 5.5 | 2 | 1 |
| C = [1, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 1] | 0.5 | 1 | 5.5 | 2 | 2 |

| TSC Algorithm | Total Training Time |
|---|---|
| MiniRocket (10 k features) | 3.61 min |
| MultiRocket (50 k features) | 23.65 min |
| Rocket (20 k features) | 4.25 h |
| CEEMD-MultiRocket (50 k features) | 4.88 h |
| Arsenal | 27.91 h |
| DrCIF | 45.40 h |
| TDE | 75.41 h |
| STC | 115.88 h |
| HIVE-COTE 2.0 | 340.21 h |
| HIVE-COTE 1.0 | 427.18 h |
| TS-CHIEF | 1016.87 h |

| Threshold | 0 IMFs selected | 1 IMF selected | 2 IMFs selected | 3 IMFs selected |
|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 109 |
| 0.3 | 0 | 3 | 3 | 103 |
| 0.5 | 0 | 14 | 7 | 88 |
| 0.7 | 0 | 31 | 13 | 65 |
| 0.8 | 1 | 40 | 22 | 46 |
| 0.9 | 16 | 48 | 17 | 28 |
| 1 | 60 | 31 | 11 | 7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, P.; Wu, J.; Wei, Y.; Li, T. CEEMD-MultiRocket: Integrating CEEMD with Improved MultiRocket for Time Series Classification. Electronics 2023, 12, 1188. https://doi.org/10.3390/electronics12051188
