HRFP: Highly Relevant Frequent Patterns-Based Prefetching and Caching Algorithms for Distributed File Systems

Abstract

1. Introduction

- Proposed a highly relevant frequent patterns (HRFP)-based prefetching algorithm that prefetches file blocks according to pattern relevancy.

- Proposed a multi-level caching algorithm that efficiently fills the caches of the data nodes and of the global cache node with the prefetched file blocks.

- Introduced a novel relevancy-based replacement policy that replaces entries in the caches of the data nodes and the global cache node whenever space must be created for incoming patterns.

2. Related Work

2.1. Literature on Prefetching and Caching Techniques

2.2. Replacement Policies

3. Architecture of Distributed File System

4. Proposed Work

4.1. Identification of HRFPs

Algorithm 1: Identification of local HRFPs

Algorithm 2: Identification of global HRFPs
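The section gives only the titles of Algorithms 1 and 2, so the following is a minimal Python sketch of the general idea, not the authors' algorithms. It assumes that a pattern is a contiguous run of `length` block IDs in a Dnode's access log, that a pattern is locally frequent when it occurs at least `min_support` times, and that the Gnode forms global patterns by summing the local support counts. Raw support stands in for the paper's relevancy measure, which is not defined here; all function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Sequence


def local_frequent_patterns(access_log: Sequence[int], length: int, min_support: int) -> Counter:
    """Count each contiguous run of `length` block IDs in one Dnode's access log
    and keep the runs that occur at least `min_support` times (assumed rule)."""
    runs = (tuple(access_log[i:i + length]) for i in range(len(access_log) - length + 1))
    counts = Counter(runs)
    return Counter({p: c for p, c in counts.items() if c >= min_support})


def global_frequent_patterns(local_counts: Iterable[Counter], min_support: int) -> Counter:
    """At the Gnode, sum the support reported by every Dnode and re-apply the threshold."""
    total: Counter = Counter()
    for counts in local_counts:
        total.update(counts)  # Counter.update adds counts rather than replacing them
    return Counter({p: c for p, c in total.items() if c >= min_support})


def top_patterns(counts: Counter, k: int) -> list:
    """Rank patterns by support (a stand-in for relevancy) and keep the k best."""
    return [p for p, _ in counts.most_common(k)]
```

Summing counts that were already thresholded at each Dnode can miss patterns that are frequent only in aggregate; passing `min_support=1` at the Dnodes avoids this at the cost of sending more counts to the Gnode.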

4.2. The HRFP-Based Prefetching and Caching Algorithm

4.2.1. Prefetching and Caching in Dnodes

Algorithm 3: Prefetching and Caching in Dnodes

4.2.2. Prefetching and Caching in Gnode

Algorithm 4: Prefetching and Caching in Gnode
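Algorithms 3 and 4 are likewise given only by title. One plausible reading of the multi-level caching contribution is sketched below, assuming that LC is a node's in-memory local cache and SC its SSD-backed cache (suggested by the SSD latency listed in Section 5.1), that capacities are counted in blocks, and that more relevant patterns are placed in the faster tier first. `fill_tiers` and the relevancy scores it receives are hypothetical; the same routine could be applied at a Dnode with local HRFPs and at the Gnode with global HRFPs.

```python
from typing import Iterable


def fill_tiers(patterns: Iterable[tuple[tuple[int, ...], float]],
               lc_capacity: int, sc_capacity: int) -> tuple[list[int], list[int]]:
    """Place the blocks of (pattern, relevancy) pairs, most relevant first, into LC
    until it is full, then into SC. Returns the block IDs placed in each tier."""
    lc: list[int] = []
    sc: list[int] = []
    placed: set[int] = set()
    for pattern, _score in sorted(patterns, key=lambda ps: ps[1], reverse=True):
        for block in pattern:
            if block in placed:
                continue  # a block shared by several patterns is cached only once
            if len(lc) < lc_capacity:
                lc.append(block)
            elif len(sc) < sc_capacity:
                sc.append(block)
            else:
                return lc, sc  # both tiers are full: stop prefetching
            placed.add(block)
    return lc, sc
```

With patterns `((1, 2, 3), 0.5)`, `((4, 5), 0.9)` and `((3, 6), 0.7)`, an LC of three blocks and an SC of two blocks, the sketch places blocks 4, 5 and 3 in LC and blocks 6 and 1 in SC, and block 2 is not prefetched.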

4.3. Procedures for Read and Write in the DFS

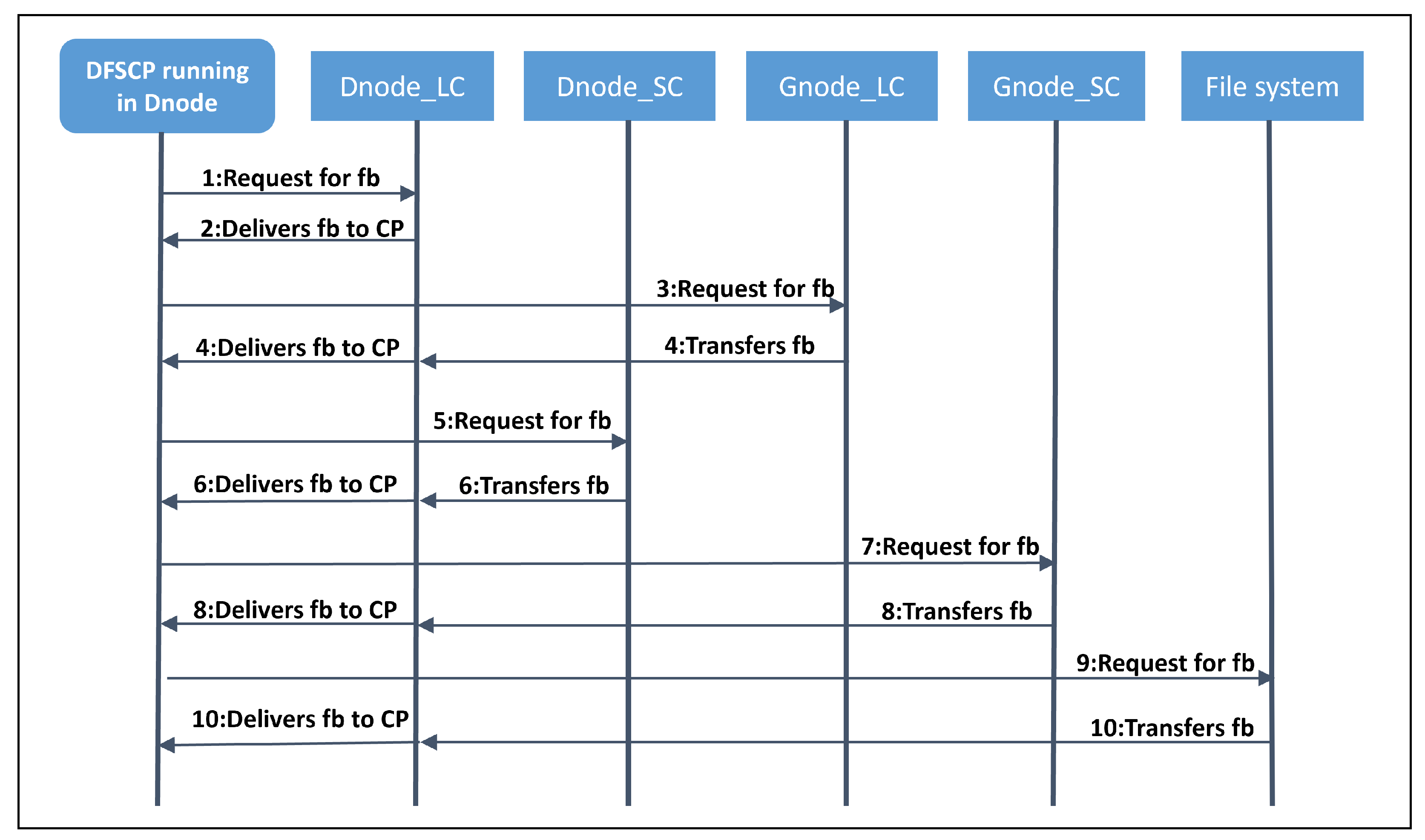

4.3.1. Procedure for Reading from DFS

- The DFS client software (DFSCP) installed in the Dnode communicates with the Nnode on behalf of the CP to obtain the addresses of the Dnodes where fb is stored.

- The Nnode returns the addresses of the Dnodes that store fb.

- After receiving the addresses from the Nnode, the DFSCP contacts a nearby Dnode to read fb and deliver it to the CP.

- fb is first looked up in Dnode_LC. If it is found there, fb is delivered to the CP.

- Otherwise, fb is looked up in Gnode_LC. If it is found there, fb is transferred to Dnode_LC and delivered to the CP.

- Otherwise, fb is looked up in Dnode_SC. If it is found there, fb is transferred to Dnode_LC and delivered to the CP.

- Otherwise, fb is looked up in Gnode_SC. If it is found there, fb is transferred to Dnode_LC and delivered to the CP.

- Otherwise, the Nnode is contacted and fb is read from the file system; fb is then transferred to Dnode_LC and delivered to the CP.
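The lookup order above can be sketched as follows. This is a minimal illustration, assuming each cache is a plain dictionary from block ID to data with no capacity limit, and that `read_from_fs` is a hypothetical callback standing in for the Nnode and file-system path; it leaves out replacement and the address lookup of the first three steps. `NodeCaches` and `read_block` are illustrative names.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class NodeCaches:
    """The four cache tiers named in the read procedure (block ID -> data)."""
    dnode_lc: dict[int, bytes] = field(default_factory=dict)
    gnode_lc: dict[int, bytes] = field(default_factory=dict)
    dnode_sc: dict[int, bytes] = field(default_factory=dict)
    gnode_sc: dict[int, bytes] = field(default_factory=dict)


def read_block(fb: int, caches: NodeCaches,
               read_from_fs: Callable[[int], bytes]) -> tuple[bytes, str]:
    """Return the block and the name of the tier that served it."""
    if fb in caches.dnode_lc:
        return caches.dnode_lc[fb], "Dnode_LC"
    # Remaining tiers, in the order the procedure checks them.
    for name, tier in (("Gnode_LC", caches.gnode_lc),
                       ("Dnode_SC", caches.dnode_sc),
                       ("Gnode_SC", caches.gnode_sc)):
        if fb in tier:
            caches.dnode_lc[fb] = tier[fb]  # copy into Dnode_LC, then deliver
            return tier[fb], name
    data = read_from_fs(fb)  # hypothetical stand-in for the Nnode / file-system path
    caches.dnode_lc[fb] = data
    return data, "file system"
```

Because every hit outside Dnode_LC is copied into Dnode_LC, a second read of the same block is served from Dnode_LC.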

Algorithm 5: Proposed read algorithm

4.3.2. Relevancy-Based Replacement Policy

Algorithm 6: Relevancy-based replacement policy
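Algorithm 6 is given only by title. The sketch below shows the general shape of a relevancy-driven eviction loop, assuming each cached pattern has a known size in blocks and a relevancy score supplied by the caller; how the paper computes relevancy is not reproduced here, and `make_room` is a hypothetical name. Patterns with the lowest score are evicted first until the incoming pattern fits.

```python
import heapq


def make_room(cache: dict[int, int], relevancy: dict[int, float],
              needed: int, capacity: int) -> list[int]:
    """Evict the least relevant patterns until `needed` more blocks fit.

    `cache` maps pattern ID -> size in blocks; `relevancy` maps pattern ID -> score.
    Mutates `cache` and returns the evicted pattern IDs in eviction order.
    """
    if needed > capacity:
        raise ValueError("incoming pattern is larger than the whole cache")
    used = sum(cache.values())
    heap = [(relevancy[pid], pid) for pid in cache]  # min-heap: lowest score pops first
    heapq.heapify(heap)
    evicted: list[int] = []
    while used + needed > capacity:
        _, victim = heapq.heappop(heap)
        used -= cache.pop(victim)
        evicted.append(victim)
    return evicted
```

The loop always terminates: once every pattern has been evicted, `used` is zero and the incoming pattern fits, because `needed <= capacity` was checked up front.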

4.3.3. Write Procedure

- On behalf of the CP running in the Dnode, the DFSCP communicates with the Nnode to retrieve the addresses of the Dnodes to which fb must be written.

- The Nnode returns the addresses of as many Dnodes as the replication factor requires.

- After obtaining the addresses, the CP writes the data to the Dnode on which the write was requested, and the data is then transferred to the remaining Dnodes in a pipelined fashion.

- If fb already exists, all existing entries for fb in the Dnode and Gnode caches are invalidated.

- If fb does not exist, the default write procedure is followed to write fb.
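The invalidation rule can be sketched as below, assuming the block store and every Dnode and Gnode cache are plain dictionaries keyed by block ID, that the default write (replication and pipelining) is reduced to a single assignment, and that the write itself proceeds in both cases, after invalidation when fb already exists. `write_block` is a hypothetical name; it returns how many cached copies it invalidated so the effect is easy to check.

```python
def write_block(fb: int, data: bytes, store: dict[int, bytes],
                caches: list[dict[int, bytes]]) -> int:
    """Write `fb`; if it already exists, first drop every cached copy.
    Returns the number of cached copies that were invalidated."""
    invalidated = 0
    if fb in store:  # overwrite: copies in the Dnode and Gnode caches are now stale
        for cache in caches:
            if fb in cache:
                del cache[fb]
                invalidated += 1
    store[fb] = data  # stands in for the default replicated, pipelined write
    return invalidated
```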

Algorithm 7: Proposed write algorithm

4.4. Re-Initiation of Prefetching

5. Simulation Experiments

5.1. List of Assumptions

- The DFS holds one lakh (100,000) files, each consisting of ten thousand file blocks.

- The file block size is 32 KB.

- Fetching a file block from the local cache of a Dnode takes 0.0008 milliseconds (ms) [50].

- Fetching a file block from the SSD of a Dnode takes 0.0104 ms [51].

- Reading a file block from the disk of a Dnode takes 3.5 ms [52].

- Reading a file block from remote memory takes 0.032 ms within the same rack and 0.045 ms from a different rack.

- The delay to transfer a file block from remote memory is 0.04 ms [53].
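The assumptions above imply 100,000 × 10,000 = 10^9 file blocks and, at 32 KB per block, 3.2 × 10^10 KB, or about 32 TB in decimal units. The short Python sketch below reproduces that arithmetic and computes an expected per-block read time for a given mix of tiers. The tier names and the hit fractions passed to the hypothetical `expected_read_ms` are illustrative inputs, not results from the paper, and the 0.04 ms remote transfer delay is left out because this section does not say how it combines with the remote read times.

```python
import math

FILES = 100_000           # one lakh files
BLOCKS_PER_FILE = 10_000
BLOCK_KB = 32

total_blocks = FILES * BLOCKS_PER_FILE   # 10**9 blocks
total_kb = total_blocks * BLOCK_KB       # 3.2 * 10**10 KB
total_tb = total_kb / 1e9                # decimal units: 1 TB = 10**9 KB

# Per-block read times in milliseconds, taken from the list of assumptions.
READ_MS = {
    "dnode_memory": 0.0008,
    "dnode_ssd": 0.0104,
    "dnode_disk": 3.5,
    "remote_memory_same_rack": 0.032,
    "remote_memory_other_rack": 0.045,
}


def expected_read_ms(fractions: dict[str, float]) -> float:
    """Weighted mean read time; `fractions` maps tier names to the share of reads they serve."""
    if not math.isclose(sum(fractions.values()), 1.0):
        raise ValueError("fractions must sum to 1")
    return sum(share * READ_MS[tier] for tier, share in fractions.items())
```

For example, if half the reads were served from Dnode memory and half from disk, the expected time would be 0.5 × 0.0008 + 0.5 × 3.5 = 1.7504 ms, which shows how strongly the disk term dominates unless most reads hit a cache.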

5.2. Simulation

5.2.1. Data Set Generation

5.2.2. Experimental Setup

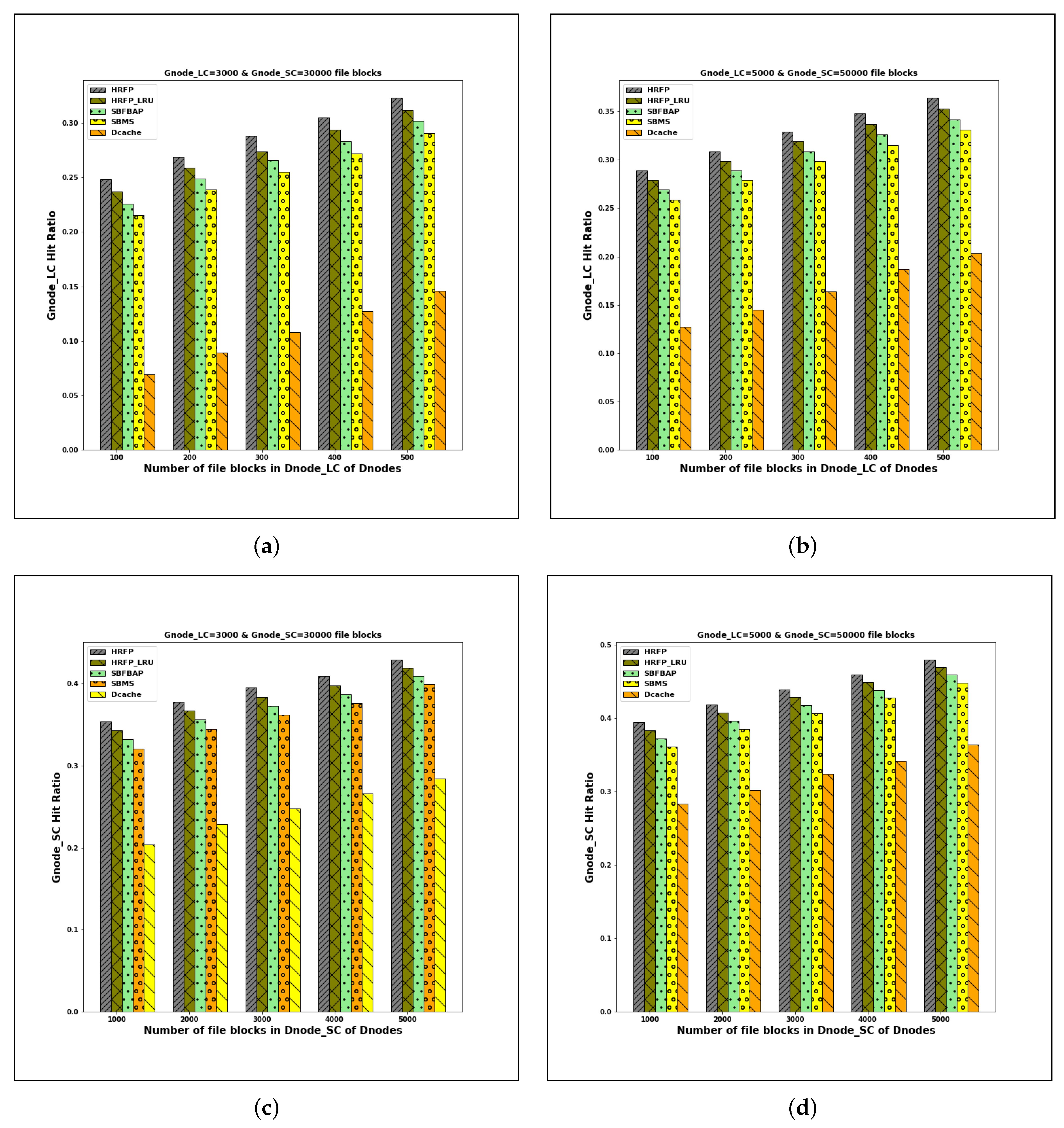

5.2.3. Experimental Results

5.2.4. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Stein, T.; Chen, E.; Mangla, K. Facebook immune system. In Proceedings of the 4th Workshop on Social Network Systems, Salzburg, Austria, 10–13 April 2011; pp. 1–8.

2. Wildani, A.; Adams, I.F. A case for rigorous workload classification. In Proceedings of the 2015 IEEE 23rd International Symposium on Modeling, Analysis, and Simulation of Computer and Telecommunication Systems, Atlanta, GA, USA, 5–7 October 2015; pp. 146–149.

3. Mittal, S. A survey of recent prefetching techniques for processor caches. ACM Comput. Surv. (CSUR) 2016, 49, 1–35.

4. Ali, W.; Shamsuddin, S.M.; Ismail, A.S. A survey of web caching and prefetching. Int. J. Adv. Soft Comput. Appl. 2011, 3, 18–44.

5. Kasavajhala, V. Solid state drive vs. hard disk drive price and performance study. Proc. Dell Tech. White Pap. 2011, 8–9. Available online: https://profesorweb.es/wp-content/uploads/2012/11/ssd_vs_hdd_price_and_performance_study-1.pdf (accessed on 11 November 2022).

6. Zhang, J.; Jiang, B. A kind of Metadata Prefetch Method for Distributed File System. In Proceedings of the 2021 International Conference on Big Data Analysis and Computer Science (BDACS), Kunming, China, 25–27 June 2021; pp. 115–121.

7. Chen, H.; Zhou, E.; Liu, J.; Zhang, Z. An RNN-based mechanism for file prefetching. In Proceedings of the 2019 18th International Symposium on Distributed Computing and Applications for Business Engineering and Science (DCABES), Wuhan, China, 8–10 November 2019; pp. 13–16.

8. Liao, J. Server-side prefetching in distributed file systems. Concurr. Comput. Pract. Exp. 2016, 28, 294–310.

9. Liao, J.; Trahay, F.; Gerofi, B.; Ishikawa, Y. Prefetching on storage servers through mining access patterns on blocks. IEEE Trans. Parallel Distrib. Syst. 2015, 27, 2698–2710.

10. Liao, J.; Trahay, F.; Xiao, G.; Li, L.; Ishikawa, Y. Performing initiative data prefetching in distributed file systems for cloud computing. IEEE Trans. Cloud Comput. 2015, 5, 550–562.

11. Chen, Y.; Li, C.; Lv, M.; Shao, X.; Li, Y.; Xu, Y. Explicit data correlations-directed metadata prefetching method in distributed file systems. IEEE Trans. Parallel Distrib. Syst. 2019, 30, 2692–2705.

12. Al Assaf, M.M.; Jiang, X.; Qin, X.; Abid, M.R.; Qiu, M.; Zhang, J. Informed prefetching for distributed multi-level storage systems. J. Signal Process. Syst. 2018, 90, 619–640.

13. Kougkas, A.; Devarajan, H.; Sun, X.H. I/O acceleration via multi-tiered data buffering and prefetching. J. Comput. Sci. Technol. 2020, 35, 92–120.

14. Gopisetty, R.; Ragunathan, T.; Bindu, C.S. Support-based prefetching technique for hierarchical collaborative caching algorithm to improve the performance of a distributed file system. In Proceedings of the 2015 Seventh International Symposium on Parallel Architectures, Algorithms and Programming (PAAP), Nanjing, China, 12–14 December 2015; pp. 97–103.

15. Gopisetty, R.; Ragunathan, T.; Bindu, C.S. Improving performance of a distributed file system using hierarchical collaborative global caching algorithm with rank-based replacement technique. Int. J. Commun. Netw. Distrib. Syst. 2021, 26, 287–318.

16. Jiang, S.; Ding, X.; Xu, Y.; Davis, K. A prefetching scheme exploiting both data layout and access history on disk. ACM Trans. Storage (TOS) 2013, 9, 1–23.

17. Li, Z.; Chen, Z.; Srinivasan, S.M.; Zhou, Y. C-Miner: Mining Block Correlations in Storage Systems. FAST 2004, 4, 173–186.

18. Theis, T.N.; Wong, H.S.P. The end of Moore's law: A new beginning for information technology. Comput. Sci. Eng. 2017, 19, 41–50.

19. Huang, S.; Wei, Q.; Feng, D.; Chen, J.; Chen, C. Improving flash-based disk cache with lazy adaptive replacement. ACM Trans. Storage (TOS) 2016, 12, 1–24.

20. Yoon, S.K.; Yun, J.; Kim, J.G.; Kim, S.D. Self-adaptive filtering algorithm with PCM-based memory storage system. ACM Trans. Embed. Comput. Syst. (TECS) 2018, 17, 1–23.

21. Yoon, S.K.; Youn, Y.S.; Burgstaller, B.; Kim, S.D. Self-learnable cluster-based prefetching method for DRAM-flash hybrid main memory architecture. ACM J. Emerg. Technol. Comput. Syst. (JETC) 2019, 15, 1–21.

22. Niu, N.; Fu, F.; Yang, B.; Yuan, J.; Lai, F.; Wang, J. WIRD: An efficiency migration scheme in hybrid DRAM and PCM main memory for image processing applications. IEEE Access 2019, 7, 35941–35951.

23. Byna, S.; Breitenfeld, M.S.; Dong, B.; Koziol, Q.; Pourmal, E.; Robinson, D.; Soumagne, J.; Tang, H.; Vishwanath, V.; Warren, R. ExaHDF5: Delivering efficient parallel I/O on exascale computing systems. J. Comput. Sci. Technol. 2020, 35, 145–160.

24. Yun, J.T.; Yoon, S.K.; Kim, J.G.; Kim, S.D. Effective data prediction method for in-memory database applications. J. Supercomput. 2020, 76, 580–601.

25. Herodotou, H. AutoCache: Employing machine learning to automate caching in distributed file systems. In Proceedings of the 2019 IEEE 35th International Conference on Data Engineering Workshops (ICDEW), Macao, China, 8–12 April 2019; pp. 133–139.

26. Yoshimura, T.; Chiba, T.; Horii, H. Column Cache: Buffer Cache for Columnar Storage on HDFS. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 282–291.

27. Zhang, X.; Liu, B.; Gou, Z.; Shi, J.; Zhao, X. DCache: A Distributed Cache Mechanism for HDFS based on RDMA. In Proceedings of the 2020 IEEE 22nd International Conference on High Performance Computing and Communications; IEEE 18th International Conference on Smart City; IEEE 6th International Conference on Data Science and Systems (HPCC/SmartCity/DSS), Cuvu, Fiji, 14–16 December 2020; pp. 283–291.

28. Laghari, A.A.; He, H.; Laghari, R.A.; Khan, A.; Yadav, R. Cache performance optimization of QoC framework. EAI Endorsed Trans. Scalable Inf. Syst. 2019.

29. Shin, W.; Brumgard, C.D.; Xie, B.; Vazhkudai, S.S.; Ghoshal, D.; Oral, S.; Ramakrishnan, L. Data Jockey: Automatic data management for HPC multi-tiered storage systems. In Proceedings of the 2019 IEEE International Parallel and Distributed Processing Symposium (IPDPS), Rio de Janeiro, Brazil, 20–24 May 2019; pp. 511–522.

30. Wadhwa, B.; Byna, S.; Butt, A.R. Toward transparent data management in multi-layer storage hierarchy of HPC systems. In Proceedings of the 2018 IEEE International Conference on Cloud Engineering (IC2E), Orlando, FL, USA, 17–20 April 2018; pp. 211–217.

31. He, S.; Wang, Y.; Li, Z.; Sun, X.H.; Xu, C. Cost-aware region-level data placement in multi-tiered parallel I/O systems. IEEE Trans. Parallel Distrib. Syst. 2016, 28, 1853–1865.

32. Ren, J.; Chen, X.; Liu, D.; Tan, Y.; Duan, M.; Li, R.; Liang, L. A machine learning assisted data placement mechanism for hybrid storage systems. J. Syst. Archit. 2021, 120, 102295.

33. Thomas, L.; Gougeaud, S.; Rubini, S.; Deniel, P.; Boukhobza, J. Predicting file lifetimes for data placement in multi-tiered storage systems for HPC. In Proceedings of the Workshop on Challenges and Opportunities of Efficient and Performant Storage Systems, Online Event, 26 April 2021; pp. 1–9.

34. Shi, W.; Cheng, P.; Zhu, C.; Chen, Z. An intelligent data placement strategy for hierarchical storage systems. In Proceedings of the 2020 IEEE 6th International Conference on Computer and Communications (ICCC), Chengdu, China, 11–14 December 2020; pp. 2023–2027.

35. Cao, T. Popularity-Aware Storage Systems for Big Data Applications. Ph.D. Thesis, Auburn University, Auburn, AL, USA, 2022.

36. Dessokey, M.; Saif, S.M.; Eldeeb, H.; Salem, S.; Saad, E. Importance of Memory Management Layer in Big Data Architecture. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 1–8.

37. Kim, T.; Choi, S.; No, J.; Park, S.S. HyperCache: A hypervisor-level virtualized I/O cache on KVM/QEMU. In Proceedings of the 2018 Tenth International Conference on Ubiquitous and Future Networks (ICUFN), Prague, Czech Republic, 3–6 July 2018; pp. 846–850.

38. Einziger, G.; Friedman, R.; Manes, B. TinyLFU: A highly efficient cache admission policy. ACM Trans. Storage (TOS) 2017, 13, 1–31.

39. Blankstein, A.; Sen, S.; Freedman, M.J. Hyperbolic caching: Flexible caching for web applications. In Proceedings of the 2017 USENIX Annual Technical Conference (USENIX ATC 17), Santa Clara, CA, USA, 12–14 July 2017; pp. 499–511.

40. Akbari Bengar, D.; Ebrahimnejad, A.; Motameni, H.; Golsorkhtabaramiri, M. A page replacement algorithm based on a fuzzy approach to improve cache memory performance. Soft Comput. 2020, 24, 955–963.

41. Sabeghi, M.; Yaghmaee, M.H. Using fuzzy logic to improve cache replacement decisions. Int. J. Comput. Sci. Netw. Secur. 2006, 6, 182–188.

42. Aimtongkham, P.; So-In, C.; Sanguanpong, S. A novel web caching scheme using hybrid least frequently used and support vector machine. In Proceedings of the 2016 13th International Joint Conference on Computer Science and Software Engineering (JCSSE), Khon Kaen, Thailand, 13–15 July 2016; pp. 1–6.

43. Wang, Y.; Yang, Y.; Han, C.; Ye, L.; Ke, Y.; Wang, Q. LR-LRU: A PACS-oriented intelligent cache replacement policy. IEEE Access 2019, 7, 58073–58084.

44. Ma, T.; Qu, J.; Shen, W.; Tian, Y.; Al-Dhelaan, A.; Al-Rodhaan, M. Weighted greedy dual size frequency based caching replacement algorithm. IEEE Access 2018, 6, 7214–7223.

45. Chao, W. Web cache intelligent replacement strategy combined with GDSF and SVM network re-accessed probability prediction. J. Ambient Intell. Humaniz. Comput. 2020, 11, 581–587.

46. Ganfure, G.O.; Wu, C.F.; Chang, Y.H.; Shih, W.K. DeepPrefetcher: A deep learning framework for data prefetching in flash storage devices. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2020, 39, 3311–3322.

47. Cherubini, G.; Kim, Y.; Lantz, M.; Venkatesan, V. Data prefetching for large tiered storage systems. In Proceedings of the 2017 IEEE International Conference on Data Mining (ICDM), New Orleans, LA, USA, 18–21 November 2017; pp. 823–828.

48. Li, H.; Ghodsi, A.; Zaharia, M.; Shenker, S.; Stoica, I. Tachyon: Reliable, memory speed storage for cluster computing frameworks. In Proceedings of the ACM Symposium on Cloud Computing, Seattle, WA, USA, 3–5 November 2014; pp. 1–15.

49. Paz, H.R.; Abdala, N.C. Applying Data Mining Techniques to Determine Frequent Patterns in Student Dropout: A Case Study. In Proceedings of the 2022 IEEE World Engineering Education Conference (EDUNINE), Santos, Brazil, 13–16 March 2022; pp. 1–4.

50. Corsair. Corsair Vengeance LPX DDR4 3000 C15 2x16GB CMK32GX4M2B3000C15. 2019. Available online: https://ram.userbenchmark.com/Compare/Corsair~Vengeance-LPX-DDR4-3000-C15-2x16GB-vs-Group-/m92054vs10 (accessed on 11 November 2022).

51. Intel. List of Intel SSDs. 2019. Available online: https://en.wikipedia.org/w/index.php?title=List_of_Intel_SSDs&oldid=898338259 (accessed on 11 November 2022).

52. Seagate. Storage Reviews. 2015. Available online: https://www.storagereview.com/seagate_enterprise_performance_10k_hdd_review (accessed on 11 November 2022).

53. Cisco. Cisco Nexus 5020 Switch Performance in Market-Data and Back-Office Data Delivery Environments. 2019. Available online: https://www.cisco.com/c/en/us/products/collateral/switches/nexus-5000-series-switches/white_paper_c11-492751.html (accessed on 11 November 2022).

54. Tang, W.; Fu, Y.; Cherkasova, L.; Vahdat, A. MediSyn: A synthetic streaming media service workload generator. In Proceedings of the 13th International Workshop on Network and Operating Systems Support for Digital Audio and Video, Monterey, CA, USA, 1–3 June 2003; pp. 12–21.

55. Nagaraj, S. Zipf's Law and Its Role in Web Caching. Web Caching Appl. 2004, 165–167.

56. Intel. Resource and Design Center for Development with Intel. 2019. Available online: https://www.intel.com/content/www/us/en/design/resource.design-center.html (accessed on 11 November 2022).

57. Shvachko, K.; Kuang, H.; Radia, S.; Chansler, R. The Hadoop Distributed File System. In Proceedings of the 2010 IEEE 26th Symposium on Mass Storage Systems and Technologies (MSST), Incline Village, NV, USA, 3–7 May 2010; pp. 1–10.

58. Nalajala, A.; Ragunathan, T.; Rajendra, S.H.T.; Nikhith, N.V.S.; Gopisetty, R. Improving Performance of Distributed File System through Frequent Block Access Pattern-Based Prefetching Algorithm. In Proceedings of the 2019 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kanpur, India, 6–8 July 2019; pp. 1–7.

| Reference | Approach | Limitations |
|---|---|---|
| J. Zhang et al. [6] | HR-Meta | Client-side prefetching was not considered. |
| H. Chen et al. [7] | RNN-based prefetching | Computational overhead is high. |
| G.O. Ganfure et al. [46] | DeepPrefetcher | Overhead of training neural network models. |
| G. Cherubini et al. [47] | Prefetching based on machine learning models | Computational and storage overheads are high. |
| Y. Chen et al. [11] | Data correlation-based prefetching | Files that are not popular are also prefetched. |
| M.M. Al Assaf et al. [12] | IPODS | Overhead on clients to generate hints. |
| A. Kougkas et al. [13] | Hermes | Overhead on servers. |
| J. Liao et al. [8,9,10] | Initiative data prefetching | The low-level file system serves the I/O requests. |
| R. Gopisetty et al. [14,15] | Support-based prefetching | Multi-level memories were not considered. |
| S. Jiang et al. [16] | DiskSeen | Prefetching errors are high if file requests are interleaved. |
| Z. Li et al. [17] | C-Miner | Prefetching may be inaccurate if the file access sequence pattern changes frequently. |
| H. Herodotou [25], T. Yoshimura et al. [26] | Centralized caching approach | Limited to a single computer system. |
| H. Li et al. [48] | Shared cache approach | Data are cached irrespective of popularity. |
| S.K. Yoon et al. [20,21], S. Huang et al. [19], N. Niu et al. [22] | Flash-based caching approach | Not effective for big data applications. |
| D. Akbari Bengar et al. [40], M. Sabeghi et al. [41], P. Aimtongkham et al. [42], Y. Wang et al. [43] | Machine learning-based replacement policies | Computational overhead is high. |
| W. Ali et al. [4] | LRU, LFU, SIZE | These policies do not consider frequency and recency in a combined manner. |

| Proposed vs. existing algorithms | HDFS | DCache | SBMS | SBFBAP |
|---|---|---|---|---|
| HRFP | 53% | 41% | 34% | 22% |
| HRFP_LRU | 44% | 36% | 23% | 15% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nalajala, A.; Ragunathan, T.; Naha, R.; Battula, S.K. HRFP: Highly Relevant Frequent Patterns-Based Prefetching and Caching Algorithms for Distributed File Systems. Electronics 2023, 12, 1183. https://doi.org/10.3390/electronics12051183
