Hierarchical Motion Excitation Network for Few-Shot Video Recognition

Abstract

1. Introduction

2. Related Work

2.1. Two-Dimensional CNN-Based Video Classification

2.2. Three-Dimensional CNN-Based Video Classification

2.3. Few-Shot Video Classification

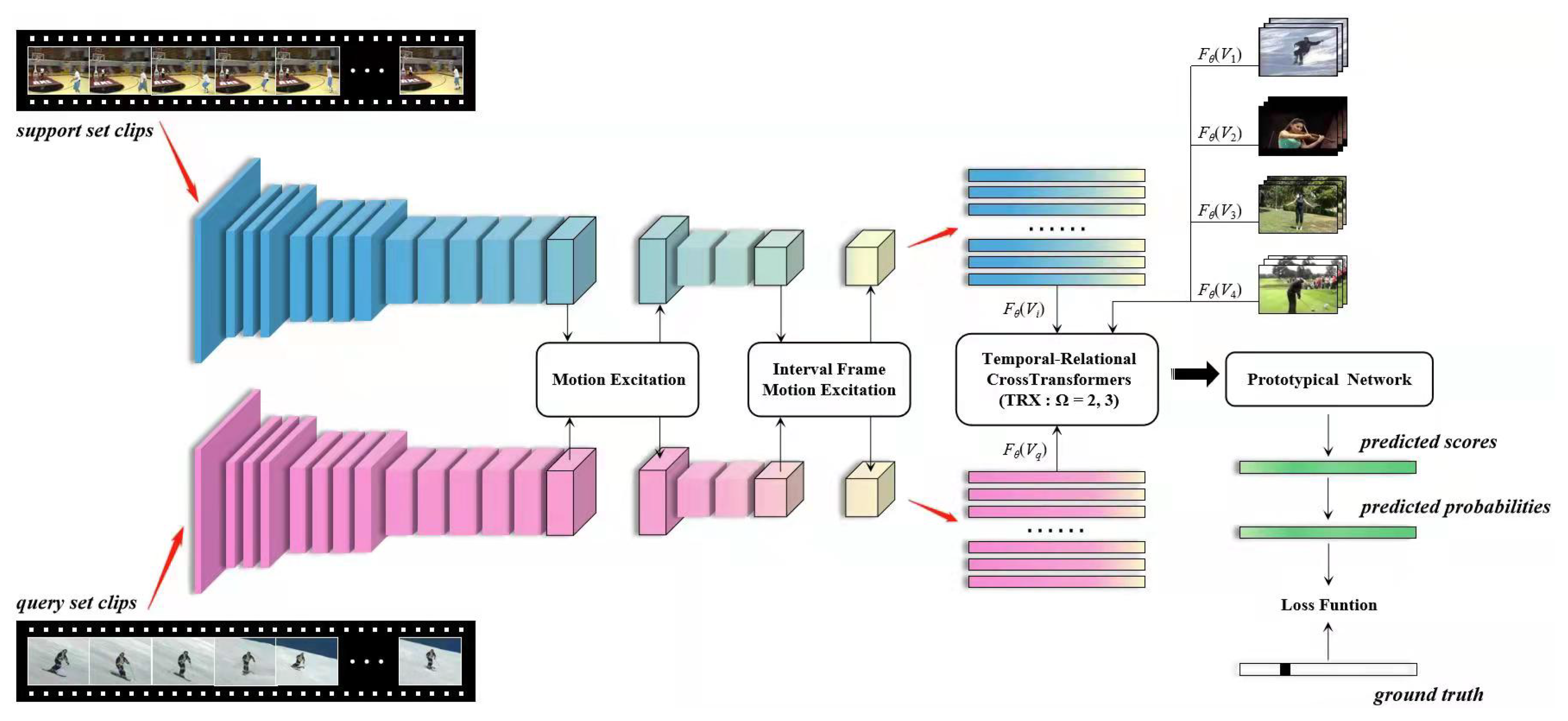

3. Method

3.1. Problem Formulation

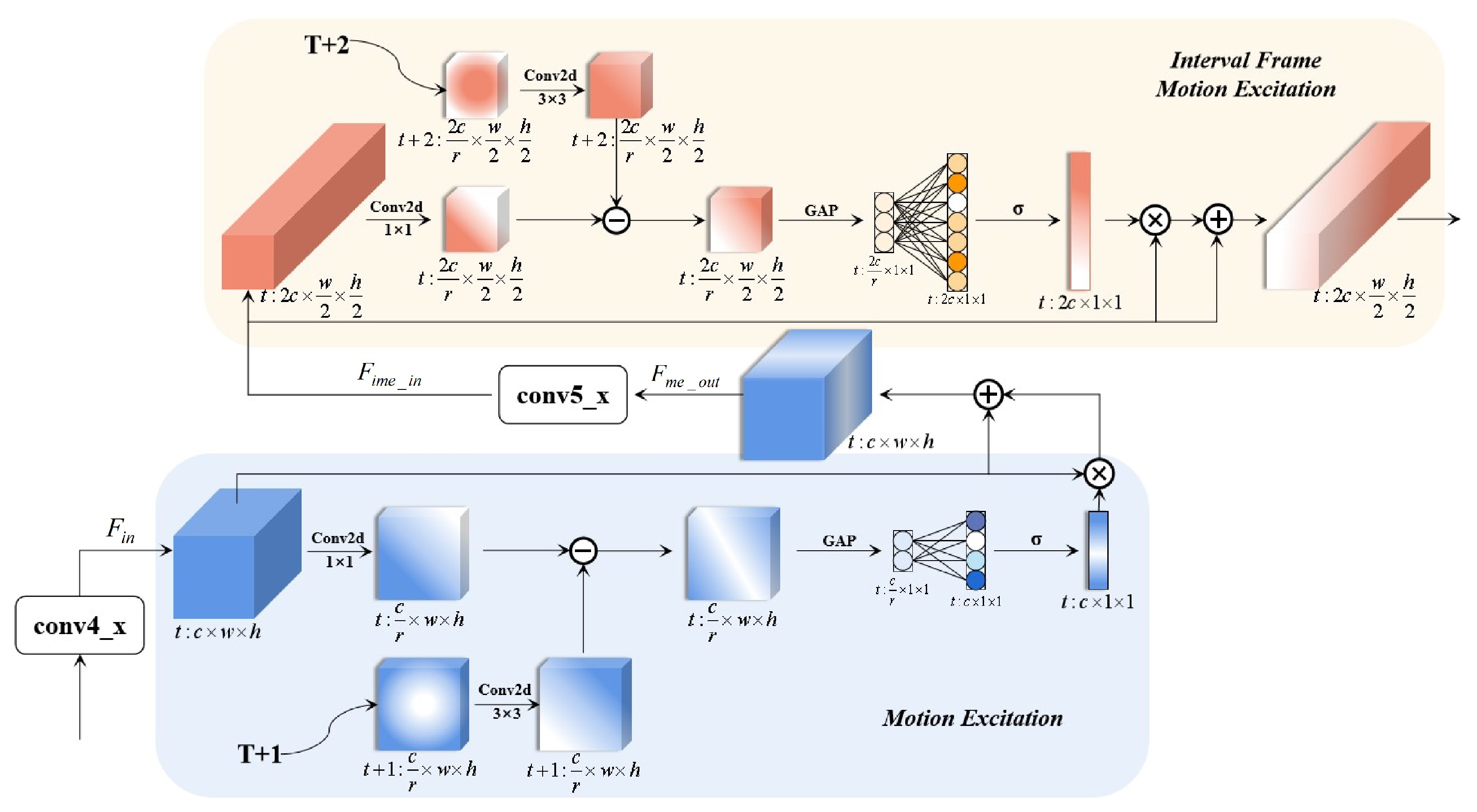

3.2. Hierarchical Motion Excitation Module

3.2.1. Motion Excitation

3.2.2. Interval Frame Motion Excitation

3.2.3. Module Aggregation for Accumulated Feature-Level Motion Differences among Multi-Frames

3.3. Few-Shot Classifier

4. Experiments

4.1. Datasets

4.1.1. UCF101

4.1.2. HMDB51

4.2. Implementation Details

4.2.1. Pre-Processing

4.2.2. Training Details

4.2.3. Evaluation Details

4.3. Experimental Results

4.4. Ablation Study

4.4.1. Effectiveness of Each Component of HME

4.4.2. HME-Net with Different r Values

4.4.3. Five-Way K-Shot Results

4.5. Visualization

4.5.1. Visualization of Feature-Level Motion Information

4.5.2. Visualization for Few-Shot Classification

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Fu, Y.; Zhang, L.; Wang, J.; Fu, Y.; Jiang, Y.G. Depth Guided Adaptive Meta-Fusion Network for Few-shot Video Recognition. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 1142–1151.

2. Koch, G.; Zemel, R.; Salakhutdinov, R. Siamese neural networks for one-shot image recognition. In Proceedings of the ICML Deep Learning Workshop, Lille, France, 6–11 July 2015; Volume 2.

3. Vinyals, O.; Blundell, C.; Lillicrap, T.; Kavukcuoglu, K.; Wierstra, D. Matching networks for one shot learning. Adv. Neural Inf. Process. Syst. 2016, 29, 3630–3638.

4. Snell, J.; Swersky, K.; Zemel, R.S. Prototypical networks for few-shot learning. arXiv 2017, arXiv:1703.05175.

5. Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.; Hospedales, T.M. Learning to compare: Relation network for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1199–1208.

6. Chen, Z.; Fu, Y.; Wang, Y.X.; Ma, L.; Liu, W.; Hebert, M. Image deformation meta-networks for one-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8680–8689.

7. Wang, Y.; Xu, C.; Liu, C.; Zhang, L.; Fu, Y. Instance Credibility Inference for Few-Shot Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

8. Wang, Y.; Zhang, L.; Yao, Y.; Fu, Y. How to trust unlabeled data? Instance Credibility Inference for Few-Shot Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6240–6253.

9. Cao, K.; Ji, J.; Cao, Z.; Chang, C.Y.; Niebles, J.C. Few-shot video classification via temporal alignment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10618–10627.

10. Perrett, T.; Masullo, A.; Burghardt, T.; Mirmehdi, M.; Damen, D. Temporal-Relational CrossTransformers for Few-Shot Action Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 475–484.

11. Li, Y.; Ji, B.; Shi, X.; Zhang, J.; Kang, B.; Wang, L. Tea: Temporal excitation and aggregation for action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 909–918.

12. Yue-Hei Ng, J.; Hausknecht, M.; Vijayanarasimhan, S.; Vinyals, O.; Monga, R.; Toderici, G. Beyond short snippets: Deep networks for video classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4694–4702.

13. Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. arXiv 2014, arXiv:1406.2199.

14. Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y.; Lin, D.; Tang, X.; Van Gool, L. Temporal segment networks: Towards good practices for deep action recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 20–36.

15. Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y.; Lin, D.; Tang, X.; Van Gool, L. Temporal segment networks for action recognition in videos. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 2740–2755.

16. Feichtenhofer, C.; Pinz, A.; Zisserman, A. Convolutional two-stream network fusion for video action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1933–1941.

17. Li, D.; Yao, T.; Duan, L.Y.; Mei, T.; Rui, Y. Unified spatio-temporal attention networks for action recognition in videos. IEEE Trans. Multimed. 2018, 21, 416–428.

18. Diba, A.; Sharma, V.; Van Gool, L. Deep temporal linear encoding networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2329–2338.

19. Qiu, Z.; Yao, T.; Mei, T. Deep quantization: Encoding convolutional activations with deep generative model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6759–6768.

20. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

21. Wang, Z.; She, Q.; Smolic, A. ACTION-Net: Multipath Excitation for Action Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13214–13223.

22. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

23. Qiu, Z.; Yao, T.; Mei, T. Learning spatio-temporal representation with pseudo-3d residual networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5533–5541.

24. Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308.

25. Tran, D.; Wang, H.; Torresani, L.; Ray, J.; LeCun, Y.; Paluri, M. A closer look at spatiotemporal convolutions for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6450–6459.

26. Hara, K.; Kataoka, H.; Satoh, Y. Can spatiotemporal 3d cnns retrace the history of 2d cnns and imagenet? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6546–6555.

27. Zhu, L.; Yang, Y. Compound memory networks for few-shot video classification. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 751–766.

28. Zhu, L.; Yang, Y. Label independent memory for semi-supervised few-shot video classification. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 273–285.

29. Kumar Dwivedi, S.; Gupta, V.; Mitra, R.; Ahmed, S.; Jain, A. Protogan: Towards few shot learning for action recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27 October–2 November 2019.

30. Bishay, M.; Zoumpourlis, G.; Patras, I. Tarn: Temporal attentive relation network for few-shot and zero-shot action recognition. arXiv 2019, arXiv:1907.09021.

31. Zhang, H.; Zhang, L.; Qi, X.; Li, H.; Torr, P.H.; Koniusz, P. Few-shot action recognition with permutation-invariant attention. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Proceedings, Part V 16, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 525–542.

32. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

33. Sung, F.; Zhang, L.; Xiang, T.; Hospedales, T.; Yang, Y. Learning to learn: Meta-critic networks for sample efficient learning. arXiv 2017, arXiv:1706.09529.

34. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

35. Doersch, C.; Gupta, A.; Zisserman, A. Crosstransformers: Spatially-aware few-shot transfer. arXiv 2020, arXiv:2007.11498.

36. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

37. Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv 2012, arXiv:1212.0402.

38. Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; Serre, T. HMDB: A large video database for human motion recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 2556–2563.

39. Mishra, A.; Verma, V.K.; Reddy, M.S.K.; Arulkumar, S.; Rai, P.; Mittal, A. A generative approach to zero-shot and few-shot action recognition. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 372–380.

40. Fu, Y.; Wang, C.; Fu, Y.; Wang, Y.X.; Bai, C.; Xue, X.; Jiang, Y.G. Embodied one-shot video recognition: Learning from actions of a virtual embodied agent. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 411–419.

41. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

42. Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

43. Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Model | UCF101 3-Shot | UCF101 5-Shot | HMDB51 3-Shot | HMDB51 5-Shot |
|---|---|---|---|---|
| GenApp [39] | 73.4 | 78.6 | 47.5 | 52.5 |
| ProtoGAN [29] | 75.3 | 80.2 | 49.1 | 54.0 |
| ARN [31] | - | 84.8 | - | 59.1 |
| RGB Basenet [40] | 88.7 | 92.1 | 62.4 | 67.8 |
| RGB Basenet++ [1] | 90.0 | 92.9 | 63.0 | 68.2 |
| AmeFu-Net [1] | 93.1 | 95.5 | 71.5 | 75.5 |
| TRX [10] | - | 96.1 | - | 75.6 |
| HME-Net | 94.8 | 96.8 | 72.2 | 77.1 |

| Setting | UCF101 3-Shot | UCF101 5-Shot | HMDB51 3-Shot | HMDB51 5-Shot |
|---|---|---|---|---|
| BaseNet | 60.1 | 64.4 | 42.1 | 45.8 |
| BaseNet + ME | 64.2 | 67.4 | 43.3 | 47.7 |
| BaseNet + HME | 65.8 | 68.6 | 44.9 | 49.7 |
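The per-setting gains implied by the ablation table can be tallied with a few lines of Python. This is only an illustrative sketch: the scores are copied from the table, while the dictionary layout and the `gains` helper are hypothetical names introduced here, not part of the original work.

```python
# Scores copied from the ablation table; the data layout and the helper
# name are illustrative assumptions, not taken from the paper.
SETTINGS = ("UCF101 3-shot", "UCF101 5-shot", "HMDB51 3-shot", "HMDB51 5-shot")

ABLATION = {
    "BaseNet": (60.1, 64.4, 42.1, 45.8),
    "BaseNet + ME": (64.2, 67.4, 43.3, 47.7),
    "BaseNet + HME": (65.8, 68.6, 44.9, 49.7),
}


def gains(baseline: str, variant: str) -> dict:
    """Return variant minus baseline for every setting, rounded to 0.1."""
    base = ABLATION[baseline]
    var = ABLATION[variant]
    return {name: round(v - b, 1) for name, b, v in zip(SETTINGS, base, var)}


if __name__ == "__main__":
    # Gain of each variant over the plain BaseNet row.
    for variant in ("BaseNet + ME", "BaseNet + HME"):
        print(variant, gains("BaseNet", variant))
    # Additional gain of HME over ME alone.
    print("HME over ME", gains("BaseNet + ME", "BaseNet + HME"))
```

On these numbers, adding ME improves on BaseNet in all four settings, and HME adds between 1.2 and 2.0 points on top of ME.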

| Cardinalities | 3-Shot |
|---|---|
| r = 2 | 92.1 |
| r = 4 | 92.5 |
| r = 8 | 93.0 |
| r = 16 | 93.8 |
| r = 32 | 93.4 |
| r = 64 | 93.4 |

| Dataset | Model | K = 1 | K = 2 | K = 3 | K = 4 | K = 5 |
|---|---|---|---|---|---|---|
| UCF101 | HME-Net | 83.3 | 91.4 | 94.8 | 96.0 | 96.8 |
| HMDB51 | HME-Net | 53.1 | 65.4 | 72.2 | 75.0 | 77.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, B.; Wang, X.; Ren, S.; Wang, W.; Shi, Y. Hierarchical Motion Excitation Network for Few-Shot Video Recognition. Electronics 2023, 12, 1090. https://doi.org/10.3390/electronics12051090
