The Semantic Segmentation of Standing Tree Images Based on the Yolo V7 Deep Learning Algorithm

Abstract

1. Introduction

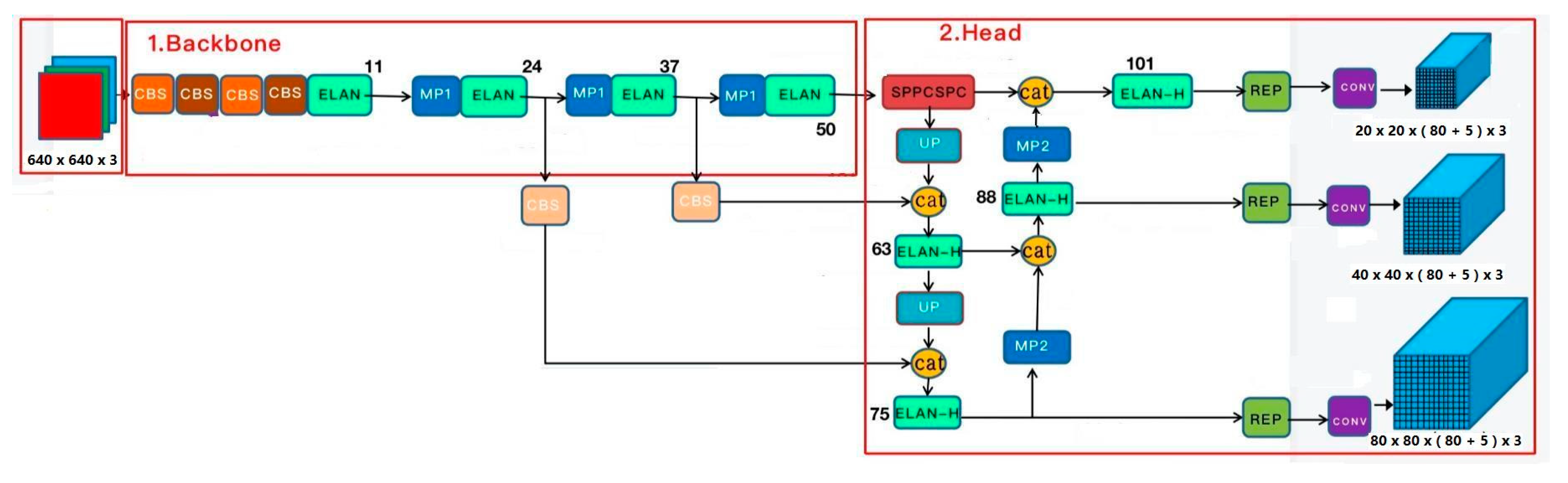

2. Yolo V7 Model

2.1. Introduction of the Yolo V7 Model

2.2. The Main Pros and Cons of Yolo V7

2.3. Convolutional Block Attention Module (CBAM)

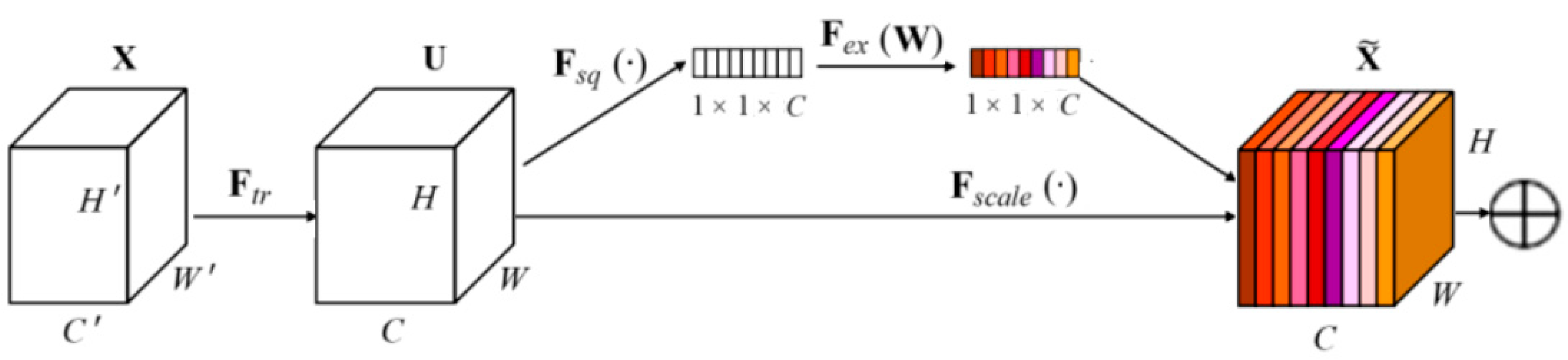

2.4. Importing the Attention Mechanism SENet

2.5. Importing the Weighted Loss Function

2.6. Detectron2

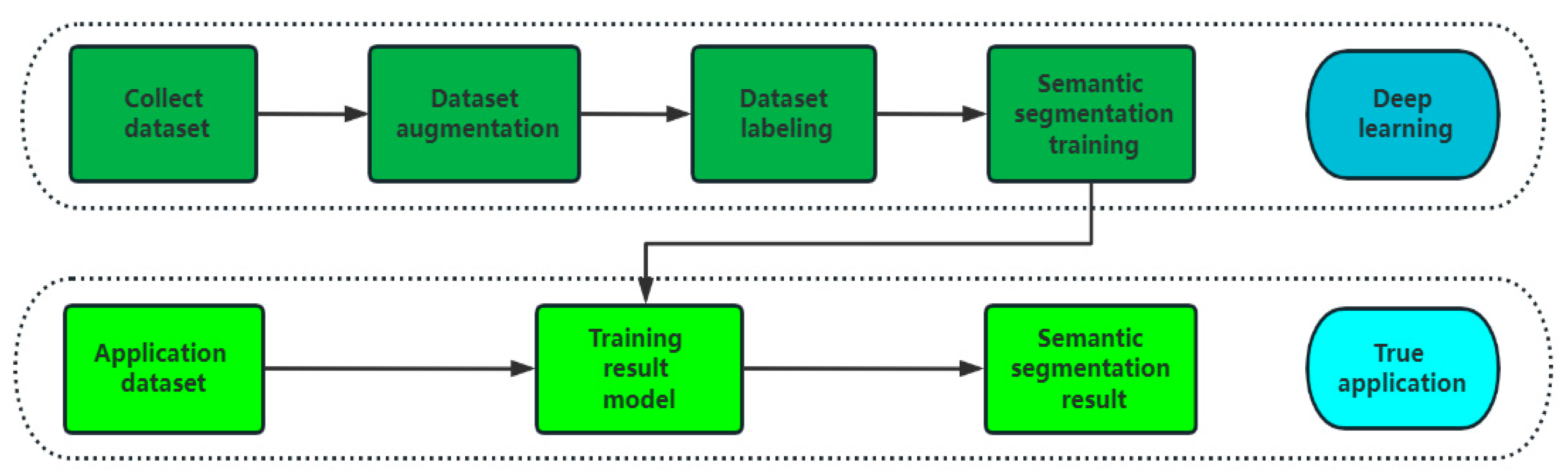

2.7. Overall Process

3. Experiments

3.1. Experimental Software and Hardware Configuration

3.2. Production of Dataset

3.2.1. Image Data Acquisition

3.2.2. Image Data Preprocessing

3.2.3. Data Enhancement Processing

3.2.4. Data Annotation Processing

3.3. Experimental Design

3.3.1. Classification Settings of Datasets

3.3.2. Experimental Effect Evaluation Index

3.3.3. Experimental Scheme Design

4. Analysis and Discussion

4.1. Visual Analysis

4.1.1. The Effect of Tree Segmentation with a Simple Background

4.1.2. The Effect of Tree Segmentation with a Complex Background

4.2. Comparison of the Segmentation Indexes of Different Models

4.2.1. Comparison of the Different Models with a Simple Background

4.2.2. Comparison of the Different Models with a Complex Background

4.3. Ablation Experiments

4.4. Computational Complexity

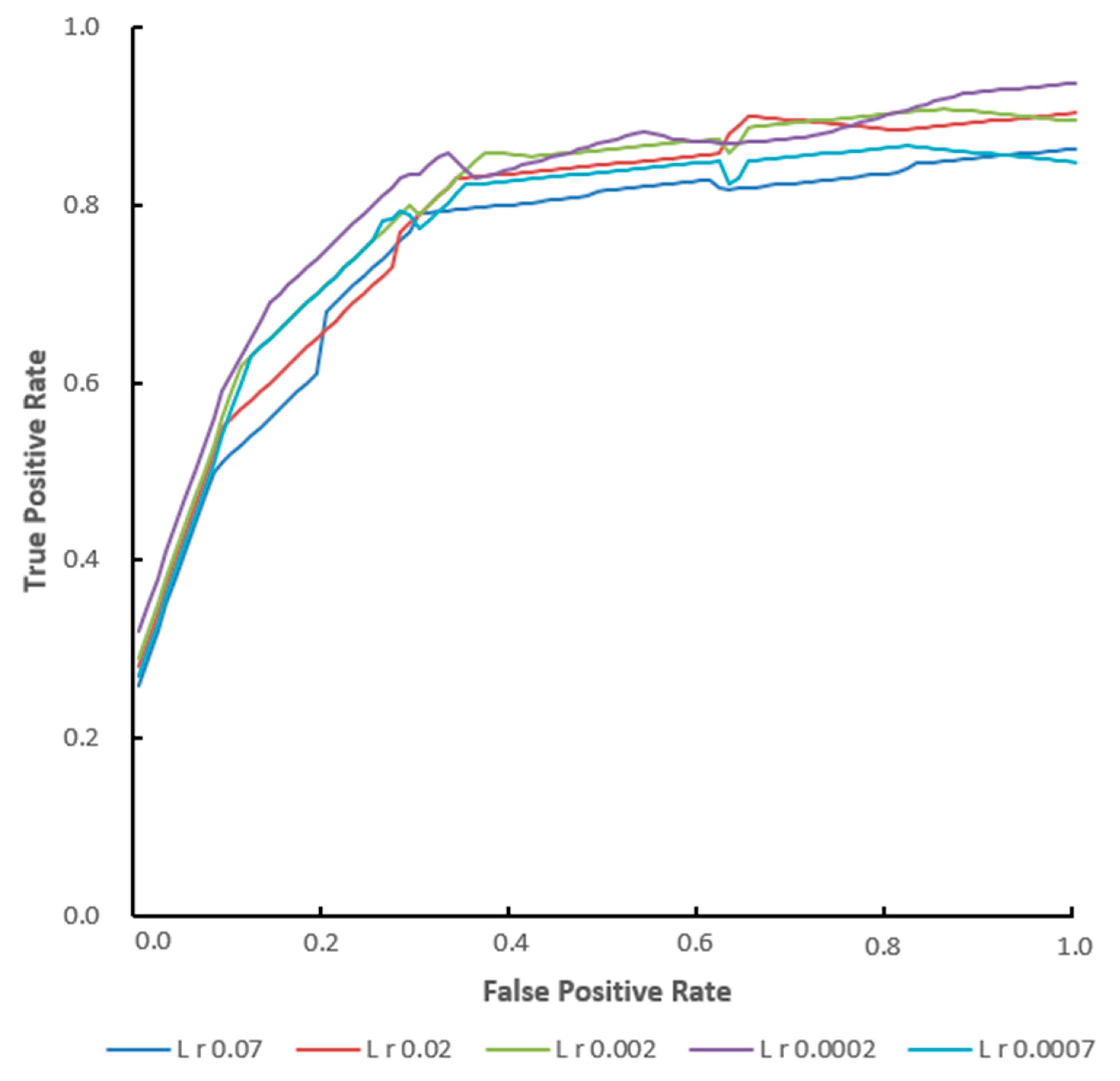

4.5. Robustness Test

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Item | Configuration |
|---|---|
| CPU | E5 2678v3 × 2 |
| GPU | GTX 3060Ti 8G |
| RAM | 16 GB × 2 |
| Disk | HITACHI A640 3T |
| OS | Windows 11 Pro |
| Anaconda | Anaconda3-2022.10-Windows 64 |
| PyTorch | PyTorch 1.8.0 |
| Python | Python 3.8 |
| CUDA | CUDA 11.1 |
| PyCharm | PyCharm Community 2021.2.2 |
| Labelme | Labelme 1.5.1 |

| Parameter | Value |
|---|---|
| Epoch | 300 |
| Batch size | 8 |
| Input shape | 1280 × 1280 |

| Model | Category | MPA (%) | MIoU (%) |
|---|---|---|---|
| FCN | Lin’an sample | 85.36 | 75.32 |
| FCN | Lishui sample | 86.72 | 76.27 |
| SegNet | Lin’an sample | 86.29 | 76.39 |
| SegNet | Lishui sample | 85.96 | 74.18 |
| U-Net | Lin’an sample | 87.82 | 77.60 |
| U-Net | Lishui sample | 86.33 | 77.13 |
| PSPNet | Lin’an sample | 83.56 | 72.69 |
| PSPNet | Lishui sample | 84.73 | 73.98 |
| DeepLabV3+ | Lin’an sample | 91.57 | 84.15 |
| DeepLabV3+ | Lishui sample | 91.85 | 83.62 |
| Yolo v7 | Lin’an sample | 95.87 | 92.12 |
| Yolo v7 | Lishui sample | 94.69 | 91.17 |

| Model | Category | MPA (%) | MIoU (%) |
|---|---|---|---|
| FCN | Lin’an complex | 83.21 | 72.67 |
| FCN | Lishui complex | 84.16 | 74.38 |
| SegNet | Lin’an complex | 84.65 | 75.23 |
| SegNet | Lishui complex | 85.63 | 75.18 |
| U-Net | Lin’an complex | 84.25 | 73.64 |
| U-Net | Lishui complex | 83.18 | 71.59 |
| PSPNet | Lin’an complex | 82.62 | 72.66 |
| PSPNet | Lishui complex | 83.67 | 72.97 |
| DeepLabV3+ | Lin’an complex | 90.54 | 83.62 |
| DeepLabV3+ | Lishui complex | 91.22 | 84.58 |
| Yolo v7 | Lin’an complex | 94.27 | 91.28 |
| Yolo v7 | Lishui complex | 93.46 | 90.23 |
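The MPA and MIoU columns in the tables above are the standard mean pixel accuracy and mean intersection-over-union scores. The authors' exact formulation is not shown in this excerpt, so the following is only a minimal sketch of how the two metrics are conventionally derived from a per-class confusion matrix. The function names and the two-class toy masks (0 = background, 1 = tree) are illustrative assumptions, not part of the paper.

```python
from typing import List, Sequence


def confusion_matrix(truth: Sequence[int], pred: Sequence[int],
                     num_classes: int) -> List[List[int]]:
    """Rows index the ground-truth class, columns the predicted class."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(truth, pred):
        cm[t][p] += 1
    return cm


def mean_pixel_accuracy(cm: List[List[int]]) -> float:
    """Average over classes of correct pixels / ground-truth pixels."""
    scores = []
    for i, row in enumerate(cm):
        total = sum(row)
        if total:  # skip classes absent from the ground truth
            scores.append(cm[i][i] / total)
    return sum(scores) / len(scores) if scores else 0.0


def mean_iou(cm: List[List[int]]) -> float:
    """Average over classes of TP / (TP + FP + FN)."""
    n = len(cm)
    scores = []
    for i in range(n):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp
        fp = sum(cm[r][i] for r in range(n)) - tp
        union = tp + fn + fp
        if union:  # skip classes that never occur
            scores.append(tp / union)
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical flattened 8-pixel masks: 0 = background, 1 = tree.
truth = [0, 0, 0, 0, 1, 1, 1, 1]
pred = [0, 0, 0, 1, 1, 1, 1, 0]
cm = confusion_matrix(truth, pred, num_classes=2)
mpa = mean_pixel_accuracy(cm)
miou = mean_iou(cm)
print(f"MPA = {mpa:.2%}, MIoU = {miou:.2%}")
```

Because IoU also penalizes false positives, MIoU is never higher than MPA for the same prediction, which matches the pattern in the tables.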

| Model | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| FCN | 15.86 | 15.21 | 15.67 | 16.75 | 15.84 |
| SegNet | 15.92 | 15.34 | 16.21 | 16.32 | 16.38 |
| U-Net | 17.26 | 18.02 | 18.33 | 18.66 | 17.94 |
| PSPNet | 15.93 | 16.35 | 16.37 | 17.53 | 17.14 |
| DeepLabV3+ | 19.34 | 18.63 | 19.57 | 20.04 | 19.32 |
| Yolo v7 | 20.21 | 19.83 | 21.04 | 21.36 | 21.16 |

| Model | MPA (%) | Time (s) | Size (MB) |
|---|---|---|---|
| Yolo v7 + O. | 87.34 | 0.13 | 74.9 |
| Yolo v7 + L. | 90.17 | 0.12 | 73.6 |
| Yolo v7 + S. | 91.51 | 0.13 | 73.8 |
| Yolo v7 | 93.75 | 0.12 | 73.3 |

| Model | Training Time (h) | Inference Time (s) |
|---|---|---|
| FCN | 24 | 0.52 |
| SegNet | 22 | 0.59 |
| U-Net | 19 | 0.34 |
| PSPNet | 26 | 0.51 |
| DeepLabV3+ | 23 | 0.47 |
| Yolo v7 | 18 | 0.12 |
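The excerpt does not describe how the per-image inference times in the table above were measured. As a hedged illustration only, the sketch below shows one common way to obtain such a figure: average wall-clock time over a set of images after a few warm-up calls. It also expresses the reported values as speed-up ratios. `mean_inference_time`, `speedup`, and the `predict` callable are hypothetical names introduced here, not the authors' code.

```python
import time
from statistics import mean
from typing import Any, Callable, Iterable


def mean_inference_time(predict: Callable[[Any], Any],
                        images: Iterable[Any],
                        warmup: int = 2) -> float:
    """Average wall-clock seconds per call of `predict` over `images`."""
    images = list(images)
    # Warm-up calls are not timed (caches, lazy initialisation, etc.).
    for img in images[:warmup]:
        predict(img)
    durations = []
    for img in images:
        start = time.perf_counter()
        predict(img)
        # Note: for a GPU model, device work must be synchronised before
        # reading the clock (e.g. torch.cuda.synchronize() in PyTorch).
        durations.append(time.perf_counter() - start)
    return mean(durations)


def speedup(baseline_s: float, candidate_s: float) -> float:
    """How many times faster the candidate is than the baseline."""
    return baseline_s / candidate_s


# Ratios computed from the inference times reported in the table.
reported = {"FCN": 0.52, "SegNet": 0.59, "U-Net": 0.34,
            "PSPNet": 0.51, "DeepLabV3+": 0.47}
ratios = {name: speedup(t, 0.12) for name, t in reported.items()}
for name, ratio in ratios.items():
    print(f"Yolo v7 vs {name}: {ratio:.2f}x faster")

# Stand-in "model" so the timing helper can be exercised without a GPU.
dummy_time = mean_inference_time(lambda img: sum(img), [[1, 2, 3]] * 5)
```

On the reported numbers, Yolo v7 is roughly 2.8 times faster than the next-fastest model (U-Net) and close to 5 times faster than the slowest (SegNet).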

| Input Shape | MIoU (%) | Speed (s) |
|---|---|---|
| 1280 × 1280 | 91.17 | 0.11 |
| 640 × 640 | 91.08 | 0.10 |
| 320 × 320 | 89.87 | 0.08 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cao, L.; Zheng, X.; Fang, L. The Semantic Segmentation of Standing Tree Images Based on the Yolo V7 Deep Learning Algorithm. Electronics 2023, 12, 929. https://doi.org/10.3390/electronics12040929
