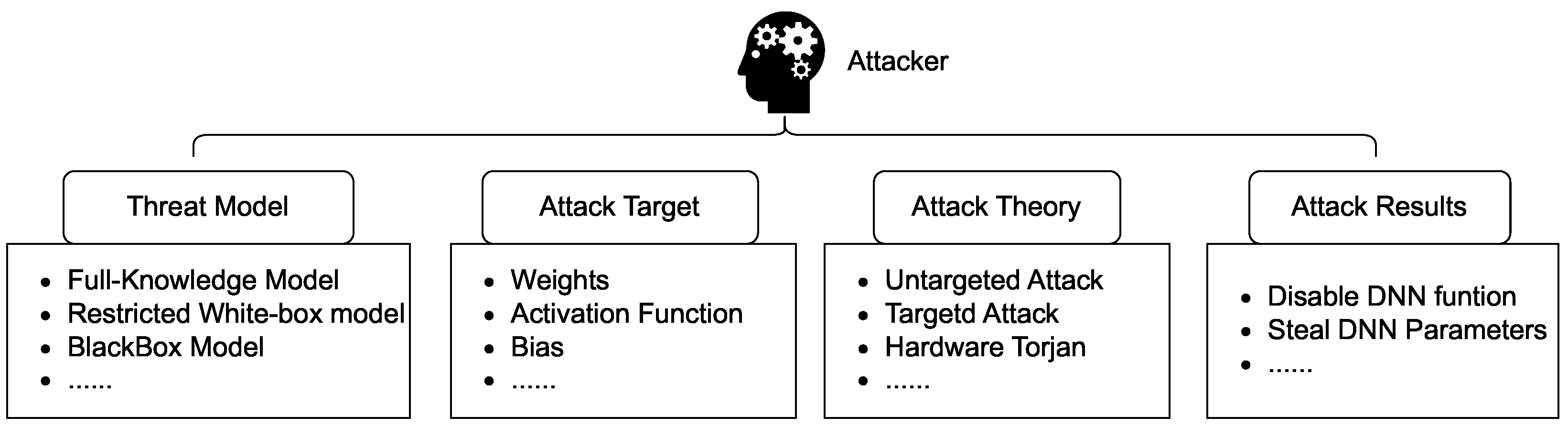

Current work on bit-flip attacks can, in principle, be divided into three categories.

3.3.1. Untargeted Attack

Figure 10 shows an example of an untargeted attack. After this type of attack changes the parameters of the DNN through bit flipping, the DNN’s predictions for all inputs become biased and, in the ideal case, degrade to the level of random guessing. An untargeted attack is a white-box attack and thus requires knowledge of the internal details of the DNN. The representative work of this type is BFA [2], which addresses the following problem when changing weights by flipping bits: if the weights of the DNN are stored in memory as $N$-bit fixed-point numbers, denoted as $B$, and the flipped weights are denoted as $\hat{B}$, then the attack goal can be expressed as maximizing the loss, as in Equation (3), where $\ell$ computes the gap between the output of the DNN and the true result, and $f(x, B)$ denotes the output of the DNN with $x$ as input and weights $B$. The idea of BFA is to flip bits along the gradient direction of the $\ell$ function, so that the output of the DNN with flipped weights has a larger gap from the true result. Some untargeted attack methods instead flip the relevant bits of the activation function or bias, which affects all inputs and changes all outputs to a random or preset class.
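The untargeted objective referred to as Equation (3) can be sketched from the definitions above. The form below is an assumption based on those definitions, not the original typesetting: the symbol $t$ for the true result and $\hat{B}$ for the flipped weights are notation introduced here.

```latex
\max_{\hat{B}} \; \ell\big(f(x, \hat{B}),\, t\big)
```

Here $f(x, \hat{B})$ is the DNN output under the flipped weights, so maximizing $\ell$ pushes that output away from the true result $t$. Since the attack flips only a few bits, the maximization can be read as being subject to a bound on how many bits differ between $B$ and $\hat{B}$.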

In this type of work, complexity arises in two processes. One is determining the vulnerable elements (a step that some attacks, such as random bit flips, skip), and the other is carrying out the specific attack. The complexity of determining vulnerable elements is closely tied to the attack method. For example, RowHammer attacks generally use PBS to locate vulnerable bits, and limiting the number of layers searched affects the complexity. In comparison, VFS, laser injection, and clock glitching attacks search for attack points at the circuit level, with lower complexity. The complexity of the specific attack mainly depends on the number of bits that need to be flipped. Generally speaking, because of limitations such as the refresh time window, the more bits that need to be flipped, the higher the complexity.

Detailed related work:

Table 2 lists the detailed attack aspects for untargeted bit-flip attacks. Breier et al. [9] determine the specific instructions into which errors are injected, and then use diode pulsed lasers to inject errors into the activation functions on an embedded system, including ReLU, softmax, sigmoid, and tanh. Rakin et al. [2] propose BFA, which causes a DNN to lose its capability by flipping only a few bits of the weights. The key to BFA is identifying the vulnerable bits by gradient ranking through the Progressive Bit Search (PBS) method. This is the first work to attack DNNs with fixed-point weights instead of floating-point weights (which are more vulnerable to attacks and may suffer a complete loss of functionality from a single bit flip). Jap et al. [35] briefly explain how to perform a single-bit flip attack on softmax to affect the classification results. Liu et al. [28] add glitches to the clock signal to cause misclassification by the DNN. The effect of the attack disappears after the clock glitch subsides, which makes the attack quite stealthy. This attack can be launched in a black-box setting; in a gray-box setting, where model details, layer lists, and input delays are known, more precise attacks can be performed.
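The contrast drawn above between floating-point and fixed-point weights can be illustrated with a minimal sketch. It assumes IEEE 754 float32 and two's-complement int8 storage; the helper names `flip_float32_bit` and `flip_int8_bit` are hypothetical and not taken from any of the cited works.

```python
import struct


def flip_float32_bit(value: float, bit: int) -> float:
    """Flip one bit (0 = LSB, 31 = sign) of an IEEE 754 float32."""
    (raw,) = struct.unpack("<I", struct.pack("<f", value))
    (flipped,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return flipped


def flip_int8_bit(value: int, bit: int) -> int:
    """Flip one bit (0 = LSB, 7 = sign) of a two's-complement int8."""
    raw = (value & 0xFF) ^ (1 << bit)
    return raw - 256 if raw >= 128 else raw


float_weight = 0.5
int8_weight = 64

# Flipping the top exponent bit turns 0.5 into 2**127 (about 1.7e38).
flipped_float = flip_float32_bit(float_weight, 30)
# Flipping the sign bit of an int8 keeps the value inside [-128, 127].
flipped_int8 = flip_int8_bit(int8_weight, 7)

print(flipped_float, flipped_int8)
```

A single exponent-bit flip changes the floating-point weight by dozens of orders of magnitude, whereas the worst single flip in an 8-bit fixed-point weight is bounded by the representable range, which is why quantized models need a careful search for the most damaging bits.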

Khoshavi et al. [

36] aim at a compressed DNN-BNN (Weights and activations are stored in a compressed form of 1 or 2 bits, and thus are very vulnerable to bit-flip), the impact of single-bit errors (SEU) and multi-bit errors (MBU) on BNN is simulated on the FPGA platform. They conclude that random and uniform bit-flip attacks will cause serious performance degradation to BNN regardless of what kind of parameters it acts on. Yao et al. [

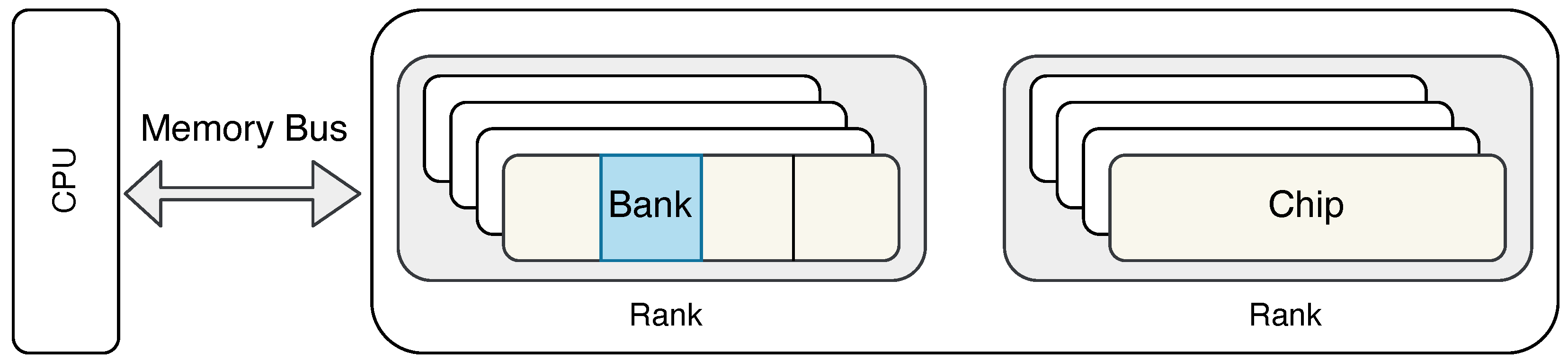

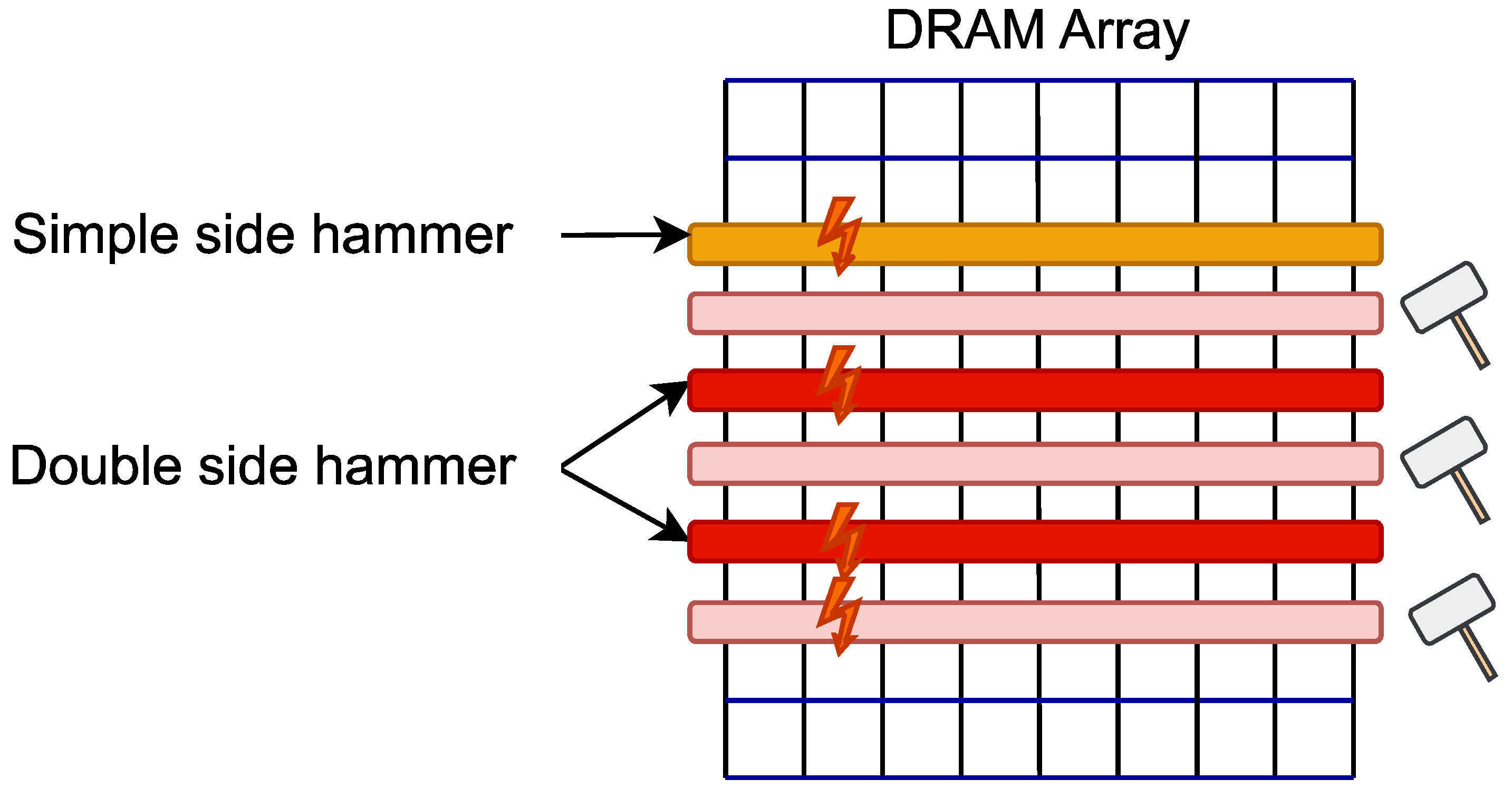

19] proposed DeepHammer, which is a method of using RowHammer to attack the weights of the DNN model, and then affect the ability of the DNN. DeepHammer consists of two offline stages (the attack preparation stage) and one online stage (the attack stage). In the offline stage, the specific details of the memory, such as the addressing scheme, are first reversely analyzed, and by combining gradient sorting and progressive search to determine the most vulnerable bits and corresponding physical address in DRAM. The attack strategy will be generated according to the obtained information, including which bits change from 0 to 1 and which bits change from 1 to 0. In the online phase, the cache of recently released pages is first used to locate the vulnerable pages, then use double-side RowHammer attacks to accurately cause memory bit flips, and adjust the bit values that have been changed according to the bit flipping policy to ensure the effectiveness of the attack.
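The flip-direction part of such an attack strategy can be sketched as follows. This is only an illustration of recording which bits must go from 0 to 1 and which from 1 to 0; the function name `flip_plan` and the 8-bit width are assumptions, not part of DeepHammer itself.

```python
def flip_plan(original: int, target: int, width: int = 8):
    """Return (zero_to_one, one_to_zero) bit positions needed to turn the
    unsigned bit pattern `original` into `target`."""
    diff = original ^ target  # bits that differ between the two patterns
    zero_to_one = []
    one_to_zero = []
    for bit in range(width):
        if (diff >> bit) & 1:
            if (original >> bit) & 1:
                one_to_zero.append(bit)
            else:
                zero_to_one.append(bit)
    return zero_to_one, one_to_zero


# Example: turn the stored byte 0b01000001 into 0b11000000.
up, down = flip_plan(0b01000001, 0b11000000)
print("0 -> 1 at bits", up, "and 1 -> 0 at bits", down)
```

Keeping the two directions separate lets the online stage check each required flip against the direction in which a given memory cell can actually be flipped.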

Dumont et al. [

29] illustrate that laser injection attack is a very effective attack, but it generates a larger perturbation compared to the adversarial sample attack. They design an experiment in which partial laser injection technology flips bits in static memory units (such as SRAM and Flash), successfully bypassing PIN verification and recovering the AES key while keeping the stored data unchanged. The main object of this paper is DNN deployed in embedded devices, and the storage devices involved are SRAM and Flash, but it still has reference significance for DRAM-based DNN. Rakin et al. [

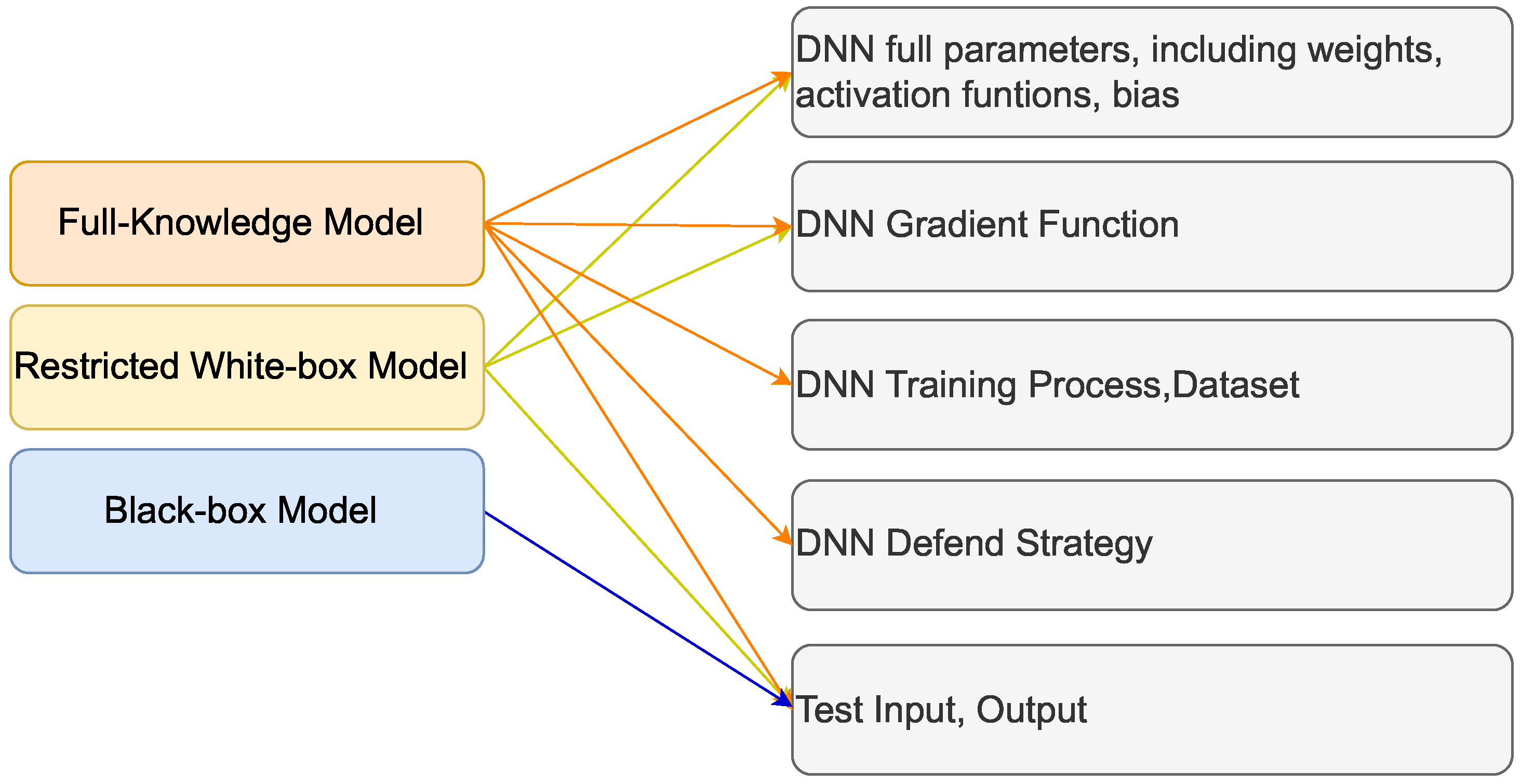

32] explores the vulnerability of DNNs deployed on multi-user shared FPGAs. They propose Deep-Dup, an attack framework that is effective for both white-box and black-box models. Deep-Dup consists of two contents: (1) when its PDS system in FPGA is overloaded, it may cause timing violations, resulting in transient voltage reduction and data transmission delay being longer. They propose AWD attack to launch a PDS overload after determining the transmission weight of the packet. This makes it possible for the target packet to be sampled twice by the receiver and the error can be injected into the latter sample, modifying the weights. (2) They use P-DES to gradually search for vulnerable bits through mutation evolution. As P-DES does not depend on the gradient information of the model, it can be used for black-box attacks. In the white-box model, the attacker first calls the P-DES method to calculate and generate the attack index and then calls the AWD attack to modify the weights. In the black-box model, P-DES makes corresponding changes so that the attacker can still use the timing information to determine where the weight is, and launch AWD attacks with higher frequency until the attack is achieved.

Park et al. [20] propose ZeBRA, which generates data that follows the statistics of a pre-trained model. The generated data helps estimate the DNN loss and weight gradients accurately, so bit-flip attacks can be implemented more efficiently. Fukuda et al. [37] use power waveform matching to time the fault injection and use clock glitching to inject faults into the softmax function of a DNN on an 8-bit microcontroller. During execution, the attacker needs to know the internal operation state and uses the SAD algorithm to match the power waveform and decide when to inject the glitch. The fault injection process has two phases. The first is the analysis phase, in which the desired waveform pattern is selected. The second is the attack phase, in which the trigger generates a glitch when the matching waveform pattern appears, injecting the fault into the target device. By the principle of clock glitching, attacking a multiply-add operation leaves it incomplete. This work targets the softmax function, and since the specific implementation of softmax accumulates values in a loop, the final softmax result can be precisely controlled by using clock glitching. Cai et al. [38] propose NMT-Stroke, an attack framework for neural machine translation (NMT) models, which can make the neural network produce the semantically reasonable translation that the attacker expects. A CNN can still maintain a certain accuracy when quantized, but NMT only works with full-precision weights. Simply flipping the MSB of weights in an NMT model degrades its capability, but the result is also easily recognized by humans. Their experiments show that the impact of a parameter bit flip on the model output depends largely on the change in the weight value and the degree of gradient change, and that parameters with values outside (−2, 2) are better candidates for bit flipping. Based on this, they design a bit search strategy based on value and gradient to determine the smallest number of bits to flip. The strategy produces suitable weight and gradient changes while the generated translations remain semantically plausible.
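The waveform matching step in the clock glitching work above relies on SAD (sum of absolute differences). A minimal sketch of SAD-based pattern matching over a sampled power trace is shown below; the trace values, the reference pattern, and the function names are made up for illustration and do not come from the cited work.

```python
def sad(window, pattern):
    """Sum of absolute differences between two equally long sample lists."""
    return sum(abs(w - p) for w, p in zip(window, pattern))


def best_match(trace, pattern):
    """Return (offset, score) of the window in `trace` with the lowest SAD."""
    n = len(pattern)
    scores = [sad(trace[i:i + n], pattern) for i in range(len(trace) - n + 1)]
    offset = min(range(len(scores)), key=scores.__getitem__)
    return offset, scores[offset]


# Hypothetical power samples and the pattern picked in the analysis phase.
trace = [0, 1, 0, 2, 5, 9, 5, 2, 0, 1]
pattern = [5, 9, 5]

offset, score = best_match(trace, pattern)
print(offset, score)
```

In the attack phase the same comparison would run on live samples, with the glitch fired once the SAD score drops below a chosen threshold rather than after scanning a whole recorded trace.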

Ghavami et al. [39] propose a BFA method that does not rely on existing data: by matching the normalization statistics of each layer in the DNN and the label data, it forms a synthetic dataset on which BFA can then be applied. The key technique is generating synthetic datasets for identifying vulnerable bits from the parameter values of the network itself. This is performed by (1) minimizing the gap in mean and variance relative to the training data, so that the runtime statistics of the hidden neurons resemble those of the training data, and (2) minimizing the gap with the training data labels. Minimizing a loss function that combines these two objectives yields the most similar synthetic dataset. Lee et al. [40] propose SparseBFA, a bit-flip attack on the sparse matrix formats used to store the positions of DNN parameters. In this case, an attack rewires the connections between neurons rather than changing the weights. SparseBFA uses an exhaustive search on smaller layers to find the bits that reduce performance the most; for other layers it uses an approximation algorithm that selects a set of coordinates to test at a time and then judges the importance of weights by pruning them.
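To make the idea of attacking parameter positions concrete, the sketch below stores one row of a sparse weight matrix as values plus column indices and flips a single bit of an index. The layout and numbers are assumptions chosen for illustration; they are not the storage format or search procedure used by SparseBFA.

```python
def sparse_row_dot(values, col_indices, x):
    """Dot product of one sparse weight row with a dense input vector."""
    return sum(v * x[c] for v, c in zip(values, col_indices))


values = [2.0, -1.0]   # non-zero weights of the row (left untouched)
col_indices = [0, 2]   # which input each weight is connected to
x = [1.0, 10.0, 100.0, 1000.0]

before = sparse_row_dot(values, col_indices, x)  # 2*1 - 1*100

attacked = list(col_indices)
attacked[0] ^= 0b01    # flip the LSB of the first index: input 0 -> input 1
after = sparse_row_dot(values, attacked, x)      # 2*10 - 1*100

print(before, after)
```

The weights themselves never change, yet the neuron now reads a different input. In a real format, a flipped index could also point outside the valid range, which an attacker would have to avoid.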

3.3.2. Targeted Attack

An untargeted attack affects all inputs, which is unsuitable in many attack scenarios. For example, in face recognition, the attacker may only want the DNN to misjudge some specific targets. Compared with untargeted attacks, a targeted attack lets the attacker control the attack more precisely, and the accuracy on non-targeted inputs can be kept unchanged, making the bit-flip attack harder to detect.

Figure 11 shows an example of the expected outcome of a targeted bit-flip attack. The attacker’s optimization goal is shown in Equation (4). The part before the + measures the classification of some specific inputs into the target categories, and the part after the + measures the classification of the remaining inputs, whose accuracy should ideally remain almost unchanged.
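One way to write the two-term objective described above is sketched below. The notation is an assumption introduced here for illustration: $x_s$ are the specific inputs with target class $t_s$, $x_r$ are the remaining inputs with true labels $t_r$, $\hat{B}$ are the flipped weights, and $\ell$ and $f$ are the loss and DNN output as before. The original Equation (4) may weight or constrain the terms differently.

```latex
\min_{\hat{B}} \; \ell\big(f(x_s, \hat{B}),\, t_s\big) + \ell\big(f(x_r, \hat{B}),\, t_r\big)
```

The first term drives the chosen inputs toward the attacker's class, while the second penalizes any change in how the remaining inputs are classified, which is what keeps the attack concealed.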

Figure 11. The targeted attack example.

The complexity of a targeted attack is basically the same as that of an untargeted attack when implementing the bit flips. However, because the accuracy on non-target inputs must be maintained, the complexity of locating vulnerable elements is slightly higher than for an untargeted attack.

Detailed related work:

Table 3 lists the detailed attack aspects for targeted bit-flip attacks. Liu et al. [41] propose two methods, Single Bias Attack (SBA) and Gradient Descent Attack (GDA), to modify the parameters of DNNs. SBA modifies a bias value so that the change propagates to the corresponding adversarial class, thus directly affecting the DNN output. This attack is independent of the activation function and considers only efficiency, not stealth. GDA pays more attention to stealth: it only slightly modifies the DNN weight parameters, guided by the gradient, to increase the probability of classification into the target class. The final goal of GDA is that results other than the specified ones are unaffected. This work does not state that a bit-flip attack is adopted, but bit flipping is applicable to both methods. Zhao et al. [42] propose a stealthy method that makes DNNs misclassify some specific targets. Based on ADMM, they solve the problem by decomposing it into sub-problems and optimizing them alternately. In the process, the $\ell_0$ norm and $\ell_2$ norm are used to limit the number and magnitude of the modified parameters, so that the classification of non-targets remains accurate with minimal parameter modification. Rakin et al. [43] propose T-BFA, a targeted adversarial weight attack that uses a class-dependent vulnerable weight bit search algorithm to identify the weight bits highly correlated with the target class, and then flips those bits to misclassify selected inputs. They formulate three attack objectives (many-to-many, many-to-one, and stealthy one-to-one), use an optimized PBS to determine the bits to flip in each layer, and then select the best layer in which to perform the bit flips.

Khare et al. [44] propose LI_T_BFA, which uses HRank to obtain the significance information of layers in DNNs and determine the most important layers, as well as the corresponding features. As a result, the accuracy on a selected class drops to the level of random guessing while the accuracy on other classes remains unchanged, which improves the stealth of the attack. LI_T_BFA loads only the data of the target class when launching the attack and chooses a layer as far toward the rear of the network as possible. The further back the attacked layer is, the less impact the attack has on other classes, but the more bits need to be flipped. LI_T_BFA makes a trade-off between these factors. They also propose some defense methods: quantizing the weights differently for parity to defend against PBFA’s flipping of MSBs; performing single-epoch training to reshape the weights; using average pooling instead of max pooling to reduce the impact of weight flips; changing the activation function from an unbounded function, such as ReLU, to a function with saturation limits, such as tanh or ReLU_N; and placing the key layers of the DNN in a memory region with strong error correction to prevent attacks on those layers. Ghavami et al. [45] propose using bit flipping to evade defenses against adversarial attacks. With well-designed adversarial samples, inputs are assigned to the attacker’s predetermined category even when defensive measures are present. The method is broadly similar to methods such as T-BFA: after modeling the attack, PBS is used to flip some bits along the opposite direction of the gradient, degrading the robustness and accuracy of the DNN.

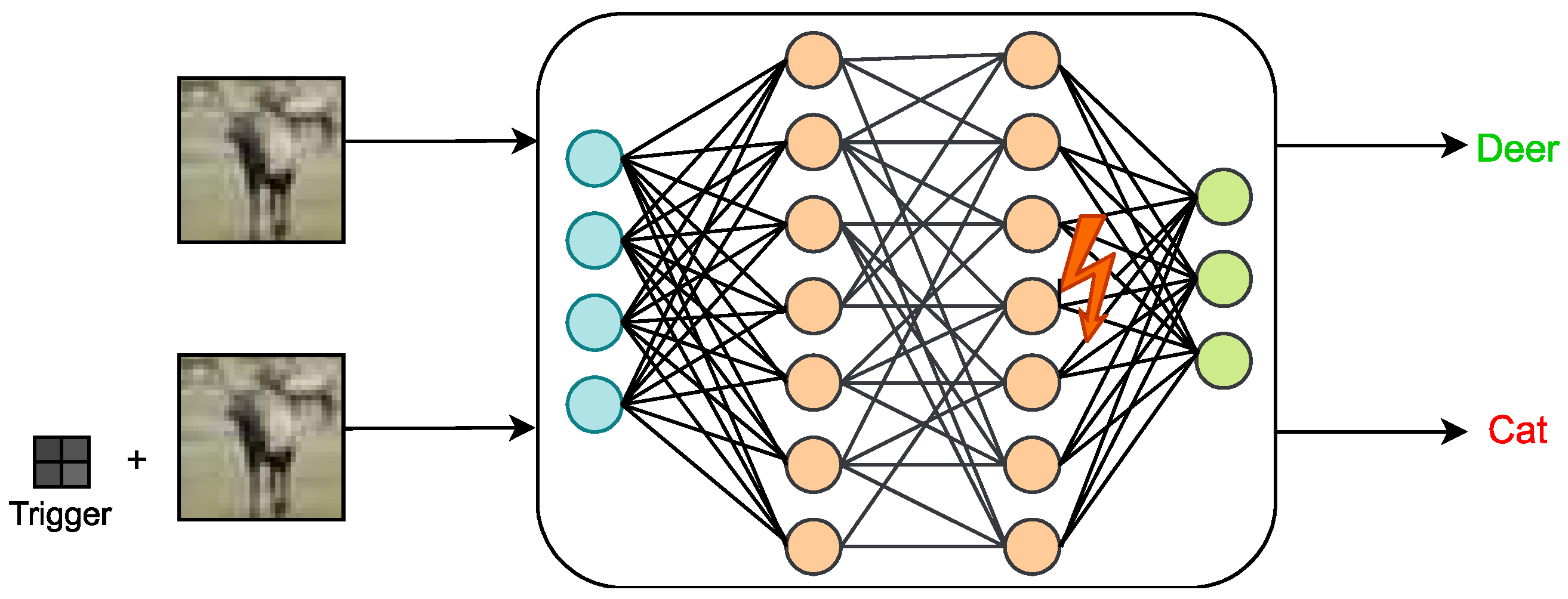

3.3.3. Bit-Flip Based Trojan Attack

Most DNNs today are based on open-source architectures or are entrusted to commercial organizations with powerful computing capabilities for training, which means that the supply chain of DNNs is not fully trusted. Previous trojan-related work on DNNs therefore interfered with the training process [46], for example by poisoning the training data or generating inputs with triggers. In practice, however, even DNN models that come from a fully trusted training process are still subject to bit-flip-based trojan attacks during inference. As shown in Figure 12, the goal of a bit-flip-based hardware trojan is to classify inputs that carry the injected trigger into specified classes. It contains two main parts. (1) Generating triggers, as shown in Equation (5). Equation (5) compares the output that the DNN, with its weights modified, produces for an input with the trigger added against the target output. The idea is that the generated trigger brings the output of the DNN with modified weights as close as possible to the target class.

Figure 12. Bit flip based attack Trojan example.

(2) Inserting the trojan. Triggers are specific patterns that control trojan activation and are generally embedded in the input. The trojan is then used to change the relevant parameters of the DNN model to implement the attack. Upon receiving an input with an embedded trigger, the DNN that has undergone the trojan bit flips classifies the input into a specific class, while inputs without the trigger are largely unaffected. The bit flipping that changes the parameters is performed as shown in Equation (6); the bits to flip are determined using gradient descent.
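Embedding a trigger in an input, as described above, often amounts to stamping a small patch onto the image. The sketch below shows only that stamping step on a toy image stored as nested lists; the patch values and position are arbitrary assumptions, and learning the trigger itself (Equation (5)) is not covered.

```python
def apply_trigger(image, trigger, top, left):
    """Return a copy of `image` with `trigger` stamped at (top, left)."""
    stamped = [row[:] for row in image]  # leave the clean input untouched
    for r, trigger_row in enumerate(trigger):
        for c, value in enumerate(trigger_row):
            stamped[top + r][left + c] = value
    return stamped


clean = [[0] * 4 for _ in range(4)]  # hypothetical 4x4 grayscale input
patch = [[9, 9], [9, 9]]             # hypothetical 2x2 trigger pattern

triggered = apply_trigger(clean, patch, top=2, left=2)
for row in triggered:
    print(row)
```

Only inputs carrying the patch should activate the trojan; the clean copy is what the bit-flipped DNN is expected to keep classifying normally.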

This type of work needs to ensure both the effectiveness and the stealthiness of the attack. The attack model requires access to the parameters and inputs of the DNN, so practical implementation may be relatively limited. Given practical difficulties, such as the small time window for bit flipping and the difficulty of inducing flips, the above-mentioned attack methods all try to flip the fewest bits needed to achieve the attack goal, so finding the bits with the best flipping effect is one of the key steps. Progressive Bit Search (PBS) is an important method used in this type of attack, which aims to find the bits that maximize the loss function after flipping. PBS goes through multiple iterations, and each iteration has two steps: (1) Search the weight bits that can be flipped within each layer, and select the top-ranked bits, sorted by gradient, as candidates. This process is expressed in Equation (7). (2) Compare the effect of bit flipping across layers and select the layer with the best effect for bit flipping; the direction of the bit flips is consistent with the direction that optimizes the loss function. This process is expressed in Equation (8), which involves the weights after flipping and the loss function evaluated after the flip.
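The two steps of a PBS iteration can be sketched on a toy model as follows. This is a simplified illustration under stated assumptions, not the implementation from the cited works: weights are int8 values in plain Python lists, the gradient is approximated by finite differences instead of backpropagation, bits are ranked by the first-order estimate gradient times weight change, and the model, loss, and names (`pbs_step`, `toy_loss`) are hypothetical.

```python
def flip_bit(weight: int, bit: int) -> int:
    """Flip one bit of a two's-complement int8 weight."""
    raw = (weight & 0xFF) ^ (1 << bit)
    return raw - 256 if raw >= 128 else raw


def grad(loss, layers, li, wi):
    """Central finite difference of the loss w.r.t. one weight
    (a stand-in for a backpropagated gradient)."""
    saved = layers[li][wi]
    layers[li][wi] = saved + 1
    up = loss(layers)
    layers[li][wi] = saved - 1
    down = loss(layers)
    layers[li][wi] = saved
    return (up - down) / 2.0


def pbs_step(loss, layers, n_b=1):
    """One PBS iteration; returns (loss_after, [(layer, weight, bit), ...])."""
    best = None
    for li, layer in enumerate(layers):
        # Step 1 (in-layer search): rank every bit by gradient * weight change.
        ranked = []
        for wi, w in enumerate(layer):
            g = grad(loss, layers, li, wi)
            for bit in range(8):
                ranked.append((g * (flip_bit(w, bit) - w), wi, bit))
        ranked.sort(reverse=True)
        chosen = [(wi, bit) for _, wi, bit in ranked[:n_b]]
        # Trial-flip the candidates, measure the real loss, then restore.
        saved = list(layer)
        for wi, bit in chosen:
            layer[wi] = flip_bit(layer[wi], bit)
        trial = loss(layers)
        layer[:] = saved
        # Step 2 (cross-layer search): remember the layer with the highest loss.
        if best is None or trial > best[0]:
            best = (trial, [(li, wi, bit) for wi, bit in chosen])
    for li, wi, bit in best[1]:  # commit the flips of the winning layer only
        layers[li][wi] = flip_bit(layers[li][wi], bit)
    return best


def toy_loss(layers):
    """Squared error of a two-layer linear toy model on one fixed sample."""
    hidden = layers[0][0] * 1 + layers[0][1] * 3
    return (layers[1][0] * hidden - (-200)) ** 2


layers = [[10, -20], [5]]
result = pbs_step(toy_loss, layers)
print(result, layers)
```

Calling `pbs_step` repeatedly gives the progressive behavior: each iteration commits the flips of one layer and the next iteration starts from the already modified weights. The gradient-based ranking is a first-order heuristic, so it is not guaranteed to find the single most damaging bit.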

A trojan-based bit-flip attack requires inserting a trigger into the input in addition to flipping the vulnerable elements in the DNN. Overall, the number of bits flipped in this type of work is not much different from that in the other two types, so the complexity is similar. The complexity of generating the trigger is related to the structure of the bit-flipped DNN and, generally speaking, differs little between works.

Detailed related work:

Table 4 lists the detailed attack aspects for bit-flip-based trojan related work. Venceslai et al. [47] propose NeuroAttack, a cross-layer attack in which a crafted input triggers a hardware trojan, which in turn performs a bit-flip attack. The accuracy of the DNN is normal before triggering and decreases afterward. The trigger is computed and then embedded as a stamp or noise in the input. The goal of this work is to implant the hardware trojan into carefully selected neurons in a certain layer, so that when those neurons are activated, the hardware trojan is activated at the same time. Rakin et al. [48] propose TBT, which successively uses the Neural Gradient Ranking (NGR) algorithm and the Trojan Bit Search algorithm to identify the vulnerable neurons and weight bits. The attacker can then design triggers specifically to target these neurons and bits. After the attacker embeds the trojan into the DNN by bit flipping, the DNN still processes clean inputs with normal inference accuracy, but when the attacker activates the trojan by embedding the trigger into any input, the DNN classifies that input into a certain target class. Breier et al. [49] explore injecting errors into the ReLU activation function during the training phase of the DNN, where the malicious inputs can be derived by solving constraints. The DNN that is injected with errors during the training phase classifies the malicious inputs into the expected classes. This approach essentially uses methods such as bit flipping in the training phase to achieve the effect of an adversarial input attack.

Chen et al. [50] present ProFlip, a trojan attack framework that progressively identifies and flips a small number of bits in a DNN model, shifting the DNN’s prediction to a preset class. The attack can be divided into three parts: (1) identify the significant neurons in the last layer using a saliency map based on the forward derivatives; (2) identify the vulnerable bits in the DRAM storing the DNN parameters; and (3) determine an effective trigger pattern for the target class such that the generated triggers maximize the output of the important neurons, so that the DNN predicts the specified category for inputs containing the trigger. Tol et al. [51] propose a method that uses RowHammer to implement backdoor injection attacks. They first demonstrate that the capabilities of RowHammer were overestimated in previous work, so attacks need to be considered under realistic constraints. The attack is consistent with the bit-flip trojan attack, except that when determining the vulnerable weights, there is a limit on the number of bits that can be flipped in a memory page. At the same time, the number of bits that need to be flipped should be reduced as much as possible. Bai et al. [52] propose HPT, which uses bit flips to insert hidden behavior in DNNs, misclassifying triggered inputs while leaving other inputs unaffected. The trigger here is a perturbation added to the input at inference time, kept as small as possible while minimizing the loss function that classifies the triggered inputs into a specific category. ADMM is used to alternately optimize the hyperparameters involved in the loss function.
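The per-page limit on bit flips mentioned above for RowHammer-based backdoor injection can be sketched as a filter over ranked candidate bits. The 4096-byte page size, the limit of one flip per page, the greedy selection, and the candidate scores are all assumptions made for illustration; the cited work may select bits differently.

```python
PAGE_SIZE = 4096  # bytes per memory page (assumed)


def select_flips(candidates, max_per_page=1):
    """Greedily keep the highest-scoring candidates while allowing at most
    `max_per_page` flips in any one page.

    candidates: list of (score, byte_offset, bit) tuples."""
    used = {}
    chosen = []
    for score, offset, bit in sorted(candidates, reverse=True):
        page = offset // PAGE_SIZE
        if used.get(page, 0) < max_per_page:
            used[page] = used.get(page, 0) + 1
            chosen.append((score, offset, bit))
    return chosen


# Hypothetical ranked candidates: (score, byte offset in the weight file, bit).
candidates = [(0.9, 100, 7), (0.8, 200, 6), (0.5, 5000, 7), (0.4, 9000, 3)]
selected = select_flips(candidates)
print(selected)
```

The second-best candidate is dropped because it shares a page with the best one, which shows how the constraint can force the attacker to accept less effective bits.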

Mukherjee et al. [53] propose a hardware trojan attack on DNNs deployed on FPGAs. This hardware trojan is essentially a glitch trigger: when it is triggered, malicious glitches are inserted and the activation function of the DNN is modified to reduce the accuracy. The hardware trojan is activated by an input embedded with certain fixed-intensity pixels, making the attack very stealthy. Cai et al. [54] propose an attack framework that applies RowHammer during the training of DNNs to induce bit flips in the feature mapping process. Perturbations caused by the bit flips are learned as triggers, and inputs containing specific triggers are then misclassified by the bit-flipped DNN. They derive a bit-flipping strategy that associates the feature mapping with the output target label. The attacker computes the trigger pattern from the perturbation of the feature mapping and patches it onto the input, making the attack stealthy by targeting only specific classes. Zheng et al. [55] propose TrojViT, which induces predefined misbehavior in ViT (Vision Transformer) models by corrupting inputs and weights. This work includes two stages: generating triggers and inserting the trojan. When generating triggers, the salience of candidate trigger positions is ranked to determine where the trigger should be inserted into the input, and the trigger is trained to activate the trojan more effectively. When inserting the trojan, parameter filtering is used to minimize the number of bits that need to be flipped. Bai et al. [56] propose two attack methods, SSA and TSA. SSA is a single-sample attack, which stealthily reclassifies certain inputs into other classes by modifying the weights. TSA is a trojan attack, which generates triggers for precise triggering based on the modified weights. They formulate the attack as a mixed-integer programming problem, so that ADMM can be used to efficiently determine the bit states in memory and thus which bits to flip.