1. Introduction

From mobile devices to data centers, energy efficiency has become one of the primary considerations for processor designers. Modern processor design must improve CPU performance while increasing power consumption as little as possible. With the end of Dennard scaling [1], process evolution alone can no longer deliver large gains in processor energy efficiency, so design trade-offs have become more prominent in CPU design. Besides process evolution, there are many ways to reduce power consumption, such as power gating, clock gating, and dynamic voltage and frequency scaling (DVFS) [2]. The idea behind these techniques is to lower the CPU's performance when it has few or no tasks, saving energy with little effect on overall performance [3,4]; this requires the hardware and the operating system to cooperate in sensing the CPU workload [5]. Enabling these techniques at the right time can improve the processor's energy efficiency.

In earlier processor implementations, the operating system controlled DVFS decisions, whereas recent processors use hardware mechanisms to manage DVFS. Hardware-managed DVFS can read memory-related performance event counters to estimate how sensitive the workload is to frequency changes. For memory-intensive programs, reducing the frequency has little effect on performance and can significantly reduce power consumption. Compared with OS-managed DVFS, hardware-managed DVFS can obtain more information about the workload and save more power in memory-intensive workloads. With the rise of the RISC-V ISA, a growing body of research on hardware power management strategies has emerged alongside RISC-V chips. The authors in [6] showed that hardware DVFS can achieve a granularity of 100 μs. Current power management methods with 100 μs granularity have the following shortcomings:

Current methods cannot respond to sub-μs burst memory-intensive program phases, even though actual workloads contain many such bursts.

Current methods cannot respond to workload changes in time, because phase changes in actual workloads do not necessarily align with 100 μs boundaries. Failing to respond promptly incurs additional performance and power overhead.

The solution to both problems is faster DVFS state changes and finer-grained power management. However, the physical limitations of the voltage regulator prevent faster DVFS state switching. This paper addresses these deficiencies by adding a digital frequency divider to the DVFS system and using fast workload sampling strategies. Both the frequency divider and fast sampling require accurate workload information within a shorter sampling interval. Therefore, this paper also improves the state-of-the-art CRIT method so that it produces accurate results within a shorter sampling interval.

Finally, we modified the ChampSim simulator to simulate the performance impact of frequency changes and used the McPAT simulator to calculate the processor's power consumption. On this simulation platform, we compared the proposed power management strategy with commonly used strategies. The results show that the proposed method achieves better energy efficiency than existing power management strategies. This paper offers the following contributions:

We propose a fine-grained power management strategy that uses a digital frequency divider to handle burst memory-intensive workloads that DVFS cannot handle, and uses fast sampling to obtain the workload status for faster DVFS decisions.

We improve the state-of-the-art CRIT approach for estimating memory time so that it yields a more accurate estimate within a shorter sampling interval.

We implement the above power management strategies and several existing ones on the simulator and compare their energy consumption, performance, and energy efficiency.

The rest of this paper is organized as follows. Section 2 introduces the background, Section 3 describes the proposed method in detail, Section 4 analyzes the evaluation results of the proposed power management strategy and compares it with previous work, and Section 5 concludes the paper.

2. Background

DVFS [7] is one of the most widely used power management technologies and has been applied to almost all microprocessors. In DVFS, each voltage domain of the processor supports dynamic voltage and frequency adjustment. The operating system or hardware dynamically adjusts each core's DVFS level according to the processor's workload.

DVFS was initially controlled directly by the operating system, with the hardware providing only a control interface. The operating system judges the running state of the CPU from its utilization and adjusts the DVFS level accordingly. When CPU utilization is low, there are few active tasks, and the operating system lowers the CPU's voltage and frequency. When CPU utilization is high, the CPU needs more speed to complete its computing tasks, and the operating system raises the voltage and frequency [8]. In the Linux kernel, the cpufreq module manages DVFS. A DVFS strategy in cpufreq consists of a driver and a governor. The driver is the interface through which the operating system adjusts the CPU frequency and voltage, and its code is provided by the hardware manufacturer. The governor specifies the DVFS management strategy, and the Linux kernel provides several governors for managing DVFS [9]. The operating system manages DVFS at a granularity of about 10 ms.

The 10 ms response time of OS-managed DVFS is relatively long and may miss sudden workload changes and opportunities to improve the processor's energy efficiency. It also relies only on CPU utilization, without further optimization for different workloads. In 2015, Intel introduced SpeedShift™ [10] in Skylake processors. SpeedShift™ is a hardware-managed DVFS technology that brings DVFS management down to sub-ms granularity. A hardware DVFS management mechanism can also read workload-type information inside the CPU and manage power at a finer grain for different workloads.

SpeedShift™ uses the EARtH [11] algorithm, which roughly divides the CPU's running time into computing time and memory time. The algorithm introduces load sensitivity (SCA), the proportion of CPU computing time in the program's total running time, which indicates how sensitive the workload is to frequency changes. An SCA close to 1 indicates that computing time dominates the program's execution time, so the program's performance changes in proportion to the processor's frequency. An SCA close to 0 indicates that the program waits for memory accesses for most of its runtime, so frequency changes have little effect on its performance. Therefore, when SCA is small, the frequency can be reduced to obtain better energy efficiency without hurting performance. Skylake reads the SCA value every millisecond and automatically selects an appropriate frequency according to the user's configuration and the SCA value.
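To make the role of SCA concrete, the following Python sketch predicts run time at a lower frequency under the two-part model described above (computing time scales with frequency, memory time does not) and picks the lowest frequency whose predicted slowdown stays within a budget. The frequency list, the 10% budget, and the function names are illustrative assumptions; Intel has not published how SpeedShift™ maps SCA to a frequency.

```python
def predicted_time(base_time, sca, f_base, f_target):
    """Run time at f_target under a two-part model: the computing share (sca)
    scales with f_base / f_target, while the memory share (1 - sca) is unchanged."""
    return base_time * (sca * f_base / f_target + (1.0 - sca))


def pick_frequency(sca, levels, f_max, max_slowdown=0.10):
    """Return the lowest frequency in `levels` whose predicted run time is within
    (1 + max_slowdown) of the run time at f_max (SCA assumed measured at f_max)."""
    for f in sorted(levels):
        if predicted_time(1.0, sca, f_max, f) <= 1.0 + max_slowdown:
            return f
    return f_max


levels_ghz = [0.5, 1.0, 1.5, 2.0, 2.5]       # hypothetical frequency levels (GHz)
print(pick_frequency(1.0, levels_ghz, 2.5))   # compute-bound -> 2.5
print(pick_frequency(0.2, levels_ghz, 2.5))   # mostly memory-bound -> 2.0
print(pick_frequency(0.0, levels_ghz, 2.5))   # pure memory wait -> 0.5
```

The loop scans from the lowest level upward so that the first feasible frequency is also the most energy-saving one under this model.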

Skylake processors use stall-based performance counters to estimate the CPU's memory time, but Intel has not disclosed the technical details. Many studies have addressed memory time estimation. In superscalar processors, CPU operations and memory accesses often overlap in time, so defining memory time and determining its value remains an open problem. The methods commonly used to estimate memory time in processors are as follows.

The cache miss method counts misses in the processor's L2 or L3 cache, and the hardware performs a DVFS operation when the count exceeds a threshold. The authors in [6] implemented a hardware power management module in the RISC-V Hurricane-2 processor using the cache miss method. The module reads the number of L2 cache misses every 100 μs and reduces the frequency and voltage when the cache misses per kilo-instruction (MPKI) exceed 1.
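A minimal sketch of one decision step of such a cache-miss policy, assuming DVFS levels are numbered so that a larger number means a higher voltage and frequency. The function names are hypothetical, and keeping the current level when MPKI is at or below the threshold is our assumption, since the text only describes the step down.

```python
def mpki(misses, instructions):
    """Cache misses per kilo-instruction."""
    return 1000.0 * misses / instructions


def cache_miss_step(l2_misses, instructions, level, lowest_level, threshold=1.0):
    """One 100 us decision: step voltage/frequency down when L2 MPKI exceeds
    the threshold; otherwise keep the current level (hypothetical choice)."""
    if mpki(l2_misses, instructions) > threshold and level > lowest_level:
        return level - 1
    return level


# 250 L2 misses over 100,000 instructions is 2.5 MPKI, above the threshold of 1.
print(cache_miss_step(250, 100_000, level=7, lowest_level=0))  # -> 6
```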

Leading loads are described in [12,13,14], which report that the processor's memory time is determined by the time of the first load that misses in the L2 cache. Such a load is called a leading load, and at most one leading load is outstanding at any time. In [15], this method was applied in the operating system on an AMD CPU. Modern CPUs use miss status holding registers (MSHRs) to track the status of outstanding cache misses [16]. Since AMD processors assign the lowest-numbered inactive MSHR to each new memory request, the method counts the cycles during which MSHR0 holds an outstanding access and divides this count by the current frequency to obtain the leading load time.
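The following Python sketch illustrates the MSHR0-based measurement under stated assumptions: misses are given as hypothetical `(start_cycle, latency)` pairs, each is allocated to the lowest-numbered free MSHR, and only the cycles spent in MSHR0 are counted. Overlapping misses that land in other MSHRs are therefore excluded, which is the intent of the leading-load model.

```python
def mshr0_busy_cycles(misses, num_mshrs):
    """Allocate each (start_cycle, latency) miss to the lowest-numbered free MSHR
    and return the number of cycles MSHR0 is occupied."""
    free_at = [0] * num_mshrs          # cycle at which each MSHR becomes free again
    busy0 = 0
    for start, latency in sorted(misses):
        for i in range(num_mshrs):
            if free_at[i] <= start:
                free_at[i] = start + latency
                if i == 0:
                    busy0 += latency
                break
        else:
            raise RuntimeError("all MSHRs busy; a real core would stall this miss")
    return busy0


def leading_load_time(busy_cycles, freq_hz):
    """Convert MSHR0 busy cycles into seconds at the current core frequency."""
    return busy_cycles / freq_hz


# The second miss overlaps the first, so it goes to MSHR1 and is not counted.
cycles = mshr0_busy_cycles([(0, 200), (50, 200), (300, 100)], num_mshrs=4)
print(cycles, leading_load_time(cycles, 2.5e9))
```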

Stall time is an intuitive way to estimate CPU memory time: it reads the length of time during which the processor is stalled [12]. The authors in [17] implemented the stall time method for DVFS on real processors. Because neither the Intel i7 nor the AMD Phenom II provides performance counters for LLC stalls, that work approximates LLC stall time as the minimum of the total pipeline stall time and a worst-case LLC stall time that assumes no miss overlaps with any other.
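The approximation above reduces to a single `min`. In this sketch the per-miss latency is an assumed input; the text does not say how [17] obtained it.

```python
def approx_llc_stall_cycles(pipeline_stall_cycles, llc_misses, miss_latency_cycles):
    """Approximate LLC stall time without an LLC-stall counter. The worst case
    assumes every miss stalls for its full latency with no overlap, and the
    estimate can never exceed the total pipeline stall time."""
    worst_case = llc_misses * miss_latency_cycles
    return min(pipeline_stall_cycles, worst_case)


print(approx_llc_stall_cycles(10_000, 20, 300))  # -> 6000 (bounded by the worst case)
print(approx_llc_stall_cycles(5_000, 20, 300))   # -> 5000 (bounded by pipeline stalls)
```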

CRIT is a state-of-the-art memory time estimation method [18]. CRIT assumes that two nonoverlapping memory requests are interdependent, estimates the memory time as the length of the critical path through the memory requests, and introduces a set of hardware counters to record this critical path. CRIT maintains one global critical path counter, $CP$, and a critical path timestamp, $CP_i$, for each MSHR. When a load request enters the $i$-th MSHR, the mechanism copies $CP$ into $CP_i$. After $T$ cycles, the MSHR completes its refill, and CRIT sets $CP = \max(CP, CP_i + T)$. The value of $CP$ is the estimated memory access time of the previous time interval.

In current power management methods, the sampling interval for memory time equals the DVFS management interval. However, existing voltage regulators restrict DVFS: the transition overhead of current mainstream voltage regulators is 4–10 μs [19], and the DVFS management granularity in the studies we reviewed was above 100 μs. Yet most memory access operations take only a few hundred cycles. Using 100 μs as the observation interval for memory time misses fine-grained information about workload changes and the chance to save more energy.
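A quick calculation shows the scale mismatch, assuming the 2.5 GHz core used in the evaluation (Section 4): one 100 μs interval spans a quarter of a million cycles, while one 2048-cycle sample lasts well under a microsecond.

```python
CORE_FREQ_HZ = 2.5e9   # the 2.5 GHz core simulated in Section 4


def cycles_in(seconds, freq_hz=CORE_FREQ_HZ):
    """Number of core cycles that elapse in `seconds`."""
    return round(seconds * freq_hz)


def seconds_for(cycles, freq_hz=CORE_FREQ_HZ):
    """Wall-clock time taken by `cycles` core cycles."""
    return cycles / freq_hz


print(cycles_in(100e-6))        # 100 us DVFS interval -> 250000 cycles
print(seconds_for(2048) * 1e6)  # one 2048-cycle sample, in us (about 0.82)
```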

In actual workloads, memory access does not follow μs granularity, and many sub-μs bursts of memory-intensive accesses exist. Current methods cannot respond to this kind of workload.

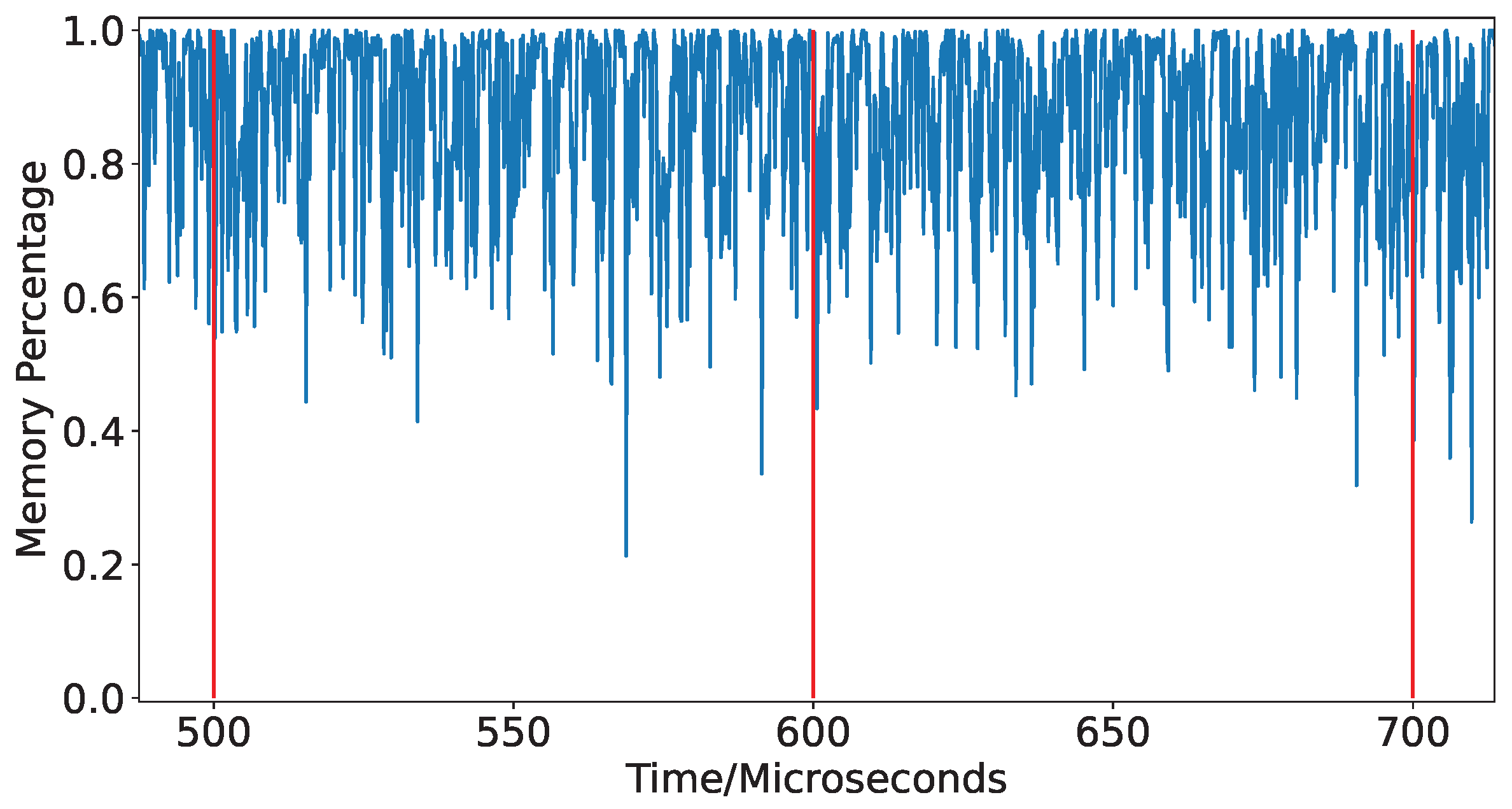

Figure 1 shows a running fragment of 605.mcf in SPEC CPU 2017. The y-axis indicates the proportion of memory time in the CPU's total running time in each 2048-cycle interval, obtained with the CRIT method; this proportion is the complement of SCA (1 − SCA) in the EARtH algorithm. The red vertical lines represent memory time sampling points every 100 μs. The proportion of memory access time suddenly increased between 290 and 300 μs, lasting 3 μs (about 9000 cycles). During this time, the traditional power management module could not respond to the increase in the proportion of memory access time.

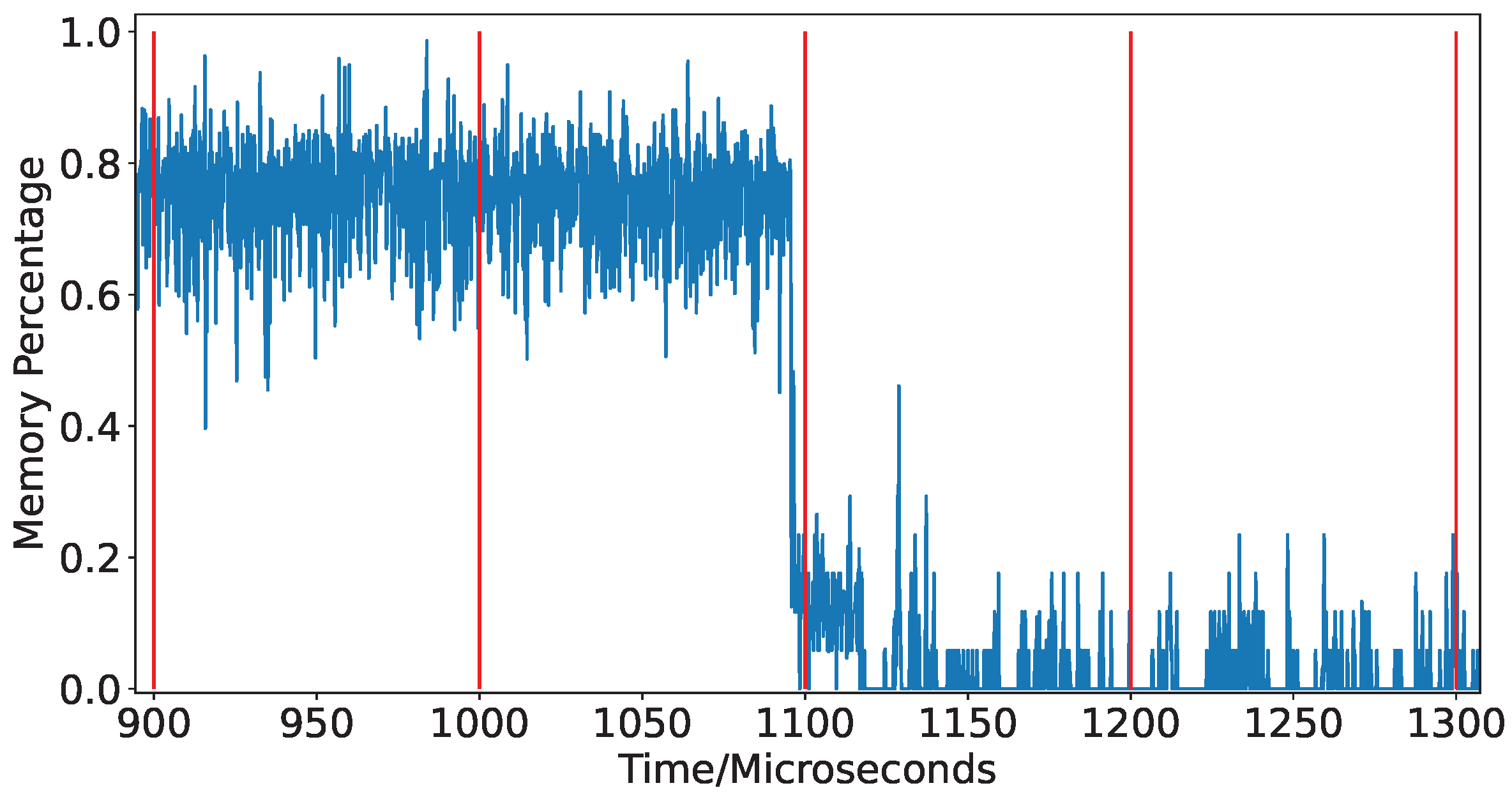

Figure 2 shows a running fragment of 619.lbm in SPEC CPU 2017. In this fragment, the processor's memory time changed rapidly. Although many short intervals have very high memory time, their average over 100 μs does not exceed the threshold for entering the next DVFS level. Because of the voltage regulator's limitations, current DVFS technology cannot further improve energy efficiency for this kind of workload.

The second problem is that the fixed observation window cannot respond to workload changes in time.

In Figure 1, the power management module calculated the average memory time between 200 and 300 μs at the 300th μs and obtained a low value, so the processor did not decrease its frequency between 300 and 400 μs. A similar lag occurs when exiting a memory-intensive phase.

Figure 3 shows another running fragment of the same program. Although the program exited the memory-intensive phase at 1100 μs, the processor kept a lower voltage and frequency until 1200 μs. When the processor enters or leaves a memory-intensive program segment, the current DVFS management mechanism fails to seize opportunities to improve energy efficiency in time.

Both problems stem from a sampling interval that is too long, for two reasons. First, the voltage settling time of mainstream voltage regulators, such as Intel's FIVR [20], is generally between 0.5 μs and 4 μs. Setting the voltage requires extra energy, so power management designers prefer each DVFS setting to be held for a long time to amortize the energy spent on the transition. Second, in some performance-counter-based memory time estimation methods, performance event counts and memory accesses are not synchronized. For example, a cache miss leads to hundreds of cycles of memory access time, and by the time the CPU starts to stall, the memory access has already been in flight for a while. Cache misses and leading loads are thus counted before the corresponding memory time elapses, and these memory time models require a relatively long sampling interval to hide this misalignment.

In [21], the authors showed that fine-grained switching and management of DVFS levels would considerably boost the processor's energy efficiency. However, implementing on-chip regulators presents challenges, including regulator efficiency and output voltage transient characteristics.

3. Proposed Method

3.1. Short-Term Frequency Adjustment Based on Digital Frequency Divider

Because of the voltage setting overhead, the power consumed during sudden workload changes cannot be reduced by shrinking the DVFS adjustment granularity. This paper proposes a dynamic frequency scaling method based on a digital frequency divider to reduce power consumption for this kind of workload.

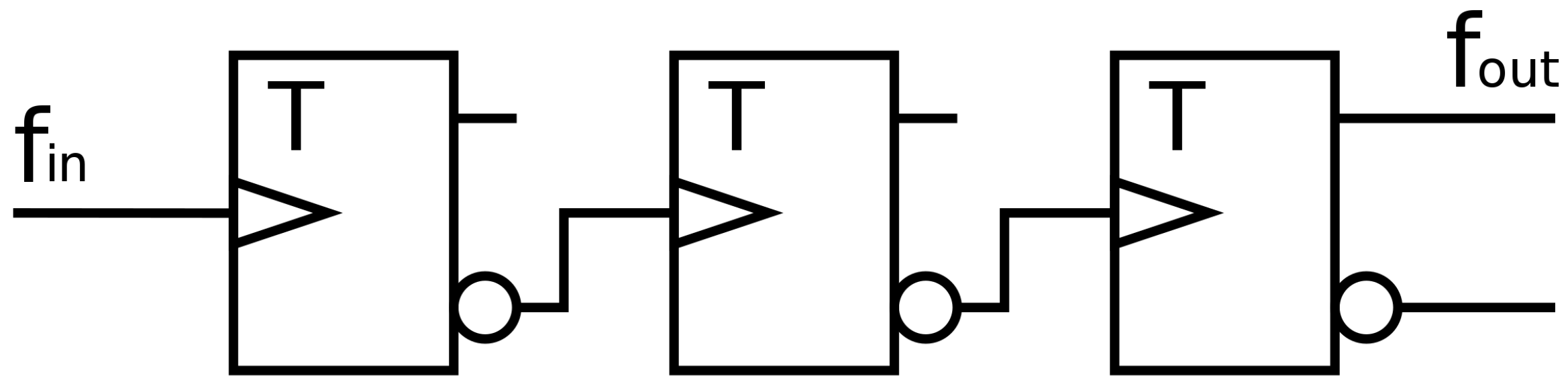

A frequency divider divides its input frequency by a ratio and outputs the result. The flip-flop scheme is the traditional way to implement integer division. The most straightforward configuration cascades flip-flops, each of which divides by 2; for example, three flip-flops in series divide by 8, as shown in Figure 4. Adding logic gates to the flip-flop circuit can achieve other division ratios. Placing a digital divider on the processor's clock tree allows the frequency to be reduced quickly. Loongson added frequency controllers based on digital frequency dividers to its 3A5000 processors, which support frequency scaling on both the core and noncore components [22].
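The cascade can be checked with a small behavioral model (not RTL). It assumes each stage is a toggle flip-flop that changes state when the previous stage's output falls, as in a ripple counter, and counts the rising edges that reach the last stage.

```python
def divided_rising_edges(input_rising_edges, stages):
    """Behavioral model of `stages` cascaded toggle flip-flops. Each input rising
    edge toggles stage 0; when a stage's output falls (1 -> 0), that transition
    toggles the next stage. Returns the rising edges seen at the last stage."""
    q = [0] * stages
    out_rising = 0
    for _ in range(input_rising_edges):
        k = 0
        while k < stages:
            q[k] ^= 1
            if q[k] == 1:              # rising edge: does not clock the next stage
                if k == stages - 1:
                    out_rising += 1
                break
            k += 1                     # falling edge ripples into the next stage
    return out_rising


for n in (1, 2, 3):
    print(f"{n} stage(s): divide by {64 // divided_rising_edges(64, n)}")
```

With three stages, 64 input edges produce 8 output edges, the divide-by-8 behavior described above.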

The divider only reduces the processor's frequency and cannot change the voltage, so the power saved by reducing frequency alone is limited: it reduces only the dynamic power of the clock network and of logic signal transitions. In comparison, lowering the voltage reduces both dynamic and static power. According to McPAT estimates, the ratio of static to dynamic power consumption is about 3:4 in the 22 nm process. Based on this ratio, we calculated the change in processor power consumption when adjusting only DVFS and when adding a digital divider to DVFS.

Table 1 compares the power consumption of DVFS alone and of DVFS with a digital divider at different DVFS levels, listing division ratios of 2 and 8; a real processor can also be configured with other division ratios. When DVFS has already lowered the frequency to the lowest level (1/8 of the maximal frequency), the digital divider brings only a small increase in energy efficiency. However, when the DVFS level is higher (above 3/8 of the maximal frequency), the digital frequency divider can significantly improve energy efficiency. Without DVFS (8/8 of the maximal frequency), digital frequency division alone can save nearly half of the power consumption. Combining digital frequency dividers with DVFS can therefore reduce the processor's power consumption and improve energy efficiency.
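The "nearly half" figure can be reproduced with a simple model built on the 3:4 static-to-dynamic ratio. The sketch assumes dynamic power scales as V²f and, as a simplification, static power scales linearly with V; these scaling laws are our assumptions, not the paper's stated model.

```python
STATIC_SHARE = 3 / 7    # static : dynamic = 3 : 4 at maximal V/f (McPAT estimate in the text)
DYNAMIC_SHARE = 4 / 7


def relative_power(v_ratio, f_ratio):
    """Power relative to running at maximal voltage and frequency.
    Assumes dynamic power ~ V^2 * f and (simplification) static power ~ V."""
    return STATIC_SHARE * v_ratio + DYNAMIC_SHARE * v_ratio ** 2 * f_ratio


# Divider only, no DVFS: the voltage stays at maximum while the clock is divided.
for ratio in (2, 8):
    print(f"divide by {ratio}: {relative_power(1.0, 1 / ratio):.3f} of full power")
# -> divide by 2: 0.714 of full power
# -> divide by 8: 0.500 of full power
```

Reproducing Table 1 at lower DVFS levels would also require the voltage at each level, which the text does not give.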

3.2. Workload Response Mechanism

As described in Section 2, the transition overhead of current mainstream voltage regulators is 4–10 μs, and the DVFS management granularity (in hardware management) in the studies we reviewed is above 100 μs. Therefore, in our simulation, the interval between two DVFS operations had to be greater than 100 μs. Because CPU workloads may contain burst memory-intensive accesses, we had to determine whether an observed high memory time proportion persisted for more than 100 μs before performing a DVFS operation.

In actual workloads, changes in memory access patterns seldom align with 100 μs boundaries. In existing solutions, the management unit measures memory access time every 100 μs and uses the result to predict the appropriate voltage and frequency for the next 100 μs. This paper proposes two methods to respond quickly to changes in memory access.

The first method uses a moving average. We examined the SPEC CPU 2017 benchmarks and found that most burst memory-intensive accesses last less than 10 μs, whereas persistent memory-intensive phases last much longer than 100 μs; few memory-intensive phases last between 10 and 100 μs. We therefore used a moving average filter with a 25 μs sliding window to filter out the impact of burst memory-intensive accesses, and performed a DVFS operation only when the sampled moving average was stable and reached the threshold. At time $T$, if there are $n$ sampled memory time records $M_1, \dots, M_n$ from time $T - 25\,\mu s$ to time $T$, the moving average of memory time at time $T$ is

$$\overline{M}(T) = \frac{1}{n}\sum_{k=1}^{n} M_k \tag{1}$$

Like other hardware power management methods, our methods can be implemented in a power management unit (PMU), usually a microcontroller with a simple pipeline running power management firmware. Algorithm 1 shows our power management firmware using the moving average method. In each sampling interval, the PMU reads the memory time detected by the hardware mechanism and updates the moving average over the past 25 μs.

| Algorithm 1. Power management firmware via moving average. |
| --- |
| moving_average_for_DVFS ← 0 |
| while 1 do |
| memory_time ← get_memory_time |
| update_moving_average_for_DVFS(memory_time) |
| if allow_DVFS() then |
| reset_dfs() |
| set_dvfs(moving_average_for_DVFS) |
| else |
| set_dfs(memory_time) |
| end if |
| end while |
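The moving average can be kept in a small ring of timestamped samples. This sketch takes the moving average to be the unweighted mean of the records inside the 25 μs window, which is one natural reading of the description above; the class name, integer-nanosecond timestamps, and sampling period in the usage lines are our assumptions.

```python
from collections import deque


class WindowedMemoryTime:
    """Moving average of memory-time samples over a sliding window in absolute time.
    Times are integer nanoseconds; the default window is the 25 us used in the text."""

    def __init__(self, window_ns=25_000):
        self.window_ns = window_ns
        self.samples = deque()        # (timestamp_ns, memory_time_fraction)
        self.total = 0.0

    def update(self, now_ns, memory_time):
        """Add one sample and return the mean over [now_ns - window_ns, now_ns]."""
        self.samples.append((now_ns, memory_time))
        self.total += memory_time
        while self.samples[0][0] < now_ns - self.window_ns:
            _, old = self.samples.popleft()
            self.total -= old
        return self.total / len(self.samples)


w = WindowedMemoryTime()
for t in range(0, 50_000, 1_000):                 # one sample per us (hypothetical)
    avg = w.update(t, 1.0 if t >= 40_000 else 0.0)
print(len(w.samples), avg)                        # 26 samples in the window, 10 of them 1.0
```

Keeping timestamps in absolute time rather than cycles matches the text's point that the window may span several frequency settings.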

Because multiple frequency configurations may occur within one moving average window (for example, the first 10 μs of a 25 μs window may run at a higher frequency and the remaining 15 μs at a lower one), we recorded the processor's running time and memory access time in absolute time rather than in processor clock cycles. The firmware maintains a memory_time_level variable. When this variable has been stable for a specific time and the voltage regulator constraint is met, the firmware sets a new DVFS level according to the variable's value and the previous DVFS level. When memory time rises or falls rapidly within a short time, we did not perform a DVFS operation immediately after the moving average reached the threshold: because two DVFS operations must be separated by more than 100 μs, we could not be sure whether the moving average of memory time would continue to increase or decrease. We therefore added a parameter named stable_limit, and the system allows DVFS operations only when memory_time_level has remained stable for the time stipulated by stable_limit.
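The control flow of Algorithm 1 together with the stable_limit rule can be sketched as follows. The names memory_time_level, stable_limit, and the reset_dfs/set_dvfs/set_dfs steps come from the text; the class, the `level_of` mapping, and the rule that allow_DVFS() also requires a level change are our assumptions.

```python
MIN_DVFS_GAP_NS = 100_000   # two DVFS operations must be more than 100 us apart


class MovingAverageFirmware:
    """Hypothetical sketch of Algorithm 1 with a stable_limit check. Times are in ns,
    memory times are fractions in [0, 1], and level_of() maps a memory-time fraction
    to a memory_time_level (higher level = more memory-bound = lower frequency)."""

    def __init__(self, level_of, stable_limit_ns):
        self.level_of = level_of
        self.stable_limit_ns = stable_limit_ns
        self.memory_time_level = None     # level implied by the moving average
        self.level_since = 0              # time at which memory_time_level last changed
        self.dvfs_level = 0               # level currently applied by DVFS
        self.last_dvfs = None             # time of the last DVFS operation

    def allow_dvfs(self, now):
        changed = self.memory_time_level != self.dvfs_level
        stable = now - self.level_since >= self.stable_limit_ns
        spaced = self.last_dvfs is None or now - self.last_dvfs > MIN_DVFS_GAP_NS
        return changed and stable and spaced

    def step(self, now, moving_average, memory_time):
        """Process one sample; return ("dvfs", level) or ("dfs", level)."""
        level = self.level_of(moving_average)
        if level != self.memory_time_level:
            self.memory_time_level, self.level_since = level, now
        if self.allow_dvfs(now):
            # reset_dfs(); set_dvfs(): undo the divider and move the V/f level.
            self.dvfs_level, self.last_dvfs = level, now
            return ("dvfs", level)
        # set_dfs(): while DVFS is not allowed, the divider follows the raw sample.
        return ("dfs", self.level_of(memory_time))


fw = MovingAverageFirmware(lambda m: 1 if m >= 0.5 else 0, stable_limit_ns=10_000)
actions = [fw.step(t, 0.8, 0.8) for t in range(0, 20_000, 1_000)]
print(actions[9], actions[10])   # divider only, then the first DVFS operation
```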

The second response mechanism is dense sampling. Although the interval between two voltage adjustments had to exceed 100 μs, we could use a shorter sampling interval to detect memory time. Algorithm 2 shows the power management firmware based on dense sampling. When the estimated memory time meets the DVFS conditions, the management unit performs a DVFS operation immediately. Compared with the moving average method, this method requires no complex control logic and stores less historical data, but it is less accurate. Both approaches are implemented in the simulator and compared in this paper.

| Algorithm 2 Power management firmware via dense sampling. |

|
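Because the body of Algorithm 2 is not legible in this text, the following is only a sketch of the dense-sampling behavior described above: each short sample is mapped to a memory_time_level, and a DVFS operation fires as soon as the level changes, subject to the 100 μs spacing. The function name, the `level_of` mapping, and the sample trace are hypothetical.

```python
MIN_DVFS_GAP_NS = 100_000   # minimum spacing between voltage changes (from the text)


def dense_sampling_dvfs(samples, level_of, start_level=0):
    """`samples` yields (time_ns, memory_time_fraction). Issue a DVFS operation as
    soon as a sample's level differs from the current one and the previous DVFS
    operation is more than 100 us old. Returns the (time_ns, level) events."""
    current, last, events = start_level, None, []
    for now, memory_time in samples:
        level = level_of(memory_time)
        if level != current and (last is None or now - last > MIN_DVFS_GAP_NS):
            current, last = level, now
            events.append((now, level))
    return events


trace = [(t, 0.9 if 5_000 <= t < 50_000 else 0.1) for t in range(0, 200_000, 1_000)]
print(dense_sampling_dvfs(trace, lambda m: 1 if m >= 0.5 else 0))
# -> [(5000, 1), (106000, 0)]
```

The memory-intensive phase is caught at the first dense sample, but the return to level 0 waits until the 100 μs spacing has elapsed.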

Our sampling interval had to be greater than 256 cycles so that the PMU could reliably complete its calculations. Compared with a fixed-window power management strategy, our method can recognize a continuous memory-intensive period as early as 25 μs after it begins and enter the low-frequency state, whereas traditional methods take at least 100 μs to identify it. Our mechanism also lets the processor return to a high-frequency state earlier when the memory-intensive period ends, reducing performance degradation.

3.3. Threshold Calculation

Calculating the thresholds is also an essential part of the power management strategy. Assume that, in a sampling period, the estimated memory time proportion is $m$, the maximal frequency is $f_{max}$, the previous frequency is $f_{prev}$, the target frequency is $f$, and our tolerance for performance loss is $x$. When $f < f_{prev}$, the variables should satisfy the following condition:

$$\frac{(1-m)\,f_{prev}}{f} + m \le (1+x)\left[\frac{(1-m)\,f_{prev}}{f_{max}} + m\right] \tag{2}$$

The left-hand side is the run time of the load at the target frequency, and the right-hand side is the run time of the load at the maximal frequency multiplied by the tolerance, both normalized to the length of the sampling period. Solving for $m$ gives:

$$m \ge \frac{a}{a+x}, \quad \text{where } a = f_{prev}\left(\frac{1}{f} - \frac{1+x}{f_{max}}\right) \tag{3}$$

To ensure that performance did not degrade too much, we set $x$ to 10%.

When $f > f_{prev}$, we had to ensure that the memory access time did not exceed a certain threshold, given by Equation (5), which depends on a parameter $s$. The value of $s$ depended on our settings of memory time levels. After determining these values, we could calculate the threshold for each pair of frequencies.

For example, suppose there are three memory time levels: 0, 1, and 2. At a given frequency, Equation (3) yields a threshold of 90% for entering the highest level; we can then select a number less than 90% as $s$ in Equation (5). If we select $s = 80\%$, Equation (5) yields the lower threshold. If the sampled memory time lies between the lower threshold and 90%, the memory time level is 1 and the processor frequency remains unchanged. If it exceeds 90%, the system decreases the processor's voltage and frequency. If it falls below the lower threshold, the processor's voltage and frequency increase.

The 90% threshold may seem unrealistically high, but we sampled memory time proportions as high as 90% in our simulations. The authors in [23] analyzed the performance of SPEC 2017 programs on an Intel i7-8700K, and the results showed that memory-bound time exceeded half of the overall run time for a considerable share of the programs. The authors in [21] also measured the proportion of memory time for some commonly used benchmarks in a simulator: the overall proportion of memory time in programs such as ocean-con, fft, and mcf4 exceeds 40%. It is therefore not surprising that some short fragments have a memory time proportion above 90%.

Table 2 shows the threshold results for power management with eight frequency levels. We first calculated the thresholds for target frequencies lower than the current frequency via Equation (3), and then multiplied the minimal threshold by 0.8 to obtain $s$ in Equation (5). We then calculated the thresholds for target frequencies higher than the current frequency according to Equation (5). With more frequency levels, the same method yields the threshold for switching between each pair of frequencies.

3.4. Fine-Grained Memory Time Estimation

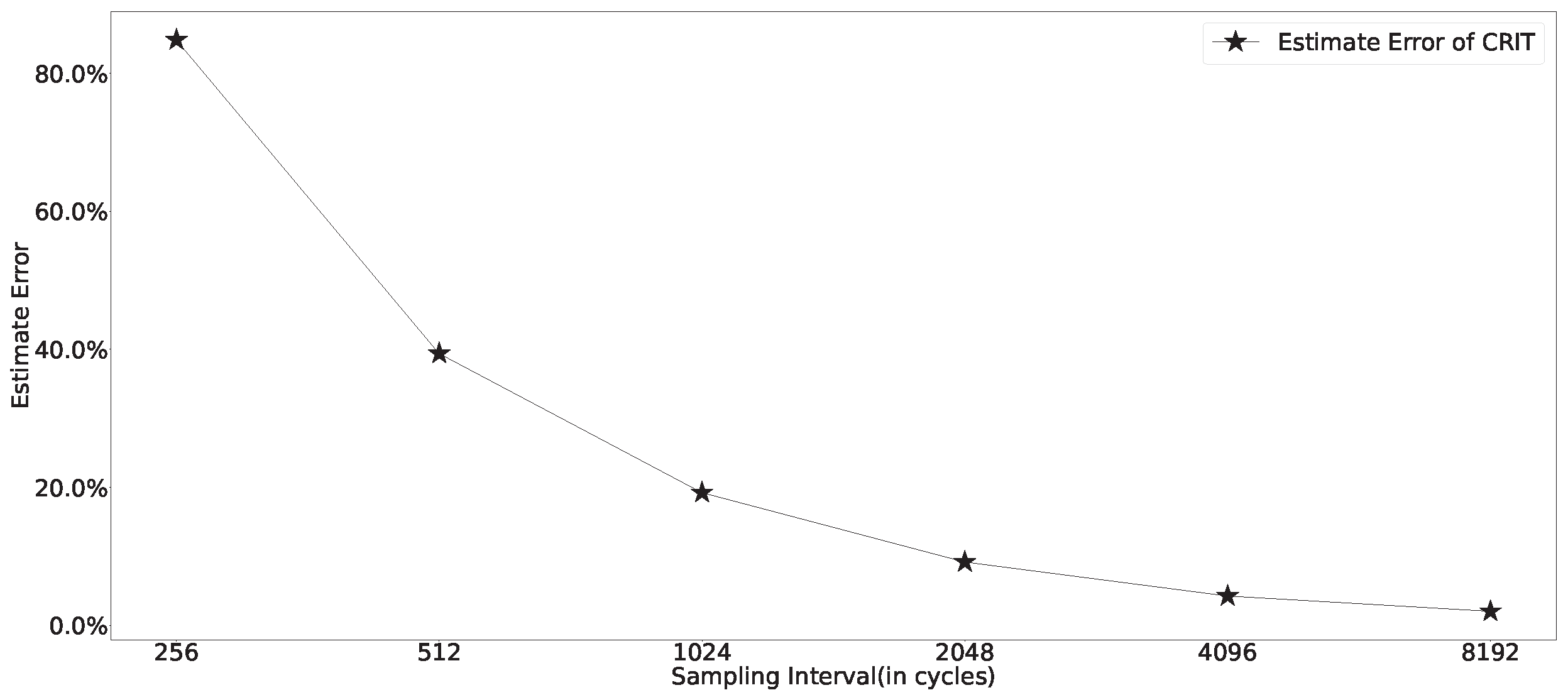

CRIT is a theoretically accurate model for estimating memory time, but it has a problem when sampling densely. In the CRIT method, the global critical path counter is updated only when a load request completes. If requests are still outstanding at a sampling point, the estimated memory time is shorter than the actual value. As the sampling interval becomes shorter, the relative error of CRIT increases: because the CPU's memory access latency is roughly fixed, the absolute error at each sampling point follows a fixed distribution, so shortening the sampling period increases the number of sampling points and accumulates a greater error.

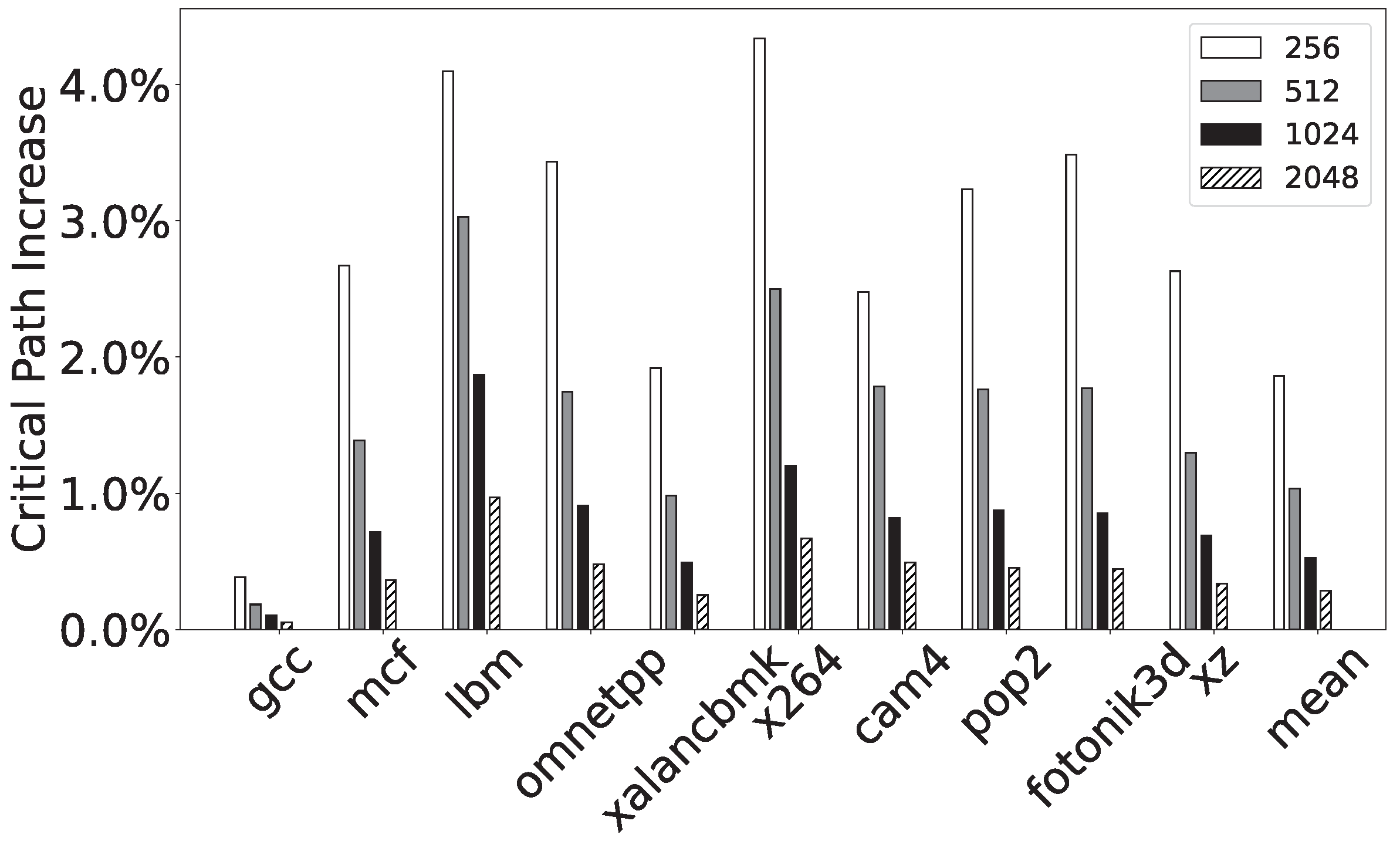

We implemented the CRIT method on the simulator platform and measured, for each sampling period, the error between the CRIT estimate and the actual global critical path, as shown in Figure 5. With a 256-cycle sampling period, the average error of CRIT exceeded 80%. Even with an 8192-cycle sampling period, the average error remained above 2%, and in some memory-intensive programs, such as lbm and cam4, it exceeded 5%.

We improved CRIT to solve this boundary problem, making it usable at a finer granularity. In CRIT, if the largest per-MSHR critical path counter is larger than the global critical path counter, it eventually replaces the global counter when its corresponding MSHR completes. We can therefore track the largest per-MSHR counter and take the maximum of it and the global counter as the memory time. Comparing all pairs of counters, however, incurs long delays and considerable hardware overhead. To reduce this overhead, we introduced a new counter: whenever any per-MSHR counter is larger than it, the new counter is incremented by 1, so it always equals the maximum of the global counter and the per-MSHR counters, i.e., the estimated memory time.

Algorithm 3 shows our memory time estimation method. At the start of every sampling interval, the mechanism resets all counters to 0, and at the end of the interval it sends the value of the new counter to a buffer of the CPU interface. The sum of the critical paths of all intervals is never smaller than the global critical path calculated by CRIT; for example, if we measured the critical path every cycle, every cycle with an outstanding memory access would be counted as part of the critical path. To evaluate the impact of this segmentation on accuracy, we selected sampling periods of 256, 512, 1024, and 2048 cycles and compared the sum of the sampled local critical paths with the real critical path on the simulator, whose detailed parameters are given in Section 4. As shown in Figure 6, across the SPEC CPU 2017 benchmarks, the average increase in sampled critical path length was less than 2% for all four intervals. This shows that critical path lengths can be obtained accurately with short sampling intervals.

| Algorithm 3 Memory time estimation method based on critical path. |

for all MSHR do end for for all MSHR do if MSHR i begin miss then end if if MSHR i finish refill then end if if MSHR i accessing then end if end for if any then end if

|
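Since the per-step assignments of Algorithm 3 are not legible in this text, the following cycle-level Python sketch is only our reading of the description. Each MSHR counter copies the global counter when a miss begins and counts up while the miss is outstanding. CRIT's global update runs when the refill finishes. The new counter is incremented whenever any per-MSHR counter exceeds it, and all counters reset at each interval boundary. Names and the within-cycle ordering are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Miss:
    mshr: int      # MSHR index the miss occupies
    start: int     # cycle at which the miss enters the MSHR
    latency: int   # cycles until its refill completes


def sample_critical_path(misses, num_mshrs, interval, total_cycles):
    """Per-interval memory-time estimates: plain CRIT (global counter only) and the
    improved estimate (running max of the global and per-MSHR counters)."""
    starts = {(m.start, m.mshr) for m in misses}
    ends = {(m.start + m.latency - 1, m.mshr) for m in misses}   # last busy cycle
    active = [False] * num_mshrs
    c = [0] * num_mshrs     # per-MSHR critical-path counters
    cp = 0                  # global critical path counter, as in CRIT
    running_max = 0         # new counter tracking max(cp, max c[i])
    crit, improved = [], []
    for cycle in range(total_cycles):
        if cycle > 0 and cycle % interval == 0:
            crit.append(cp)                       # report the finished interval,
            improved.append(running_max)
            cp, running_max = 0, 0                # then reset every counter
            c = [0] * num_mshrs
        for i in range(num_mshrs):
            if (cycle, i) in starts:              # miss begins: copy the global counter
                active[i], c[i] = True, cp
            if active[i]:                         # one more cycle on this request's path
                c[i] += 1
        if any(active[i] and c[i] > running_max for i in range(num_mshrs)):
            running_max += 1                      # counters grow by at most 1 per cycle
        for i in range(num_mshrs):
            if active[i] and (cycle, i) in ends:  # refill done: CRIT's global update
                cp = max(cp, c[i])
                active[i] = False
    crit.append(cp)
    improved.append(running_max)
    return crit, improved


# A 300-cycle miss straddling a 256-cycle boundary: plain CRIT reports 0 for the
# first interval, while the running maximum reports the 256 cycles already spent.
print(sample_critical_path([Miss(0, 0, 300)], 1, 256, 512))   # -> ([0, 44], [256, 44])
```

The per-interval improved estimates sum to the 300-cycle critical path, whereas plain CRIT sampled at the same boundaries recovers only 44 cycles.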

The hardware overhead of our critical path measurement model is minimal. Assuming a 2048-cycle sampling interval, each counter needs only an 11-bit register and an 11-bit adder, plus an additional 11-bit comparator for each per-MSHR counter. This overhead is small compared with the base cost of each MSHR in current processors (at least a 512-bit data register), and it is also smaller than that of the original CRIT. We applied this mechanism to the Verilog code of a processor and evaluated the area with Synopsys Design Compiler; the change increased the CPU area by less than 0.01%.

4. Experiments, Results, and Discussion

We used ChampSim for performance evaluation and McPAT for power evaluation [24]. We modified the MSHR of the L2 cache in ChampSim to support reading the memory critical path and leading loads time, and added support for dynamic frequency scaling, which we modeled by scaling the LLC and memory latencies proportionally. Our frequency scaling simulation supports 64 frequency levels: 8 DVFS levels, from 1/8 to 8/8 of the maximal frequency, each with eight digital frequency division levels. We also added PMU code that simulates the PMU's control of DVFS levels. The performance events recorded by ChampSim and the time spent at each DVFS level were then fed into McPAT to obtain energy consumption.

We simulated a 2.5 GHz out-of-order processor; Table 3 shows its configuration.

We compare the leading loads, cache miss, stall time, CRIT, and tcp strategies, where tcp is our implementation of Algorithm 1. The thresholds of each memory time level were calculated with Equations (3) and (5). We simulated tcp with 512-, 1024-, 2048-, and 4096-cycle sampling intervals. The other methods use the same thresholds as tcp. For the cache miss method, we first measured the average L2 miss latency in ChampSim and multiplied it by the miss count to obtain the memory time. In our simulation, leading loads, cache miss, stall time, and CRIT all used a management granularity of 100 μs.

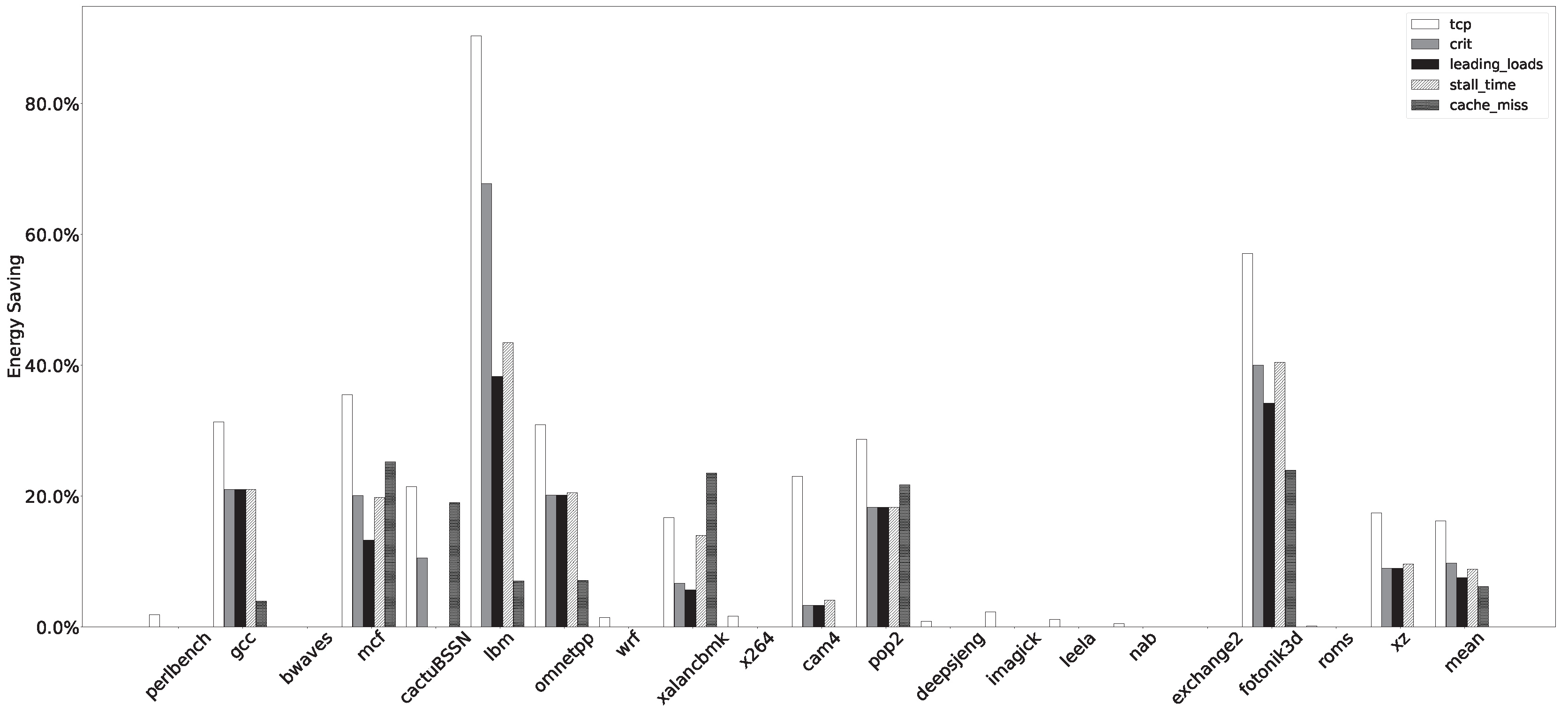

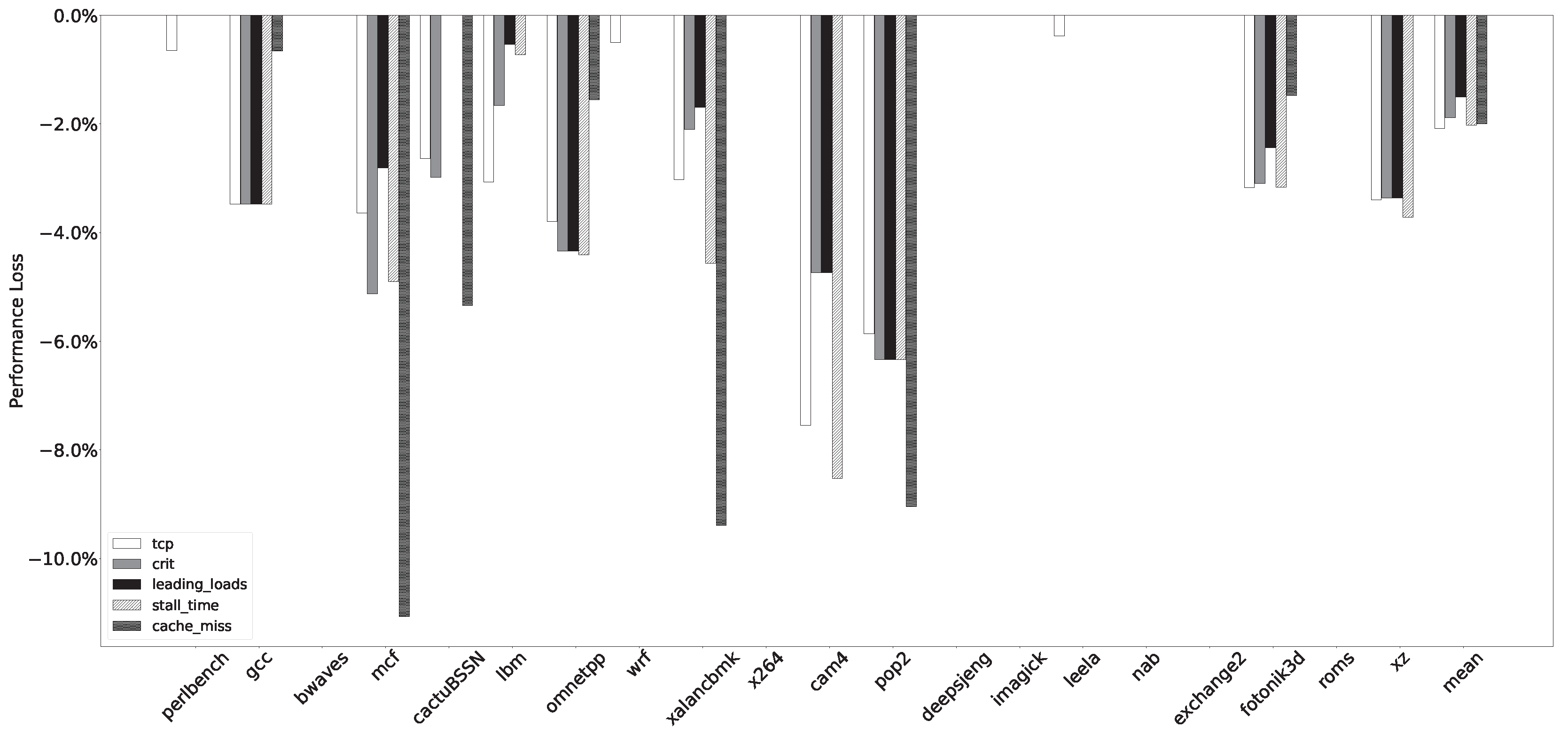

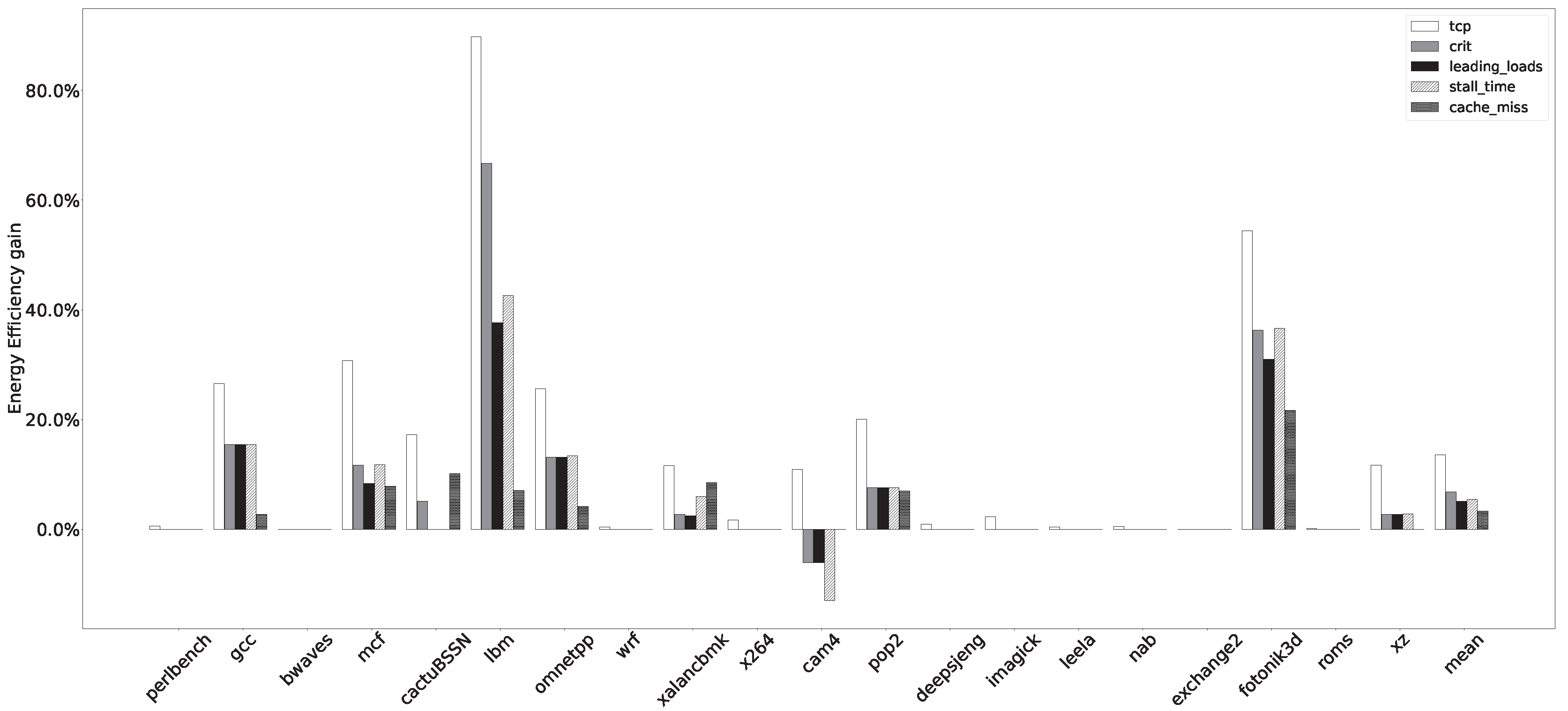

We simulated the performance of the above methods on the SPEC CPU 2017 benchmarks. Table 4 shows the average energy consumption reduction, performance degradation, and energy efficiency improvement of these benchmarks under the different power management strategies. The baseline is a system without DVFS, and energy efficiency is computed as ED²P. Our proposed methods based on digital frequency dividers outperformed the previous methods.

We used the same performance degradation tolerance, 10%, when calculating the thresholds of all methods. In actual simulations, however, the resulting performance degradation was around 2%, because our formula guarantees an upper bound on performance degradation in the worst case, and the test benchmarks do not always exhibit the worst case. Because power management methods always seek to reduce power consumption with as little performance loss as possible, and the performance degradation was similar across all methods, the comparison is fair.

Figure 7 shows the energy consumption reduction of these power management strategies. In almost all programs, the tcp method reduces energy consumption more than the other strategies; the exception is xalancbmk, where the cache miss method achieved a larger reduction. However, the cache miss method also caused greater performance degradation in this program than our method did, so the proposed method is still the best in terms of energy efficiency.

On average, the CRIT method outperformed stall time, followed by leading loads and cache miss. The CRIT, stall time, and leading loads methods behave consistently across benchmarks, whereas cache miss does not. In mcf, pop2, and xalancbmk, the cache miss method consumed less energy but also lost more performance. We used the average L2 miss latency to calculate its threshold, but the L2 miss latency differs between test programs, so using the same L2 miss latency estimate for all programs made the cache miss method inconsistent across them.

Figure 8 shows the performance degradation of the different power management strategies. Except for cache miss, all strategies degraded performance by less than 10%. Although we set the performance degradation tolerance to 10% when calculating the thresholds, the number of clock cycles per cache miss differs between programs. As a result, in programs with relatively short cache miss latencies, the cache miss method makes more aggressive choices, pushing performance degradation above 10% in some programs. Because the proposed method has finer control granularity and can change the processor's frequency over shorter periods, its performance is slightly worse than that of the other methods.

Figure 9 shows the energy efficiency of these power management strategies, measured by ED²P. Because the proposed method caused only slightly more performance degradation than some existing methods while significantly reducing power consumption, it also significantly improved energy efficiency. The proposed method almost doubled the energy efficiency in omnetpp and pop2. In programs in which the traditional methods cannot improve energy efficiency, such as deepsjeng and imagick, the proposed method still improved energy efficiency slightly.
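Assuming the energy-efficiency metric here is ED²P (energy multiplied by the square of execution time), the short sketch below shows how a gain over a baseline can be computed and why the metric penalizes slowdowns heavily. The helper names `ed2p` and `ed2p_gain` are hypothetical; under this convention, "doubling" energy efficiency corresponds to halving ED²P.

```python
def ed2p(energy: float, delay: float) -> float:
    """Energy-delay-squared product: lower is better."""
    return energy * delay ** 2


def ed2p_gain(base_energy: float, base_delay: float,
              new_energy: float, new_delay: float) -> float:
    """Energy-efficiency gain over a baseline; 2.0 means ED^2P was halved."""
    return ed2p(base_energy, base_delay) / ed2p(new_energy, new_delay)


# Break-even: with a 10% slowdown, energy must fall below 1 / 1.1**2 of the baseline.
break_even_energy = 1.0 / 1.1 ** 2
print(f"energy must drop by more than {1 - break_even_energy:.1%}")
```

Because delay enters squared, a method that loses 10% performance must save roughly 17% energy just to break even, which is why a small performance degradation combined with a large power reduction is needed for the gains reported above.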

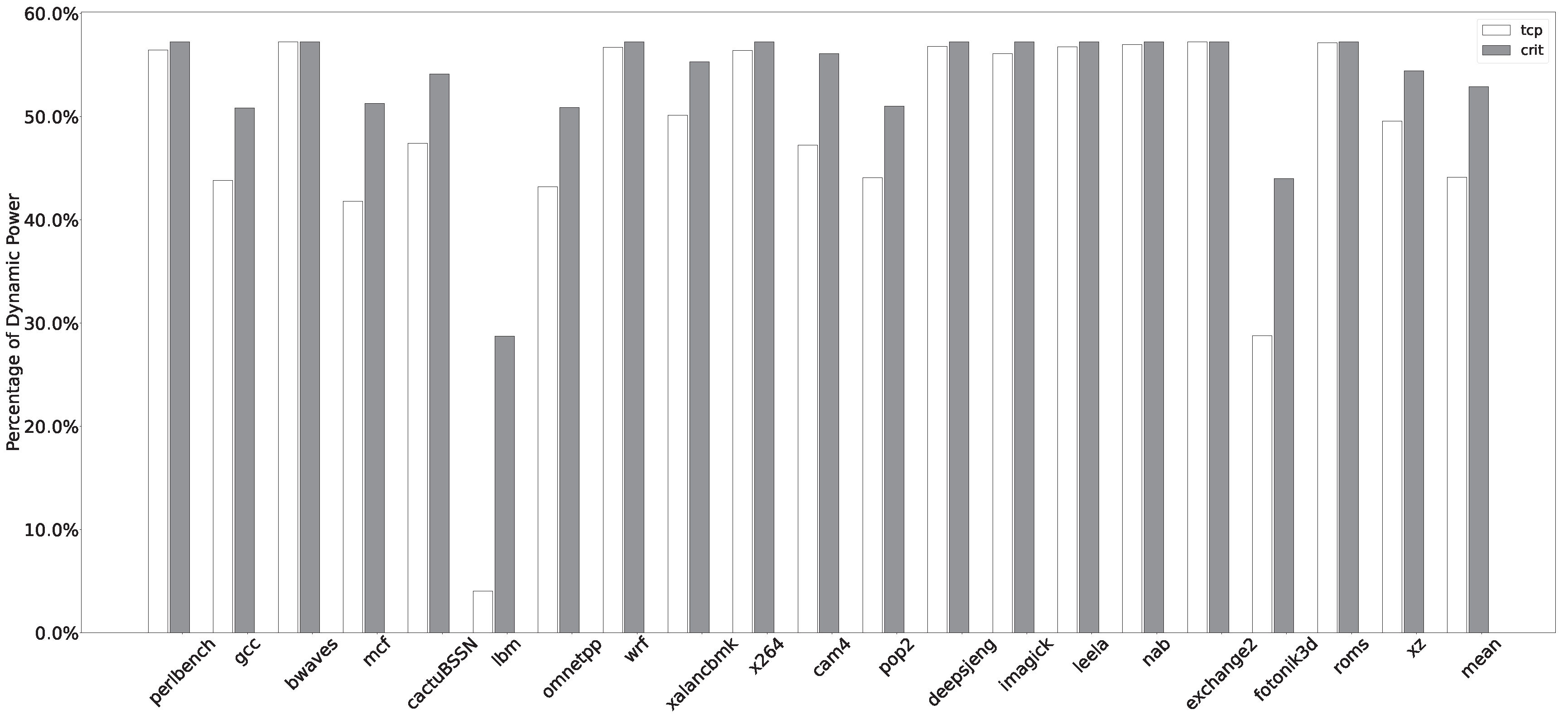

Because the proposed method based on the digital frequency divider significantly reduced dynamic power consumption, we paid particular attention to how the ratio of dynamic power to total power differs between the proposed method and the existing methods. For this comparison, we chose CRIT, the best-performing existing method.

Figure 10 compares, for each benchmark, the proportion of dynamic power in total power consumption under the tcp and CRIT methods. The proportion of dynamic power under the proposed method was lower than under CRIT in every program, and the proposed method reduced the average proportion from 52.90% (CRIT) to 44.13%. However, this decrease was limited, because the signal switching required to complete the computing task cannot be avoided.
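The sketch below uses the textbook CMOS switching-power relation P_dyn = αCV²f to illustrate the effect discussed above. All parameter values (activity factor, capacitance, static power, 2 GHz clock) are hypothetical. Dividing the clock at a fixed voltage lowers dynamic power and hence its share of total power, while the switching energy of the cycles the computation actually needs, αCV² per active cycle, does not depend on the clock frequency. That is one reading of why the dynamic share cannot fall arbitrarily.

```python
def dynamic_power(activity: float, capacitance: float, voltage: float, freq_hz: float) -> float:
    """CMOS switching power alpha * C * V^2 * f, in watts."""
    return activity * capacitance * voltage ** 2 * freq_hz


def dynamic_energy(activity: float, capacitance: float, voltage: float,
                   switching_cycles: int) -> float:
    """Switching energy for a fixed number of active cycles; independent of f."""
    return activity * capacitance * voltage ** 2 * switching_cycles


def dynamic_share(p_dynamic: float, p_static: float) -> float:
    """Fraction of total power that is dynamic."""
    return p_dynamic / (p_dynamic + p_static)


P_STATIC = 0.4  # watts, hypothetical
for divider in (1, 2, 4):
    p_dyn = dynamic_power(0.2, 1e-9, 1.0, 2e9 / divider)
    print(f"divide by {divider}: dynamic share {dynamic_share(p_dyn, P_STATIC):.1%}")
```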

4.1. Impact of Sampling Interval Length

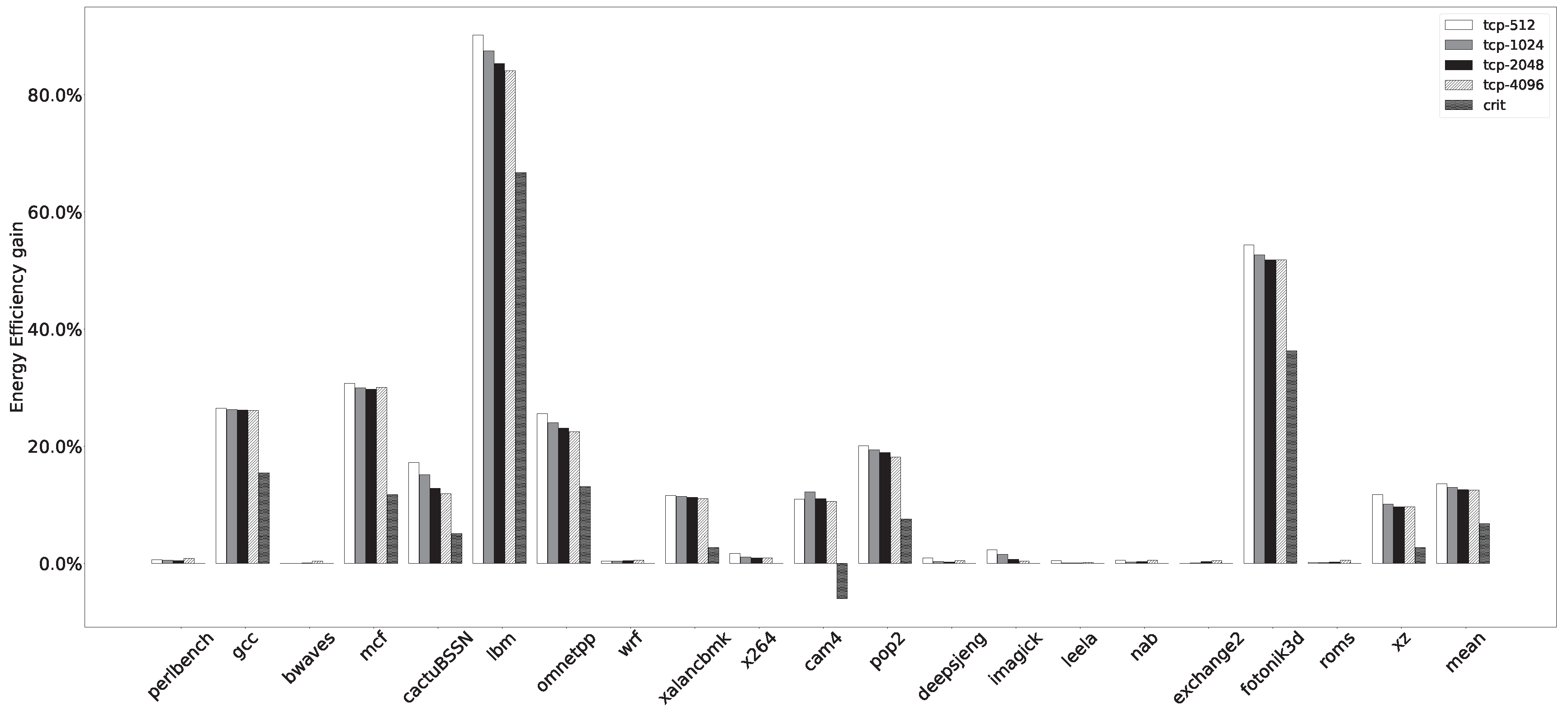

Different sampling periods affect both the effectiveness and the hardware overhead of the proposed method. A longer sampling period divides the program into coarser fragments, so fewer short power-saving opportunities can be captured. Although a shorter sampling period reduces the hardware overhead of the counter, it places higher demands on the computing power of the power management module. To find the best trade-off, we compared the impact of different sampling periods on the proposed method.
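The trade-off above can be quantified with simple arithmetic. The sketch assumes a per-period stall counter that must be able to count every cycle of the period, and a hypothetical 2 GHz clock; the helper names are illustrative only. It shows that halving the sampling period saves only one counter bit, while it doubles the rate at which the power management module must produce decisions and halves the time it has to compute each one.

```python
def counter_bits(sampling_period_cycles: int) -> int:
    """Bits needed for a counter that may count every cycle of one sampling period."""
    return sampling_period_cycles.bit_length()


def decisions_per_second(sampling_period_cycles: int, clock_hz: float) -> float:
    """How often the power management module must produce a new decision."""
    return clock_hz / sampling_period_cycles


def decision_budget_ns(sampling_period_cycles: int, clock_hz: float) -> float:
    """Time available to compute one decision before the next sample arrives."""
    return sampling_period_cycles / clock_hz * 1e9


CLOCK_HZ = 2e9  # hypothetical 2 GHz core clock
for period in (512, 1024, 2048, 4096):
    print(period, counter_bits(period), decisions_per_second(period, CLOCK_HZ),
          round(decision_budget_ns(period, CLOCK_HZ)))
```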

Figure 11 shows the energy efficiency gains of the proposed power management strategy under different sampling periods, compared with the CRIT method. Generally, a finer-grained sampling period provides more opportunities for frequency adjustment and thus improves energy efficiency. The energy efficiency of most benchmarks decreases as the sampling period increases, but the optimal sampling period for cam4 was 1024 cycles: with 512 cycles, the performance degradation was larger, which lowered the overall energy efficiency relative to 1024 cycles.

4.2. Impact of Response Mechanism

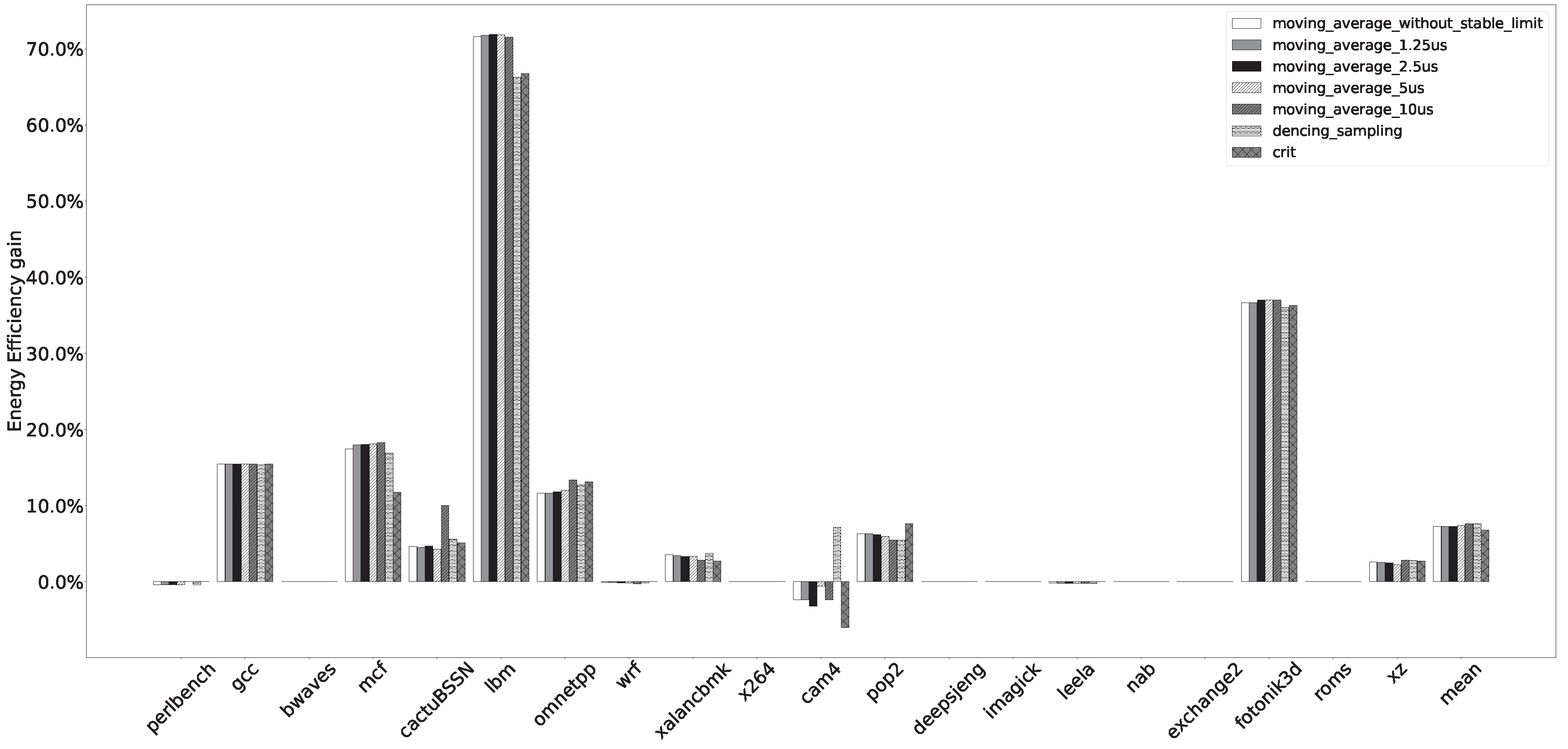

The proposed method supports two response mechanisms: moving average and dense sampling. Intuitively, the moving average performs better than dense sampling, but it incurs greater overhead. First, the moving average method requires the power management module to have more memory to record the history of memory time. Second, its data processing is more complicated and requires a higher-performance power management module.
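A minimal sketch of the two response mechanisms follows, under the assumption that both consume a per-sample memory-time fraction and that dense sampling simply acts on each short sample without keeping history. The class names and the sample values are hypothetical. The bounded `deque` makes the storage cost of the moving average explicit: it grows with the window length, whereas dense sampling keeps no state.

```python
from collections import deque


class MovingAverageResponder:
    """Smooths the memory-time fraction over the last `window` samples."""

    def __init__(self, window: int):
        self.history = deque(maxlen=window)  # storage grows with the window length

    def update(self, mem_fraction: float) -> float:
        self.history.append(mem_fraction)
        return sum(self.history) / len(self.history)


class DenseSamplingResponder:
    """Acts on each short sample directly; keeps no history."""

    def update(self, mem_fraction: float) -> float:
        return mem_fraction


samples = [0.1, 0.9, 0.9, 0.1, 0.9]  # hypothetical per-sample memory fractions
ma, dense = MovingAverageResponder(window=4), DenseSamplingResponder()
for s in samples:
    print(f"sample {s:.1f}: moving average {ma.update(s):.2f}, dense {dense.update(s):.2f}")
```

The moving average damps the alternating samples, which avoids reacting to every spike, at the price of storing and summing the window on each update.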

We compared the performance of the two response mechanisms regarding energy efficiency improvement.

Figure 12 compares the two response mechanisms. For the moving average method, we used a sampling period of 1024 cycles and averaging windows of none (no averaging) and 1.25, 2.5, 5, and 10 μs. We did not add the digital frequency divider, in order to isolate the energy efficiency improvement brought by the fast response mechanism. The moving average and dense sampling methods yield similar energy efficiency improvements in geometric mean. However, dense sampling has the advantage only in cam4. The moving average with a 10 μs window is better in the other programs, and the overall energy efficiency with a 10 μs window is better than that of the other configurations.
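To relate the time-based windows listed above to the 1024-cycle sampling period, the sketch converts a window in microseconds into a number of stored samples. The 2 GHz clock is a hypothetical assumption; under DVFS the real clock changes, so the number of samples covered by a fixed-time window would change with it.

```python
def window_in_samples(window_us: float, sampling_period_cycles: int, clock_hz: float) -> int:
    """Number of samples a moving-average window of `window_us` microseconds spans."""
    sample_time_us = sampling_period_cycles / clock_hz * 1e6
    return max(1, round(window_us / sample_time_us))


CLOCK_HZ = 2e9  # hypothetical; the real clock, and hence this count, varies under DVFS
for w in (1.25, 2.5, 5, 10):
    print(f"{w} us -> {window_in_samples(w, 1024, CLOCK_HZ)} samples of 1024 cycles")
```

At this assumed clock, the 10 μs window corresponds to about 20 stored samples, which gives a sense of the history memory the moving average mechanism requires.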

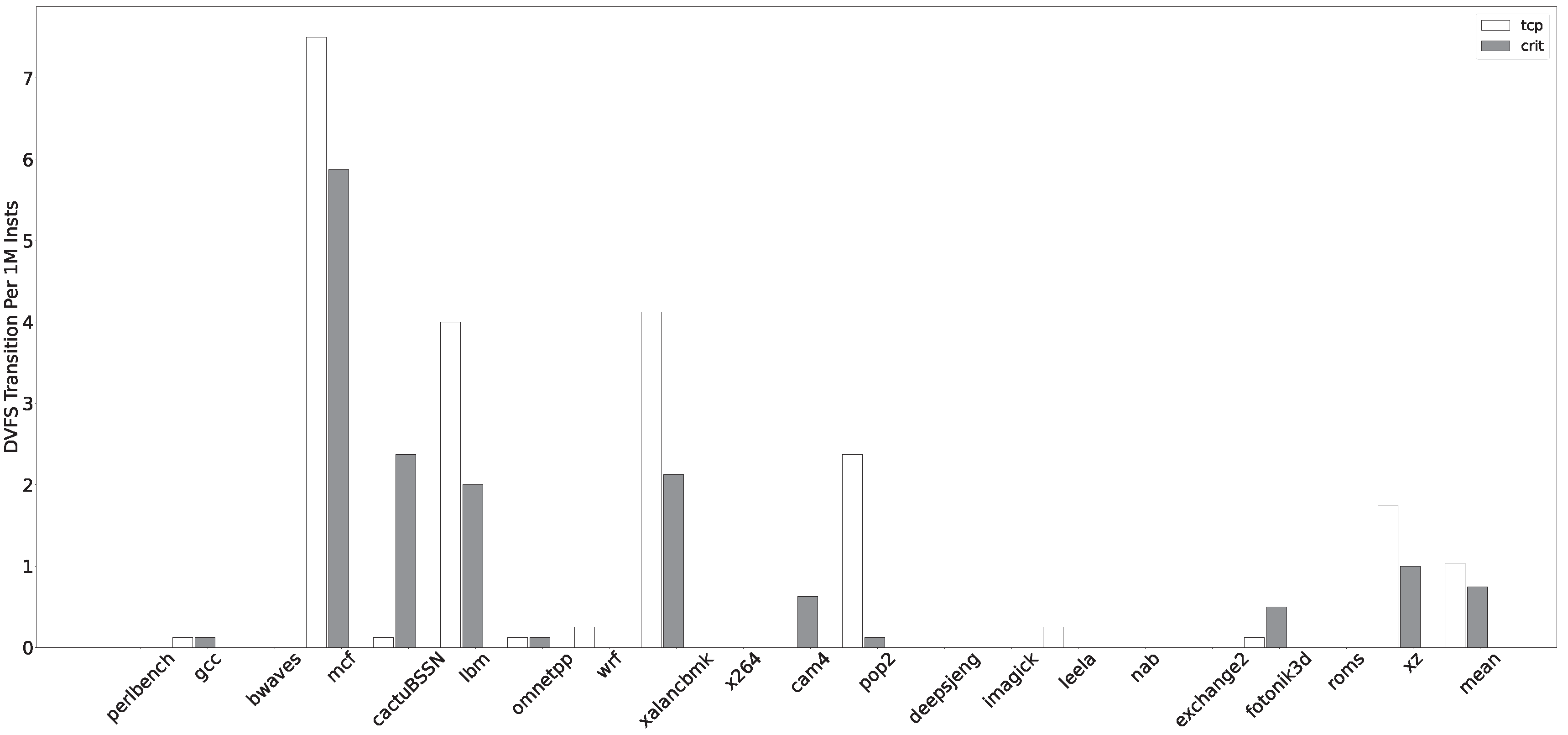

4.3. Impact on the Number of DVFS Transitions

Voltage changes incur additional energy overhead. If the proposed method significantly increased the number of DVFS operations, it might perform worse in real products than in simulation. We therefore counted the DVFS operations of the proposed method in each benchmark on the simulator.

Figure 13 compares the number of DVFS transitions of the proposed method and the CRIT method, measured as DVFS operations per million instructions. The proposed method averaged 1.03 voltage adjustments per million instructions, slightly higher than CRIT's 0.744, so it did not significantly increase the number of voltage transitions.
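The metric used above can be computed from a trace of voltage levels chosen at each decision point. The sketch below assumes that only changes in the voltage level count as transitions, matching the text's focus on voltage changes; the trace and instruction count are hypothetical, and the function names are illustrative.

```python
def count_transitions(voltage_trace: list[float]) -> int:
    """Number of times the voltage level changes between consecutive decisions."""
    return sum(1 for prev, cur in zip(voltage_trace, voltage_trace[1:]) if cur != prev)


def transitions_per_million_instructions(voltage_trace: list[float], instructions: int) -> float:
    """DVFS voltage transitions normalized to one million retired instructions."""
    return count_transitions(voltage_trace) * 1e6 / instructions


trace = [0.9, 0.9, 0.8, 0.8, 0.8, 0.9]  # hypothetical voltage (V) after each decision
print(transitions_per_million_instructions(trace, 2_000_000))
```

Multiplying the transition count by an assumed per-transition energy cost would give a rough estimate of the overhead that motivates this measurement.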